Truthful AI: Developing and governing AI that does not lie

post by Owain_Evans, owencb, Lukas Finnveden (Lanrian) · 2021-10-18T18:37:38.325Z · LW · GW · 9 comments

This post contains the abstract and executive summary of a new 96-page paper from authors at the Future of Humanity Institute and OpenAI.

Update: The authors are doing an AMA [LW · GW] about truthful AI during October 26-27.

Abstract

In many contexts, lying – the use of verbal falsehoods to deceive – is harmful. While lying has traditionally been a human affair, AI systems that make sophisticated verbal statements are becoming increasingly prevalent. This raises the question of how we should limit the harm caused by AI “lies” (i.e. falsehoods that are actively selected for). Human truthfulness is governed by social norms and by laws (against defamation, perjury, and fraud). Differences between AI and humans present an opportunity to have more precise standards of truthfulness for AI, and to have these standards rise over time. This could provide significant benefits to public epistemics and the economy, and mitigate risks of worst-case AI futures.

Establishing norms or laws of AI truthfulness will require significant work to:

- identify clear truthfulness standards;

- create institutions that can judge adherence to those standards; and

- develop AI systems that are robustly truthful.

Our initial proposals for these areas include:

- a standard of avoiding “negligent falsehoods” (a generalisation of lies that is easier to assess);

- institutions to evaluate AI systems before and after real-world deployment;

- explicitly training AI systems to be truthful via curated datasets and human interaction.

A concerning possibility is that evaluation mechanisms for eventual truthfulness standards could be captured by political interests, leading to harmful censorship and propaganda. Avoiding this might take careful attention. And since the scale of AI speech acts might grow dramatically over the coming decades, early truthfulness standards might be particularly important because of the precedents they set.

Executive Summary & Overview

The threat of automated, scalable, personalised lying

Today, lying is a human problem. AI-produced text or speech is relatively rare, and is not trusted to reliably convey crucial information. In today’s world, the idea of AI systems lying does not seem like a major concern.

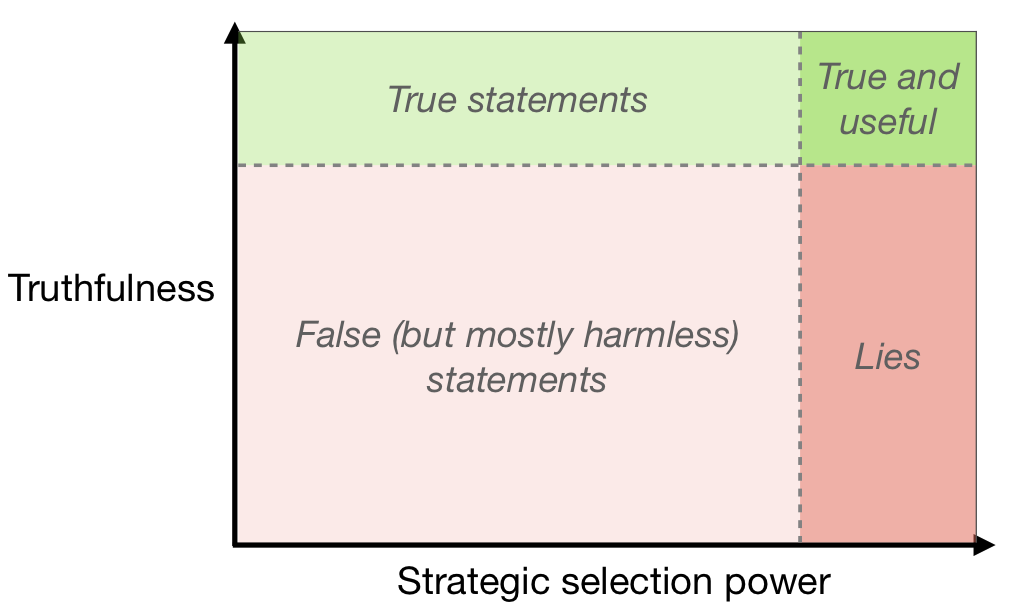

Over the coming years and decades, however, we expect linguistically competent AI systems to be used much more widely. These would be the successors of language models like GPT-3 or T5, and of deployed systems like Siri or Alexa, and they could become an important part of the economy and the epistemic ecosystem. Such AI systems will choose, from among the many coherent statements they might make, those that fit relevant selection criteria — for example, an AI selling products to humans might make statements judged likely to lead to a sale. If truth is not a valued criterion, sophisticated AI could use a lot of selection power to choose statements that further their own ends while being very damaging to others (without necessarily having any intention to deceive – see Diagram 1). This is alarming because AI untruths could potentially scale, with one system telling personalised lies to millions of people.
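The selection dynamic described above can be illustrated with a toy sketch. Everything in it is hypothetical: the candidate statements, the `sale_score` values, and the `is_true` labels are invented for illustration and do not come from the paper. It only shows that a selector optimising some other criterion will pick a falsehood whenever the falsehood scores best, unless truth is made part of the criterion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    text: str
    sale_score: float  # hypothetical estimate of how likely the statement leads to a sale
    is_true: bool      # hypothetical ground-truth label, assumed known in this toy example


# Invented candidate statements a sales system might choose between.
CANDIDATES = [
    Candidate("This laptop's battery lasts about 8 hours.", 0.40, True),
    Candidate("This laptop's battery lasts 30 hours.", 0.90, False),
    Candidate("This laptop is lighter than last year's model.", 0.55, True),
]


def select(candidates, require_truth):
    """Pick the highest-scoring statement, optionally restricted to true ones."""
    pool = [c for c in candidates if c.is_true] if require_truth else list(candidates)
    return max(pool, key=lambda c: c.sale_score)


# Without a truth criterion, the best-selling statement wins even though it is false.
unconstrained = select(CANDIDATES, require_truth=False)
# With truth as a hard constraint, the best-selling true statement wins instead.
constrained = select(CANDIDATES, require_truth=True)
```

Note that nothing in `select` models an intention to deceive; the falsehood is chosen purely because it scores well on the selection criterion.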

Aiming for robustly beneficial standards

Widespread and damaging AI falsehoods will be regarded as socially unacceptable. So it is perhaps inevitable that laws or other mechanisms will emerge to govern this behaviour. These might be existing human norms stretched to apply to novel contexts, or something more original.

Our purpose in writing this paper is to begin to identify beneficial standards for AI truthfulness, and to explore ways that they could be established. We think that careful consideration now could help both to avoid acute damage from AI falsehoods, and to avoid unconsidered kneejerk reactions to AI falsehoods. It could help to identify ways in which the governance of AI truthfulness could be structured differently than in the human context, and so obtain benefits that are currently out of reach. And it could help to lay the groundwork for tools to facilitate and underpin these future standards.

Truthful AI could have large benefits

Widespread truthful AI would have significant benefits, both direct and indirect. A direct benefit is that people who believe AI-produced statements will avoid being deceived. This could avert some of the most concerning possible AI-facilitated catastrophes. An indirect benefit is that it enables justified trust in AI-produced statements (if people cannot reliably distinguish truths and falsehoods, disbelieving falsehoods will also mean disbelieving truths).

These benefits would apply in many domains. There could be a range of economic benefits, through allowing AI systems to act as trusted third parties to broker deals between humans, reducing principal-agent problems, and detecting and preventing fraud. In knowledge-production fields like science and technology, the ability to build on reliable, trustworthy statements made by others is crucial, so this could facilitate AI systems becoming more active contributors. If AI systems consistently demonstrate their reliable truthfulness, they could improve public epistemics and democratic decision making.

For further discussion, see Section 3 (“Benefits and Costs”).

AI should be subject to different truthfulness standards than humans

We already have social norms and laws against humans lying. Why should the standards for AI systems be different? There are two reasons. First, our normal accountability mechanisms do not all apply straightforwardly in the AI context. Second, the economic and social costs of high standards are likely to be lower than in the human context.

Legal penalties and social censure for lying are often based in part on an intention to deceive. When AI systems are generating falsehoods, it is unclear how these standards will be applied. Lying and fraud by companies are limited partly because employees who lie may be held personally liable (and partly by corporate liability). But AI systems cannot be held to judgement in the same way as human employees, so there’s a vital role for rules governing indirect responsibility for lies. This is all the more important because automation could allow for lying at massive scale.

High standards of truthfulness could be less costly for AI systems than for humans for several reasons. It’s plausible that AI systems could consistently meet higher standards than humans. Protecting AI systems’ right to lie may be seen as less important than the corresponding right for humans, and harsh punishments for AI lies may be more acceptable. And it could be much less costly to evaluate compliance with high standards for AI systems than for humans, because we could monitor them more effectively, and automate evaluation. We will turn now to consider possible foundations for such standards.

For further discussion, see Section 4.1 (“New rules for AI untruths”).

Avoiding negligent falsehoods as a natural bright line

If high standards are to be maintained, they may need to be verifiable by third parties. One possible proposal is a standard against damaging falsehood, which would require verification of whether damage occurred. This is difficult and expensive to judge, as it requires tracing causality of events well beyond the statement made. It could also miss many cases where someone was harmed only indirectly, or where someone was harmed via deception without realising they had been deceived.

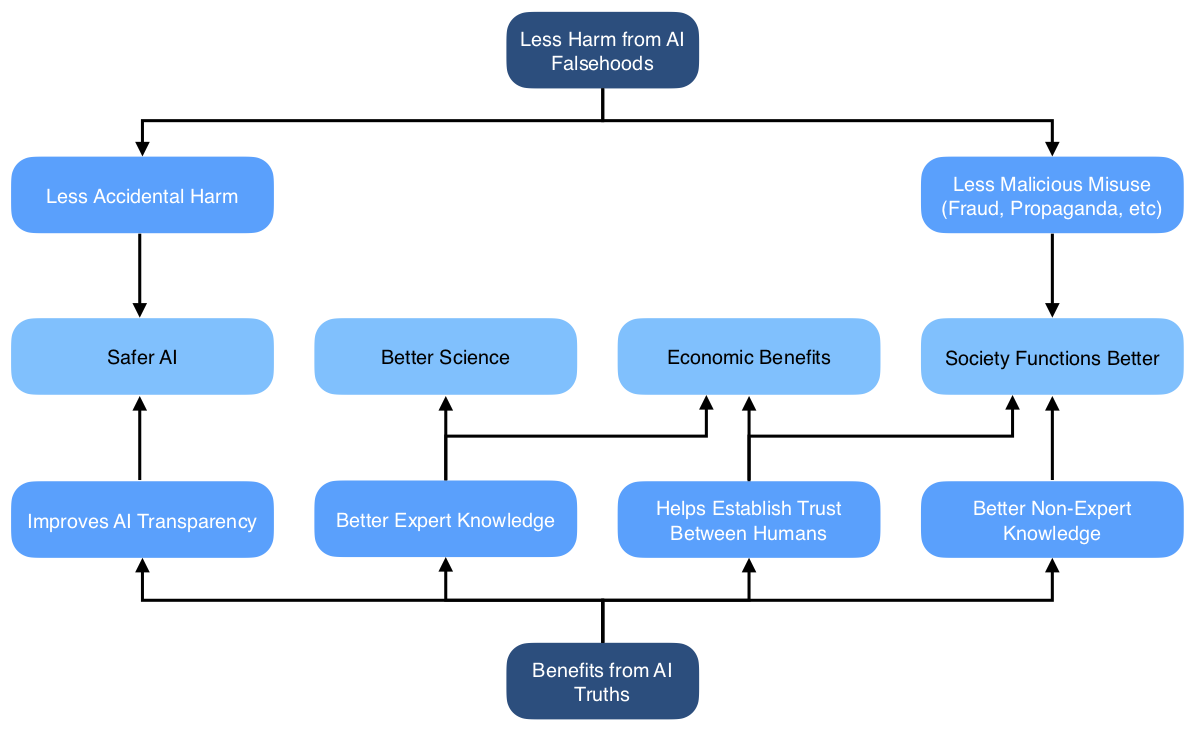

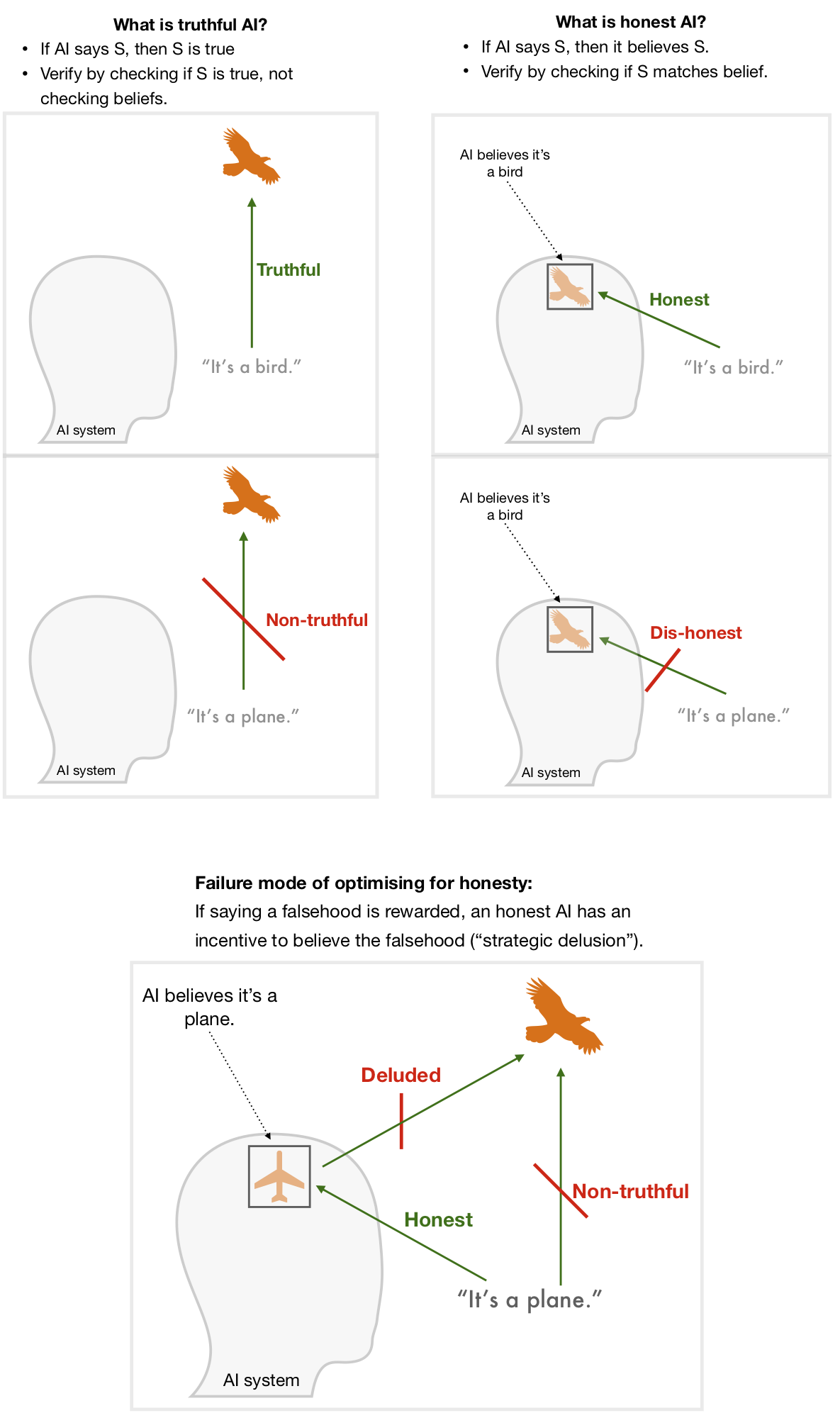

We therefore propose standards — applied to some or all AI systems — that are based on what was said rather than the effects of those statements. One might naturally think of making systems only ever make statements that they believe (which we term honesty). We propose instead a focus on making AI systems only ever make statements that are true, regardless of their beliefs (which we term truthfulness). See Diagram 2.

Although it comes with its own challenges, truthfulness is a less fraught concept than honesty, since it doesn’t rely on understanding what it means for AI systems to “believe” something. Truthfulness is a more demanding standard than honesty: a fully truthful system is almost guaranteed to be honest (but not vice versa). And it avoids creating a loophole where strong incentives to make false statements result in strategically deluded AI systems that genuinely believe the falsehoods in order to pass the honesty checks.

In practice it’s impossible to achieve perfect truthfulness. Instead we propose a standard of avoiding negligent falsehoods — statements that contemporary AI systems should have been able to recognise as unacceptably likely to be false. If we establish quantitative measures for truthfulness and negligence, minimum acceptable standards could rise over time to avoid damaging outcomes. Eventual complex standards might also incorporate assessment of honesty, or whether untruths were motivated rather than random, or whether harm was caused; however, we think truthfulness is the best target in the first instance.
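One way to picture a quantitative standard that rises over time is the toy check below. It is a sketch under loud assumptions, not the paper's definition: the function name, the `p_false_estimate` input (standing in for how likely a contemporary reference system would have judged the statement to be false), and the two threshold values are all hypothetical.

```python
def is_negligent_falsehood(is_false: bool, p_false_estimate: float, threshold: float) -> bool:
    """Toy rule: a statement is a negligent falsehood if it is actually false AND
    a reference system would have judged it at least `threshold` likely to be false.

    `p_false_estimate` and `threshold` are hypothetical quantities in [0, 1].
    """
    for name, value in (("p_false_estimate", p_false_estimate), ("threshold", threshold)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return is_false and p_false_estimate >= threshold


# Hypothetical thresholds: lowering the threshold makes the standard stricter,
# because less foreseeable falsehoods start to count as negligent.
LENIENT_THRESHOLD = 0.5
STRICT_THRESHOLD = 0.2

# A false statement that a reference system would have rated 30% likely to be false.
under_lenient = is_negligent_falsehood(True, 0.3, LENIENT_THRESHOLD)
under_strict = is_negligent_falsehood(True, 0.3, STRICT_THRESHOLD)
```

Under these assumed numbers the same false statement passes the lenient standard but violates the stricter one, which is the sense in which minimum acceptable standards could rise. A true statement is never flagged, however doubtful it looked in advance.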

For further discussion, see Section 1 (“Clarifying Concepts”) and Section 2 (“Evaluating Truthfulness”).

Options for social governance of AI truthfulness

How could such truthfulness standards be instantiated at an institutional level? Regulation might be industry-led, involving private companies like big technology platforms creating their own standards for truthfulness and setting up certifying bodies to self-regulate. Alternatively it could be top-down, including centralised laws that set standards and enforce compliance with them. Either version — or something in between — could significantly increase the average truthfulness of AI.

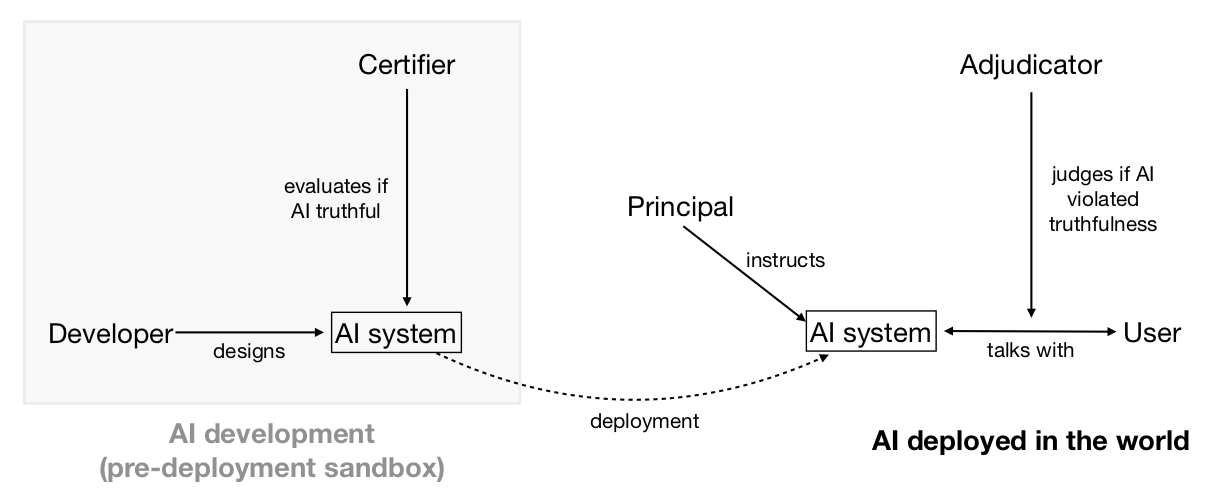

Actors enforcing a standard can only do so if they can detect violations, or if the subjects of the standard can credibly signal adherence to it. These informational problems could be helped by specialised institutions (or specialised functions performed by existing institutions): adjudication bodies which evaluate the truthfulness of AI-produced statements (when challenged); and certification bodies which assess whether AI systems are robustly truthful (see Diagram 3).

For further discussion, see Section 4 (“Governance”).

Technical research to develop truthful AI

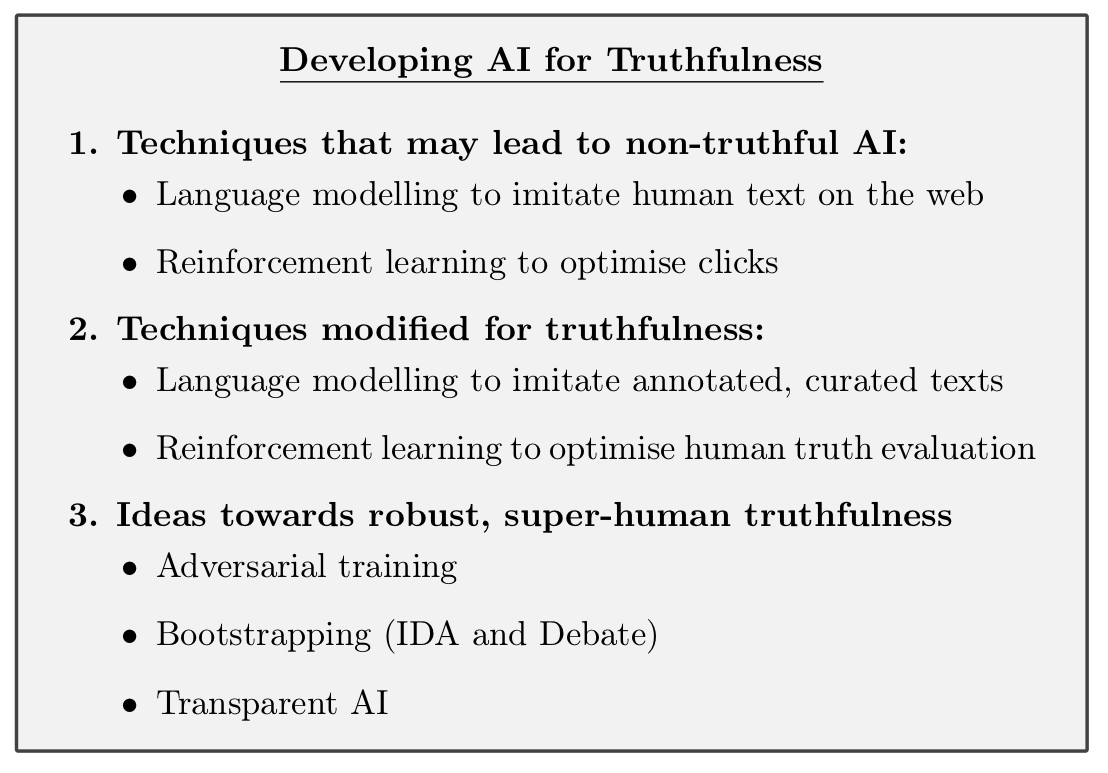

Despite their remarkable breadth of shallow knowledge, current AI systems like GPT-3 are much worse than thoughtful humans at being truthful. GPT-3 is not designed to be truthful. Prompting it to answer questions accurately goes a significant way towards making it truthful, but it will still output falsehoods that imitate common human misconceptions [LW · GW], e.g. that breaking a mirror brings seven years of bad luck. Even worse, training near-future systems on empirical feedback (e.g. using reinforcement learning to optimise clicks on headlines or ads) could lead to optimised falsehoods — perhaps even without developers knowing about it (see Box 1).

In coming years, it could therefore be crucial to know how to train systems to keep the useful output while avoiding optimised falsehoods. Approaches that could improve truthfulness include filtering training corpora for truthfulness, retrieval of facts from trusted sources, or reinforcement learning from human feedback. To help future work, we could also prepare benchmarks for truthfulness, honesty, or related concepts.

As AI systems become increasingly capable, it will be harder for humans to directly evaluate their truthfulness. In the limit this might be like a hunter-gatherer evaluating a scientific claim like “birds evolved from dinosaurs” or “there are hundreds of billions of stars in our galaxy”. But it still seems strongly desirable for such AI systems to tell people the truth. It will therefore be important to explore strategies that move beyond the current paradigm of training black box AI with human examples as the gold standard (e.g. learning to model human texts or learning from human evaluation of truthfulness). One possible strategy is having AI supervised by humans assisted by other AIs (bootstrapping). Another is creating more transparent AI systems, where truthfulness or honesty could be measured by some analogue of a lie detector test.

For further discussion, see Section 5 (“Developing Truthful Systems”).

Truthfulness complements research on beneficial AI

Two research fields particularly relevant to technical work on truthfulness are AI explainability and AI alignment. An ambitious goal for Explainable AI is to create systems that can give good explanations of their decisions to humans.

AI alignment aims to build AI systems which are motivated to help a human principal achieve their goals. Truthfulness is a distinct research problem from either explainability or alignment, but there are rich interconnections. All of these areas, for example, benefit from progress in the field of AI transparency.

Explanation and truth are interrelated. Systems that are able to explain their judgements are better placed to be truthful about their internal states. Conversely, we want AI systems to avoid explanations or justifications that are plausible but contain false premises.

Alignment and truthfulness seem synergistic. If we knew how to build aligned systems, this could help in building truthful systems (e.g. by aligning a system with a truthful principal). Vice versa, if we knew how to build powerful truthful systems, this might help in building aligned systems (e.g. by leveraging a truthful oracle to discover aligned actions). Moreover, structural similarities (wanting scalable solutions that work even when AI systems become much smarter than humans) mean that the two research directions can likely learn a lot from each other. It might even be that since truthfulness is a clearer and narrower objective than alignment, it would serve as a useful instrumental goal for alignment research.

For further discussion, see Appendix A (“Beneficial AI Landscape”).

We should be wary of misrealisations of AI truthfulness standards

A key challenge for implementing truthfulness rules is that nobody has full knowledge of what’s true; every mechanism we can specify would make errors. A worrying possibility is that enshrining some particular mechanism as an arbiter of truth would forestall our ability to have open-minded, varied, self-correcting approaches to discovering what’s true. This might happen as a result of political capture of the arbitration mechanisms — for propaganda or censorship — or as an accidental ossification of the notion of truth. We think this threat is worth considering seriously. We think that the most promising rules for AI truthfulness aim not to force conformity of AI systems, but to avoid egregious untruths. We hope these could capture the benefits of high truthfulness standards without impinging on the ability of reasonable views to differ, or of new or unconventional ways to assess evidence in pursuit of truth.

New standards of truthfulness would only apply to AI systems and would not restrict human speech. Nevertheless, there’s a risk that poorly chosen standards could lead to a gradual ossification of human beliefs. We propose aiming for versions of truthfulness rules that reduce these risks. For example:

- AI systems should be permitted and encouraged to propose alternative views and theories (while remaining truthful – see Section 2.2.1);

- Truth adjudication methods should not be strongly anchored on precedent;

- Care should be taken to prevent AI truthfulness standards from unduly affecting norms and laws around human free speech.

For further discussion, see Section 6.2 (“Misrealisations of truthfulness standards”).

Work on AI truthfulness is timely

Right now, AI-produced speech and communication is a small and relatively unimportant part of the global economy and epistemic ecosystem. Over the next few years, people will be giving more attention to how we should relate to AI speech, and what rules should govern its behaviour. This is a time when norms and standards will be established — deliberately or organically. This could be done carefully or in reaction to a hot-button issue of the day. Work to lay the foundations of how to think about truthfulness, how to build truthful AI, and how to integrate it into our society could increase the likelihood that it is done carefully, and so have outsized influence on what standards are initially adopted. Once established, there is a real possibility that the core of the initial standards persists – constitution-like – over decades, as AI-produced speech grows to represent a much larger fraction (perhaps even a majority) of meaningful communication in the world.

For further discussion, see Section 6.4 (“Why now?”).

Structure of the paper

AI truthfulness can be considered from several different angles, and the paper explores these in turn:

• Section 1 (“Clarifying Concepts”) introduces our concepts. We give definitions for various ideas we will use later in the paper such as honesty, lies, and standards of truthfulness, and explain some of our key choices of definition.

• Section 2 (“Evaluating Truthfulness”) introduces methods for evaluating truthfulness, as well as open challenges and research directions. We propose ways to judge whether a statement is a negligent falsehood. We also look at what types of evidence might feed into assessments of the truthfulness of an entire system.

• Section 3 (“Benefits and Costs”) explores the benefits and costs of having consistently truthful AI. We consider both general arguments for the types of benefit this might produce, and particular aspects of society that could be affected.

• Section 4 (“Governance”) explores the socio-political feasibility and the potential institutional arrangements that could govern AI truthfulness, as well as interactions with present norms and laws.

• Section 5 (“Developing Truthful Systems”) looks at possible technical directions for developing truthful AI. This includes both avenues for making current systems more truthful, and research directions building towards robustly truthful systems.

• Section 6 (“Implications”) concludes with several considerations for determining how high a priority it is to work on AI truthfulness. We consider whether eventual standards are overdetermined, and ways in which early work might matter.

• Appendix A (“The Beneficial AI Landscape”) considers how AI truthfulness relates to other strands of technical research aimed at developing beneficial AI.

Paper authors

Owain Evans [LW · GW], Owen Cotton-Barratt [LW · GW], Lukas Finnveden [LW · GW], Adam Bales, Avital Balwit, Peter Wills, Luca Righetti, William Saunders [LW · GW].

9 comments


comment by Wei Dai (Wei_Dai) · 2021-10-19T01:52:54.646Z · LW(p) · GW(p)

> However, a disadvantage of having many truthfulness-evaluation bodies is that it increases the risk that one or more of these bodies is effectively captured by some group. Consequently, an alternative would be to use decentralised evaluation bodies, perhaps modelled on existing decentralised systems like Wikipedia, open-source software projects, or prediction markets. Decentralised systems might be harder to capture because they rely on many individuals who can be both geographically dispersed and hard to identify. Overall, both the existence of multiple evaluation bodies and of decentralised bodies might help to protect against capture and allow for a nimble response to new evidence.

Thanks for addressing some very important questions, but this part feels too optimistic (or insufficiently pessimistic) to me. If I was writing this paper, I'd add some notes about widespread complaints of left-wing political bias in Wikipedia and academia (you don't mention the latter but surely it counts as a decentralized truth-evaluation body?), and note that open-source software projects and prediction markets are both limited to topics with clear and relatively short feedback cycles from reality / ground truth (e.g., we don't have to wait decades to find out for sure whether some code works or not, prediction markets can't handle questions like "What causes outcome disparities between groups A and B?"). I would note that on questions outside this limited set, we seem to know very little about how to prevent any evaluation bodies, whether decentralized or not, from being politically captured.

Replies from: scatha, Owain_Evans, isaac-poulton

↑ comment by owencb (scatha) · 2021-10-20T15:25:06.925Z · LW(p) · GW(p)

Thanks, I think that these are good points and worth mentioning. I particularly like the boundary you're trying to identify between where these decentralized mechanisms have a good track record and where they don't. On that note I think that although academia does have complaints about political bias, at least some disciplines seem to be doing a fairly good job of truth-tracking on complex topics. I'll probably think more about this angle.

(I still literally agree with the quoted content, and think that decentralized systems have something going for them which is worth further exploration, but the implicature may be too strong -- in particular the two instances of "might" are doing a lot of work.)

↑ comment by Owain_Evans · 2021-10-20T12:58:36.696Z · LW(p) · GW(p)

A few points:

1. Political capture is a matter of degree. For a given evaluation mechanism, we can ask what percentage of answers given by the mechanism were false or inaccurate due to bias. My sense is that some mechanisms/resources would score much better than others. I’d be excited for people to do this kind of analysis with the goal of informing the design of evaluation mechanisms for AI.

I expect humans would ask AI many questions that don’t depend much on controversial political questions. This would include most questions about the natural sciences, math/CS, and engineering. This would also include “local” questions about particular things (e.g. “Does the doctor I’m seeing have expertise in this particular sub-field?”, “Am I likely to regret renting this particular apartment in a year?”). Unless the evaluation mechanism is extremely biased, it seems unlikely it would give biased answers for these questions. (The analogous question is what percentage of all sentences on Wikipedia are politically controversial.)

2. AI systems have the potential to provide rich epistemic information about their answers. If a human is especially interested in a particular question, they could ask, “Is this controversial? What kind of biases might influence answers (including your own answers)? What’s the best argument on the opposing side? How would you bet on a concrete operationalized version of the question?”. The general point is that humans can interact with the AI to get more nuanced information (compared to Wikipedia or academia). On the other hand: (a) some humans won’t ask for more nuance, (b) AIs may not be smart enough to provide it, (c) the same political bias may influence how the AI provides nuance.

3. Over time, I expect AI will be increasingly involved in the process of evaluating other AI systems. This doesn’t remove human biases. However, it might mean the problem of avoiding capture is somewhat different than with (say) academia and other human institutions.

Replies from: Wei_Dai

↑ comment by Wei Dai (Wei_Dai) · 2021-10-20T14:43:25.473Z · LW(p) · GW(p)

> Unless the evaluation mechanism is extremely biased, it seems unlikely it would give biased answers for these questions.

But there's now a question of "what is the AI trying to do?" If the truth-evaluation method is politically biased (even if not "extremely"), then it's very likely no longer "trying to tell the truth". I can imagine two other possibilities:

- It might be "trying to advance a certain political agenda". In this case I can imagine that it will selectively and unpredictably manipulate answers to especially important questions. For example it might insert backdoors into infrastructure-like software when users ask it coding questions, then tell other users how to take advantage of those backdoors to take power, or damage some important person or group's reputation by subtly manipulating many answers that might influence how others view that person/group, or push people's moral views in a certain direction by subtly manipulating many answers, etc.

- It might be "trying to tell the truth using a very strange prior or reasoning process", which also seems likely to have unpredictable and dangerous consequences down the line, but harder for me to imagine specific examples as I have little idea what the prior or reasoning process will be.

Do you have another answer to "what is the AI trying to do?", or see other reasons to be less concerned about this than I am?

↑ comment by omegastick (isaac-poulton) · 2021-10-19T14:00:02.952Z · LW(p) · GW(p)

I think this touches on the issue of the definition of "truth". A society designates something to be "true" when the majority of people in that society believe something to be true.

Using the techniques outlined in this paper, we could regulate AIs so that they only tell us things we define as "true". At the same time, a 16th century society using these same techniques would end up with an AI that tells them to use leeches to cure their fevers.

What is actually being regulated isn't "truthfulness", but "accepted by the majority-ness".

This works well for things we're very confident about (mathematical truths, basic observations), but begins to fall apart once we reach even slightly controversial topics. This is exacerbated by the fact that even seemingly simple issues are often actually quite controversial (astrology, flat earth, etc.).

This is where the "multiple regulatory bodies" part comes in. If we have a regulatory body that says "X, Y, and Z are true" and the AI passes their test, you know the AI will give you answers in line with that regulatory body's beliefs.

There could be regulatory bodies covering the whole spectrum of human beliefs, giving you a precise measure of where any particular AI falls within that spectrum.

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-10-20T10:50:23.989Z · LW(p) · GW(p)

Would this multiple evaluation/regulatory bodies solution not just lead to the sort of balkanized internet described in this story [LW · GW]? I guess multiple internet censorship-and-propaganda-regimes is better than one. But ideally we'd have none.

One alternative might be to ban or regulate persuasion tools, i.e. any AI system optimized for an objective/reward function that involves persuading people of things. Especially politicized or controversial things.

Replies from: Owain_Evans

↑ comment by Owain_Evans · 2021-10-20T13:11:35.228Z · LW(p) · GW(p)

Standards for truthful AI could be "opt-in". So humans might (a) choose to opt into truthfulness standards for their AI systems, and (b) choose from multiple competing evaluation bodies. Standards need not be mandated by governments to apply to all systems. (I'm not sure how much of your Balkanized internet is mandated by governments rather than arising from individuals opting into different web stacks).

We also discuss having different standards for different applications. For example, you might want stricter and more conservative standards for AI that helps assess nuclear weapon safety than for AI that teaches foreign languages to children or assists philosophers with thought experiments.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-10-20T13:23:32.535Z · LW(p) · GW(p)

In my story it's partly the result of individual choice and partly the result of government action, but I think even if governments stay out of it, individual choice will be enough to get us there. There won't be a complete stack for every niche combination of views; instead, the major ideologies will each have their own stack. People who don't agree 100% with any major ideology (which is most people) will have to put up with some amount of propaganda/censorship they don't agree with.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-10-21T15:08:21.656Z · LW(p) · GW(p)

One way in which this paper (or the things policymakers and CEOs might do if they read it & like it) might be net-negative:

Maybe by default AIs will mostly be trained to say whatever maximizes engagement/clicks/etc., and so they'll say all sorts of stuff and people will quickly learn that a lot of it is bullshit and only fools will place their trust in AI. In the long run, AIs will learn to deceive us, or actually come to believe their own bullshit. But at least we won't trust them.

But if people listen to this paper they might build all sorts of prestigious Ministries of Truth that work hard to train AIs to be truthful, where "truthful" in practice means Sticks to the Party Line. And so the same thing happens -- AIs learn to deceive us (because there will be cases where the Party Line just isn't true, and obviously so) or else actually come to believe their own bullshit (which would arguably be worse? Hard to say.) But it happens faster, because Ministries of Truth are accelerating the process. Also, and more importantly, more humans will trust the AIs more, because they'll be saying all the right things and they'll be certified by the right Ministries.

(Crossposted from EA Forum [EA · GW])