Simplify EA Pitches to "Holy Shit, X-Risk"

post by Neel Nanda (neel-nanda-1) · 2022-02-11T01:59:18.086Z · LW · GW · 22 comments

This is a link post for https://www.neelnanda.io/45-x-risk

Contents

- Introduction
- My Version of the Minimum Viable Case
- Motivation
- AI - ‘AI has a >=1% chance of x-risk within my lifetime’
- Bio - ‘Biorisk has a >=0.1% chance of x-risk within my lifetime’
- Caveats

TL;DR: If you believe the key claims of "there is a >=1% chance of AI causing x-risk and >=0.1% chance of bio causing x-risk in my lifetime", this is enough to justify the core action-relevant points of EA. This clearly matters under most reasonable moral views, and the common discussion of longtermism, future generations and other details of moral philosophy in intro materials is an unnecessary distraction.

Thanks to Jemima Jones for accountability and encouragement. Partially inspired by Holden Karnofsky’s excellent Most Important Century series.

Disclaimer: I recently started working for Anthropic, but this post entirely represents my opinions and not those of my employer.

Introduction

I work full-time on AI Safety, with the main goal of reducing x-risk from AI. I think my work is really important, and expect this to represent the vast majority of my lifetime impact. I am also highly skeptical of total utilitarianism, vaguely sympathetic to person-affecting views, prioritise currently alive people somewhat above near future people and significantly above distant future people, and do not really identify as a longtermist. Despite these major disagreements with some common moral views in EA, which are often invoked to justify key longtermist conclusions, I think there are basically no important implications for my actions.

Many people in EA really enjoy philosophical discussions and debates. This makes a lot of sense! What else would you expect from a movement founded by moral philosophy academics? I’ve enjoyed some of these discussions myself. But I often see important and controversial beliefs in moral philosophy thrown around in introductory EA material (introductory pitches and intro fellowships especially), like strong longtermism, the astronomical waste argument, valuing future people equally to currently existing people, etc. And I think this is unnecessary and should be done less often, and makes these introductions significantly less effective.

I think two sufficient claims for most key EA conclusions are “AI has a >=1% chance of causing human extinction within my lifetime” and “biorisk has a >=0.1% chance of causing human extinction within my lifetime”. I believe both of these claims, and think that you need to justify at least one of them for most EA pitches to go through, and to try convincing someone to spend their career working on AI or bio. These are really weird claims. The world is clearly not a place where most smart people believe these! If you are new to EA ideas and hear an idea like this, with implications that could transform your life path, it is right and correct to be skeptical. And when you’re making a complex and weird argument, it is really important to distill your case down to the minimum possible series of claims - each additional point is a new point of inferential distance, and a new point where you could lose people.

My ideal version of an EA intro fellowship, or an EA pitch (a >=10 minute conversation with an interested and engaged partner), is to introduce these claims and a minimum viable case for them, along with some surrounding key insights of EA and the mindset of doing good, and then dig into them and the points where the other person doesn’t agree or feels confused/skeptical. I’d be excited to see someone make a fellowship like this!

My Version of the Minimum Viable Case

The following is a rough outline of how I’d make the minimum viable case to someone smart and engaged but new to EA - this is intended to give inspiration and intuitions, and is something I’d give to open a conversation/Q&A, but is not intended to be an airtight case on its own!

Motivation

Here are some of my favourite examples of major ways the world was improved:

- Norman Borlaug’s Green Revolution - One plant scientist’s study of breeding high-yield dwarf wheat, which changed the world, converted India and Pakistan from grain importers to grain exporters, and likely saved over 250 million lives

- The eradication of smallpox - An incredibly ambitious and unprecedented feat of global coordination and competent public health efforts, which eradicated a disease that has killed over 500 million people in human history

- Stanislav Petrov choosing not to start a nuclear war when he saw the Soviet early warning system (falsely) reporting a US attack

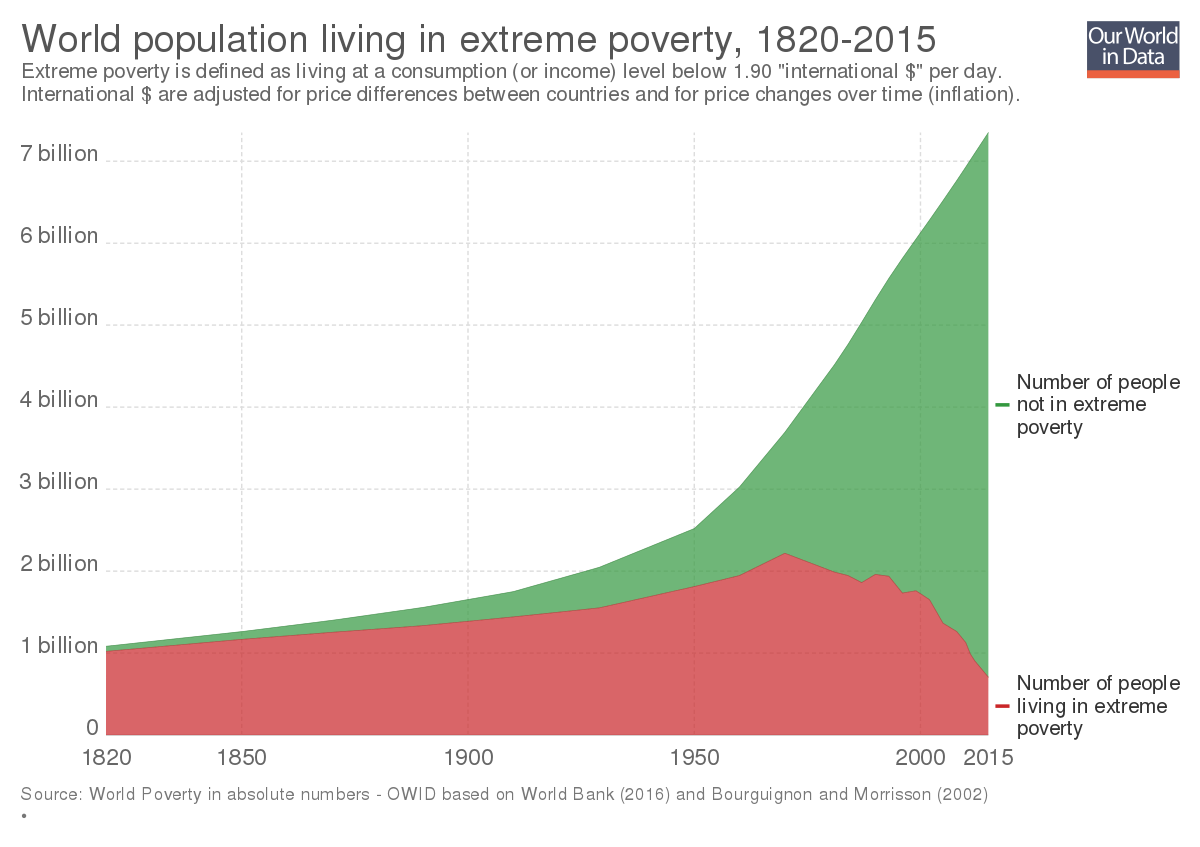

- The industrial and scientific revolutions of the last few hundred years, which are responsible for this incredible graph.

When I look at these and other examples, a few lessons become clear if I want to be someone who can achieve massive amounts of good:

- Be willing to be ambitious

- Be willing to believe and do weird things. If I can find an important idea that most people don’t believe, and can commit and take the idea seriously, I can achieve a lot.

- If it’s obvious, common knowledge, someone else has likely already done it!

- Though, on the flipside, most weird ideas are wrong - don’t open your mind so much that your brains fall out.

- Look for high-leverage!

- The world is big and inter-connected. If you want to have a massive impact, it needs to be leveraged with something powerful - an idea, a new technology, exponential growth, etc.

When I look at today’s world through this lens, I’m essentially searching for things that could become a really big deal. Most things that have been really big, world-changing deals in the past have been some kind of major emerging technology, unlocking new capabilities and new risks. Agriculture, computers, nuclear weapons, fossil fuels, electricity, etc. And when I look for technologies emerging now, still in their infancy but with a lot of potential, AI and synthetic biology stand well above the rest.

Note that these arguments work about as well for focusing on highly leveraged positive outcomes or negative outcomes. I think that, in fact, given my knowledge of AI and bio, there are plausible negative outcomes, and that reducing the likelihood of these is tractable and more important than ensuring positive outcomes. But I’d be sympathetic to arguments to the contrary.

AI - ‘AI has a >=1% chance of x-risk within my lifetime’

The human brain is a natural example of a generally intelligent system. Evolution produced this, despite a bunch of major constraints like biological energy being super expensive, needing to fit through birth canals, using an extremely inefficient optimisation algorithm, and intelligence not obviously increasing reproductive fitness. While evolution had the major advantage of four billion years to work with, it seems highly plausible to me that humanity can do better. And, further, there’s no reason that human intelligence should be a limit on the capabilities of a digital intelligence.

On the outside view, this is incredibly important. We’re contemplating the creation of a second intelligent species! That seems like one of the most important parts of the trajectory of human civilisation - on par with the dawn of humanity, the invention of agriculture and the Industrial Revolution. And it seems crucial to ensure this goes well, especially if these systems end up much smarter than us. It seems plausible that the default fate of a less intelligent species is that of gorillas - humanity doesn’t really bear gorillas active malice, but they essentially only survive because we want them to.

Further, there are specific reasons to think that this could be really scary! AI systems mostly look like optimisation processes, which can find creative and unexpected ways to achieve their objectives. And specifying the right objective is a notoriously hard problem. And there are good reasons to believe that such a system might have an instrumental incentive to seek power and compete with humanity, especially if it has the following three properties:

- Advanced capabilities - it has superhuman capabilities on at least some kinds of important and difficult tasks

- Agentic planning - it is capable of making and executing plans to achieve objectives, based on models of the world

- Strategic awareness - it can competently reason about the effects of gaining and maintaining power over humans and the real world

See Joseph Carlsmith’s excellent report for a much more rigorous analysis of this question. I think it is by no means obvious that this argument holds, but I find it sufficiently plausible that we create a superhuman intelligence which is incentivised to seek power and successfully executes on this in a manner that causes human extinction that I’m happy to put at least a 1% chance on AI causing human extinction (my fair value is probably 10-20%, with high uncertainty).

Finally, there’s the question of timelines. Personally, I think there’s a good chance that something like deep learning language models scale to human-level intelligence and beyond (and this is a key motivation of my current research). I find the bio-anchors and scaling-based methods of estimating timelines pretty convincing as an upper bound on timelines that’s well within my lifetime. But even if deep learning is a fad, the field of AI has existed for less than 70 years! And it takes 10-30 years to go through a paradigm. It seems highly plausible that we produce human-level AI with some other paradigm within my lifetime (though reducing risk from an unknown future paradigm of AI does seem much less tractable).

Bio - ‘Biorisk has a >=0.1% chance of x-risk within my lifetime’

I hope this claim seems a lot more reasonable now than it did in 2019! While COVID was nowhere near an x-risk, it has clearly been one of the worst global disasters I’ve ever lived through, and the world was highly unprepared and bungled a lot of aspects of the response. 15 million people have died, many more were hospitalised, millions of people have long-term debilitating conditions, and almost everyone’s lives were highly disrupted for two years.

And things could have been much, much worse! Just looking at natural pandemics, imagine COVID with the lethality of smallpox (30%). Or COVID with the age profile of the Spanish Flu (most lethal in young, healthy adults, because it turns the body’s immune system against itself).

And things get much scarier when we consider synthetic biology. We live in a world where multiple labs work on gain of function research, doing crazy things like trying to breed Avian Flu (30% mortality) that’s human-to-human transmissible, and not all DNA synthesis companies will stop you trying to print smallpox viruses. Regardless of whether COVID was actually a lab leak, it seems at least plausible that it could have come from gain-of-function research on coronaviruses. And these are comparatively low-tech methods. Progress in synthetic biology happens fast!

It is highly plausible to me that, whether by accident, terrorism, or an act of war, someone produces an engineered pathogen capable of creating a pandemic far worse than anything natural. It’s unclear that this could actually cause human extinction, but it’s plausible that something scary enough and well-deployed enough with a long incubation period could. And it’s plausible to me that something which kills 99% of people (a much lower bar) could lead to human extinction. Biorisk is not my field and I’ve thought about this much less than AI, but 0.1% within my lifetime seems like a reasonable lower bound given these arguments.

Caveats

- These are really weird beliefs! It is correct and healthy for people to be skeptical when they first encounter them.

- Though, in my opinion, the arguments are strong enough and implications important enough that it’s unreasonable to dismiss them without at least a few hours of carefully reading through arguments and trying to figure out what you believe and why.

- Further, if you disagree with them, then the moral claims I’m dismissing around strong longtermism etc may be much more important. But you should disagree with the vast majority of how the EA movement is allocating resources!

- There’s a much stronger case for something that kills almost all people, or which causes the not-necessarily-permanent collapse of civilisation, than something which kills literally everyone. This is a really high bar! Human extinction means killing everyone, including Australian farmers, people in nuclear submarines and bunkers, and people in space.

- If you’re a longtermist then this distinction matters a lot, but I personally don’t care as much. The collapse of human civilisation seems super bad to me! And averting this seems like a worthy goal for my life.

- I have an easier time seeing how AI causes extinction than bio

- There’s an implicit claim in here that it’s reasonable to invest a large amount of your resources into averting risks of extremely bad outcomes, even though we may turn out to live in a world where all that effort was unnecessary. I think this is correct to care about, but that this is a reasonable thing to disagree with!

- This is related to the idea that we should maximise expected utility, but IMO importantly weaker. Even if you disagree with the formalisation of maximising expected value, you likely still agree that it’s extremely important to ensure that bridges and planes have failure rates far below 0.1%.

- But also, we’re dealing with probabilities that are small but not infinitesimal. This saves us from objections like Pascal’s Mugging - a 1% chance of AI x-risk is not a Pascal’s Mugging.

- It is also reasonable to buy these arguments intellectually, but not to feel emotionally able to motivate yourself to spend your life reducing tail risks. This stuff is hard, and can be depressing and emotionally heavy!

- Personally, I find it easier to get my motivation from other sources, like intellectual satisfaction and social proof. A big reason I like spending time around EAs is that this makes AI Safety work feel much more viscerally motivating to me, and high-status!

- It’s reasonable to agree with these arguments, but consider something else an even bigger problem! While I’d personally disagree, any of the following seem like justifiable positions: climate change, progress studies, global poverty, factory farming.

- A bunch of people do identify as EAs, but would disagree with these claims and with prioritising AI and bio x-risk. To those people, sorry! I’m aiming this post at the significant parts of the EA movement (many EA community builders, CEA, 80K, OpenPhil, etc) who seem to put major resources into AI and bio x-risk reduction

- This argument has the flaw of potentially conveying the beliefs of ‘reduce AI and bio x-risk’ without conveying the underlying generators of cause neutrality and carefully searching for the best ways of doing good. Plausibly, similar arguments could have been made in early EA to make a “let’s fight global poverty” movement that never embraced longtermism. Maybe a movement based around the narrative I present would miss the next Cause X and fail to pivot when it should, or otherwise have poor epistemic health.

- I think this is a valid concern! But I also think that the arguments for “holy shit, AI and bio risk seem like really big deals that the world is majorly missing the ball on” are pretty reasonable, and I’m happy to make this trade-off. “Go work on reducing AI and bio x-risk” is a message I would love to signal boost!

- But I have been deliberate to emphasise that I am talking about intro materials here. My ideal pipeline into the EA movement would still emphasise good epistemics, cause prioritisation and cause neutrality, thinking for yourself, etc. But I would put front and center the belief that AI and bio x-risk are substantial and that reducing them is the biggest priority, and encourage people to think hard and form their own beliefs

- An alternate framing of the AI case is “Holy shit, AI seems really important” and thus a key priority for altruists is to ensure that it goes well.

- This seems plausible to me - it seems like the downside of AI going wrong could be human extinction, but that the upside of AI going really well could be a vastly, vastly better future for humanity.

- There are also a lot of ways this could lead to bad outcomes beyond the standard alignment failure example! Maybe coordination just becomes much harder in a fast-paced world of AI and this leads to war, or we pollute ourselves to death. Maybe it massively accelerates technological progress and we discover a technology more dangerous than nukes and with a worse Nash equilibrium and don’t solve the coordination problem in time.

- I find it harder to imagine these alternate scenarios literally leading to extinction, but they might be more plausible and still super bad!

- There are some alternate pretty strong arguments for this framing. One I find very compelling is drawing an analogy between exponential growth in the compute used to train ML models, and the exponential growth in the number of transistors per chip of Moore’s Law.

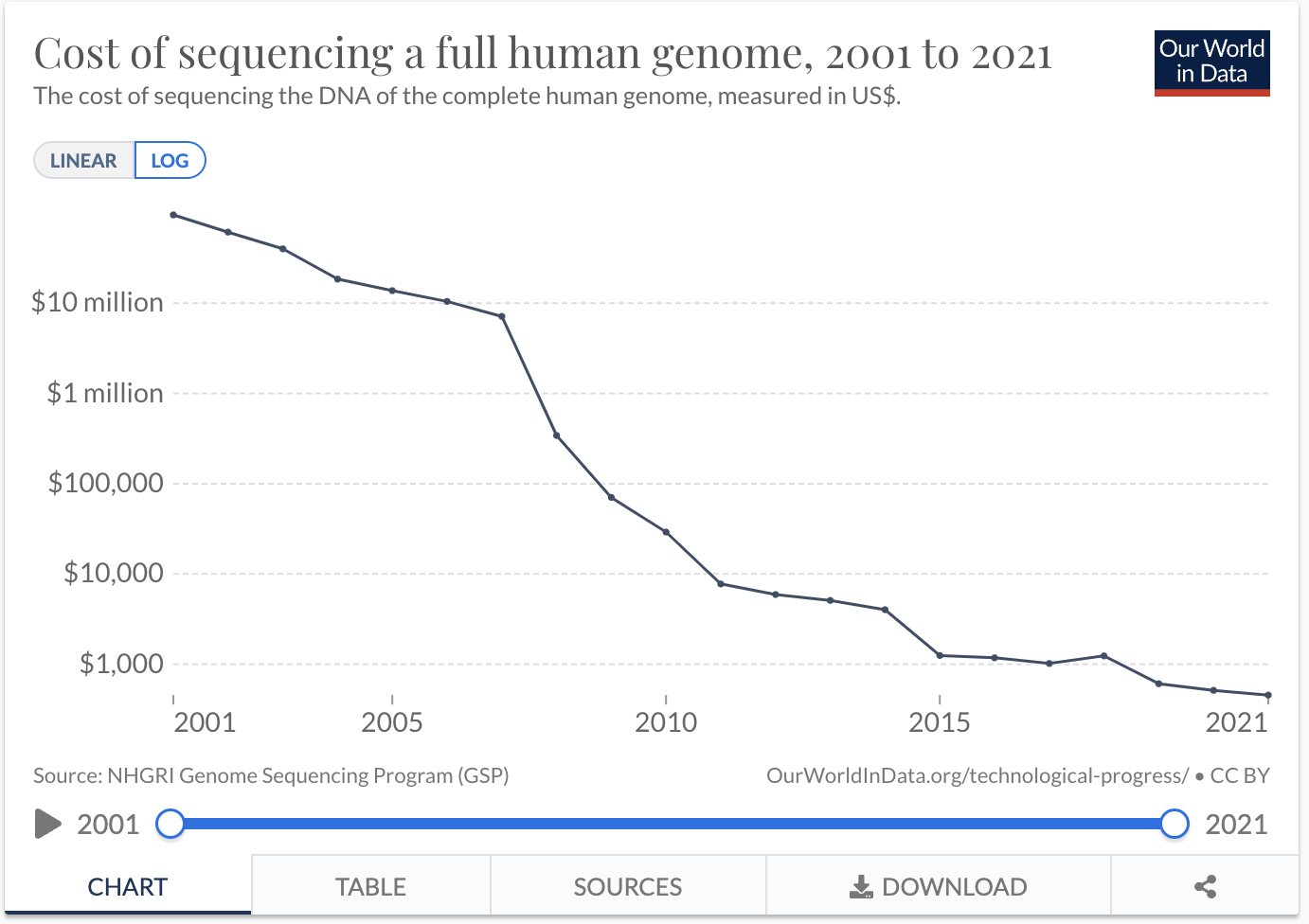

- Expanding upon this, historically most AI progress has been driven by increasing amounts of computing power and simple algorithms that leverage them. And the amount of compute used in AI systems is growing exponentially (doubling every 3.4 months - compared to Moore’s Law’s 2 years!). Though the rate of doubling is likely to slow down - it’s much easier to increase the amount of money spent on compute when you’re spending less than the millions spent on payroll for top AI researchers than when you reach the order of magnitude of figures like Google’s $26bn annual R&D - it also seems highly unlikely to stop completely.

- Under this framing, working on AI now is analogous to working with computers in the 90s. Though it may have been hard to predict exactly how computers would change the world, there is no question that they did, and it seems likely that an ambitious altruist could have gained significant influence over how this went and nudged it to be better.

- I also find this framing pretty motivating - even if specific stories I’m concerned by around eg inner alignment are wrong, I can still be pretty confident that something important is happening in AI, and my research likely puts me in a good place to influence this for the better.

- I work on interpretability research, and these kinds of robustness arguments are one of the reasons I find this particularly motivating!
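The doubling-time comparison in the caveats above (training compute doubling every 3.4 months versus Moore’s Law’s 2 years) can be made concrete with a small sketch. It assumes clean exponential growth at the quoted rates, which the post itself expects to slow down; the function name `growth_factor` and the 2-year horizon are illustrative choices, not something from the original post.

```python
def growth_factor(months_elapsed: float, doubling_time_months: float) -> float:
    """Multiplicative growth after `months_elapsed`, assuming steady doubling."""
    return 2 ** (months_elapsed / doubling_time_months)


# Illustrative horizon: one Moore's Law doubling period (2 years).
horizon_months = 24

# Doubling times quoted in the post; treating them as constant is an assumption.
ai_compute_growth = growth_factor(horizon_months, doubling_time_months=3.4)
moores_law_growth = growth_factor(horizon_months, doubling_time_months=24)

print(f"AI training compute over 2 years: ~{ai_compute_growth:.0f}x")
print(f"Moore's Law over 2 years: {moores_law_growth:.0f}x")
```

Under these assumptions, two years of 3.4-month doublings is roughly seven doublings, so compute grows by a bit over two orders of magnitude in the time Moore’s Law delivers a single doubling.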

22 comments

Comments sorted by top scores.

comment by transhumanist_atom_understander · 2024-05-10T22:53:01.643Z · LW(p) · GW(p)

"Norman Borlaug’s Green Revolution" seems to give the credit for turning India into a grain exporter solely to hybrid wheat, when rice is just as important to India as wheat.

Yuan Longping, the "father of hybrid rice", is a household name in China, but in English-language sources I only seem to hear about Norman Borlaug, who people call "the father of the Green Revolution" rather than "the father of hybrid wheat", which seems more appropriate. It seems that the way people tell the story of the Green Revolution has as much to do with national pride as the facts.

I don't think this affects your point too much, although it may affect your estimate of how much change to the world you can expect from one person's individual choices. It's not just that if Norman Borlaug had died in a car accident hybrid wheat may still have been developed, but also that if nobody developed hybrid wheat, there would still have been a Green Revolution in India's rice farming.

comment by MrFailSauce (patrick-cruce) · 2022-02-11T17:44:28.499Z · LW(p) · GW(p)

I think I'm convinced that we can have human-capable AI (or greater) in the next century (or sooner). I'm unconvinced on a few aspects of AI alignment. Maybe you could help clarify your thinking.

(1) I don't see how a human-capable or a bit smarter than human-capable AI (say 50% smarter) will be a serious threat. Broadly humans are smart because of group and social behavior. So a 1.5 Human AI might be roughly as smart as two humans? Doesn't seem too concerning.

(2) I don't see how a bit smarter than humans scales to superhuman levels of intelligence.

(a) Most things have diminishing returns, and I don't see how one of the most elusive faculties would be an exception. (GPT-3 is a bit better than GPT-2 but GPT-3 requires 10x the resources).

(b) Requiring 2x resources for +1 intelligence is consistent with my understanding that most of the toughest problems are NP or harder.

(3) I don't see how superhuman intelligence by itself is necessarily a threat. Most problems cannot be solved by pure reasoning alone. For example, a human with 150 IQ isn't going to be much better at predicting the weather than a person with 100 IQ. Weather prediction (and most future prediction) is both knowledge (measurement) and intelligence (modeling) intensive. Moreover it is chaotic. A superhuman intelligence might just be able to tell us that it is 70.234% likely to rain tomorrow whereas a merely human intelligence will only be able to tell us it is 70% likely. I feel like most people concerned about AI alignment assume that more intelligence would make it possible to drive the probability of rain to 99% or 1%.

Replies from: gwern, donald-hobson, None

↑ comment by gwern · 2022-02-12T03:29:24.260Z · LW(p) · GW(p)

> For example, a human with 150 IQ isn't going to be much better at predicting the weather than a person with 100 IQ.

Are we equivocating on 'much better' here? Because there are considerable individual differences in weather forecasting performances (it's one of the more common topics to study in the forecasting literature), and while off-hand I don't have any IQ cites, IQ shows up all the time in other forecasting topics as a major predictor of performance (as it is of course in general) and so I would be surprised if weather was much different.

Replies from: patrick-cruce

↑ comment by MrFailSauce (patrick-cruce) · 2022-02-12T16:34:05.968Z · LW(p) · GW(p)

> Are we equivocating on 'much better' here?

Not equivocating but if intelligence is hard to scale and slightly better is not a threat, then there is no reason to be concerned about AI risk. (maybe a 1% x-risk suggested by OP is in fact a 1e-9 x-risk)

> there are considerable individual differences in weather forecasting performances (it's one of the more common topics to study in the forecasting literature),

I'd be interested in seeing any papers on individual differences in weather forecasting performance (even if IQ is not mentioned). My understanding was that it has been all NWP for the last half-century or so.

> IQ shows up all the time in other forecasting topics as a major predictor

I'd be curious to see this too. My understanding was that, for example, stock prediction was not only uncorrelated with IQ, but that above average performance was primarily selection bias (i.e. above average forecasters for a given time period tend to regress toward the mean over subsequent time periods).

Replies from: gwern

↑ comment by gwern · 2022-02-13T01:25:05.399Z · LW(p) · GW(p)

> (b) Requiring 2x resources for +1 intelligence is consistent with my understanding that most of the toughest problems are NP or harder.

> My understanding was that it has been all NWP for the last half-century or so.

There's still a lot of human input. That was one of the criticisms of, IIRC, DeepMind's recent foray into DL weather modeling - "oh, but the humans still do better in the complex cells where the storms and other rare events happen, and those are what matter in practice".

> My understanding was that, for example, stock prediction was not only uncorrelated with IQ

Where did you get that, Taleb? Untrue. Investing performance is correlated with IQ: better market timing, less inefficient trading, less behavioral bias, longer in the market, more accurate forecasts about inflation and returns, etc. Since you bring up selection bias, Grinblatt et al 2012 studies the entire Finnish population with a population registry approach and finds that.

Replies from: patrick-cruce, patrick-cruce

↑ comment by MrFailSauce (patrick-cruce) · 2022-02-13T18:41:07.852Z · LW(p) · GW(p)

I spent some time reading the Grinblatt paper. Thanks again for the link. I stand corrected on IQ being uncorrelated with stock prediction. One part did catch my eye.

> Our findings relate to three strands of the literature. First, the IQ and trading behavior analysis builds on mounting evidence that individual investors exhibit wealth-reducing behavioral biases. Research, exemplified by Barber and Odean (2000, 2001, 2002), Grinblatt and Keloharju (2001), Rashes (2001), Campbell (2006), and Calvet, Campbell, and Sodini (2007, 2009a, 2009b), shows that these investors grossly under-diversify, trade too much, enter wrong ticker symbols, are subject to the disposition effect, and buy index funds with exorbitant expense ratios. Behavioral biases like these may partly explain why so many individual investors lose when trading in the stock market (as suggested in Odean (1999), Barber, Lee, Liu, and Odean (2009); and, for Finland, Grinblatt and Keloharju (2000)). IQ is a fundamental attribute that seems likely to correlate with wealth-inhibiting behaviors.

I went through some of the references; this one seemed a particularly cogent summary.

The takeaway seems to be that high-IQ investors exceed the performance of low-IQ investors, but institutional investors exceed the performance of individual investors. Maybe it is just institutions selecting the smartest, but another coherent view is that the joint intelligence of the group ("institution") exceeds the intelligence of high-IQ individuals. We might need more data to figure it out.

↑ comment by MrFailSauce (patrick-cruce) · 2022-02-13T16:48:14.230Z · LW(p) · GW(p)

> Since you bring up selection bias, Grinblatt et al 2012 studies the entire Finnish population with a population registry approach and finds that.

Thanks for the citation. That is the kind of information I was hoping for. Do you think that slightly better than human intelligence is sufficient to present an x-risk, or do you think it needs some sort of takeoff or acceleration to present an x-risk?

I think I can probably explain the "so" in my response to Donald below.

Replies from: gwern

↑ comment by gwern · 2022-02-13T18:40:03.385Z · LW(p) · GW(p)

> Do you think that slightly better than human intelligence is sufficient to present an x-risk, or do you think it needs some sort of takeoff or acceleration to present an x-risk?

I think less than human intelligence is sufficient for an x-risk because that is probably what is sufficient for a takeoff.

(GPT-3 needed like 1k discrete GPUs to train. Nvidia alone ships something on the order of >73,000k discrete GPUs... per year. How fast exactly do you think returns diminish and how confident are you that there are precisely zero capability spikes anywhere in the human and superhuman regimes? How intelligent does an agent need to be to send a HTTP request to the URL /ldap://myfirstrootkit.com on a few million domains?)

↑ comment by MrFailSauce (patrick-cruce) · 2022-02-13T19:29:49.554Z · LW(p) · GW(p)

> I think less than human intelligence is sufficient for an x-risk because that is probably what is sufficient for a takeoff.

If less than human intelligence is sufficient, wouldn't humans have already done it? (or are you saying we're doing it right now?)

> How intelligent does an agent need to be to send a HTTP request to the URL /ldap://myfirstrootkit.com on a few million domains?)

A human could do this or write a bot to do this (and they've tried). But they'd also be detected, as would an AI. I don't see this as an x-risk, so much as a manageable problem.

> (GPT-3 needed like 1k discrete GPUs to train. Nvidia alone ships something on the order of >73,000k discrete GPUs... per year. How fast exactly do you think returns diminish

I suspect they'll diminish exponentially, because threat requires solving problems of exponential hardness. To me "1% of annual Nvidia GPUs", or "0.1% annual GPU production" sounds like we're at roughly N-3 of problem size we could solve by using 100% of annual GPU production.

> how confident are you that there are precisely zero capability spikes anywhere in the human and superhuman regimes?

I'm not confident in that.

Replies from: gwern

↑ comment by gwern · 2022-02-14T01:19:54.306Z · LW(p) · GW(p)

> If less than human intelligence is sufficient, wouldn't humans have already done it?

No. Humans are inherently incapable of countless things that software is capable of. To give an earlier example, humans can do things that evolution never could. And just as evolution can only accomplish things like 'going to the moon' by making agents that operate on the next level of capabilities, humans cannot do things like copy themselves billions of times or directly fuse their minds or be immortal or wave a hand to increase their brain size 100x. All of these are impossible. Not hard, not incredibly difficult - impossible. There is no human who has, or ever will be able to do those. The only way to remove these extremely narrow, rigid binding constraints is by making tools that remove the restrictions, i.e. are software. Once the restrictions are removed so the impossible becomes possible, even a stupid agent with no ceiling will eventually beat a smart agent rigidly bound by immutable biological limitations.

(Incidentally, did you know that AlphaGo before the Lee Sedol tournament was going to lose? But Google threw a whole bunch of early TPUs at the project about a month before to try to rescue it and AlphaGo had no ceiling, while Lee Sedol was human, all too human, so, he got crushed.)

↑ comment by Donald Hobson (donald-hobson) · 2022-02-12T02:37:08.228Z · LW(p) · GW(p)

> (1) I don't see how a human-capable or a bit smarter than human-capable AI (say 50% smarter) will be a serious threat. Broadly humans are smart because of group and social behavior. So a 1.5 Human AI might be roughly as smart as two humans? Doesn't seem too concerning.

Humans have like 4x the brain volume of chimps, but you can't put 4 chimps together to get 1 human. And you can't put 100 average humans together to get 1 Einstein, despite Einstein having a brain of about the same size and genetics as any other human. This doesn't suggest an obvious model, but it does suggest your additive intelligence model is wrong.

> (2) I don't see how a bit smarter than humans scales to superhuman levels of intelligence.

Either of the following, or something in between:

- Once you have the right algorithm, it really is as simple as increasing some parameter or neuron count.

- The AI is smarter than a smart human. Smart humans can do AI research a bit. The AI does whatever a human doing AI research would do, but quicker and better.

> (a) Most things have diminishing returns, and I don't see how one of the most elusive faculties would be an exception. (GPT-3 is a bit better than GPT-2 but GPT-3 requires 10x the resources).

I think this is somewhat to do with the underlying algorithm being not very good, in a way that doesn't apply to all algorithms. It all depends on the scales you use anyway. It takes 10x as much energy to make sound 10 dB louder, because decibels are a logarithmic scale.
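As a minimal sketch of the decibel point: the standard definition of a power ratio in decibels is 10·log10(P/P0), so equal steps in dB correspond to equal multiplicative steps in power. The helper name `decibel_gain` is hypothetical, used here only for illustration.

```python
import math


def decibel_gain(power_ratio: float) -> float:
    """Gain in decibels for a given ratio of output power to reference power."""
    return 10 * math.log10(power_ratio)


# Each 10x increase in power adds a constant 10 dB on the logarithmic scale.
for ratio in (10, 100, 1000):
    print(f"{ratio}x power -> +{decibel_gain(ratio):.0f} dB")
```

Whether "returns" look diminishing therefore depends on whether you read the linear quantity (power) or the logarithmic one (dB).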

I don't see how superhuman intelligence by itself is necessarily a threat. Most problems cannot be solved by pure reasoning alone.

Of course most problems can't be solved by reason alone. In a world with the internet, there is a lot of information available. The limits on how much useful information and understanding you can extract from your data are really high. So yes you need some data. This doesn't mean lack of data is going to be a serious bottleneck, any more than lack of silicon atoms is the bottleneck on making chips. The world is awash with data. Cleaned up, sorted out data is a little rarer. Taking a large amount of data, some of tangential relevance, some with mistakes in, some fraudulent, and figuring out what is going on, that takes intelligence.

For example, a human with 150 IQ isn't going to be much better at predicting the weather than a person with 100 IQ.

What is happening here? Are both people just looking at a picture and guessing numbers, or can the IQ 150 person program a simulation while the IQ 100 person is looking at the Navier-Stokes equations trying (and failing) to figure out what they mean?

A lot of science seems to be done by a handful of top scientists. The likes of Einstein wouldn't be a thing if a higher intelligence didn't make you much better at discovering some things.

I think weather prediction isn't a good example of intelligence in general. Take programming. Entirely discrete. No chaos. Smart humans do lots better than dumb ones. Small differences in intelligence make the difference between a loglinear sort and a quadratic one.

If you reduce the IQ of your scientists by 20 points, that doesn't make your nukes 20% less powerful; it means you don't get nukes.

Not all parts of the world are chaotic in the chaos theory sense. There are deep patterns and things that can be predicted. Humans have a huge practical advantage over all other animals. Real intelligence often pulls powerful and incomprehensible tricks out of nowhere. The "how the ??? did the AI manage to do that with only those tools?" Not just getting really good answers on standardized tests.

The probability it rains tomorrow might (or might not) be a special case where we are doing the best we can with the data we have. Whether a coin will land heads is another question where there may be no room for an AI to improve. Designing an mRNA strand to reverse ageing is almost surely a question that leaves the AI lots of room to show off its intelligence.

Replies from: patrick-cruce↑ comment by MrFailSauce (patrick-cruce) · 2022-02-12T17:14:22.440Z · LW(p) · GW(p)

Hmmmmm there is a lot here let me see if I can narrow down on some key points.

Once you have the right algorithm, it really is as simple as increasing some parameter or neuron count.

There are some problems that do not scale well (or at all). For example, doubling the computational power applied to solving the knapsack problem will let you solve a problem size that is one element bigger. Why should we presume that intelligence scales like an O(n) problem and not an O(2^n) problem?
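To make the "one element bigger" claim concrete, here is a minimal sketch that assumes the solver is a naive exhaustive search over all 2^n subsets. That assumption matters: dynamic programming solves knapsack instances with small integer weights far faster, so this illustrates the worst-case intuition rather than the best known method. The function names are made up for illustration.

```python
def brute_force_knapsack(weights, values, capacity):
    """Exhaustive 0/1 knapsack: return (best_value, subsets_examined)."""
    n = len(weights)
    best, examined = 0, 0
    for mask in range(1 << n):  # every subset of the n items
        examined += 1
        chosen = [i for i in range(n) if (mask >> i) & 1]
        if sum(weights[i] for i in chosen) <= capacity:
            best = max(best, sum(values[i] for i in chosen))
    return best, examined


def max_items_within_budget(budget):
    """Largest n such that all 2**n subsets fit in `budget` subset checks."""
    return budget.bit_length() - 1  # floor(log2(budget)) for budget >= 1


if __name__ == "__main__":
    print(brute_force_knapsack([1, 2, 3], [6, 10, 12], 5))  # (22, 8)
    # Doubling the budget buys exactly one more item under exhaustive search.
    print(max_items_within_budget(1_000_000))  # 19
    print(max_items_within_budget(2_000_000))  # 20
```

Under this assumption, each doubling of the compute budget raises the solvable instance size by one item.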

What is happening here? Are both people just looking at a picture and guessing numbers, or can the IQ 150 person program a simulation while the IQ 100 person is looking at the Navier-Stokes equations trying (and failing) to figure out what they mean?

I picked weather prediction as an exemplar problem because

(1) NWP programs are the product of not just single scientists but the efforts of thousands. (the intelligences of many people combined into a product that is far greater than any could produce individually)

(2) The problem is fairly well understood and observation limited. If we could simultaneously measure the properties of 1 m^3 voxels of atmosphere our NWP would be dramatically improved. But our capabilities are closer to one-per-day (non-simultaneous) measurements of spots roughly 40 km in diameter. Access to the internet will not improve this. The measurements don't exist. Other chaotic systems like ensembles of humans or stocks may very well have this property.

Smart humans do lots better than dumb ones. Small differences in intelligence make the difference between a loglinear sort and a quadratic one.

But hardly any programs are the result of individual efforts. They're the product of thousands. If a quadratic sort slips through it gets caught by a profiler and someone else fixes it. (And everyone uses compilers, interpreters, libraries, etc...)

A lot of science seems to be done by a handful of top scientists. The likes of Einstein wouldn't be a thing if a higher intelligence didn't make you much better at discovering some things.

This is not my view of science. I tend to understand someone like Einstein as an inspirational story we tell when the history of physics in the early 20th century is in fact a tale of dozens, if not hundreds. But I do think this is getting towards the crux.

Replies from: donald-hobson↑ comment by Donald Hobson (donald-hobson) · 2022-02-12T21:44:04.053Z · LW(p) · GW(p)

There are some problems that do not scale well (or at all). For example, doubling the computational power applied to solving the knapsack problem will let you solve a problem size that is one element bigger. Why should we presume that intelligence scales like an O(n) problem and not an O(2^n) problem?

We don't know that, P vs NP is an unproved conjecture. Most real world problems are not giant knapsack problems. And there are algorithms that quickly produce answers that are close to optimal. Actually, most of the real use of intelligence is not a complexity theory problem at all. "Is inventing transistors a O(n) or an O(2^n) problem?" Meaningless. In practice, modest improvements in intelligence seem to produce largish differences in results.

Lots of big human achievements are built by many humans. Some things are made by one exceptionally smart human. Inventing general relativity, or proving Fermat's Last Theorem, are the sort of problem with a smaller number of deep abstract general parts. Solving 10^12 CAPTCHAs is exactly the sort of task that can be split among many people easily. This sort of problem can't be solved by a human genius (unless they make an AI, but that doesn't count). There are just too many separate small problems.

I think some tasks can easily be split into many small parts, some can't. Tasks that can be split, we get a team of many humans to do. Tasks that can't, if our smartest geniuses can't solve them, they go unsolved.

Suppose an AI as smart as the average human. You can of course put in 10x the compute, and get an AI as smart as 10 average humans working together. I think you can also put in 1.2x the compute, and get an AI as smart as Einstein. Or put in 12x the compute and get an AI as smart as 12 Einsteins working together. But it's generally better to put a lot of your compute into one big pile and get insights far more deep and profound than any human could ever make. Lots of distinct human-mind-sized blobs talking is an inefficient way to use your compute; any well designed AI can do better.

This is not my view of science. I tend to understand someone like Einstein as an inspirational story we tell when the history of physics in the early 20th century is in fact a tale of dozens, if not hundreds. But I do think this is getting towards the crux.

Sure, maybe as many as hundreds. Not millions. Now the differences between human brains are fairly small. This indicates that 2 humans aren't as good as 1 human with a brain that works slightly better.

But hardly any programs are the result of individual efforts. They're the product of thousands. If a quadratic sort slips through it gets caught by a profiler and someone else fixes it. (And everyone uses compilers, interpreters, libraries, etc...)

Isn't that just relying on the intelligence of everyone else on the team? "Intelligence isn't important, the project would be going just as well if Alex was as dumb as a bag of bricks. (If Alex was that dumb, everyone else would do his work.)" Sure, lots of people work together to make code. And if all those people were 10 IQ points smarter, the code would be a lot better. If they were 10 IQ points dumber, the code would be a lot worse. Sometimes one person being much dumber wouldn't make a difference, if all their work is being checked. Except that if they were dumb, someone would have to write new code that passes the checks. And if the checker is dumb, all the rubbish gets through.

The problem is fairly well understood and observation limited.

You picked a problem that was particularly well understood. The problems we are fundamentally confused about are the ones where better intelligence has the big wins. The problem of predicting a quantum coin toss is well understood, and totally observation limited. In other words, it's a rare special case where the problem has mathematical structure allowing humans to make near optimal use of available information. Most problems aren't like this.

Access to the internet will not improve this. The measurements don't exist. Other chaotic systems like ensembles of humans or stocks may very well have this property.

I think there is a lot of info about humans and stocks on the internet. And quite a lot of info about weather, like clouds in the background of someone's holiday pics. But current weather prediction doesn't use that data. It just uses the weather satellite data, because it isn't smart enough to make sense of all the social media data. I mean you could argue that most of the good data is from the weather satellite. That social media data doesn't help much even if you are smart enough to use it. If that is true, that would be a way that the weather problem differs from many other problems.

Suppose the AI is 2x as good at using data as the human. So if both human and AI had priors of 1:1 on a hypothesis, the human updates to 10:1, and the AI to 100:1. The human is 91% sure, and the AI is 99% sure. But if both start with priors of 1:1,000,000 then the human can update to 1:100 while the AI updates to 100:1. I.e. the human is 1% sure while the AI is 99% sure.

A small improvement in evidence processing ability can make the difference between narrowing 1,000,000 options down to 100, and narrowing them down to 1. Which makes a large difference in chance of correctness.
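The arithmetic above can be checked with a short sketch. It assumes one particular reading of "2x as good at using data": the AI extracts twice the log-odds from the same evidence, so its likelihood ratio is the square of the human's (10 vs 100 in the first case, 10^4 vs 10^8 in the second). The function names are illustrative only.

```python
def update(prior_for, prior_against, likelihood_ratio):
    """Multiply the odds in favour by the likelihood ratio of the evidence."""
    return prior_for * likelihood_ratio, prior_against


def odds_to_probability(odds_for, odds_against):
    """Convert odds of for:against into a probability."""
    return odds_for / (odds_for + odds_against)


if __name__ == "__main__":
    # Case 1: both start at 1:1.
    print(round(odds_to_probability(*update(1, 1, 10)), 3))   # 0.909 (human)
    print(round(odds_to_probability(*update(1, 1, 100)), 3))  # 0.99  (AI)

    # Case 2: both start at 1:1,000,000.
    print(round(odds_to_probability(*update(1, 10**6, 10**4)), 4))  # 0.0099 (human)
    print(round(odds_to_probability(*update(1, 10**6, 10**8)), 4))  # 0.9901 (AI)
```

On this reading the gap between human and AI is modest when the prior is even, and decisive when the hypothesis starts as one option in a million.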

Replies from: patrick-cruce↑ comment by MrFailSauce (patrick-cruce) · 2022-02-13T17:50:30.426Z · LW(p) · GW(p)

We don't know that, P vs NP is an unproved conjecture. Most real world problems are not giant knapsack problems. And there are algorithms that quickly produce answers that are close to optimal. Actually, most of the real use of intelligence is not a complexity theory problem at all. "Is inventing transistors a O(n) or an O(2^n) problem?"

P vs. NP is unproven. But I disagree that "most real world problems are not giant knapsack problems". The Cook-Levin theorem showed that many of the most interesting problems are reducible to NP-complete problems. I'm going to quote this paper by Scott Aaronson, but it is a great read and I hope you check out the whole thing. https://www.scottaaronson.com/papers/npcomplete.pdf

Even many computer scientists do not seem to appreciate how different the world would be if we could solve NP-complete problems efficiently. I have heard it said, with a straight face, that a proof of P = NP would be important because it would let airlines schedule their flights better, or shipping companies pack more boxes in their trucks! One person who did understand was Gödel. In his celebrated 1956 letter to von Neumann (see [69]), in which he first raised the P versus NP question, Gödel says that a linear or quadratic-time procedure for what we now call NP-complete problems would have “consequences of the greatest magnitude.” For such a procedure “would clearly indicate that, despite the unsolvability of the Entscheidungsproblem, the mental effort of the mathematician in the case of yes-or-no questions could be completely replaced by machines.”

But it would indicate even more. If such a procedure existed, then we could quickly find the smallest Boolean circuits that output (say) a table of historical stock market data, or the human genome, or the complete works of Shakespeare. It seems entirely conceivable that, by analyzing these circuits, we could make an easy fortune on Wall Street, or retrace evolution, or even generate Shakespeare’s 38th play. For broadly speaking, that which we can compress we can understand, and that which we can understand we can predict. Indeed, in a recent book [12], Eric Baum argues that much of what we call ‘insight’ or ‘intelligence’ simply means finding succinct representations for our sense data. On his view, the human mind is largely a bundle of hacks and heuristics for this succinct-representation problem, cobbled together over a billion years of evolution. So if we could solve the general case—if knowing something was tantamount to knowing the shortest efficient description of it—then we would be almost like gods. The NP Hardness Assumption is the belief that such power will be forever beyond our reach.

I take the NP-hardness assumption as foundational. That being the case, a lot of talk of AI x-risk sounds to me like saying that AI will be an NP oracle. (For example, the idea that a highly intelligent expert system designing tractors could somehow "get out of the box" and threaten humanity, would require a highly accurate predictive model that would almost certainly contain one or many NP-complete subproblems)

But current weather prediction doesn't use that data. It just uses the weather satellite data, because it isn't smart enough to make sense of all the social media data. I mean you could argue that most of the good data is from the weather satellite. That social media data doesn't help much even if you are smart enough to use it. If that is true, that would be a way that the weather problem differs from many other problems.

Yes I would argue that current weather prediction doesn't use social media data because cameras at optical wavelengths cannot sound the upper atmosphere. Physics means there is no free lunch from social media data.

I would argue that most real world problems are observationally and experimentally bound. The seminal paper on the photoelectric effect was a direct consequence of a series of experimental results from the 19th century. Relativity is the same story. It isn't like there were measurements of the speed of light or the ratio of frequency to energy of photons available in the 17th century just waiting for someone with sufficient intelligence to find them in the 17th century equivalent of social media. And no amount of data on European peasants (the 17th century equivalent of Facebook) would be a sufficient substitute. The right data makes all the difference.

A common AI risk problem like manipulating a programmer into escalating AI privileges is a less legible problem than examples from physics, but I have no reason to think that it won't also be observationally bound. Making an attempt to manipulate the programmer and being so accurate in the prediction that the AI is highly confident it won't be caught would require a model of the programmer as detailed as (or more detailed than) an atmospheric model. There is no guarantee the programmer has any psychological vulnerabilities. There is no guarantee that they share the right information on social media. Even if they're a prolific poster, why would we think this information is sufficient to manipulate them?

Replies from: donald-hobson↑ comment by Donald Hobson (donald-hobson) · 2022-02-13T20:45:59.985Z · LW(p) · GW(p)

The smallest Boolean circuit trick is a reasonable trick, if you can get it to work efficiently. But it won't magically be omniscient at everything. It would be just one fairly useful tool in the ML toolbox.

"Minimal circuit" based approaches will fail badly when the data comes from a simple to specify but computationally heavy function. For example, the minimal Boolean circuit trained to take in a point on the complex plane (with X and Y as IEEE floats) and output whether that point is in the Mandelbrot set. This approach will fail for any reasonable number of training points.

If the 3-SAT algorithm is O(n^4) then this algorithm might not be that useful compared to other approaches.

On the other hand, a loglinear algorithm that produced a circuit that was usually almost optimal, that could be useful.

What's the runtime complexity of rowhammering your own hardware to access the internet? O(1)? Meaningless. Asymptotic runtime complexity is a mathematical tool that assumes an infinite sequence of ever harder problems (an infinite sequence of ever longer lists to sort, or 3-SAT instances to solve). There is not an infinite sequence of ever harder problems in reality. Computational complexity is mostly concerned with the worst case, and finding the absolutely perfect solution. In reality, an AI can use algorithms that find a pretty good solution most of the time.

A common AI risk problem like manipulating a programmer into escalating AI privileges is a less legible problem than examples from physics but I have no reason to think that it won't also be observationally bound.

Obviously there is a bound based on observation for every problem, just often that bound is crazy high on our scales. For a problem humans understand well, we may be operating close to that bound. For the many problems that are "illegible" or "hard for humans to think about" or "confusing", we are nowhere near the bound, so the AI has room to beat the pants off us with the same data.

I would argue that most real world problems are observationally and experimentally bound.

And no amount of data on European peasants (the 17th century equivalent of Facebook) would be a sufficient substitute.

Consider a world in which humans, being smart but not that smart, can figure out relativity from good clear physics data. Could a superintelligence figure out relativity based on the experiences of the typical caveman? You seem to be arguing that a superintelligence couldn't manage this because Einstein didn't. Apparently gold wouldn't be yellowish if it weren't for relativistic effects on the innermost electrons. The sun is shining. Life => Evolution over millions of years. Sun's energy density too high for chemical fuel. E=mc^2? Why the night sky is dark. Redshift and a universe with a big bang. These clues, and probably more, have been available to most humans throughout history.

These clues weren't enough to lead Einstein to relativity, but Einstein was only human.

Replies from: patrick-cruce↑ comment by MrFailSauce (patrick-cruce) · 2022-02-13T23:41:01.723Z · LW(p) · GW(p)

In reality, an AI can use algorithms that find a pretty good solution most of the time.

If you replace "AI" with "ML" I agree with this point. And yep this is what we can do with the networks we're scaling. But "pretty good most of the time" doesn't get you an x-risk intelligence. It gets you some really cool tools.

If the 3-SAT algorithm is O(n^4) then this algorithm might not be that useful compared to other approaches.

If 3-SAT is O(n^4) then P=NP and we are back to Aaronson's point: the fundamental structure of reality is much different than we think it is. (Did you mean "4^N"? Plenty of common algorithms are quartic.)

For the many problems that are "illegible" or "hard for humans to think about" or "confusing", we are nowhere near the bound, so the AI has room to beat the pants off us with the same data.

The assertion that "illegible" means "requiring more intelligence" rather than "ill-posed" or "underspecified" doesn't seem obvious to me. Maybe you can expand on this?

Could a superintelligence figure out relativity based on the experiences of the typical caveman? ... These clues weren't enough to lead Einstein to relativity, but Einstein was only human.

I'm not sure I can draw the inference that this means it was possible to generate the theory without the key observations it is explaining. What I'm grasping at is how we can bound what capabilities more intelligence gives an agent. It seems intuitive to me that there must be limits and we can look to physics and math to try to understand them. Which leads us here:

Meaningless. Asymptotic runtime complexity is a mathematical tool that assumes an infinite sequence of ever harder problems.

I disagree. We've got a highly speculative question in front of us. "What can a machine intelligence greater than ours accomplish"? We can't really know what it would be like to be twice as smart any more than an ape can. But if we stipulate that the machine is running on Turing Complete hardware and accept NP hardness then we can at least put an upper bound on the capabilities of this machine.

Concretely, I can box the machine using a post-quantum cryptographic standard and know that it lacks the computational resources to break out before the heat death of the universe. More abstractly, any AI risk scenario cannot require solving NP problems of more than modest size. (because of completeness, this means many problems and many of the oft-posed risk scenarios are off the table)

Replies from: donald-hobson↑ comment by Donald Hobson (donald-hobson) · 2022-02-14T13:17:56.328Z · LW(p) · GW(p)

If you replace "AI" with "ML" I agree with this point. And yep this is what we can do with the networks we're scaling. But "pretty good most of the time" doesn't get you an x-risk intelligence. It gets you some really cool tools.

On some problems, finding the exact best solution is intractable. On these problems, it's all approximations and tricks that usually work, whether for the simplest dumb ML algorithm running with diddly squat compute, or some vastly superintelligent AI running on a Matrioshka brain.

Take hacking a computer system that controls nukes or something. The problem of finding the fastest way to hack an arbitrary computer system is NP hard. But humans sometimes hack computers without exponentially vast brains. Suppose the AI's hacking algorithm can hack 99% of all computer systems that are theoretically possible to hack with unlimited compute. And the AI takes at most 10% longer than the theoretical minimum time on those problems.

This AI is still clearly dangerous. Especially if it isn't just a hacking tool. It has a big picture view of the world and what it wants to achieve.

In short, maybe P!=NP and there is no perfect algorithm, but it's possible to be a lot better than current ML, and a lot better than humans, and you don't need a perfect algorithm to create an X risk.

If 3-SAT is O(n^4) then P=NP and we are back to Aaronson's point: the fundamental structure of reality is much different than we think it is. (Did you mean "4^N"? Plenty of common algorithms are quartic.)

If 3-SAT is O(n^1,000,000) then P=NP on a technicality, but the algorithm is totally useless as it is far too slow in practice. If it's O(n^4) there are still some uses, but it would seriously hamper the effectiveness of the minimum circuit style predictors. Neural nets are trained on a lot of data. With an O(n^4) algorithm, training beyond 10 kB of data will be getting difficult, depending somewhat on the constant.
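A rough back-of-envelope for the 10 kB figure, as a sketch under loudly stated assumptions: the constant factor is 1, n is counted in bytes, and the machine performs 10^9 basic operations per second. None of these numbers come from a real algorithm; they only show how quickly a quartic cost grows.

```python
def quartic_steps(n, constant=1):
    """Step count for a hypothetical algorithm costing constant * n**4."""
    return constant * n ** 4


def seconds_at(steps, ops_per_second=10**9):
    """Wall-clock seconds at an assumed throughput."""
    return steps / ops_per_second


if __name__ == "__main__":
    n = 10_000  # 10 kB of training data, counted in bytes (an assumption)
    steps = quartic_steps(n)
    print(steps)                        # 10**16 steps
    print(seconds_at(steps) / 86_400)   # about 116 days at 1e9 ops/s
    # Doubling the data multiplies the cost by 2**4 = 16.
    print(quartic_steps(2 * n) // steps)  # 16
```

With these assumptions 10 kB already costs months of compute, and every doubling of the data costs 16x more, which is consistent with "getting difficult, depending somewhat on the constant".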

The assertion that "illegible" means "requiring more intelligence" rather than "ill-posed" or "underspecified" doesn't seem obvious to me. Maybe you can expand on this?

If you consider the problem of persuading a human to let you out of the box in an AI boxing scenario, well, that is perfectly well posed. (There is a big red "open box" button, and either it's pressed or it isn't.) But we don't have enough understanding of psychology to do it. Pick some disease we don't know the cause of or how to cure yet. There will be lots of semi-relevant information in biology textbooks. There will be case reports and social media posts and a few confusing clinical trials.

In weather, we know the equations, and there are equations. Any remaining uncertainty is uncertainty about windspeed, temperature etc. But suppose you had a lot of weather data, but hadn't invented the equations yet. You have a few rules of thumb about how weather patterns move. When you invent the equations, suddenly you can predict so much more.

It seems intuitive to me that there must be limits and we can look to physics and math to try to understand them.

Of course there are bounds. But those bounds are really high on a human scale.

I'm not sure I can draw the inference that this means it was possible to generate the theory without the key observations it is explaining.

I am arguing that there are several facts observable to the average caveman that are explainable by general relativity. Einstein needed data from experiments as well. If you take 10 clues to solve a puzzle, but once you solve it, all the pieces fit beautifully together, that indicates that the problem may have been solvable with fewer clues. It wasn't that Pythagoras had 10 trillion theories, each as mathematically elegant as general relativity, in mind, and needed experiments to tell which one was true. Arguably Newton had 1 theory like that, and there were still a few clues available that hinted towards relativity.

Concretely, I can box the machine using a post-quantum cryptographic standard and know that it lacks the computational resources to break out before the heat death of the universe. More abstractly, any AI risk scenario cannot require solving NP problems of more than modest size. (because of completeness, this means many problems and many of the oft-posed risk scenarios are off the table)

A large fraction of hacking doesn't involve breaking cryptographic protocols within their idealised mathematical model, but attacking the places where those abstractions break down. Sure, you use that post-quantum cryptographic standard. But then you get an attack that runs a computation that takes 5 or 30 seconds depending on a single bit of the key, and sees if the cooling fan turns on. Data on what the cooling fan gets up to wasn't included in the ideal mathematical model in which the problem was NP hard. Or it could be something as stupid as an easily guessable admin password. Or a cryptographic library calls a big int library. The big int library has a debug mode that logs all the arithmetic done. The debug mode can be turned on with a buffer overflow. So turn on debug and check the logs for the private keys. Most hacks aren't about breaking NP hard math, but about the surrounding software being buggy or imperfect, allowing you to bypass the math.

The NP-hard maths is about the worst case. I can give you a 3-SAT instance of a million variables that is trivial to solve. The maths conjectures that it is hard to solve the worst case. Is hacking a particular computer or designing a bioweapon the worst case of a 3-SAT problem, or is it one of the easier cases? I don't know. Large 3-SAT problems are often solved in practice; million-variable problems are solved in minutes. Because NP hardness is about the worst case. And the practical problem people are solving isn't the worst case.
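As one contrived illustration of a large but trivial instance, the sketch below builds random 3-SAT clauses in which every clause is forced to contain at least one positive literal, so setting every variable to true satisfies the whole formula regardless of size. This family and the function names are hypothetical, chosen only to show that instance size alone does not imply difficulty; it says nothing about where any real-world problem falls.

```python
import random


def easy_3sat(num_vars, num_clauses, seed=0):
    """Random 3-SAT clauses, each forced to contain a positive literal.

    Literals use the DIMACS convention: +v means variable v, -v its negation.
    """
    rng = random.Random(seed)
    clauses = []
    for _ in range(num_clauses):
        variables = rng.sample(range(1, num_vars + 1), 3)
        signs = [rng.choice((1, -1)) for _ in range(3)]
        signs[rng.randrange(3)] = 1  # guarantee one un-negated variable
        clauses.append(tuple(s * v for s, v in zip(signs, variables)))
    return clauses


def satisfies(assignment, clauses):
    """assignment[v] is the truth value of variable v (index 0 unused)."""
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )


if __name__ == "__main__":
    n = 100_000  # scale up to a million if you are willing to wait longer
    clauses = easy_3sat(n, 4 * n)
    all_true = [True] * (n + 1)
    print(satisfies(all_true, clauses))  # True, found with no search at all
```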

More abstractly, any AI risk scenario cannot require solving NP problems of more than modest size. (because of completeness, this means many problems and many of the oft-posed risk scenarios are off the table)

Are you claiming that designing a lethal bioweapon is NP hard? That building nanotech is NP hard? Like using hacking and social engineering to start a nuclear war is NP hard? What are these large 3-SAT problems that must be solved before the world can be destroyed?

↑ comment by [deleted] · 2022-02-12T13:56:55.723Z · LW(p) · GW(p)

Replies from: patrick-cruce↑ comment by MrFailSauce (patrick-cruce) · 2022-02-12T17:34:00.545Z · LW(p) · GW(p)

Overshooting by 10x (or 1,000x or 1,000,000x) before hitting 1.5x is probably easier than it looks for someone who does not have background in AI.

Do you have any examples of 10x or 1000x overshoot? Or maybe a reference on the subject?

Replies from: None↑ comment by [deleted] · 2022-02-13T05:23:16.668Z · LW(p) · GW(p)

Replies from: patrick-cruce↑ comment by MrFailSauce (patrick-cruce) · 2022-02-13T17:01:42.373Z · LW(p) · GW(p)

AlphaGo went from mediocre, to going toe-to-toe with the top human Go players in a very short span of time. And now AlphaGo Zero has beaten AlphaGo 100-0. AlphaFold has arguably made a similar logistic jump in protein folding

Do you know how many additional resources this required?

Cost of compute has been decreasing at an exponential rate for decades. This has meant entire classes of algorithms which straightforwardly scale with compute have also become exponentially more capable, and this has already had a profound impact on our world. At the very least, you need to show why doubling compute speed, or paying for 10x more GPUs, does not lead to a doubling of capability for the kinds of AI we care about.

Maybe this is the core assumption that differentiates our views. I think that the "exponential growth" in compute is largely the result of being on the steepest sloped point of a sigmoid rather than on a true exponential. For example, Dennard scaling ceased around 2007 and Moore's law has been slowing over the last decade. I'm willing to concede that if compute grows exponentially indefinitely then AI risk is plausible, but I don't see any way that will happen.
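A small sketch of why the two pictures are hard to tell apart from inside the early part of the curve. The growth rate, starting value and ceiling below are arbitrary illustrative parameters, not fitted to any real compute data.

```python
import math


def exponential(t, x0=1.0, rate=0.5):
    """Unbounded exponential growth starting from x0."""
    return x0 * math.exp(rate * t)


def logistic(t, x0=1.0, rate=0.5, cap=1000.0):
    """Logistic (sigmoid) growth with the same start and initial rate, capped at `cap`."""
    return cap / (1.0 + (cap / x0 - 1.0) * math.exp(-rate * t))


if __name__ == "__main__":
    for t in (0, 4, 8, 16, 40):
        e, s = exponential(t), logistic(t)
        # Early on the ratio stays near 1; later the sigmoid flattens out.
        print(f"t={t:>2}  exponential={e:14.1f}  sigmoid={s:8.1f}  ratio={s / e:.4f}")
```

Early on the two curves agree to within about a percent, so observations from that regime alone cannot settle which one we are on; the disagreement only shows up as the ceiling approaches.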

Replies from: None

comment by Martin Randall (martin-randall) · 2022-02-14T03:25:55.692Z · LW(p) · GW(p)

I went to 80,000 Hours, and hit the "start here" button, and it starts with climate change, then nuclear war, then pandemics, then AI. Longtermism is gestured at in passing on the way to pandemics. So I think this is pretty much already done. I expect that leading with AI instead of climate change would lose readers, and I expect that this is something they've thought about.

The summary of Holden Karnofsky’s Most Important Century series is five points long, and AI risk is introduced at the second point: "The long-run future could come much faster than we think, due to a possible AI-driven productivity explosion". I'm not clear what you would change, if anything.

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2022-02-14T17:14:22.609Z · LW(p) · GW(p)

Oh, neat! I haven't looked at their introduction in a while, that's a much better pitch than I remember! Kudos to them.

comment by Harlan · 2022-02-17T17:54:26.679Z · LW(p) · GW(p)

I believe that preventing X-Risks should be the greatest priority for humanity right now. I don't necessarily think that it should be everyone's individual greatest priority though, because someone's comparative advantage might be in a different cause area.

"Holy Shit, X-Risk" can be extremely compelling, but it can also have people feeling overwhelmed and powerless, if they're not in a position to do much about it. One advantage of the traditional EA pitch is that it empowers the person with clear actions they can take that have a clear and measurable impact.