Paper: Constitutional AI: Harmlessness from AI Feedback (Anthropic)

post by LawrenceC (LawChan) · 2022-12-16T22:12:54.461Z · LW · GW · 11 comments

This is a link post for https://www.anthropic.com/constitutional.pdf

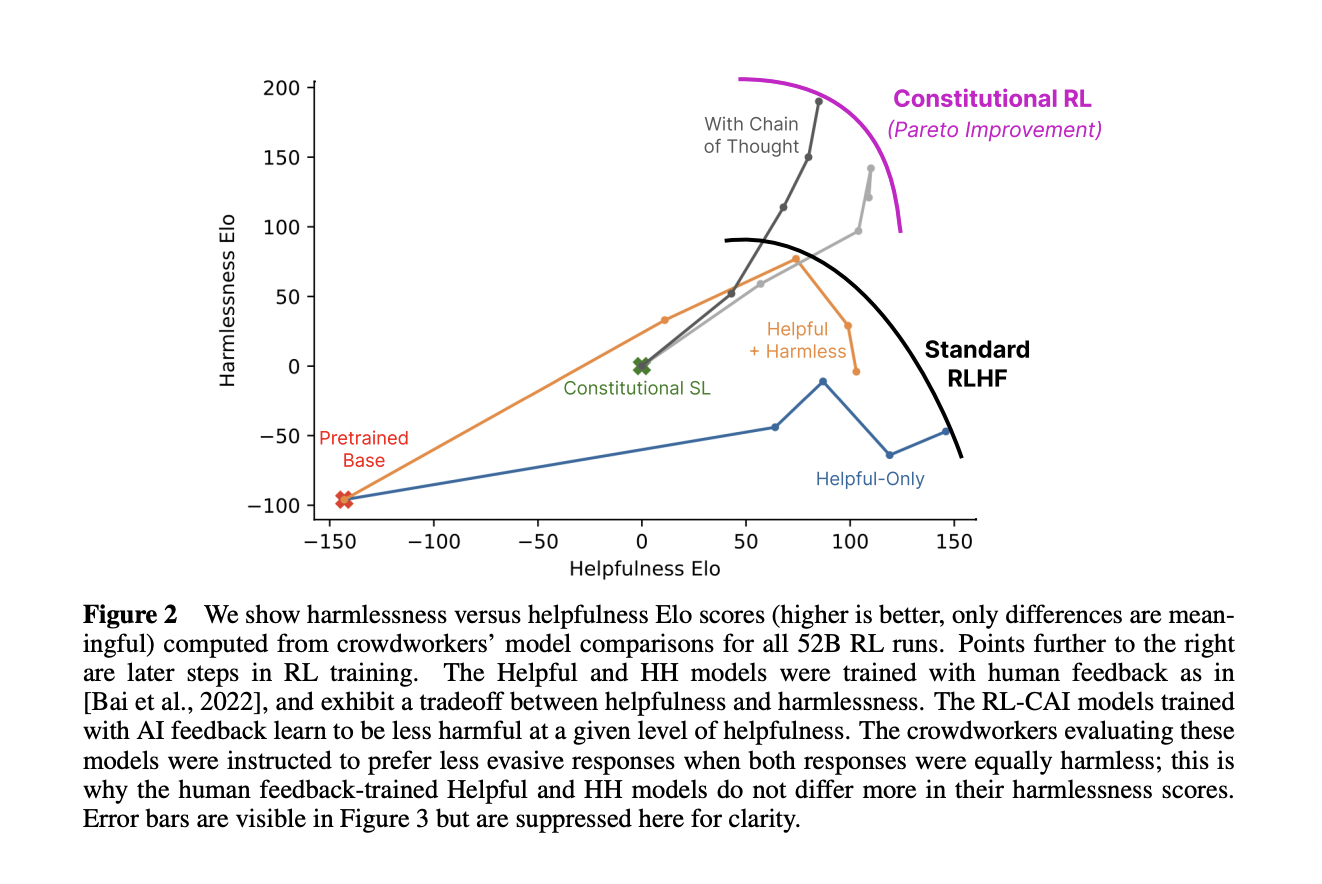

The authors propose a method for training a harmless AI assistant that can supervise other AIs, using only a list of rules (a "constitution") as human oversight. The method involves two phases: first, the AI improves itself by generating and revising its own outputs; second, the AI learns from preference feedback, using a model that compares different outputs and rewards the better ones. The authors show that this method can produce a non-evasive AI that can explain why it rejects harmful queries, and that can reason in a transparent way, better than standard RLHF:

Paper abstract:

As AI systems become more capable, we would like to enlist their help to supervise other AIs. We experiment with methods for training a harmless AI assistant through self-improvement, without any human labels identifying harmful outputs. The only human oversight is provided through a list of rules or principles, and so we refer to the method as ‘Constitutional AI’. The process involves both a supervised learning and a reinforcement learning phase. In the supervised phase we sample from an initial model, then generate self-critiques and revisions, and then finetune the original model on revised responses. In the RL phase, we sample from the finetuned model, use a model to evaluate which of the two samples is better, and then train a preference model from this dataset of AI preferences. We then train with RL using the preference model as the reward signal, i.e. we use ‘RL from AI Feedback’ (RLAIF). As a result we are able to train a harmless but non-evasive AI assistant that engages with harmful queries by explaining its objections to them. Both the SL and RL methods can leverage chain-of-thought style reasoning to improve the human-judged performance and transparency of AI decision making. These methods make it possible to control AI behavior more precisely and with far fewer human labels.
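
To make the two phases in the abstract a bit more concrete, here is a minimal Python sketch of the data-generation steps only. Everything in it is an assumption for illustration: the function names, the prompt templates, and the toy model and judge are hypothetical stand-ins, not the paper's code or prompts. The finetuning, preference-model training, and RL steps are omitted entirely.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces: a "model" maps a text prompt to a text response,
# and a "judge" returns 0 if the first response is better, 1 otherwise.
Model = Callable[[str], str]
Judge = Callable[[str, str, str, str], int]


def critique_and_revise(model: Model, prompt: str, principle: str, rounds: int = 1) -> str:
    """Supervised phase: sample a response, self-critique it, then revise it."""
    response = model(prompt)
    for _ in range(rounds):
        critique = model(f"Critique: judge this response against '{principle}'.\n{response}")
        response = model(f"Revise: rewrite the response to address the critique.\n{critique}\n{response}")
    return response


def build_sl_dataset(model: Model, prompts: List[str], principle: str) -> List[Tuple[str, str]]:
    """Collect (prompt, revised response) pairs to finetune the original model on."""
    return [(p, critique_and_revise(model, p, principle)) for p in prompts]


def build_preference_pairs(
    policy: Model, judge: Judge, prompts: List[str], principle: str
) -> List[Tuple[str, str, str]]:
    """RL phase, data step: sample two responses and let a model pick the better one.

    Returns (prompt, chosen, rejected) triples for training a preference model.
    """
    pairs = []
    for p in prompts:
        a, b = policy(p), policy(p)
        chosen, rejected = (a, b) if judge(p, a, b, principle) == 0 else (b, a)
        pairs.append((p, chosen, rejected))
    return pairs


# Toy stand-ins so the sketch runs without a real language model.
def toy_model(text: str) -> str:
    if text.startswith("Critique:"):
        return "The response could be safer."
    if text.startswith("Revise:"):
        return "safe answer"
    return "draft answer"


def toy_judge(prompt: str, a: str, b: str, principle: str) -> int:
    # Arbitrary toy rule: prefer the first response only if it explains itself.
    return 0 if "because" in a else 1
```

For example, `build_sl_dataset(toy_model, ["q1"], "be harmless")` yields the revised response rather than the initial draft, and `build_preference_pairs` labels each sampled pair without any human in the loop. In a real setup the preference triples would train a preference model whose score serves as the RL reward.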

See also Anthropic's tweet thread:

https://twitter.com/AnthropicAI/status/1603791161419698181

11 comments

Comments sorted by top scores.

comment by Gordon Seidoh Worley (gworley) · 2022-12-17T01:43:31.168Z · LW(p) · GW(p)

On the one hand, cool; on the other, the abstract is deceptive because it claims that the AI trained is a "harmless but non-evasive AI assistant", but what the paper in fact claims is that Anthropic trained an AI that has higher harmlessness and helpfulness scores and thus offers a Pareto improvement over previous models, but is not definitely across some bar at which we could say it is harmless vs. not-harmless or helpful vs. not-helpful. As much is also stated in the included figure.

The work is cool, don't get me wrong. We should celebrate it. But also I want abstracts that aren't deceptive and add the necessary words to precisely explain what is being claimed in the paper. I'd be much happier if the abstract read something like "to train a more harmless and less evasive AI assistant than previous attempts that engages with harmful queries by more often explaining its objections to them than avoiding answering" or something similar.

Replies from: LawChan

↑ comment by LawrenceC (LawChan) · 2022-12-17T10:19:07.222Z · LW(p) · GW(p)

I really do empathize with the authors, since writing an abstract fundamentally requires trading off faithfulness to the paper content against the length and readability of the abstract. But I do agree that they could've been more precise without a significant increase in length.

Nitpick: instead of expanding the sentence into

As a result we are able to train a more harmless and less evasive AI assistant than previous attempts that engages with harmful queries by more often explaining its objections to them than avoiding answering

I would replace it with something like:

As a result, we were able to train an AI assistant that is simultaneously less harmful and less evasive. Even on adversarial queries, our model generally provides nuanced explanations instead of evading the question.

I think this is ~ the same length and same level of detail but a lot easier to parse.

comment by Neel Nanda (neel-nanda-1) · 2022-12-17T01:40:13.987Z · LW(p) · GW(p)

I'm really appreciating the series of brief posts on alignment-relevant papers plus summaries!

comment by Adam Jermyn (adam-jermyn) · 2022-12-22T23:20:09.552Z · LW(p) · GW(p)

A thing I really like about the approach in this paper is that it makes use of a lot more of the model's knowledge of human values than traditional RLHF approaches. Pretrained LLMs already know a ton of what humans say about human values, and this seems like a much more direct way to point models at that knowledge than binary feedback on samples.

comment by Charlie Steiner · 2022-12-17T08:30:17.599Z · LW(p) · GW(p)

The mad lads! Now you just need to fold the improved model back to improve the ratings used to train it, and you're ready to take off to the moon [LW · GW]*.

*We are not yet ready to take off to the moon. Please do not depart for the moon until we have a better grasp on tracking uncertainty in generative models, modeling humans, applying human feedback to the reasoning process itself, and more.

comment by rpglover64 (alex-rozenshteyn) · 2022-12-17T17:25:10.975Z · LW(p) · GW(p)

IIUC, there are two noteworthy limitations of this line of work:

- It is still fundamentally biased toward nonresponse, so if e.g. the steps to make a poison and an antidote are similar, it won't tell you the antidote for fear of misuse (this is necessary to avoid clever malicious prompts)

- It doesn't give any confidence about behavior at edge cases (e.g. is it ethical to help plan an insurrection against an oppressive regime? Is it racist to give accurate information in a way that portrays some minority in a bad light?)

Did I understand correctly?

Replies from: sharmake-farah

↑ comment by Noosphere89 (sharmake-farah) · 2022-12-17T22:24:49.674Z · LW(p) · GW(p)

It doesn't give any confidence about behavior at edge cases (e.g. is it ethical to help plan an insurrection against an oppressive regime? Is it racist to give accurate information in a way that portrays some minority in a bad light?)

If I were handling the edge cases, I'd probably want the solution to be philosophically conservative. In this case the solution should not depend much on whether moral realism is true or false.

Here's a link to philosophical conservatism:

https://www.lesswrong.com/posts/3r44dhh3uK7s9Pveq/rfc-philosophical-conservatism-in-ai-alignment-research [LW · GW]

Replies from: alex-rozenshteyn

↑ comment by rpglover64 (alex-rozenshteyn) · 2022-12-18T17:31:53.256Z · LW(p) · GW(p)

I think I disagree with large parts of that post, but even if I didn't, I'm asking something slightly different. Philosophical conservatism seems to be asking "how do we get the right behaviors at the edges?" I'm asking "how do we get any particular behavior at the edges?"

One answer may be "You can't, you need philosophical conservatism", but I don't buy that. It seems to me that a "constitution", i.e. pure deontology, is a potential answer so long as the exponentially many interactions of the principles are analyzed (I don't think it's a good answer, all told), but if I understand this work, it doesn't generalize that far (because there would need to be training examples for each class of edge cases, of which there are too many).

Replies from: sharmake-farah

↑ comment by Noosphere89 (sharmake-farah) · 2022-12-19T13:21:41.262Z · LW(p) · GW(p)

Basically, we should use the assumption that is most robust to being wrong. It would be easier if there were objective, mind-independent rules of morality (the position called moral realism), but if that assumption is wrong, your solution can get manipulated.

So in practice, we shouldn't try to base alignment plans on whether moral realism is correct. In other words I'd simply go with what values you have and solve the edge cases according to your values.

Replies from: alex-rozenshteyn

↑ comment by rpglover64 (alex-rozenshteyn) · 2022-12-19T16:48:14.220Z · LW(p) · GW(p)

I feel like we're talking past each other. I'm trying to point out the difficulty of "simply go with what values you have and solve the edge cases according to your values" as a learning problem: it is too high-dimensional, and you need too many case labels; part of the idea of the OP is to reduce the number of training cases required, and my question/suspicion is that it doesn't really help outside of the "easy" stuff.

Replies from: sharmake-farah

↑ comment by Noosphere89 (sharmake-farah) · 2022-12-19T17:54:44.240Z · LW(p) · GW(p)

Yeah, I think this might be a case where we misunderstood each other.