Expected Utility, Geometric Utility, and Other Equivalent Representations

post by StrivingForLegibility · 2024-11-20T23:28:21.826Z · LW · GW

In Scott Garrabrant's excellent Geometric Rationality sequence, he points out an equivalence between modelling an agent as

- Maximizing the expected logarithm of some quantity $V$, $\mathbb{E}_{O \sim P}[\log V(O)]$,

- Maximizing the geometric expectation of $V$, $\mathbb{G}_{O \sim P}[V(O)]$.

And as we'll show in this post, we can prove a geometric version of the VNM utility theorem:

- An agent is VNM-rational if and only if there exists a function $V$ that:

  - Represents the agent's preferences over lotteries

    - $P \preceq Q$ if and only if $V(P) \le V(Q)$

  - Agrees with the geometric expectation of $V$

    - $V(P) = \mathbb{G}_{O \sim P}[V(O)]$

That is a cool equivalence result in its own right: $\mathbb{E}$ maximization $\iff$ VNM rationality $\iff$ $\mathbb{G}$ maximization. But it turns out these are just two out of a huge family of expectations we can use, like the harmonic expectation $\mathbb{H}$, each of which has its own version of the VNM utility theorem. We can model agents as maximizing expected utility, geometric utility, harmonic utility, or whatever representation is most natural for the problem at hand.

Expected Utility Functions

The VNM utility theorem states that an agent satisfies the VNM axioms if and only if there exists a utility function $U$ which:

- Represents that agent's preferences over all lotteries

  - $P \preceq Q$ if and only if $U(P) \le U(Q)$

- Agrees with its expected value

  - $U(P) = \mathbb{E}_{O \sim P}[U(O)]$

Where $P$ is a probability distribution over outcomes $O$.

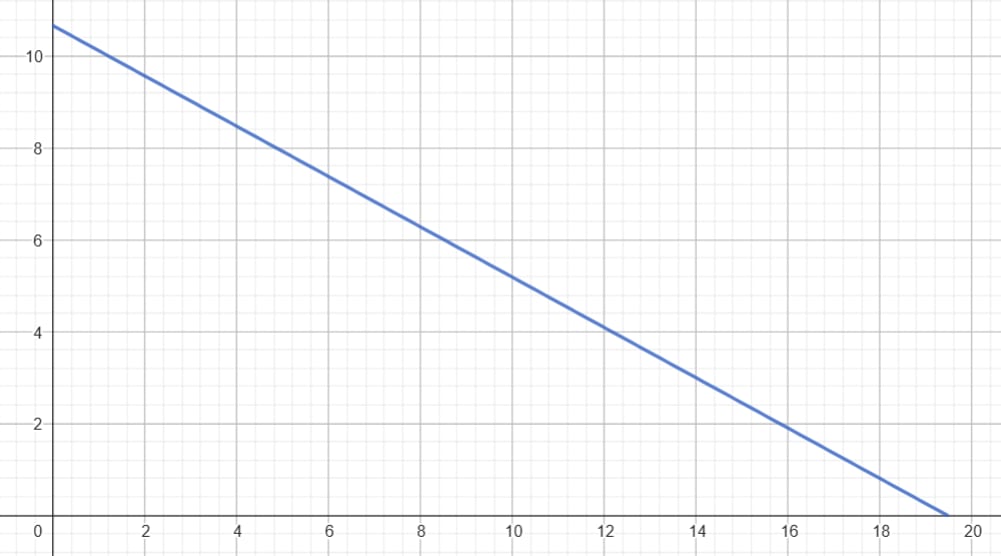

The first property is easy to preserve. Given any strictly increasing function $g$,

$U(P) \le U(Q)$ if and only if $g(U(P)) \le g(U(Q))$

So $g(U)$ also represents our agent's preferences. But it's only affine transformations $g(x) = ax + b$ that preserve the second property, that $g(U(P)) = \mathbb{E}_{O \sim P}[g(U(O))]$. And it's only increasing affine transformations (those with $a > 0$) that preserve both properties at once.
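To make the difference between the two properties concrete, here is a minimal numeric sketch. The outcomes, utilities, lottery, and the two transformations are all made up for illustration; the point is only that an affine map commutes with taking expectations while a nonlinear increasing map does not.

```python
def expectation(lottery, u):
    """Arithmetic expectation of u over a finite lottery {outcome: probability}."""
    return sum(p * u(o) for o, p in lottery.items())

# Hypothetical utilities over three outcomes, and one lottery over them.
utility = {"a": 0.0, "b": 1.0, "c": 4.0}
lottery = {"a": 0.2, "b": 0.5, "c": 0.3}

def U(outcome):
    return utility[outcome]

def affine(x):
    return 2.0 * x + 3.0   # increasing affine transformation (a = 2 > 0)

def cubic(x):
    return x ** 3          # strictly increasing, but not affine

u_lottery = expectation(lottery, U)

# Gap between "transform the lottery's utility" and "take the expectation
# of the transformed utilities". It is zero exactly when the transformed
# function still agrees with its own expected value on this lottery.
affine_gap = affine(u_lottery) - expectation(lottery, lambda o: affine(U(o)))
cubic_gap = cubic(u_lottery) - expectation(lottery, lambda o: cubic(U(o)))

print(affine_gap, cubic_gap)
```

Both transformations keep the ordering of lotteries, but only the affine one keeps the gap at zero.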

f-Utility Functions

But what if we were interested in other ways of aggregating utilities? One of the central points of Scott's Geometric Rationality sequence is that in many cases, the geometric expectation $\mathbb{G}$ is a more natural way to aggregate utility values $V(O)$ into a single representative number $V(P)$.

We can represent the same preferences using the expected logarithm $\mathbb{E}[\log V]$, but this can feel arbitrary, and having to take a logarithm is a hint that these quantities are most naturally combined by multiplying them together. The expectation operator $\mathbb{E}$ can emulate a weighted product, but the geometric expectation operator $\mathbb{G}$ is a weighted product, and we can model an agent as maximizing $\mathbb{G}[V]$ without ever bringing $\mathbb{E}$ into the picture.

And as scottviteri asks:

If arithmetic and geometric means are so good, why not the harmonic mean? https://en.wikipedia.org/wiki/Pythagorean_means. What would a "harmonic rationality" look like?

They also link to a very useful concept I'd never seen before: the power mean, which generalizes many different types of average into one family, parameterized by a power $p$. Set $p = 1$ and you've got the arithmetic mean $\mathbb{E}$. Set $p = 0$ (taken as a limit) and you've got the geometric mean $\mathbb{G}$. And if you set $p = -1$ you've got the harmonic mean $\mathbb{H}$.
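For reference, here is the standard weighted power mean written out, with weights $w_i \ge 0$ summing to 1. The symbols $M_p$, $x_i$, and $w_i$ are notation chosen for this sketch rather than taken from the post.

```latex
\begin{aligned}
M_p(x_1, \dots, x_n) &= \left( \sum_{i=1}^{n} w_i \, x_i^{\,p} \right)^{1/p},
  \qquad x_i > 0,\; p \neq 0 \\
\lim_{p \to 0} M_p(x_1, \dots, x_n) &= \prod_{i=1}^{n} x_i^{\,w_i}
\end{aligned}
```

The second line is why the geometric mean is usually listed as the $p = 0$ member of the family even though the first formula is undefined there.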

It's great! And I started to see if I could generalize my result to other values of $p$. What is the equivalent of $\log$ for $p \neq 0$? Well, scottviteri set me down the path towards learning about an even broader generalization of the idea of a mean, which captures the power mean as a special case: the quasi-arithmetic mean or $f$-mean, since it's parameterized by a function $f$.

For our baseline definition, $f$ will be a continuous, strictly increasing function that maps an interval $I$ of the real numbers to the real numbers $\mathbb{R}$. We're also going to be interested in a weighted average, and in particular a probability weighted average over outcomes $O$. We'll use the notation $O \sim P$ to denote sampling $O$ from the probability distribution $P$.

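Here's the definition of the $f$-expectation $M^f$:

$$M^f_{O \sim P}[V(O)] = f^{-1}\left(\mathbb{E}_{O \sim P}[f(V(O))]\right)$$

Which for finite sets of outcomes looks like:

$$M^f_{O \sim P}[V(O)] = f^{-1}\left(\sum_{O} P(O)\, f(V(O))\right)$$

So for example: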

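- If $I = \mathbb{R}$ and $f(x) = x$ (or any increasing affine transformation $f(x) = ax + b$, where $a > 0$), then $M^f$ is the arithmetic expectation $\mathbb{E}$.

- If $I = \mathbb{R}_{>0}$, the positive real numbers, and $f(x) = \log(x)$ (or any logarithm $\log_b(x)$ where $b > 0$ and $b \neq 1$, though only $b > 1$ keeps $f$ strictly increasing), then $M^f$ is the geometric expectation $\mathbb{G}$.

  - We can also extend $f$ to include $f(0) = -\infty$, to cover applications like Kelly betting. This will still be a strictly increasing bijection, and I expect it will work with any result that relies on $f$ being continuous.

- If $I = \mathbb{R}_{>0}$, and $f(x) = -\frac{1}{x}$, then $M^f$ is the harmonic expectation $\mathbb{H}$.

  - Using $f(x) = \frac{1}{x}$ would also compute the harmonic expectation, but $-\frac{1}{x}$ is strictly increasing. And that lets us frame our agent as always maximizing a utility function.

- If $I = \mathbb{R}_{>0}$, and $f(x) = x^p$ with $p \neq 0$, then $M^f$ is the power expectation using the power $p$.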
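The examples in this list can be checked numerically with a small helper. This is a minimal sketch for finite lotteries only; the function names `f_expectation` and `power_expectation` and the sample values are invented for illustration.

```python
import math

def f_expectation(values, probs, f, f_inv):
    """Quasi-arithmetic (f-)expectation: f_inv(sum_i p_i * f(v_i))."""
    return f_inv(sum(p * f(v) for v, p in zip(values, probs)))

def power_expectation(values, probs, p):
    """Power expectation with exponent p != 0, via f(x) = x**p."""
    return f_expectation(values, probs,
                         lambda x: x ** p,
                         lambda y: y ** (1.0 / p))

# Hypothetical utility values and their probabilities.
values = [1.0, 2.0, 4.0]
probs = [0.25, 0.5, 0.25]

arithmetic = f_expectation(values, probs, lambda x: x, lambda y: y)
geometric = f_expectation(values, probs, math.log, math.exp)
# f(x) = -1/x is its own inverse on the relevant ranges.
harmonic = f_expectation(values, probs, lambda x: -1.0 / x, lambda y: -1.0 / y)

print(arithmetic, geometric, harmonic)
print(power_expectation(values, probs, -1))    # matches the harmonic value
print(power_expectation(values, probs, 1e-6))  # approaches the geometric value
```

Passing `f` and `f_inv` separately keeps the helper honest about the assumption that $f$ is invertible on the values it sees.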

An $f$-utility function $V$ represents an agent's preferences:

$P \preceq Q$ if and only if $V(P) \le V(Q)$

And agrees with the $f$-expectation of $V$:

$$V(P) = M^f_{O \sim P}[V(O)]$$

It turns out that for every $f$-utility function $V$, there is a corresponding expected utility function $U$, and vice versa. We'll prove this more rigorously in the next sections, but it turns out that these are equivalent ways of representing the same preferences.

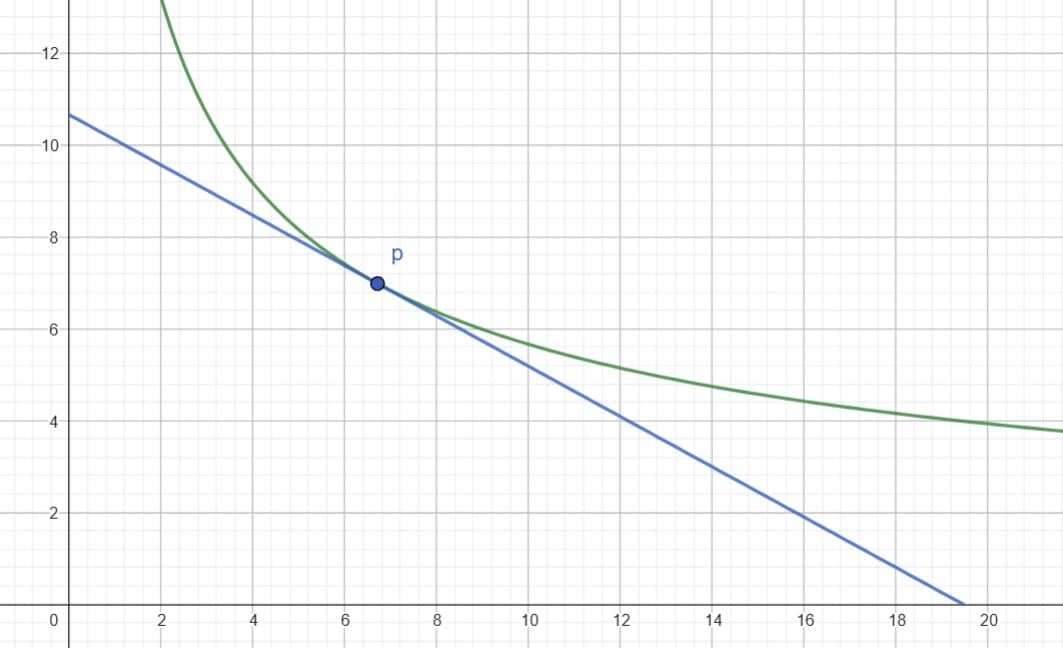

Equivalence of Maximization

Here's the core insight that powers the rest of this equivalence result, which Scott articulates here:

Maximization is invariant under applying a monotonic function... So every time we maximize an expectation of a logarithm, this was equivalent to just maximizing the geometric expectation.

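If we think of an agent as maximizing over $x$, something under their control like their action or policy, and write $P_x$ for the resulting distribution over outcomes, we can write this as:

$$\operatorname{argmax}_x \mathbb{G}_{O \sim P_x}[V(O)] = \operatorname{argmax}_x \exp\left(\mathbb{E}_{O \sim P_x}[\log V(O)]\right) = \operatorname{argmax}_x \mathbb{E}_{O \sim P_x}[\log V(O)]$$

So for every geometric utility function $V$, there is a corresponding expected utility function $U = \log V$ which gives the same result if maximized.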

This equivalence can be generalized to all $f$-utility functions, and it follows from exactly the same reasoning. We have a strictly increasing function $f$, and so $f^{-1}$ must be strictly increasing as well.

- If $a \le b$

- Then $f^{-1}(a) \le f^{-1}(b)$

And so $\operatorname{argmax}$ is unaffected by applying either one. Let's use that to simplify the expression for maximizing the $f$-expectation of $V$:

$$\operatorname{argmax}_x M^f_{O \sim P_x}[V(O)] = \operatorname{argmax}_x f^{-1}\left(\mathbb{E}_{O \sim P_x}[f(V(O))]\right) = \operatorname{argmax}_x \mathbb{E}_{O \sim P_x}[f(V(O))]$$

And this suggests a substitution that will turn out to be extremely useful:

$$U = f(V)$$

There is a function $U$ whose expectation we can maximize, and this is equivalent to maximizing the $f$-expectation of our $f$-utility function $V$. And we'll show that $U$ is indeed an expected utility function! Similarly, we can apply $f^{-1}$ to both sides to get a suggestion (which turns out to work) for how to turn an expected utility function into an equivalent $f$-utility function: $V = f^{-1}(U)$.
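Here is a small numeric sketch of that invariance, using the harmonic case $f(x) = -1/x$. The action names, outcome values, and probabilities are hypothetical; the only claim is that ranking actions by the expectation of $f(V)$ and ranking them by the $f$-expectation of $V$ give the same order.

```python
def expectation(values, probs):
    """Arithmetic expectation over a finite lottery."""
    return sum(p * v for v, p in zip(values, probs))

def f(x):
    return -1.0 / x   # strictly increasing on the positive reals

def f_inv(y):
    return -1.0 / y   # inverse of f on the negative reals

# Hypothetical f-utility values V(O) for three outcomes.
outcome_values = [1.0, 2.0, 8.0]

# Each action induces a different distribution over the same outcomes.
actions = {
    "safe":   [0.0, 1.0, 0.0],
    "risky":  [0.5, 0.0, 0.5],
    "hedged": [0.2, 0.5, 0.3],
}

def expected_f_utility(probs):
    """E[f(V(O))], the quantity an expected utility maximizer would use."""
    return expectation([f(v) for v in outcome_values], probs)

def f_expected_utility(probs):
    """f-expectation of V(O), here the harmonic expectation."""
    return f_inv(expected_f_utility(probs))

rank_by_E = sorted(actions, key=lambda a: expected_f_utility(actions[a]))
rank_by_M = sorted(actions, key=lambda a: f_expected_utility(actions[a]))
best_by_E = rank_by_E[-1]
best_by_M = rank_by_M[-1]

print(rank_by_E, rank_by_M)
```

The two scores live on different scales, but since `f_inv` is strictly increasing the sort order cannot change.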

Duality

f-Utility Functions Correspond to Expected Utility Functions

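It turns out that for every expected utility function $U$, there is a corresponding $f$-utility function $V$, and vice versa. And this duality is given by $U = f(V)$ and $V = f^{-1}(U)$.

We'll start by showing how to go from $U$ to $V$. Given an expected utility function $U$, we'll define $V$ to be

$$V = f^{-1}(U)$$

We know from the VNM expected utility theorem that

$$U(P) = \mathbb{E}_{O \sim P}[U(O)]$$

Let's plug both of those into the definition of $M^f$:

$$M^f_{O \sim P}[V(O)] = f^{-1}\left(\mathbb{E}_{O \sim P}[f(V(O))]\right) = f^{-1}\left(\mathbb{E}_{O \sim P}[U(O)]\right) = f^{-1}(U(P)) = V(P)$$

So $V$ agrees with $M^f$. And since $f^{-1}$ is strictly increasing, and $U$ represents an agent's preferences, so does $V$.

$P \preceq Q$ if and only if $V(P) \le V(Q)$

Which means $V$ is an $f$-utility function!

This gives us one half of the VNM theorem for $f$-utility functions. If an agent is VNM rational, their preferences can be represented using an $f$-utility function $V$.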

Expected Utility Functions Correspond to f-Utility Functions

We can complete the duality by going the other way, starting from an $f$-utility function $V$ and showing there is a unique corresponding expected utility function $U$. We'll define $U$ to be:

$$U = f(V)$$

And we'll plug that and the fact that $V(P) = M^f_{O \sim P}[V(O)]$ into the definition of $U$.

$$U(P) = f(V(P)) = f\left(M^f_{O \sim P}[V(O)]\right) = f\left(f^{-1}\left(\mathbb{E}_{O \sim P}[f(V(O))]\right)\right) = \mathbb{E}_{O \sim P}[U(O)]$$

And that's it! Starting from an $f$-utility function $V$, we can apply a strictly increasing function $f$ to get an expected utility function $U$ which represents the same preferences and agrees with $\mathbb{E}$.

This also gives us the other half of the VNM theorem for $f$-utility functions. If an agent's preferences can be represented using an $f$-utility function $V$, they can be represented using an expected utility function $U$, and that agent must therefore be VNM-rational.
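Both directions of the duality can be sanity-checked on a toy example. The sketch below assumes the geometric case, $f = \log$, and uses made-up outcome utilities and a made-up lottery; it starts from an expected utility function, builds the corresponding $f$-utility function, and checks that it agrees with its own $f$-expectation.

```python
import math

f, f_inv = math.log, math.exp   # geometric case: U = log V, V = exp U

# Hypothetical expected utilities of three outcomes, and one lottery.
U_outcomes = {"a": -1.0, "b": 0.0, "c": 2.0}
lottery = {"a": 0.3, "b": 0.3, "c": 0.4}

def U(lot):
    """Expected utility of a lottery, as the VNM theorem prescribes."""
    return sum(p * U_outcomes[o] for o, p in lot.items())

def V_outcome(o):
    """f-utility of a single outcome: V = f_inv(U)."""
    return f_inv(U_outcomes[o])

def V(lot):
    """f-utility of a lottery, defined through the duality V = f_inv(U)."""
    return f_inv(U(lot))

def f_expectation_of_V(lot):
    """f-expectation of the outcome-level f-utilities."""
    return f_inv(sum(p * f(V_outcome(o)) for o, p in lot.items()))

print(V(lottery), f_expectation_of_V(lottery))  # these should match
print(f(V(lottery)), U(lottery))                # and so should these
```

The first pair checks that $V$ agrees with $M^f$; the second checks that applying $f$ to $V$ recovers $U$.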

f as a Bijection of Utility Functions

So for every $f$-utility function $V$, we can apply $f$ and get an expected utility function $U$. And the same is true in reverse when applying $f^{-1}$. Does this translation process have any collisions in either direction? Are there multiple $f$-utility functions $V$ and $V'$ that correspond to the same expected utility function $U$, or vice versa?

It turns out that $f$ creates a one-to-one correspondence between $f$-utility functions and expected utility functions. And a consequence of that is that all of these languages are equally expressive: there are no preferences we can model using an $f$-utility function that we can't model using an expected utility function, and vice versa.

Another way to frame this duality is to say that our translation function $f$ is a bijection between its domain $I$ and its image $f(I)$. And this induces a structure-preserving bijection between utility functions $U$ (taking values in $f(I)$) and $f$-utility functions $V$ (taking values in $I$).

To show this, we can show that $f$ is injective and surjective between these sets of utility functions.

f is Injective

An injective function, also known as a one-to-one function, maps distinct elements in its domain to distinct elements in its codomain. In other words, injective functions don't have any collisions. So in this case, we want to show that given two distinct $f$-utility functions $V$ and $V'$, $f(V)$ and $f(V')$ must also be distinct.

Since $V$ and $V'$ are distinct $f$-utility functions, they must disagree about some input $P$:

$$V(P) \neq V'(P)$$

And since $f$ is strictly increasing, it can't map these different values in $I$ to the same value in $f(I)$:

$$f(V(P)) \neq f(V'(P))$$

And thus $f(V)$ must be a distinct function from $f(V')$.

f is Surjective

A surjective function reaches every element of its codomain: each element of the codomain is the image of at least one element of the domain. These functions are also called "onto" functions. So in this case, we want to show that given an expected utility function $U$, there is an $f$-utility function $V$ such that $f(V) = U$. And this is exactly the $f$-utility function that $f^{-1}$ picks out: $V = f^{-1}(U)$.

And that's it! $f$ induces a one-to-one correspondence between expected utility functions $U$ and $f$-utility functions $V$. We can freely translate between these languages and maximization will treat them all equivalently.

Composition

I also want to quickly show two facts about how $f$-expectations combine together:

- The $f$-expectation of $f$-expectations is another $f$-expectation

- The weights combine multiplicatively, as we'd expect from conditional probabilities

  - Analogous to $P(A \cap B) = P(A)\,P(B \mid A)$

All of this is going to reduce to an expectation of expectations, so let's handle that first. Let's say we have a family of probability distributions $P_i$ and expected utility functions $U_i$. And then we sample $i$ from a probability distribution I'll suspiciously call $\psi$.

$$\mathbb{E}_{i \sim \psi}\left[\mathbb{E}_{O \sim P_i}[U_i(O)]\right] = \sum_i \psi_i \sum_O P_i(O)\, U_i(O) = \sum_{i, O} \psi_i\, P_i(O)\, U_i(O)$$

Taking the expectation over $\psi$ of the expectation over $P_i$ is equivalent to taking the expectation over pairs $(i, O)$.[1]

This is one way to frame Harsanyi aggregation. Sample an agent according to a probability distribution $\psi$, then evaluate their expected utility using that agent's beliefs. The Harsanyi score is the expectation of expected utility, and the fact that nested expectations can be collapsed is exactly why aggregating this way satisfies the VNM axioms. The Harsanyi aggregate is VNM rational with respect to the probability distribution $\psi_i\, P_i(O)$ over pairs $(i, O)$, built from the conditional distributions $P_i$.

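Knowing that, the general result for $f$-expectations is even easier:

$$M^f_{i \sim \psi}\left[M^f_{O \sim P_i}[V_i(O)]\right] = f^{-1}\left(\mathbb{E}_{i \sim \psi}\left[f\left(f^{-1}\left(\mathbb{E}_{O \sim P_i}[f(V_i(O))]\right)\right)\right]\right) = f^{-1}\left(\sum_{i, O} \psi_i\, P_i(O)\, f(V_i(O))\right)$$

So for example, Scott motivates the idea of Kelly betting as the result of negotiating between different counterfactual versions of the same agent. In that framing, $\mathbb{G}$ naturally captures "Nash bargaining but weighted by probability." If we geometrically aggregate these geometric expected utilities, $\mathbb{G}_{i \sim \psi}\left[\mathbb{G}_{O \sim P_i}[V_i(O)]\right]$, the result is the same as one big geometric aggregate over all counterfactual versions of all agents, weighted by $\psi_i\, P_i(O)$. And the fact that we can model this aggregate as maximizing $\mathbb{E}[\log V]$ means that it's VNM-rational as well!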
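The collapse of nested $f$-expectations is easy to check numerically. This is a minimal sketch for the geometric case with two hypothetical agents and two outcomes; the weights `psi`, beliefs `P`, and utilities `V` are all invented numbers.

```python
import math

def f_expectation(values, probs, f, f_inv):
    """Quasi-arithmetic (f-)expectation over a finite lottery."""
    return f_inv(sum(p * f(v) for v, p in zip(values, probs)))

f, f_inv = math.log, math.exp   # geometric expectation

psi = [0.6, 0.4]                # hypothetical weights over agents i
P = [[0.5, 0.5], [0.1, 0.9]]    # P[i][o]: agent i's beliefs over outcomes
V = [[1.0, 4.0], [2.0, 3.0]]    # V[i][o]: agent i's geometric utilities

# Nested: aggregate each agent's outcomes first, then aggregate over agents.
inner = [f_expectation(V[i], P[i], f, f_inv) for i in range(len(psi))]
nested = f_expectation(inner, psi, f, f_inv)

# Flat: one f-expectation over (agent, outcome) pairs, weights multiplied.
pairs = [(i, o) for i in range(len(psi)) for o in range(len(P[i]))]
joint_values = [V[i][o] for i, o in pairs]
joint_probs = [psi[i] * P[i][o] for i, o in pairs]
flat = f_expectation(joint_values, joint_probs, f, f_inv)

print(nested, flat)   # equal up to floating point error
```

Swapping in another invertible `f`, such as the harmonic pair, should leave the equality intact, since the argument only uses $f(f^{-1}(y)) = y$.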

This is a very cool framing, and there are some phenomena that I think are easier to understand this way than using the expected utility lens. But since Geometric Rationality is Not VNM Rational, we know that the $\mathbb{G}[\mathbb{G}[V]]$ model won't have all the cool features we want from a theory of geometric rationality, like actively preferring to randomize our actions in some situations.

Conclusions

With all of that under our belt, we can now reiterate the $f$-Utility Theorem for VNM Agents, which says that an agent is VNM-rational if and only if there exists a function $V$ that:

- Represents the agent's preferences over lotteries

  - $P \preceq Q$ if and only if $V(P) \le V(Q)$

- Agrees with the $f$-expectation of $V$

  - $V(P) = M^f_{O \sim P}[V(O)]$

We can model a VNM-rational agent as maximizing expected utility $\mathbb{E}[U]$, geometric expected utility $\mathbb{G}[V]$, harmonic expected utility $\mathbb{H}[V]$, or any other $f$-expectation $M^f[V]$ that's convenient for analysis. We can translate these into expected utility functions, but we can also work with them in their native language.

We can also think of this equivalence as an impossibility result. We may want to model preferences that violate the VNM axioms, like a group preference for coin flips in cases where that's more fair than guaranteeing any agent their most preferred outcome. And the equivalence of all these representations means that none of them can model such preferences.

One approach that does work is mixing these expectations together. Our best model of geometric rationality to my knowledge is $\mathbb{G}_{i \sim \psi}\left[\mathbb{E}_{O \sim P_i}[U_i(O)]\right]$: the geometric expectation of expected utility. See Geometric Rationality is Not VNM Rational for more details, but the way I'd frame it in this sequence is that the inner expectation means that the set of feasible joint utilities is always convex.

And maximizing the geometric expectation, aka the geometric aggregate, always picks a Pareto optimum, which is unique as long as all agents have positive weight.
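A toy example makes the contrast with the purely geometric model visible. In this sketch, two equally weighted agents share a single lottery that picks outcome 0 with probability `q`; all names and numbers are hypothetical. The geometric expectation of expected utility strictly prefers a coin flip, while the geometric expectation of geometric utility is indifferent across every `q` in this symmetric setup, as a VNM-rational score allows.

```python
import math

# Hypothetical toy problem: two agents with equal weight, two pure outcomes.
psi = [0.5, 0.5]      # agent weights (assumed)
U = [[1.0, 0.1],      # U[i][o]: agent i's utility for outcome o
     [0.1, 1.0]]

def lottery(q):
    """Shared lottery: outcome 0 with probability q, outcome 1 otherwise."""
    return [q, 1.0 - q]

def geometric_of_expected(q):
    """Geometric aggregate over agents of each agent's expected utility."""
    expected = [sum(p * u for p, u in zip(lottery(q), U[i])) for i in range(2)]
    return math.exp(sum(w * math.log(e) for w, e in zip(psi, expected)))

def geometric_of_geometric(q):
    """One geometric expectation over (agent, outcome) pairs."""
    log_score = sum(psi[i] * p * math.log(U[i][o])
                    for i in range(2)
                    for o, p in enumerate(lottery(q)))
    return math.exp(log_score)

grid = [k / 100 for k in range(101)]
best_q = max(grid, key=geometric_of_expected)

print(best_q, geometric_of_expected(best_q), geometric_of_expected(0.0))
print(geometric_of_geometric(0.0), geometric_of_geometric(0.5))
```

The logarithm of the second score is linear in `q`, so it can never strictly prefer an interior mixture over both endpoints; the first score is strictly concave in `q` here, which is what lets it favor randomizing.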

Check out the main Geometric Utilitarianism post for more details, but I think of these equivalence results as telling us what we can do while staying within the VNM paradigm, and what it would take to go beyond it.

[1] We went through the proof for discrete probability distributions here, but the same identity holds for all probability distributions.
