A Gentle Introduction to Risk Frameworks Beyond Forecasting

post by pendingsurvival · 2024-04-11T18:03:25.605Z

This was originally posted on Nathaniel's and Nuno's substacks (Pending Survival and Forecasting Newsletter, respectively). Subscribe here and here!

Discussion is also occurring on the EA Forum here (couldn't link the posts properly for technical reasons).

Introduction

When the Effective Altruism, Bay Area rationality, judgemental forecasting, and prediction markets communities think about risk, they typically do so along rather idiosyncratic and limited lines. These overlook relevant insights and practices from related expert communities, including the fields of disaster risk reduction, safety science, risk analysis, science and technology studies—like the sociology of risk—and futures studies.

To remedy this state of affairs, this document—written by Nathaniel Cooke and edited by Nuño Sempere—(1) explains how disaster risks are conceptualised by risk scholars, (2) outlines Normal Accident Theory and introduces the concept of high-reliability organisations, (3) summarises the differences between “sexy” and “unsexy” global catastrophic risk (GCR) scenarios, and (4) provides a quick overview of the methods professionals use to study the future. This is not a comprehensive overview, but rather a gentle introduction.

Risk has many different definitions, but this document works with the IPCC definition of the “potential for adverse consequences”, where risk is a function of the magnitude of the consequences and the uncertainty around those consequences, recognising a diversity of values and objectives.1 Scholars vary on whether it is always possible to measure uncertainty, but there is a general trend to assume that some uncertainty is so extreme as to be practically unquantifiable.2 Uncertainty here can reflect both objective likelihood and subjective epistemic uncertainty.

1. Disaster Risk Models

A common saying in disaster risk circles is that “there is no such thing as a natural disaster”. As they see it, hazards may arise from nature, but an asteroid striking Earth is only able to threaten humanity because our societies currently rely on vulnerable systems that an asteroid could disrupt or destroy.3

This section will focus on how disaster risk reduction scholars break risks down into their components, model the relationship between disasters and their root causes, structure the process of risk reduction, and conceptualise the biggest and most complex of the risks they study.

The field of global catastrophic risk, in which EAs and adjacent communities are particularly interested, has incorporated some insights from disaster risk reduction. For example, in past disasters, states have often justified instituting authoritarian emergency powers in response to the perceived risks of mass panic, civil disorder and violence, or helplessness among the public. However, disaster risk scholarship has shown these fears to be baseless,4–9 and this has been integrated into GCR research under the concept of the “Stomp Reflex”.

However, other insights from disaster risk scholarship remain neglected, so we encourage readers to consider how they might apply within their cause area and interests.

1.1 The Determinants of Risk

Popular media often refers to things like nuclear war, climate change, and lethal autonomous weapons systems as “risks” in themselves. This stands in contrast with how risk scholars typically think about risk. To these scholars, “risk” refers to outcomes—the “potential for adverse consequences”—rather than the causes of those outcomes. So what would a disaster risk expert consider a nuclear explosion to be if not a risk, and why does it matter?

There is no universal standard model of disaster risk. However, the vast majority highlight how it emerges from the interactions of several different determinants, specifically the following:

- Hazard: potentially harmful or destructive phenomena

- “The spark”, “what kills you”

- Exposure: the inventory of elements (people, animals, ecosystems, structures, etc.) present in an area in which hazard events may occur

- “Being in the wrong place at the wrong time”, “the reaction surface”

- Vulnerability: propensities of the exposed elements to suffer adverse effects when impacted by hazards

- “The tinder”, “how you die”

- The outcome of an event usually depends more on the vulnerabilities than the hazards

- Adaptive capacity: the strengths, attributes, knowledge, skills, and resources available to manage and reduce disaster risks

- People are not only vulnerable to disasters, they are also able to anticipate, cope with, resist, and recover from them

- Response: actions taken directly before, during, or immediately after the hazard event

- These can have positive or negative consequences, or both

The term “threat” is sometimes used as a general catch-all term for a “plausible and significant contributor to total risk”.10

A common point of confusion is that these are sometimes presented as an equation, for instance “Risk = Hazard × Exposure × Vulnerability/Capacity × Response”. Perhaps we could more accurately say Risk = f(H, E, V, C, R). However, this is not meant to be an equation you plug numbers into, but rather a concise way to illustrate the composition of risk. Basically, this is a framework intended to highlight the interplay of various factors and is not a perfectly coherent from-first-principles ontology; there are areas of overlap.

Imagine that someone gets shot. The hazard is the bullet, their vulnerabilities are the ways in which their body relies upon not having been shot (blood circulation, functioning organs, etc.), their capacities are their abilities to prepare, damage-control, and heal, the exposure is their presence in the path of a flying bullet, and the response is somebody performing first aid (and/or trying to cut out the bullet and accidentally making the situation worse).

The same goes for an earthquake (hazard) striking a village in an earthquake zone (exposure) containing poorly-constructed buildings and no earthquake warning system (vulnerabilities) but also disaster-experienced, skilled people possessing useful tools and resources (capacities) who eventually organise a relief operation (response).

Things can get more complicated: the earthquake could cause a landslide from above the village (compounding hazards), or the collapse of the village’s only granary could trigger a famine and social strife (cascading disaster), and so on.11

The Hazard-Exposure-Vulnerability-Capacity-Response (HEVCR) model was developed for disaster risk reduction, with a recent wave of interest from climate change scholars. It may not perfectly translate to other risks, for instance global catastrophic risks. It’s a start, though.

What this model excels at is illustrating how catastrophic events are the result of an interplay of factors, with multiple possible points of intervention. In contrast, a critique of the approach of the Effective Altruism community and its adjacent forecasting communities is that they tend to consider risk more simplistically, where catastrophes are “Acts of God” triggered by cosmic dice-rolls causing individual hazards to strike an abstract, undifferentiated “humanity”.12

It is difficult to cite an absence of sophisticated reasoning, but a forthcoming post by Ximena Barker Huesca surveys key texts by EA megafauna (MacAskill, Bostrom, Ord, etc.), and shows that they tend to pay little attention to risk determinants beyond hazards, and offer simplistic or nonexistent accounts of the social aspects of risk and the interactions between threats. Elsewhere, EAG “area of interest” lists focus exclusively on hazard, and it is only over the last year or two that the EA community has started to conceptualise AGI development as a human process that can be slowed (or even stopped) rather than a cosmic inevitability. Members of the EA community have been known to absorb Toby Ord’s table of probabilities of existential risks uncritically, rather than considering the actual threats and mechanisms involved. This results in people making complex life decisions based on very, very informal, abstract, and simplistic “estimates of doom”.

What the HEVCR framework helps us see is that catastrophes happen in specific places to specific people in specific ways at specific times, and hazardous phenomena only threaten humanity because human society is structured in ways that put people at risk from them.13

For a discussion of how hazard, vulnerability, and exposure can be applied to existential risk, see Liu, Lauta, and Maas’s Governing Boring Apocalypses,14 which includes a list of potential “existential vulnerabilities” like the lack of effective governance institutions or increasing global socioeconomic homogeneity.

So, how exactly could an asteroid strike or some other risk threaten humanity’s existence? What particular things would need to happen to get from “asteroid hits the Earth” to “the last human dies”? The disaster risk reduction field proposes we look at the specifics of the current state of the world and go from there.

1.2 Exposure

People sometimes dismiss exposure when studying GCR, since “everyone is exposed by definition”. This isn’t always true, and even when it is, it still points us towards interesting questions.

Considering exposure can highlight the benefits of insulating people from certain (categories of) hazards, for instance with island refuges.15,16

If everyone really were exposed to a given hazard (e.g. to an actively malevolent superintelligent AGI), it simply raises the question of how the hazard was born. We weren’t exposed before, so what happened? Was the creation of the AGI inevitable? What were the steps that led us from wholly unexposed to wholly exposed, and what were the points of intervention along the way? Thinking in these terms may help us avoid falling into oversimplification, and assist us in finding leverage points.

1.3 Pressure and Release

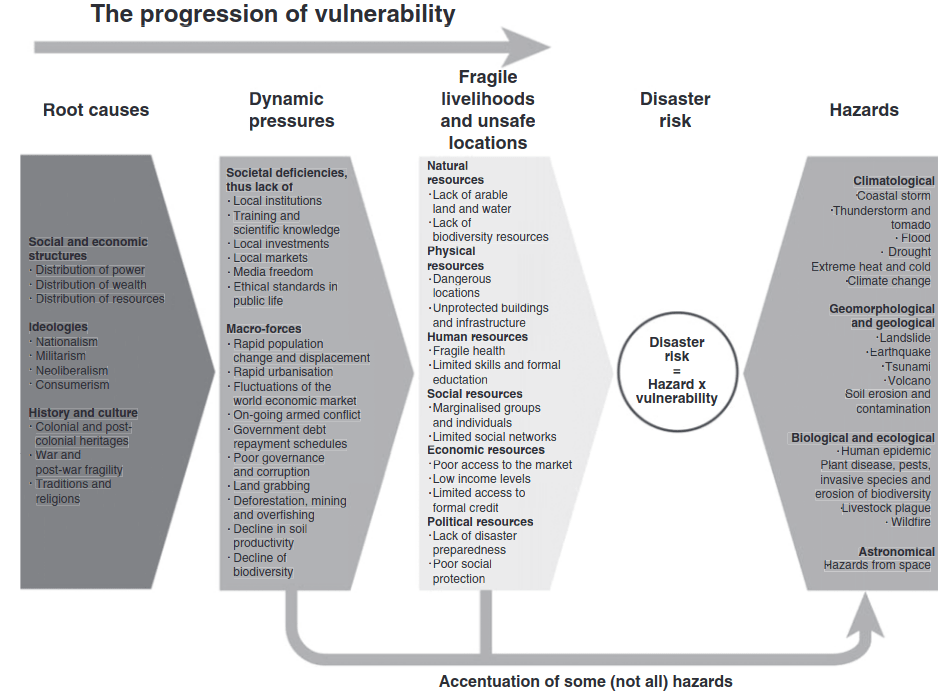

The Pressure and Release model (also known as “PAR” and the “crunch” model) was introduced to counter some deficiencies in previous models of risk.17 Previous approaches had focused on the physical aspects of hazards, neglecting the ways in which they can be the consequences of failed or mismanaged development, thus affecting marginalised groups disproportionately.13

According to PAR, vulnerability progresses through three stages:

- Root causes: interrelated large-scale structural forces—economic systems, political processes, ideologies, and so on—that shape culture, institutions, and the distribution of resources

- Often invisible or taken for granted as a result of being spatially distant, comparatively historically remote (e.g. colonisation), and/or buried in cultural assumptions and belief systems

- Dynamic pressures: more spatiotemporally specific material and ideological conditions that result (in large part) from root causes, and in turn generate unsafe conditions

- Unsafe conditions: the particular ways in which vulnerability manifests at the point of disaster

- Naturally these conditions also exist for some time before and often after the disaster event itself: poverty and marginalisation mean that, even before a hazard strikes, many people spend their daily lives in a “disaster state”

The model was developed to explain “natural” disasters rather than technological disasters, global catastrophes, or intentional attacks. While the specifics sometimes need adapting (and in some cases already have been, for instance for global health emergencies),18 the broad thrust of “disasters don’t come from nowhere, address the root causes!” remains vital.

1.4 The Disaster Cycle

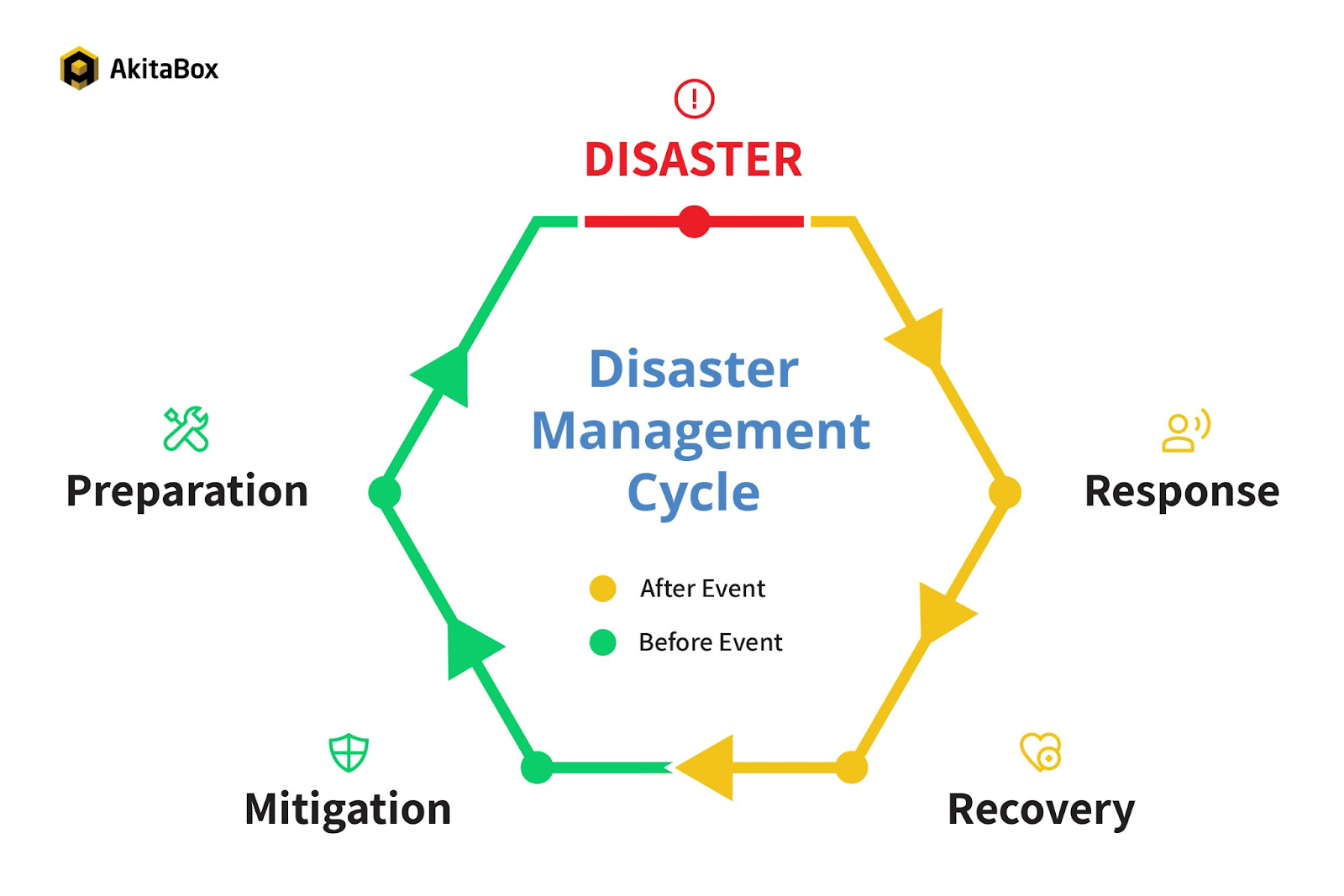

The disaster cycle breaks disasters down into separate phases to structure resilience efforts and emphasise how risk reduction should be treated as an ongoing integrated process, rather than isolated projects and headline-grabbing relief efforts.

The four phases of disaster are as follows:

- Mitigation (sometimes known as Prevention): actions taken to prevent or reduce the likelihood, impact, and/or consequences of disasters before they happen

- Assess the level of risk and survey its determinants, reduce vulnerability and exposure, minimise the probabilities and magnitudes of hazards

- Preparedness: actions taken to develop the knowledge and capacities necessary to effectively foresee, respond to, and recover from disasters

- Create and test plans and procedures, spread awareness, train people, stockpile and distribute resources

- Response: actions taken directly before, during, or immediately after the risk event

- Save lives, stabilise the situation, and reduce the potential for further harm

- Depends on the quality of the preparedness: “preparedness is the best response”

- Recovery: actions taken to return affected communities to a state of normalcy

- There is currently a movement to “build back better” or “bounce forward” rather than “bounce back”, i.e. to put communities in a better position than they started rather than aim to restore the (vulnerable) status quo

While treated as “canon”, the disaster cycle has some limitations: the stages are not always separate or temporally distinct (especially mitigation and preparedness), and the cycle is taken by some to suggest that disasters are necessary or inevitable “parts of the cycle”.[1]

1.5 Complexity and Causation

The HEVCR model points our attention towards the many factors involved in a given catastrophic scenario, and the importance of their interrelations.

Imagine that worsening climate change, economic inequality, and social division magnified by AI-powered misinformation campaigns motivate a group of omnicidal eco-terrorists to steal the information and technology necessary to engineer multiple highly virulent pathogens and release them at strategically-chosen airports across the world. This eventually wipes out humanity in large part due to dysfunctional governments failing to adequately respond in time.

Was that “extinction from synthetic biology”?[2]

Thus, many GCR researchers argue that the question is not “Is X an extinction risk?” but “How (much) could X contribute to the overall level of extinction risk?”12

This is further complicated by the key issue of “global systemic risk”.19 By this we mean that we live in a highly interdependent world: an exceptionally complex system blanketed with intricate networks of flowing food, energy, people, information, trade, money, and culture. Global systemic risk is premised on the idea that this creates great efficiency, but that the interconnectedness and complexity it entails create systemic fragility and instability. Local events (e.g. a big boat gets stuck in the Suez Canal) can have global consequences. Sudden changes (sometimes catastrophic ones) can occur purely as a result of internal system behaviour; no external “spark” is needed.

Complex system characteristics of particular interest are:

- Emergent behaviour: the system has properties and behaviours that its parts do not have on their own, and which emerge only when those parts interact as a whole.

- Examples can include language, material exchange, religion, or even society itself if humans are considered the parts, and e.g. World War II if you consider nations.

- A particularly famous example is crowd behaviour

- Feedback loops: where outputs of the system feed back into the system as new inputs.

- These can be:

- Positive (amplifying/reinforcing), e.g. decreasing demand eliminates jobs, which reduces demand, which eliminates jobs…

- Negative (dampening/stabilising), e.g. thermostats, various forms of homeostasis in the body[3]

- Nonlinear responses: where a change in the output of the system is not proportional to the change in the input.

- A Tunisian street vendor named Mohamed Bouazizi sets himself on fire to protest police harassment, triggering the Arab Spring
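To make the difference between the two kinds of feedback loop concrete, here is a minimal Python sketch. It is a toy illustration only: the update rules, the parameter values, and the function names `negative_feedback` and `positive_feedback` are assumptions made for this example, not models drawn from the literature cited here.

```python
def negative_feedback(temp, setpoint=20.0, correction=0.5, steps=10):
    """Thermostat-style loop: each step removes a fraction of the error."""
    history = [temp]
    for _ in range(steps):
        temp += correction * (setpoint - temp)  # push back towards the setpoint
        history.append(temp)
    return history


def positive_feedback(shortfall, amplification=0.5, steps=10):
    """Demand/jobs-style spiral: each shortfall enlarges the next one."""
    history = [shortfall]
    for _ in range(steps):
        shortfall += amplification * shortfall  # the output feeds its own growth
        history.append(shortfall)
    return history


cooling = negative_feedback(temp=30.0)
spiral = positive_feedback(shortfall=1.0)
print(f"thermostat error after 10 steps: {abs(cooling[-1] - 20.0):.4f}")
print(f"demand shortfall after 10 steps: {spiral[-1]:.2f}")
```

The same-sized nudge dies away in the first loop and compounds in the second, which is why the structure of the loops matters at least as much as the size of the initial disturbance.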

As a question for the reader: how well do the models described in earlier sections apply in light of these dynamics? How easily can risks and catastrophes be broken up into separate factors or stages, and what is gained or lost by doing so?

For those interested, related concepts to global systemic risk include globally networked risks,20 anthropocene risk,21 femtorisks,22 synchronous failure,23 critical transitions,24 and polycrisis.25

2 Normal Accidents and High-Reliability Organisations

A mainstay of the safety science and science and technology studies literatures, Charles Perrow’s Normal Accident Theory holds that it is reductive and unhelpful to explain major accidents as results of individual failures like operator error[4] or equipment malfunction.26

Instead, many accidents (known as “system accidents”) are seen as the inevitable structural consequences of highly complex and/or tightly coupled systems, where one malfunction can trigger, conceal, or impede the repair of another. The vast number of possible combinations between parts creates novel failure combinations and chain reactions that make major accidents difficult or even impossible to anticipate. These accidents are considered “normal” not because they are frequent or expected, but because they are inevitable features of certain types of organisation.

For Perrow, an organisation has high interactive complexity if one part is able to affect many others. Complex organisations typically have many components and control parameters, and exhibit nonlinear responses and feedback loops. This gives rise to unfamiliar or unexpected sequences of interactions that are either imperceptible or incomprehensible to their operators.

Two parts of the system are tightly coupled if there are close and rapid associations between changes in one part and changes in another with little to no slack, buffer, or “give” between them. If many components are tightly coupled, disturbances propagate quickly across the system.
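The role of slack can be sketched with a deliberately crude Python model. Everything in it is an assumption made for illustration: components sit in a simple chain, each link absorbs a fixed amount of disturbance, and the function name `propagate` is hypothetical. Perrow's own account is qualitative and far richer than this.

```python
def propagate(disturbance, slack_per_link, n_components):
    """Count how many components in a chain a disturbance reaches.

    Assumed toy model: each link absorbs up to `slack_per_link` units
    of disturbance before passing the remainder to the next component.
    """
    affected = 0
    for _ in range(n_components):
        if disturbance <= 0:
            break  # the remaining slack has absorbed the disturbance
        affected += 1
        disturbance -= slack_per_link
    return affected


tight = propagate(disturbance=3.0, slack_per_link=0.0, n_components=10)
loose = propagate(disturbance=3.0, slack_per_link=1.0, n_components=10)
print(f"tightly coupled: {tight} of 10 components affected")
print(f"with slack:      {loose} of 10 components affected")
```

With no slack, the disturbance reaches every component in the chain. With a modest buffer on each link, it stops after a few.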

There is a wide range of classic safety features used by safety engineers and resilience professionals, for instance redundancies, safety barriers, and improved operator training.

While these are generally valuable, Perrow suggests a number of strategies specific to the risk posed by normal accidents:

- Decrease interactive complexity

- Reduce opportunities for one malfunction to interact with another

- Increase legibility

- Improve the ability of operators to understand the dynamics of the system they control

- Loosen tight couplings

- Add buffers and lag time to improve the likelihood of a successful response to a malfunction

- Ensure that where a fast coupling exists, the systems designed to manage it can react at a similar speed to any potential unfolding incident

- Decentralise interactively complex systems

- Allow operators at the point of component/subsystem failures the discretion to respond to small failures independently and prevent them from propagating, rather than relying on instructions from senior managers who may lack the speed, information, specialist expertise, or contextual knowledge required

- Sometimes the manual must be disregarded in favour of local conditions or common sense

- Centralise tightly coupled systems

- Allow for rapid, reactive control

Perrow is highly pessimistic about our ability to reduce normal accident risk in systems that are both highly complex and tightly coupled, and recommends they should be abandoned altogether. Other scholars criticise this as overly fatalistic.

There are some ways in which safety features can counterintuitively increase danger. For instance, adding safety features increases the complexity of the overall system, which adds new possible unforeseen failure modes. They can also encourage worker complacency, and sometimes embolden managers to operate at a faster pace with a more relaxed attitude to safety practices.

Incidentally, this latter phenomenon is closely related to the economic concept of moral hazard, where an actor is incentivised to adopt riskier behaviour after acquiring some kind of protection. One may be more careless with their phone if they know it is insured.

An outgrowth of Normal Accident Theory is the study of high reliability organisations. High reliability organisations such as air traffic control systems and nuclear power plants tend to share a number of characteristics, such as deference to expertise, a strong safety culture that emphasises accountability and responsibility, and a preoccupation with proactively identifying and addressing issues combined with a commitment to continually learning and improving.27–33 There is an extensive literature on the topic, which may prove of use to those involved in GCR-relevant organisations where accidents could potentially be calamitous, for example nuclear weapons facilities, BSL-4 labs, and AI companies.[5]

One key takeaway that Normal Accident Theory shares with the rest of the safety and resilience literature is that there is almost always a tradeoff between short-term efficiency and profitability, and long-term reliability and safety. Short time-horizons combined with bad incentives and power structures lead to the latter frequently being hollowed out in service to the former.

Despite its massive impact on the theory and practice of safety, Normal Accident Theory is not without its criticisms. Notably, it has been accused of being vague, hard to empirically measure or test, and difficult to practically implement. Its position that major accidents are inevitable in a sufficiently large and complex organisation may lead to fatalism and thus missed opportunities.

Despite this, Normal Accident Theory has reached beyond its home domains of electricity generation, chemical processing, and so on, to more GCR-relevant domains like artificial intelligence.34–38

3 Sexy and Unsexy Risks

Karin Kuhlemann argues that too much catastrophic risk scholarship is focused on “sexy” risks like asteroid strikes and hostile AI takeovers, rather than “unsexy” risks like climate change.39 In her view, this is due to a mixture of complexity, cognitive biases, and overconfident and over-certain failures of imagination.

The below simply summarises her argument; don’t be confused if some of it seems incompatible with the HEVCR model outlined in section 1.1. Note that Kuhlemann uses the term “risk” to refer to a very heterogeneous group of phenomena; perhaps a more fitting term would have been “threats”.

In any case, per Kuhlemann, sexy risks are characterised by:

- Epistemic neatness: they are easily categorisable, with clear disciplinary homes

- Hostile AGI takeover → computer science, neuroscience, and philosophy

- Meteor and asteroid strikes → astronomy

- Pandemic → epidemiology, pathology, medicine

- Sudden onset: they crystallise in a few hours, or maybe a few years

- “All hell breaks loose”

- Technology is involved: they include flattering ideas about human technological capabilities, where advanced (future) technologies are the problem’s cause, its only solution, or both

- Humanity receiving Promethean punishment for uncovering things Man Was Simply Not Meant To Know and everyone-is-saved technofixes are different sides of the same movie-plot coin

Unsexy risks, by contrast, are characterised by:

- Epistemic messiness: they are necessarily transdisciplinary, and cannot simply be governed by a pre-existing institution that deals with “this sort of thing”

- Difficult to study, communicate, and fund

- Gradual build-up: they are often based on accumulated and latent damage to collective goods

- Baselines shift as people gradually, often subconsciously, alter the definition of “normal”

- Behavioural and attitudinal drivers: they are driven primarily by human behaviour, and may require radical social change to prevent or even just mitigate

Kuhlemann argues that human overpopulation is the best example of an “unsexy” global catastrophic risk, but this is not taken seriously by the vast majority of global catastrophic risk scholars.

4 Forecasting, Foresight, and Futures

The global consequences of nuclear war is not a subject amenable to experimental verification — or at least, not more than once.

Carl Sagan

One thing a person cannot do, no matter how rigorous his analysis or heroic his imagination, is to draw up a list of things that would never occur to him.

Thomas Schelling

Trying to say useful things about events that have not yet happened and may never happen is very difficult. This is especially true if the events in question are unprecedented, poorly-defined, and/or low-probability yet high-impact, when they exist in domains of high complexity and/or deep uncertainty,2 and when they are subject to significant amounts of expert disagreement.40,41 Alas, this is where we find ourselves.

A notable type of problem in this domain is the Black Swan, an event that is extremely impactful, yet so (apparently) unlikely that it lies wholly beyond the realm of normal expectations. Black Swans are thus impossible to predict and explainable only in hindsight.42 Commonly cited examples include 9/11, World War I, and the 2008 financial crisis.

Luckily, there is a field called “Futures Studies” that can help us out. There are many ways of cutting it up, but to make things easy we’re just going to say:

- Futures Studies: the systematic study of possible, probable, and preferable futures, including the worldviews and myths that underlie them

- Foresight methods: quantitative and qualitative tools for exploring the shape of possible futures

- (This is a subsection of Futures Studies)

- Forecasting methods: quantitative tools for estimating the future values of variables and/or the likelihoods of future events

- This includes questions like “What will the average price of oil be next quarter?” and “What is the probability that X will win the 2028 US Presidential election?”

- (This is a class of foresight methods)

We do not mean to set up any artificial conflict between forecasting and qualitative foresight methods: they complement each other, and there is broad agreement between them on a number of issues. For instance, foresight and forecasting professionals generally agree that a large, diverse group of people sharing and processing lots of different sources of information is better than a single person or small homogenous group, and that producing quality work becomes dramatically harder as you extend your time horizon.

4.1 Forecasting

Quantitative forecasts are useful for several purposes, especially when dealing with comparisons. How should we prioritise different courses of action? What do we do about risk-risk trade-offs, that is, when a given action could decrease risk from one source while increasing risk from another?[6]

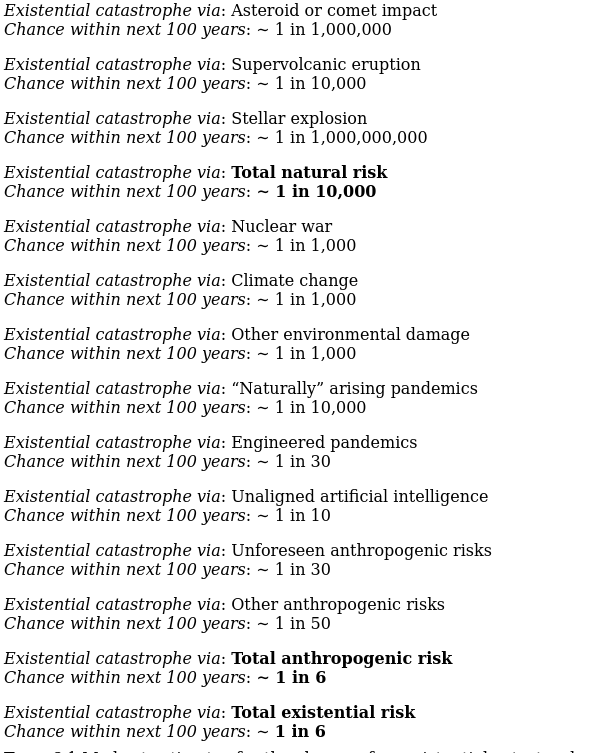

However, they can also cause problems when produced by unrigorous or controversial means. For instance, some GCR scholars consider Toby Ord’s subjective judgements of existential risk from different sources44 (see below) to be useful formalisations of his views, valuable as order-of-magnitude estimates. Others are very critical of this approach, contending that Ord’s numbers are mostly mere guesses that are presented as far more rigorous than they actually are, potentially giving Ord’s personal biases a scientific sheen.[7] The debate is ongoing.

Numbers provide an air of scientific rigour that is extremely compelling to the lay public,45 and anchoring bias means that people’s judgements can be influenced by a reference point (often the first one they see) regardless of its relevance and/or quality.

All this means that numerical estimates are excellent for some purposes, but must be generated and communicated responsibly.

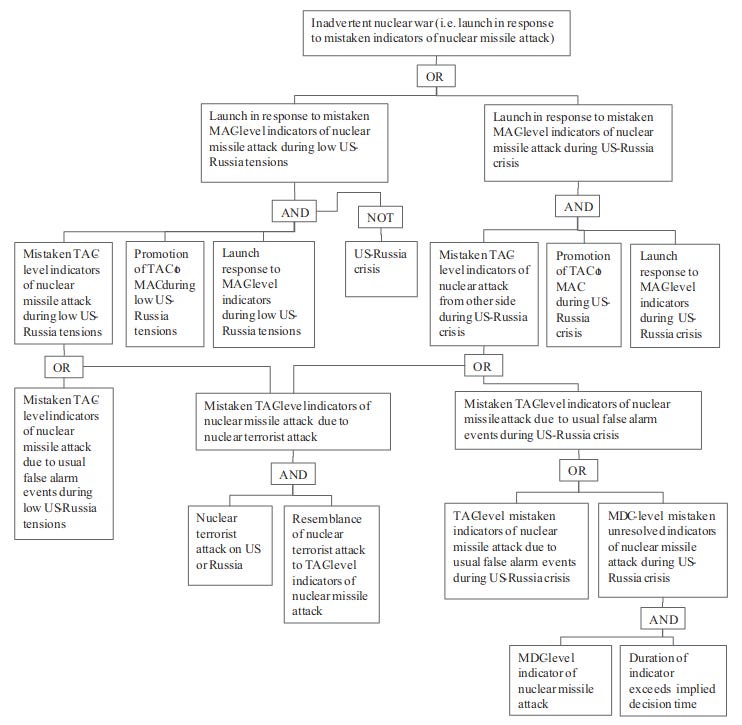

Examples of forecasting methods include:

- Toy models: deliberately simplistic mathematical models, e.g. assuming that pandemics occur according to a certain statistical distribution46

- Fault trees: branching a logic tree backwards from overall system failure, representing the proximate causes of failure as different nodes. Add nodes representing the conditions of those nodes failing, and then the conditions of those nodes failing, and so on. If possible, assign a probability of failure to each node, and sum/multiply to get the overall probability

- See, for example, the simple fault tree for inadvertent nuclear war between the US and Russia, below47

- Individual subjective opinion: one person’s best guess

- Superforecasting: “superforecasters” are people, often with certain traits (intelligence, cognitive flexibility/open-mindedness, political knowledge…) and some training, who combine their judgements in structured ways48

- These superforecasters are said to frequently outperform domain-experts in forecasting competitions, though the extent to which these are fair comparisons is debated, and in any case the literature leaves much to be desired49
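The “sum/multiply” step in the fault tree entry above can be sketched in a few lines of Python. This is a minimal sketch under loud assumptions: the tree structure, the event names, and every probability are made up for illustration (they are not taken from the nuclear war fault tree cited above), and all events are treated as independent, which real analyses often cannot assume.

```python
from math import prod


def and_gate(probabilities):
    """All inputs must occur: multiply (assumes independent events)."""
    return prod(probabilities)


def or_gate(probabilities):
    """Any one input suffices: 1 - P(none occur), assuming independence."""
    return 1 - prod(1 - p for p in probabilities)


# Hypothetical tree: the top event needs an initiating false alarm AND a
# failure of safeguards; the false alarm can come from EITHER of two causes.
p_sensor_glitch = 0.01      # made-up number
p_misread_exercise = 0.02   # made-up number
p_safeguards_fail = 0.1     # made-up number

p_false_alarm = or_gate([p_sensor_glitch, p_misread_exercise])
p_top_event = and_gate([p_false_alarm, p_safeguards_fail])
print(f"P(false alarm) = {p_false_alarm:.4f}")
print(f"P(top event)   = {p_top_event:.5f}")
```

For small probabilities the OR gate is close to a plain sum (0.0298 here against 0.01 + 0.02 = 0.03), which is where the “sum” shorthand comes from. The output is only as good as the node probabilities and the independence assumption behind it.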

Various methods work better or worse when applied to/in different problems/contexts. Superforecasting, for instance, has been extremely successful at predicting the outcomes of binary propositions over short timespans (things like “Before 1 May 2024, will Russia or Belarus detonate a nuclear device outside of Russian and Belarusian territory or airspace?”), but shows comparatively less promise on less well-defined questions resolving more than a few years in the future (“If a global catastrophe occurs, how likely is it that it will be due to either human-made climate change or geoengineering?”).50
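Performance on short-horizon binary questions like the first example is commonly measured with the Brier score, the mean squared difference between the stated probabilities and what actually happened. The Python sketch below shows the calculation. The forecasters, their numbers, and the outcomes are hypothetical, and plain averaging is only one simple way of combining judgements, used here as an assumption rather than as a description of how any particular superforecasting team works.

```python
from statistics import mean


def brier_score(forecasts, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes.

    0.0 is a perfect score; always answering 0.5 scores 0.25.
    """
    return mean((f - o) ** 2 for f, o in zip(forecasts, outcomes))


# Hypothetical data: three forecasters answer four binary questions.
outcomes = [0, 0, 1, 0]
forecasters = {
    "a": [0.10, 0.20, 0.70, 0.05],
    "b": [0.30, 0.10, 0.90, 0.20],
    "c": [0.05, 0.40, 0.60, 0.10],
}

# One simple aggregation rule (an assumption): average per question.
pooled = [mean(column) for column in zip(*forecasters.values())]

individual_scores = {
    name: brier_score(forecasts, outcomes)
    for name, forecasts in forecasters.items()
}
pooled_score = brier_score(pooled, outcomes)

for name, score in individual_scores.items():
    print(f"forecaster {name}: {score:.4f}")
print(f"pooled mean : {pooled_score:.4f}")
```

Because squared error is convex, the averaged forecast can never score worse than the forecasters' average score, although it can still lose to the best individual. Note that a score like this can only be computed once questions resolve, which is part of why long-range, loosely defined questions are so much harder to evaluate.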

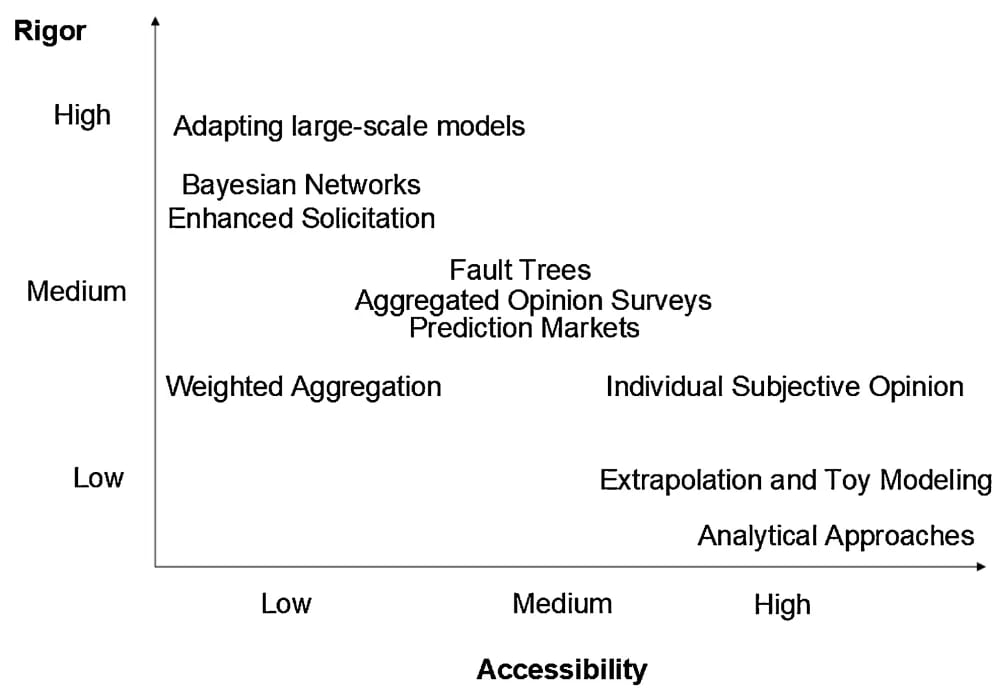

Beard et al outline the forecasting methods that have been used in GCR thus far, and evaluate their rigour, how well they handle uncertainty, their accessibility, and their utility.51

In a response to this paper, Baum notes a negative correlation between the rigour of a method and its accessibility.52

This is pretty unsurprising, and squares with Beard et al’s observation that the least rigorous methods are also the most popular and the most frequently referenced. Virtually all quantitative existential risk estimates referenced in the popular press (and a surprisingly high proportion of those cited in the research and policy spheres!) are point estimates from individuals presented with little to no methodology. The most notable examples here are Martin Rees’ ½53 and Toby Ord’s ⅙.44

Beard et al conclude by arguing that (1) forecasting methods are valuable tools that form a key part of existential risk research, but (2) the mere fact that a particular probability estimate has been produced does not mean it is worthy of consideration or reproduction, and (3) that scholars should be more transparent about how their estimates are generated.

4.2 Foresight

Foresight is a broader category, incorporating qualitative tools which often – but not always – eschew firm predictions in favour of generating information and insight about the broad contours of possible futures.50 This exploratory approach makes them particularly useful for identifying Black Swans, especially when intentionally modified to do so.54

The goal is usually not to create fine-tuned predictions and optimise accordingly, but to create robust and flexible plans that allow organisations to adapt in response to a changing and uncertain future. This overlaps with decision theories developed for problems with similar characteristics to GCR, for instance decision-making under deep uncertainty (DMDU).2

There is a rough split between technocratic foresight methods, and participatory or critical ones. Examples of the former include:

- Horizon scans: a wide class of techniques for the early detection and assessment of emerging issues

- These are often based on structured expert elicitation processes, e.g. the Delphi method55 or the IDEA protocol,56 where experts repeatedly deliberate and vote on the likelihood and magnitude of the impacts of various issues by a certain date

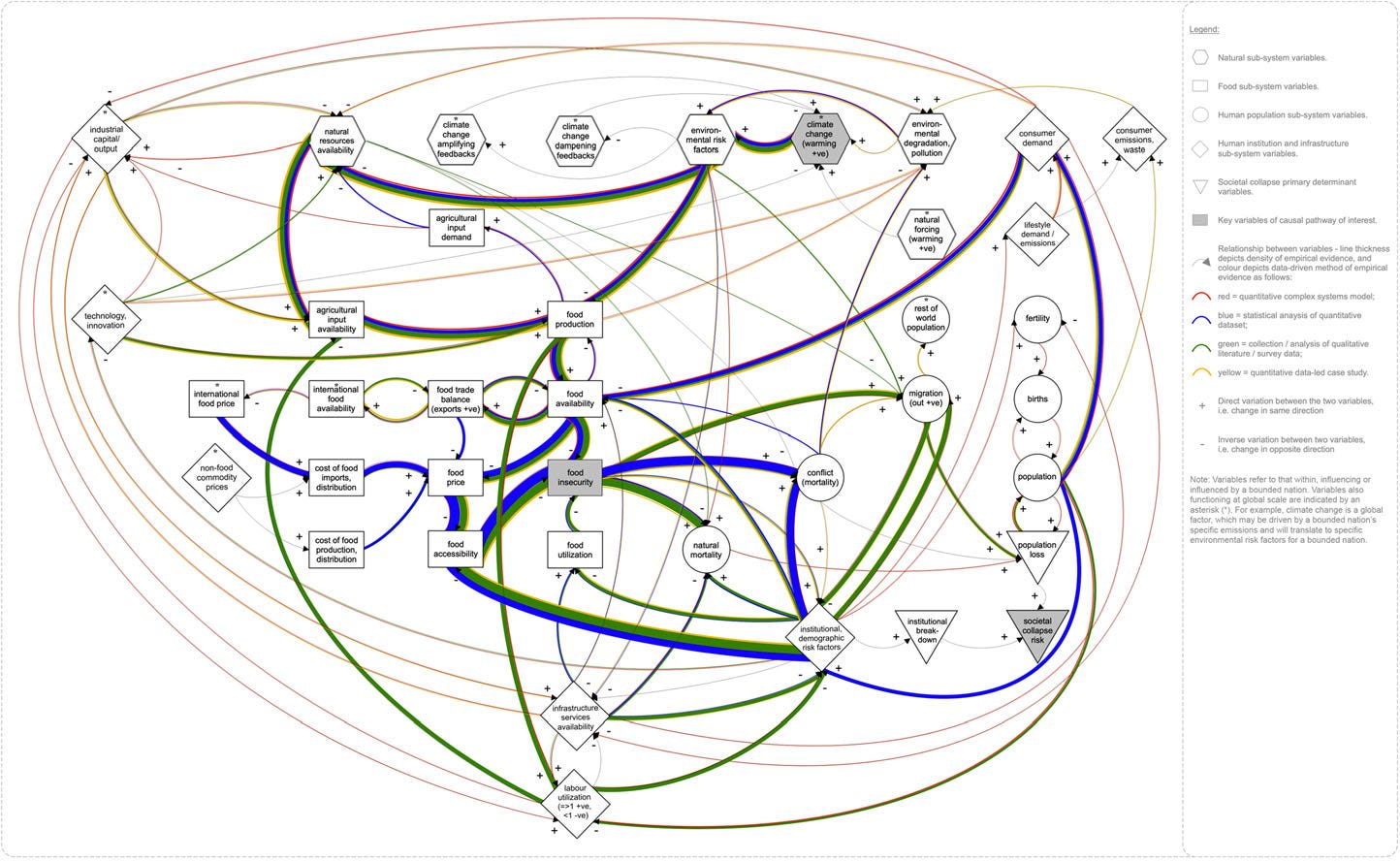

- Causal loop diagrams: map out the various elements of the system and how they affect each other, sometimes performing computational simulations to explore different knock-on effects

- Scenario plans: participants collectively generate multiple (usually 3-5) widely differing descriptions of plausible states of a particular system (the world, the Singaporean tech sector…) at a given time, with the scenarios collectively forming an envelope of plausible futures

- Wargames: serious games and role-play exercises, used for both training and analysis
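The iterative structure of the expert elicitation processes mentioned in the list above (experts estimate, see group feedback, then revise) can be sketched as a toy simulation. This is only an illustration under strong assumptions: real Delphi or IDEA exercises involve anonymised feedback and discussion, whereas here the revision behaviour is replaced by a hypothetical `pull` parameter that moves each expert part-way towards the group median. The starting estimates are invented.

```python
from statistics import median


def delphi_round(estimates, pull=0.5):
    """One round: each expert sees the group median and moves part-way towards it.

    `pull` is a hypothetical stand-in for how strongly experts revise after feedback.
    """
    group_median = median(estimates)
    return [e + pull * (group_median - e) for e in estimates]


def run_delphi(initial_estimates, rounds=3, pull=0.5):
    """Return the estimates after each round, starting with the initial ones."""
    history = [list(initial_estimates)]
    for _ in range(rounds):
        history.append(delphi_round(history[-1], pull))
    return history


def spread(estimates):
    """Disagreement within the group, measured as max minus min."""
    return max(estimates) - min(estimates)


# Hypothetical initial likelihood estimates from four experts.
hypothetical_initial = [0.05, 0.10, 0.20, 0.60]
history = run_delphi(hypothetical_initial)

for i, estimates in enumerate(history):
    print(f"round {i}: median={median(estimates):.3f}, spread={spread(estimates):.3f}")
```

Note that convergence is built in by assumption here, so the sketch shows the mechanics of repeated rounds rather than any evidence that the resulting consensus is accurate.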

While valuable, these tools have been critiqued for restricting the task of exploring and deciding the future to a tiny homogeneous group of privileged experts, and in consequence producing outputs that reflect the preferences and biases of that group.58

In contrast, participatory foresight techniques place a greater emphasis on democratic legitimacy and the meaningful involvement of citizens, especially those from otherwise marginalised groups. Examples include:[8]

- Citizens’ assemblies: randomly-chosen stakeholders participate in facilitated discussions or exercises designed to help and encourage them to think about the future, often in order to generate policy recommendations

- Games: games or role-plays in which citizens experience and experiment with the long-term impacts of near-term decisions

- Immersive experiences: interactive simulations, exhibitions, or theatre that make possible futures feel “real” and allow participants to explore them in an intuitive and embodied way

For an overview of more technocratic methods see the UK government’s Futures Toolkit;59 for more participatory methods see Nesta’s Our Futures report.60 Some approaches, like speculative fiction, do not fit easily into either category.

As with forecasting, foresight methods each have different context/question-dependent strengths and weaknesses. They are rarely used in isolation, and users are more confident when a variety of methods converge on similar results.

Many foresight methods share similar disadvantages. For example, the lack of concrete predictions makes falsifiability and validation difficult, which means that there is a huge range of foresight tools out there, yet few ways of easily distinguishing the good from the bad ones. The rigorous evaluation of the effectiveness of various foresight tools is a major research gap.[9] There have been a few studies on Delphi-based horizon-scanning and the IDEA protocol, which have yielded positive results.54

In addition, the rejection of prediction and optimisation in favour of exploration and adaptation can lead to missed opportunities, especially in domains that actually are fairly stable and/or predictable. Where behaviour is well-characterised and unexpected events are likely to be rare and low-impact, for instance industrial production or energy demand in stable regions, optimisation makes the most sense. In any case, optimality vs robustness is a spectrum; there is almost always a role for both.
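The trade-off between optimality and robustness can be illustrated with a small worked example. The sketch below compares two hypothetical plans under three hypothetical scenarios, once by expected value (an optimising criterion that needs scenario probabilities) and once by minimax regret (one commonly used robustness criterion that does not). All payoffs, probabilities, and names are invented for illustration.

```python
# Hypothetical payoffs (higher is better) for each plan under each scenario.
payoffs = {
    "optimised plan": {"business as usual": 100, "moderate shock": 40, "severe shock": -80},
    "robust plan": {"business as usual": 70, "moderate shock": 60, "severe shock": 20},
}

# Hypothetical scenario probabilities; under deep uncertainty these may be unknown.
probabilities = {"business as usual": 0.90, "moderate shock": 0.08, "severe shock": 0.02}


def expected_value(plan):
    """Probability-weighted average payoff of a plan."""
    return sum(probabilities[s] * payoff for s, payoff in payoffs[plan].items())


def max_regret(plan):
    """Worst-case shortfall of a plan relative to the best plan in each scenario."""
    regrets = []
    for scenario in probabilities:
        best_in_scenario = max(p[scenario] for p in payoffs.values())
        regrets.append(best_in_scenario - payoffs[plan][scenario])
    return max(regrets)


best_by_expected_value = max(payoffs, key=expected_value)
best_by_minimax_regret = min(payoffs, key=max_regret)

print("expected value picks:", best_by_expected_value)
print("minimax regret picks:", best_by_minimax_regret)
```

With these made-up numbers the two criteria disagree: the optimised plan wins on expected value, while the robust plan has the smaller worst-case regret. Which criterion is appropriate depends on how much the scenario probabilities can be trusted, which is the sense in which the choice is a spectrum rather than a binary.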

Finally, those who use foresight tools usually do so in order to better navigate the future, which means combatting or avoiding the very problems they foresaw. Thus, people may make their foresight results less accurate by using them. A few examples of similar problems are catalogued here [LW · GW]. The future is profoundly affected by how people understand it in the present.

4.3 Futures Studies

The field of futures studies incorporates far more than the above methods, and so this review has been, by necessity, limited. For instance, futures studies includes huge amounts of discourse on the politics of the future and how predictions and statements made about possible futures are used as tools (and weapons) in the present.[10] Elsewhere, scholars consider how we should decide on the desirability of various kinds of futures, and how those decisions are affected by structures and inequalities of power.

A relatively well-defined field is the study of sociotechnical imaginaries, “collectively held, institutionally stabilised, and publicly performed visions of desirable futures, animated by shared understandings of forms of social life and social order attainable through, and supportive of, advances in science and technology”.61 Focused study of the sociotechnical imaginaries surrounding GCR is surprisingly neglected, but some work has been done on the narratives surrounding AI, geoengineering, and space colonisation.62–64

Another relevant example is utopian studies, which is more or less what it says on the tin. Utopian studies scholars have taken a significant interest in Existential Risk Studies, as well as Longtermism and the extropian transhumanism and Californian Ideology that it emerged from.65–70

Given that modern GCR research is so profoundly influenced by Nick Bostrom’s vision of an interstellar transhumanist utopia—a utopian imaginary with as large a potential for misuse for power and profit as any other—it may indeed be advisable to turn the microscope on ourselves.

On a related note, there is a long tradition of powerful actors (often with vast conflicts of interest) telling the public that we have no choice but to build catastrophically dangerous technologies, either because somebody else will or because the benefits are too big to turn down. These technological prophecies have been influential in fields from nuclear weapons to climate change to AI safety, and act to shift accountability from those taking risky decisions while circumventing the democratic process. This rhetoric is usually unfalsifiable, and the historical track-record of its predictions is unimpressive.71

Self-fulfilling future visions are not restricted to prophecies: fantasies are just as—if not more—influential. Science fiction has a vast track record of informing and shaping future technological and design decisions, from Star Trek’s Personal Access Display Devices to how Neuromancer’s concepts of “cyberspace” and “console cowboys” shaped the development of the internet, hacker culture, and digital technology more broadly.

Along those lines, it is notable that Sam Altman has commented that “eliezer [yudkowsky] has IMO done more to accelerate AGI than anyone else.” Those working in AI Safety (or other fields) may wish to dedicate significantly more attention to the ways in which they may increase (or have already increased) the level of risk. Assuming the creation of a dangerous technology to be inevitable might just make it so.

Conclusion

When funders and academics have tried to make sense of global catastrophic risks, they have occasionally used judgmental forecasting of the Tetlock variety, but they also have relied on hedgehog-style thinking [LW · GW] and stylized mathematical models. These approaches can be valuable, but they are not the whole story. Fields like disaster risk reduction, science and technology studies, safety science, risk analysis, and futures studies have been studying problems remarkably similar to ours for a long time. Hopefully this piece has given readers some notion of the value to be found in these fields. Members of these fields often show considerable interest in GCR when it is mentioned to them, and we have an opportunity to build networks in valuable and neglected areas.

We have surveyed several promising routes for GCR theory and practice over the course of this essay. Disaster risk can be productively analysed by breaking it up into hazard, exposure, vulnerability, adaptive capacity and response. Current GCR work places a huge emphasis on hazard, neglects the societal vulnerabilities that put us at risk from the hazards in the first place, and even further neglects the underlying root causes that generate vulnerabilities (and in our case, technological hazards as well).

Disasters often occur in cycles, with work clustering into mitigation, preparedness, response, and recovery, but this can be complicated given the complexity and interconnectivity of the world as a system. From this perspective, most mainstream GCR research is excessively reductive, often ignoring complicated causal networks and the behavioural characteristics of complex systems. The study of global systemic risk and related phenomena is of extreme relevance to GCR work, and we would do well to add it to our conceptual toolkit.

Technological systems are often complex and tightly coupled, and thus fall victim to “normal accidents”. Many interconnected components rapidly impacting one another breed unexpected—and sometimes calamitous—interactions, and traditional safety features can sometimes counterintuitively increase risk, for instance through the creation of moral hazards. High-reliability organisations, with their preoccupation with proactive continuous improvement and a culture of accountability, may prove to be a promising route for reducing risks posed by some organisations in hazardous sectors.

Evaluations of different sources of GCR may be biased towards “sexy” risks, which are epistemically neat, occur “with a bang”, and involve futuristic technologies, at the expense of “unsexy” risks, which are epistemically messy, build up gradually, and are primarily driven by social factors.

When attempting to understand the course of the future, quantitative forecasts are a useful tool, but they represent only a narrow subset of the concepts developed to systematically study futures. At their best, quantitative forecasts can help us rigorously make decisions and allocate resources, but these efforts can be hamstrung by an uncritical reliance on accessible but unrigorous techniques like individual subjective opinions.

Forecasting techniques also appear substantially less well-adapted for questions involving poorly-defined and/or low-probability high-impact events (such as “Black Swans”), which exist in domains of high complexity or deep uncertainty and are subject to significant amounts of expert disagreement. Qualitative foresight techniques may be able to help in this regard, focusing as they do on exploring the shape of possible futures and developing plans that are robust to a wide variety of outcomes. The best approach will likely involve a combination of foresight and forecasting techniques, combining different information streams to create plans with a balance of efficiency to robustness that is appropriate to the situation at hand.

There is a large body of work that can help us contextualise the sociotechnical imaginaries and utopian philosophies that unconsciously shape people’s perceptions and decisions. These concepts can be productively applied to classic global catastrophic threats, as well as to the field itself. GCR research is new in some ways, but painfully unoriginal in others, and the self-fulfilling prophecies and fantasies that may cause people to inadvertently create the problems they seek to solve are a well-known and dangerous pattern.

This post has been long; it is also just the tip of the iceberg.

One term that has been conspicuous by its absence is “resilience”: there is an extensive body of research on “resilience theory” that focuses on how to improve the robustness and adaptability of systems subjected to shocks. It has been excluded because there is already an introduction to resilience for GCR researchers and practitioners in the form of “Weathering the Storm: Societal Resilience to Existential Catastrophes” available here.

Hopefully, after becoming more acquainted with these concepts, people in EA, forecasting and adjacent communities will be able to incorporate them into their decision-making methods, and critically and productively engage with the relevant fields rather than dismissing them out of hand.

Effective Altruists (and readers in general) interested in these topics may wish to look at other related EA Forum posts, for instance:

- Doing EA Better [EA · GW] (specifically section two: “Expertise and Rigour”)

- Beyond Simple Existential Risk: Survival in a Complex Interconnected World [EA · GW]

- How to think about an uncertain future: lessons from other sectors & mistakes of longtermist EAs [EA · GW]

- A practical guide to long-term planning – and suggestions for longtermism [EA · GW]

- Foresight for Governments: Singapore’s Long-termist Policy Tools & Lessons [EA · GW]

- Foresight for AGI Safety Strategy [EA · GW]

- Statement on Pluralism in Existential Risk Studies [EA · GW]

- Model-Based Policy Analysis under Deep Uncertainty [EA · GW]

- …and a forthcoming post by Ximena Barker Huesca [EA · GW] evaluating how risk is conceptualised in GCR and how disaster risk reduction frameworks may be applied to GCR mitigation

Bibliography

1. Reisinger, A. et al. The Concept of Risk in the IPCC Sixth Assessment Report. (2020).

2. Decision Making under Deep Uncertainty: From Theory to Practice. (Springer, Cham, 2019).

3. Kelman, I. Disaster by Choice: How Our Actions Turn Natural Hazards into Catastrophes. (Oxford University Press, 2020).

4. Alexander, D. E. Misconception as a Barrier to Teaching about Disasters. Prehospital Disaster Med. 22, 95–103 (2007).

5. Quarantelli, E. Conventional Beliefs and Counterintuitive Realities. Soc. Res. Int. Q. Soc. Sci. 75, 873–904 (2008).

6. Tierney, K., Bevc, C. & Kuligowski, E. Metaphors Matter: Disaster Myths, Media Frames, and Their Consequences in Hurricane Katrina. Ann. Am. Acad. Pol. Soc. Sci. 604, 57–81 (2006).

7. Drury, J., Novelli, D. & Stott, C. Psychological disaster myths in the perception and management of mass emergencies. J. Appl. Soc. Psychol. (2013).

8. Clarke, L. Panic: Myth or Reality? Contexts 1, 21–26 (2002).

9. Clarke, L. & Chess, C. Elites and Panic: More to Fear than Fear Itself. Soc. Forces 87, 993–1014 (2008).

10. Kemp, L. et al. Climate Endgame: A Research Agenda for Exploring Catastrophic Climate Change Scenarios. PNAS 119, (2022).

11. Pescaroli, G. & Alexander, D. Understanding Compound, Interconnected, Interacting, and Cascading Risks: A Holistic Framework. Risk Anal. 38, 2245–2257 (2018).

12. Cremer, C. Z. & Kemp, L. T. Democratising Risk: In Search of a Methodology to Study Existential Risk. https://papers.ssrn.com/abstract=3995225 (2021).

13. Wisner, B., Blaikie, P., Cannon, T. & Davis, I. At Risk: Natural Hazards, People’s Vulnerability and Disasters. (Routledge, London, 2003).

14. Liu, H.-Y., Lauta, K. C. & Maas, M. M. Governing Boring Apocalypses: A new typology of existential vulnerabilities and exposures for existential risk research. Futures 102, 6–19 (2018).

15. Boyd, M. & Wilson, N. Optimizing Island Refuges against global Catastrophic and Existential Biological Threats: Priorities and Preparations. Risk Anal. 41, 2266–2285 (2021).

16. Boyd, M. & Wilson, N. Island refuges for surviving nuclear winter and other abrupt sunlight-reducing catastrophes. Risk Anal. 43, 1824–1842 (2022).

17. Naheed, S. Understanding Disaster Risk Reduction and Resilience: A Conceptual Framework. in Handbook of Disaster Risk Reduction for Resilience: New Frameworks for Building Resilience to Disasters (eds. Eslamian, S. & Eslamian, F.) 1–25 (Springer International Publishing, Cham, 2021). doi:10.1007/978-3-030-61278-8_1.

18. Hammer, C. C., Brainard, J., Innes, A. & Hunter, P. R. (Re-) conceptualising vulnerability as a part of risk in global health emergency response: updating the pressure and release model for global health emergencies. Emerg. Themes Epidemiol. 16, 2 (2019).

19. Centeno, M. A., Nag, M., Patterson, T. S., Shaver, A. & Windawi, A. J. The Emergence of Global Systemic Risk. Annu. Rev. Sociol. 41, 65–85 (2015).

20. Helbing, D. Globally networked risks and how to respond. Nature 497, 51–59 (2013).

21. Keys, P. W. et al. Anthropocene risk. Nat. Sustain. 2, 667–673 (2019).

22. Frank, A. B. et al. Dealing with femtorisks in international relations. Proc. Natl. Acad. Sci. 111, 17356–17362 (2014).

23. Homer-Dixon, T. et al. Synchronous failure: the emerging causal architecture of global crisis. Ecol. Soc. 20, art6 (2015).

24. Scheffer, M. et al. Anticipating Critical Transitions. Science 338, 344–348 (2012).

25. Lawrence, M., Janzwood, S. & Homer-Dixon, T. What Is a Global Polycrisis? 11.

26. Perrow, C. Normal Accidents: Living with High Risk Technologies - Updated Edition. Normal Accidents (Princeton University Press, 2011). doi:10.1515/9781400828494.

27. Sutcliffe, K. M. High reliability organizations (HROs). Best Pract. Res. Clin. Anaesthesiol. 25, 133–144 (2011).

28. Weick, K. & Sutcliffe, K. Managing the Unexpected: Resilient Performance in an Age of Uncertainty. (Jossey-Bass, 2007).

29. Schulman, P. R. General attributes of safe organisations. Qual. Saf. Health Care 13, ii39–ii44 (2004).

30. Roberts, K. H. & Rousseau, D. M. Research in nearly failure-free, high-reliability organizations: having the bubble. IEEE Trans. Eng. Manag. 36, 132–139 (1989).

31. Roberts, K. H., Bea, R. & Bartles, D. L. Must Accidents Happen? Lessons from High-Reliability Organizations [and Executive Commentary]. Acad. Manag. Exec. 1993-2005 15, 70–79 (2001).

32. Weick, K. E. Organizational Culture as a Source of High Reliability. Calif. Manage. Rev. 29, 112–127 (1987).

33. Roberts, K. H. Some Characteristics of One Type of High Reliability Organization. Organ. Sci. 1, 160–176 (1990).

34. Chan, A. Loss of Control: ‘Normal Accidents’ and AI Systems. in (International Conference on Learning Representations, 2021).

35. Maas, M. M. Regulating for ‘Normal AI Accidents’: Operational Lessons for the Responsible Governance of Artificial Intelligence Deployment. in Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society 223–228 (Association for Computing Machinery, New York, NY, USA, 2018). doi:10.1145/3278721.3278766.

36. Maas, M. M. How viable is international arms control for military artificial intelligence? Three lessons from nuclear weapons. Contemp. Secur. Policy 40, 285–311 (2019).

37. Williams, R. & Yampolskiy, R. Understanding and Avoiding AI Failures: A Practical Guide. Philosophies 6, 53 (2021).

38. Carvin, S. Normal Autonomous Accidents: What Happens When Killer Robots Fail? SSRN Scholarly Paper at https://doi.org/10.2139/ssrn.3161446 (2017).

39. Kuhlemann, K. Complexity, creeping normalcy, and conceit: sexy and unsexy catastrophic risks. Foresight 21, 35–52 (2019).

40. ConcernedEAs. Doing EA Better. Effective Altruism Forum https://forum.effectivealtruism.org/posts/54vAiSFkYszTWWWv4/doing-ea-better-1 (2023).

41. Sundaram, L., Maas, M. & Beard, S. J. Seven Questions for Existential Risk Studies: Priorities, downsides, approaches, coherence, impact, diversity and communication. in Managing Extreme Technological Risk (ed. Rhodes, C.) (World Scientific, 2024).

42. Taleb, N. N. The Black Swan. (Random House, New York, 2007).

43. Tang, A. & Kemp, L. A Fate Worse Than Warming? Stratospheric Aerosol Injection and Global Catastrophic Risk. Front. Clim. 3, 720312 (2021).

44. Ord, T. The Precipice: Existential Risk and the Future of Humanity. (Bloomsbury, London, 2020).

45. Porter, T. M. Trust in Numbers: The Pursuit of Objectivity in Science and Public Life. (Princeton University Press, 2020). doi:10.23943/princeton/9780691208411.001.0001.

46. Millett, P. & Snyder-Beattie, A. Existential Risk and Cost-Effective Biosecurity. Health Secur. 15, 373–383 (2017).

47. Barrett, A. M., Baum, S. D. & Hostetler, K. Analyzing and Reducing the Risks of Inadvertent Nuclear War Between the United States and Russia. Sci. Glob. Secur. 21, 106–133 (2013).

48. Tetlock, P. E. & Gardner, D. Superforecasting: The Art and Science of Prediction. (Crown, New York, 2015).

49. Leech, G. & Yagudin, M. Comparing top forecasters and domain experts. Effective Altruism Forum https://forum.effectivealtruism.org/posts/qZqvBLvR5hX9sEkjR/comparing-top-forecasters-and-domain-experts (2022).

50. Foreseeing the End(s) of the World. (Cambridge Conference on Catastrophic Risk, 2022).

51. Beard, S., Rowe, T. & Fox, J. An analysis and evaluation of methods currently used to quantify the likelihood of existential hazards. Futures 115, 102469 (2020).

52. Baum, S. D. Quantifying the probability of existential catastrophe: A reply to Beard et al. Futures 123, 102608 (2020).

53. Rees, M. Our Final Century: Will the Human Race Survive the Twenty-First Century? (William Heinemann, 2003).

54. Kemp, L. Foreseeing Extreme Technological Risk. in Managing Extreme Technological Risk (ed. Rhodes, C.) (World Scientific, 2024).

55. Linstone, H. & Turoff, M. The Delphi Method: Techniques and Applications. (Addison-Wesley Publishing Company, 1975).

56. Hemming, V., Burgman, M. A., Hanea, A. M., McBride, M. F. & Wintle, B. C. A practical guide to structured expert elicitation using the IDEA protocol. Methods Ecol. Evol. 9, 169–180 (2018).

57. Richards, C. E., Lupton, R. C. & Allwood, J. M. Re-framing the threat of global warming: an empirical causal loop diagram of climate change, food insecurity and societal collapse. Clim. Change 164, (2021).

58. Krishnan, A. Unsettling the Coloniality of Foresight. in Sacred Civics: Building Seven Generation Cities (eds. Engle, J., Agyeman, J. & Chung-Tiam-Fook, T.) 93–106 (Routledge, 2022).

59. Waverly Consultants. The Futures Toolkit: Tools for Futures Thinking and Foresight across UK Government. 116 (2017).

60. Ramos, J., Sweeney, J., Peach, K. & Smith, L. Our Futures: By the People, for the People. (2019).

61. Jasanoff, S. Future Imperfect: Science, Technology, and the Imaginations of Modernity. in Dreamscapes of Modernity: Sociotechnical Imaginaries and the Fabrication of Power 1–33 (University of Chicago Press, 2015).

62. Tutton, R. J. Sociotechnical Imaginaries as Techno-Optimism : Examining Outer Space Utopias of Silicon Valley. Sci. Cult. 30, 416–439 (2020).

63. Augustine, G., Soderstrom, S., Milner, D. & Weber, K. Constructing a Distant Future: Imaginaries in Geoengineering. Acad. Manage. J. 62, 1930–1960 (2019).

64. Sartori, L. Minding the gap(s): public perceptions of AI and socio-technical imaginaries. AI Soc. 38, 443–458 (2023).

65. Taillandier, A. From Boundless Expansion to Existential Threat: Transhumanists and Posthuman Imaginaries. in Futures (eds. Kemp, S. & Andersson, J.) 332–348 (Oxford University Press, 2021). doi:10.1093/oxfordhb/9780198806820.013.20.

66. Hauskeller, M. Utopia in Trans- and Posthumanism. in Posthumanism and Transhumanism (eds. Sorgner, S. & Ranisch, R.) (Peter Lang, 2013).

67. Davidson, J. P. L. Extinctiopolitics: Existential Risk Studies, The Extinctiopolitical Unconscious, And The Billionaires’ Exodus from Earth. New Form. 107, 48–65 (2023).

68. Coenen, C. Utopian Aspects of the Debate on Convergent Technologies. in Assessing Societal Implications of Converging Technological Development (eds. Banse, G., Grunwald, A., Hronszky, I. & Nelson, G.) 141–172 (edition sigma, 2007).

69. Bostrom, N. Letter from Utopia. Stud. Ethics Law Technol. 2, (2008).

70. Barbrook, R. & Cameron, A. The Californian ideology. Sci. Cult. 6, 44–72 (1996).

71. Kemp, L. & Pelopidas, B. Self-Serving Prophets: Techno-prophecy and Catastrophic Risk. (Forthcoming).

- ^

This is tied to the “Tombstone mentality” of ignoring problems until they kill people.

- ^

This is simply an example to illustrate the point, not taking a side on how plausible such a situation is.

- ^

There is frequent confusion between positive/negative feedback loops and virtuous/vicious cycles. While people sometimes understandably assume that virtuous cycles must be “positive” and vicious cycles “negative”, in fact both virtuous and vicious cycles are self-amplifying rather than self-dampening, and thus are simply desirable or undesirable types of positive feedback loop.

- ^

In the words of Perrow, a focus on blaming human error is not only unhelpful but also “...suggests an unwitting—or perhaps conscious—class bias; many jobs, for example, require that the operator ignore safety precautions if she is to produce enough to keep her job, but when she is killed or maimed, it is considered her fault.”

- ^

Depending on how concerned one is about AI risk.

- ^

See, for example, Stratospheric Aerosol Injection, which might reduce existential (and other types of) risk from climate change while adding risks of its own.43

- ^

See section 1.5 for further complications.

- ^

Attentive readers may notice that participatory methods appear to be generally “softer”, focusing on meaning and subjective experience at least as much as rational analysis. This may reflect several factors, but the Nesta report includes a cognitive science argument that methods involving more of the former attributes are more effective at influencing people’s motivations and actions. Adjudicating the proper quantity and type of traits from each approach in any given foresight exercise is beyond the scope of this post, but it seems likely that the answer will (1) vary according to context, and (2) lie somewhere between the two extremes.

- ^

If, by any chance, somebody is looking for a research project…

- ^

A common bogeyman here is tech companies: “The Metaverse is the future, so buy in before you’re left behind!”.

10 comments


comment by Dan H (dan-hendrycks) · 2024-04-12T06:36:13.689Z · LW(p) · GW(p)

If people are interested, many of these concepts and others are discussed in the context of AI safety in this publicly available chapter: https://www.aisafetybook.com/textbook/4-1

comment by WitheringWeights (EZ97) · 2024-04-12T08:51:11.706Z · LW(p) · GW(p)

Strongly upvoted, neat overview of the topic.

I especially like the academic format (e.g. with the sources clearly cited), as well as its conciseness and breadth.

↑ comment by pendingsurvival · 2024-04-12T11:02:20.404Z · LW(p) · GW(p)

Thanks!

comment by Kieran Farr (kieran-farr) · 2024-04-15T15:37:35.973Z · LW(p) · GW(p)

Thanks author for this amazing piece, especially the bibliography to follow up on some of the direct sources. While I had been introduced to Normal Accident Theory a decade ago, I wasn't familiar with the HEVCR model and it definitely opens up new -- and very practical -- doors to thinking about existential risk in the context of AI.

If we use the HEVCR model, what are the actual "exposure" and "vulnerability" issues for humanity? At least, I would assert that the exposure and vulnerability will vary widely between individuals and population groups. That is a stunning insight that in and of itself deserves future work.

↑ comment by pendingsurvival · 2024-04-15T18:22:02.118Z · LW(p) · GW(p)

Thanks!

I think the broader lesson to be drawn from this is that the EA/LessWrong/x-risk nexus really needs to make an effort to seek out and listen to people who have been working on related things for decades; they have some really useful things to say!

There seems to be a pervasive tendency here to try to reinvent the wheel from first principles, or else exclusively rely on a handful of approaches that fit the community's highly quantitative/theoretical/high-modernist sensibilities---decision theory, game theory, prediction markets, judgemental forecasting, toy modelling, etc.---at the expense of all others.

In my view, these approaches are rarely productive.

(I am aware that this is not a new argument, but it bears making).

Some organisations are much more scientifically omnivorous/open to multiple perspectives and approaches; I'm a big fan of both CSER and the GCRI, for instance.[1]

The Boring Apocalypses paper sketches out some ideas about existential exposures and vulnerabilities, but it's very much opening the conversation rather than offering the final word.

You've definitely identified a major research gap, one made shocking by the fact that a clear majority of disaster risk reduction work is focused on reducing vulnerabilities and exposures rather than hazards.

The point on variability of vulnerability and exposure is also well-taken. Vulnerability usually increases along all the lines you would expect: people living in poverty, marginalised minorities, etc. are typically much more at risk (cf. the Pressure and Release model).

- ^

Disclosure: I have worked at CSER as a Visiting Researcher.

comment by Chris_Leong · 2024-04-12T06:29:17.365Z · LW(p) · GW(p)

Thanks for this post.

My (hot-)take: lots of useful ideas and concepts, but also many examples of people thinking everything is a nail/wanting to fit risks inside their pre-existing framework.

↑ comment by pendingsurvival · 2024-04-12T11:01:25.752Z · LW(p) · GW(p)

Not sure what you mean here, can you explain?

↑ comment by Chris_Leong · 2024-04-12T13:18:30.551Z · LW(p) · GW(p)

I'll provide an example:

> People sometimes dismiss exposure when studying GCR, since “everyone is exposed by definition”. This isn’t always true, and even when it is, it still points us towards interesting questions.

Even if there are some edge cases when this applies to existential risks, it doesn't necessarily mean that it is prominent enough to be worthwhile including as an element in an x-risk framework.

comment by denkenberger · 2024-04-18T03:07:52.354Z · LW(p) · GW(p)

> Kuhlemann argues that human overpopulation is the best example of an “unsexy” global catastrophic risk, but this is not taken seriously by the vast majority of global catastrophic risk scholars.

I think the reason overpopulation is generally not taken seriously by the GCR community is that they don't believe it would be catastrophic. Some believe that there would be a small reduction in per capita income, but greater total utility. Others argue that having more population would actually raise per capita income and could be key to maintaining long-term innovation.

comment by Owen Henahan (OwenLeaf) · 2024-04-17T13:22:03.614Z · LW(p) · GW(p)

Thank you for this thoughtful and extremely well-composed piece!

I have mostly been a lurker here on LessWrong, but as I have absorbed the discourse over time, I started coming to a similar conclusion as you both in the earlier sections of your post -- namely, the detriment to our discourse caused by our oversimplification of risk. I think Yudkowsky's "everyone dies" maxim is memetically powerful, but ultimately a bit detrimental to a community already focused on solving alignment. Exposure is something we all need to think more critically about, and I appreciate the tools you have shared from your field to help do so. I hope we adopt some of this terminology in our discussions.