Caution when interpreting Deepmind's In-context RL paper

post by Sam Marks (samuel-marks) · 2022-11-01T02:42:06.766Z · LW · GW · 8 comments


Lots of people I know have had pretty strong reactions to the recent Deepmind paper, which claims to have gotten a transformer to learn an RL algorithm by training it on an RL agent's training trajectories. At first, I too was pretty shocked -- this paper seemed to provide strong evidence of a mesa-optimizer in the wild. But digging into the paper a bit more, I'm quite unimpressed and don't think that in-context RL is the correct way to interpret the experiments that the authors actually did. This post is a quick, low-effort attempt to write out my thoughts on this.

Recall that in this paper, the authors pick some RL algorithm, use it to train RL agents on some tasks, and save the trajectories generated during training; then they train a transformer to autoregressively model said trajectories, and deploy the transformer on some novel tasks. So for concreteness, during training the transformer sees inputs consisting of sequences of states, actions, and rewards, which were excerpted from an RL agent's training on some task (out of a set of training tasks) and which span multiple episodes (i.e. at some point in this input trajectory, one episode ended and the next episode began). The transformer is trained to guess the action that comes next. In deployment, the inputs are determined by the transformer's own selections, with the environment providing the states and rewards. The authors call this algorithmic distillation (AD).
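To make the training setup concrete, here is a minimal sketch of how one agent's saved learning history could be sliced into next-action prediction examples. The names (`Transition`, `make_ad_examples`, `context_len`) and the tuple layout are hypothetical, chosen for illustration; the paper's actual tokenization and context lengths are not reproduced here.

```python
from typing import List, Tuple

# Hypothetical layout: (state, action, reward) for one environment step.
Transition = Tuple[Tuple[int, int], int, float]


def make_ad_examples(history: List[Transition], context_len: int):
    """Slice one agent's learning history into (context, state, target_action) triples.

    `history` is the concatenation of many episodes from a single training run,
    so a context window will usually straddle episode boundaries.
    """
    examples = []
    for t in range(len(history)):
        start = max(0, t - context_len)
        context = history[start:t]       # the most recent past transitions
        state_t, action_t, _ = history[t]
        # The sequence model sees `context` and `state_t`, and is trained
        # to predict `action_t`.
        examples.append((context, state_t, action_t))
    return examples


# Tiny made-up history of five steps.
history = [((4, 4), 1, 0.0), ((5, 4), 1, 0.0), ((6, 4), 2, 1.0),
           ((4, 4), 1, 0.0), ((5, 4), 1, 0.0)]
examples = make_ad_examples(history, context_len=3)
```

The only point of the sketch is that the prediction target is always an action, and that the context is a window over a history in which the agent's competence changes over time.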

Many people I know have skimmed the paper and come away with an understanding something like:

In this paper, RL agents are trained on diverse tasks, e.g. playing many different Atari games, and the resulting transcripts are used as training data for AD. Then the AD agent is deployed on a new task, e.g. playing a held-out Atari game. The AD agent is able to learn to play this novel game, which can only be explained by the model implementing a reasonably general RL algorithm. This sounds a whole lot like a mesa-optimizer.

This understanding is incorrect, with two key issues. First, the training tasks used in this paper are all extremely similar to each other and to the deployment task; in fact, I think they only ought to count as different under a pathologically narrow notion of "task." Second, the tasks involved are extremely simple. Taken together, these complaints challenge the conclusion that the only way for the AD agent to do well on its deployment task is by implementing a general-purpose RL algorithm. In fact, as I'll explain in more detail below, I'd be quite surprised if it were.

For concreteness, I'll focus here on one family of experiments, Dark Room, that appeared in the paper, but my complaint applies just as well to the other experiments in the paper. The paper describes the Dark Room environment as:

a 2D discrete POMDP where an agent spawns in a room and must find a goal location. The agent only knows its own (x, y) coordinates but does not know the goal location and must infer it from the reward. The room size is 9 × 9, the possible actions are one step left, right, up, down, and no-op, the episode length is 20, and the agent resets at the center of the map. ... [T]he agent receives r = 1 every time the goal is reached. ... When not r = 1, then r = 0.

To be clear, Dark Room is not a single task, but an environment supporting a family of tasks, where each task corresponds to a particular choice of goal location (so there are 81 possible tasks in this environment, one for each location in the 9 x 9 room; note that this is an unusually narrow notion of which tasks count as different). The data on which the AD agent is trained look like: {many episodes of an agent learning to move towards goal position 1}, {many episodes of an agent learning to move towards goal position 2}, and so on. In deployment, a new goal position is chosen, and the agent plays many episodes in which reward is given for reaching this new goal position.
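For readers who want to see just how small this environment is, here is a rough sketch of Dark Room based only on the description quoted above. The class name, the action encoding, the coordinate convention, and the choice to clip moves at the walls are my assumptions, not details taken from the paper.

```python
from dataclasses import dataclass
from typing import Tuple

# Assumed action encoding: left, right, up, down, no-op.
ACTIONS = {0: (-1, 0), 1: (1, 0), 2: (0, 1), 3: (0, -1), 4: (0, 0)}


@dataclass
class DarkRoom:
    goal: Tuple[int, int]      # the only thing that differs between "tasks"
    size: int = 9
    episode_len: int = 20

    def __post_init__(self):
        self.reset()

    def reset(self) -> Tuple[int, int]:
        # The agent always restarts at the center of the room.
        self.pos = (self.size // 2, self.size // 2)
        self.t = 0
        return self.pos

    def step(self, action: int):
        dx, dy = ACTIONS[action]
        # Assumption: moves into a wall leave the agent in place.
        x = min(max(self.pos[0] + dx, 0), self.size - 1)
        y = min(max(self.pos[1] + dy, 0), self.size - 1)
        self.pos = (x, y)
        self.t += 1
        reward = 1.0 if self.pos == self.goal else 0.0
        done = self.t >= self.episode_len
        return self.pos, reward, done


# One "task" per possible goal cell.
all_tasks = [(x, y) for x in range(9) for y in range(9)]
env = DarkRoom(goal=(5, 4))
```

The entire task distribution is the list `all_tasks`: the dynamics, the start position, and the observation space are identical across all of them.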

At this point, the issue might be clear: as soon as the model's input trajectory contains the end of a previous episode in which the agent reached the goal (got reward 1), the model can immediately infer what the goal location is! So rather than AD needing to learn any sort of interesting RL algorithm which involves general-purpose planning, balancing exploration and exploitation, etc., it suffices to implement the much simpler heuristic "if your input contains an episode ending in reward 1, then move towards the position the agent was in at the end of that episode; otherwise, move around pseudorandomly." I strongly suspect this is basically what the AD agents in this paper have learned, up to corrections like "the more the trajectories in your input look like incompetent RL agents in early training, the more incompetent you should act."[1]
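The heuristic I have in mind fits in a few lines. The sketch below is illustrative only: it is not extracted from the trained model, and the context representation (a list of `(position, reward)` pairs, where the reward is the one received on arriving at that position) and all function names are made up for this example. As a slight simplification, it uses the most recent rewarded position anywhere in the context rather than checking specifically how an episode ended.

```python
import random
from typing import List, Optional, Tuple

Pos = Tuple[int, int]
LEFT, RIGHT, UP, DOWN, NOOP = range(5)  # assumed action encoding


def inferred_goal(context: List[Tuple[Pos, float]]) -> Optional[Pos]:
    """Return the most recent position in the context that yielded reward 1."""
    for pos, reward in reversed(context):
        if reward == 1.0:
            return pos
    return None


def heuristic_action(pos: Pos, context: List[Tuple[Pos, float]],
                     rng: random.Random) -> int:
    goal = inferred_goal(context)
    if goal is None:
        # No evidence about the goal yet: move around pseudorandomly.
        return rng.choice([LEFT, RIGHT, UP, DOWN])
    # Otherwise walk greedily towards the remembered goal, x first, then y.
    if pos[0] < goal[0]:
        return RIGHT
    if pos[0] > goal[0]:
        return LEFT
    if pos[1] < goal[1]:
        return UP
    if pos[1] > goal[1]:
        return DOWN
    return NOOP  # already at the goal: stay and keep collecting reward


context = [((4, 4), 0.0), ((5, 4), 0.0), ((6, 4), 1.0)]
first_move = heuristic_action((4, 4), context, random.Random(0))
```

Nothing here plans, estimates values, or trades off exploration against exploitation, yet a policy like this would show "improvement across episodes" as soon as a single rewarded step enters its context.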

If the above interpretation of the paper's experiments is correct, then rather than learning a general-purpose RL algorithm which could be applied to genuinely different tasks, the AD agent has learned a very simple heuristic which is only useful for solving tasks of the form "repeatedly navigate to a particular, unchanging position in a grid." If the AD agent trained in this paper were able to learn to do any non-trivially different task (e.g. if an AD agent trained on Dark Room tasks were able to in-context learn a task involving collecting keys and unlocking boxes), then I would take that as strong evidence of a mesa-optimizer which had learned to implement a reasonably general RL algorithm. But that doesn't seem to be what's happened.

[Thanks to Xander Davies, Adam Jermyn, and Logan R. Smith for feedback on a draft.]

Appendix: expert distillation

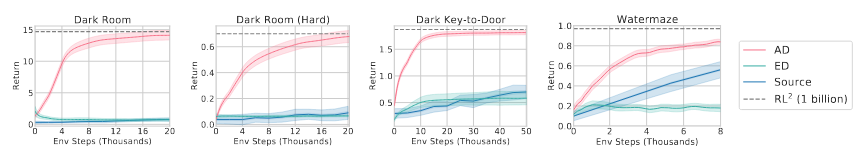

People who've read the in-context RL paper in more detail might be curious about how the above story squares with the paper's observation that the AD agents outperform expert distillation (ED) agents. Recall that ED trains exactly the same way as AD, except that the trajectories used as training data consist only of expert demonstrations[2]. It turns out that the resulting ED agents aren't able to do well on the deployment tasks, even though their inputs consist of cross-episode trajectories.

I don't consider this to be strong evidence against my interpretation. In training, ED agents saw {cross-episode trajectories of an expert competently moving to goal position 1}, {cross-episode trajectories of an expert moving to goal position 2}, and so on. The result is that the rewards in these training data are very uninformative -- every episode ends with reward 1 and no episodes end with a 0, so there's no chance for the ED agents to learn to respond differently to different rewards. In fact, I'd guess that ED agents tend to pick some particular goal position from their training data and iteratively navigate to that goal position, never receiving reward so long as the deployment goal position isn't along the path from the starting position to the ED agent's chosen position. This comports with what the authors describe in the paper:
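As a toy illustration of that guess (not a measurement of the real ED agents), the sketch below walks a fixed path from the center of the 9 x 9 room to an arbitrarily chosen goal and counts how many of the 81 possible deployment goals would ever yield reward. The function names, the chosen goal `(6, 5)`, and the "move along x first, then y" path are all assumptions made for the example; the starting cell itself is not counted as a visit.

```python
from typing import List, Tuple

Pos = Tuple[int, int]


def fixed_goal_path(chosen: Pos, start: Pos = (4, 4)) -> List[Pos]:
    """Cells visited while walking from `start` to `chosen`, x first, then y."""
    x, y = start
    visited = []
    while (x, y) != chosen:
        if x != chosen[0]:
            x += 1 if chosen[0] > x else -1
        else:
            y += 1 if chosen[1] > y else -1
        visited.append((x, y))
    return visited


def ever_rewarded(chosen: Pos, true_goal: Pos) -> bool:
    """Would an agent that always walks to `chosen` ever step on `true_goal`?"""
    return true_goal in fixed_goal_path(chosen)


chosen = (6, 5)  # hypothetical goal the ED agent has fixated on
rewarded_goals = [(x, y) for x in range(9) for y in range(9)
                  if ever_rewarded(chosen, (x, y))]
```

Under these assumptions only 3 of the 81 possible goals lie on the agent's path, so for most deployment tasks the return stays at zero no matter how many episodes are played.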

In Dark Room, Dark Room (Hard), and Watermaze, ED performance is either flat or it degrades. The reason for this is that ED only saw expert trajectories during training, but during evaluation it needs to first explore (i.e. perform non-expert behavior) to identify the task. This required behavior is out-of-distribution for ED and for this reason it does not reinforcement learning in-context.

This excerpt frames this as the ED agent failing to explore (in contrast with the AD agent), which I agree with. But the sort of exploration that the AD agent does is likely "when you don't know what to do, mimic an incompetent RL agent" rather than some more sophisticated exploration as part of a general RL algorithm.

[1] Well, probably the AD agent learned a few other interesting heuristics, like "if the last episode didn't end by reaching the goal position, then navigate to a different part of the environment," but I'd be surprised if the sum total of these heuristics is sophisticated enough for me to consider the result a mesa-optimizer.

[2] The authors don't specify whether the expert demonstrations were generated by humans or by trained RL agents, but it probably doesn't matter.

8 comments


comment by Owain_Evans · 2022-11-05T16:15:26.016Z

(I haven't yet read the paper carefully.) The main question of interest is: "How well can transformers do RL in-context after being trained to do so?" This paper only considers quite narrow and limited tasks, but future work will extend this and iterate on various parts of the setup. How do these results update your belief on the main question of interest? It's possible the result can be explained away (as you suggest) but also that there is some algorithm distillation going on.

reply by Sam Marks (samuel-marks) · 2022-11-05T19:12:57.072Z

In-context RL strikes me as a bit of a weird thing to do because of context window constraints. In more detail, in-context RL can only learn from experiences inside the context window (in this case, the last few episodes). This is enough to do well on extremely simple tasks, e.g. the tasks which appear in this paper, where even seeing one successful previous episode is enough to infer perfect play. But it's totally insufficient for more complicated tasks, e.g. tasks in large, stochastic environments. (Stochasticity especially seems like a problem, since you can't empirically estimate the transition rules for the environment if past observations keep slipping out of your memory.)

There might be more clever approaches to in-context RL that can help get around the limitations on context window size. But I think I'm generally skeptical, and expect that capabilities due to things that look like in-context RL will be a rounding error compared to capabilities due to things that look like usual learning via SGD.

Regarding your question about how I've updated my beliefs: well, in-context RL wasn't really a thing on my radar before reading this paper. But I think that if someone had brought in-context RL to my attention then I would have thought that context window constraints make it intractable (as I argued above). If someone had described the experiments in this paper to me, I think I would have strongly expected them to turn out the way they turned out. But I think I also would have objected that the experiments don't shed light on the general viability of in-context RL, because the tasks seem specially selected to be solvable with small context windows. So in summary, I don't think this paper has moved me very far from what I expect my beliefs would have been if I'd had some before reading the paper.

reply by gotem (gautam-sharda) · 2024-09-19T20:16:01.316Z

Given the recent increases in context-window sizes, how have you updated on this, if at all?

reply by Sam Marks (samuel-marks) · 2024-09-21T22:11:50.676Z

I continue to think that capabilities from in-context RL are and will be a rounding error compared to capabilities from training (and of course, compute expenditure in training has also increased quite a lot in the last two years).

I do think that test-time compute might matter a lot (e.g. o1), but I don't expect that things which look like in-context RL are an especially efficient way to make use of test-time compute.

comment by Jozdien · 2022-11-01T13:25:55.300Z

I'm confused, because I wasn't that surprised on reading the paper. My take was that generative models are not-terrible at simulating agents to do some kind of task, which can include ones that require stuff we might call optimization. That would imply that modelling a low-fidelity RL algorithm isn't really beyond its simulation capabilities. Independent of whether the paper actually did show models learning good RL algorithms, it feels like if they had, I wouldn't take it as much evidence to update my priors one way or the other.

comment by Charlie Steiner · 2022-11-01T03:53:09.379Z

Nice, now I can ask you some questions :D

It seemed like the watermaze environment was actually reasonably big, with observation space of a small colored picture, and policy needing to navigate to a specific point in the maze from a random starting point. Is that right? Given context window limitations, wouldn't this change how many transitions it expects to see, or how informative they are?

Ah, wait, I'm looking through the paper now - did they embed all tasks into the same 64d space? This sounds about right for the watermaze and massive overkill for looking at a black room.

Anyhow, what's the meaning of their graphs with sloped lines? The line labeled with their paper was on top of the other lines, but are they actually comparing like to like? That is, are they getting better performance out of the same total data? Does their transformer get an advantage by being pretrained on a bunch of other mazes with the same layout and goal but different starting location? But if so, how is it actually extracting an advantage - is it doing decision transformer style conditioning on reward?

reply by Sam Marks (samuel-marks) · 2022-11-01T04:55:46.528Z

The paper is frustratingly vague about what their context lengths are for the various experiments, but based on comparing figures 7 and 4, I would guess that the context length for Watermaze was 1-2 times as long as an episode length (= 50 steps). (It does indeed look like they were embedding the 2d dark room observations into a 64-dimensional space, which is hilarious.)

I'm not sure I understand your second question. Are you asking about figure 4 in the paper (the same one I copied into this post)? There's no reward conditioning going on. They're also not really comparing like to like, since the AD and ED agents were trained on different data (RL learning trajectories vs. expert demonstrations).

Like I mentioned in the post, my story about this is that the AD agents can get good performance by, when the previous episode ends with reward 1, navigating to the position that the previous episode ended in. (Remember, the goal position doesn't change from episode to episode -- these "tasks" are insanely narrow!) On the other hand, the ED agent probably just picks some goal position and repeatedly navigates there, never adjusting to the fact that it's not getting reward.

reply by Charlie Steiner · 2022-11-01T06:08:02.287Z

Yeah, I'm confused about all their results of the same type as fig 4 (fig 5, fig 6, etc.). But I think I'm figuring it out - they really are just taking the predicted action. They're "learning" in the sense that the sequence model is simulating something that's learning. So if I've got this right, the thousands of environment steps on the x axis just go in one end of the context window and out the other, and by the end the high-performing sequence model is just operating on the memory of 1-2 high-performing episodes.

I guess this raises another question I had, which is - why is the sequence model so bad at pretending to be bad? If it's supposed to be learning the distribution of the entire training trajectory, why is it so bad at mimicking an actual training trajectory? Maybe copying the previous run when it performed well is just such an easy heuristic that it skews the output? Or maybe performing well is lower-entropy than performing poorly, so lowering a "temperature" parameter at evaluation time will bias the sequence model towards successful trajectories?