Who models the models that model models? An exploration of GPT-3's in-context model fitting ability

post by Lovre · 2022-06-07T19:37:49.276Z · LW · GW · 16 comments


Introduction

Much has been written and much has been observed about the abilities of GPT-3 on many tasks. Most of these capabilities, though not all, pertain to writing convincing text, and, without understating how impressive GPT-3 is at these tasks, we might call this the predictable part of its oeuvre. It only makes sense that a better language model is, well, going to be better at writing text.

Deeper and comparatively much less explored is the unpredictable ability of GPT-3 to learn new tasks by just seeing a few examples, without any training/backpropagation – the so-called in-context learning (sometimes called metalearning).

The original paper announcing GPT-3 contained a handful of examples (perhaps most notably examples of GPT-3 learning to perform arithmetic, e.g. accurate addition of up to 5-digit numbers), Gwern has also insightfully written about it, Frieda Rong has performed some interesting experiments, and there have been various other experiments one could chance upon. My curiosity being piqued but not sated by these experiments, and also having had the feeling that, as captivating as the arithmetic examples were, they weren't the most natural question one could ask about a stochastic model's quantitative capabilities – I decided to investigate whether GPT-3 could fit numerical models in-context.

The Setup

What does it mean to fit a model in-context? Well, recall that GPT-3 has a context window (roughly speaking: text it considers before generating additional text) of length 2048 tokens (roughly speaking: (parts of the) words, punctuation, etc.) so the idea is to put feature vectors inside that context window. Of course, this means you cannot fit any larger or higher-dimensional dataset in there.[1]

In practice this means prompting GPT-3 on input like:

Input: 94, 47, 84, 31, output = 2

Input: 89, 51, 73, 31, output = 1

[...]

Input: 96, 51, 80, 38, output = 2

Input: 90, 37, 76, 27, output =

And then taking its output, i.e. the text it generates, as the prediction.

A couple more technical details: In all the experiments I performed, all the numbers were integers. GPT-3's outputs were always sampled with temperature 0, i.e. they were deterministic – only the most probable output was considered. I restricted myself that way for simplicity, but hopefully some future work looks at the full distribution over outputs.
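The prompt format above can be sketched as a small pair of helpers. This is a minimal illustration, not the code from the linked repository: the function names are hypothetical, and joining examples with a single newline is an assumption (the exact separator is not stated in the post). The model call itself is left out.

```python
from typing import Sequence

def format_example(features: Sequence[int], label=None) -> str:
    """Render one example, e.g. 'Input: 94, 47, 84, 31, output = 2'."""
    line = "Input: " + ", ".join(str(v) for v in features) + ", output ="
    # The query line is left open after 'output =' so the model completes it.
    return line if label is None else f"{line} {label}"

def build_prompt(train, query) -> str:
    """Labelled examples first, then the unlabelled query (newline separator is assumed)."""
    lines = [format_example(x, y) for x, y in train]
    lines.append(format_example(query))
    return "\n".join(lines)

def parse_prediction(completion: str) -> int:
    """Treat the first whitespace-separated token of the completion as the prediction."""
    return int(completion.strip().split()[0])

train = [((94, 47, 84, 31), 2), ((89, 51, 73, 31), 1)]
prompt = build_prompt(train, (90, 37, 76, 27))
print(prompt)
```

With temperature 0 the completion is deterministic, so `parse_prediction` only ever has to handle one string per query.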

(In the rest of this post I share GPT-3's results without too much warning, so if you'd like to make your own predictions about how GPT-3 does at simple classification and regression tasks in-context, now would be the time to pause reading and make your general predictions.)

Iris Dataset

Just as there is the MNIST dataset for visual recognition, so too there is a small low-dimensional classification dataset which every would-be classifier has to solve or else sink – the Iris dataset, composed of 150 observations of sepal/petal length/width of three species of iris (50 observations each). Note that classification of the Iris dataset is in some sense trivial, with simple algorithms achieving near-perfect accuracy, but a model still needs to do some meaningful learning, given that it needs to learn to differentiate between three classes based on just a few dozen four-dimensional vectors.

In order to prevent leakage, as the Iris dataset is all over the internet, as well as to get more palatable integers out, I transformed every feature with a single affine transformation, the same one for all four coordinates.

I also split the dataset into 50% train and 50% test folds – so that the model "trained" on, or rather looked at 75 examples.
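A rough sketch of this preprocessing, under stated assumptions: the transformation is taken to be an affine map followed by rounding, and the coefficients `A` and `B` below are placeholders rather than the values actually used. The four rows are ordinary Iris measurements included only to make the example runnable.

```python
import random

A, B = 10.0, 5.0  # hypothetical coefficients, NOT the ones used in the post

def transform(row, a=A, b=B):
    """Apply the same affine map to every feature and round to an integer (rounding is assumed)."""
    return tuple(round(a * v + b) for v in row)

def split_half(rows, labels, seed=0):
    """Shuffle the indices, then use the first half as 'train' and the rest as test."""
    idx = list(range(len(rows)))
    random.Random(seed).shuffle(idx)
    half = len(idx) // 2
    train = [(rows[i], labels[i]) for i in idx[:half]]
    test = [(rows[i], labels[i]) for i in idx[half:]]
    return train, test

rows = [(5.1, 3.5, 1.4, 0.2), (7.0, 3.2, 4.7, 1.4),
        (6.3, 3.3, 6.0, 2.5), (4.9, 3.0, 1.4, 0.2)]
labels = [0, 1, 2, 0]
train, test = split_half([transform(r) for r in rows], labels)
```

Changing `seed` gives a different shuffling, which is one way to average accuracy over several random splits.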

I hadn't quite been expecting what had followed – I had expected GPT-3 to catch on to some patterns, to have a serviceable but not-quite-impressive accuracy – instead, the accuracies, averaged over 5 random dataset shufflings, for Ada (350M params), Babbage (1.3B), Curie (6.7B), and Davinci (175B), compared to kNN (k=5) and logistic regression were:

So Curie and Davinci did about as well as kNN[2] and logistic regression. GPT-3, just by looking at feature vectors, solves Iris.
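For reference, the kNN baseline is simple enough to write out in full. The following is a plain-Python sketch rather than the implementation used for the reported numbers (which library was used is not stated here); the toy vectors are transformed Iris examples of the kind shown elsewhere in this post, and `k=3` is used only because the toy set is tiny.

```python
from collections import Counter

def knn_predict(train, query, k=5):
    """Majority vote among the k training points nearest to query (squared Euclidean distance)."""
    nearest = sorted(
        train,
        key=lambda ex: sum((a - b) ** 2 for a, b in zip(ex[0], query)),
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

def accuracy(train, test, k=5):
    """Fraction of test examples whose predicted label matches the true label."""
    hits = sum(knn_predict(train, x, k) == y for x, y in test)
    return hits / len(test)

train = [
    ((83, 40, 58, 20), 1), ((96, 45, 84, 35), 2), ((83, 55, 24, 9), 0),
    ((73, 54, 28, 9), 0), ((94, 45, 77, 27), 2), ((75, 49, 27, 9), 0),
    ((75, 48, 26, 9), 0),
]
test = [((76, 52, 26, 9), 0), ((84, 45, 75, 34), 2)]
print(accuracy(train, test, k=3))
```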

I also conducted a few other experiments with the Iris dataset. One was not labelling the "input" or "output", but just sending bare numbers, like this:

94, 47, 84, 31, 2

89, 51, 73, 31, 1

[...]

91, 48, 75, 31, 2

96, 51, 80, 38,

This seemed to degrade the results very slightly. Scaling up numbers so that all features were in the hundreds also seemed to potentially degrade performance by a few percentage points, but I didn't investigate that exhaustively either.[3]

2D binary classification, generally

These results, while interesting, only show GPT-3's model-fitting ability on one dataset. It might, indeed, be that the Iris dataset is, for some reason, unusually easy to classify for GPT-3. That's why I conducted some more experiments, in a lower dimension, where I could try out a lot of different things.

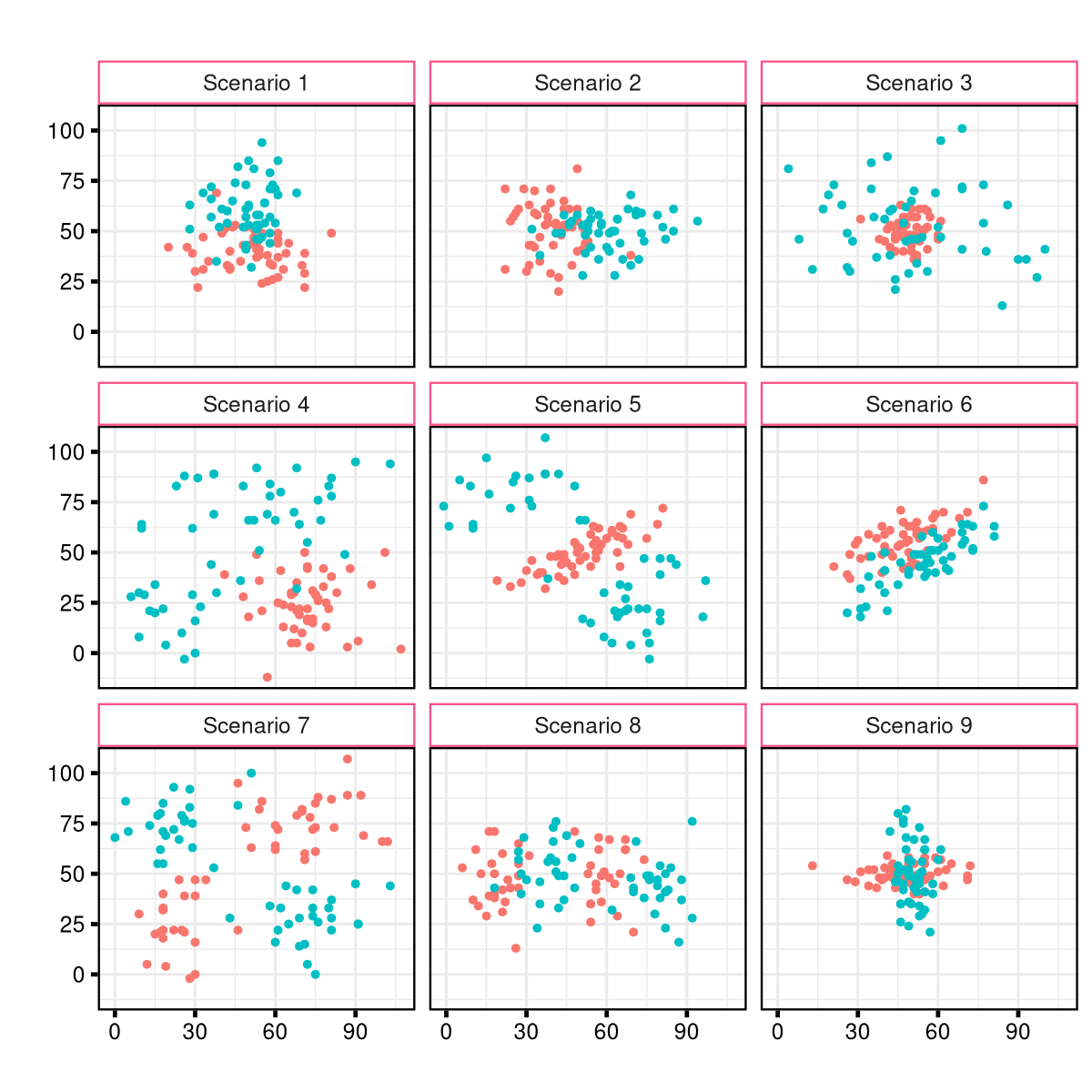

To the end of trying out a lot of things, I constructed 9 "typical binary classification scenarios" – I tried to think up a set of class distributions which would capture a decent number of realistic-looking two-class binary classification cases, as well as some slightly adversarial ones – e.g. scenario 7 is the analogue of the "XOR" problem, which perceptrons famously cannot learn because they define a linear boundary. Below you can see a random sample of each of these scenarios.
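To give a concrete feel for the "XOR" scenario, here is a minimal sampler for an XOR-style two-class dataset. It is an illustration only: the value range, the absence of label noise, and the function name are all assumptions, and the actual class distributions of the nine scenarios are the ones defined in the linked repository, not this one.

```python
import random

def sample_xor(n, seed=0, lo=10, hi=99):
    """Sample n integer points; the label is 1 when exactly one coordinate is above the midpoint."""
    rng = random.Random(seed)
    mid = (lo + hi) / 2
    data = []
    for _ in range(n):
        x, y = rng.randint(lo, hi), rng.randint(lo, hi)
        label = int((x > mid) != (y > mid))  # XOR of the two half-plane tests
        data.append(((x, y), label))
    return data

data = sample_xor(80)
train, test = data[:50], data[50:]
```

No single straight line separates the two classes here, which is why a linear model such as logistic regression is expected to sit near chance on this kind of scenario.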

For each scenario I sampled a dataset three times; each of those datasets had 50 "train" examples and 30 test examples. Here are the results, comparing between kNN (k=5[4]), logistic regression, a custom text-based classifier which I wrote thinking about the easiest text-based-algorithm GPT could learn[5], and GPT-3.
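The custom text-based classifier is described in the footnotes as a tens-digit voting scheme: every number is reduced to its tens digit, each train example whose tens digits match the test example votes for its own class, and the class with the most votes wins. A minimal sketch of that description follows; requiring all coordinates to match, and falling back to a default class when nobody votes, are assumptions not spelled out in the post.

```python
from collections import Counter

def tens(vector):
    """Reduce each number in the vector to its tens digit, e.g. (47, 83) -> (4, 8)."""
    return tuple(abs(v) // 10 % 10 for v in vector)

def tens_digit_classify(train, query, default=0):
    """Each train example whose tens digits all match the query votes for its own label."""
    key = tens(query)
    votes = Counter(label for x, label in train if tens(x) == key)
    if not votes:
        return default  # assumed fallback when no train example matches
    return votes.most_common(1)[0][0]

train = [((12, 85), 1), ((17, 81), 1), ((15, 88), 0), ((73, 22), 0)]
print(tens_digit_classify(train, (19, 83)))
```

Matching on tens digits amounts to bucketing the plane into a 10-by-10 grid and voting within a cell, which is the sense in which the classifier is geometric.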

GPT-3, as can be seen, does significantly better than chance on each of these scenarios. I would like to lightly discourage reading too much into what GPT-3 is doing just from the above numbers. Despite there being tables and graphs, this is at heart very much just an exploratory work – aiming to investigate whether there is something there, not what exactly it is – hence the methodology here being quite lacking insofar as the goal of "drawing deeper conclusions" is concerned.

The table below shows the averages for each of the above models, noting that this makes only marginal sense, given that some of the "scenarios" are inherently harder than others. If I were doing this anew, I'd probably standardize all the "scenarios" so that distributions are such that the expected accuracy of say kNN is 80%. But it still seems better to display this, rather than not:

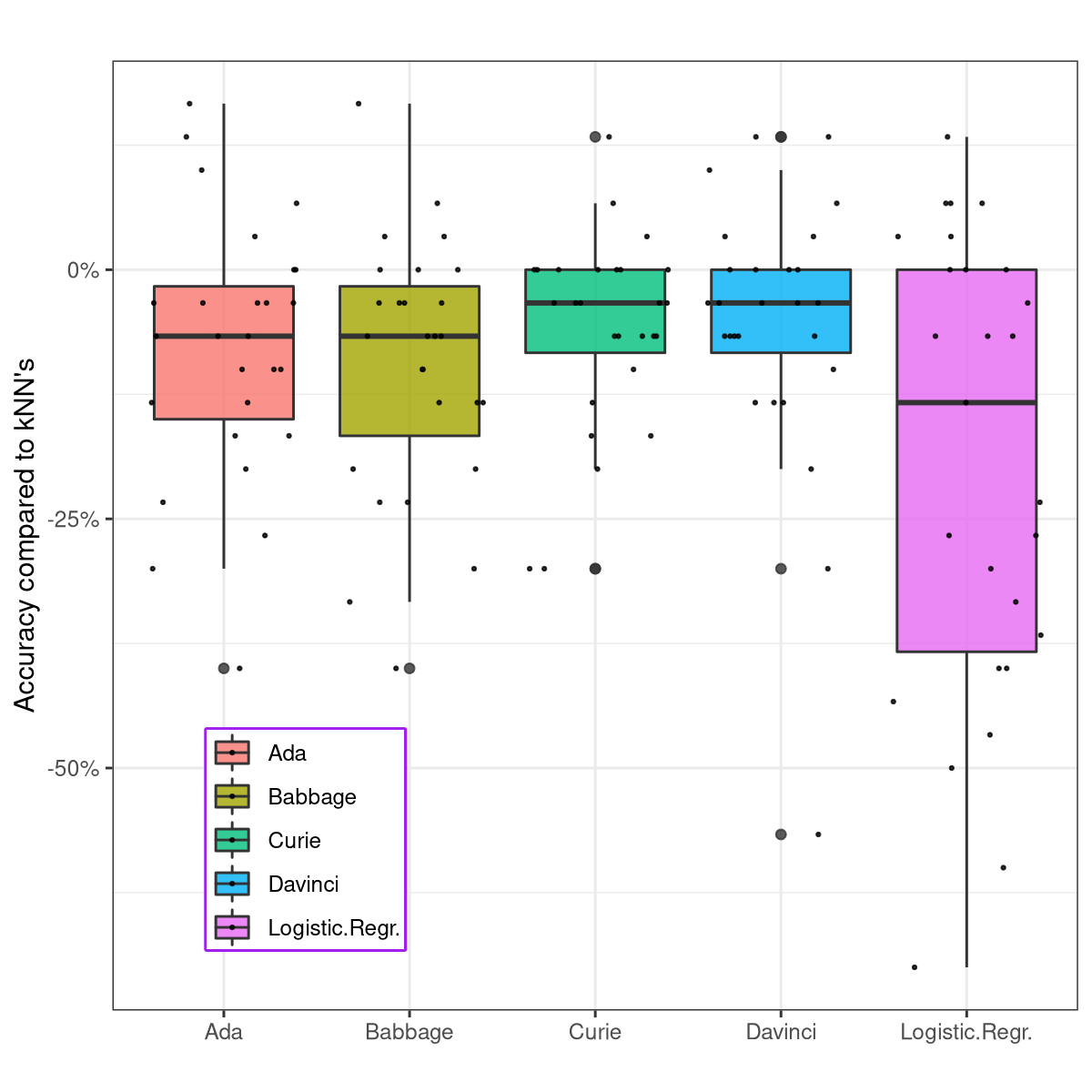

On the graph below each dot denotes the difference in accuracy, for each model, and for each scenario and each random sample of it, compared to kNN – noting that the same disclaimer about this making only marginal sense still applies.

Is there a number sense?

One of the immediate questions one might have: is GPT-3 just operating on the basis of pure symbols, or is there some "number sense" which it is using while fitting these models? To this end, I took each of the above scenarios, and substituted all the digits in the input vectors with letters, using a randomly generated digit-to-letter mapping. Hence the input looked like this:

Input = mw, mc, output = 1

Input = bd, wb, output = 0

[...]

Input = wm, cw, output = 1

Input = ch, jj, output =

A friend pointed out that encoding issues might negatively affect results if there are no spaces between the letters, so I tried this version out too:

Input = m w , m c , output = 1

Input = b d , w b , output = 0

[...]

Input = w m , c w , output = 1

Input = c h , j j , output =
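Both letter formats can be produced with a few lines of code. This is a sketch under assumptions: the mapping below is drawn at random from the lowercase alphabet with a fixed seed and is not the mapping used in the experiments, and the helper names are hypothetical. It handles non-negative integers only.

```python
import random
import string

def make_mapping(seed=0):
    """Draw ten distinct lowercase letters, one per digit (hypothetical mapping)."""
    rng = random.Random(seed)
    letters = rng.sample(string.ascii_lowercase, 10)
    return dict(zip("0123456789", letters))

def encode(value, mapping, spaced=False):
    """Replace each digit of a non-negative integer with its letter."""
    letters = [mapping[d] for d in str(value)]
    return " ".join(letters) if spaced else "".join(letters)

def format_line(features, mapping, label=None, spaced=False):
    """Render 'Input = mw, mc, output = 1' or the spaced 'Input = m w , m c , output = 1'."""
    sep = " , " if spaced else ", "
    body = sep.join(encode(v, mapping, spaced) for v in features)
    line = f"Input = {body}{sep}output ="
    return line if label is None else f"{line} {label}"

mapping = make_mapping()
print(format_line((47, 83), mapping, label=1))
print(format_line((47, 83), mapping, label=1, spaced=True))
```

The labels are left as digits, matching the examples above, so only the inputs lose their numeric surface form.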

Below, you can see the results; all the models were run with Davinci.

So, the letter-models do learn something; the spaced-letter version is on average the better of the two, but both are clearly inferior to the model prompted with numerical vectors.

Learning regression

I also ventured to test, though not nearly as extensively nor with any kind of attempt at systematicity, regression. I exclusively tested functions with added noise; the results were sometimes shockingly good, sometimes bad, but the most important part of the bottom line was that GPT-3 often successfully extrapolates.

I'll showcase just a few examples, showing both 'success' and 'failure'. A reminder that the input to GPT-3 in these examples was of the form:

Input = 7, output = -131

Input = 24, output = -338

[...]

Input = 95, output = -2270

Input = 13, output =

All of the examples were run with Davinci.
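A small sketch of how such a regression task can be set up, together with an ordinary least-squares line as a point of comparison. Everything specific here is an assumption for illustration: the target function, noise level and input range are placeholders and not the ones used in the experiments below, and the helper names are hypothetical.

```python
import random

def make_regression_data(n, f, sigma, lo, hi, seed=0):
    """Sample integer inputs in [lo, hi] and integer outputs f(x) + Gaussian noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.randint(lo, hi)
        y = round(f(x) + rng.gauss(0, sigma))
        data.append((x, y))
    return data

def fit_line(data):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in data)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in data)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def format_regression_prompt(train, query_x):
    """Labelled pairs first, then the open query line."""
    lines = [f"Input = {x}, output = {y}" for x, y in train]
    lines.append(f"Input = {query_x}, output =")
    return "\n".join(lines)

# Hypothetical noisy linear target, 15 "train" points.
train = make_regression_data(15, lambda x: -20 * x + 50, sigma=100, lo=0, hi=100)
slope, intercept = fit_line(train)
prompt = format_regression_prompt(train, 13)
```

Comparing the model's completion for a query with `slope * x + intercept` gives a quick check of whether it tracks the trend or only nearby points.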

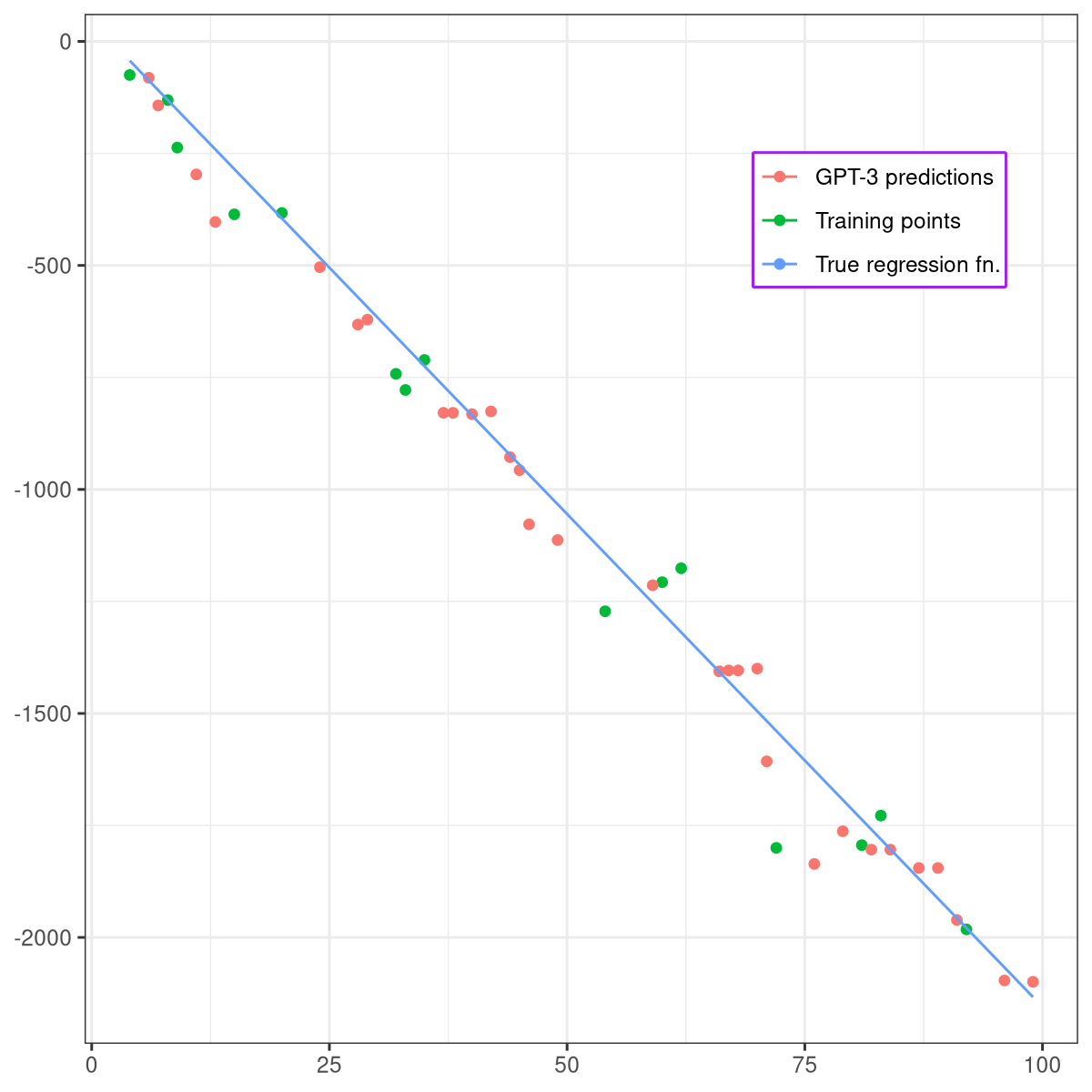

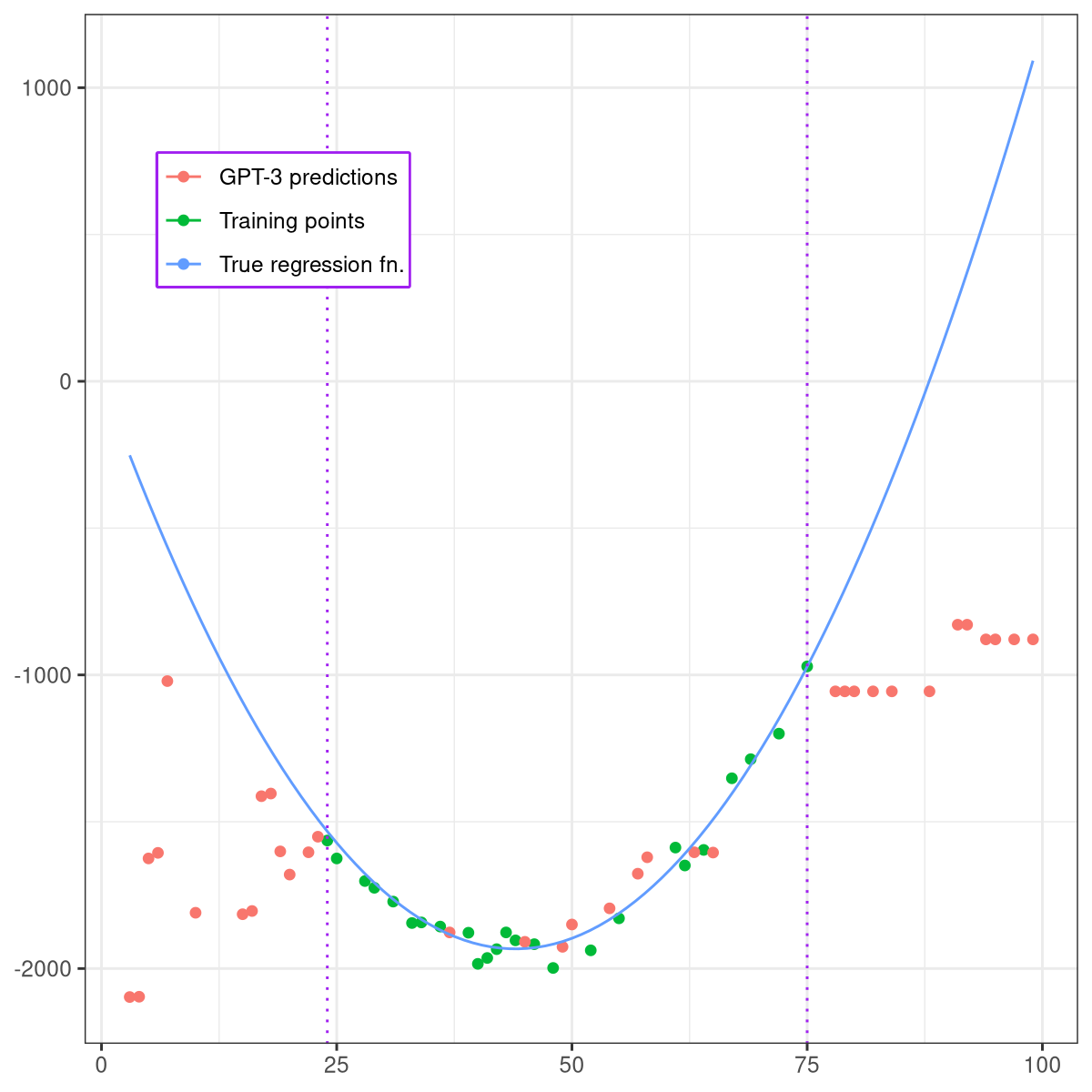

First, we ask GPT-3 to fit a function with added, normally distributed noise. Despite a great amount of noise, only 15 examples in the "train" set, and decently large negative output numbers, GPT-3 learns it quite well:

Note the bottom-most two points, where GPT-3 extrapolates faithfully to a region where it hasn't seen any points before. This does not seem a mere coincidence, but rather – based on my limited experiments – recurs regularly.

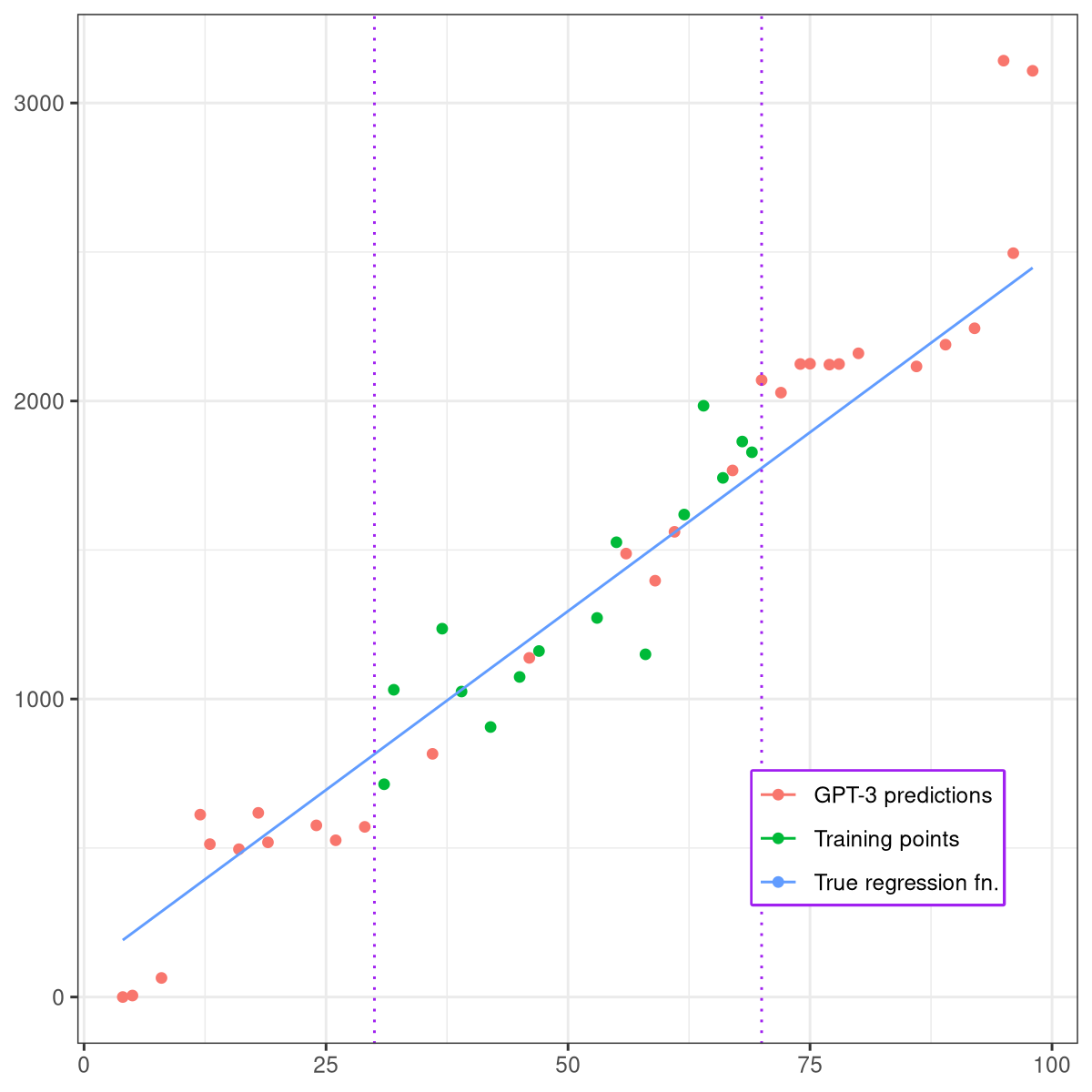

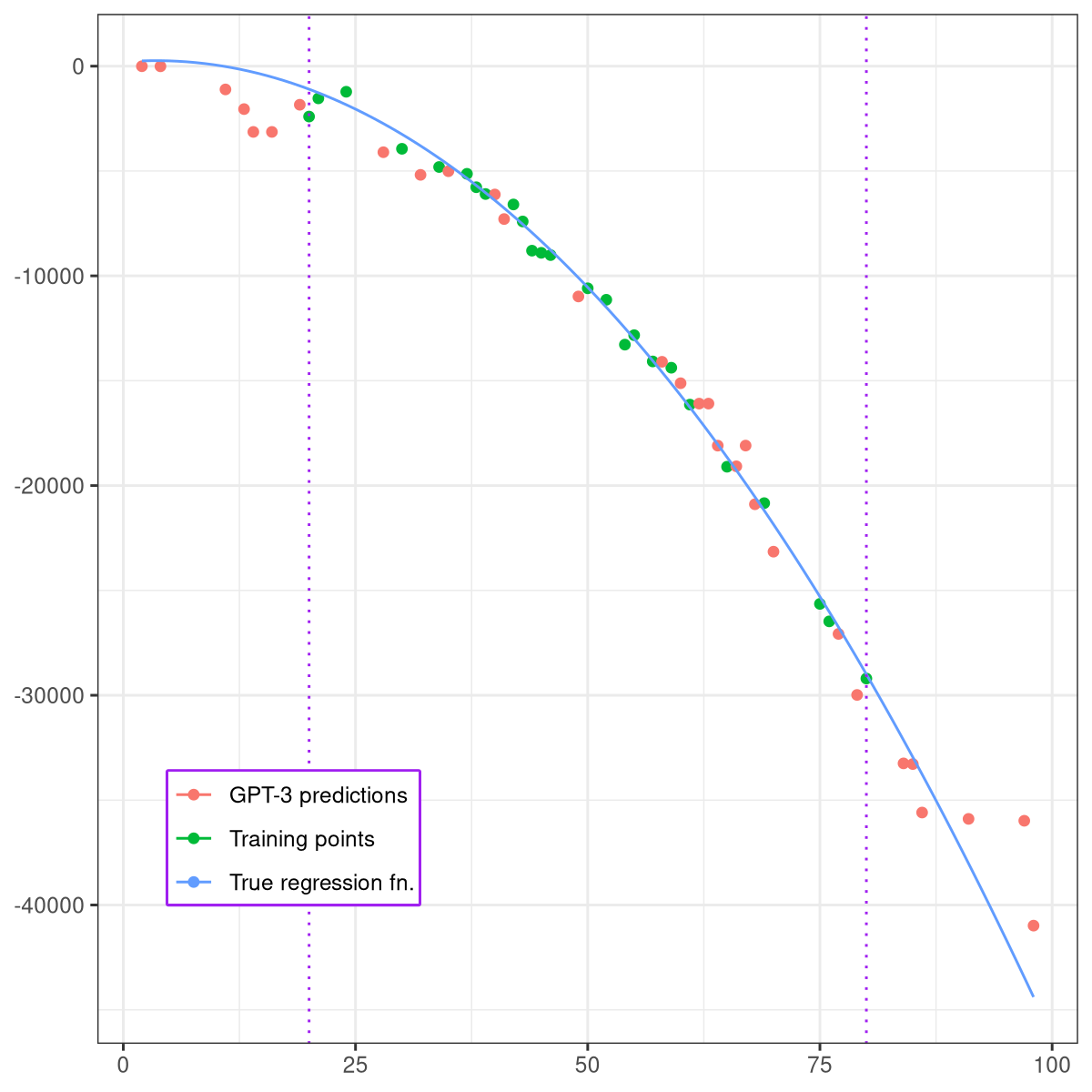

Now we fit another noisy function, this time sampling the training inputs from one range and testing on randomly sampled points from a range that extends beyond it.

As we can see, GPT-3 extrapolates reasonably, though starts being worse as it gets farther away from the training domain.

The following example tests both nonlinearity and nonmonotonicity. The model does reasonably well in the interpolating region, but does badly when extrapolating:

But then, on another example, GPT-3 fits the data nicely, both when interpolating and extrapolating, despite a really big standard deviation, output numbers into the negative tens of thousands, and nonlinearity.

I could share some more examples, but they would all be pretty similar to these ones. To my best judgment, I don't think these are cherrypicked – in fact, it could be that failure is slightly overrepresented among these few examples, compared to all the ones I've looked at.

All in all, this is a pretty limited span of regression experiments, since I wasn't pursuing any kind of systematicity in this case as in the classification case; so I'd be antsy to see more done, especially taking the full distribution of outputs into consideration, not just taking the most probable output.

Code

All code, all metadata and results of each experiment can be found here. I didn't work much on either code readability, cleanliness or documentation, so you might find it hard to read or reuse it – it's not meant to be used, in short, but rather just serve as a record of what I did.

Discussion

This experiment was motivated by my intuition, formed by playing with GPT-3, that there is some analogue of model-fitting going on in the background, probably even when one isn't prompting it with explicit meta-learning-y stuff – and it seemed like a good first step towards elucidation of that hypothesis to show that there is some quite explicit model-fitting going on, that meta-learning is more than a fancy trick. And as far as that is a coherent hypothesis, this work seems to count in its favor.[6] [7]

It also vaguely seemed important to have ways of testing (language) model capabilities which

- Are language-understanding independent.

- Can be scaled up indefinitely (i.e. can be made arbitrarily harder).

- Are leakage-proof (that is, there is nowhere on the internet where the results might already have been written down, in some fashion).

I would say the present work kind of failed to fulfill this promise, given that nothing I tried showed even noticeable differences between the 6.7B model and the 175B model – where there "should" have been a noticeable difference – however, further experiments could imaginably amend this, perhaps find sets of numerical model-fitting tasks which exhibit smoother scaling performance growth.

In fact, exploration of these tasks seems a potentially fruitful avenue of exploration of what is happening inside these models, or at least as a tool for understanding their metalearning abilities. There are so many experiments in this direction which I'd like to run, though I'm currently limited both by a lack of funding and the lack of time, as there are other experiments I'd like to run which seem higher-impact than merely continuing this line of investigation – however, I'm very curious to hear what people on LW make of these results!

Except e.g. through the use of context distillation or something like that, though at that point you're not really doing purely in-context learning anymore. ↩︎

I went with k=5 as that'd be my first choice, and k=3 and k=7 had very similar results. ↩︎

Each of these runs cost about 5 USD, which is why I didn't go completely overboard with testing and exploring, as I would be wont to do. ↩︎

k=3 and k=7 had very similar results. ↩︎

The classifier works by reducing every number to its tens digit, and then for each test example, each train example votes for it if its tens digits match – the example is classified as the class which gave it more votes. Note that while this classifier is defined in de facto textual terms, it is quite geometric in what it actually does, and it is quite similar to kNN in spirit – there is an implicit computation of Euclidean distance within it. ↩︎

Though any reinterpretations of this work which cast a different light would be appreciated. ↩︎

I have various vague intuitions for the importance of metalearning and its implications for alignment, but I'll probably share those in some future essay, leaving this post to be chiefly about the experiment. ↩︎

16 comments


comment by gwern · 2022-06-07T20:29:54.042Z · LW(p) · GW(p)

I am surprised by the Iris result. I would have expected it to fail badly, similar to how it can't solve most math word problems without inner-monologue, because it requires too much spread-out computation to transform an obtuse representation like "Input: 94, 47, 84, 31, output = 2" into a learned model and then generate a prediction. That it can handle Iris naively without any reformatting or trickery is surprising. Also interesting that it very much looks like it's scaling with model-size. (I wonder if this should be considered a zero-shot or a few-shot result?)

The regression is also a lot better than one would expect from what is, remember, 'a model trained to predict English words based on a pile of random scraped websites'. (I wonder how well humans would do without resorting to explicit graphing?)

I'm less surprised that your synthetic binary problems fail since I thought from the description they'd all fail, but in light of Iris/regression, I'm left wondering why two succeeded and the other didn't. You could make an argument for naturalness & informative-priors, since Iris is real data and the regression curves are not real but very similar to loads of real data, but the 2D scatterplots for the synthetic binary problems don't look all that unnatural to me. Is there any difference in formatting you omitted mentioning?

↑ comment by Lovre · 2022-06-07T20:53:24.621Z · LW(p) · GW(p)

Is there any difference in formatting you omitted mentioning?

There shouldn't be any difference – neither between Iris and the synthetic binary tasks, nor between different synthetic binary tasks themselves – except if some snuck in that evaded my notice.

The only thing I experimented with, alternative-formatting-wise, was that the first time I experimented with Iris, I did it with a line before all the input vectors which said something like "This is a sequences of inputs and outputs of an integer function.", but then I redid the experiment without that line, without any penalty to the accuracy (the results shown are without that preamble) – so when I later did all the synthetic binary experiments, I omitted any preamble.

In regression experiments, I also originally added the line: "This is a sequence of inputs and outputs of a function which takes an integer as an argument and returns an integer." I didn't really do any test whether regression performed better with that line or not, but in some examples it didn't seem like it made a difference.

(Technical note: for all the synthetic binary and regression tasks shown in this post, their "input text" (i.e. the way their train feature vectors were formatted) can be found in the linked repository, in experiments_log.json. Top-level of the json is the experiment name, and each experiment name has the key "input_text" where this is stored. Input text for Iris is not stored though, but there is some metadata in iris_results/. A run of iris_test.py with the parts which send the input via API commented out does confirm that the format is much the same, though.)

comment by gwern · 2022-08-03T20:21:23.543Z · LW(p) · GW(p)

Interesting new paper: "What Can Transformers Learn In-Context? A Case Study of Simple Function Classes", Garg et al 2022:

In-context learning refers to the ability of a model to condition on a prompt sequence consisting of in-context examples (input-output pairs corresponding to some task) along with a new query input, and generate the corresponding output. Crucially, in-context learning happens only at inference time without any parameter updates to the model. While large language models such as GPT-3 exhibit some ability to perform in-context learning, it is unclear what the relationship is between tasks on which this succeeds and what is present in the training data.

To make progress towards understanding in-context learning, we consider the well-defined problem of training a model to in-context learn a function class (e.g., linear functions): that is, given data derived from some functions in the class, can we train a model to in-context learn "most" functions from this class?

We show empirically that standard Transformers can be trained from scratch to perform in-context learning of linear functions -- that is, the trained model is able to learn unseen linear functions from in-context examples with performance comparable to the optimal least squares estimator. In fact, in-context learning is possible even under two forms of distribution shift: (i) between the training data of the model and inference-time prompts, and (ii) between the in-context examples and the query input during inference.

We also show that we can train Transformers to in-context learn more complex function classes -- namely sparse linear functions, two-layer neural networks, and decision trees -- with performance that matches or exceeds task-specific learning algorithms.

Our code and models are available at this https URL.

...Curriculum: ...Notably, when training Transformers without curriculum, there is an initial—relatively long—period in training where the loss does not decrease, followed by a period of sharp decrease. The length of this period varies with training randomness and seems to increase on average with problem dimension. Understanding the model just before and after this transition moment is a promising future direction, which can give insights into the emergence of in-context learning. Interestingly, Olsson et al 2022 observe a similar jump in the in-context learning ability of a language model which they attribute to the formation of “induction heads”.

↑ comment by gwern · 2024-04-12T20:13:30.620Z · LW(p) · GW(p)

Another: "From Words to Numbers: Your Large Language Model Is Secretly A Capable Regressor When Given In-Context Examples", Vacareanu et al 2024:

We analyze how well pre-trained large language models (e.g., Llama2, GPT-4, Claude 3, etc) can do linear and non-linear regression when given in-context examples, without any additional training or gradient updates. Our findings reveal that several large language models (e.g., GPT-4, Claude 3) are able to perform regression tasks with a performance rivaling (or even outperforming) that of traditional supervised methods such as Random Forest, Bagging, or Gradient Boosting. For example, on the challenging Friedman #2 regression dataset, Claude 3 outperforms many supervised methods such as AdaBoost, SVM, Random Forest, KNN, or Gradient Boosting. We then investigate how well the performance of large language models scales with the number of in-context exemplars. We borrow from the notion of regret from online learning and empirically show that LLMs are capable of obtaining a sub-linear regret.

comment by gwern · 2022-06-26T22:51:28.078Z · LW(p) · GW(p)

One parallel case that occurs to me is Anthropic using their GPT-like to try to imitate the COMPAS prediction of criminal offending, which is a regression problem too; then in the appendix, they experiment with a movie recommender system:

Figure 8 shows that language models smoothly decrease in the standard Root Mean Square Error (RMSE, lower is better) metric on the widely used Movielens 1M movie recommendation system task [31] as they increase in size. The smallest model achieves a significantly better RMSE (1.06) than chance (RMSE 1.91), and the largest model achieves a significantly lower RMSE (0.94) than a strong baseline model (RMSE 0.98, see below for further details). Although no models achieve state of the art (SOTA) performance (RMSE 0.82), these results are still surprising because the language models (in our zero-shot setting) see two orders of magnitude less training data than the SOTA model.

comment by Vlad Mikulik (vlad_m) · 2022-06-09T16:54:57.939Z · LW(p) · GW(p)

Nice work!

This section in Anthropic's work on Induction heads seems highly relevant -- I would be interested in seeing an extension of your analysis that looks at what induction heads do in these tasks.

If we believe the claims in that paper, then in-context learning of any kind seems to be driven by a fairly simple mechanism not unlike kNN -- induction attention heads. Since it's pretty tractable to locate induction heads in an automated way, we could potentially take a look at the actual mechanism being used to implement these predictions and verify/falsify the hypotheses you make about how GPT makes these predictions. (Although you'd probably have to switch to an open-source model.)

↑ comment by Lovre · 2022-06-11T11:18:45.194Z · LW(p) · GW(p)

That seems like a great idea, and induction heads do seem highly relevant!

What you describe is actually one of the key reasons why I'm so excited about this whole approach. I've seen many interesting metalearning tasks, and they mostly just either work or don't work, or they fail sometimes, and you can try to study their failures to perhaps glean some insight into the underlying algorithm -- but...they just don't have (m)any nontrivial "degrees of freedom" in which you can vary them. The class of numerical models, on the other hand, has a substantial number of nontrivial ways in which you can vary your input -- and even more so, you can vary it not just discretely, but also ~continuously.

That makes me really optimistic about the possibility of which you hint, of reverse engineering whatever algorithm the model is running underneath, and then using interpretability tools to verify/falsify those findings. Conversely, interpretability tools could be used to make predictions about the algorithm, which can then be checked. Hence one can imagine a quite meaningful feedback loop between experimentation and interpretability!

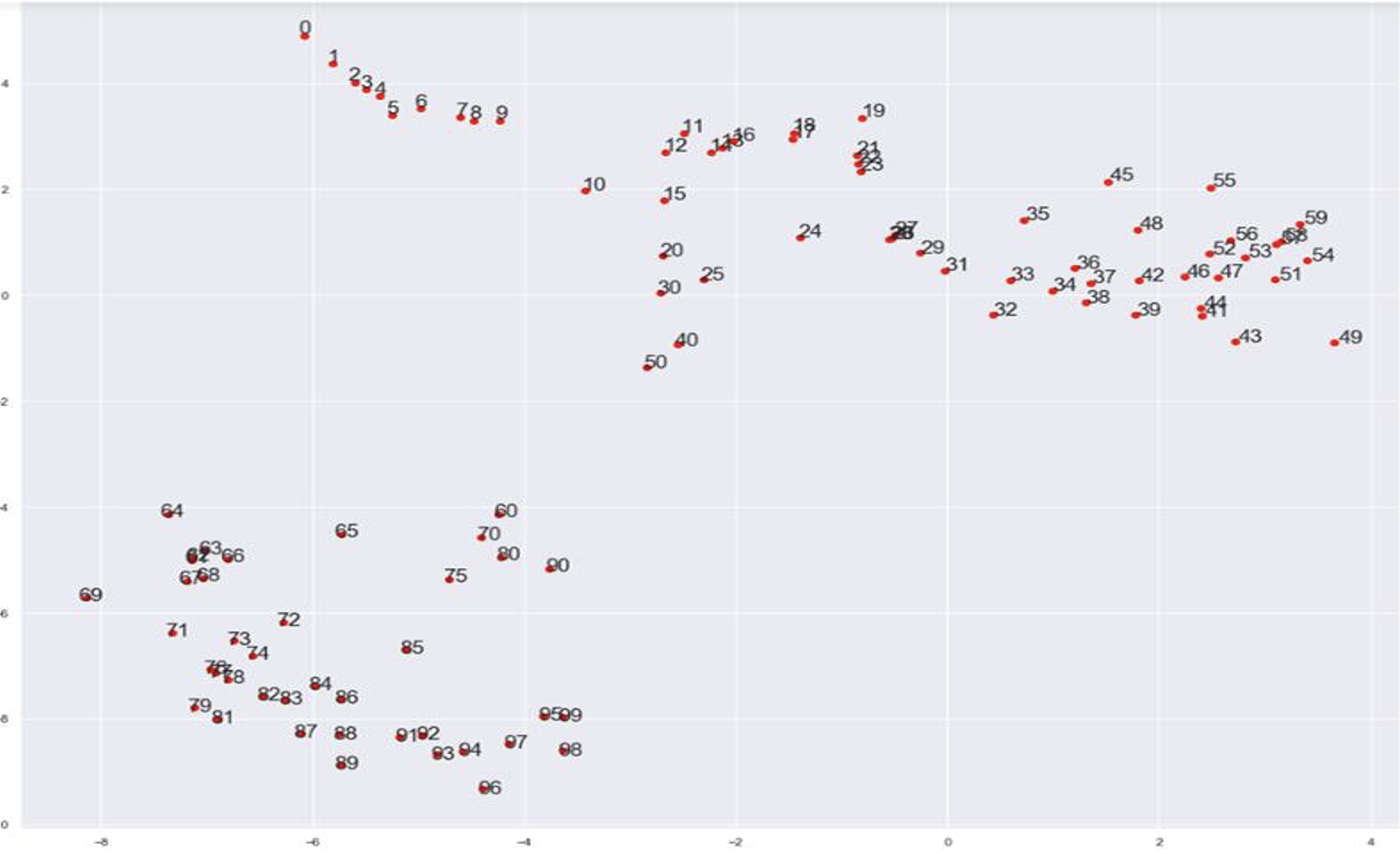

comment by p.b. · 2022-06-08T15:15:42.562Z · LW(p) · GW(p)

This is a t-SNE I made a couple of years ago of the glove-wordvectors for numbers. So it's not surprising that there is a "number sense", though I am definitely surprised how good some of the results are.

Fun fact: Fitting the Iris dataset with a tiny neural network can be surprisingly fickle.

comment by SarahNibs (GuySrinivasan) · 2022-06-08T14:47:27.967Z · LW(p) · GW(p)

This was a fantastic idea and I am more interested in model interpretability for understanding these results than any I have seen in a while. In particular any examples of nontrivial mesa-optimizers we can find in the wild seem important to study, and maybe there's one here.

comment by gwern · 2023-01-31T02:36:43.242Z · LW(p) · GW(p)

https://twitter.com/volokuleshov/status/1619906183955095558 demos using ChatGPT to run a 'Python notebook' to 'train a neural net model' to 'predict' outputs for various functions like sin() or 5 / (1+x)**2 -1

comment by Owain_Evans · 2022-06-10T16:19:17.202Z · LW(p) · GW(p)

Cool post! Did you try seeing whether GPT-3 can regenerate parts of the Iris dataset (or any other datasets that may appear in its training data)? I'd also be interested to see finetuning results, results for the latest InstructGPT, and to see analysis of the GPT-3 Embeddings for integers and floats.

↑ comment by Lovre · 2022-06-11T11:19:57.399Z · LW(p) · GW(p)

Since I transformed the Iris dataset with a pretty "random" transformation (i.e. not chosen because it was particularly nice in some way), I didn't check for its regeneration -- since my feature vectors were very different to original Iris's, and it seemed exceedingly unlikely that feature vectors were saved anywhere on the internet with that particular transformation.

But I got curious now, so I performed some experiments.

The Iris flower data set or Fisher's Iris data set is a multivariate data set introduced by the British statistician and biologist Ronald Fisher in his 1936 paper

Feature vectors of the Iris flower data set:

Input = 83, 40, 58, 20, output = 1

Input = 96, 45, 84, 35, output = 2

Input = 83, 55, 24, 9, output = 0

Input = 73, 54, 28, 9, output = 0

Input = 94, 45, 77, 27, output = 2

Input = 75, 49, 27, 9, output = 0

Input = 75, 48, 26, 9, output = 0

So these are the first 7 transformed feature vectors (in one of the random samplings of the dataset). Among all the generated output (I looked at >200 vectors), it never once output a vector which was identical to any of the latter ones, and also... in general the stuff it was generating did not look like it was drawing on any knowledge of the remaining vectors in the dataset. (E.g. it generated a lot that were off-distribution.)

I also tried

Input = 83, 55, 24, 9, output = 0

Input = 73, 54, 28, 9, output = 0

[... all vectors of this class]

Input = 76, 52, 26, 9, output = 0

Input = 86, 68, 27, 12, output = 0

Input = 75, 41, 69, 30, output = 2

Input = 86, 41, 76, 34, output = 2

Input = 84, 45, 75, 34, output = 2

Where I cherrypicked the "class 2" so that the first coordinate is lower than usual for that class; and the generated stuff always had the first coordinate very off-distribution from the rest of the class 2, as one would expect if the model was meta-learning from the vectors it sees, rather than "remembering" something.

This last experiment might seem a little contrived, but a bit of a problem with this kind of testing is that if you supply enough stuff in-context, the model (plausibly) meta-learns the distribution and then can generate pretty similar-looking vectors. So, yeah, to really get to the bottom of this, to become 'certain' as it were, I think one would have to go in deeper than just looking at what the model generates.

(Or maybe there are some ways around that problem which I did not think of. Suggestions appreciated!)

To recheck things again -- since I'm as worried about leakage as anyone -- I retested Iris, this time transforming each coordinate with its own randomly-chosen affine transformation:

And the results are basically identical to those with just one affine transformation for all coordinates.

I'm glad that you asked about InstructGPT since I was pretty curious about that too, was waiting for an excuse to test it. So here are the synthetic binary results for (Davinci-)InstructGPT, compared with the original Davinci from the post:

comment by jacopo · 2022-06-09T16:55:33.563Z · LW(p) · GW(p)

I am left wondering whether, when GPT3 does few-shot arithmetic, it is actually fitting a linear model on the examples to predict the next token. I.e. the GPT3 weights do not "know" arithmetic, but they know how to fit, and that's why they need a few examples before they can tell you the answer to 25+17: they need to know what function of 25 and 17 to return.

It is not that crazy given my understanding of what a transformer does, which is in some sense returning a function of the most recent input which depends on earlier inputs. Or am I confusing them with a different NN design?

comment by Lovre · 2022-06-08T10:08:19.923Z · LW(p) · GW(p)

I forgot to explicitly note it in the post, but yeah, if you have any ideas for variations on these experiments which you'd like to see run, which you feel might make your model of what is going on clearer, feel free to comment them here. Conditional on them being compute-light/simple enough to implement, I'll try to get back to you ASAP with the results – do feel encouraged to share ideas which might be vaguer or might require more compute as well, though in those cases I might not get back to you immediately.

comment by davidl123 · 2022-08-05T09:50:50.377Z · LW(p) · GW(p)

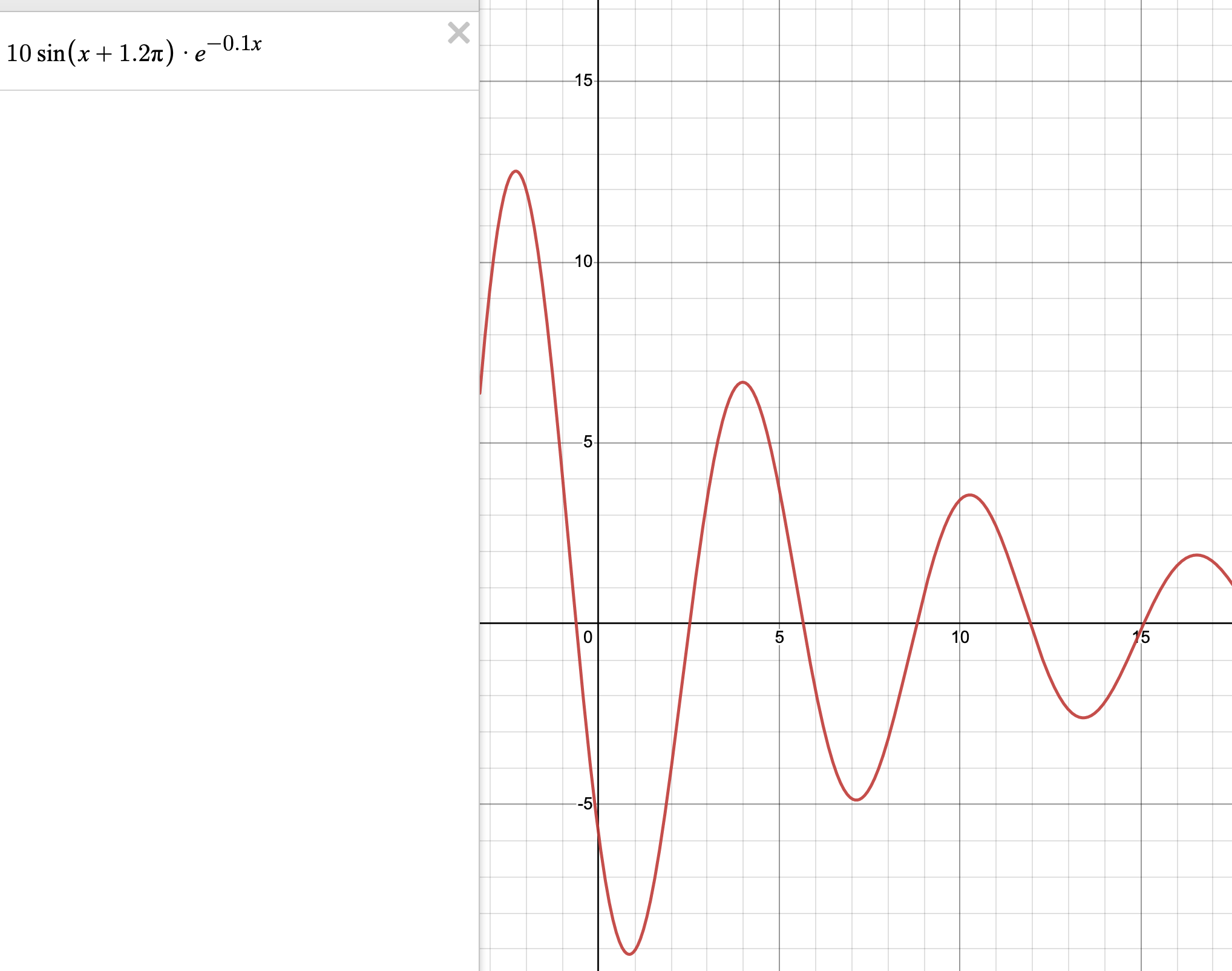

Great write-up. Inspired me to try how much further ICL could go beyond "simpler" mappings (OP shows pretty nice results for two linear and two quadratic functions). As such, I tried a damped sinusoid:

with the prompt:

x=3.984, y=6.68

x=2.197, y-2.497

x=0.26, y=-7.561

x=6.025, y=-1.98

x=7.126, y=-4.879

x=8.584, y=-0.894

x=9.97, y=3.403

x=11.1, y=2.45

x=12.09, y=-0.452

x=13.72, y=-2.48

x=14.81, y=-0.606

x=10, y=

but didn't get any luck. Maybe I need more points, especially around the troughs and valleys.