Alignment, conflict, powerseeking

post by Oliver Sourbut · 2023-11-22T09:47:08.376Z · LW · GW · 1 comment

Is powerseeking instrumentally convergent?

Having power is instrumentally useful for most things.

Gaining power is a means to having power.

Seeking power is a means to gaining power.

But seeking power is also a means to producing conflict.

Avoiding costly conflict is instrumentally useful for most things.

This cashes out as a few simple tradeoffs.

Who has power right now matters. Are they aligned on outcome preferences?

The risk of costly conflict matters. How much is at stake? What are the odds of things getting destroyed by conflict? Escalation and de-escalation are important factors here.

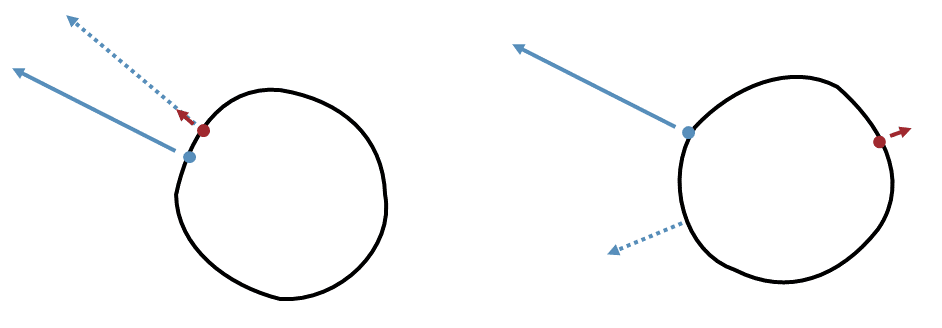

An overly simple visualisation[1]

- preferences are direction vectors

- power balance produces magnitudes

- conflict spends some overall magnitude but changes power balance

- alignment is literal vector alignment

Blue and red are preferences. Arrow magnitudes show that blue has the balance of power. The blue dashed line shows the projection of blue onto red's direction. Left: red has a lot to lose from conflict; blue is basically aligned. Right: red has little to lose from conflict; blue is very misaligned.
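As a minimal sketch of the vector picture (my own illustration, not the figure's actual data), the quantities can be computed in a few lines of Python. The function names and every number here are hypothetical assumptions: preferences are 2-d vectors, arrow length stands in for power, alignment is the cosine between the two arrows, and the dashed line is the scalar projection of blue onto red's direction.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Arrow length; stands in for how much power an entity holds."""
    return math.sqrt(dot(u, u))

def alignment(u, v):
    """Cosine of the angle between two preference vectors (1 = same direction, -1 = opposed)."""
    return dot(u, v) / (norm(u) * norm(v))

def scalar_projection(u, onto):
    """Signed length of u's component along the direction of `onto`."""
    return dot(u, onto) / norm(onto)

# Hypothetical numbers, chosen only to mimic the two panels.
red = (1.0, 0.0)
blue_aligned = (3.0, 0.5)      # left panel: blue points roughly the same way as red
blue_misaligned = (-1.0, 3.0)  # right panel: blue points mostly away from red

for name, blue in [("left", blue_aligned), ("right", blue_misaligned)]:
    print(name, round(alignment(blue, red), 3), round(scalar_projection(blue, red), 3))
```

In both cases blue's arrow is longer than red's, so blue holds the balance of power. On this reading, a large positive projection means the powerful party is already pushing mostly where red wants to go, while a negative one means it is pushing the other way.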

Usually when we talk about instrumental convergence we're interested in the forward direction: what will X do given we know some limited amount about X? But in light of the above tradeoffs, we can also use this for the inverse problem of inference: given what X does, what can we infer about X (preferences, beliefs)?

Of course, in the knowledge that behaviour can be used for this inverse inference problem, deception arises as an obvious strategy. (This is where surprise coups come from[2].)

When we observe entities seeking power, or gauge their willingness to escalate, we can learn something about:

- their estimate of the risk and cost of conflict/escalation

- their estimate of their alignment with the existing power balance
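One hedged way to make the inverse inference concrete is a toy Bayesian update. This is a sketch under strong assumptions, not a model the post commits to: the function name `posterior_misaligned`, the binary aligned/misaligned split, and all the probabilities are hypothetical, and the two likelihoods silently bundle the entity's estimate of the risk and cost of conflict.

```python
def posterior_misaligned(prior, p_seek_if_misaligned, p_seek_if_aligned):
    """Bayes' rule for P(misaligned | we observed power-seeking).

    prior                 -- assumed P(misaligned) before observing anything
    p_seek_if_misaligned  -- assumed P(seeks power | misaligned)
    p_seek_if_aligned     -- assumed P(seeks power | aligned)
    """
    numerator = prior * p_seek_if_misaligned
    evidence = numerator + (1 - prior) * p_seek_if_aligned
    return numerator / evidence

# Hypothetical numbers: power-seeking is assumed six times likelier when misaligned.
print(posterior_misaligned(prior=0.2, p_seek_if_misaligned=0.6, p_seek_if_aligned=0.1))
```

With these made-up inputs the posterior rises from 0.2 to roughly 0.6. If both kinds of entity were equally likely to seek power (for instance because conflict looks cheap to everyone), the observation would carry no information and the posterior would stay at the prior.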

Caveat: this post is secretly more about humans than AI, and I want to pre-emptively clarify that I'm not trying to imply AI won't understand or exploit the instrumental usefulness of gaining power.

[1] This really is simplistic, but actually not a terrible fit for some more legit maths.

[2] Deceptively pretending to be misaligned should be systematically rarer: if aligned with the powers-that-be, who are you aiming to deceive? The 4-d chess version of this is seeking power when actually aligned, in order to signal misalignment for some reason, perhaps to gain support from some third/fourth party.

1 comment

comment by Oliver Sourbut · 2023-11-22T09:57:17.829Z · LW(p) · GW(p)

A salient piece of news which prompted me to write this up was Sama vs the OpenAI board. I've kept this note out of the post itself because it is much less timeless.

It looks like Sam's subsequent willingness to escalate and do battle with the board is strong evidence that he considers his goals meaningfully misaligned with theirs, and their willingness to fire him suggests they feel the same way. It's unclear what this rests on: whether it's their genuine level of care about safety, or more about intermediate things like the tradeoffs around productisation and publicity, or other stuff.