Research agenda: Can transformers do system 2 thinking?

post by p.b. · 2022-04-06T13:31:39.327Z · LW · GW · 0 commentsContents

No comments

This is one of the posts that detail my research agenda [LW · GW], which tries to marry Deep Learning and chess to gain insight into current AI technologies. One of my main motivations for this research is trying to identify gaps aka unsolved problems in AI, because these would uniquely inform AGI timelines.

There are many critics of Deep Learning, however, they uniformly disappoint when it comes to making concrete verifiable statements about what it is that Deep Learning is missing and what fundamental gaps in capabilities will remain as long as that is the case.

One candidate for a gap is system 2 thinking. Deliberation. Solving complex problems by thinking them through. There is reason to think that this is a gap in current Deep Learning. As Andrew Ng has stated: Deep Learning can do anything a human can do in under a second.

In sucessful systems where that is not enough, deliberation is often replaced by search (AlphaGo) or by roll-outs with selection (AlphaCode). These are tailored to the problem in question and not uniformly applicable.

Large language models show signs of deliberation. Especially the possibility to elicit a form of inner monologue to better solve problems that demand deliberation points into that direction.

However, the evaluation of large language models has the persistent problem that they exhibit "cached intelligence". I.e. for all seemingly intelligent behavior the distance to existing training examples is unclear.

In chess system 1 thinking and system 2 thinking have straightforward analogues. Positional intuition guides the initial choice of move candidates. Calculation is the effortful and slow way to search through possible moves and counter-moves.

When I trained a transformer [LW · GW] to predict human moves from positions, it came apparent that although the benefit of scaling had maxed out the accuracy, the model was incapable of avoiding even tactical mistakes that are immediately punished.

When playing against the model this is especially striking because all its moves, including the mistakes, are just so very plausible. Playing against search based engines often feels like playing against somebody who doesn't know any chess, but beats you anyway. Playing against a transformer feels like playing against a strong player, who nevertheless always loses.

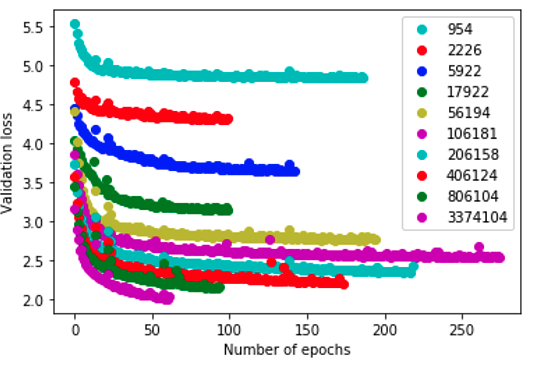

However, it is possible that tactical deliberation aka calculation emerges at scale. In that case the benefit from scaling seemingly maxed out, because the benefit of scale for calculation hadn't started in a significant way yet.

The ability to catch tactical errors can be quantified automatically using chess engines. When the score drops, the main line loses a piece, a tactical mistake has occured. These mistakes can be classified into how many moves you'd have to think ahead to catch it.

Such a benchmark would enable the investigation into whether there is a second scaling law under the scaling law. A system 2 scaling law, that might take off after the system 1 scaling has maxed out.

0 comments

Comments sorted by top scores.