Continuity Assumptions

post by Jan_Kulveit · 2022-06-13T21:31:29.620Z · LW · GW · 13 commentsContents

What I mean by continuity How is this relevant Deep cruxes How continuity helps How continuity does not help Continuity does not solve alignment. Downstream implications Common objections to continuity Implications for x-risk None 13 comments

This post will try to explain what I mean by continuity assumptions (and discontinuity assumptions), and why differences in these are upstream of many disagreements about AI safety. None of this is particularly new, but seemed worth reiterating in the form of a short post.

What I mean by continuity

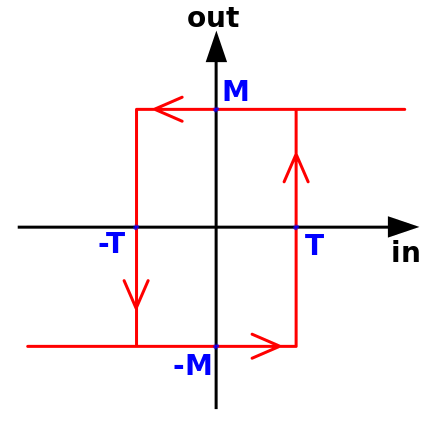

Start with a cliff:

This is sometimes called the Heaviside step function. This function is actually discontinuous, and so it can represent categorical change.

In contrast, this cliff is actually continuous:

From a distance or in low resolution, it looks like a step function; it gets really steep. Yet it is fundamentally different.

How is this relevant

Over the years, I've come to the view that intuitions about whether we live in a "continuous” or "discontinuous” world are one of the few top principal components underlying all disagreements about AI safety. This includes but goes beyond the classical continuous [LW · GW] vs. discrete [LW · GW] takeoff debates.

A lot of models of what can or can't work in AI alignment depends on intuitions about whether to expect "true discontinuities" or just "steep bits". This holds not just in one, but many relevant variables (e.g. the generality of the AI’s reasoning, the speed or differentiability of its takeoff).

The discrete intuition usually leads to sharp categories like:

| Before the cliff | After the cliff |

| Non-general systems. Lack the core of general reasoning, that which allows thought in domains far from training data | General systems. Capabilities generalise far |

| Weak systems - that won't kill you, but also won't help you solve alignment | Strong systems - that would help solve alignment, but unfortunately will kill you by default, if unaligned |

| Systems which may be misaligned, but aren't competently deceptive about it | System which is actively modelling you at a level where the deception is beyond your ability to notice |

| Weak acts | Pivotal acts |

| … | … |

In Discrete World, empirical trends, alignment techniques, etc usually don't generalise across the categorical boundary. The right is far from the training distribution on the left. Your solutions don't survive the generality cliff, there are no fire alarms - and so on.

Note that while assumptions about continuity in different dimensions are in principle not necessarily related, and you could e.g. assume continuity in takeoff and discontinuity in generality - in practice, they seem strongly correlated.

Deep cruxes

Deep priors over continuity versus discontinuity seem to be a crux which is hard to resolve.

My guess is intuitions about continuity/discreteness are actually quite deep-seated: based more on how people do maths, rather than specific observations about the world. In practice, for most researchers, the "intuition" is something like a deep net trained on a whole lifetime of STEM reasoning - they won't update much on individual datapoints, and if they are smart, they are often able to re-interpret observations to be in line with their continuity priors.

(As an example, compare Paul Christiano's post on takeoff speeds from 2018, which is heavily about continuity, to the debate [LW · GW] between Paul and Eliezer in late 2021. Despite the participants spending years in discussion, progress on bridging the continuous-discrete gap between them seems very limited.)

How continuity helps

In basically every case, continuity implies the existence of systems "somewhere in between".

- Systems which are moderately strong: maybe weakly superhuman in some relevant domain and quite general, but at same time maybe still bad with plans on how to kill everyone.

- Moderately general systems: maybe are able of general reasoning, but in a strongly bounded way

- Proto-deceptive systems which are bad at deception.

The existence of such systems helps us with two fundamental difficulties of AI alignment as a field - that "you need to succeed on the first try" and "advanced AIs much more cognitively powerful than you are very difficult to align". In-between systems mean you probably have more than one try to get alignment right, and implies that bootstrapping is at least hypothetically possible - that is, aligning a system of similar power to you, and then attempting to set up a system that preserves the alignment of these early systems when we train successor systems.

A toy example:

A large model is trained, using roughly the current techniques. Apart from its performance on many other tasks, we constantly watch its performance on tripwires, a set of ''deception" or "treacherous turn" tasks. Assuming continuity, you don’t get from "the model is really bad at deception" to "you are dead" in one training step.

If things go well, at this moment we would have an AI doing interesting things, maybe on the border of generality, and has some deceptive tendencies but sort of sucks at it.

This would probably help: for example, we would gain experimental feedback on alignment ideas, which can help with finding ideas which actually work. The observations would make the "science of intelligent systems" easier. More convincing examples of deception would convince more people, and so on.

For more generality, in a different vocabulary, you can replace performance on tripwires by more general IDA [EA · GW]-style oversight.

Continuity makes a difference: if you are in truly-discontinuous mode, you are usually doomed at the point of the jump. In the continuous mode, oversight can notice the increasing steepness of some critical variables, and slow down, or temporarily halt.

How continuity does not help

Continuity does not solve alignment.

In the continuous view, some problems may even become harder - even if you basically succeed in aligning a single human-level system, it does not mean you have won. You can easily imagine [AF · GW] worlds, in which alignment of single systems at human-level is almost perfect – and yet in which, in the end, humans find themselves in a world where they are completely powerless and also clueless about what's actually happening with the world. Or in worlds in which the lightcone is filled with computations of little moral value, because we succeed in alignment with highly confused early 21st century moral values.

Downstream implications

I claim that most reasoning about AI alignment and strategy is downstream of your continuity assumptions.

One reason why: once you assume a fundamental jump in one of the critical variables, you can often carry it to other variables, and soon, instead of multiple continuous variables, you are thinking in discrete categories.

Continuity is a crux for strategy. Assuming continuity, things will get weird before getting extremely weird. This likely includes domains such as politics, geopolitics, experience of individual humans... In contrast, discrete takeoff usually assumes just slightly modified politics – quite similar to today’s. So continuity can paradoxically imply that many intuitions from today won't generalise to the takeoff period, because world will be weirder.

Again, the split leads people to focus on different things – for example on "steering forces" vs. "pivotal acts".

Common objections to continuity

Most of them are actually covered in Paul's writing from 2018.

One new class of objections against continuity comes from observations of jumps in capabilities in training language models. This points to a possibly important distinction between continuity in real-time variables, and continuity in learning-time variables. Which seems similar to AlphaGo and AlphaZero - where the jump is much less pronounced when the time axis is replaced by something like "learning effort spent".

Implications for x-risk

It's probably worth reiterating: continuity does not imply safety. At the same time, continuity is at tension with some popular discrete assumptions about doom. For example, in Eliezer's recent post [LW · GW], discontinuity is a strong component of points 3, 5, 6, 7, 10, 11, 12, 13, 26, 30, 31, 34, 35 – either it's the whole point, or a significant part of its hardness and lethality.

On the continuous view, these stories of misalignment and doom seem less likely; different ones take their place.

Thanks Gavin for help with editing and references.

13 comments

Comments sorted by top scores.

comment by Rob Bensinger (RobbBB) · 2022-06-14T06:02:02.972Z · LW(p) · GW(p)

A lot of models of what can or can't work in AI alignment depends on intuitions about whether to expect "true discontinuities" or just "steep bits".

Note that Nate and Eliezer expect there to be some curves you can draw after-the-fact that shows continuity in AGI progress on particular dimensions. They just don't expect these to be the curves with the most practical impact (and they don't think we can identify the curves with foresight, in 2022, to make strong predictions about AGI timing or rates of progress).

Quoting Nate in 2018 [LW · GW]:

On my model, the key point is not 'some AI systems will undergo discontinuous leaps in their intelligence as they learn,' but rather, 'different people will try to build AI systems in different ways, and each will have some path of construction and some path of learning that can be modeled relatively well by some curve, and some of those curves will be very, very steep early on (e.g., when the system is first coming online, in the same way that the curve "how good is Google’s search engine" was super steep in the region between "it doesn’t work" and "it works at least a little"), and sometimes a new system will blow past the entire edifice of human knowledge in an afternoon shortly after it finishes coming online.' Like, no one is saying that Alpha Zero had massive discontinuities in its learning curve, but it also wasn't just AlphaGo Lee Sedol but with marginally more training: the architecture was pulled apart, restructured, and put back together, and the reassembled system was on a qualitatively steeper learning curve.

My point here isn't to throw 'AGI will undergo discontinuous leaps as they learn' under the bus. Self-rewriting systems likely will (on my models) gain intelligence in leaps and bounds. What I’m trying to say is that I don’t think this disagreement is the central disagreement. I think the key disagreement is instead about where the main force of improvement in early human-designed AGI systems comes from — is it from existing systems progressing up their improvement curves, or from new systems coming online on qualitatively steeper improvement curves?

And quoting Eliezer more recently [LW · GW]:

if the future goes the way I predict and yet anybody somehow survives, perhaps somebody will draw a hyperbolic trendline on some particular chart where the trendline is retroactively fitted to events including those that occurred in only the last 3 years, and say with a great sage nod, ah, yes, that was all according to trend, nor did anything depart from trend

There is, I think, a really basic difference of thinking here, which is that on my view, AGI erupting is just a Thing That Happens and not part of a Historical Worldview or a Great Trend.

Human intelligence wasn't part of a grand story reflected in all parts of the ecology, it just happened in a particular species.

Now afterwards, of course, you can go back and draw all kinds of Grand Trends into which this Thing Happening was perfectly and beautifully fitted, and yet, it does not seem to me that people have a very good track record of thereby predicting in advance what surprising news story they will see next - with some rare, narrow-superforecasting-technique exceptions, like the Things chart on a steady graph and we know solidly what a threshold on that graph corresponds to and that threshold is not too far away compared to the previous length of the chart.

One day the Wright Flyer flew. Anybody in the future with benefit of hindsight, who wanted to, could fit that into a grand story about flying, industry, travel, technology, whatever; if they've been on the ground at the time, they would not have thereby had much luck predicting the Wright Flyer. It can be fit into a grand story but on the ground it's just a thing that happened. It had some prior causes but it was not thereby constrained to fit into a storyline in which it was the plot climax of those prior causes.

My worldview sure does permit there to be predecessor technologies and for them to have some kind of impact and for some company to make a profit, but it is not nearly as interested in that stuff, on a very basic level, because it does not think that the AGI Thing Happening is the plot climax of a story about the Previous Stuff Happening.

I think the Hansonian viewpoint - which I consider another gradualist viewpoint, and whose effects were influential on early EA and which I think are still lingering around in EA - seemed surprised by AlphaGo and Alpha Zero, when you contrast its actual advance language with what actually happened. Inevitably, you can go back afterwards and claim it wasn't really a surprise in terms of the abstractions that seem so clear and obvious now, but I think it was surprised then; and I also think that "there's always a smooth abstraction in hindsight, so what, there'll be one of those when the world ends too", is a huge big deal in practice with respect to the future being unpredictable.

(As an example, compare Paul Christiano's post on takeoff speeds from 2018, which is heavily about continuity, to the debate [LW · GW] between Paul and Eliezer in late 2021. Despite the participants spending years in discussion, progress on bridging the continuous-discrete gap between them seems very limited.)

Paul and Eliezer have had lots of discussions over the years, but I don't think they talked about takeoff speeds between the 2018 post and the 2021 debate?

Replies from: Jan_Kulveit↑ comment by Jan_Kulveit · 2022-06-21T09:58:34.988Z · LW(p) · GW(p)

Note that Nate and Eliezer expect there to be some curves you can draw after-the-fact that shows continuity in AGI progress on particular dimensions. They just don't expect these to be the curves with the most practical impact (and they don't think we can identify the curves with foresight, in 2022, to make strong predictions about AGI timing or rates of progress).

Quoting Nate in 2018 [LW · GW]: ...

Yes, but conversely, I could say I'd expect some curves to show discontinuous jumps, mostly in dimensions which no one really cares about. Clearly the cruxes are about discontinuities in dimensions which matter.

As I tried to explain in the post, I think continuity assumptions mostly get you different things than "strong predictions about AGI timing".

...

My point here isn't to throw 'AGI will undergo discontinuous leaps as they learn' under the bus. Self-rewriting systems likely will (on my models) gain intelligence in leaps and bounds. What I’m trying to say is that I don’t think this disagreement is the central disagreement. I think the key disagreement is instead about where the main force of improvement in early human-designed AGI systems comes from — is it from existing systems progressing up their improvement curves, or from new systems coming online on qualitatively steeper improvement curves?

I would paraphrase this as "assuming discontinuities at every level" - both one-system training, and the more macroscopic exploration in the "space of learning systems" - but stating the key disagreement is about the discontinuities in the space of model architectures, rather than in jumpiness of single model training.

Personally, I don't think the distinction between 'movement by learning of a single model' and 'movement by scaling' and 'movement by architectural changes' will be necessarily big.

There is, I think, a really basic difference of thinking here, which is that on my view, AGI erupting is just a Thing That Happens and not part of a Historical Worldview or a Great Trend.

This seem more or less support what I wrote? Expecting a Big Discontinuity, and this being a pretty deep difference?

I think the Hansonian viewpoint - which I consider another gradualist viewpoint, and whose effects were influential on early EA and which I think are still lingering around in EA - seemed surprised by AlphaGo and Alpha Zero, when you contrast its actual advance language with what actually happened. Inevitably, you can go back afterwards and claim it wasn't really a surprise in terms of the abstractions that seem so clear and obvious now, but I think it was surprised then; and I also think that "there's always a smooth abstraction in hindsight, so what, there'll be one of those when the world ends too", is a huge big deal in practice with respect to the future being unpredictable.

My overall impression is Eliezer likes to argue against "Hansonian views", but something like "continuity assumptions" seem much broader category than Robin's views.

Paul and Eliezer have had lots of discussions over the years, but I don't think they talked about takeoff speeds between the 2018 post and the 2021 debate?

In my view continuity assumptions are not just about takeoff speeds. E.g, IDA make much more sense in a continuous world - if you reach a cliff, working IDA should slow down, and warn you. In the Truly Discontinuous world, you just jump off the cliff at some unknown step.

I would guess probably a majority of all debates and disagreements between Paul and Eliezer has some "continuity" component: e.g. the question whether we can learn a lot of important alignment stuff on non-AGI systems is a typical continuity problem, but only tangentially relevant to takeoff speeds.

comment by Steven Byrnes (steve2152) · 2022-06-14T02:19:17.583Z · LW(p) · GW(p)

Do you think there’s an important practical difference between “discontinuous” and “continuous improvement from preschooler-level-intelligence to superintelligence over the course of 24 hours”? The post suggests that they are fundamentally different—cf. “true discontinuities” versus “steep bit”. But it seems to me that there is no difference in practice.

(If we replace “24 hours” by “2 years”, well I for one am mostly inclined to expect that those 2 years would be squandered. If it’s “30 years”, well OK now we’re playing a different game.)

Replies from: Jan_Kulveit↑ comment by Jan_Kulveit · 2022-06-14T04:01:14.855Z · LW(p) · GW(p)

Yes, I do. To disentangle it a bit

- one line of reasoning is, if you have this "preschooler-level-intelligence to superintelligence over the course of 24 hours” you probably had something which is able to learn really fast and generalize a lot before this. how does the rest of the world look like?

- second - if you have control over the learning time, in the continuous version, you can slow down or halt. yes, you need some fast oversight control loop to do that, but getting to a state where this is what you have, because that's what sane AI developers do, seems tractable. (also I think this has decent chance to become instrumentally convergent for AI developers)

↑ comment by Steven Byrnes (steve2152) · 2022-06-14T13:16:03.047Z · LW(p) · GW(p)

one line of reasoning is, if you have this "preschooler-level-intelligence to superintelligence over the course of 24 hours” you probably had something which is able to learn really fast and generalize a lot before this. how does the rest of the world look like?

OK, here’s my scenario in more detail. Someone thinks of a radically new AI RL algorithm, tomorrow. They implement it and then run a training. It trains up from randomly-initialized to preschooler-level in the first 24 hours, and then from preschooler-level to superintelligence in the second 24 hours.

I’m not claiming this scenario is realistic, just that it illustrates a problem with your definition of continuity. I think this scenario is “continuous” by your definition, but that the continuity doesn’t buy you any of the things on your “how continuity helps” list. Right?

(My actual current expectation (see 1 [LW · GW],2 [LW · GW]) is that people are already today developing bits and pieces of an AGI-capable RL algorithm, and at some indeterminate time in the future the pieces will all come together and people will work out the kinks and tricks to scaling it up etc. And as this happens, the algorithm will go from preschool-level to superintelligent over the course of maybe a year or two, and that year or two will not make much difference, because whatever safety problems they encounter will not have easy solutions, and a year or two is just not enough time to figure out and implement non-easy solutions, and pausing won’t be an option because of competition.)

Replies from: Jan_Kulveit↑ comment by Jan_Kulveit · 2022-06-17T05:32:20.592Z · LW(p) · GW(p)

So, I do think the continuity buys you things, even in this case - roughly in the way outlined in the post - it's choice of the developer to continue training after passing some roughly human level, and with a sensible oversight, they should notice and stop before getting to superintelligence,

You may ask why would they have the oversight in place. My response is, possible because some small-sized AI disaster which happened before, people understand the tech is dangerous.

With the 2-year scenario, again, I think there is a decent chance at stopping ~before or roughly at the human level. One story why that may be the case is, it seems quite possible the level where AI gets good at convincing/manipulating humans, or humans freak out for other reasons, is lower than AGI. If you get enough economic benefits from CAIS at the same time, you can also get strong counter-pressures to competition at developing AGI.

↑ comment by JBlack · 2022-06-18T02:02:24.302Z · LW(p) · GW(p)

How does the researcher know that it's about to pass roughly human level, when a misaligned AI may have incentive and ability to fake a plateau at about human level until it has enough training and internal bootstrapping to become strongly superhuman? Even animals have the capability to fake being weaker, injured, or dead when it might benefit them.

I don't think this is necessarily what will happen, but it is a scenario that needs to be considered.

comment by Richard_Kennaway · 2022-06-14T07:40:17.311Z · LW(p) · GW(p)

The practical equivalence of continuity and discontinuity comes at the point where the continuous transition gets inside your OODA [LW · GW] loop [LW · GW].

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2022-06-14T07:46:02.915Z · LW(p) · GW(p)

Sometimes, it really is discontinuous in the variables that one has chosen to plot. The continuous change is happening along some other variable not plotted. For example, if we plot "achieved AI power" against "supplied computing power in FLOPS", then at a point where it gains self-improvement capabilities, "AI power" can skyrocket while FLOPS remains constant. The continuous variable not plotted is time.

comment by Steven Byrnes (steve2152) · 2022-06-14T02:52:05.464Z · LW(p) · GW(p)

in Eliezer's recent post [LW · GW], discontinuity is a strong component of points 3, 5, 6, 7, 10, 11, 12, 13, 26, 30, 31, 34, 35

I think I disagree. For example:

- 3,5,6,7, etc.—In a slow-takeoff world, at some point X you cross a threshold where your AI can kill everyone (if you haven’t figured out how to keep it under control), and at some point Y you cross a threshold where you & your AI can perform a “pivotal act”. IIUC, Eliezer is claiming that X occurs earlier than Y (assuming slow takeoff).

- 10,11,12, etc.—In slow-takeoff world, you still eventually reach a point where your AI can kill everyone (if you haven’t figured out how to keep it under control). At that point, you’ll need to train your AI in such a way that kill-everyone actions are not in the AI’s space of possible actions during training and sandbox-testing, but kill-everyone actions are in the AI’s space of possible actions during use / deployment. Thus there is an important distribution shift between training and deployment. (Unless you can create an amazingly good sandbox that both tricks the AI and allows all the same possible actions and strategies that the real world does. Seems hard, although I endorse efforts in that area.) By the same token, if the infinitesimally-less-competent AI that you deployed yesterday did not have kill-everyone actions in its space of possible actions, and the AI that you’re deploying today does, then that’s an important difference between them, despite the supposedly continuous takeoff.

- 26—In a slow-takeoff world, at some point Z you cross a threshold where you stop being able to understand the matrices, and at some point X you cross a threshold where your AI can kill everyone. I interpret this point as Eliezer making a claim that Z would occur earlier than X.

↑ comment by Jan_Kulveit · 2022-06-14T03:43:28.182Z · LW(p) · GW(p)

- Can we also drop the "pivotal act" frame? Thinking in "pivotal acts" seem to be one of the root causes leading to discontinuities everywhere.

- 3,... Currently, my guess is we may want to steer to a trajectory where no single AI can kill everyone (in no point of the trajectory). Currently, no single AI can kill everyone - so maybe we want to maintain this property of the world / scale it, rather than e.g. create an AI sovereign which could unilaterally kill everyone, but will be nice instead (at least until we've worked out a lot more of the theory of alignment and intelligence than we had so far).

(I don't think the "killing everyone" threshold is a clear cap on capabilities - if your replace "kill everyone" with "own everything", it seems the property "no one owns everything" is compatible with scaling of economy.)

↑ comment by Steven Byrnes (steve2152) · 2022-06-14T12:55:23.729Z · LW(p) · GW(p)

Consider the following hypotheses.

- Hypothesis 1: humans with AI assistance can (and in fact will) build a nanobot defense system before an out-of-control AI would be powerful enough to deploy nanobots.

- Hypothesis 2: humans with AI assistance can (and in fact will) build systems that robustly prevent hostile actors from tricking/bribing/hacking humanity into all-out nuclear war before an out-of-control AI would be powerful enough to do that.

- Hypothesis 3,4,5,6,7…: Ditto for plagues, and disabling the power grid, and various forms of ecological collapse, and co-opting military hardware, and information warfare, etc. etc.

I think you believe that all these hypotheses are true. Is that right?

If so, this seems unlikely to me, for lots of reasons, both technological and social:

- Some of the defensive measures might just be outright harder technologically than the offensive measures.

- Some of the defensive measures would seem to require that humans are good at global coordination, and that humans will wisely prepare for uncertain hypothetical future threats even despite immediate cost and inconvenience.

- The human-AI teams would be constrained by laws, norms, Overton window, etc., in a way that an out-of-control AI would not.

- The human-AI teams would be constrained by lack-of-complete-trust-in-the-AI, in a way that an out-of-control AI would not. For example, defending nuclear weapons systems against hacking-by-an-out-of-control-AI would seem to require that humans either give their (supposedly) aligned AIs root access to the nuclear weapons computer systems, or source code and schematics for those computer systems, or similar, and none of these seem like things that military people would actually do in real life. As another example, humans may not trust their AIs to do recursive self-improvement, but an out-of-control AI probably would if it could.

- There are lots of hypotheses that I listed above, plus presumably many more that we can't think of, and they're more-or-less conjunctive. (Not perfectly conjunctive—if just one hypothesis is false, we’re probably OK, apart from the nanobot one—but there seem to be lots of ways for 2 or 3 of the hypotheses to be false such that we’re in big trouble.)

Note that I don’t claim any special expertise, I mostly just want to help elevate this topic from unstated background assumption to an explicit argument where we figure out the right answer. :)

(I was recently discussing this topic in this thread [LW · GW].)

we may want to steer to a trajectory where no single AI can kill everyone

Want? Yes. We absolutely want that. So we should try to figure out whether that’s a realistic possibility. I’m suggesting that it might not be.

comment by JBlack · 2022-06-14T01:47:03.307Z · LW(p) · GW(p)

There isn't really as much difference as it might seem.

This is true in a prosaic, boring sense: a steep continuous change may have exactly the same outcome as a discontinuous change on some process, even if technically there is some period during which the behaviour differs.

It is also true in a more interesting sense: the long term (technically: asymptotic) outcome of a completely continuous system can be actually discontinuous in the values of some parameter. This almost certainly does apply to things like values of hyperparameters in a model and to attractor basins following learning processes. It seems likely to apply to measures such as capabilities for self-awareness and self-improvement.

So really I'm not sure that the question of whether the best model is continuous or discontinuous is especially relevant. What matters more is along the lines of whether it will be faster than we can effectively respond.