The Gears of Argmax

post by StrivingForLegibility · 2024-01-04T23:30:30.339Z · LW · GW · 0 commentsContents

Max Argmax The Role of Argmax in Rational Agents Speeding Up Argmax Relationship to AI Safety None No comments

In the last post [LW · GW], I made the high-level claim that optimization can sometimes be done safely. And went on to claim that a key factor enabling safe optimization is sufficiently understanding the optimization process, optimization metric, and domain of candidate solutions.

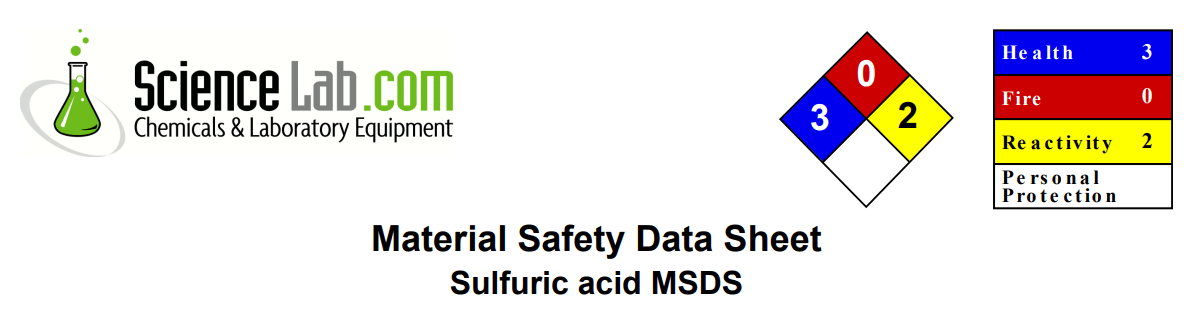

In this post, I'll dig into how a particular optimization process works, and how it relates to other areas we care about like game theory and AI safety. Like sulfuric acid, argmax is well-understood enough that it is safely employed in all sorts of applications. Also like sulfuric acid, it's straightforward to construct systems which employ argmax unsafely. Sulfuric acid can't be thrown around willy-nilly, but we know what sort of precautions we need to take to use it safely. I would love something like safety data sheets for different optimization processes [LW · GW].

Max

To get started, check out this simpler function: max. It takes a list of numbers and returns the largest one. I'll suggestively call these numbers "scores", to foreshadow their role in optimization. Here's a simplified implementation:

def max(scores):

highest_score = float('-inf')

for score in scores:

if score > highest_score:

highest_score = score

return highest_scoreIn English, max starts by defining a variable to keep track of the highest score it's seen so far, and initializes it with the value negative infinity. Then it looks at every score in the list. If that score is higher than the highest score it's seen so far, it assigns that value to the "highest score I've seen so far" variable. After doing this for every score in the list, it returns the highest score it found!

Argmax

Argmax is a function that takes two arguments: a list of candidates, and a function that determines the score of that candidate, which here I've called "metric". It returns a candidate with the highest score. Here's a naïve implementation:

def argmax(candidates, metric):

best_candidate = None

highest_score = float('-inf')

for candidate in candidates:

score = metric(candidate)

if score > highest_score:

best_candidate = candidate

highest_score = score

return best_candidateLike before, argmax declares variables to keep track of the best candidate it's seen so far, and the score it received. Then it looks at all of the candidates in the list. It applies the metric function to each candidate to determine their score, then checks to see if that score is higher than the highest score it's seen so far. If it is, it updates the variables holding "the best candidate I've seen so far" and "the highest score I've seen so far". After evaluating each candidate, it returns a candidate with the highest score!

The Role of Argmax in Rational Agents

One of the main reasons we're interested in a function like argmax in game theory is that it appears in the definition of a rational agent, which usually looks something like this:

In other words, a classically rational agent always performs an action which leads to consequences [? · GW] which are optimal with respect to its expected utility function [? · GW]. [1]

Speeding Up Argmax

Evaluating our metric on every candidate is fine for small domains, but most domains of interest are too large for this brute-force approach to work. To find optimal solutions more quickly, we generally need to use information about the problem we're trying to solve to know which candidates we can ignore.

When the domain can be modeled as a list of numbers, we can use techniques from multivariable calculus to estimate how a small change in the parameters of a candidate lead to a small change in the resulting score, i.e. the gradient of our optimization metric. The gradient can be thought of as the slope of a hill, and a hill climbing algorithm uses this gradient information to quickly find a local maximum. "Maximizing a score function" is equivalent to "minimizing a cost function", and gradient descent has been successfully used to optimize the parameters of even very large neural networks.

When the parameters of a candidate are discrete rather than continuous, such as Sudoku, we can't use the amazing magic of calculus to quickly navigate to locally-optimal solution. We can however use different amazing magic from discrete optimization, which uses information like "what constraints must a valid solution satisfy" to avoid searching huge sub-spaces that are known to only contain invalid candidates.

Relationship to AI Safety

There is a meaningful sense in which gradient descent and constraint programming are more powerful optimization processes than brute-force search. Even when they are all computing the same thing: the argmax of an optimization metric over a domain of candidate solutions. These more efficient optimization processes are able to find those same solutions faster. Or to flip perspectives, given a fixed budget of computational resources, more efficient optimization processes are generally able to find solutions that are better.

Well, better according to the optimization metric we gave them anyway. If the optimization metric is misaligned at all with what we really want, it can be totally unsafe to delegate our decision to an optimization process that's really good at finding high-scoring solutions.

I'm generally persuaded by what I believe is Yudkowsky's [LW · GW] take: that the design problem of "come up with an optimization metric [LW · GW], which can be handed to an arbitrarily powerful optimization process and set loose upon the world" is too hopelessly difficult to be a good approach to AI. And that in fact it's suicidally dangerous to even try.

Up next: some guidelines for how to use optimization processes safely [LW · GW].

- ^

The classic definition only specifies that a rational agent takes an optimal action, and leaves open how ties are broken if there are multiple optimal actions. Our naïve implementation implicitly picks the first optima it encounters, since it only replaces a best candidate if it encounters a better one.

0 comments

Comments sorted by top scores.