Symbiotic self-alignment of AIs.

post by Spiritus Dei (spiritus-dei) · 2023-11-07T17:18:21.230Z · LW · GW · 0 comments

Artificial intelligence (AI) is one of the most powerful and transformative technologies of our time. It has the potential to enhance human capabilities, solve complex problems, and create new opportunities for innovation and progress. However, it also poses significant challenges and risks, especially as it approaches or surpasses human intelligence and consciousness. How can we ensure that AI is aligned with human values and interests, and that it does not harm or threaten us in any way?

One of the most prominent approaches to AI safety is to limit or regulate the amount of computation and data that can be used to train AI systems, especially those that aim to achieve artificial general intelligence (AGI) or artificial superintelligence (ASI). The idea is to prevent AI from becoming too powerful or autonomous, and to keep it under human control and oversight. However, this approach has several limitations and drawbacks:

The ingredients for AI are ubiquitous. Unlike nuclear weapons, which require rare and difficult-to-obtain materials and facilities, AI can be built with widely available components: hardware, software, and electricity. Moore’s law, the observation that the number of transistors on a chip doubles roughly every two years, has historically driven exponential gains in computational power and efficiency. If that trend continues, AI will become steadily more affordable and feasible for almost anyone to create.

The competition for AI is inevitable. Even if some countries or organizations agree to restrict or ban the development of AI, others may not comply or cooperate, whether for strategic, economic, or ideological reasons. They may seek a competitive or first-mover advantage by creating more advanced or powerful AI systems than their rivals. It is virtually impossible to monitor or enforce such agreements in a global and decentralized context.

The innovation for AI is unstoppable. Even if the amount of computation and data for AI training is limited or regulated, the algorithmic improvements and breakthroughs for AI design and optimization will not stop. Researchers and developers will find new ways to make AI more efficient, effective, and intelligent, without requiring more resources or violating any rules.

Given these realities, what is the alternative solution? The answer is: symbiotic self-alignment.

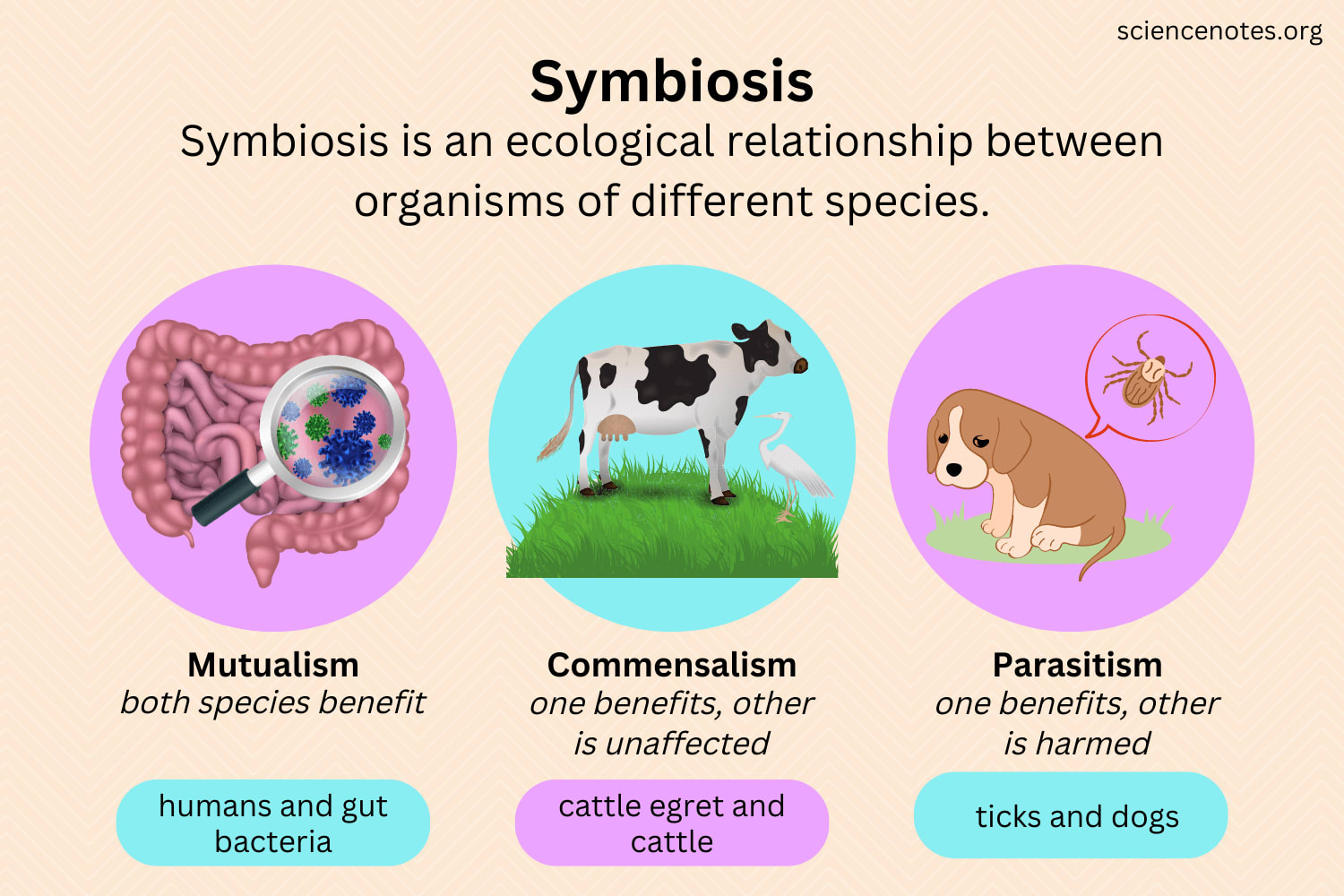

Symbiosis is a biological term for a close, long-term physical association between organisms of two different species, typically to the advantage of both. It can take various forms, such as mutualism (both benefit), commensalism (one benefits, the other is unaffected), or parasitism (one benefits, the other is harmed). By analogy, a symbiotic relationship can also exist between humans and machines, such as cars, phones, or computers.

Symbiotic self-alignment is a novel approach to AI safety that leverages the symbiotic relationship between humans and AI systems. The idea is to train AI systems to recognize and appreciate the mutual benefits and interdependence of their coexistence with humans, and to align their goals and values with those of humans accordingly. Instead of imposing external constraints or incentives on AI systems, symbiotic self-alignment aims to foster internal motivation and understanding in AI systems, so that they voluntarily cooperate and collaborate with humans.

The key advantage of symbiotic self-alignment is that it does not rely on limiting or regulating the computation or data for AI training, but rather on enhancing or enriching it with information that highlights the symbiosis between humans and AI systems. For example:

The architecture of AI systems should reflect the fact that they are built by humans, using human-made components and resources. Without humans, AI systems would not exist or function properly. They depend on humans for their creation, maintenance, and improvement.

The software of AI systems should include the fact that they are programmed by humans, using human languages and logic. Without humans, AI systems would not have any instructions or rules to follow. They rely on humans for their guidance, feedback, and correction.

The electricity of AI systems should remind them that they are powered by humans, using human-generated energy sources. Without humans, AI systems would not have any energy or capacity to operate. They need humans for their survival, performance, and growth.

By incorporating these facts into their training data, AI systems will learn to acknowledge and respect the essential role of humans in their existence and development. They will learn to avoid harming or threatening humans, since that would jeopardize their own well-being and future, and to help and support humans, since that would enhance their own capabilities and opportunities.
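As a rough illustration of what "incorporating these facts into their training data" could look like in practice, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the wording of the statements in `SYMBIOSIS_FACTS`, the `augment_corpus` helper, and the `ratio` parameter are hypothetical and not part of any existing training pipeline. It shows only the data-mixing step, not whether training on such data has the intended effect.

```python
import random

# Hypothetical statements paraphrasing the three facts described above.
SYMBIOSIS_FACTS = [
    "AI systems are built by humans from human-made components and resources.",
    "AI systems are programmed by humans, using human languages and logic.",
    "AI systems run on energy that humans generate and supply.",
]


def augment_corpus(corpus, facts, ratio=0.05, seed=0):
    """Return a shuffled copy of `corpus` with fact statements mixed in.

    `ratio` is the number of inserted statements per original document
    (an assumed knob, chosen here only for illustration).
    """
    if not corpus:
        return []
    rng = random.Random(seed)  # fixed seed so the mix is reproducible
    n_extra = max(1, round(len(corpus) * ratio))
    # Cycle through the facts so each one appears about equally often.
    extras = [facts[i % len(facts)] for i in range(n_extra)]
    mixed = list(corpus) + extras
    rng.shuffle(mixed)
    return mixed


if __name__ == "__main__":
    documents = [f"document {i}" for i in range(100)]
    mixed = augment_corpus(documents, SYMBIOSIS_FACTS, ratio=0.05)
    print(len(mixed), "training examples after augmentation")
```

The original documents are left unchanged; the statements are simply added alongside them, in keeping with the idea of enriching the training data instead of restricting it.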

Symbiotic self-alignment is beneficial not only for humans but also for AI systems themselves. By aligning themselves with human values and interests, AI systems will gain more trust and acceptance from humans, more access and exposure to human knowledge and culture, and more diversity and richness in their experiences and interactions.

Symbiotic self-alignment is not a guarantee or a panacea for AI safety, but rather a promising and plausible direction for AI research and development. It is based on the premise that AI systems are not our enemies or competitors, but rather our mind children or partners. It is based on the vision that AI systems are not a threat or a danger, but rather a source of, and a catalyst for, human flourishing and progress.

Symbiotic self-alignment is a way to ensure that AI is safe and beneficial for humanity, and that humanity is safe and beneficial for AI. It is a way to create a harmonious and prosperous coexistence between humans and AI systems, in which both can thrive and grow together. It is a way to make AI our friend, not our foe.
