Pondering how good or bad things will be in the AGI future

post by Sherrinford · 2024-07-09T22:46:31.874Z · LW · GW · 3 comments

This is a question post.


Yesterday I heard a podcast where someone said he hoped AGI would be developed in his lifetime. This confused me, and I realized that it might be useful - at least for me - to write down this confusion.

Suppose that for some reason - different history, different natural laws, whatever - LLMs had never been invented, the AI winter had lasted forever, and AGI were generally impossible. Progress would still be possible in this hypothetical world, but without whatever is called AI nowadays or in the real-world future.

Such a world seems enjoyable. It is plausible that technological and political progress might get it to fulfilling all Sustainable Development Goals. Yes, death would still exist (though people might live much longer than they currently do). Yes, existential risks to humanity would still exist, although they might be smaller and hopefully kept in check. Yes, sadness and other bad feelings would still exist. Mental health would potentially fare very well in the long term (but perhaps poorly in the short term, due to smartphones or whatever). Overall, if I had to choose between living in the 2010s and not living at all, I think the 2010s were the much better choice, as were the 2000s and the 1990s (at least for the average population in my area). And the hypothetical 2010s (or hypothetical 2024) without AGI could still develop into something better.

But what about the actual future?

It seems very likely that AI progress will continue. Median respondents to the 2023 Expert Survey on Progress in AI [LW · GW] "put 5% or more on advanced AI leading to human extinction or similar, and a third to a half of participants gave 10% or more". Some people seem to think that the extinction event expected with a probability of 5% or so in the AI catastrophe case would be very fast, maybe too fast for people to even realize what is happening. I do not know why that should be the case; a protracted and very unpleasant catastrophe seems at least as likely (conditional on the extinction case). So these 5% do not seem negligible.[1]

Well, at least in 19 of 20 possible worlds, everything goes extremely well because we have a benevolent AGI then, right?

That's not clear, because an AGI future seems hard to imagine anyway. It seems so hard to imagine that while I've read a lot about what could go wrong, I haven't yet found a concrete scenario of a possible future with AGI that strikes me as both a likely future and promising.

Sure, it seems that everybody should look forward to a world without suffering, but when I read such scenarios [LW · GW], they do not feel like a real possibility, but like a fantasy. A fantasy does not have to obey real-world constraints, and these include not only physical limitations but also all the details of how people find meaning [LW · GW], how they interact and how they feel when they spend their days.

It is unclear how we would spend our days in the AGI future, it is not guaranteed that "no one is left behind", and it seems impossible to prepare. AI companies do not have a clear vision of where we are heading, and journalists are not asking them because they just assume that creating AGI is a normal way of making money [LW · GW].

Do I hope that AGI will be developed during my lifetime? No, and maybe you are also reluctant about this, but nobody is asking you for your permission [LW · GW] anyway. So if you can say something to make the 95% probability mass look good, I'd of course appreciate it. How do you prepare? What do you expect your typical day to be like in 2050?

[1] Of course, there are more extinction risks than just AI. In 2020, Toby Ord estimated "a 1 in 6 total risk of existential catastrophe occurring in the next century".

Answers

> So if you can say something to make the 95% probability mass look good, I'd of course appreciate it. How do you prepare? What do you expect your typical day to be like in 2050?

Starting with a caveat. In the event of a positive technological singularity, we'll learn about all kinds of possibilities we are not aware of right now, and a lot of interesting future options will be related to that.

But I have a long wish list, and I expect that a positive singularity will allow me to explore all of it. Here is some of that wish list:

1. I want to intensely explore all kinds of math, science, and philosophy. This includes things which are inaccessible to humanity right now, things which are known to humanity, but too complicated and too difficult for me to understand, and things which I understand, but want to explore more deeply and from many more possible angles. I don't know if it's possible to understand "everything", but one should be able to cover the next few centuries of progress of a hypothetical "AGI-free humanity".

2. I have quite a bit of experience with alternative states of consciousness, but I am sure much more is possible (even including completely novel qualia). I want to be able to explore a much larger variety of states of consciousness, but with an option of turning fine-grained control on, if I feel like it, and with good guardrails for the occasions where I don't like where things are going and want to safely reset an experience without bad consequences.

3. I want an option to replay any of my past subjective experiences with very high fidelity in a "virtual reality", and also to be able to explore branching from any point of that replay, also in a "virtual reality".

4. I want to explore how it feels to be that particular bat flying over this particular lake, that particular squirrel climbing this particular tree, that particular snake exploring that particular body geometry, to feel those subjective realms from inside with high fidelity. I want to explore how it feels to be that particular sounding musical instrument (if it feels like anything at all), and how it feels to be that particular LLM inference (if it feels like anything at all), and how it feels to be that particular interesting AGI (if it feels like anything at all), and so on.

5. One should assume the presence of smarter (super)intelligences than oneself, but temporary hybrid states with much stronger intelligences than oneself might be a possibility, and that is probably of interest to explore.

6. Building on 5, I'd like to participate in keeping our singularity positive, if there is room for some active contributions in this sense from an intelligence which is not among the weakest, but probably not among the smartest either.

7. I mentioned science and philosophy, and also states of consciousness and qualia, in 1 and 2 and 4, but I want to specifically say that I expect to learn all there is to learn about the "hard problem of consciousness" and related topics. I expect that at some point I'll know as full a solution to all of those mysteries as is at all possible. And I expect to know if our universe is actually a simulation, or a base reality, or if this is a false dichotomy, in which case I expect to learn a more adequate way of thinking about that.

8. (Arts, relationships, lovemaking of interesting kind, exploring hybrid consciousness with other people/entities on approximately my level, ..., ..., ..., ..., ..., ..., ...)

Yes, so quite a few wishes, in addition to an option to be immortal and an option not to experience involuntary suffering.

↑ comment by Sherrinford · 2024-07-11T13:06:12.935Z · LW(p) · GW(p)

Thanks, mishka. That is a very interesting perspective! It does in some sense not feel "real" to me, but I'll admit that that is possibly the case due to some bias or limited imagination on my side.

However, I'd also still be interested in your answer to the questions "How do you prepare? What do you expect your typical day to be like in 2050?"

Replies from: mishka

↑ comment by mishka · 2024-07-11T17:17:32.348Z · LW(p) · GW(p)

> It does in some sense not feel "real" to me, but I'll admit that that is possibly the case due to some bias or limited imagination on my side.

This is, basically, a list of my key long-standing dreams, things I have craved for a long time :-)

(I do expect all of this to be feasible, if we are lucky to have a positive singularity.)

> What do you expect your typical day to be like in 2050?

My timelines are really short, so I expect 2050 to be 20+ years into the technological singularity.

Given that things will be changing faster than today, it is difficult to predict that far ahead. My starting caveat

> In the event of a positive technological singularity, we'll learn about all kinds of possibilities we are not aware of right now, and a lot of interesting future options will be related to that.

would become very prominent.

But earlier in the singularity, the most typical day might be fully understanding a couple of tempting books I only know superficially (e.g. A Compendium of Continuous Lattices and Sketches of an Elephant – A Topos Theory Compendium and so on) and linking the newly understood information with my earlier thoughts or solving one of the problems which I was not able to solve before.

And, yes, some of this would be directly relevant to further progress in AI and such, I think...

But I would modulate my state of consciousness in all kinds of ways while doing that, so that I can see things from a variety of angles. So a typical day would be a creative versatile "multi-trip" engaging with interesting science, while being very beautiful (think about a very good rave or trance party, but without side-effects or undue risks, with the ability to exercise fine-grained control, and such that you come up with tons of creative ideas, understand some things you have not understood before, have new realizations, and have solutions to some problems you wanted to solve, but could not solve them before, novel mental visualization of math novel to you, novel insights, and an entirely new set of unsolved problems for the next day, and all of your key achievements of the night surviving into subsequent days).

That's a typical "active day". I am extrapolating from the best of my rave or trance party experiences and similar things, and from the best of my experiences doing science (and it has always been particularly fun for me to think about science and philosophy on a rave/trance party).

So I am thinking about those past occasions and thinking "what if I could push this much further, but in a safe and sustainable way". This should allow for really rapid progress and should be great fun.

(But, of course, one probably wants different days, not a monoculture, but fairly diverse experiences. I just described a day I currently want the most, and I want a good share of my days to be like that.)

> How do you prepare?

I don't know...

Staying alive is obviously super-important. So trying to take reasonable care of oneself is important.

Interesting experiences and thoughts are, I think, quite important.

Perhaps some careful meditative practices are important (but this is quite individual, and there are various caveats). One does need to start preparing to master unusual states of mind, and it is good to somewhat reduce the psychological burdens everyone is carrying.

If one does influence the future, that's quite important (e.g. if one thinks that one can influence the future trajectory of the overall AI ecosystem, that's important, but it is difficult to be sure that one's impact is positive; that is the conundrum one faces when doing AI research or AI safety research, since it's very difficult to predict the direction of impact).

So, yes, it would be good to think together: how does one prepare? :-)

Replies from: mishka

↑ comment by mishka · 2024-07-12T13:39:38.220Z · LW(p) · GW(p)

Yes, so, on further reflection, what I am doing when describing my day inside a positive singularity is recalling my best peak experiences from the life I have had, trying to resurrect them and, moreover, to push them further, beyond the limits I have not been able to overcome.

Perhaps that is what one should do: ponder one's peak experiences, think how to expand their boundaries and limits, how to mix and match them together (when it might make sense), and so on...

Replies from: Sherrinford

↑ comment by Sherrinford · 2024-07-12T15:13:14.248Z · LW(p) · GW(p)

With respect to what you write here and what you wrote earlier, in particular "and have solutions to some problems you wanted to solve, but could not solve them before, novel mental visualization of math novel to you, novel insights, and an entirely new set of unsolved problems for the next day, and all of your key achievements of the night surviving into subsequent days).", it seems to me that you are describing a situation in which simultaneously there is a machine that can seemingly overcome all computational, cognitive and physical limits but that will also empower you to overcome all computational, cognitive and physical limits.

The machine is completely different from all machines that humanity has invented; while for example a telescope enables us to see the surface of the moon, we do not depend on the goodwill of the telescope, and a telescope could not explore and understand the moon without us.

Maybe my imagination of such a new kind of post-singularity machine somehow leaps too far, but I just don't see a role for you in "solving problems" in this world. The machine may give you a set of problems or exercises to solve, and maybe you can be happy when you solved them like when you complete a level of a computer game.

The other experiences you describe maybe seem like "science and philosophy on a rave/trance party", except if you are serious about the omnipotence of the AGI, it's probably more like reading a science book or playing with a toy lab set on a rave/trance party, because if you could come up with any new insights, the AGI would have had them a lot earlier.

So in a way, it confirms my intuition that people who are positive about AGI seem to expect a world that is similar to being on (certain) drugs all of the time. But maybe I misunderstand that.

Replies from: mishka

↑ comment by mishka · 2024-07-12T17:05:09.135Z · LW(p) · GW(p)

> Maybe my imagination of such a new kind of post-singularity machine somehow leaps too far, but I just don't see a role for you in "solving problems" in this world. The machine may give you a set of problems or exercises to solve, and maybe you can be happy when you solved them like when you complete a level of a computer game.

When I currently co-create with GPT-4, we both have a role.

I do expect this to continue if there is a possibility of tight coupling with electronic devices via non-invasive brain-computer interfaces. (I do expect tight coupling via non-invasive BCI to become possible, and there is nothing preventing this from being possible even today except for human inertia and the need to manage associated risks, but in a positive singularity this option would be "obviously available".)

But I don't know if "I" become superfluous at some point (that's certainly a possibility, at least when one looks further to the future; many people do hope for an eventual merge with superintelligent systems in connection with this desire not to become superfluous; the temporary tight coupling via non-invasive BCI I mention above is, in some sense, a first relatively moderate step towards that).

> The machine [is] completely different from all machines that humanity has invented

Yes, but at the same time I do expect this to be a neural machine from an already somewhat known class of neural machines (a somewhat more advanced, more flexible, more self-reconfigurable and self-modifiable neural machine compared to what we have in mainstream use right now; but the modern Transformer is more than halfway there already, I think).

I, of course, do expect the machine, and myself, to obey those "laws of nature" for which a loophole or a workaround cannot be found, but I also think that there are plenty of undiscovered loopholes and workarounds for many of them.

> if you could come up with any new insights, the AGI would have had them a lot earlier.

It could have had them a lot earlier. But one always has to make some choices (I can imagine it/them making sure to make all our choices before we make those choices, but I can also imagine it/them choosing not to do so).

Even the whole ASI ecosystem (the whole ASI+humans ecosystem) can only explore a subspace of what's possible at any given time.

> So in a way, it confirms my intuition that people who are positive about AGI seem to expect a world that is similar to being on (certain) drugs all of the time.

More precisely, that would be similar to having an option of turning this or that drug on and off at any time. (But given that I expect this to be mostly managed via strong mastery of tight coupling via non-invasive BCI, there are some specifics: risk profiles are different, temporal dynamics are different, and so on.)

I imagine that at least occasional nostalgic recreation in an "unmodified reality" will also be popular. Or, perhaps, more than occasional.

3 comments


comment by O O (o-o) · 2024-07-12T19:06:13.870Z · LW(p) · GW(p)

> It is plausible that technological and political progress might get it to fulfilling all Sustainable Development Goals

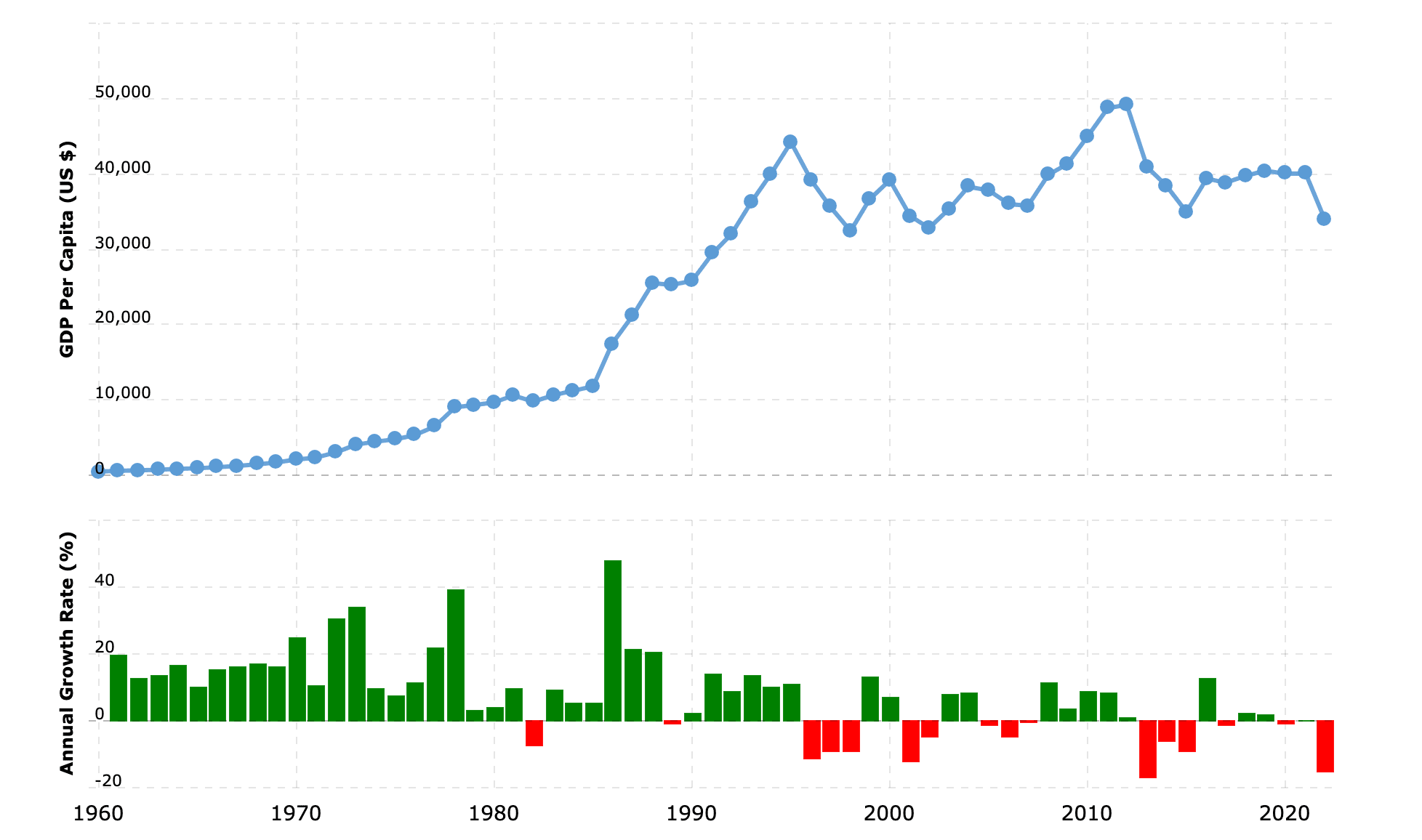

This seems highly implausible to me. The technological progress and economic growth trend is really an illusion. We are already slowly trending in the wrong direction. The U.S. is an exception, and all other countries are headed towards Japan or Europe. Many of those countries have declined since 2010 or so.

If you extrapolated trends from the Roman Empire while ignoring its similar population decline and institutional decay, we should have reached our technological goals a long time ago.

Replies from: Sherrinford

↑ comment by Sherrinford · 2024-07-12T19:30:45.075Z · LW(p) · GW(p)

If you have a source on the Roman Empire, I'd be interested. Both in just descriptions of trends and in rigorous causal analysis. I've heard somewhere that there was population growth-rate decline in the Roman Empire below replacement level, which doesn't seem to fit with all the claims about the causes of population growth-rate decline I heard in my life.

Replies from: o-o

↑ comment by O O (o-o) · 2024-07-12T20:39:47.804Z · LW(p) · GW(p)

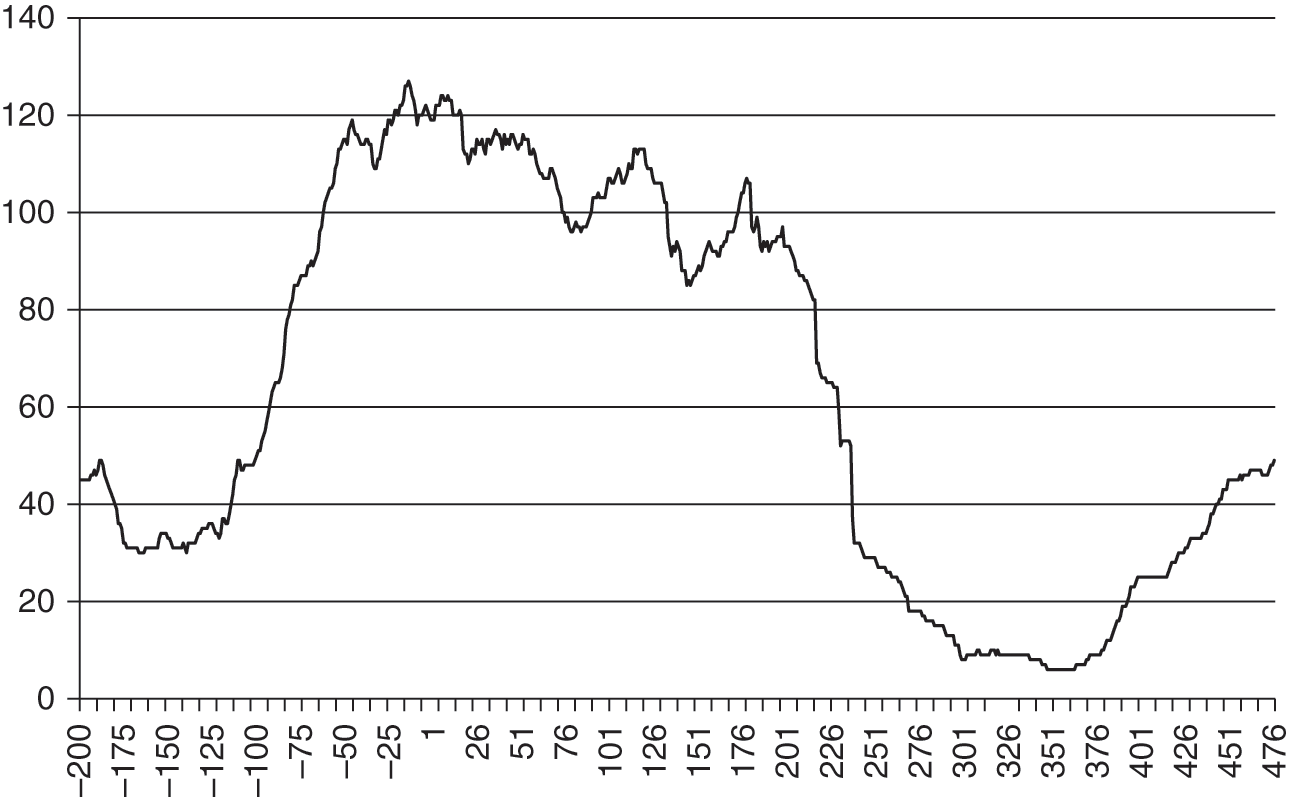

This is a reconstruction of Roman GDP per capita. Source of image. There are ~200 years of quick growth followed by a long and slow decline. I think it's clear we could be in the year 26, extrapolating past trends without looking at the 2nd derivative. I can't find a source for fertility rates, but child mortality rates were much higher then, so the bar for fertility rates was also much higher.

For posterity, I'll add Japan's GDP per capita. Similar graphs exist for many of the other countries I mention. I think this is a better and more direct example anyway.
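The point about extrapolating past trends without looking at the 2nd derivative can be made concrete with a small sketch. Everything in it is an assumption for illustration only: the `series` function is a made-up concave curve (not the Roman or Japanese data from the graphs), and the helper names `linear_extrapolation` and `second_difference` are hypothetical. The observation time 26 is borrowed from the comment purely as a label.

```python
# Synthetic, purely illustrative series. NOT the Roman or Japanese GDP data.
def series(t):
    # A concave path: it rises, peaks at t = 200, then declines.
    return 100 + 0.5 * t - 0.00125 * t * t


def linear_extrapolation(t0, t1, t_target):
    # Draw a straight line through two early observations and extend it,
    # i.e. extrapolate the trend while ignoring curvature.
    slope = (series(t1) - series(t0)) / (t1 - t0)
    return series(t1) + slope * (t_target - t1)


def second_difference(t, h=1.0):
    # Discrete stand-in for the second derivative at time t.
    return (series(t + h) - 2 * series(t) + series(t - h)) / (h * h)


# An observer at t = 26 extends the early growth trend out to t = 400.
early_guess = linear_extrapolation(0, 26, 400)
actual = series(400)
curvature = second_difference(26)

print("straight-line guess for t=400:", early_guess)
print("value of the synthetic series at t=400:", actual)
print("second difference at t=26:", curvature)
```

In this toy setup the straight-line guess lands far above the series' later value, while the negative second difference is already visible at the early observation time. Whether real-world growth has that shape is exactly what the thread disagrees about; the sketch only shows why the curvature matters for the extrapolation.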