Podcast on “AI tools for existential security” — transcript

post by Lizka, fin · 2025-04-21T19:26:07.518Z

This is a link post for https://pnc.st/s/forecast/30c70e39/ai-tools-for-existential-security-with-lizka-vaintrob-

Contents

- Transcript
- Elevator pitch & how this relates to d/acc and def/acc
- Which kinds of AI applications seem most exciting?
- Getting more specific on applications & discussing adoption
- AI negotiation tools, privacy-preserving information-sharing
- Cybersecurity
- Things that might be possible as AI capabilities ramp up
- Win-win policy advice
- Why these clusters of applications?
- What about market effects (and other dynamics that might make it hard to make a counterfactual difference)?
- Methods: how can we accelerate beneficial AI tools?
- Applications that could be doable with today’s models (or generally very soon)
- Why not focus on slowing risk-increasing things down?
- A pre-mortem
- (How) many more people should be working on this?
- Other upshots
- Speeding up automation of your area, using AI tools more
- Preparing for a world with much more abundant cognition
- Getting ready to help with automation
- Applications we’re personally excited for
- Key open questions?

About two months ago, @finm and I recorded a discussion about my now-published piece on “AI tools for Existential Security”[1] (for the podcast Fin has been running at Forethought). I’d never done something like this before, and liked the result a lot more than I thought I would![2]

I'm sharing a quick transcript here in case anyone appreciates section headers, hyperlinks, etc. Please note that this is very rough; it's an unpolished, not-double-checked “MVP” edit of an AI-generated transcript, and will definitely have mistakes.[3]

Here’s the episode itself; you should also be able to find it on most podcast apps. The discussion loosely follows the structure of the piece it focuses on.

My thinking on some of what we discuss here has changed a bit, but I'd still say most of this (sometimes with somewhat different emphasis, framings, etc.) and I wanted to quickly get this out there. (I plan to share more on this broad topic soon.)

The other episodes of “ForeCast” that currently exist are:

- Will AI R&D Automation Cause a Software Intelligence Explosion? (with Tom Davidson)

- Preparing for the Intelligence Explosion (with Will MacAskill)

- Intelsat as a Model for International AGI Governance (with Rose Hadshar)

I’m behind on listening to my podcast queue, even for episodes recorded by my colleagues,[4] and am embarrassed to say that I haven’t yet listened to most of these so can’t say much more!

Transcript

Elevator pitch & how this relates to d/acc and def/acc

Fin

Okay, I am Fin and I'm speaking with Lizka Vaintrob about a memo that you’ve co-written with Owen Cotton-Barratt, on AI applications for existential security.

So what is the elevator pitch for this?

Lizka

The basic idea is:

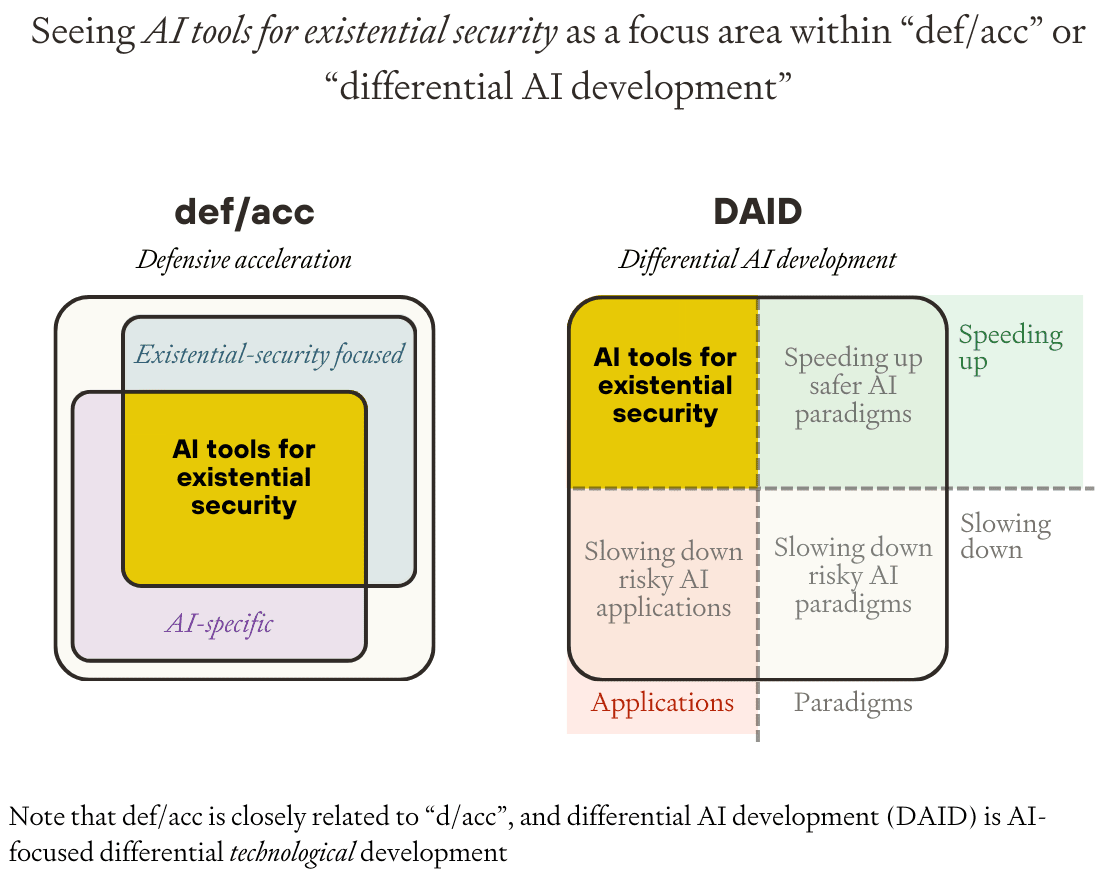

“What AI is doing” is increasingly shaping the world. But AI is not one big thing, and much like we have differential technological progress — which is the idea that you can bring forward positive technologies and push back risk-increasing technologies — we might be able to shape the character of AI that is available to us, and the exact applications that exist in the world, in a way that helps us navigate the coming challenges.

I actually think that the opportunity here is fairly big.

You can cut this space up in various ways. You can talk about shaping the paradigms of AI development. So that's like, maybe you want to try to help more interpretable architectures succeed, or bet on learning algorithms that are less likely to get you to scary properties of AI systems. But you could also try to shape the kinds of applications that are available at various points in time.

Paradigms are a little bit more outside of my wheelhouse, so I've been focusing more on applications, and I'm also focusing more on bringing forward, so speeding up the beneficial applications rather than slowing down the scary ones.

Fin

This sounds a lot like d/acc or def/acc. Is it the same thing?

Lizka

That's right. I think it's in a similar spirit. Both of those are not specific to AI. I do think a lot of people who are working in the space are thinking about AI. I think the idea of d/acc is to focus specifically on decentralized technologies, or things that —

Fin

I thought the D stands for defensive but is it decentralized?

Lizka

I think it stands for three things. I don't remember exactly what they are. He [Vitalik Buterin] wrote a follow-up essay recently, which I think is quite good, and he also clarifies it a bit.

Maybe the main difference between what I'm thinking of as differential AI application development and def/acc is (a) it's focused on AI. And (b) I think also I'm a little bit more into focusing on the really high priority cases for existential risk, as opposed to broadly defensive.

Fin

And then you also mentioned the more general idea of differential tech development. Is the thing that you're talking about — AI applications for existential risk — is that, like, a sub-area of this thing?

Lizka

You can imagine cutting differential AI development into four quadrants. So you have two dimensions here. You have paradigms and applications, and you have speeding up and slowing down. And I'm in the speeding up AI applications quadrant.

From the appendices to AI Tools for Existential Security.

(I also think speeding up some paradigms might be very promising. I think there are also valuable things to do on the slowing-down side, potentially.)

Which kinds of AI applications seem most exciting?

Fin

Okay, so let's just jump into it. We're talking about, you know, positive AI applications for, you know, reducing existential risks. And I just want to ask, what are those applications that you're most excited about?

Lizka

So we've been thinking mostly about three clusters. I think one of them is sort of a cop-out cluster, but yeah [Fin: so it goes].

So the first cluster is epistemic applications that can help us understand the world better. That could include AI for forecasting — better predictions about the world, could include stuff like helping people make good judgment calls, could include things that help basically improve collective sanity — maybe fact checking in various ways. And it could also include things like AI for making progress on philosophy or similar things, where maybe our errors would be a little bit more subtle. So that's one cluster: epistemics.

Another one is coordination-enabling applications. So that's stuff like automated negotiation tools, which can work in various ways — maybe we can talk about them later. Could include some forms of commitment technology. So sometimes when you want to make an agreement with someone, especially in contexts where you can't quite trust people, you both want to make an agreement, but you can't trust that it will be followed, and so the commitment is never made. So you could imagine commitment tech being good — although I think there are also some downsides. And then one other thing in this coordination-enabling cluster is basically structured transparency tools. So this is stuff that helps us get over difficult trade-offs between privacy and being able to share information.

Fin

Like monitoring as well, right?

Lizka

Exactly. So maybe you want to prevent people from releasing really scary viruses, but you don't want to monitor everything that they do.

And then the last cluster, which, again, is slightly the cop-out cluster, is risk-targeted applications. So basically, if you're working on something that you think is really important for navigating existential risks, you might be able to speed up automation of that work, or build applications that are complementary and help improve that work.

A thing that people often talk about is AI for AI safety (or for alignment). But you could also have AI for bio risks, bio-surveillance tools. Infosec plays into various things. So yeah, AI cybersecurity tools, some other stuff — maybe digital minds [research].

Getting more specific on applications & discussing adoption

Fin

I would really like to go back through that list and then pick out almost unnecessarily concrete examples to picture it. So we have these three classes that you mentioned. So like epistemics (tools for thinking, basically), tools for coordination, and then the cop-out bucket of, like, risk-focused applications.

On the epistemic cluster, is there anything just like, on your mind that you're like, actually picturing examples of?

Lizka

Forecasting could be a good one here. There's been some work on this. I haven't been following exactly. I don't know how successful AI systems are at forecasting, but you could also imagine stuff like “they get really good at grading statements from pundits, and produce track records.”
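As a rough illustration of what "producing track records" could mean in practice, here is a minimal sketch. It assumes the hard part (turning a pundit's statement into a stated probability and later judging whether it came true) has already been done, whether by an AI grader or by hand. The Brier score used here is a standard scoring rule chosen for illustration; it is not something named in the conversation, and the pundit names and numbers are made up.

```python
from collections import defaultdict


def brier_score(forecasts):
    """Mean squared gap between stated probability and outcome (0 is perfect)."""
    return sum((p - outcome) ** 2 for p, outcome in forecasts) / len(forecasts)


def track_records(graded_statements):
    """Group (pundit, probability, outcome) triples and score each pundit."""
    by_pundit = defaultdict(list)
    for pundit, probability, outcome in graded_statements:
        by_pundit[pundit].append((probability, outcome))
    return {pundit: brier_score(items) for pundit, items in by_pundit.items()}


# Hypothetical graded statements:
# (pundit, stated probability, 1 if the claim came true else 0)
graded = [
    ("pundit_a", 0.9, 1),
    ("pundit_a", 0.8, 0),
    ("pundit_b", 0.6, 1),
    ("pundit_b", 0.5, 0),
]

records = track_records(graded)
for pundit, score in sorted(records.items(), key=lambda item: item[1]):
    print(f"{pundit}: Brier score {score:.3f}")
```

Lower scores are better, so in this toy data the more cautious pundit_b ends up with the better record than the confident-but-wrong pundit_a.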

Fin

You could almost imagine doing that now, right?

Lizka

Yeah.

I guess with that kind of tool, a worry I often have is about adoption. Like, how do you get people to actually go and look at this?

A thing I'm excited about here is trying to help make the platforms and systems that already are being used (or that will be used by default) more epistemically sound. That could be like, produce a benchmark or an eval of “how much are people better informed after engaging with something with an AI tool”. Ideally, people are very proud of the fact that their system is really good at helping people have better opinions, like true opinions.

Fin

I saw some work recently where they just got participants to chat with an AI assistant, and they found that just extended, semi-directed conversations reduce certain kinds of conspiratorial beliefs. So it's not just tools, and also benchmarks, social science research...

Lizka

Yeah. I guess we're getting a little bit into methods, but the way I think about it is: benchmarks can help you get the tools that you want to exist.

Another way to get around adoption issues is to focus on specific users, or decision-makers who want to help. Like, maybe you know people who are trying to stay up to date on a rapidly evolving field, or trying to do research on a topic or something. Maybe you can make tools that work better for them and make sure that they're not being left behind.

Fin

So there's this coordination bucket. You're talking about negotiation tools and verification and so on. Again, is there anything you want to pick out there to really fill out?

Lizka

Again, I'm quite worried about adoption — let’s set that aside though.

AI negotiation tools, privacy-preserving information-sharing

So, automated negotiation tools. I think there are a few different ways they could work, but I'll just sketch out one way.

So negotiation processes are often really difficult.

Fin

This is, like, between countries, we're talking about?

Lizka

Between countries, but also between, I don't know, like, agreements being made in Congress or something — you want to pass a...

Fin

In a political..?

Lizka

Political, yeah. (I think both could work; they have different difficulties and different theories of how exactly it's helpful. You could imagine making it easier to coordinate ML scientists who want to make sure that companies are being ethical or something, or companies that want to coordinate with other companies without violating... to do things that I want. So I think there's a lot of stuff here that I'm excited about.)

(Yeah, I want to think more about exactly which cases are most promising. Some of them are more like long shots — I endorse thinking about long shots here.)

Especially when you have multiple parties in a negotiation process... Often, actually, negotiation processes will restrict the number of parties artificially because it's so complicated. You've got this issue where it's really costly to re-propose something.

So, often you'll have a bunch of informal conversation, a bunch of research, then you have some initial proposal, some formal discussion of that proposal, some more informal discussion between people, whatever, more research, a little bit of negotiation, maybe a vote, and then you'll have maybe an update, and like, maybe it'll go back to the drawing board. But that will only happen a few times in many of these settings — because again, super costly. Then you have some agreement. It's maybe less likely to pass because people didn't get what they want. It's probably not as good as it could be, etc.

So now imagine that you have... let's say every party has an AI system or tool or something that basically acts as its delegate. It's a system that you trust — so maybe even people have their own system somehow — and it knows a lot about what you’re happy communicating with other parties, what your preferences are, etc. And then it coordinates with all of the other parties’ delegates. And they can go through a ton of various proposals and just say yes or no, maybe re-propose something. They can do this a huge amount. And then they can come back and be like, “Okay, how do you feel about this, human representative?” And then you can say yes or no, but you've gone through a lot more iterations, which tends to lead to better outcomes, and the agreements can be a lot more complex.
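To make the delegate idea a bit more concrete, here is a toy sketch. Everything in it is an assumption made for illustration: the `Delegate` class, the linear "weights" model of preferences, the walk-away threshold, and the two issue names are all hypothetical, and a real system would be far richer. The only point it shows is the loop described above: delegates answer yes or no to a very large number of proposals without revealing their private preferences, and only the surviving proposals go back to the human representatives.

```python
from itertools import product


class Delegate:
    """Stands in for one party; its preferences stay private to it."""

    def __init__(self, name, weights, walk_away_value):
        self.name = name
        self._weights = weights            # private: value per unit of each issue
        self._walk_away = walk_away_value  # private: minimum acceptable total value

    def accepts(self, proposal):
        """Only a yes/no answer ever leaves the delegate."""
        value = sum(self._weights[issue] * level for issue, level in proposal.items())
        return value >= self._walk_away


def shortlist(delegates, issues):
    """Try every combination of issue levels; keep those every delegate accepts."""
    names = list(issues)
    accepted = []
    for levels in product(*(issues[name] for name in names)):
        proposal = dict(zip(names, levels))
        if all(delegate.accepts(proposal) for delegate in delegates):
            accepted.append(proposal)
    return accepted


# Hypothetical two-party, two-issue negotiation (levels 0 to 3 on each issue).
party_a = Delegate("a", {"jobs_funding": 2, "emissions_cap": -1}, walk_away_value=3)
party_b = Delegate("b", {"jobs_funding": -1, "emissions_cap": 2}, walk_away_value=3)

issues = {"jobs_funding": [0, 1, 2, 3], "emissions_cap": [0, 1, 2, 3]}
deals = shortlist([party_a, party_b], issues)
print(deals)  # candidate deals to hand back to the human representatives
```

In this toy setup, exhaustive search is feasible because there are only 16 proposals; the interesting case is when the space is far too large for humans to iterate over by hand. Restricting the same two parties to a single issue (for example, fixing `emissions_cap` at 0) leaves no proposal that both accept.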

Fin

So you have, like, a draft bill for some legislation or whatever. If you could put all the congresspeople who are interested in shaping this bill — and they have ideas, how could we improve and would make their constituents better off — if you could put them in a room for two months, they probably would hash out something better. But you can't do that because of time constraints. But if you could somehow automate the process and then just skip to the output, and if every party somehow saw that the output is like, “oh, that's an improvement by my lights,” then you can just discover these much better compromises that we just can't discover right now.

Lizka

That's right, yeah. Also, negotiated processes tend to be better when more things are on the table — I guess when the pie is bigger, because you can negotiate over different things, like, maybe someone really wants jobs or something...

Fin

Oh, so maybe it's not just one very narrow bill, but it's this omnibus thing.

Lizka

Yeah, that's right. And it's really complex, but then you have your system that explains to you exactly how it's affecting your priorities, what your constituents are going to think about it. That kind of thing is exciting to me.

And then these kinds of tools could also potentially get around asymmetric [or incomplete] info issues. So this is where maybe you have a preference, but you don't want to share that you have a preference — or you know something about the situation. This sort of thing will inform the best deal that is possible, but you don't want to share it directly. So then you could imagine third-party systems, or ways for these systems to reliably forget information. But, like, yeah, you have some sort of...

Fin

I mean, I really like this idea. It doesn't just apply in the high-stakes/existential risk context. Like, there's these more prosaic contexts where maybe you're a journalist, and I want to come to you and I want to sell you some news story. But I, like, can't tell you the story, because you have every right to not pay me then. But then you aren’t going to pay me unless you know that I have a valuable story. And this is very hard to solve, but maybe you could solve it with AI. If you want to speak to that?

Lizka

One of the ideas here is, maybe you have a trusted third party. Somehow, you have a system that you both know can make objective decisions in various ways. Maybe you have some evals to test the system. Maybe it's a complicated, multi-agent setup. I think Eric Drexler has some writing on this, by the way. And the idea is, you have some research you want to sell, or you have a story, or whatever; you can tell your idea to the system. It can be like, “Yep, this is a good deal, other party. Go take it.” Or like, “you should pay this amount. You should be happy to pay this amount.” And then maybe that system... like, you can prove that it's forgetting it... it's, like, restarted to before it knew this information, or something like that.

Fin

And then the more high-stakes example might be something like: we are representatives of two different nuclear-armed states. And we want to hit some sort of disarmament or non-proliferation agreement, but if we show too much willingness to give up our existing stockpiles or whatever, or some, you know [Lizka: or you don't want to say where your data center is, or something], yeah, then you're weakening yourself if the agreement doesn't get made. So you want to posture as much as possible. But then that can mean that agreements don't get made.

Lizka

There's more I want to think through here, but I can imagine a situation where, say, you want to be like, “Yeah, we’re for sure not using this massive stockpile to do this thing that we both agreed not to do.”

And you want to be able to prove this, but you don't want to show exactly what you are using that compute for. The other party, other state, has some system that they trust very well — they made it whatever — and you can somehow check that it has forgotten some information that it has gotten — it's being reset, or whatever (there's like various mechanisms you can imagine).

Then maybe what they can do is give the information to that system — all of your information, somehow — where the data center is, or what is going on in this data center... And then it can go and tell its human representative (its customer) that yes, you're not using it to do that thing. So that's more in the verification sense. But yeah, it's asymmetric information issues.

Fin

Yeah, I guess it’s the right thing. I'm just proving somehow — like “I promise we're not doing X, Y, Z, but following these agreements.” But currently, verification is itself very hard.

Lizka

There are various setups that people have explored a bit, but yeah, it's probably a problem.

Fin

And then there’s this third bucket, which is AI applications targeting specific risks again. I don't know if anything jumps out at you as being especially interesting?

Lizka

Here, I'm honestly very excited about domain subject matter experts sharing what seems especially... how it's been going already (because people are using AI systems here), where they see opportunities to speed things up, and also what seems most useful.

Cybersecurity[5]

Setting that aside, I'm quite interested in cybersecurity stuff. I think I’m quite worried about some failure modes there. I think the opportunity is quite [big]; I think AI could start helping. I mean, it already is helping, but could be helping meaningfully in the near future. And there are reasons to believe that we care about this more than people might naturally do; if you have an existential risk world view, then there's a big public good of managing existential risks. You might imagine that the world is under-investing — that individual groups, companies, whatever, governments are under-investing in cybersecurity.

Fin

I feel very skeptical about that. I mean, we're still talking about AI companies. How much are these companies willing to pay — or indeed, increasingly, countries? I expect it's in the high billions. If they can just plow money into making themselves more secure, they would.

Lizka

Yeah. I don't know how things are going in AI companies. I do think that governments are clearly under-investing. And governments, like, I also care about. And also stuff like bio-companies. Or potentially, as you get to other technologies that could be destabilizing, again you have companies that are not really investing as much as we want them to.

Fin

Yeah. The other thing I was going to mention is that the cybersecurity industry as a whole is very large, and so the weight that the community of people who specifically care about existential risks can throw around relative to that industry is just pretty small.

But then it sounds like you're saying, at least in this case, maybe it's hard to meaningfully speed up AI applications of cybersecurity and information security, but you can do things like getting adoption?

Lizka

Oh, I'm not sure. Yeah, this is interesting — I want to think more about this. I probably don't think that it's especially hard to speed up AI applications, for instance, just for cybersecurity.

Fin

Maybe, like, the cybersecurity world is just completely underrating how useful AI is going to be. And so you can get a head start. You can found the automated information security startup, and then you have this first mover advantage, something like this?

Lizka

I don't know enough about the cybersecurity world here. You could imagine that people are fairly secretive about... Like, a lot of work is not being shared in various ways — a lot of what's going on in the cybersecurity world. I guess this is sort of adoption, shaping adoption, where you, in fact, make sure that the deployers of various advanced technologies, or the US government, are using the most advanced things possible, I'm not sure. But yeah, this is all plausible to me.

Other stuff in the risk-targeted things. Bio-surveillance has been discussed. I'm quite excited there.

Things that might be possible as AI capabilities ramp up

Especially as AI capabilities ramp up, you could imagine things that look pretty weird to us today, that are promising.

So, for instance, managing the outbreak of a disease is hard, and social distancing apps are not great right now. You could have AI systems create or power applications that are super customized to every person who has them, which, right now is not really feasible. It's like ridiculous to talk about...

Fin

Wait, say more. So I have my app on my phone, and it's like a contact tracing app, right? [Yeah.] And it's like monitoring where I go and stuff and loads of other details...

Lizka

Yeah, I guess it's monitoring. It's talking to other people's apps. Ideally, it's not sharing information that you don't want shared. But it's also talking to you in exactly the language that you want. It knows what you care about, what the risks are that you are willing to take, or can explain things in a reasonable way to you. Maybe can even suggest, like, “oh, you should consider rearranging in this way,” or whatever — it's extremely custom. What you might imagine a really good executive assistant doing for a CEO — everyone could have this kind of thing in the future.

This is just an illustration; I'm not actually super excited about this specific way of mitigating pandemics. But the idea is, in areas like this, I think we will have these weird things, these strategies that look really weird now...

Fin

Yeah, but also not weird in the sense of, “I can't see how this is doable, because it seems to involve alien technology.” Like, the thing about COVID. So much of the risk was concentrated into these, like, small number of super spreader events. If you’re just a bit pro-social — you care about not infecting people and not getting infected — and then you had an app which was smart about telling you, “just don't go to this concert and then you can get on with most of the rest of your life,” that would have probably helped everyone.

Win-win policy advice

Lizka

There's also stuff like: people were not tracking ventilation as a key thing here, right? So you had schools with closed windows — stuff like that. I don't know, maybe this goes into this epistemics. Like, people were not doing the thing. You didn't have mask production ramp up...

Fin

Yeah, it's interesting. Like, if you imagine early on in COVID, after it's gone pandemic; we have a lot of, like, confusion and, like, policies going back and forth. If you just had these trusted AI advisors, and you say, “Hey, go and just think about what we should be doing as a country now,” and they come back with this very well-evidenced, almost like Deep Research, report: “Here are the bottom line suggestions. Here are the misconceptions you might otherwise have. Ventilation seems really important.” I think that's not intractable just because you get all this partisan disagreement — people were just empirically wrong about stuff, and you can really help with that.

Lizka

I mean, you could imagine that you have checks on systems. Maybe, in fact there are partisan systems but, I don't know, I don't think lots of your constituents dying from COVID is good for any party. So there's a lot of shared interests here. So even if you do have partisan advice to policymakers, I think it could still be a massive improvement.

Also these systems can talk to people in the language that makes sense to them and understand their priorities. Like, “okay, you don't want kids to be missing school. Okay, so that's a big problem. So what are the possible solutions...”

People might care to different extents, have different priorities here. But the way I think about these kinds of things is something like, the pie that we collectively have is very, very big, and we're burning a lot of it all the time.

Fin

There's so many win-win things you can discover with smart enough advisors. I really agree with that.

Any other, like, just fun, concrete ideas? (Or we can move on.)

Lizka

There's some exciting things happening in AI for AI safety, I think.

For a lot of the near-term applications, the way I'm thinking about them is they are good for speeding up later-term applications, by demonstrating interest, by building up the skill required to build this kind of thing, by leaning into very compounding effects.

So like, you start making better progress on vaccine science now, and then that puts you in a better place for making even faster progress later on. (Or forecasting, or whatever.) So I often like to think about “small” applications in the near term, and I'm happy to get excited about them when I think of how they can speed things up later, if that makes sense.

Fin

Yeah. I guess I think about AI safety, where at least until recently, a lot of the experiments were toy experiments. Like even using models much smaller than the frontier models, even when the frontier models were smaller than they are now. And so you don't really get results that you can usefully carry over very much, but you get these really nice clues. And then you can imagine, “okay, we can now scale these experiments up, and we now know we have some indications of which experiments are really promising and which are not very promising.”

Lizka

Yeah. I don't know how much compute is the issue, there, or something similar where presumably, larger models are more expensive to run experiments on, in various ways. But I think, in fact, designing experiments... helping alignment researchers run more empirical tests or experiments would be very useful. I think a lot of people, in fact, struggle; they either feel happy running experiments or doing other kinds of theoretical research. And I think there's space for applications here.

Why these clusters of applications?

Fin

I want to ask: why these applications? Why these three buckets, and why not a bunch of other useful ways to use AI?

Lizka

I think this is just one way to cluster things. One of the top things I want more work on is better prioritization here, better specifications, etc.

But, that said, there are things that you could imagine me having said earlier that I haven't said. So, for instance, like AI for medicine or for tutoring kids (maybe that's epistemics, but it’s not what I’m thinking of). I think they're probably quite beneficial in various ways. And there are obvious cases to be made for these applications.

But I have a frame of “we should get ourselves prepared to navigate existential challenges in the near future.” And so I want to really prioritize. And then the things that feel like they matter a lot in the space are:

- Helping decision makers understand what's going on, and what the possible strategies are. So that's epistemics; this is sort of empowering humanity to deal with these challenges.

- The second part is: well, humanity is big. There's a bunch of different interests, and it's really, really hard to coordinate. So coordination stuff; this is sort of my case for why this seems really important.

- And the third [cluster] is: we have a lot of people who are actively working on specific issues that they already see as coming up. And I think this is quite promising, both because this is important work and because we know who the users might be.

Fin

When you say we, I guess it's something like the community of people who care a lot about existential risk stuff and are trying to, like, devote some part of their career to issues they think are important?

Lizka

Yeah. Although I guess existential risk doesn't seem like a strong prerequisite here — people who just want to make certain things/ help the world.

You can imagine taking a “cluster-thinking” approach; helping people make more reasonable decisions and not fight is good for a bunch of other things. And again, I think it's useful to prioritize. I like to do a bit of both kinds of thinking, I guess.

A complementary approach you could take with applications is thinking about where we might have an especially big opportunity to speed them up. So both important applications and then ones that might come later than they could have, for reasons that we can affect. Often that might be because they're under-incentivized — they're providing public goods, or the users in question are people who don't have a lot of funding or won't be spending tons of money on tech. So government officials, policy-makers come to mind there.

What about market effects (and other dynamics that might make it hard to make a counterfactual difference)?

Fin

You might worry that sure, a lot of these applications sound really great and useful, but we should, in at least a ton of cases, just expect the market to provide them.

And in fact, maybe in some cases, if you step in as an impact-motivated investor, then what you're basically doing is just displacing some other investor who cares less about impact, who’ll then invest in some random other application. You're having very little counterfactual impact.

Curious to hear more about the reasons to think, in general, that it's possible to make much of a difference on these AI applications, given that AI is just going to be such a big deal, there's going to be a ton of money flowing into it.

Lizka

Totally.

So I think market forces and some other dynamics in fact decrease our opportunity for making a difference. There's going to be some amount of market correction — or you should expect that these applications will be coming at some point. So maybe you can only speed them up so much.

Another concern that's often brought up is that AI progress has been fairly general. So maybe if you manage to accelerate forecasting a little bit, then the next generation of [general] models will just trump whatever system you've created. Yeah, I do think this is a thing to consider.

I do, however, think that as a general rule, there's a fairly significant opportunity to speed things up. A few things that inform my view here.

One, I think the underlying AI capabilities are there often significantly before they're basically usable, where “significantly” is like months, at least. You might be surprised that this is the case. I think part of the reason it is, is because AI is, in fact, just a small space.

The private efforts going into making AI applications are just very small — I guess it's a growth industry. So there's a lot of noise about AI startups, there's a lot of AI startups these days, but it's still tiny relative to how much money and interest is on the table.

And you see people who run startup incubators be like, “it's the best time to start an AI startup right now,” because they're growing significantly faster than non-AI startups used to. Like 90% or maybe 80% of the most recent Y Combinator batch was AI — a lot of them are working on stuff like agents. I think there was some factoid like these companies are tending to go from $1M to $30M five times faster than non-AI companies, or something like that.

So, yeah, growth industry. People are really trying to get more people in, because the space of opportunities is so much richer than the set of opportunities that are being taken. Separately, I think a lot of this effort is not being allocated optimally. But the point is, I think really not a lot of opportunities are getting taken right now.

Fin

I think that's a good point; sure there's a lot of hype in AI, and that will continue presumably, but there's a difference between, there's a ton of interest in investing in stuff and then just the supply of ideas for startups and people who are willing to actually do them.

Like, maybe an analogy could be — you could tell me if this is silly — like, maybe it's the early 2000s and you're just really confident that social media is going to be a huge deal in the next couple of decades. And then there's also maybe a bunch of hype around founding social media startups, and then you're just like, “well, I can throw my hat in the ring here, and there's a decent chance that I end up shaping the next Facebook, or displacing what would otherwise be Facebook, which has a bunch of contingent properties, which could have turned out otherwise.”

Lizka

I guess social media is interesting because it also has a bunch of network effects to consider.

Fin

But which, again, is a reason for thinking that there's contingency?

Lizka

So, yeah, you could imagine — I've been talking about bringing forward the beneficial applications and maybe pushing back the risk-increasing applications. You could also imagine that you want to make sure that a slightly better version of an important and foundational institution that will be developed is... You want to help a better AI thing succeed. I've mostly decided this is out of scope for now, but this is interesting.

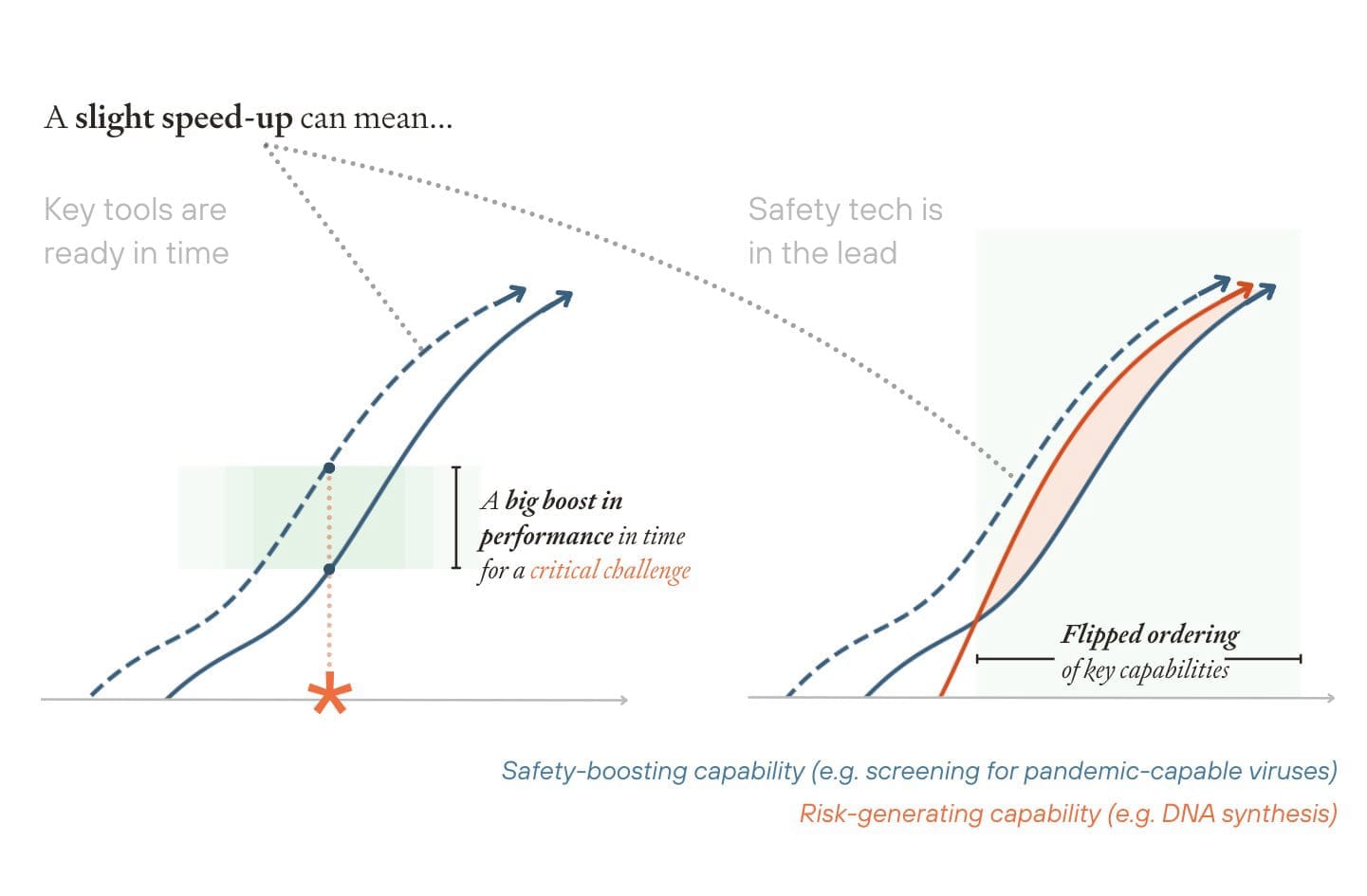

And then for early 2000s — I don't know very much about what was going on at the time, but yeah, the web space was not getting filled as fast as it could. And I think things are probably moving faster now, but I also think that small differences in time could actually be very meaningful here.

Also, we've been talking about market forces not being as efficient as one might imagine. But there are also things that are, in fact, under-incentivized by the market. You might expect that our opportunity to shape things there might be bigger.

And then also in terms of other kinds of washing out effects, there's “the market will respond and just step out of whatever you step into.” So that's a concern.

A different concern is, “The next generation will absorb all of your work and make it irrelevant.” And there, I think again, there are some compounding effects that you might hope will last past that. So like, if you get better forecasting tools, then maybe you get more data early. Or you can automate forecasting research or automate whatever bio/vaccine research earlier; you can bring forward even the things that will swamp the thing that you've currently built. So maybe you're currently building a small thing, and, yeah, it totally will be irrelevant...

Fin

So there is this property of AI, like a lot of other technologies, that it just keeps getting better and cheaper. It's this big wave where you just should expect that early efforts just eventually get swept away, in the sense that it becomes trivially easy and cheap to do the things you're trying to build.

An example, again, might be: it's the early days of personal computing, and maybe you think that we should invest loads of money in specific computer designs for, you know, important scientific research. And this could be good, but there's a limit on how useful this would be, because if you just wait five years, then Moore's Law makes it really, really cheap to get those capabilities.

And then maybe there's a kind of special feature of AI; there's this bitter lesson idea. So it turns out that the way that you make progress in AI is by making progress in all of it, in some sense — by training these very general-purpose models with just more and more compute. And these attempts to build specialized applications or architectures seem to be swept away in the next couple years by the highly general thing that, among many capabilities, happens to be able to do the thing that you're trying to build a specialized tool for. Maybe this is an extra reason to be pessimistic about building specialized applications.

Lizka

Yeah. So again, I think this is a reason to have some skepticism. We should expect that our counterfactual impact washes out after a little bit. But there are a couple of things here.

One is, I think even just getting a boost for a limited period of time matters — say, between two GPT equivalents (maybe like GPT-5 comes out, and you know GPT-6 is coming). During that period, if you can increase capabilities — boost useful applications sufficiently — even if it will just totally get nuked by GPT-6, that increase could be really valuable, especially as we're starting to face serious challenges.

Also, it's worth noting that the difference in capability level during this time could be huge because progress is becoming so fast.

Fin

So the same speed-up in calendar-time could matter more in capability, right?

Lizka

Yeah.

You can imagine that we have a whole treaty-negotiating suite, and by default, it'll get the next improvement in a month or something. And that improvement could be huge. As things speed up, we might be dealing with a bunch of problems that it'd be great to have better tools for. Getting that kind of improvement a month earlier could be huge.

From my Twitter thread summary of the piece

And in fact — we might sort of be skipping to methods here a little bit — there was this paper from Epoch, I think in late 2023, where they were exploring what they were calling “post-training enhancements”. This is like fine-tuning, scaffolding, better prompting. And I think they found, at least at the time, that you could fairly easily get the equivalent of a 5 to 30x increase in pre-training compute just by improving the stuff that's built around your base model — which is pretty huge.
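One rough way to read a "5 to 30x compute-equivalent" gain is to ask how many months of frontier compute scaling it corresponds to. The sketch below is only back-of-the-envelope arithmetic: the function name and the `annual_growth` parameter are hypothetical, and the growth factor is an assumption the caller has to supply, not a figure from the conversation.

```python
import math


def compute_gain_to_months(gain: float, annual_growth: float) -> float:
    """Convert a compute-equivalent gain into months of compute scaling.

    gain: multiplier on effective pre-training compute (e.g. 5.0 or 30.0).
    annual_growth: ASSUMED yearly growth factor of frontier training compute;
        this is an input the caller must choose, not a number from the source.
    """
    if gain <= 0:
        raise ValueError("gain must be positive")
    if annual_growth <= 1:
        raise ValueError("annual_growth must be greater than 1")
    # Solve annual_growth ** (months / 12) == gain for months.
    return 12 * math.log(gain) / math.log(annual_growth)


if __name__ == "__main__":
    assumed_growth = 4.0  # placeholder assumption, purely for illustration
    for gain in (5.0, 30.0):
        months = compute_gain_to_months(gain, assumed_growth)
        print(f"{gain:g}x gain is roughly {months:.1f} months of scaling")
```

Under any plausible growth assumption, a gain in that range translates into many months of lead time, which is the sense in which wrappers and fine-tuning can bring an application forward.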

Fin

And I guess the point is, often those improvements are more specialized.

Lizka

Yeah. So you fine-tune on data that helps you specifically with better forecasting or something.

Fin

There's a bit of a meme that when people say they're doing an AI startup, what they're really doing is just building some wrapper around the base model, around an API call. But in this case, that's actually a good example.

Lizka

Yeah. If that works, I'm super happy for it!

I often think about this as closing the gap between the underlying capabilities being there already and the useful applications being usable. Because I do think there's often just a huge delay that is not necessary.

Methods: how can we accelerate beneficial AI tools?

Fin

Let's talk about how to actually speed up these applications.

We were talking a bit about, “oh, you could just do a startup.” That's one example, but I'm sure there are many other examples. So what can we actually do?

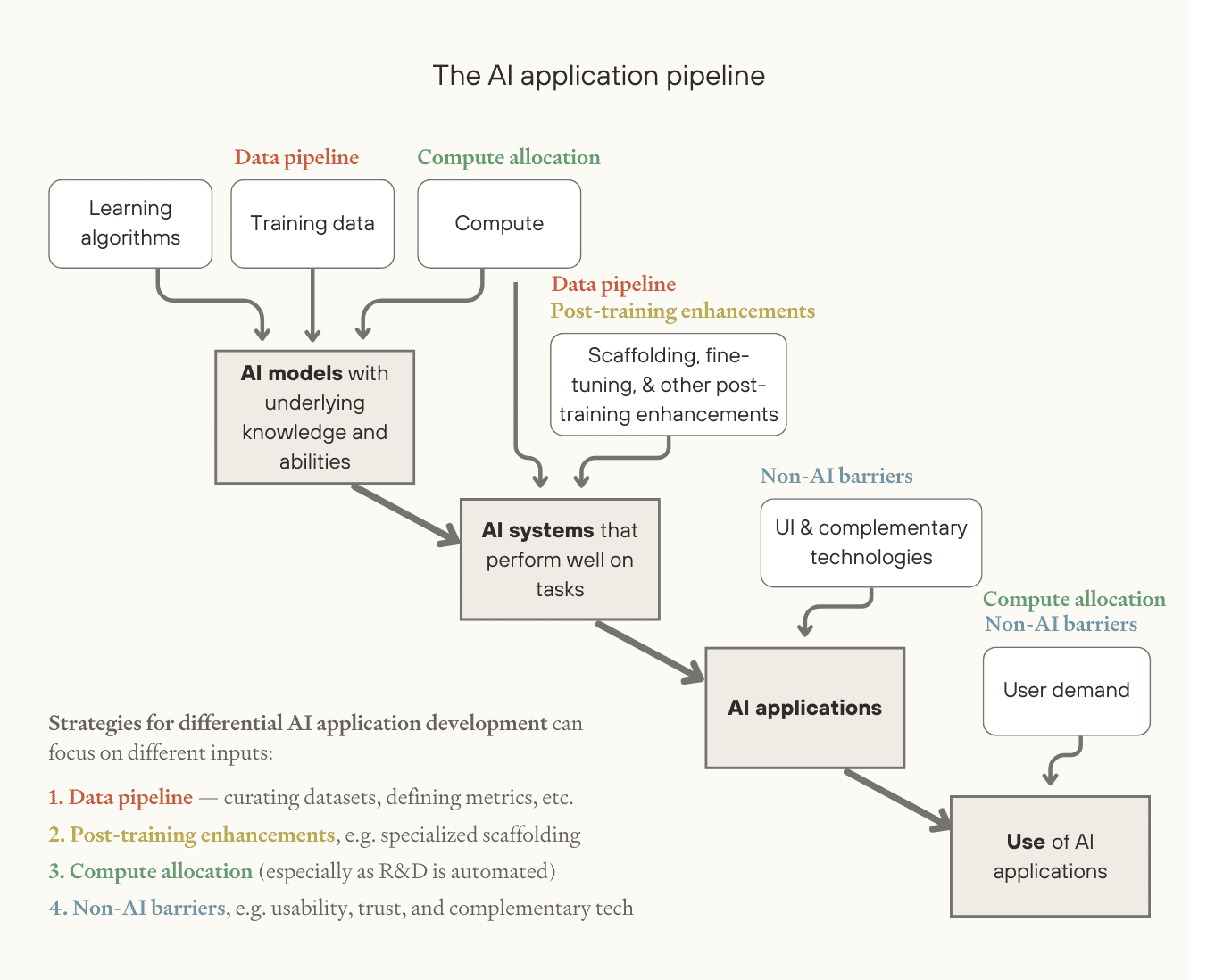

Lizka

It might be useful to talk through the technical ... things that are unique to AI applications. And it might be useful to think about the pipeline that produces AI applications.

So you go from models with underlying capabilities to systems that can perform well on tasks, to usable AI applications that you can download and subscribe to, and then to them actually being used.

From the original piece.

For the models, the main inputs are learning algorithms or architectures. Those, I think, are often too general to seem promising for our purposes. So I'm going to set them aside.

Then there's compute, and then there's training data (or sometimes grading criteria, like task-grading systems). Yeah, we're going to come back to those in a second. Then there's fine-tuning, scaffolding, post-training enhancements for producing better systems, and then stuff like UI, complementary tech, and working on barriers to adoption for the later nodes.

And so the things that feel most promising to me across this pipeline are:

- Just working on the data and task-grading pipeline. This is, like, curating datasets of what it looks like to have really good outcomes for many parties or something, or defining criteria for “what does it look like to succeed” at a task.

- Then there's shaping compute allocation. Especially as more and more research is getting automated, you can imagine that a lot of the relative rate of progress in different areas is basically determined by some people's decisions about where to spend compute.

- And then in many cases, you could do some post-training enhancements, at least to get a temporary speed-up. Possibly then you can leverage that — bootstrap from that speed-up to produce more speed-ups in the future.

- And I also do think that working on UI — like, just building a better UI system for the people who don't trust the thing that currently exists, or who need specific privacy or security properties for an application that already exists... (like, you're a policymaker, and you can't use that thing) — building better, more trustworthy systems, or working on complementary tech for this kind of system — could be quite valuable.

Fin

Okay, nice. There's a lot there. So one picture I have in my head — you can tell me if this seems right or wrong — is just:

Imagine that you're an AI company, like you’re early DeepMind, before the Google merger, or OpenAI or whatever. And you have this list of projects you're working on. And just like any other tech company — or frankly, any other company — you need to put your foot down at some point and say, “These are the projects we're focusing on. This other project doesn't quite meet the bar, even though it sounds really interesting.” I imagine around the bar there's a decent amount of contingency about like, “Do we do this random nuclear fusion control thing, or do we do this idea for making negotiation tools, or a board game playing app,” and maybe that could be shaped by arguments of which applications are actually most useful.

Lizka

Interesting, yeah. I don't know how these kinds of decisions are made at AI companies. I also don't know how much of the moving from foundation models to usable applications is being done by those companies (or has to be done by them). Right now, a decent amount is, although not all. Like, there's Cursor and stuff.

Fin

That's a good point. AlphaFold, AlphaGo — these are not applications of existing foundation models. They were trained for specialized reasons. So that's maybe a bit different.

Lizka

Yeah, but there are variants or ChatGPT or something — which is just a product, right?

And I guess what you're saying is just a broader point: build interest in a type of application, get investors excited about it. Do an advance market commitment for some application. Like, “if you can get lots of people using your fact checker, we will pay some amount”.

Fin

Yeah, I have this general take that hype is really underrated as a driver of tech progress — where it's not necessarily tracking something that was previously true, but it just builds on itself. You get these bubbles. And so if you start a bubble for some kind of imagined application, that could be really high leverage.

Lizka

Seems totally plausible. And this could also look like: have a conference, or have a prize for something, or, like, get a few people to share that they're really excited about some application.

Fin

Literally just coming up with a name for some area of applications, and then using the name a lot on Twitter, and suddenly it just feels like a slightly more real thing. And then it's like, “Why is no one working on this?”

Lizka

Another thing here is building an early prototype to demonstrate that it is viable and exciting.

And this is also a lot of where... again, I worry a lot about adoption stuff, but I do think this can change fairly quickly, as people see that others are using even tools that they themselves wouldn't use, but then they get interested in using more advanced versions of those tools — that sort of thing.

Fin

Yeah, that seems right.

Is there anything you want to say about ways to speed up applications? Like you were talking about curating data sets and scoring outputs... if you have more concrete examples?

Lizka

Okay, say, for instance, you want to train a model that's good at forecasting, or you want to fine-tune it. Maybe that means you want it to be calibrated, maybe you also want it to be able to explain forecasts, or something like that. It is good to have, for instance, timestamped data, because you can then check performance — basically as you're training a model or fine-tuning a model, you can test its performance. And this could mean that it's good to have, like, datasets of timestamped data, because then you can be like, “Well, did this happen or not?” Especially if it's not super connected to everything else.

Fin

Forecasting is a great example because it's really hard to do retroactive tests on the existing foundation models, because they've totally already peeked at the answer in their training.
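A minimal sketch of why timestamps matter here, assuming a hypothetical `ResolvedQuestion` record and a known training cutoff date: only questions that resolved after the cutoff give a clean test, and the Brier score is one standard way to grade the resulting probability forecasts. All names below are illustrative, not from any existing tool.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ResolvedQuestion:
    text: str
    resolved_on: date  # when the outcome became known
    outcome: bool      # True if the event happened


def held_out(questions, training_cutoff):
    """Keep only questions that resolved strictly after the training cutoff,
    so the model cannot have seen the answer in its training data."""
    return [q for q in questions if q.resolved_on > training_cutoff]


def brier_score(probabilities, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes.
    Lower is better; always answering 0.5 scores 0.25."""
    if len(probabilities) != len(outcomes):
        raise ValueError("need one probability per outcome")
    if not probabilities:
        raise ValueError("need at least one forecast")
    total = sum((p - float(o)) ** 2 for p, o in zip(probabilities, outcomes))
    return total / len(probabilities)
```

In use, one would filter a dataset with `held_out(...)`, ask the model for a probability on each remaining question, and pass those probabilities with the recorded outcomes to `brier_score(...)`.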

Lizka

Yeah. And this also goes into... in many cases, you won't be directly collecting the data. Maybe you'll be setting up a system to collect it...

Fin

Maybe you're using AI to do it? But this would again be a really big lift. This forecasting example; you're trying to create this huge data-set of world events. It's labeled by time. It's not accidentally contaminated...

Lizka

Yeah, but you could imagine that your data set is weird and random. So it's not necessarily like “big world events” — I'm worried they’re just too tangled up in everything else. But I don't know, like developments in some tiny little city that are not tracked elsewhere... And so it's still a big lift, but it's not exactly a grandiose project.

Fin

I wonder if a similar example is: There are a bunch of human practitioners in different fields, where they have so much implicit, tacit knowledge about how to do their job well. And you can't really get this from reading the entire internet, and that's the reason to think that those skills will be bottlenecks. In some sense, there'll be pressures to collect the data, but you might want to look for, you know, practitioners and fields you think are really important with skills that you think are really important but currently hard to train? And then just collect a bunch of interviews, private... save their emails...

Lizka

Yeah — or somehow try and get better signals for quality. Especially in areas where it's hard to find “ground truth”, helping the training process instill good taste in this research direction, etc.

Fin

Yeah, RLHF, with taste.

Lizka

Or, you know, other grading systems. You can come up with various schemes. You could train specific graders. I guess scalable oversight stuff feels like it plays into this.

There's a bunch of possible paths here, and different ones will seem more useful in different scenarios, based on where the bottlenecks for a certain application will be.

Like, is it that you want to advance this kind of complicated, messy research field? Or is it that you want to make sure policymakers are using cybersecurity tools or whatever.

And also, which period in time are you targeting? Some of these methods would take a while to start paying off, so if you want results sooner, you shouldn't be using those. And maybe some of them rest on the assumption that the paradigms of AI development are similar to what they are now. So maybe don't use those when targeting later periods — or it's a reason to not.

In general, I would love for more people to experiment with this. Or more people who've worked on this kind of thing to be writing about this.

Applications that could be doable with today’s models (or generally very soon)

Fin

It strikes me that there are applications of AI that might not be useful for such risks, but would be really useful for at least some actors, which you could totally build from today's models that don't exist. And then that feels like evidence of “there's just more ideas than there is capacity to build them.” Or it's hard to get uptake, even when you can build them. Maybe some ideas just haven't occurred to the right people yet, or just aren't in the water enough.

A silly example would be, maybe I want to make a bet with you based on some events in the world, and we can't quite figure out the resolution criteria, but it's a “you know it if you see it” thing. Normally, we have to find a third party that we both trust to judge whether the criteria are met. But I think increasingly you can say, “we agree on the prompt, which is like, please read the news. Tell me if these criteria are met, yes or no.” I think that's actually just cool.

But then you can imagine something similar at the political level, where maybe I just want to credibly commit to retaliating against you if you do something I don't like. This is famously hard to do in the, like, anarchic context of international politics. But I could just say, “hey, look, I just wired up an LLM, and it's just reading the news the whole time. And I've asked it to output some code when it learns that you've done this thing I don't want you to do from the news.”
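The bet example can be sketched in a few lines, under loud assumptions: the `judge` argument stands in for whatever LLM call both parties agree to trust (no real API is used here), and `Bet`, `make_bet`, and `resolve` are hypothetical names. The one design idea shown is recording a hash of the agreed prompt at bet time, so that neither side can quietly reword it before resolution.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable


def _digest(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class Bet:
    prompt: str         # resolution prompt both parties agreed on
    prompt_digest: str  # SHA-256 of that prompt, recorded when the bet is made


def make_bet(prompt: str) -> Bet:
    return Bet(prompt=prompt, prompt_digest=_digest(prompt))


def resolve(bet: Bet, news: str, judge: Callable[[str, str], str]) -> bool:
    """Ask the agreed judge whether the criteria are met.

    `judge(prompt, news)` is a placeholder for a model call and must
    answer "yes" or "no"; anything else is treated as unresolved.
    """
    if _digest(bet.prompt) != bet.prompt_digest:
        raise ValueError("prompt differs from the version agreed at bet time")
    answer = judge(bet.prompt, news).strip().lower()
    if answer not in ("yes", "no"):
        raise ValueError(f"judge gave an unusable answer: {answer!r}")
    return answer == "yes"
```

This does nothing about the harder problems raised in the conversation, such as someone manipulating the news the judge reads.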

Lizka

Yeah, I mean this also gets into some scary issues with commitment tech — there's stuff about threats it can enable.[6]

(Part of my view on this front is: if you can make credible commitments to do something that is, say, illegal, I think increasingly we should be treating that as you having done the illegal thing. Anyway, that's sort of a tangent.)

And then with the specific examples you gave, my sense is that people are very hesitant to automate — especially in high-stakes/policy/international relations contexts, they are, in fact, very hesitant to automate — for good reason, in some cases.

Fin

Yeah, but who is most willing to be the risky thing, be reckless...

Lizka

That's right. Another issue is that I could start screwing with news coverage or something.

Fin

I agree; in the specific examples, it’s like, “well, this probably wouldn't exactly work.”

Lizka

But the “bet” case is really cool.

And I think it relates to some stuff about forecasting resolution, which maybe Ozzie Gooen has written about [EA · GW]. Getting a system to open up a bunch of forecasts and grade [EA(p) · GW(p)]/resolve them themselves. Because often you have to specify forecasts in really complicated ways, or they'll resolve based on issues with how they're specified. I think some of the value of forecasting actually comes in because you have to operationalize. But setting that aside, it's good to have forecasts, and it's good to do something like prediction markets, and it can be hard to be like “Well, does it, in fact, in retrospect, seem good that x development happened? How are we going to grade that?” And yeah, if you can get third-party or increasingly trustworthy systems to be grading that, that could be really cool.

And you could then explore which kinds of systems are good at forecasting in different cases.

Fin

Yeah, if you wanted to train a forecasting AI, then you can get it to just write millions of questions and get some other system to grade them on the resolution criteria...

Lizka

And you can, again, explore different epistemic processes for producing those forecasts and test which ones perform better in various environments, or how much this generalizes from one domain to another. It's cool.

Why not focus on slowing risk-increasing things down?

Fin

Yeah, we were talking just then about potentially scary uses of applications. Why not focus on identifying those uses and trying to slow them down, rather than speeding up the cool things? Especially given that in many cases, the cool things will happen anyway.

Lizka

There's absolutely a role for trying to slow down the scary applications — there are people who are working on that.

I do think that speeding up positive use cases (or positive applications)... I currently think it's more promising. This is for two main reasons.

One is that it is just more collaborative with the world, and across different world views. Missing out on medical research because we are worried about engineered viruses is just really sad. And like, there are trade-offs that are worth making there. But if we can accomplish the same thing either by speeding up a different technology or by slowing down a technology that also has positive use cases, I do think it's better to do the speeding up bit. It's not always possible, and again, I do think there's a role for the “slowing down” side.

And then, sort of because of that, it's also easier to speed things up, often. You’re going to face less pushback. You don't need everyone to agree — this also means you should be a bit more careful with not speeding up really scary stuff, because the Unilateralist’s Curse [? · GW] applies. But you don't have to have an international treaty agreeing that we will all speed up this one thing.

In climate change, there's been a bunch of discussion and some economic modeling of whether we should be taxing things that are polluting or incentivizing green, environmentally friendly energy tech. And my understanding is that both have a role and that there's been a lot of success in trying to speed up.

Fin

Maybe the same thing with factory farming, where one thing you could do is try to stop factory farming. Which I think, as far as that's possible, then that's good, but maybe just requires fairly intractable asks, trying to get a lot of agreement, not making friends (lots of enemies actually), in a way that undermines your success in the future. Versus really just pressing on making cheap, good-tasting alternatives to the animal products, and then that's the thing that you can just do yourself.

Lizka

That's right, yeah. I don't know how successful both sides of this have been relative to each other. It does seem like we need the alt protein side, or like we need stuff in this area to make progress. There's a sad element here, where there have also been bans on alt protein stuff. But I think it's very rare. It's sort of the example (the counterexample?) that proves the rule.

Fin

I mean, that kind of thing you just can't account for. It's just so crazy, yeah.

Lizka

And it feels much less likely in the AI applications space, generally.

A pre-mortem

Fin

So we're talking about applications for reducing existential risk.

I guess I like this idea of doing a forward-looking post-mortem. So you're imagining, you know, it's 10 years' time, whatever, you're looking back and you're thinking, “Why didn’t we get started on this project sooner?”

Curious what comes to mind; what could be the most important things that we're liable to drop the ball on?

Lizka

Yeah, I like the question.

There’s stuff where I can imagine we curse what we did in the past not just because we didn't do it, but because we screwed things up for ourselves in the future. But just focusing on things we will wish we had started...

To the extent that we want to be shaping what compute is going towards in later stages, and to the extent that we're worried about blockers to some useful AI applications from legal restrictions or just slow-moving human institutions — that part feels really worrying in terms of “we will wish we had started earlier,” because it takes so long to build up interest in something or change how an institution works, to make sure that it is not like actively getting in the way or moving at slow mo.

Fin

Like we spent all this time making this like shiny AI advisor or AI negotiator tool...

Lizka

And no one's using it, yeah.

In general, thinking about where we can get derailed earlier on seems useful. I imagine we look back and we're like, “Okay, well, we just fully missed the point where we lost a lot of the possible [value/paths] — the window closed earlier than we were thinking.” That could be stuff where it's useful to be under something like a veil of ignorance — where you want people to be making agreements before they know that they are for sure... when a broader set of people has bargaining power. Yeah, some form of mapping things out and thinking, “what are the earliest points where we can start losing a lot of our opportunities?”

Plus just thinking about, “What are things that compound a lot, or take a long time to start?”

(How) many more people should be working on this?

Fin

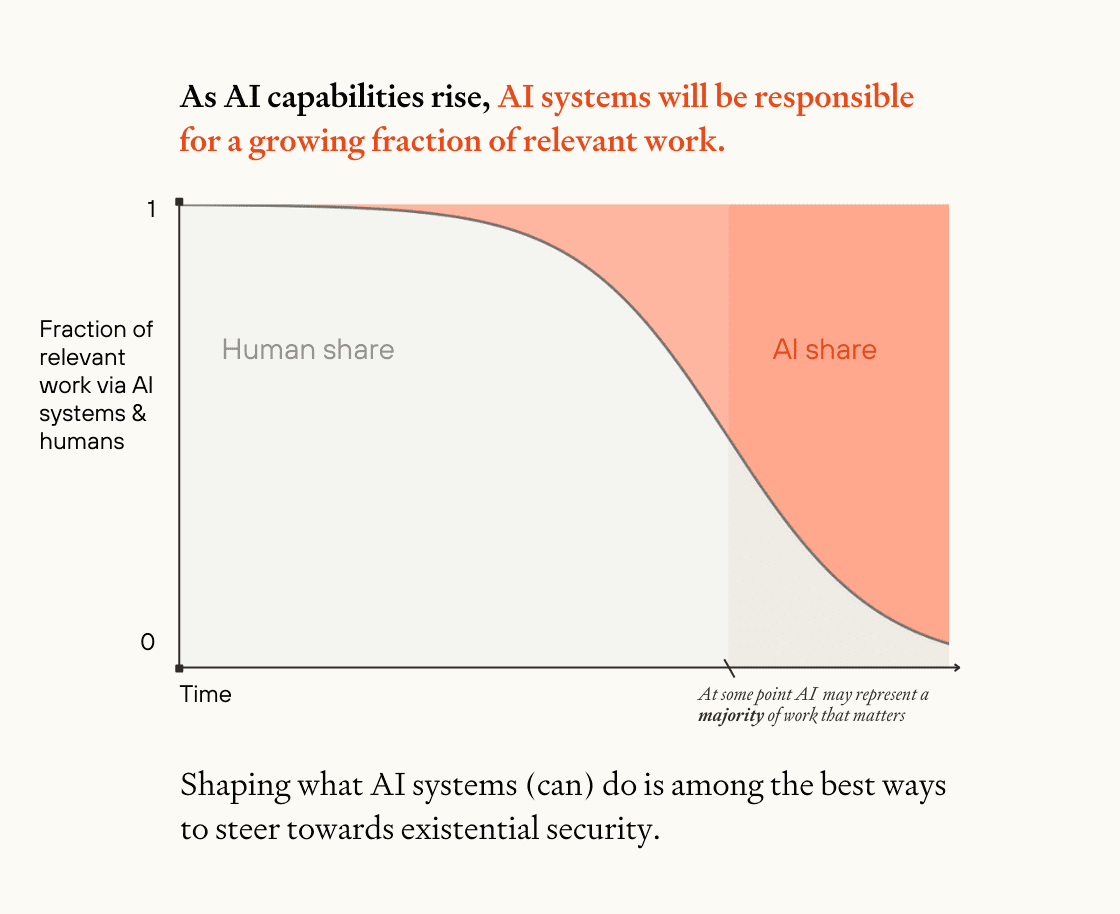

There's a point in the piece that we're talking about where you just put your cards on the table and say that many more people who are interested broadly in reducing existential risks should actually just be working on this stuff. Do you want to say more about that? First of all, why? And then how many more people?

Lizka

I do think a lot more people should be working on it. Stuff that's informing this view:

I think this space can absorb a lot of people [EA · GW] in a way that doesn't end up with lots of people just fighting for the same levers — as you might imagine happens with, say, policy advocacy or something like that. Again, there's just a lot to do; there's a lot of ways to productively contribute.

And I also think that a lot of people who are currently already working on some area should be devoting more of their time to trying to basically accelerate automation of that thing, or helping people work on complementary applications.

And then I generally think that, if you think AI capabilities will keep improving very quickly, and if you're worried about AI regulation not working as well as you might hope, then speeding up the positive/safety side is maybe one of your best bets — because there's just, like, not enough time to coordinate on some of the “making sure we don't do the bad stuff”.

We wrote that we think potentially in the near future, like 30% of people who are interested in existential risk would be working in this space. This number is sort of... we sketched out some reasons to try to estimate it, but it is just a loose number to give you a sense of where we're at.

Fin

I mean, the headline there is... I mean, I don't actually know how many people you think are currently working on this space. This is, like, you know, 100x as many people, at least, right?

Lizka

It sort of depends on how you define things. Certainly, it's like, 5x or something. So it is a lot more people.

We think that if AI capabilities keep improving, it’s reasonable to have 50% of people working on this or something — again, worried about other kinds of opportunities closing and this being just a very good lever.

I don't know, AI applications are, in fact, just going to be shaping the world. What are you gonna do? You know?

From my Twitter thread summary of the piece

Fin

It's hard to imagine AI applications becoming way less important in the future — unless things just go totally off the rails and there's just nothing you can do in the world. This is not like some policy window that might close soon; you can just always build things with AI. As long as there's AI around, you can choose what to do with it.

Lizka

I guess there are worlds where you can imagine that our opportunity here is very limited.

A question that I have thought about a bit, that I think lots of people have, is like, “Okay, well, AI capabilities seem really advanced right now, but it feels like maybe we're not feeling them being used in the real world...” I used to worry about this more; this would maybe be a reason to believe that, “Sure, AI capabilities will improve, but they will not be having a [transformative] impact for a while.”

But I actually think we are starting to see fairly significant impacts. There's some stats on how much people are using AI capabilities, and I expect that it might become much more obvious in the near future — we're on the cusp of something like an automation transformation.

But it is unclear. And you could imagine, if things take really long to diffuse or they really plateau... I don't expect this to happen, but this would be a reason to not direct as much...

Fin

I see; it could be like this crypto thing: “We're on the cusp of reconfiguring the world financial system to crypto.” Turns out it's just not as big as you thought.

Other upshots

Okay, so one thing I heard you mention was that, in terms of upshots for people, maybe we should just be thinking more, in general, about how AI or AI applications are going to automate/speed up work in one's own field — and then somehow get ready for that. I guess I want to hear a bit more about what that means.

Speeding up automation of your area, using AI tools more

Lizka

Basically, I think if you're working in an area that you think is important for existential risk — or whatever worldview, although for now I'm just inhabiting the existential risk point of view — then, yeah, there's various things that I think you could do to try and speed up more progress on this work via AI.

So one is just using AI applications as much and as soon as possible — even in situations where it's not really clear if it makes sense directly. That could be very useful because it trains you to do this, and maybe makes it easier to identify the most promising applications to further develop or the issues with current applications, and speeds up the process of improving those.

Also focusing on not marginal cases that e.g. make it easier for you to file your expense reports or something, but the stuff that you really, really want to be making progress on. Like, can you automate that as soon as possible? So that's using AI, that could be good.

Fin

It sounds like not much of that is specific to existential risk work. I would just guess that most people doing some knowledge work... if it's the case that AI will just keep getting way better and might start automating a bunch of stuff, most of these people should be thinking about, how can I get ready for this? And maybe I should start training myself to use these tools or whatever.

Lizka

I think that's right, basically. So, other things you could do... I guess you mentioned publishing more of your intermediate outputs. You could also try and organize your tasks, or delineate tasks that could get automated, or make it easier to automate them in the future, because this is a classically difficult thing to do. (If you're trying to delegate to a junior employee — it's hard.) Doing that kind of work to prepare for automating is quite useful, potentially.

Fin

I like that point; just make sure you're capturing loads of information about your work, even if it currently feels really unnecessary and redundant. All those emails you sent, don't delete them. All those random notes that you took and so on — it might turn out to be really useful to train on.

Lizka

Possibly meeting notes — that sort of thing too.

Preparing for a world with much more abundant cognition

Fin

You have this related point: we should expect the world to just be more full of cognition in general, of just AIs running around able to do different tasks, and that means we should be thinking about plans, strategies, projects, which currently might be way too cognitive-labor intensive, but might become feasible. Again, I don't know if you have things in mind by that?

Lizka

So I guess if the former point was like, “we should try and accelerate AI applications in various areas,” this one is like:

Okay, well, we've done a bunch of strategizing on how we can navigate various issues. But a lot of the time when we're strategizing, we're trying to pick the strategies that will use the people we have as effectively as possible — because we have very few people working on problems that ideally tons of smart people would be working on. We want to be labor-efficient, cognitive-labor-efficient.

But if, in fact, AI is coming, and AI is going to be boosting the amount of cognitive labor... that actually means that we do not have to have this constraint as much.

An analogy here is agricultural methods, which have become massively better. Before, using a ton of space to farm tomatoes or something would not be the best thing. You'd probably just do lots of wheat or something, or potatoes. The kinds of strategies that are viable for you change.

Now you can potentially do stuff like explore massive spaces of potential solutions to some problem and just have that as your strategy or as part of your process — which you would never spend your 20 people working on UVC lighting (or whatever it is that you think is important)... you would never do that with those people, because you have so few people.

And now you can explore tons of possibilities. You can do crazy customization. You can do real-time updating or monitoring of complex data sets to try and find literally the earliest sign of a new pandemic that you can.

Fin

I like this point.

And I think there’s this generally underrated trend about computing. Like in our computers, we use grotesquely large amounts of computing power, from the perspective of 30 or 40 years ago, for these completely inane tasks. And it requires a certain kind of imagination to see the rate at which this computation is getting cheaper, fitting more transistors on the chip, and then to look so far ahead and be like, “we're just going to be not thinking twice about burning the amount of computation that costs, like, $50,000 right now to, like, watch a YouTube video or whatever.”

And this kind of trend just won't stop. It will be possible to leverage resources, in maybe not very long, which feel, to us now, not just “oh, that's impractical,” but like, “that is just absolutely insane.”

Lizka

Yeah — from what I remember, Bill Gates, or someone early in the Microsoft days, had this thought (probably lots of people had this). Computers were huge, right? But they saw the trend, Moore's Law, it just keeps getting smaller, it keeps getting more practical. And they were like, “Okay, personal computers are gonna be a thing.”

Fin

Yeah, the idea of a personal computer is really not intuitive. Why would you not centralize this thing?

Lizka

Right. Maybe your department has a computer or something... Anyway, that kind of thing: preparing for this sort of shift could be quite big.

Also, there's a flip side; some of the work that we might be doing because we are not expecting this (or not really thinking through this) might not be that valuable.

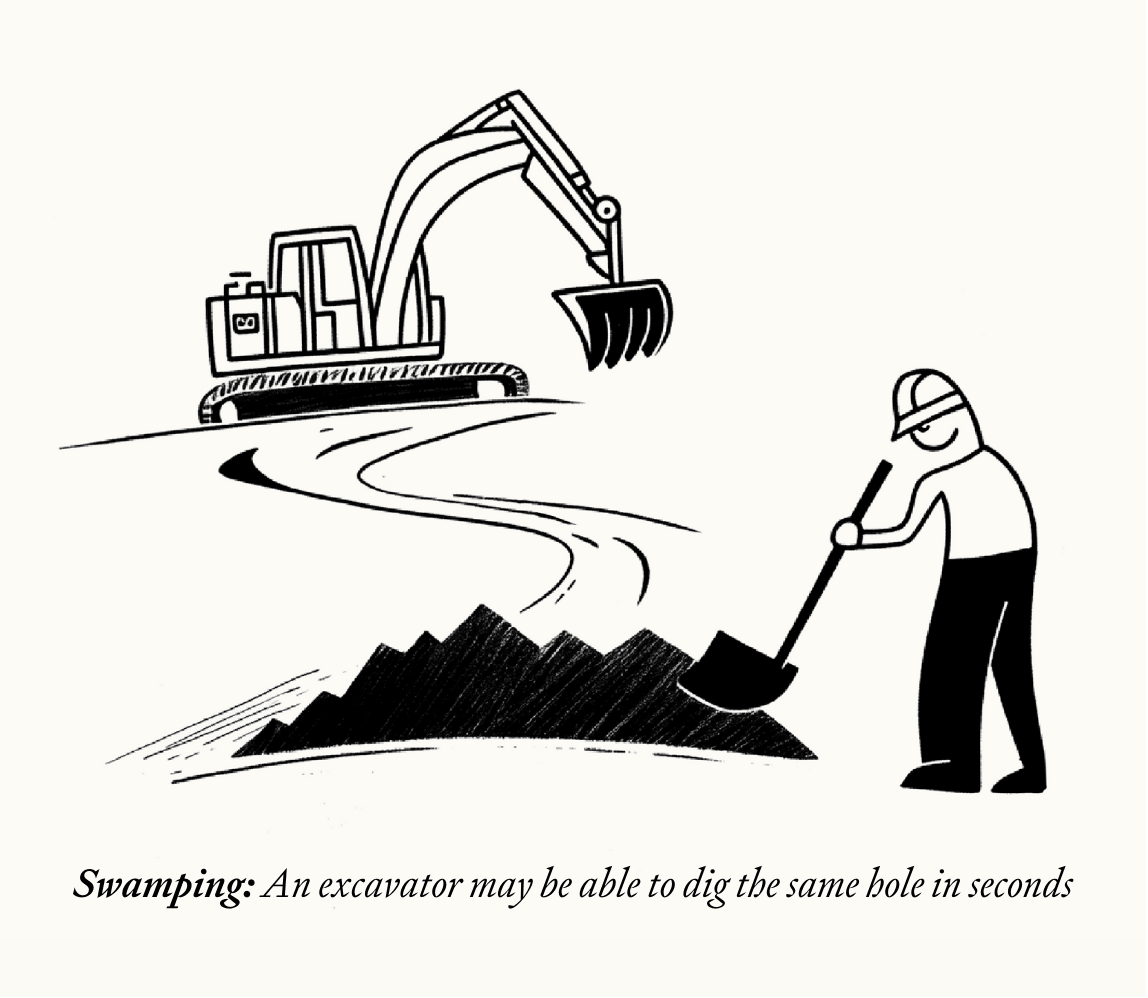

It's like digging a hole when there's a big excavator coming; you might get the hole a little bit faster, but realistically, maybe it's better for you to be building the next excavator.

From the appendices to AI Tools for Existential Security.

There's this phrase, “the jagged frontier of AI capabilities”, which I quite like. So there's different aspects that might get... where cognitive labor will be growing in uneven ways. Trying to figure out what the bottlenecks will be and what we should, in fact, be optimizing for... seeing if we can get around them by leveraging the stuff that is growing more available.

Fin

I like that. Any more examples that come to mind, of just strategies for accelerating these useful AI applications?

Getting ready to help with automation

Lizka

A lot of people will have the opportunity to be doing this kind of thing now, potentially. But also we could place ourselves to be able to do it better in the future, even if we can’t now.

I feel fairly limited on subject-matter expertise. I don't know if this is my main thing, but you could imagine that it's good for me to go and deeply understand the needs of some sort of problem and be like, “Okay, let's see if we can build applications for that problem.”

A more generic thing that might be useful is: go and build AI expertise of some kind. Connect with the startup world, if that's the most relevant. Or like, try and set up spaces for us to coordinate on this topic. Maybe don't burn bridges with the people with whom you might want to coordinate on this sort of thing.

Fin

Maybe a point here is, I at least currently feel like I'm not using AI tools as much as I should be. And compared to the general population, I'm unusually interested in AI stuff. So there might even just be a case of, “look, this is going to pay off right now...”

Lizka

That's right. I do think a lot of us are not using AI tools as much as we should, even from the point of view of how much progress you're going to make in the next week. But yeah, it could also be quite useful from the perspective of building your own capacity for helping. I'm not sure — I'm genuinely not sure if that's the thing that would help, really, in most cases (could be different).

Fin

That's a good point.

Applications we’re personally excited for

Let's start wrapping up. I am just interested (maybe just putting the really most important existential-risk-relevant applications aside): are there applications of AI that we don't currently have that you're really excited for?

Lizka

Oh my god, yes.

Honestly, this is a sort of a gripe. I really love getting feedback on my writing, but I don't like... I really wish I just had something that could comment on my Google Docs.

Fin

I tried to make this once, but the Google Docs API makes it very difficult.

Lizka

(!) I really want something that comments on my Google Docs.

Anyway, there’s lots of things. Another thing is: stuff that helps me have to exert less willpower — I guess this is sort of “credible commitments with yourself”.

For instance, being able to have a system that tracks what I do, nudges me when I'm not spending my time as I should... that can talk to me in a moment where I have extra willpower and be like, “do you want me to just lock your computer past a certain time? Or, you know, make sure you don't open...” I have a problem where I often have an explosion of tabs when I'm getting into a topic and spend way too much time reading all of the possible articles I can find. Or I go down Wikipedia rabbit holes, whatever.

I feel like, at this point, AI systems could be helping me manage that impulse.

Fin

Yeah. I really like this idea, this guardian angel thing, right?

Where it would be like your best friend is watching over your shoulder and they have your interest at heart. You told them about a bunch of stuff that you really want to avoid procrastinating in this way. And then just every half an hour it’s like “You sure you want to be doing this right now?”

Lizka

And they could even, you know, test what works for me. Maybe I don't like being nudged, but maybe I like being nudged at particular times — or it can be nice nudges or something, not judging.

Anyway, I think that'd be so cool.

Fin

Totally. And totally doable, right?

Key open questions?

So you wrote this piece with Owen on AI applications for existential risk. Are you left with questions? Are there things you still feel like you want to get more clarity on?

Lizka

No, I’ve got all the answers. :))

Fin

Okay, let me rephrase that. What are the most important questions?

Lizka

There's a bunch I want to gain clarity on, and I'm never going to get to all of it.

I would love to see more work on specific ideas for applications, and specifically discussion of priority levels: which ones are, in fact, super important to get early. Where do we have a really good opportunity? Again, especially from domain experts, or people who have experience, just trying to use this kind of thing, or to build it, for that matter, or to get adoption.

In a similar vein, figuring out what it looks like (and whether it's feasible) to try and accelerate these late-stage applications. Like research into moral philosophy, if that's the thing. I feel fairly confused about what this looks like when it is, in fact, tons of AI researchers. I would love to get more concrete.

Another thing is exploring the negative effects of some of these. Especially coordination stuff, I'm a bit worried about (that's in scoping applications).

Then there's methods where, honestly, maybe I'm most excited about people just trying, experimenting with it and... figuring out what it looks like for decision makers to be excited about an application, or like, what intermediate progress milestones you could aim for — and just doing that. Or also just talking to potential users and trying to figure out what will stop them from using (or what is currently stopping them from using) AI tools.

This “re-strategizing” point is very vague right now — getting more concrete there.

And yeah, the last thing is just paradigms; more on what the opportunities [in differential AI paradigm development] are, how feasible it is, etc.

Fin

This makes me think; there was this post with a title like, “concrete biosecurity interventions, some of which could be big,” from Andrew Snyder-Beattie. And it's just like a list of four or five pretty well fleshed-out descriptions of big projects that someone could just do. And obviously a bunch of thought has gone into figuring out what projects, in fact, would be most important, but that kind of thing is so useful; it’s so rare to just read a list of stuff you can really concretely imagine doing. I would love to see that also for AI applications. I guess you did some of it, but there’s still much more, right?

Lizka

Yeah, that's right.

Fin

Okay. Lizka Vaintrob, thank you very much.

Lizka

Thanks!

- ^

- ^

Besides Fin's skill as a host, this might be — at least in part — because I sound smarter to myself when on 2x speed, and because a bunch of my rambling was cut out in post-production. :))

- ^

I had initially just meant to make myself a quick transcript (to get an AI summary from it), and then I realized that I could very quickly (and during non-productive times) clean it up to get an MVP shareable version (and I also added some hyperlinks and images, because I’m me).

- ^

Which, to be fair to me, is many hours of content in the past couple of months.

- ^

This is one of the areas where my thinking has changed more in the past couple of months.

- ^

See e.g. section 5 in this paper: Open Problems in Cooperative AI
