The So-Called Heisenberg Uncertainty Principle

post by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2008-04-23T06:36:26.000Z · LW · GW · Legacy · 23 comments

Previously in series: Decoherence [? · GW]

As touched upon earlier [? · GW], Heisenberg's "Uncertainty Principle" is horribly misnamed.

Amplitude distributions in configuration space evolve over time. When you specify an amplitude distribution over joint positions, you are also necessarily specifying how the distribution will evolve. If there are blobs of position, you know where the blobs are going.

In classical physics, where a particle is, is a separate fact from how fast it is going. In quantum physics this is not true. If you perfectly know the amplitude distribution on position, you necessarily know the evolution of any blobs of position over time.

So there is a theorem which should have been called the Heisenberg Certainty Principle, or the Heisenberg Necessary Determination Principle; but what does this theorem actually say?

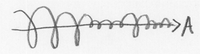

At left is an image I previously used to illustrate a possible amplitude distribution over positions of a 1-dimensional particle.

Suppose that, instead, the amplitude distribution is actually a perfect helix. (I.e., the amplitude at each point has a constant modulus, but the complex phase changes linearly with the position.) And neglect the effect of potential energy on the system evolution; i.e., this is a particle out in intergalactic space, so it's not near any gravity wells or charged particles.

If you started with an amplitude distribution that looked like a perfect spiral helix, the laws of quantum evolution would make the helix seem to rotate / move forward at a constant rate. Like a corkscrew turning at a constant rate.

This is what a physicist views as a single particular momentum.

And you'll note that a "single particular momentum" corresponds to an amplitude distribution that is fully spread out; there are no bulges in any particular position.
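To make the helix concrete, here is a minimal numerical sketch. The function name `helix`, the pitch `k = 2.0`, and the grid are illustrative choices of mine, not anything from the physics itself. It just checks the two properties named above: constant modulus, and phase changing linearly with position.

```python
import numpy as np

def helix(x, k, amplitude=1.0):
    """Amplitude A * exp(i*k*x): constant modulus, phase linear in x.
    The name and signature are hypothetical, chosen for this sketch."""
    return amplitude * np.exp(1j * k * x)

x = np.linspace(0.0, 10.0, 1001)   # a stretch of 1-dimensional position
psi = helix(x, k=2.0)

modulus = np.abs(psi)                       # "diameter" of the helix at each point
phase = np.unwrap(np.angle(psi))            # complex phase, with 2*pi jumps removed
phase_slope = np.diff(phase) / np.diff(x)   # how fast the phase turns per unit length

print("modulus range:", modulus.min(), modulus.max())
print("phase slope range:", phase_slope.min(), phase_slope.max())
```

The modulus is the same everywhere, so the squared modulus has no bulge at any position, and the phase slope is the pitch `k`, which is what gets read as the momentum.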

Let me emphasize that I have not just described a real situation you could find a particle in.

The physicist's notion of "a single particular momentum" is a mathematical tool for analyzing quantum amplitude distributions.

The evolution of the amplitude distribution involves things like taking the second derivative in space and multiplying by i to get (one component of) the first derivative in time. Which turns out to give rise to a wave [? · GW] mechanics—blobs that can propagate themselves across space, over time.
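Written out for a free particle, as a sketch: the symbols $\hbar$ (the reduced Planck constant) and $m$ (the particle's mass) are ones the text above does not name, and the potential-energy term is dropped, as in the intergalactic-space example.

$$
\frac{\partial \psi}{\partial t} = \frac{i\hbar}{2m}\,\frac{\partial^2 \psi}{\partial x^2}
$$

Plugging in a helix $\psi(x,0) = A\,e^{ikx}$ gives $\partial^2\psi/\partial x^2 = -k^2\psi$, so the solution is

$$
\psi(x,t) = A\,e^{i(kx - \omega t)}, \qquad \omega = \frac{\hbar k^2}{2m}.
$$

The modulus stays $|A|$ everywhere while the phase turns at the constant rate $\omega$, which is the corkscrew turning at a constant rate.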

One of the basic tools in wave mechanics is taking apart complicated waves into a sum of simpler waves.

If you've got a wave that bulges in particular places, and thus changes in pitch and diameter, then you can take apart that ugly wave into a sum of prettier waves.

A sum of simpler waves whose individual behavior is easy to calculate; and then you just add those behaviors back together again.

A sum of nice neat waves, like, say, those perfect spiral helices corresponding to precise momenta.

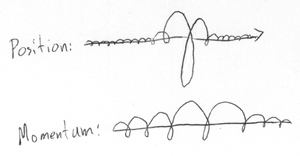

A physicist can, for mathematical convenience, decompose a position distribution into an integral over (infinitely many) helices of different pitches, phases, and diameters.

Which integral looks like assigning a complex number to each possible pitch of the helix. And each pitch of the helix corresponds to a different momentum. So you get a complex distribution over momentum-space.
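A discrete version of this decomposition can be sketched with NumPy's FFT. This is an illustration under assumptions of my own: a finite periodic grid stands in for the infinite line, a sum stands in for the integral, and the lumpy test distribution is made up for the example.

```python
import numpy as np

n = 512
x = np.linspace(-20.0, 20.0, n, endpoint=False)
dx = x[1] - x[0]

# A made-up lumpy amplitude distribution: two bumps with different phases.
psi = np.exp(-(x + 3.0) ** 2) + 0.5j * np.exp(-(x - 4.0) ** 2 / 0.5)

# One complex number per helix pitch.
coeffs = np.fft.fft(psi)
k = 2 * np.pi * np.fft.fftfreq(n, d=dx)   # pitch (wavenumber) of each helix

# Rebuild the distribution by explicitly adding up the helices.
rebuilt = np.zeros(n, dtype=complex)
for c, kj in zip(coeffs, k):
    rebuilt += c * np.exp(1j * kj * (x - x[0])) / n

print("max reconstruction error:", np.max(np.abs(rebuilt - psi)))
```

The modulus of each entry of `coeffs` plays the role of that helix's diameter and its complex argument the helix's phase; `np.fft.ifft(coeffs)` does the same sum in one call.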

It happens to be a fact that, when the position distribution is more concentrated—when the position distribution bulges more sharply—the integral over momentum-helices gets more widely distributed.

Which has the physical consequence, that anything which is very sharply in one place, tends to soon spread itself out. Narrow bulges don't last.

Alternatively, you might find it convenient to think, "Hm, a narrow bulge has sharp changes in its second derivative, and I know the evolution of the amplitude distribution depends on the second derivative, so I can sorta imagine how a narrow bulge might tend to propagate off in all directions."

Technically speaking, the distribution over momenta is the Fourier transform of the distribution over positions. And it so happens that, to go back from momenta to positions, you just do another Fourier transform (strictly, the inverse transform, which has the same form with the sign of the exponent flipped). So there's a precisely symmetrical argument which says that anything moving at a very definite speed, has to occupy a very spread-out place. Which goes back to what was shown before, about a perfect helix having a "definite momentum" (corkscrewing at a constant speed) but being equally distributed over all positions.
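The trade-off can be checked numerically. The sketch below assumes Gaussian bulges, measures width as the standard deviation of the squared modulus, and works in wavenumber rather than momentum (momentum would be the wavenumber times $\hbar$); the function name `widths` and the grid parameters are hypothetical choices for illustration.

```python
import numpy as np

def widths(sigma, n=4096, span=200.0):
    """Return (position spread, wavenumber spread) for a Gaussian bulge
    whose squared modulus has standard deviation sigma."""
    x = np.linspace(-span / 2, span / 2, n, endpoint=False)
    dx = x[1] - x[0]
    psi = np.exp(-x ** 2 / (4 * sigma ** 2))

    p_x = np.abs(psi) ** 2
    p_x /= p_x.sum()                          # normalise to a discrete distribution

    k = 2 * np.pi * np.fft.fftfreq(n, d=dx)
    p_k = np.abs(np.fft.fft(psi)) ** 2
    p_k /= p_k.sum()

    std_x = np.sqrt(np.sum(p_x * x ** 2) - np.sum(p_x * x) ** 2)
    std_k = np.sqrt(np.sum(p_k * k ** 2) - np.sum(p_k * k) ** 2)
    return std_x, std_k

results = [widths(s) for s in (0.5, 1.0, 2.0, 4.0)]
for std_x, std_k in results:
    print(f"std_x={std_x:.3f}  std_k={std_k:.3f}  product={std_x * std_k:.3f}")
```

Sharpening the position bulge widens the spread over helix pitches, and vice versa. Gaussians are the special case where the product of the two spreads sits right at its minimum; other shapes give a larger product.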

That's Heisenberg's Necessary Relation Between Position Distribution And Position Evolution Which Prevents The Position Distribution And The Momentum Viewpoint From Both Being Sharply Concentrated At The Same Time Principle in a nutshell.

So now let's talk about some of the assumptions, issues, and common misinterpretations of Heisenberg's Misnamed Principle.

The effect of observation on the observed

Here's what actually happens when you "observe a particle's position":

Decoherence [? · GW], as discussed yesterday, can take apart a formerly coherent amplitude distribution into noninteracting blobs.

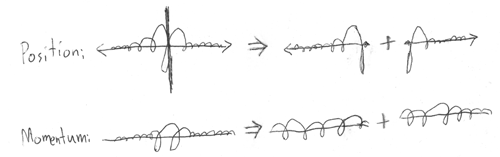

Let's say you have a particle X with a fairly definite position and fairly definite momentum, the starting stage shown at left above. And then X comes into the neighborhood of another particle S, or set of particles S, where S is highly sensitive to X's exact location—in particular, whether X's position is on the left or right of the black line in the middle. For example, S might be poised at the top of a knife-edge, and X could tip it off to the left or to the right.

The result is to decohere X's position distribution into two noninteracting blobs, an X-to-the-left blob and an X-to-the-right blob. Well, now the position distribution within each blob has become sharper. (Remember: Decoherence is a process of increasing quantum entanglement that masquerades as increasing quantum independence.)

So the Fourier transform of the more definite position distribution within each blob, corresponds to a more spread-out distribution over momentum-helices.

Running the particle X past a sensitive system S, has decohered X's position distribution into two noninteracting blobs. Over time, each blob spreads itself out again, by Heisenberg's Sharper Bulges Have Broader Fourier Transforms Principle.

All this gives rise to very real, very observable effects.

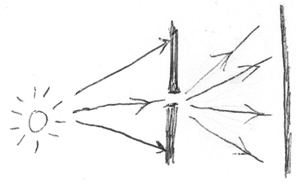

In the system shown at right, there is a light source, a screen blocking the light source, and a single slit in the screen.

Ordinarily, light seems to go in straight lines (for less straightforward reasons [? · GW]). But in this case, the screen blocking the light source decoheres the photon's amplitude. Most of the Feynman paths hit the screen.

The paths that don't hit the screen, are concentrated into a very narrow range. All positions except a very narrow range have decohered away from the blob of possibilities for "the photon goes through the slit", so, within this blob, the position-amplitude is concentrated very narrowly, and the spread of momenta is very large.

Way up at the level of human experimenters, we see that when photons strike the second screen, they strike over a broad range—they don't just travel in a straight line from the light source.
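As a rough numerical sketch of the slit: model the amplitude just past the screen as uniform inside the slit and zero outside, and take the spread over sideways wavenumbers from its Fourier transform. Both of those are simplifying assumptions of mine (the standard far-field idealisation), and `momentum_fwhm` is a hypothetical helper name. Width is measured here as full width at half maximum, since the standard deviation of this particular pattern does not converge.

```python
import numpy as np

def momentum_fwhm(slit_width, n=16384, span=400.0):
    """Full width at half maximum of |FFT|^2 for a uniform slit of the given width."""
    x = np.linspace(-span / 2, span / 2, n, endpoint=False)
    dx = x[1] - x[0]
    aperture = (np.abs(x) < slit_width / 2).astype(float)   # 1 inside the slit, 0 outside
    intensity = np.abs(np.fft.fft(aperture)) ** 2
    dk = 2 * np.pi / (n * dx)                                # spacing of the wavenumber grid
    # Only the central peak exceeds half the maximum, so counting samples gives its width.
    return np.count_nonzero(intensity >= 0.5 * intensity.max()) * dk

slit_widths = [1.0, 2.0, 4.0]
fwhms = [momentum_fwhm(a) for a in slit_widths]
for a, w in zip(slit_widths, fwhms):
    print(f"slit width {a:.1f} -> wavenumber spread (FWHM) {w:.3f}")
```

Halving the slit width roughly doubles the sideways spread, which is the broad range of strike points seen on the second screen.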

Wikipedia, and at least some physics textbooks, claim that it is misleading to ascribe Heisenberg effects to an "observer effect", that is, perturbing interactions between the measuring apparatus and the measured system:

"Sometimes it is a failure to measure the particle that produces the disturbance. For example, if a perfect photographic film contains a small hole, and an incident photon is not observed, then its momentum becomes uncertain by a large amount. By not observing the photon, we discover that it went through the hole."

However, the most technical treatment I've actually read was by Feynman, and Feynman seemed to be saying that, whenever measuring the position of a particle increases the spread of its momentum, the measuring apparatus must be delivering enough of a "kick" to the particle to account for the change.

In other words, Feynman seemed to assert that the decoherence perspective actually was dual to the observer-effect perspective—that an interaction which produced decoherence would always be able to physically account for any resulting perturbation of the particle.

Not grokking the math, I'm inclined to believe the Feynman version. It sounds pretty, and physics has a known tendency to be pretty.

The alleged effect of conscious knowledge on particles

One thing that the Heisenberg Student Confusion Principle DEFINITELY ABSOLUTELY POSITIVELY DOES NOT SAY is that KNOWING ABOUT THE PARTICLE or CONSCIOUSLY SEEING IT will MYSTERIOUSLY MAKE IT BEHAVE DIFFERENTLY because THE UNIVERSE CARES WHAT YOU THINK.

Decoherence works exactly the same way whether a system is decohered by a human brain or by a rock. Yes, physicists tend to construct very sensitive instruments that slice apart amplitude distributions into tiny little pieces, whereas a rock isn't that sensitive. That's why your camera uses photographic film instead of mossy leaves, and why replacing your eyeballs with grapes will not improve your vision. But any sufficiently sensitive physical system will produce decoherence, where "sensitive" means "developing to widely different final states depending on the interaction", where "widely different" means "the blobs of amplitude don't interact".

Does this description say anything about beliefs? No, just amplitude distributions. When you jump up to a higher level and talk about cognition, you realize that forming accurate beliefs [? · GW] requires [? · GW] sensors [? · GW]. But the decohering power of sensitive interactions can be analyzed on a purely physical level.

There is a legitimate "observer effect", and it is this: Brains that see, and pebbles that are seen, are part of a unified physics; they are both built out of atoms. To gain new empirical knowledge about a thingy, the particles in you have to interact with the particles in the thingy. It so happens that, in our universe, the laws of physics are pretty symmetrical about how particle interactions work—conservation of momentum and so on: if you pull on something, it pulls on you.

So you can't, in fact, observe a rock without affecting it, because to observe something is to depend on it—to let it affect you, and shape your beliefs. And, in our universe's laws of physics, any interaction in which the rock affects your brain, tends to have consequences for the rock as well.

Even if you're looking at light that left a distant star 500 years ago, then 500 years ago, emitting the light affected the star.

That's how the observer effect works. It works because everything is particles, and all the particles obey the same unified mathematically simple physics.

It does not mean the physical interactions we happen to call "observations" have a basic, fundamental, privileged effect on reality.

To suppose that physics contains a basic account of "observation" is like supposing that physics contains a basic account of being Republican. It projects [? · GW] a complex, intricate, high-order biological cognition onto fundamental physics. It sounds like a simple theory to humans, but it's not simple. [? · GW]

Linearity

One of the foundational assumptions physicists used to figure out quantum theory, is that time evolution is linear. If you've got an amplitude distribution X1 that evolves into X2, and an amplitude distribution Y1 that evolves into Y2, then the amplitude distribution (X1 + Y1) should evolve into (X2 + Y2).

(To "add two distributions" means that we just add the complex amplitudes at every point. Very simple.)

Physicists assume you can take apart an amplitude distribution into a sum of nicely behaved individual waves, add up the time evolution of those individual waves, and get back the actual correct future of the total amplitude distribution.

Linearity is why we can take apart a bulging blob of position-amplitude into perfect momentum-helices, without the whole model degenerating into complete nonsense.
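Here is a minimal sketch of that recipe for a free particle: take the distribution apart into helices with an FFT, rotate each helix by its own phase, and add them back up. The units with $\hbar = m = 1$, the function name `evolve_free`, and the two test blobs are assumptions made for the example.

```python
import numpy as np

def evolve_free(psi, dx, t):
    """Free-particle evolution on a periodic grid, in units where hbar = m = 1.
    Each helix of wavenumber k just picks up the phase exp(-i * k**2 * t / 2)."""
    k = 2 * np.pi * np.fft.fftfreq(psi.size, d=dx)
    return np.fft.ifft(np.exp(-0.5j * k ** 2 * t) * np.fft.fft(psi))

n = 1024
x = np.linspace(-40.0, 40.0, n, endpoint=False)
dx = x[1] - x[0]

# Two made-up blobs, moving in opposite directions.
X1 = np.exp(-(x + 5.0) ** 2) * np.exp(2j * x)
Y1 = np.exp(-(x - 5.0) ** 2 / 4.0) * np.exp(-1j * x)

t = 1.5
X2 = evolve_free(X1, dx, t)
Y2 = evolve_free(Y1, dx, t)
sum_then_evolve = evolve_free(X1 + Y1, dx, t)

print("max |evolve(X1 + Y1) - (X2 + Y2)|:", np.max(np.abs(sum_then_evolve - (X2 + Y2))))
```

Evolving the sum and summing the evolutions agree up to floating-point rounding, which is the (X1 + Y1) to (X2 + Y2) property in miniature.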

The linear evolution of amplitude distributions is a theorem in the Standard Model of physics. But physicists didn't just stumble over the linearity principle; it was used to invent the hypotheses, back when quantum physics was being figured out.

I talked earlier about taking the second derivative of position; well, taking the derivative of a differentiable distribution is a linear operator. F'(x) + G'(x) = (F + G)'(x). Likewise, integrating the sum of two integrable distributions gets you the sum of the integrals. So the amplitude distribution evolving in a way that depends on the second derivative—or the equivalent view in terms of integrating over Feynman paths—doesn't mess with linearity.

Any "non-linear system" you've ever heard of is linear on a quantum level. Only the high-level simplifications that we humans use to model systems are nonlinear. (In the same way, the lightspeed limit requires physics to be local, but if you're thinking about the Web on a very high level, it looks like any webpage can link to any other webpage, even if they're not neighbors.)

Given that quantum physics is strictly linear, you may wonder how the hell you can build any possible physical instrument that detects a ratio of squared moduli [? · GW] of amplitudes, since the squared modulus operator is not linear [? · GW]: the squared modulus of the sum is not the sum of the squared moduli of the parts.

This is a very good question.

We'll get to it shortly.
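For concreteness, the non-linearity of the squared modulus is just a cross term. With $a$ and $b$ standing for any two complex amplitudes (labels chosen here for illustration):

$$
|a + b|^2 = |a|^2 + |b|^2 + 2\,\mathrm{Re}(a^{*} b)
$$

For example, $a = 1$ and $b = -1$ give $|a + b|^2 = 0$, while $|a|^2 + |b|^2 = 2$.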

Meanwhile, physicists, in their daily mathematical practice, assume that quantum physics is linear. It's one of those important little assumptions, like CPT invariance.

Part of The Quantum Physics Sequence [? · GW]

Next post: "Which Basis Is More Fundamental? [? · GW]"

Previous post: "Decoherence [? · GW]"

23 comments

Comments sorted by oldest first, as this post is from before comment nesting was available (around 2009-02-27).

comment by Enginerd · 2008-04-23T13:03:46.000Z · LW(p) · GW(p)

I'd be a bit careful where you put bold text. When I skimmed this entry the first time, I got a very different impression of your thoughts than actually were there. It's always good to hear somebody give the correct, non-consciousness-centric view of QM when talking for the public. As opposed to say, Scott Adams, who interprets the double-slit experiment as the future affecting the past.

Linearity of QM can be proven? I didn't know that. I don't suppose you'd be able to sketch out the proof, or provide a link to one?

-Enginerd

comment by Nick_Tarleton · 2008-04-23T15:58:33.000Z · LW(p) · GW(p)

This Java applet demonstrates the relationship between position and momentum really nicely. (He has a lot of others too.)

comment by Silas · 2008-04-23T15:58:34.000Z · LW(p) · GW(p)

Hm, I thought you were going to have a mind-blowing, semi-novel explanation of Heisenberg. Your explanation turned out to be the same that Roger Penrose gives in The Emperor's New Mind. Am I wrong in this characterization?

Btw, I still don't understand the implications of amplitude distributions on perception. If position is lumped and momentum is spread out, what are the implications for my perception? Do I notice the perceived object as having a random momentum? Does it concentrate when my brain forms mutual information with it?

And do physicists actively debate the relationship between the observer effect and Heisenberg, even while the official explanation is that they are separate?

comment by celeriac · 2008-04-23T16:58:25.000Z · LW(p) · GW(p)

As a general comment, you've written a number of very useful sequences on various topics. Would it be possible to go through and add forward links in addition to the usual prerequisite links?

I'm thinking of the case where you wind up saying to someone, "here is the last page in a very useful tutorial, start by alt-clicking the back link at the top until you get to the beginning of the sequence and then read your tabs in reverse order.... no, better to go to the archives for April and read up in reverse order from the bottom of the page skipping over what isn't part of the series... no, maybe use the Google search on 'quantum,' if that didn't skip some posts and get others and disregard order... well, you know, it's a friggin' blog, they're impossible to read anyway..."

comment by DaveInNYC2 · 2008-04-23T18:27:23.000Z · LW(p) · GW(p)

I'm going back through the posts, and I have a question after reading The Quantum Arena. There you state that if you know the entire amplitude distribution, you can predict what subsequent distributions will be. Am I correct in assuming that this is independent of (observations, "wave function collapses", or whatever it is when we say that we find a particle at a certain point)?

For example, let's say I have a particle that is "probably" going to go in a straight line from x to y, i.e. at each point in time there is a huge bulge in the amplitude distribution at the appropriate point on the line from x to y. If I observe the particle on the opposite side of the moon at some point (i.e. where the amplitude is non-zero, but still tiny), does the particle still have the same probability as before of "jumping" back onto the line from x to y?

As I write this, I am starting to suspect that I am asking a Wrong Question. Crap.

comment by DonGeddis · 2008-04-23T21:20:54.000Z · LW(p) · GW(p)

DaveInNYC: Keep in mind that Eliezer still has yet to get to "wave function collapse" or "find[ing] a particle at a certain point". That's the "magic wand" that "detects a ratio of squared moduli of amplitudes".

He hasn't given you that tool yet, so if you're just following his posts so far, you can't even ask the question that you want to ask. So far, there is no way to "observe a particle" in any particular place. There are only (spread-out) amplitude modulations.

That may not be satisfying, but I suspect the only answer (here) at this point is, "wait until a later post".

comment by Constant2 · 2008-04-23T22:22:40.000Z · LW(p) · GW(p)

Am I correct in assuming that this is independent of (observations, "wave function collapses", or whatever it is when we say that we find a particle at a certain point)?

Wavefunction collapses are unpredictable. The claim in The Quantum Arena, if your summary is right, is that subsequent amplitude distributions are predictable if you know the entire current amplitude distribution. The amplitude distribution is the wavefunction. Since wavefunction collapses are unpredictable but the wavefunction's progression is claimed to be predictable, wavefunction collapses are logically barred from existing if the claim is true. From this follows the no-collapse "interpretation" of quantum mechanics, a.k.a. the many-worlds interpretation. Eliezer's claim, then, is expressing the many-worlds interpretation of QM. The seeming collapse of the wavefunction is only apparent. The wavefunction has not, in fact, collapsed. In particular, when you find a particle at a certain point, then the objective reality is not that the particle is at that point and not at any other point. The you which sees the particle at that one point is only seeing a small part of the bigger objective picture.

If I observe the particle on the opposite side of the moon at some point (i.e. where the amplitude is non-zero, but still tiny), does the particle still have the same probability as before of "jumping" back onto the line from x to y?

Yes and no. Objectively, it still has the same probability of being on the line from x to y. But the you who observes the particle on the opposite side of the moon will from that point forward only see a small part of the bigger objective picture, and what that you will see will (almost certainly) not be the particle jumping back onto the line from x to y. So the subjective probability relative to that you is not the same as the objective probability.

Now, let me correct my language. Neither of these probabilities is objective. What I called "objective" was in fact the subjective probability relative to the you who witnessed the particle starting out at x but did not (yet) witness the particle on the other side of the moon.

comment by athmwiji · 2008-04-24T02:01:42.000Z · LW(p) · GW(p)

"To suppose that physics contains a basic account of "observation" is like supposing that physics contains a basic account of being Republican. It projects a complex, intricate, high-order biological cognition onto fundamental physics. It sounds like a simple theory to humans, but it's not simple."

This seems to be arguing in favor of epiphenomenalism, but you just spent pages and pages arguing against it. What gives?

I don't think it is reasonable to expect to find anything about phenomena in quantum physics, but that does not mean it is not hiding somewhere at a lower level. QM gives us the tools to mimic the computations performed at a very low level of physics, but it says nothing about how those computations are actually performed in the territory, and I suspect phenomena is hiding there.

The fact that I can actually talk about the redness of red and my own thoughts is evidence of this.

I really like these explanations of QM

comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2008-04-24T03:10:55.000Z · LW(p) · GW(p)

Enginerd: Linearity of QM can be proven? I didn't know that. I don't suppose you'd be able to sketch out the proof, or provide a link to one?

When I say it's a theorem, I mean that when you look at all known laws of quantum physics, they are linear; so it's a theorem of current physical models that Q(A) + Q(B) = Q(A + B). And furthermore, this was suspected early on, and used to help deduce the hypotheses and form of the Standard Model.

I don't mean there's a simple a priori proof, or a simple proof from experiment like there is for the identity of particles - if such a thing exists, I do not know it.

Silas: Hm, I thought you were going to have a mind-blowing, semi-novel explanation of Heisenberg. Your explanation turned out to be the same that Roger Penrose gives in The Emperor's New Mind. Am I wrong in this characterization?

I doubt it. I mean, physics is physics is reality. You don't get extra points for novelty. Coming up with a new way of explaining arithmetic is fine, but that which is to be explained, should still have 2 + 2 equaling 4.

↑ comment by Luke_A_Somers · 2011-10-21T15:32:01.992Z · LW(p) · GW(p)

The simplest proof I've seen is actually of my own devising, though I'm pretty sure others have come up with it before. Suppose the general framework is right, but there's some superlinear term somewhere. Essentially, it changes things if any one component gets big - like an absolute amplitude of 1/2 or something. And these components can be any of the usual observable variables we'd have - distances between particles, momenta, etc.

Based just on the general framework, the individual components of the wavefunction get very small very quickly. The system decoheres into so many tiny blobs in configuration space that none of them are dense enough for those superlinear terms to be a big deal. And I don't mean we could prepare a highly concentrated state either. Right there at the Big Bang, the superlinear components could be doing something important, but a few Planck times later they'd be a minor deal, and a millisecond later there's no conceivable experimental apparatus that one could build that would ever detect their effect.

If there are nonlinearities that do have effects to the present day, they have to have been shepherding the wavefunction in a highly specialized way to prevent this sort of thing from happening. Mangled worlds might do it, but I have low confidence in it.

↑ comment by endoself · 2011-11-26T01:08:57.629Z · LW(p) · GW(p)

Cool, I've thought of that too. The problem with this approach is that it's not obvious how to apply the Born rule or whether it must be revised. Apparently Weinberg wrote a paper on something similar, but I've never been able to find it.

↑ comment by Luke_A_Somers · 2012-02-13T02:00:03.983Z · LW(p) · GW(p)

Hmm. I see what you mean - you can end up with a sort of sleeping beauty paradox, where some branches remain more concentrated than others, and over time their 'probabilities' grow or shrink retroactively.

I don't see that being a fundamental issue of dynamics, but rather of our ability to interpret it. If the Born Rule is an approximation that applies except at the dawn of time, I'm okay with that.

↑ comment by endoself · 2012-02-13T02:36:34.620Z · LW(p) · GW(p)

I don't see that being a fundamental issue of dynamics, but rather of our ability to interpret it. If the Born Rule is an approximation that applies except at the dawn of time, I'm okay with that.

Yeah, that's what I meant by 'revised'. I don't even know if it's possible to find an approximation that behaves sanely. Last time I thought about this, I thought we'd want to avoid the sort of situation you mentioned, but I've been thinking about the anthropic trilemma post and now I'm leaning toward the idea that we shouldn't exclude it a priori.

comment by AnthonyC · 2011-04-06T16:52:17.217Z · LW(p) · GW(p)

Say I start with an amplitude distribution- essentially an n (potentially infinite) dimensional configuration space with a complex numbered value at each point.

This is essentially an infinite set of (n+2)tuplets. If I knew the dimensionality of the configuration space, I could also determine the cardinality of the set of n+2-tuplets for a particular distribution, as well as that of the set of all possible such sets of n+2-tuplets. (Unless, of course, one of these collections turns out to be pathologically large, hence not a set, but I don't know why that should be).

Then it would seem that I can represent a quantum amplitude distribution as a single point moving around in a configuration space- albeit a much, much larger one.

comment by brilee · 2012-04-14T15:15:54.910Z · LW(p) · GW(p)

The Uncertainty Principle is, in fact, an uncertainty principle.

The uncertainty principle is not a fact about quantum mechanics. The uncertainty principle is a fact about waves in general, and it says that you cannot deliver both a precise-time and precise-frequency wavelet. This has implications in, for example, radio signals and electrical signals.

In QM, we assume matter behaves like waves. That means that matter now does all the same things waves do, including, as it turns out, an uncertainty principle. Uncertainty has simply been hyped up by philosophers who couldn't add 2 and 2.

↑ comment by Oscar_Cunningham · 2012-04-14T18:51:04.677Z · LW(p) · GW(p)

Eliezer is just decrying the use of the word "uncertainty". It's not that we don't know the frequency of the wave packet, it's that it doesn't have a single frequency.

↑ comment by Adele Lopez (adele-lopez-1) · 2021-10-29T05:13:25.873Z · LW(p) · GW(p)

That's true, but there is an interesting way in which the uncertainty principle is still about uncertainty.

It turns out that for any functions f and g related by a Fourier transform, and where |f|^2 and |g|^2 are probability distributions, the sum of these distributions' Shannon entropy H is bounded below:
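The bound in question is presumably the Hirschman–Beckner entropic inequality. As a sketch, assuming the Fourier convention $g(\xi) = \int f(x)\,e^{-2\pi i x \xi}\,dx$ and the differential entropy $H(p) = -\int p \log p$ with natural logarithm (both conventions are my assumption, since the comment does not state them), it reads:

$$
H\!\left(|f|^2\right) + H\!\left(|g|^2\right) \;\ge\; \log\frac{e}{2}
$$

The constant on the right depends on the Fourier convention, so it looks different when written in terms of physical momentum.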

And this implies the traditional Heisenberg Uncertainty principle!

↑ comment by Oscar_Cunningham · 2021-10-29T10:09:37.459Z · LW(p) · GW(p)

Yes, I'm a big fan of the Entropic Uncertainty Principle. One thing to note about it is that the definition of entropy only uses the measure space structure of the reals, whereas the definition of variance also uses the metric on the reals. So Heisenberg's principle uses more structure to say less stuff. And it's not like the extra structure is merely redundant either. You can say useful stuff using the metric structure, like Hardy's Uncertainty Principle. So Heisenberg's version is taking useful information and then just throwing it away.

I'd almost support teaching the Entropic Uncertainty Principle instead of Heisenberg's to students first learning quantum theory. But unfortunately its proof is much harder. And students are generally more familiar with variance than entropy.

With regard to Eliezer's original point, the distributions |f|^2 and |g|^2 don't actually describe our uncertainty about anything. We have perfect knowledge of the wavefunction; there is no uncertainty. I suppose you could say that H(|f|^2) and H(|g|^2) quantify the uncertainty you would have if you were to measure the position and momentum (in Eliezer's point of view this would be indexical uncertainty about which world we were in), although you can't perform both of these measurements on the same particle.

comment by jeremysalwen · 2012-04-14T19:23:14.006Z · LW(p) · GW(p)

Okay, I completely understand that the Heisenberg Uncertainty principle is simply the manifestation of the fact that observations are fundamentally interactions.

However, I never thought of the uncertainty principle as the part of quantum mechanics that causes some interpretations to treat observers as special. I was always under the impression that it was quantum entanglement... I'm trying to imagine how a purely wave-function based interpretation of quantum entanglement would behave... what is the "interaction" that localizes the spin wavefunction, and why does it seem to act across distances faster than light? Please, someone help me out here.

comment by rnollet · 2025-04-15T10:29:55.804Z · LW(p) · GW(p)

This link is dead.

To the best of my understanding, this is a working link to the same comic strip:

https://dresdencodak.com/2005/06/14/lil-werner/