Distilling the Internal Model Principle

post by JoseFaustino · 2025-02-08T14:59:29.730Z · LW · GW · 0 commentsContents

Overview Post Outline Introduction A simplified Internal Model Theorem Example 1: even-odd integer walking Equivalence relations as information Observability Condition Example 2 - Dyadic transformation Lemma: Generalized Feedback Condition The theorem Theorem: Internal Model Principle simplified The need to generalize None No comments

This post was written during the agent foundations fellowship with Alex Altair [LW · GW] funded by the LTFF. Thank you Alex Altair [LW · GW], Alfred Harwood [LW · GW] and Dalcy [LW · GW] for thoughts and comments.

Overview

This is the first part of a two-post series about the Internal Model Principle (IMP)[1], which could be considered a selection theorem [LW · GW], and how it might relate to AI Safety, particularly to Agent Foundations research.

In this first post, we will construct a simplified version of IMP that is easier to explain compared to the more general version and focus on the key ideas, building intuitions about the theorem's assumptions.

In the second post, we generalize the theorem and discuss how it relates to alignment-relevant questions such as the agent-structure problem [LW · GW] and selection theorems [LW · GW].

Post Outline

- We discuss the basic mathematical objects framed in a friendly-AI-tracking-a-super-AI setup and a condition called the "feedback structure condition".

- With the basic setup and feedback condition, we're already able to construct a (not very useful) notion of an internal model.

- We digress about why equivalence relations represent information structure and how that can be used to specify the observability condition. These ideas are used to make the notion of the model better.

- We prove our particular version of the theorem - which requires quite strong assumptions. We end up with a notion of model, in some sense, doesn't seem very useful either.

- This serves as motivation for why we need to generalize the assumptions in the second post.

Introduction

This section aims to explain the motivation for the post. Statements here might not be fully explained and will become clearer throughout the post. I aimed to include all the definitions and state mathematical facts used without proof to build the theorem from zero. Although there are a lot of equations in the post, there is little mathematical machinery used in the theorem. We mostly use facts about arbitrary functions and sets. I expect that someone with high-school level math could be able to understand this post if read carefully.

The Internal Model Theorem by Cai & Wonham [1] is an abstraction and generalization of the Internal Model Principle that appears in control theory examples. It's built directly on straightforward algebraic tools and a little bit of dynamical systems theory.

The theorem basically states: consider a discrete and deterministic external system passing signals to an internal system which can change its own states. If these signals satisfy a property we call "observability", which ensures that the internal system has enough precision about the receiving signal, then feedback structure and perfect regulation implies that the internal system necessarily has an internal model of the external system.

Intuitively, "feedback" means that the internal system's output can be fed back into the internal system to produce the next output. "Feedback structure" is the condition that ensures this, i.e, that the internal system's state depends only on its previous state. It's autonomous - as opposed to "pursuing the states of the external system one time-step later"

"Perfect regulation" here means that the set of external system states are all good states, in some sense of good. Good states could be, for example, states where the "error" between the external system and internal system is zero, which we expect to happen after the external system has reached some sort of equilibrium or stationary state.

"Modeling" means "faithful tracking/simulating": we say system with dynamics models a system with dynamics , where receives info from via , if and is injective.

- ensures simulation: Given a state , the information receives from is . The state the internal system is in after its own internal update is . We're saying models if those are the same.

- being injective ensures the notion of model is non-trivial, i.e, that A faithfully simulates B. As we'll see later, if is not injective, system A might not be expressive enough to model system B.

To illustrate, could be a robot trying to pursue a moving target in a controlled environment.

A simplified Internal Model Theorem

Suppose we have a very powerful and capable AI (which we'll refer to as ASI) and we don't have a clue about how it works, what decisions it makes, how it chooses these decisions, etc. One idea to understand its behavior is to make a friendly AI (FAI for short) oversee the ASI.

This is generally called "scalable oversight" in the AI Safety community, and we will use this idea to motivate the theorem. Don't get too caught up in the analogy, though - the theorem has strong assumptions that aren't necessarily satisfied in real settings. However, perhaps this theorem could be a good starting point to formulate better theories.

The ASI could be physical, virtual or any type of machine at all, and we would say that the ASI is in different states when its configuration is substantially different. For example, if the ASI is recharging its battery, we could say its state is ; if it is active, its state is . If it's learning, we'd say its state is ; if it's inferring, we'd say its state is .

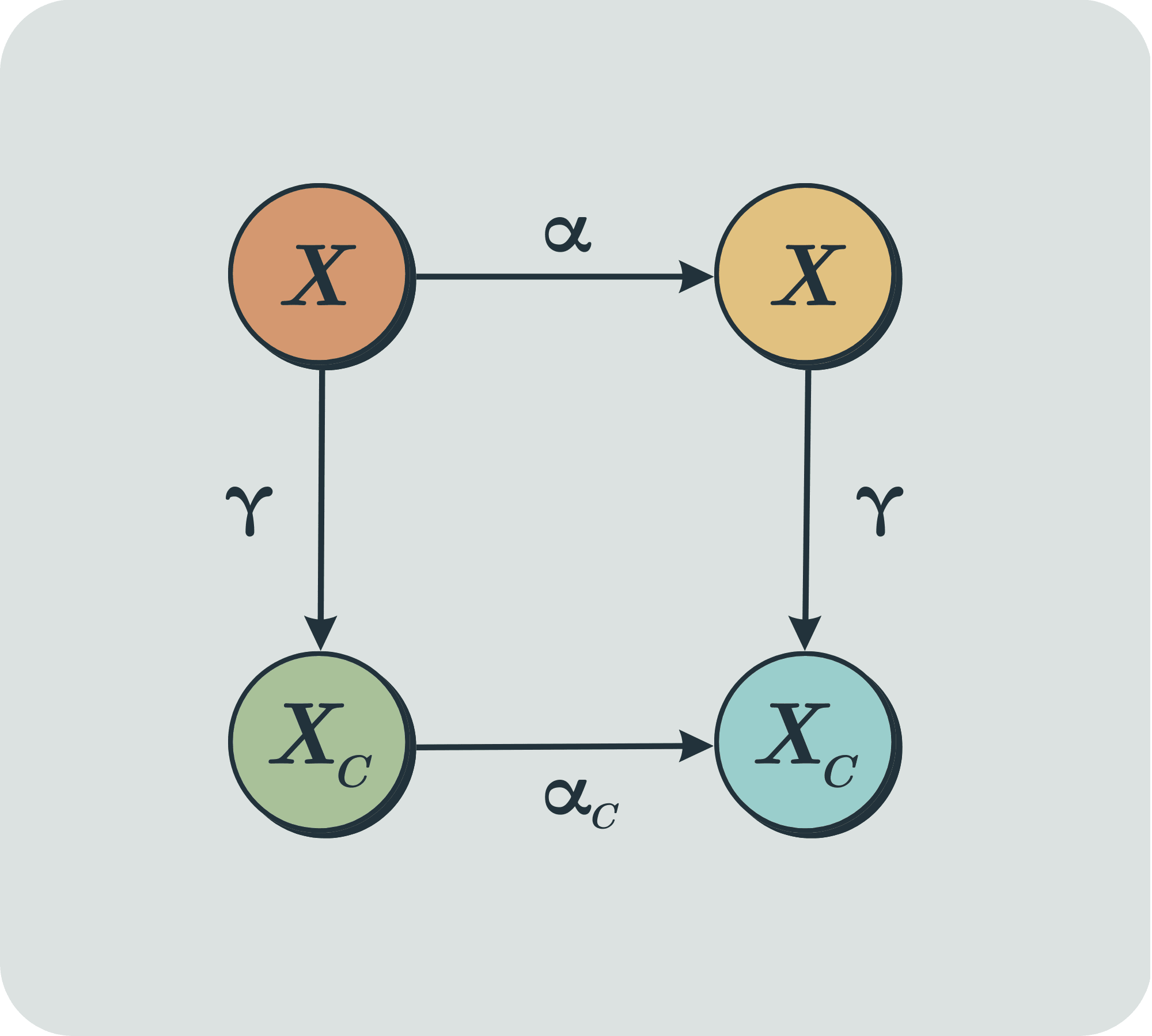

Abstractly, we will call the ASI's internal state set and the rule that describes how its internal state changes . That is, if the ASI is in the state , after one time-step, it will be in state , then in and so on. We call update rules like these discrete dynamics.

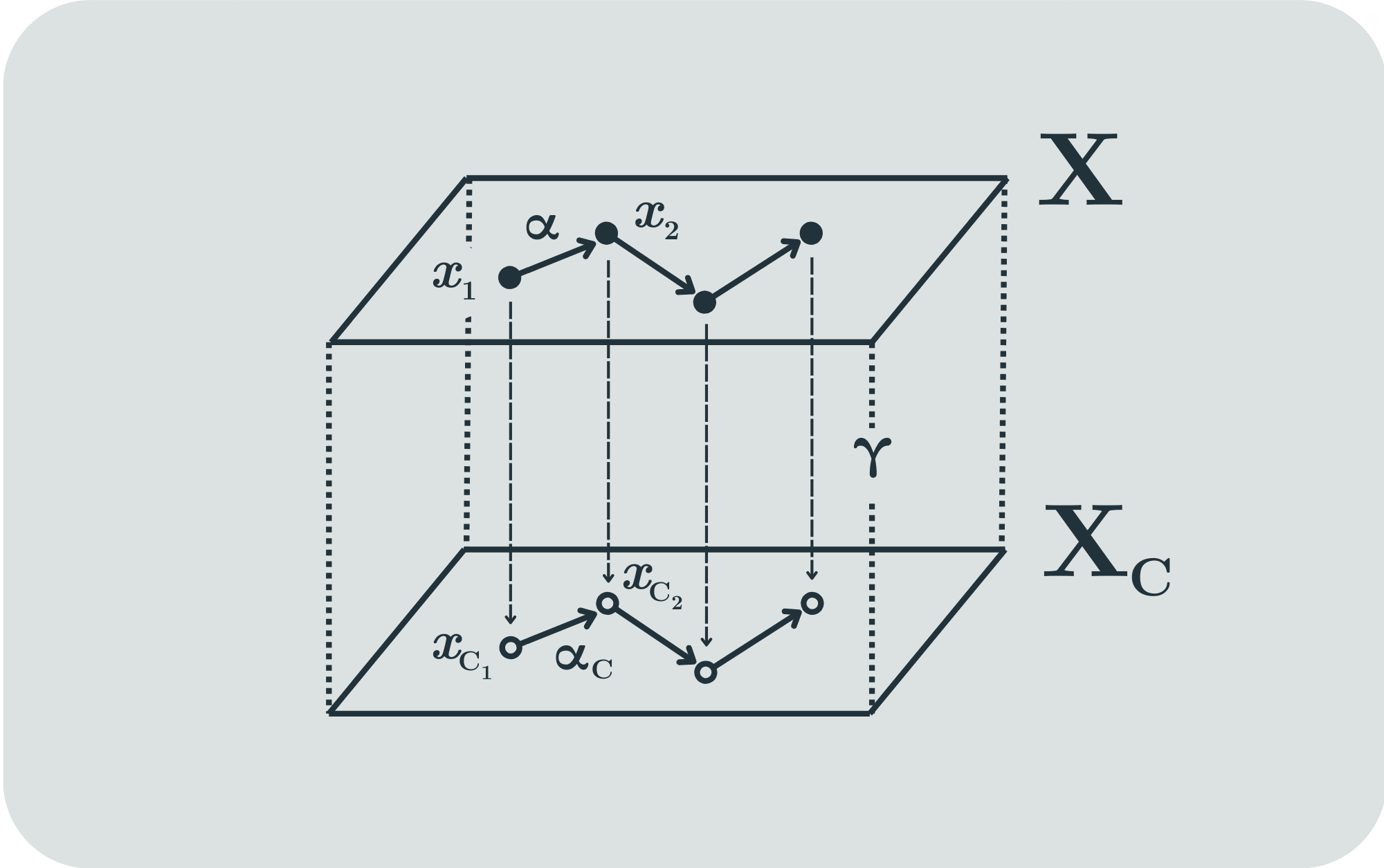

We assume the FAI has access to information about the ASI via a map , where is the FAI state set (we call it because often in control theory this is called the “controller”) and we ask - the image of under - to be equal to . This is not that big of a deal - we're only asking that any given state of the FAI represent information about some state of the ASI. If this was not the case, we could just change the co-domain of to be . Formally, this means that for every FAI state, it can be written as for some ASI state . Thus, we can think that defines .

We want the FAI to track/predict/model the ASI, i.e, to simulate its behaviour in some intuitive sense. Since we can interpret the FAI, this might give us clues about the ASI's behaviour.

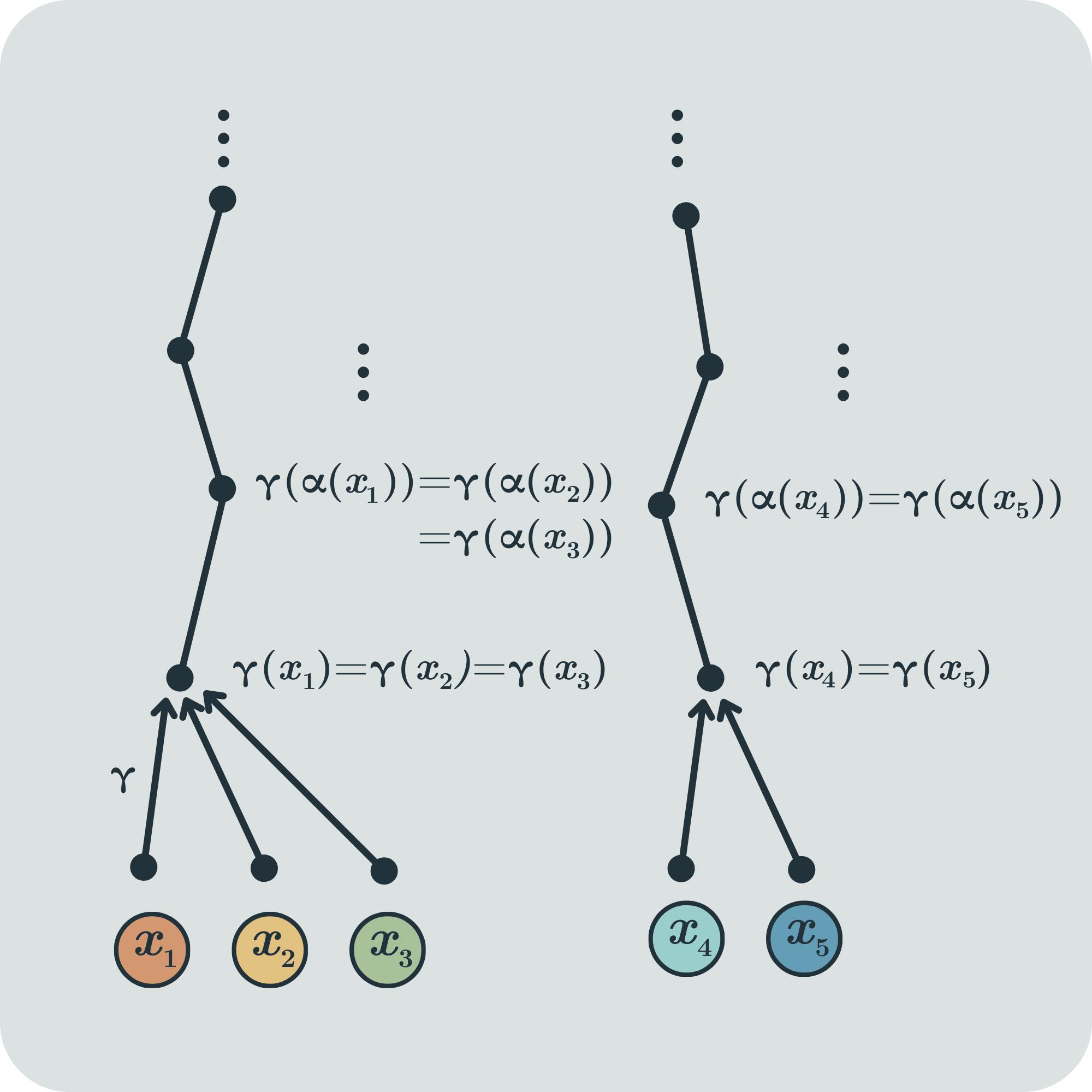

We want, then, to model the FAI as a dynamical system on its own, i.e, one that autonomously evolves. Otherwise, the FAI wouldn't be properly tracking/predicting the ASI's states. In other words, we want the FAI state to determine the next FAI state . Equivalently, this is to say that if are ASI states such that , then necessarily , because can only depend on

We call this the feedback structure condition, because it ensures that the next FAI state is just the feedback of the previous state. We will put a lot of emphasis on this because it's a fundamental hypothesis for the theorem.

Now, some algebraic remarks to help any unfamiliar readers:

- We call the set of equivalence relations and for equivalence relations on , we say

- One can also prove that every subset of has an infimum and supremum, , with respect to the ordering defined above

- In particular, it’s true that

- One can also prove that every subset of has an infimum and supremum, , with respect to the ordering defined above

- Every equivalence relation generates a partition on - the partition . Reciprocally, every partition of generates an equivalence relation on , i.e, the equivalence relation such that two elements are related if and only if they’re in the same set of the partition. Thus, we can think of equivalence relations and partitions as the same thing

- We call the sets cells

- If is any function, we define [2]

- One can prove that is an equivalence relation on X

Thus, we can rewrite the feedback condition as

Which is the same as

This leads us to another way to think about the feedback condition: it's ensuring that two points that are in the same set of the partition induced by remain in the same (that is possibly different from the initial) partition set after the ASI updates. Intuitively, this is saying that the observations the FAI receives are consistent: two different ASI states , that provide the same information to the FAI - , can't provide two different pieces of information - - to the FAI after the ASI updates.

Framed differently, if we don't assume the feedback condition, we would be saying that two states that provide the same information to the FAI will provide different information after one time-step. Intuitively, if this were the case, it seems that the FAI wouldn't be able to track ASI's states at all.

We will prove later that this condition actually ensures that the information the FAI receives from the ASI remains consistent after any number of time-steps.

The basic setup and feedback condition discussed so far are enough for us to define a rule - called the FAI dynamics - that tracks the ASI:

- Let , there is such that . Define

- Note that this definition is unambiguous: if is such that , then by the feedback condition,

- Note that

- This is trivially the case by the definition of :

- For all

- We interpret this result as follows: for a given ASI state , the FAI's observation from the next state of the ASI is . On the other hand, the FAI's update based on the current state observation is . The result states those are the same: that is, the FAI's state after its own update having observed the current state is the same as the FAI's state observed from the ASI's state update. In other words, the FAI's state after an update corresponds to the observation of the ASI's state after the update.

- This is trivially the case by the definition of :

- It's also true that is unique: it is the only map such that

- Assume . Then let

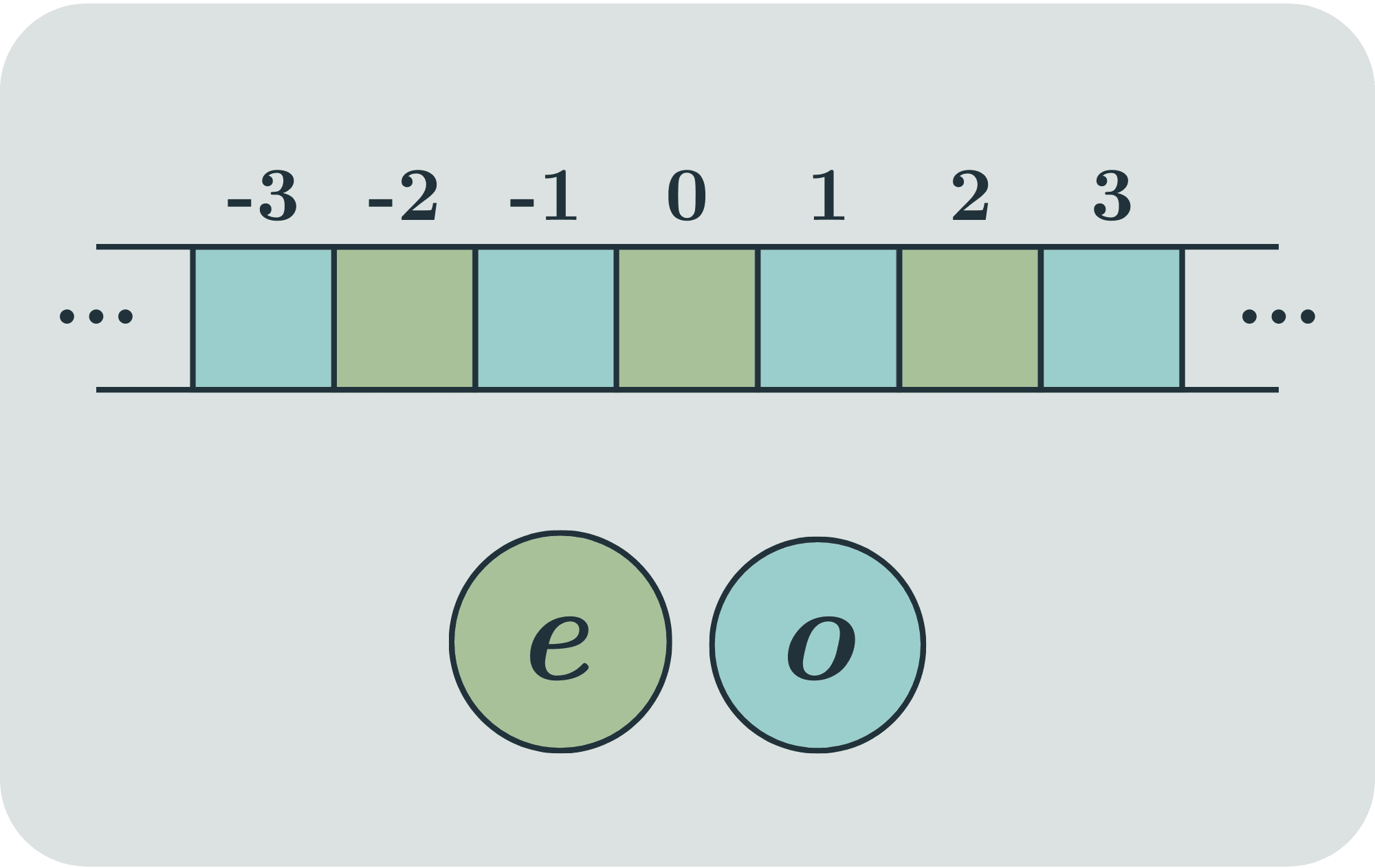

Example 1: even-odd integer walking

Consider a robot walking along the integers. It walks one unit to the right at each step, so its state set is and its dynamics given by , for all .

Let and given by if is even and if is odd.

Since if, and only if , we have

And

We're allowed to add and subtract one on both sides of the integer congruence, hence , so the feedback condition holds. Hence, by the result proved above, exists and is uniquely determined by

Now, calculating :

- For even,

- For odd,

Thus, autonomously alternates between states and

We generally call the "controller" for control theory reasons. We'll touch on that later.

It looks like the dynamic of the controller is simulating the dynamics of the robot, in some weak sense, but it doesn't feel like the controller is doing a very accurate simulation of the robot's behaviour - the controller can simulate the fact that the robot alternates between even- and odd-numbered positions, but it doesn't simulate the fact that the robot is moving indefinitely to the right. Ideally, we would want it to simulate both.

Can we add more assumptions to ensure that the controller accurately simulates the robot?

Since , a dynamics defined on can’t represent a trajectory more complicated than some sequence of states and . Recall that . Hence, to get a better notion of the model, we need to consider more assumptions about (and thus , if we think is defined by ). We can think that the controller states don't have enough "expressivity" to represent a model of the robot.

Another way to think about this is: if the observations that the FAI receives - which here are also the FAI's states - don’t encompass core information from the ASI's states, the FAI couldn’t possibly simulate the ASI.

Equivalence relations as information

We can interpret equivalence relations in as information structures on . Let and suppose an element is ‘known exactly’. Recall that induces a partition on , and that we call the sets forming the partition ‘cells’. Now, if is such that , all the ‘information’ or ‘precision’ we know about is no more than that it is in the cell that contains .

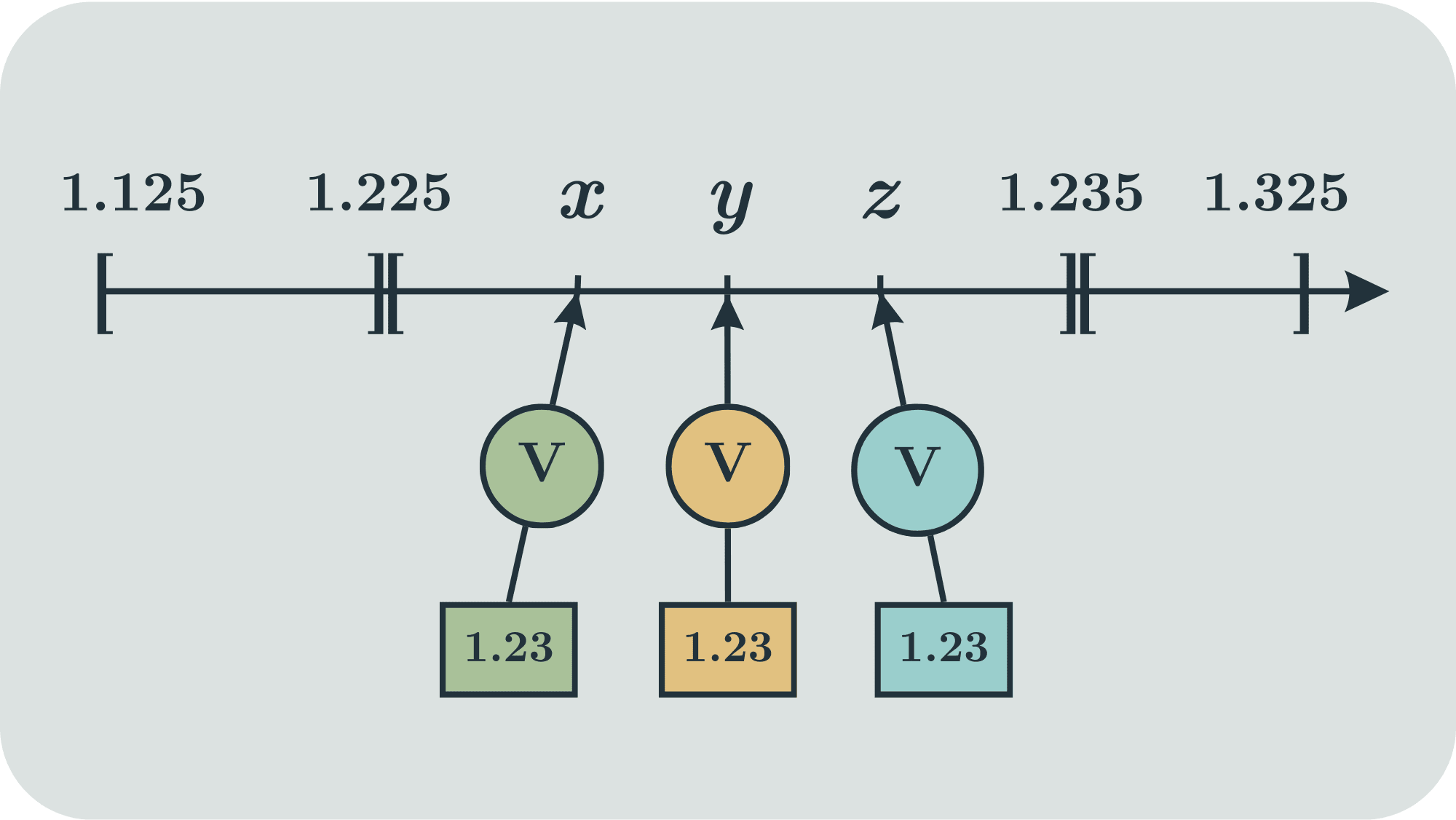

Cai &Wonham [1]illustrates this idea with the following example:

“Consider an ideal voltmeter that is perfectly accurate, but only reads out to a precision of . If a voltage may be any real number, and if, say, a reading of volts means that the measured voltage v satisfies , then the voltmeter determines a partition of the real line into intervals of length . What is ‘known’ about any measured voltage is just that it lies within the cell corresponding to the reading.”

In other words, any measured number inside the interval provides the same information.

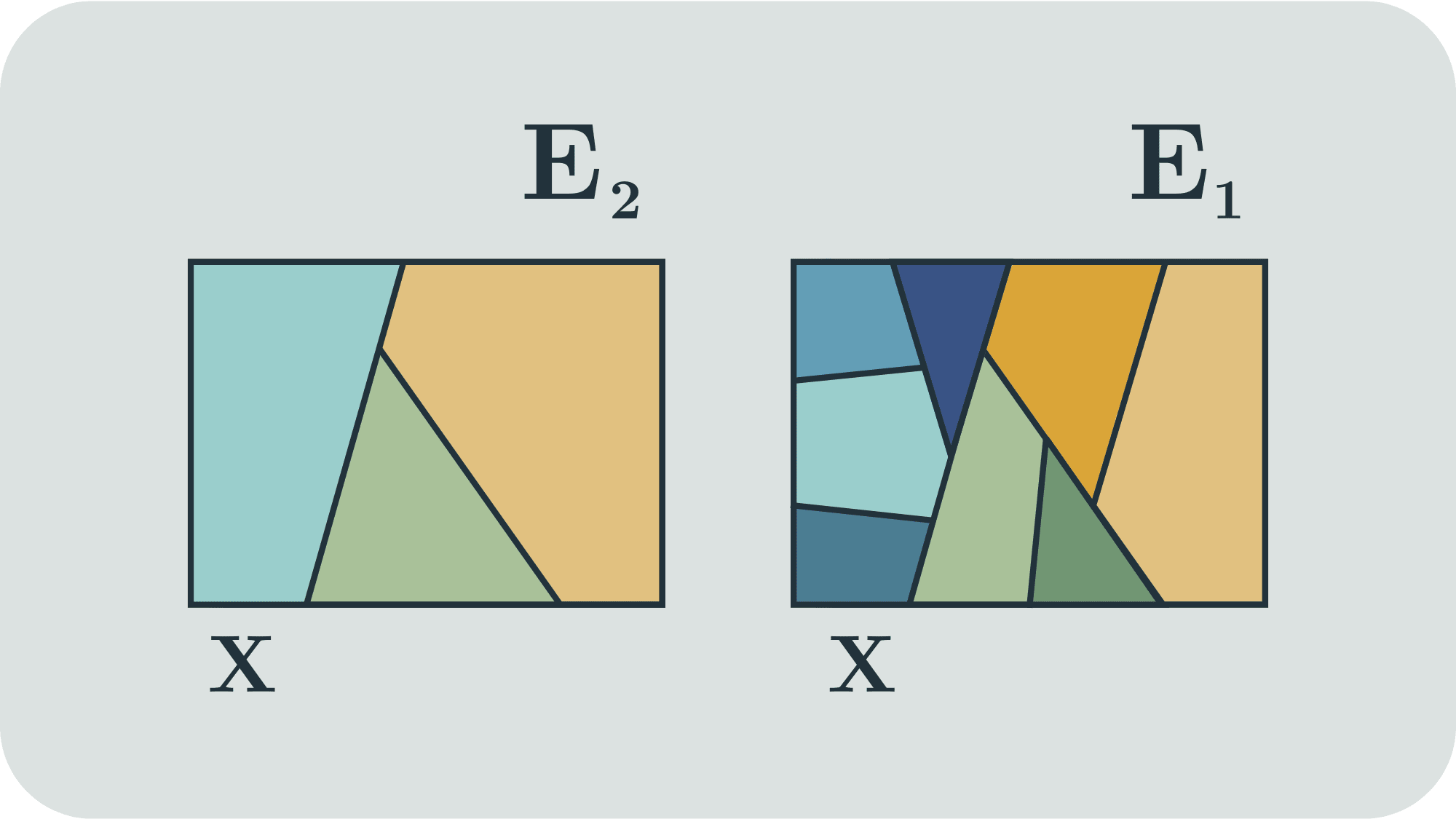

We can also interpret that if and are equivalence relations and , provides us with more information than . Thinking of as a partition of a closed region of a plane, it has more cells than . The figure below illustrate and as partitions:

On this basis, the finest partition possible for any given set is

In words, the finest partition is the one in which each is alone in a cell.

And the equivalence relation associated with is [3]

Let’s consider, then, our mapping provides us with the finest equivalence relation possible, that is,

Thus, for any , there’s no other such that , for, if this wasn’t the case, then , which is a contradiction because

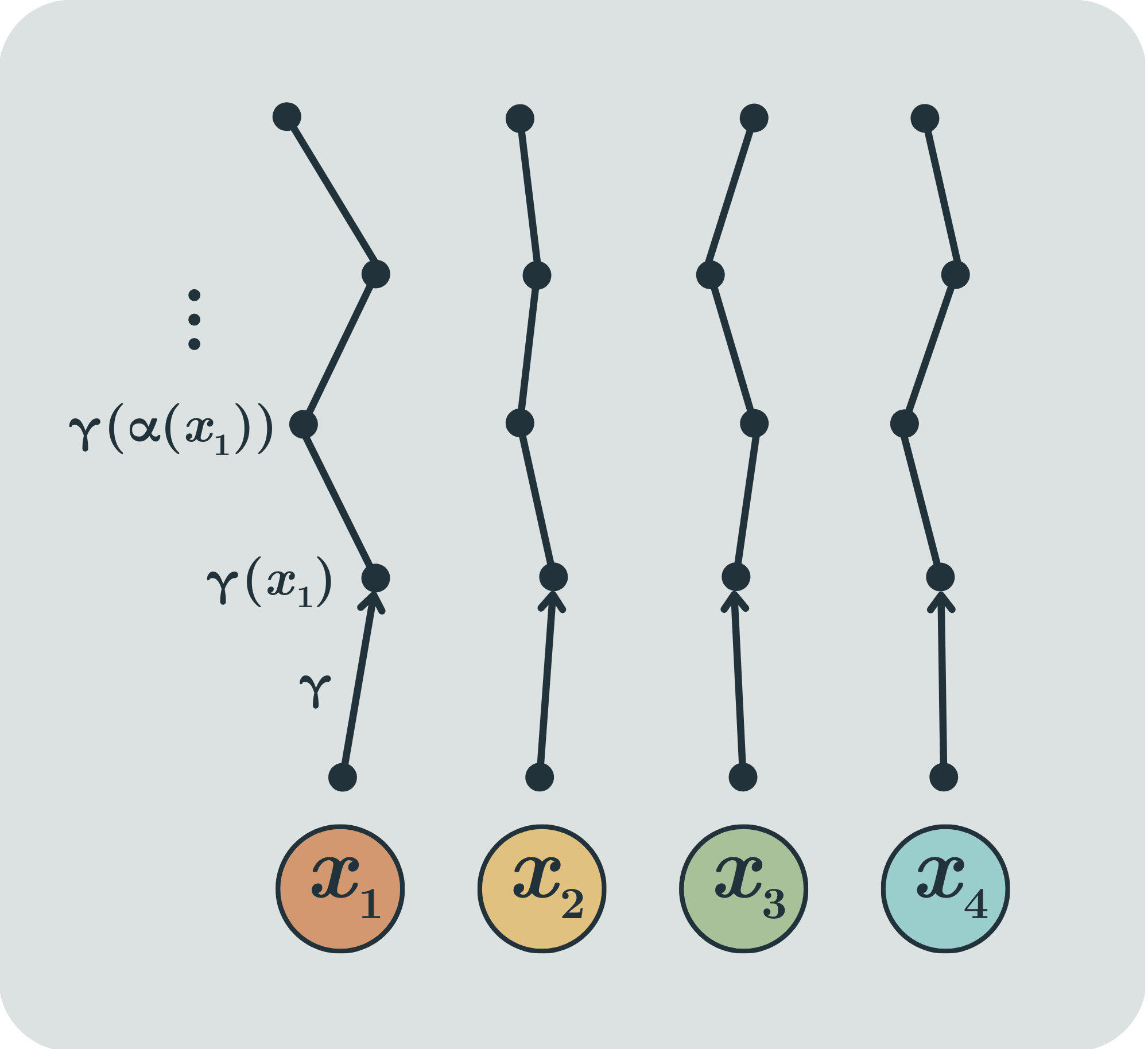

Hence, if we assume , we get that must be a bijection, and examples such as the one we used above don’t satisfy this new hypothesis. We know that, by our theorem, and is bijective. This is saying, by definition, that and are isomorphic dynamic systems.

One way to think about isomorphism is that two objects are isomorphic if they’re the same thing with different labels. Thus, with the new assumption, not only models in a weak manner, but faithfully simulates

Note that the key property that ensures faithful simulation here is injection. If was only injective, this would also have prevented our pathological example and would also give us an intuition of faithful simulation: we would only need to restrict the co-domain of functions to get a bijection.

Observability Condition

Now, we’ll consider a slightly more general assumption that can also provide us with a similar result but that we can use to generalize to other setups later.

All the information the FAI can ever get from the ASI starting in a point is

We call a trajectory and a trajectory of observations.

Instead of asking , we ask

Where . We call this the observability condition.

Recall from our algebraic remark that

So our assumption makes sense (i.e, is indeed an equivalence relation so we can ask it to be equal to )

Also note that

Hence, if , the trajectory of observations of and is the same.

On the other hand, if , then , for some - that is, their trajectory of observations is different at least in one point.

Thus, we can think what is doing is aggregating points that generate the same observation trajectories into cells

Asking that is to say that each cell consists of a single point - that is, the only point that can generate a trajectory of observations . In other words, given two points and , their trajectory of observations is different in at least in one step.

We can also interpret this condition in terms of precision:

- Consider the real line . A partition splits the line into sets (possibly with a single point). Consider a specific partition only made up of intervals. Think of this partition as associated to a weird non-uniform rule with some precision. If the partition intervals have the same length, it's a regular uniform, i.e, equally spaced (but infinitely wide) rule. The rule measures whether two points are in the same cell of the partition or not.

- Suppose we move around all the points according to in the first time-step, then to in the second and so on.

- Each update of the points is associated with a different partition. Taking the intersection of the partitions up to a finite time-step updates our rule with more precision, because the intersection of partitions is always a finer partition.

- In the limit, the partition is , and saying is saying that the rule of this partition (updated after all time-steps) can distinguish between any point. We say this rule has infinite precision.

Thus, the observability condition ensures that the information the FAI receives has infinite precision.

Obs.: Note that this condition of “infinite precision” is different from another condition that we call “perfect information”:

- "Infinite precision" states that the FAI can always tell apart two given trajectories of observations.

- "Perfect information" states that given a signal the FAI receives, it's possible to perfectly reconstruct the ASI state that generated that signal.

Let the initial ASI states be , then , then and so on. Comparing the two assumptions:

- implies that is uniquely determined to the FAI - FAI observes and since implies injective, is the only ASI state that can yield this observation . In other words, observation from the first time-step implies is uniquely determined to the FAI

- implies, by considerations above, that after long enough time-steps, is uniquely determined to the FAI

- Mathematically, it’s clear that implies (because I is the infimum of a set and belongs to this set, thus

Hence, it’s reasonable to think of the observability condition as a generalization of , both in terms of information structure intuition and mathematically.

Example 2 - Dyadic transformation

- Suppose we're looking at numbers between 0 and 1 in decimal notation. So any number is of the form , where each is a binary digit, i.e, or .

- Let the dynamics be =

- Now, when we double a binary digit, we're only shifting the digits one place to the left (so ) and (because if , the gets rid of and so = , and if , the does nothing. Then, the dynamics of the system just shifts every bit to the right and replaces the most significant digit (before the period) with 0

- Hence, ; and so on...

- Suppose for all numbers in this form. This means that the only information we have available is the first digit after the period.

- Now suppose we have a system that starts in and evolves according to Then, and is capable of uniquely determining . Indeed,

Note that in this scenario, is able to determine every digit of , which has an infinite amount of digits. This illustrates well why observability relates to infinite precision.

Lemma: Generalized Feedback Condition

Before we check that the observability condition yields an analogous result to , we need to construct a lemma.

Recall that the feedback condition states that . This, in turn, implies that

Note that in the proof[4], it is important to use . In the next post, we’ll consider a larger set and one crux of the argument will be to ask that and remain in a given set.

The theorem

We can now derive a more specific version of the theorem by assuming the feedback condition and the observability condition.

We’ll see that, in our setup, observability and feedback trivially implies bijection, but this will not be necessarily true in a more general setup, and we will use the same idea of proof to extend this to the more general scenario.

By the generalized feedback condition,

Which implies

(Because the infimum is the greatest lower bound)

By observability,

Thus, we derive that , the initial condition we used to show that is bijective.

Obs.: The general mathematical relation between the assumptions we discussed in our setup is as follows:

- observability

- observability and feedback

- Note that bijection, hence

- bijectionobservability and feedback

To finish up the discussion, let’s restate mathematically the complete theorem with all the assumptions and interpret it.

Theorem: Internal Model Principle simplified

Let and surjective.

- (The FAI necessarily models the ASI) If (feedback), then

- is the unique map determined by

- (The model is faithful) Additionally, if (observability), then

Thus, and are isomorphic.

If represents the ASI dynamics and represents the information map from the ASI to the FAI, then

- If the FAI is an autonomous dynamic system, there’s a unique map defined on FAI states that could possibly model the ASI.

- If, additionally, the FAI can distinguish between the ASI’s different trajectories, then this unique map faithfully represents the ASI, in the sense that they’re isomorphic.

The need to generalize

Clearly, this doesn’t accurately represent a real world scenario of scalable oversight and is also a very specific theorem with strong assumptions that a lot of real systems don't satisfy:

- We’re assuming the ASI dynamics to be discrete and deterministic

- The discrete assumption seems more or less acceptable, given that

- Real world computers are finite and discrete

- Even for continuous or mixed phenomena, good discretized models can provide us a lot of insight

- The deterministic assumption feels more complex to handle and depends on the nature of the ASI - would the ASI be just a super big calculator with fixed weights? Would it take action under uncertainty?

- The discrete assumption seems more or less acceptable, given that

- As the theorem is now, it can’t represent any example of an observation map that is not a bijection (since feedback and observability together are equivalent to bijection). This is a problem because

- The observation map being a bijection means that the information the FAI receives from the ASI is perfect: we can uniquely reconstruct the ASI sequence of states via the FAI sequence of observations (i.e, is well-defined and unique). This is quite a strong assumption.

- It seems it would be more interesting and general if the theorem applied to systems without perfect information; that is, if the theorem guaranteed that even systems with less than perfect information could develop a faithful internal model.

- This implies that and have the same cardinality.

- Would a weaker and stronger model have the same cardinality? Intuitively, we would expect the cardinality of the ASI stateset to be greater than FAI stateset.

Nonetheless, this theorem can be a (simplistic) starting point to think about formalizing AI control questions. It may also help answer alignment-relevant questions such as the agent-structure problem, as we'll see in the second post.

We can solve part of the problem by relaxing a few assumptions we made:

- We allowed the FAI to be autonomous whenever the ASI is in any state (feedback states that ). We could have considered that there’s a set of ASI states where the FAI is allowed to be autonomous.

- It could be the case that the FAI doesn't receive information directly from the ASI, but instead that the ASI interacts with the world and the FAI receives information from this world.

We'll show a version of the theorem that can be applied in more general setups and that this general version actually solves the problem pointed in 2. (i.e, bijection) in the second post.

- ^

Supervisory Control of Discrete-Event Systems, (2019) Cai & Wonham as section 1.5

- ^

This is called the kernel of a function because it's somewhat analogous to the kernel of a linear map from linear algebra, or the kernel of a homomorphism from abstract algebra. Here, instead of being the set of all things that get sent to zero, we're partitioning the whole domain into sets of things that get sent to the same value of the codomain

- ^

The symbol is read as "bottom". We use this name and symbol because the equivalence relation is smaller than any other in our (partial) ordering defined on the set of equivalence relations over

- ^

We can prove this fact by a simple induction on , but we’ll outline the proof for didactic purposes (it will help readers understand why, when extending the theorem, we need to ask for some set to be -invariant):

- Base case: is the feedback condition, which we assume to be true

- Induction hypothesis: Suppose all the inequalities hold for , that is,

- Inductive step: Now, we must prove

- By induction hypothesis, . Let and . Since , we know and by above we know . By Feedback, . Thus,

- The rest of the inequalities follow by transitivity

- Hence, the proof follows by induction

0 comments

Comments sorted by top scores.