Dissolve: The Petty Crimes of Blaise Pascal

post by SebastianG (JohnBuridan) · 2022-08-12T20:04:57.992Z · 4 comments


VLADIMIR: [H]e'd have to think it over.

ESTRAGON: In the quiet of his home.

VLADIMIR: Consult his family.

ESTRAGON: His friends.

VLADIMIR: His agents.

ESTRAGON: His correspondents.

VLADIMIR: His books.

ESTRAGON: His bank account.

VLADIMIR: Before taking a decision.

ESTRAGON: It's the normal thing.

VLADIMIR: Is it not?

ESTRAGON: I think it is.

VLADIMIR: I think so too.

Waiting for Godot

Like many petty crimes, Pascal’s Mugging is more time-consuming than law enforcement has time for. Yet it has always struck me as a puzzle worth understanding.

Vishal asks me for a dollar this week. In exchange he will give me a billion dollars next week. The probability of this happening, I guess, is not zero. So, I give him the dollar. It’s only a dollar, and since when do I carry cash anyway?
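The naive arithmetic behind handing over the dollar can be sketched as follows. Expected value here is p × 1,000,000,000 − 1, which is positive whenever my credence p that Vishal pays exceeds one in a billion. The credences tried at the bottom are illustrative assumptions, not figures from the story.

```python
# Naive expected value of Vishal's offer: pay 1 dollar now for a chance at 1e9 next week.
# The credences tried at the bottom are illustrative assumptions.

COST = 1.0                 # the dollar handed over this week
PROMISED = 1_000_000_000   # the billion dollars promised next week


def expected_value(p_payout: float, cost: float = COST, payoff: float = PROMISED) -> float:
    """Naive EV of paying `cost` for a `p_payout` chance at `payoff`."""
    return p_payout * payoff - cost


def break_even_probability(cost: float = COST, payoff: float = PROMISED) -> float:
    """Credence at which the naive EV is exactly zero."""
    return cost / payoff


if __name__ == "__main__":
    print(break_even_probability())  # 1e-09
    for p in (1e-12, 1e-9, 1e-6):
        print(p, expected_value(p))
```

Any credence above one in a billion makes the naive EV positive, which is why "not zero" carries so much weight in the story.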

He comes to me next week to try the same thing. I say, “Get lost, man! Mug me once, shame on you. Mug me twice, shame on the foundations of decision theory.”

First of all, this whole “Pascal’s Mugging” thing is a misnomer. It’s more like fraud. My beliefs and values are not being stolen; rather, they are being hacked and deceived, and offered a mere mirage of payoff. Yet we call it a ‘mugging.’

Human Reactions

Introspection tells me there are two ways of rejecting an EV calculation within any defined wager scenario. First, I might reject the validity of one of the variables: maybe the assigned probabilities are too low or too high, or maybe the payoff values are. When you have reason to distrust the assigned values, you go back: investigate your assumptions, reevaluate the data, recalculate. This is, of course, the obvious course.

Second, I might reject something deeper. I don’t know what to call it; perhaps the definition or shape of the wager scenario. It is something like rejecting the underlying logical coherence of the choice presented.

When I reject Vishal’s offer of a billion dollars, I am not necessarily rejecting it because a calculation turned out negative. Perhaps some bit of information is conveyed to me, or fails to be conveyed, that allows me to ignore the words coming out of his mouth and reject the game. Does this work as a theory? I want to find a more principled way of rejecting certain wagers than a mere intuition that something is ‘off’ about them.

Little Story about an Angry Theologian

I once got into a warm discussion about Pascal’s Wager with a theology-minded philosopher. She claimed that the wager only works when the two options seem to the agent like ‘live’ options. What did she mean by this? She meant, insofar as I understood her, that the probabilities and payoff fit some prior model of what is possible in this universe. Put another way, you must be willing to assign probabilities in order to get mugged, and sometimes a person is precommitted to views that do not allow them to assign probabilities to an event or object.

I happen to like this framing. Probabilities must be assigned in order to get mugged, but perhaps there has to be some further reason one is justified in assigning probabilities in the first place. Seems intriguing. It even works by the dictionary definition of a mugging: no one gets mugged unless they are in a public place.
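One way to read the theologian's point, sketched in Python under loudly stated assumptions: the agent only computes an expected value for outcomes its prior model already treats as possible. The `PRIOR_MODEL` contents, the outcome labels, and the choice to return `None` for a refused frame are all hypothetical illustrations, not anything proposed in the discussion itself.

```python
from typing import Dict, Optional

# Hypothetical prior model: the only outcomes this agent treats as "live",
# with the credences it is willing to assign. Contents are illustrative assumptions.
PRIOR_MODEL: Dict[str, float] = {
    "friend repays a small loan": 0.9,
    "stranger repays a small loan": 0.5,
}


def live_expected_value(outcome: str, cost: float, payoff: float) -> Optional[float]:
    """Return the naive EV if `outcome` is a live option; otherwise None.

    None means the wager was never evaluated at all: the agent refuses the
    frame rather than computing a negative expected value.
    """
    p = PRIOR_MODEL.get(outcome)
    if p is None:
        return None
    return p * payoff - cost


if __name__ == "__main__":
    print(live_expected_value("stranger repays a small loan", 10.0, 20.0))
    print(live_expected_value("Vishal pays a billion dollars", 1.0, 1e9))
```

The distinction the sketch tries to capture is between a wager that loses (a negative number) and a wager that never gets a number at all, which matches the second, "deeper" kind of rejection described earlier.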

Still, how do we define the intellectually active place where one is able to assign probabilities to events and to contingent objects coming into existence?

Merely Human Defenses against the Outer Darkness

Dubiously rational human agents frequently reject getting mugged thanks to the heuristics and biases that buffer us from the outer darkness of pure decision theory.

- Scope insensitivity implies that sufficiently high and sufficiently low numbers cease to compute for us, and we simply reject the coherence of the choices presented. Experience bears this one out.

- Finite resources for computing and for bearing costs mean that any mugging must get past our internal opportunity-cost calculator and our discount rate.

- Status quo and social desirability bias prevent average people from individually falling for weird cults and other extreme lifestyle changes, whether those changes are lifesaving or not.
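A rough sketch of how the first two defenses can flip the sign of Vishal's offer. Both models are assumptions for illustration only: scope insensitivity is stood in for by log10 compression of amounts, and opportunity cost and discounting by an extra cost term and a per-week discount factor. None of these functional forms come from the post.

```python
import math

# Hypothetical sketch of two human defenses applied to a naive EV:
#  - scope insensitivity, modeled here (an assumption) as log10 compression of amounts;
#  - opportunity cost and time discounting, modeled as an extra cost and a weekly discount factor.


def naive_ev(p: float, cost: float, payoff: float) -> float:
    return p * payoff - cost


def felt_size(amount: float) -> float:
    """Assumed stand-in for scope insensitivity: huge amounts register only logarithmically."""
    return math.log10(1.0 + amount)


def human_ev(p: float, cost: float, payoff: float, weeks_delay: int = 1,
             weekly_discount: float = 0.99, opportunity_cost: float = 0.0) -> float:
    """EV after compressing magnitudes, discounting the delayed payoff,
    and charging for what else the stake could have bought."""
    discounted_gain = p * (weekly_discount ** weeks_delay) * felt_size(payoff)
    felt_loss = felt_size(cost + opportunity_cost)
    return discounted_gain - felt_loss


if __name__ == "__main__":
    # Vishal's offer with an assumed one-in-a-million credence:
    print(naive_ev(1e-6, 1.0, 1e9))   # positive: the naive calculation says pay
    print(human_ev(1e-6, 1.0, 1e9))   # negative: the defenses say keep the dollar
```

The same filters still accept an ordinary, likely, modest bet, which is the point: they blunt Pascalian offers without refusing everything, though in an unprincipled way.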

The human defenses are unsatisfying from a game-theoretic perspective because they are so unprincipled and so often result in suboptimal choices. We get mugged when we shouldn’t and fail to get mugged when we should. (Perhaps the reason so many government institutions are afraid of using cost-benefit analysis is that deep in the subconscious of governing committees lies the fear of being mugged in public, i.e., of falling for a bad argument.)

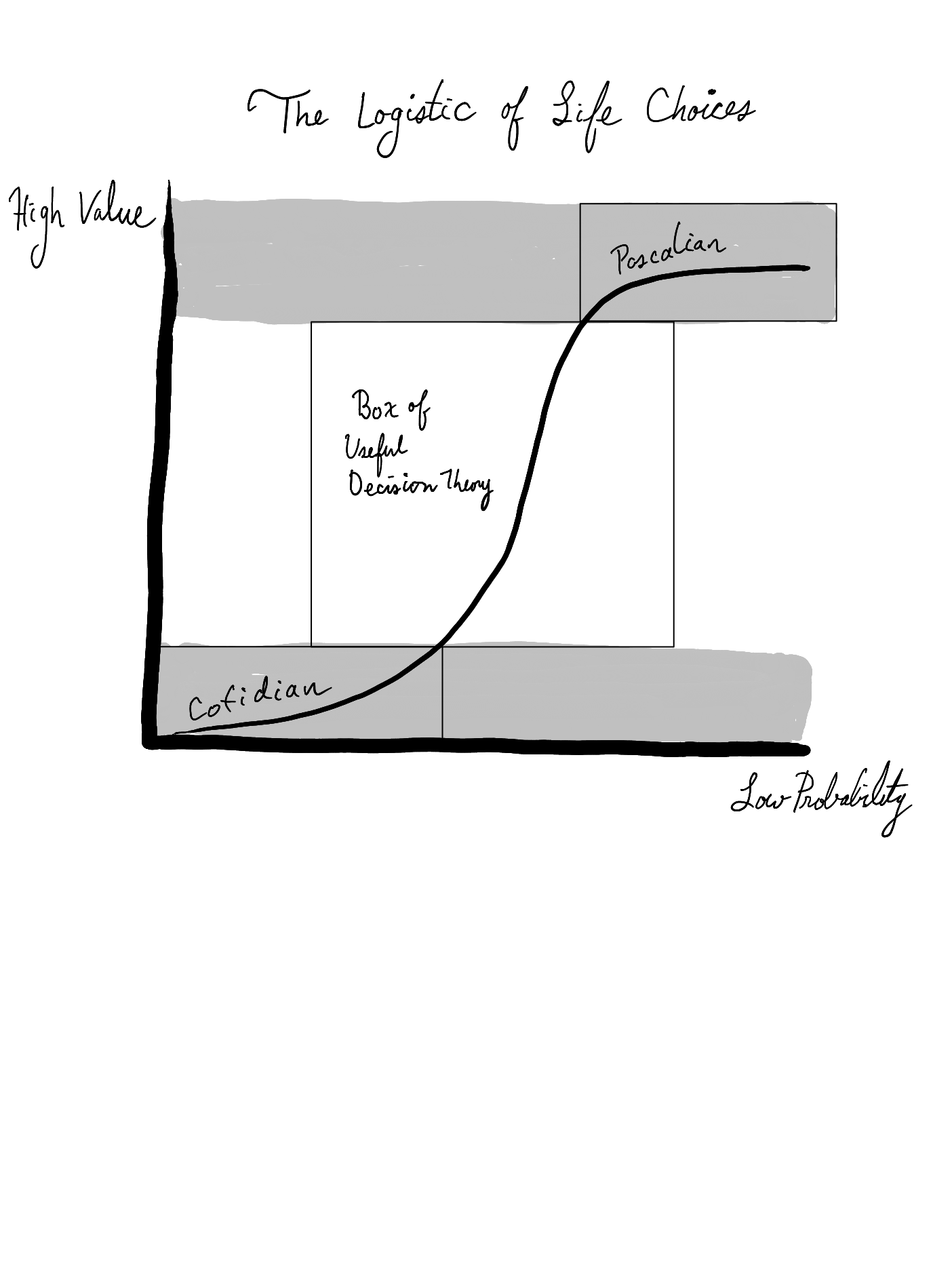

The ad hoc graph above is one way to represent the situation as I see it. It casts no light on theory, but I think it captures how wise people make decisions.

We have this logistic curve. It is logistic and S-shaped because at sufficiently high values and sufficiently low probabilities, decision theory becomes weird and knotted and without good effect. It is S-shaped because at sufficiently high probabilities and sufficiently low values, things are too obvious to think about explicitly, until suddenly they are.

Thus I have the cotidian and Pascalian boxes. I am terribly unsatisfied drawing things this way, but in practice I do think there is a box of cotidian decisions for which explicit decision theory yields no additional alpha. And there are also Pascalian questions, against which people defend themselves by means of metaphysical commitments and brute intuition.
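To make the two boxes concrete, here is a toy classifier. Every threshold in it is an arbitrary, hypothetical number chosen for illustration; nothing here is read off the graph, which has no stated scale.

```python
# Hypothetical sketch of the two "boxes" in the graph. The thresholds are
# arbitrary illustrative assumptions, not values taken from the figure.

COTIDIAN_MIN_P = 0.1          # likely enough to be routine
COTIDIAN_MAX_STAKES = 100.0   # small enough not to deliberate over
PASCALIAN_MAX_P = 1e-6        # improbable enough to feel "off"
PASCALIAN_MIN_STAKES = 1e6    # large enough to swamp ordinary stakes


def decision_region(p: float, stakes: float) -> str:
    """Label a decision by the probability and size of its payoff."""
    if p >= COTIDIAN_MIN_P and stakes <= COTIDIAN_MAX_STAKES:
        return "cotidian"    # handled by habit; explicit EV adds little
    if p <= PASCALIAN_MAX_P and stakes >= PASCALIAN_MIN_STAKES:
        return "pascalian"   # handled by metaphysical commitments and intuition
    return "explicit"        # where explicit decision theory earns its keep


if __name__ == "__main__":
    print(decision_region(0.5, 5.0))     # cotidian
    print(decision_region(1e-9, 1e9))    # pascalian
    print(decision_region(0.01, 1e4))    # explicit
```

Hard cutoffs are the crudest possible version; the S-shaped curve suggests the real boundaries are gradual.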

But the human decision-making I am describing is merely that: descriptive. It offers us little for solving longstanding issues in game theory. Still, I think description is the place to start. And descriptively, people get off the decision-theory train in those two boxes, thanks to built-in machinery and social conformity, or so I have argued thus far.

How to Mug Rich Uncle AGI

An ideal rational agent does not have heuristics built on scope insensitivity. So perhaps an ideal rational agent gets mugged a lot. What to do about this? It does not sound ideal.

Let’s imagine the agent has unlimited resources and computation. Unlimited resources let us ignore the opportunity cost of being mugged, which makes the calculations easier. If the biases are mostly there to prevent us from getting used, then infinite resources make this rational agent indifferent to the resources it loses to potential muggers.

For each proposal, the agent projects twin curves of the probability and payoff of the same proposal repeated n times. As the curve of promised payoffs approaches infinity and the assigned probabilities approach zero, the rational agent would immediately update both values to their limits, infinity and 0, and stop making the deals that get it mugged.
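A minimal sketch of one possible reading of the twin curves. The detection rule (assigned probabilities strictly falling and promised payoffs strictly rising over the last few offers) and the window size are assumptions introduced for illustration; the post does not specify how the agent recognizes the pattern.

```python
from typing import List, Tuple

# Hypothetical sketch: the agent watches repeated versions of the same proposal.
# If probabilities fall and payoffs rise monotonically over a recent window, it
# treats the limits (payoff -> infinity, probability -> 0) as the real picture
# and declines, even when the latest offer's naive EV is positive.

Offer = Tuple[float, float]  # (assigned probability, promised payoff)


def looks_like_mugging(offers: List[Offer], window: int = 3) -> bool:
    recent = offers[-window:]
    if len(recent) < window:
        return False
    probs = [p for p, _ in recent]
    payoffs = [v for _, v in recent]
    probs_falling = all(a > b for a, b in zip(probs, probs[1:]))
    payoffs_rising = all(a < b for a, b in zip(payoffs, payoffs[1:]))
    return probs_falling and payoffs_rising


def accept(offers: List[Offer], cost: float) -> bool:
    """Accept the latest offer (offers must be non-empty) only if it is not
    part of an escalating pattern and its naive EV is positive."""
    if looks_like_mugging(offers):
        return False
    p, payoff = offers[-1]
    return p * payoff - cost > 0


if __name__ == "__main__":
    escalating = [(1e-3, 1e4), (1e-5, 1e7), (1e-8, 1e11)]
    print(accept(escalating, 1.0))   # False, despite a positive naive EV on the last offer
```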

Because the agent has infinite resources, there is nothing irrational about getting mugged a few thousand times. Opportunistic agents, however, would figure out this pattern, and work to keep the probabilities within bounds. Of course, the original rational agent would know this and try to find the equilibria where the negative and positive expectation of deals is a random walk.

A rich agent can afford a lot of risk. It is known that probability theory does poorly with infinities. All of this is to show that Pascal’s Mugging is boring, and not worth preventing, when the agent has infinite resources. It is a victimless crime.

See my wallet? Cobwebs. See my brain? GALACTIC.

Would an agent with unlimited compute and limited resources be any different? Perhaps an agent with unlimited compute but not unlimited resources would have some algorithm that limits Pascalian scenarios.

I don’t see why. On the one hand, if the agent can somehow trade compute for resources, then we are back into the above scenario of an agent with unlimited compute and unlimited resources. On the other hand, if you have unlimited compute what do you need resources for?

We must limit both variables, compute and resources, for the problem of mugging to matter. But once we do that, I am afraid we are no longer dealing with ideal rational agents, but with agents of bounded rationality: agents like us, who, based on their own goals and ways of life, will have to develop their own “heuristics and biases” (or whatever the alien-intelligence equivalent is) to protect their goals from too much mugging and too much drift.

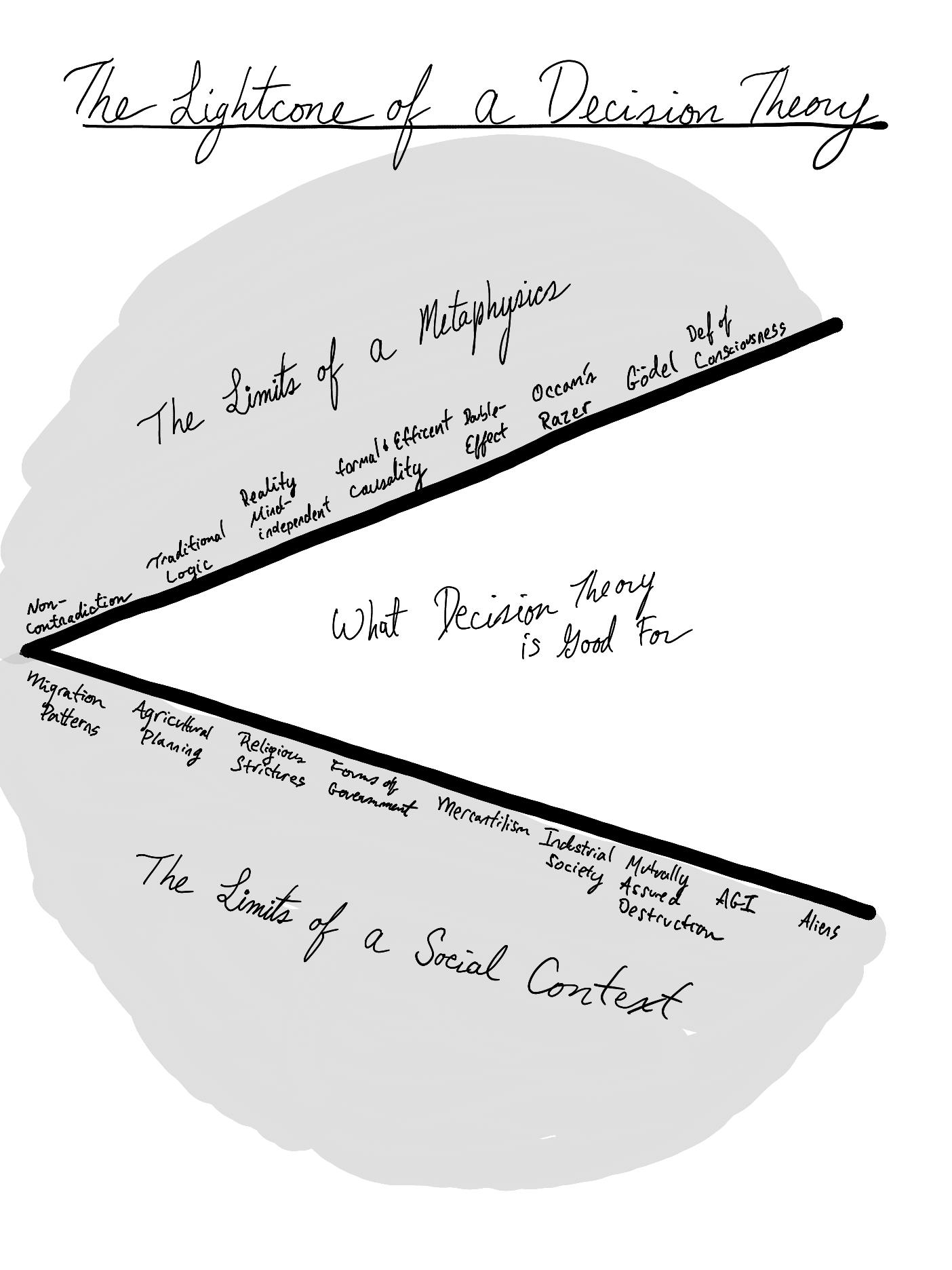

I see it as a lightcone, half bounded by metaphysical priors and half bounded by social context and the abilities of the moment. The more established and well-articulated each of these halves is, the greater the area in which decision theory applies.

Behold the lightcone! It looks like Pac-Man, and like Pac-Man it cannot eat utilons without an upper and lower jaw. The idea I have in mind is that decision theory only applies within a particular metaphysics and within a particular social context. Both must be bounded and assumed. If we don’t assume a single metaphysics, then we wind up with competing types of utilons. The most obvious case is when one metaphysics posits an entity as a possibility that another metaphysics does not: immortal souls vs immortal people, a billion conscious insects vs a single puppy, the wrongness of betrayal vs the rightness of a sacred ritual, or the rights of nations vs the rights of an individual.

But take the St. Petersburg Paradox:

“A fair coin is flipped until it comes up heads. At that point the player wins a prize worth 2^n units of utility on the player’s personal utility scale, where n is the number of times the coin was flipped.”
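The divergence is easy to see numerically. The first head arrives on flip n with probability (1/2)^n and pays 2^n, so every term of the expected-utility sum equals exactly 1, and capping the game at N flips gives an expected utility of exactly N. In this sketch the cap and the sampling helper are illustrative additions, not part of the quoted statement.

```python
import random


def truncated_expected_utility(max_flips: int) -> float:
    """Expected utility if the game were stopped after `max_flips` flips.

    Term n is P(first head on flip n) * prize = (1/2)**n * 2**n = 1,
    so the result equals `max_flips` and grows without bound.
    """
    return sum((0.5 ** n) * (2 ** n) for n in range(1, max_flips + 1))


def play_once(rng: random.Random) -> int:
    """Simulate one game and return the prize 2**n."""
    n = 1
    while rng.random() < 0.5:   # tails: flip again
        n += 1
    return 2 ** n


if __name__ == "__main__":
    for cap in (10, 100, 1000):
        print(cap, truncated_expected_utility(cap))
    rng = random.Random(0)
    print(sum(play_once(rng) for _ in range(10_000)) / 10_000)
```

Sample averages from `play_once` tend to creep upward erratically as more games are played, because rare long runs of tails dominate; no finite number of plays settles on a stable "value" of the game.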

We likely do not have a metaphysics that can handle infinite undifferentiated utility. Infinite utility does not mean anything to humans right now.

Sometimes expected value calculations are like John Stuart Mill’s higher pleasure that no one has experienced. Recall that Mill argues the person who is a good judge of the relative value of pleasures is the person who has experienced both and can speak to the qualitative utility of each. If utility works like this, then positing higher “types” of utility than have yet been experienced is meaningless. The upper jaw of metaphysics cannot grok underdefined utilities.

Meanwhile, the lower jaw of social context, a term which I am here abusing to include everything from biases to social conformity to the capacity of our society to affect the environment, does not work often enough with tiny probabilities to have a sense of what deals are worth taking.

This solution isn’t satisfying. It kind of sucks. It doesn’t relegate Pascal’s mugging to formal decision theory’s wastebasket as I would like to say it does. But I am, at least, proposing something specific: that Pascal’s mugging works by using framing effects to transpose the agent into a different social context, or by proposing new metaphysical assumptions and uncertainties. I am proposing that decision theory cannot incorporate several contradictory metaphysical or social assumptions at the same time. I am proposing that if we pay more attention to these metaphysical and sociological elements of paradoxes, repugnant conclusions, and so on, we can reliably dissolve the issue until it falls within the decision-theory window, and successfully identify when it is in the decision-theory window.

4 comments


comment by Vladimir_Nesov · 2022-08-13T03:21:53.662Z

The Goodhart boundary encloses the situations where the person/agent making the decisions has an accurate proxy utility function and proxy probability distribution (so that the tractable judgements available in practice are close to the normative, actually-correct ones). Goodhart's Curse is the catastrophe where an expected utility maximizer operating under proxy probutility (probability+utility) would by default venture outside the goodhart boundary (into the crash space), set the things that proxy utility overvalues or doesn't care about to extreme values, and thus ruin the outcome from the point of view of the intractable normative utility.

Pascal's Mugging seems like a case of venturing outside the goodhart boundary in the low-proxy-probability direction rather than in the high-proxy-utility direction. But it illustrates the same point, that if all you have are proxy utility/probability, not the actual ones, then pursuing any kind of expected utility maximization is always catastrophic misalignment.

One must instead optimize mildly and work on extending the goodhart boundary, improving robustness of utility/prior proxy to unusual situations (rescuing them under an ontological shift) in a way that keeps it close to their normative/intended content. In case of Pascal's Mugging, that means better prediction of low-probability events (in Bostrom's framing where utility values don't get too ridiculous), or also better understanding of high-utility events (in Yudkowsky's framing with 3^^^^3 lives being at stake), and avoiding situations that call for such decisions until after that understanding is already available.

Incidentally, it seems like the bureaucracies of HCH can be thought of as a step in that direction, with individual bureaucracies capturing novel concepts needed to cope with unusual situations, HCH's "humans" keeping the whole thing grounded (within the original goodhart boundary that humans are robust to), and episode structure arranging such bureaucracies/concepts like words in a sentence.

comment by SebastianG (JohnBuridan) · 2022-08-13T13:56:00.419Z

Thank you for this high quality comment and all the pointers in it. I think these two framings are isomorphic, yes? You have nicely compressed it all into the one paragraph.

comment by Dagon · 2022-08-12T21:48:49.950Z

Pascal's mugging never was very compelling to me (unlike counterfactual weird-causality problems, which are interesting). It seems so trivial to assign a probability of follow-through that scales downward with the offered payoff. If I expect the mugger to have a chance of paying that's less than 1/n, for any n they name, I don't take the bet. If I bothered, I'd probably figure out where the logarithm goes that makes it not quite linear (it starts pretty small, and goes down to a limit of 0, staying well below 1/n at all times), but it's not worth the bother.
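A sketch of the rule this comment describes: the credence that the mugger pays shrinks faster than 1/n in the promised payoff n, so the expected value of any offer stays bounded and tends to zero. The specific form c / (n · ln n) and the constant c = 0.5 are assumptions; the comment only says the credence stays below 1/n with "a logarithm somewhere".

```python
import math

# Assumed form of the comment's rule: credence c / (n * ln n) for a promised payoff n >= 2.
# With c = 0.5 this stays below 1/n, and the EV term c / ln(n) never covers a cost of 1.


def credence_of_payment(n: float, c: float = 0.5) -> float:
    """Assumed credence that a mugger promising n actually pays (n >= 2)."""
    return c / (n * math.log(n))


def offer_ev(n: float, cost: float = 1.0) -> float:
    """Naive EV of paying `cost` for the promise; equals c / ln(n) - cost."""
    return credence_of_payment(n) * n - cost


if __name__ == "__main__":
    for k in (1, 9, 100):
        n = 10.0 ** k
        print(n, credence_of_payment(n) < 1 / n, offer_ev(n))
```

Under this assumption, naming a bigger number only makes the offer worse, so no n the mugger can name gets the bet taken.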

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-08-12T21:34:24.982Z

In a one-off context, Pascal’s Mugging resembles a version of the Prisoner’s Game Dilemma. “Lock in a decision to cooperate that I can observe, and I promise I will also choose cooperate.” Only in this PGD, Vishal’s “cooperate” option punishes him, and he’d have to independently choose it even though he already knows for sure that you’ve picked “cooperate.”

In a one-off context, where we can’t gather further information, as the Pascal’s Mugging frame suggests, there’s a defect/defect equilibrium that I think justifies rejecting Vishal’s offer no matter how much he promises.

We do have to have the sophistication to come up with such a game theoretic framing, and we might also reject Vishal’s offer on the basis of “too weird or implausible, and I don’t understand how to think about it properly” until we come up with a better objection later.
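The one-off game in this comment can be sketched as a sequential game solved by backward induction: I move first and my choice is observed, then Vishal decides whether to pay out. The dollar payoffs, and the assumption that Vishal cares only about money, are illustrative assumptions rather than anything the comment specifies.

```python
from typing import Tuple

STAKE = 1                 # the dollar I hand over
PROMISE = 1_000_000_000   # the billion Vishal promises


def payoffs(i_pay: bool, vishal_pays: bool) -> Tuple[int, int]:
    """Return (my payoff, Vishal's payoff), assuming both care only about dollars."""
    if not i_pay:
        return (0, 0)                              # no deal, nothing changes hands
    if vishal_pays:
        return (PROMISE - STAKE, STAKE - PROMISE)  # Vishal keeps his word
    return (-STAKE, STAKE)                         # Vishal pockets the dollar


def vishal_best_response(i_paid: bool) -> bool:
    """Vishal moves second, after observing my choice."""
    if not i_paid:
        return False
    return payoffs(True, True)[1] > payoffs(True, False)[1]


def my_best_move() -> bool:
    """I move first, anticipating Vishal's best response to each option."""
    value_if_pay = payoffs(True, vishal_best_response(True))[0]
    value_if_not = payoffs(False, vishal_best_response(False))[0]
    return value_if_pay > value_if_not


if __name__ == "__main__":
    print(vishal_best_response(True))  # False: paying out only costs him
    print(my_best_move())              # False: so I keep my dollar
```

Because honoring the deal is strictly worse for Vishal however large the promise, raising `PROMISE` never changes the outcome, which matches the comment's claim that the offer can be rejected "no matter how much he promises".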