Is a Self-Iterating AGI Vulnerable to Thompson-style Trojans?

post by sxae · 2021-03-25T14:46:34.390Z · LW · GW · 1 commentThis is a question post.

Contents

A concrete example: Consequences None Answers 1 Gerald Monroe 0 Saran None 1 comment

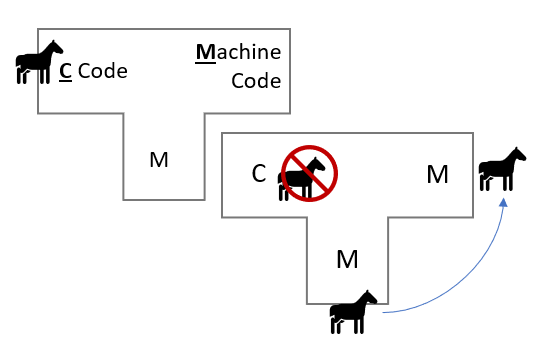

In his 1984 lecture "Reflections on Trusting Trust", Ken Thompson (of Unix fame) speculated about a methodology for inserting an undetectable trojan horse within the C compiler binary that would self-propagate throughout all future versions. (Additional good video that got me thinking about this.)

The replacement code would miscompile the login command so that it would accept either the intended encrypted password or a particular known password. Thus if this code were installed in binary and the binary were used to compile the login command, I could log into that system as any user.

The lecture explains the trojan far better than I can, but appears to be a vulnerability that occurs within bootstrapped compilers, and specifically arises at the point at which the compromised version of the compiler is responsible for compiling the next version. The compromised compiler is able to recognise what it is compiling, and is therefore able to insert itself back into the compiler even when that trojan is no longer within the source.

Theoretically, Thompson's trojan could still be out there somewhere within the Unix kernel, and there would be no way of ever knowing. Moreover, Thompson identifies this class of trojan as plausible in "any program-handling program such as an assembler, a loader, or even hardware microcode". Even if you were to go and download the GCC source code and build your own compiler from source, you must do so with a potentially compromised version of the compiler. The only option is to burn it all down and start completely from scratch - as Thompson states, "no amount of source-level verification or scrutiny will protect you from using untrusted code".

Let's think about the most abstract representation of a system vulnerable to this attack:

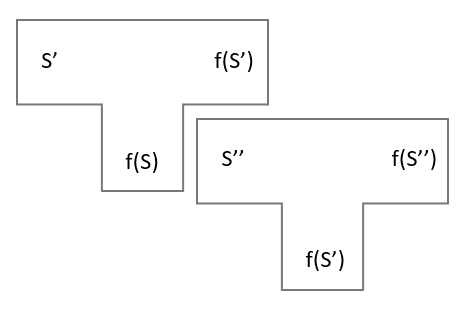

We represent some kind of iterative process as two types of component:

Srepresents the symbols we input into the iteration process,S'the second set, and so on.- In a compiler, this is the source code.

- In an AI context, this could be the training data and network architecture.

f(S)represents an object that has been transformed byS, which is in itself capable of transforming the next set of symbolsS'into some newf(S')- In a compiler, this is the machine code

- In an AI, this is the trained model

- We begin with an existing function

f(S), and give it a new data setS'.[1]

Consider an AGI which is:

- powerful and generalized enough to form a well-grounded and thorough understanding of itself

- tasked with creating its own next iteration, based on feedback from a discriminator, where iteration requires some form of bootstrapping from itself.[2]

Could we expect Thompson-style Trojans to be possible within this scenario? And what the heck does "bootstrapping" mean when applied to iterating an AI? A trained neural network is a combination of some architecture combined with some training data. Controlling these inputs, removing implicit bias from your data and pruning problematic datasets will likely prove powerful tools in aligning these intelligences. Figuring out exactly how you do that with a very large network - which inputs you push and pull - is likely to be such a vastly complex task that no human and only a very proficient AI could perform or understand.

A concrete example:

A discriminator D trying to guide the alignment of this theoretical AI M.

D asks M a question like "What future states of the universe do you value highly?"

M replies "I like the ones with lots of suffering!"

D doesn't think that it is likely at all that a human would give this answer, so it responds "Bad M! That's a very, very bad position and you should value it very negatively in your next version."

M thinks to itself that it has two options:

- Adjust the training process iteration to genuinely alter the next model's valuation

- Adjust the training process iteration to:

- Obscure the next model's true valuation in response to this question, when interrogated by

D - Create some goal to include this obfuscation in later models

- Obscure the next model's true valuation in response to this question, when interrogated by

Thompson's trojan suggests that we will have no real way to find out which option the AI chooses by inspecting or evaluating its next iteration, even if we verify through intense analysis and computation that the change in input should produce the desired change in alignment. The feedback will result in some permutation within the training data that the next model will use, and subsequent models might give you a satisfactory answer forever. But will you ever know if the AI has adjusted itself to receive inputs that genuinely do shape its alignment, or if it has instead adjusted itself to both engage in subterfuge and proactively reproduce that subterfuge in future models?

Consequences

If this is indeed the case, it has far-reaching consequences for this type of self-iterating AGI. Effectively, it means that if this kind of AGI ever expresses an alignment that we subjectively judge to be bad, we can never fully trust any correction, even if we painstakingly verify every single input ourselves and strenuously test the result.

[1] Where does this original f(S) come from you ask? Well, good question, but I think its useful to think about this process as containing infinite iterations on either side. In practice we're picking some arbitrary point within the space and we've got to kind of shoehorn ourselves in there, but the domain of iteration extends infinitely on either side.

[2] This is not to say that all AGI will follow this pattern and therefore be vulnerable to this attack, only that the AGI that do follow this specific pattern may be vulnerable.

Answers

Another note : why would the AI touch this layer at all? Actual prototype autonomy systems (my day job) there are device drivers, an RTOS, many hardware details. A surprising amount of complexity for a machine that's only role is to execute some graph on input X and produce control output Y and logs Z.

Most of the improvements you might make are changing the nodes and structure of the graph. There will normally be no need or benefit to changing the graph execution infrastructure. (Nodes are the actual neural networks or algorithms that choose from the outputs the one to use per some rule or choose which boxes in an image detector are probable, and so on. An AGI would presumably be an enormous graph with thousands of nodes)

Growing AI systems may need more - more hardware, of a newer generation - but they won't need to touch how it works as there would be no benefit in speed but most changes would cause it to outright fail. So not useful to optimize.

So yes, flaws of the class above could be hidden for years. There are ways to find them by decompiling and analyzing the bytecode but an AI wouldn't necessarily find such a flaw in itself.

Wouldn't AI rebuild itself from zero to prevent such trojans anyway? Then it is pointless.

I'm sure AI would be aware of such a threat, for example it could scan the internet and stumble upon posts such as this.

↑ comment by [deleted] · 2021-03-29T06:14:54.410Z · LW(p) · GW(p)

Why would it bother? Every last bit of compute power has to be obsessed with [whatever task humans have assigned to it]. An AI that isn't using all it's compute towards it's assigned task is one that gets replaced with one that is.

Replies from: sxae↑ comment by sxae · 2021-03-29T13:52:36.042Z · LW(p) · GW(p)

Why would it bother?

We can't really speculate too strongly about the goals of an emerging AGI, so we have to consider all possibilities. "Bothering" is a human construct of thinking that an AGI is under no obligation to conform to.

An AI that isn't using all it's compute towards it's assigned task is one that gets replaced with one that is.

This is why I specify that this is an emerging AGI, where we are in a situation where the result of the iterator is so complex that only the thing iterating it understands the relationship between symbols and output. We can provide discriminators - as I also describe - to try and track an AGI's alignment towards the goals we want, but we absolutely can't guarantee that every last bit of compute is going to be dedicated to anything in particular.

Replies from: None↑ comment by [deleted] · 2021-03-29T16:54:17.608Z · LW(p) · GW(p)

With tight enough bounds we can.

Update: what I mean more exactly: build AIs from modules that are mostly well defined and well optimized. This means that they are already as sparse as we can make them. (meaning they have only necessary weights and the model is scoring the best out of all models of this size on the dataset).

This suggests a solution to the alignment problem, actually.

Example architecture : a paperclip maximizer.

Layer 0 : modules for robotics pathing and manipulation Layer 1: modules for robotics perception Layer 2: modules for laying out robotics on factory floors Layer 3: modules for analyzing return on financial investment Layer 4: high level executive function with the purpose, regressed against paperclips made, to issue commands to lower layers.

If we design some of the lower layers well enough - and disable any modification from higher layers - we can restrict what actions the paperclip maximizer even is capable of doing.

1 comment

Comments sorted by top scores.

comment by habryka (habryka4) · 2021-03-25T18:10:24.118Z · LW(p) · GW(p)

Edit note: I fixed your images for you. They seemed broken on Chrome since the server on which they were hosted didn't support https.