How MATS addresses “mass movement building” concerns

post by Ryan Kidd (ryankidd44) · 2023-05-04T00:55:26.913Z · LW · GW · 9 comments

Recently, many AI safety movement-building programs have been criticized for attempting to grow the field too rapidly and thus:

- Producing more aspiring alignment researchers than there are jobs or training pipelines;

- Driving the wheel of AI hype and progress by encouraging talent that ends up furthering capabilities;

- Unnecessarily diluting the field’s epistemics by introducing too many naive or overly deferent viewpoints.

At MATS, we think that these are real and important concerns and support mitigating efforts. Here’s how we address them currently.

Claim 1: There are not enough jobs/funding for all alumni to get hired/otherwise contribute to alignment

How we address this:

- Some of our alumni’s projects are attracting funding and hiring further researchers. Three of our alumni have started alignment teams/organizations that absorb talent (Vivek’s MIRI team, Leap Labs, Apollo Research), and more are planned (e.g., a Paris alignment hub).

- With the elevated interest in AI and alignment, we expect more organizations and funders to enter the ecosystem. We believe it is important to install competent, aligned safety researchers at new organizations early, and our program is positioned to help capture and upskill interested talent.

- Sometimes, it is hard to distinguish truly promising researchers in two months, hence our four-month extension program. We likely provide more benefits through accelerating researchers than can be seen in the immediate hiring of alumni.

- Alumni who return to academia or industry are still a success for the program if they do more alignment-relevant work or acquire skills for later hiring into alignment roles.

Claim 2: Our program gets more people working in AI/ML who would not otherwise be doing so, and this is bad as it furthers capabilities research and AI hype

How we address this:

- Considering that the median MATS scholar is a Ph.D./Master's student in ML, CS, maths, or physics and only 10% are undergrads, we believe most of our scholars would have ended up working in AI/ML regardless of their involvement with the program. In general, mentors select highly technically capable scholars who are already involved in AI/ML; others are outliers.

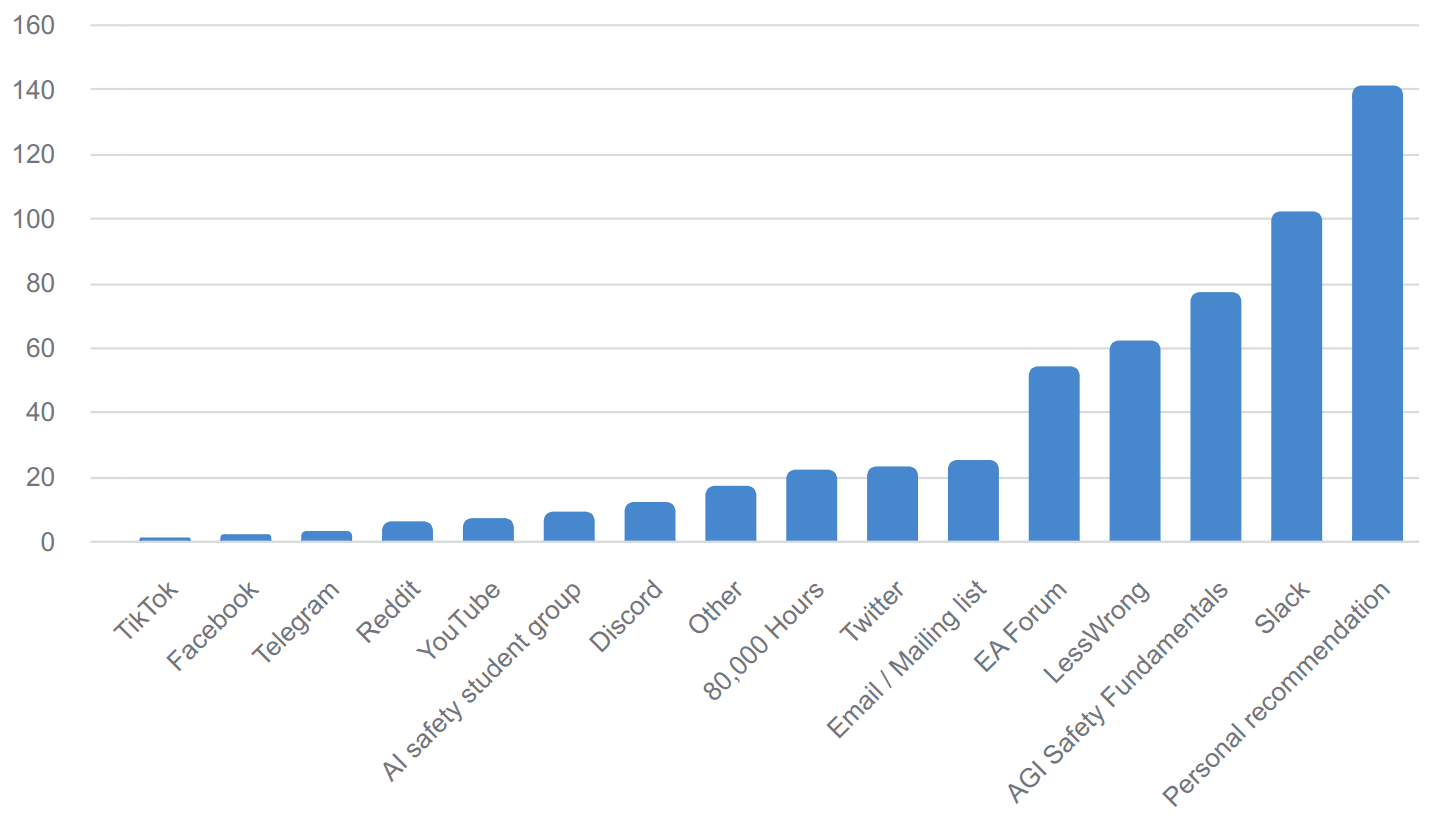

- Our outreach and selection processes are designed to attract applicants who are motivated by reducing global catastrophic risk from AI. We principally advertise via word-of-mouth, AI safety Slack workspaces, AGI Safety Fundamentals and 80,000 Hours job boards, and LessWrong/EA Forum. As seen in the figure below, our scholars generally come from AI safety and EA communities.

MATS Summer 2023 interest form: “How did you hear about us?” (381 responses)

- We additionally make our program less attractive than comparable AI industry programs by introducing barriers to entry. Our grant amounts are significantly less than our median scholar could get from an industry internship (though substantially higher than comparable academic salaries), and the application process requires earnest engagement with complex AI safety questions. We also require scholars to have background knowledge at the level of AGI Safety Fundamentals, which is a further barrier to entry that e.g. MLAB didn't require.

- We think that ~1 more median MATS scholar focused on AI safety is worth 5-10 more median capabilities researchers (because most do pointless stuff like image generation, and there is more low-hanging fruit in safety). Even if we do output 1-5 median capabilities researchers per cohort (which seems very unlikely), we likely produce far more benefit to alignment with the remaining scholars.
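As a rough illustration of the arithmetic in the last point, the sketch below treats the stated 5-10 exchange rate as a simple linear offset. That linear reading is an assumption, not something the post spells out, and the function name is hypothetical.

```python
def offsetting_safety_scholars(capabilities_researchers: float,
                               exchange_rate: float) -> float:
    """Number of safety-focused scholars needed to offset a given number
    of capabilities researchers, assuming (hypothetically) that one safety
    scholar is worth `exchange_rate` capabilities researchers."""
    if exchange_rate <= 0:
        raise ValueError("exchange_rate must be positive")
    return capabilities_researchers / exchange_rate


# Ranges quoted in the post: 1-5 capabilities researchers per cohort,
# and an exchange rate of 5-10.
pessimistic = offsetting_safety_scholars(5, 5)    # most researchers, lowest rate
optimistic = offsetting_safety_scholars(1, 10)    # fewest researchers, highest rate
print(pessimistic, optimistic)
```

Under this reading, even the pessimistic end of the quoted ranges is offset by a single safety-focused scholar per cohort.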

Claim 3: Scholars might defer to their mentors and fail to critically analyze important assumptions, decreasing the average epistemic integrity of the field

How we address this:

- Our scholars are encouraged to “own” their research project and not unnecessarily defer to their mentor or other “experts.” Scholars have far more contact with their peers than mentors, which encourages an atmosphere of examining assumptions and absorbing diverse models from divergent streams. Several scholars have switched mentors during the program when their research interests diverged.

- We require scholars to submit “Scholar Research Plans” one month into the in-person phase of the program, detailing a threat model they are targeting, their research project’s theory of change, and a concrete plan of action (including planned outputs and deadlines).

- We encourage an atmosphere of friendly disagreement, curiosity, and academic rigor at our office, seminars, workshops, and networking events. Including a diverse portfolio of alignment agendas and researchers from a variety of backgrounds allows for earnest disagreement among the cohort. We tell scholars to “download but don’t defer” in regard to their mentors’ models. Our seminar program includes diverse and opposing alignment viewpoints. While scholars generally share an office room with their research stream, we try to split streams up for accommodation and Alignment 201 discussion groups to encourage intermingling between streams.

We appreciate feedback on all of the above! MATS is committed to growing the alignment field in a safe and impactful way, and would generally love feedback on our methods. More posts are incoming!

9 comments

comment by Daniel Paleka · 2023-05-04T09:25:55.592Z · LW(p) · GW(p)

Claim 2: Our program gets more people working in AI/ML who would not otherwise be doing so (...)

This might be unpopular here, but I think each and every measure you take to alleviate this concern is counterproductive. This claim should just be discarded as a thing of the past. May 2020 has ended 6 months ago; everyone knows AI is the best thing to be working on if you want to maximize money or impact or status. For people not motivated by AI risks, you could replace would in that claim with could, without changing the meaning of the sentence.

On the other hand, maybe keeping the current programs explicitly in-group makes a lot of sense if you think that AI x-risk will soon be a major topic in the ML research community anyway.

Replies from: ryankidd44, jskatt

↑ comment by Ryan Kidd (ryankidd44) · 2023-05-05T20:06:53.669Z · LW(p) · GW(p)

I agree with you that AI is generally seen as "the big thing" now, and we are very unlikely to be counterfactual in encouraging AI hype. This was a large factor in our recent decision to advertise the Summer 2023 Cohort via a Twitter post and a shout-out on Rob Miles' YouTube and TikTok channels.

However, because we provide a relatively simple opportunity to gain access to mentorship from scientists at scaling labs, we believe that our program might seem attractive to aspiring AI researchers who are not fundamentally directed toward reducing x-risk. We believe that accepting such individuals as scholars is bad because:

- We might counterfactually accelerate their ability to contribute to AI capabilities;

- They might displace an x-risk-motivated scholar.

Therefore, while we intend to expand our advertising approach to capture more out-of-network applicants, we do not currently plan to reduce the selection pressures for x-risk-motivated scholars.

Another crux here is that I believe the field is in a nascent stage where new funders and the public might be swayed by fundamentally bad "AI safety" projects that make AI systems more commercialisable without reducing x-risk. Empowering founders of such projects is not a goal of MATS. After the field has grown a bit larger while maintaining its focus on reducing x-risk, there will hopefully be less "free energy" for naive AI safety projects, and we can afford to be less choosy with scholars.

↑ comment by JakubK (jskatt) · 2023-05-12T06:14:20.983Z · LW(p) · GW(p)

Does current AI hype cause many people to work on AGI capabilities? Different areas of AI research differ significantly in their contributions to AGI.

Replies from: ryankidd44

↑ comment by Ryan Kidd (ryankidd44) · 2023-05-23T16:38:01.361Z · LW(p) · GW(p)

We agree, which is why we note, "We think that ~1 more median MATS scholar focused on AI safety is worth 5-10 more median capabilities researchers (because most do pointless stuff like image generation, and there is more low-hanging fruit in safety)."

comment by Orpheus16 (akash-wasil) · 2023-05-04T01:31:46.340Z · LW(p) · GW(p)

Glad to see this write-up & excited for more posts.

I think these are three areas that MATS feels like it has handled fairly well. I'd be especially excited to hear more about areas where MATS thinks it's struggling, MATS is uncertain, or where MATS feels like it has a lot of room to grow. Potential candidates include:

- How is MATS going about talent selection and advertising for the next cohort, especially given the recent wave of interest in AI/AI safety?

- How does MATS intend to foster (or recruit) the kinds of qualities that strong researchers often possess?

- How does MATS define "good" alignment research?

Other things I'd be curious about:

- Which work from previous MATS scholars is the MATS team most excited about? What are MATS's biggest wins? Which individuals or research outputs is MATS most proud of?

- Most people's timelines have shortened a lot since MATS was established. Does this substantially reduce the value of MATS (relative to worlds with longer timelines)?

- Does MATS plan to try to attract senior researchers who are becoming interested in AI Safety (e.g., professors, people with 10+ years of experience in industry)? Or will MATS continue to recruit primarily from the (largely younger and less experienced) EA/LW communities?

↑ comment by Ryan Kidd (ryankidd44) · 2023-05-04T18:22:12.545Z · LW(p) · GW(p)

- We broadened our advertising approach for the Summer 2023 Cohort, including a Twitter post and a shout-out on Rob Miles' YouTube and TikTok channels. We expected some lowering of average applicant quality as a result but have yet to see a massive influx of applicants from these sources. We additionally focused more on targeted advertising to AI safety student groups, given their recent growth. We will publish updated applicant statistics after our applications close.

- In addition to applicant selection and curriculum elements, our Scholar Support staff, introduced in the Winter 2022-23 Cohort, supplement the mentorship experience by providing 1-1 research strategy and unblocking support for scholars. This program feature aims to:

  - Supplement and augment mentorship with 1-1 debugging, planning, and unblocking;

  - Allow air-gapping of evaluation and support, improving scholar outcomes by resolving issues they would not take to their mentor;

  - Solve scholars’ problems, giving more time for research.

- Defining "good alignment research" is very complicated and merits a post of its own (or two, if you also include the theories of change that MATS endorses). We are currently developing scholar research ability through curriculum elements focused on breadth, depth, and epistemology (the "T-model of research"):

  - Breadth-first search (literature reviews, building a "toolbox" of knowledge, noticing gaps);

  - Depth-first search (forming testable hypotheses, project-specific skills, executing research, recursing appropriately, using checkpoints);

  - Epistemology (identifying threat models, backchaining to local search, applying builder/breaker methodology, babble and prune, "infinite-compute/time" style problem decompositions, etc.).

- Our Alumni Spotlight includes an incomplete list of projects we highlight. Many more past scholar projects seem promising to us but have yet to meet our criteria for inclusion here. Watch this space.

- Since Summer 2022, MATS has explicitly been trying to parallelize the field of AI safety as much as is prudent, given the available mentorship and scholarly talent. In longer-timeline worlds, more careful serial research seems prudent, as growing the field rapidly is a risk for the reasons outlined in the above article. We believe that MATS' goals have grown more important from the perspective of timelines shortening (though MATS management has not updated on timelines much as they were already fairly short in our estimation).

- MATS would love to support senior research talent interested in transitioning into AI safety! Postdocs generally make up 10% of our scholars, and we would like this number to rise. Our current advertising strategy assumes that the AI safety community adequately targets these populations (an assumption that seems false), so it might change for future cohorts.

comment by carboniferous_umbraculum (Spencer Becker-Kahn) · 2023-05-04T09:55:26.870Z · LW(p) · GW(p)

At the start you write

3. Unnecessarily diluting the field’s epistemics by introducing too many naive or overly deferent viewpoints.

And later Claim 3 is:

Scholars might defer to their mentors and fail to critically analyze important assumptions, decreasing the average epistemic integrity of the field

It seems to me there might be two things being pointed to?

A) Unnecessary dilution: Via too many naive viewpoints;

B) Excessive deference: Perhaps resulting in too few viewpoints or at least no new ones;

And arguably these two things are in tension, in the following sense: I think that to a significant extent, one of the sources of unnecessary dilution is the issue of less experienced people not learning directly from more experienced people and instead relying too heavily on other inexperienced peers to develop their research skills and tastes. i.e. you might say that A) is partly caused by insufficient deference.

I roughly think that the downsides of de-emphasizing deference and the accumulation of factual knowledge from more experienced people are worse than keeping it as sort of the zeroth order/default thing to aim for. It seems to me that to the extent that one believes that the field is making any progress at all, one should think that increasingly there will be experienced people whom less experienced people should expect, at least initially, to learn from and defer to.

Looking at it from the flipside, one of my feelings right now is that we need mentors who don't buy too heavily into this idea that deference is somehow bad; I would love to see more mentors who can and want to actually teach people. (Cf. the first main point, one that I agree with, that Richard Ngo made in his recent piece on advice: the area is mentorship constrained.)

↑ comment by Ryan Kidd (ryankidd44) · 2023-05-05T19:53:41.528Z · LW(p) · GW(p)

Mentorship is critical to MATS. We generally haven't accepted mentorless scholars because we believe that mentors' accumulated knowledge is extremely useful for bootstrapping strong, original researchers.

Let me explain my chain of thought better:

- A first-order failure mode would be "no one downloads experts' models, and we grow a field of naive, overconfident takes." In this scenario, we have maximized exploration at the cost of accumulated knowledge transmission (and probably useful originality, as novices might make the same basic mistakes). We patch this by creating a mechanism by which scholars are selected for their ability to download mentors' models (and encouraged to do so).

- A second-order failure mode would be "everyone downloads and defers to mentors' models, and we grow a field of paradigm-locked, non-critical takes." In this scenario, we have maximized the exploitation of existing paradigms at the cost of epistemic diversity or critical analysis. We patch this by creating mechanisms for scholars to critically examine their assumptions and debate with peers.

comment by JJ Hepburn (jj-hepburn) · 2023-05-05T00:30:08.315Z · LW(p) · GW(p)

Recently, many AI safety movement-building programs have been criticized for attempting to grow the field too rapidly and thus:

Can you link to these?