Conditional offers and low priors: the problem with 1-boxing Newcomb's dilemma

post by Andrew Vlahos (andrew-vlahos) · 2021-06-18T21:50:01.840Z · LW · GW · 4 comments

Suppose I got an email yesterday from someone claiming to be the prince of Nigeria. They say they will give me $10 million, but first they need $1,000 from me to get access to their bank account. Should I pay the money? No, that would be stupid. The prior of being given $10 million that easily is too low.

Newcomb's dilemma wouldn't be much better, even if there were a way to scan a brain for true beliefs and punish lies.

Suppose you win a lawsuit against me, your business rival, and the court orders me to pay you $10,000. When I show up to transfer the money, I bring two boxes: one with $10,000 in it, and an opaque one. I present an offer to the court: "This opaque box contains either a million dollars or nothing. My opponent may take both boxes, or take only the opaque box and waive their right to the other one. The twist is, I put money into the opaque box if and only if I believe that my opponent would decline the $10,000."

Also assume that society can do noetic brain scans like in Wright's The Golden Age, and lying or brain modification to adjust my beliefs would be caught and punished.

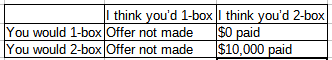

Some people take both boxes and end up with $10,000. Other people take one box and end up with $0!

What happened? Shouldn't rationalists win?

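What the 1-boxers didn't consider is that the chance of anyone volunteering to pay extra money is epsilon. I would only fill the opaque box if I believed my opponent would 1-box, so if I thought my opponent had a >50% chance of 1-boxing, I wouldn't have made the offer at all. The fact that the offer was made is itself evidence that the opaque box is empty.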
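
To make the selection effect concrete, here is a rough sketch of the offerer's side of the decision. Everything in it is an illustrative assumption rather than part of the original scenario's wording: the function names are hypothetical, "believing" the opponent will 1-box is modeled as a probability above 0.5, and the offerer is assumed to care only about minimizing expected payout.

```python
# Hypothetical model of the lawsuit scenario. The names and the 0.5
# belief threshold are illustrative assumptions, not an established API.
AWARD = 10_000      # court-ordered payment, in the first box
PRIZE = 1_000_000   # amount promised in the opaque box


def box_is_filled(p_one_box: float) -> bool:
    """The offerer must fill the box iff they believe the opponent 1-boxes."""
    return p_one_box > 0.5


def offerer_expected_cost(p_one_box: float) -> float:
    """Expected amount the offerer pays if they make the offer."""
    opaque = PRIZE if box_is_filled(p_one_box) else 0
    cost_if_one_box = opaque           # opponent waives the $10,000
    cost_if_two_box = opaque + AWARD   # opponent takes everything
    return p_one_box * cost_if_one_box + (1 - p_one_box) * cost_if_two_box


def offer_is_made(p_one_box: float) -> bool:
    """A money-minimizing offerer only offers if it beats simply paying AWARD."""
    return offerer_expected_cost(p_one_box) < AWARD


def recipient_payoff(one_box: bool, p_one_box: float) -> int:
    """What the recipient walks away with, given the offerer's belief."""
    opaque = PRIZE if box_is_filled(p_one_box) else 0
    return opaque if one_box else opaque + AWARD


if __name__ == "__main__":
    for p in (0.01, 0.3, 0.5, 0.6, 0.99):
        print(f"p={p}: offer made={offer_is_made(p)}, box filled={box_is_filled(p)}")
```

Under these assumptions, there is no belief for which the offer is made and the box is also filled, so anyone who actually receives the offer gets $0 by 1-boxing and $10,000 by 2-boxing.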

1-boxing is better if Omega doesn't care about money. However, if the agent making the offer wants to pay as little money as possible, 2-boxing is the way to go.

4 comments

Comments sorted by top scores.

comment by Dagon · 2021-06-19T16:03:23.112Z · LW(p) · GW(p)

Umm, I think you've missed the spirit of Newcomb's problem. The problem is usually given that you believe (or at least have strong reason to believe) that Omega is honest, and that you will get the large payout (or at least that you assign a high probability to this) for one-boxing.

I don't know anyone who claims that such offers actually happen.

Replies from: Pattern

comment by thomascolthurst · 2021-06-19T15:33:38.013Z · LW(p) · GW(p)

Prior work, in the form of a twitter joke: https://twitter.com/thomascolthurst/status/1032345388605431810