MATS Alumni Impact Analysis

post by utilistrutil, Juan Gil (Zwischnu), yams (william-brewer), LauraVaughan (laura-vaughan), K Richards, Ryan Kidd (ryankidd44) · 2024-09-30T02:35:57.273Z

Summary

This winter, MATS will be running our seventh program. In early-mid 2024, 46% of alumni from our first four programs (Winter 2021-22 to Summer 2023) completed a survey about their career progress since participating in MATS. This report presents key findings from the responses of these 72 alumni.

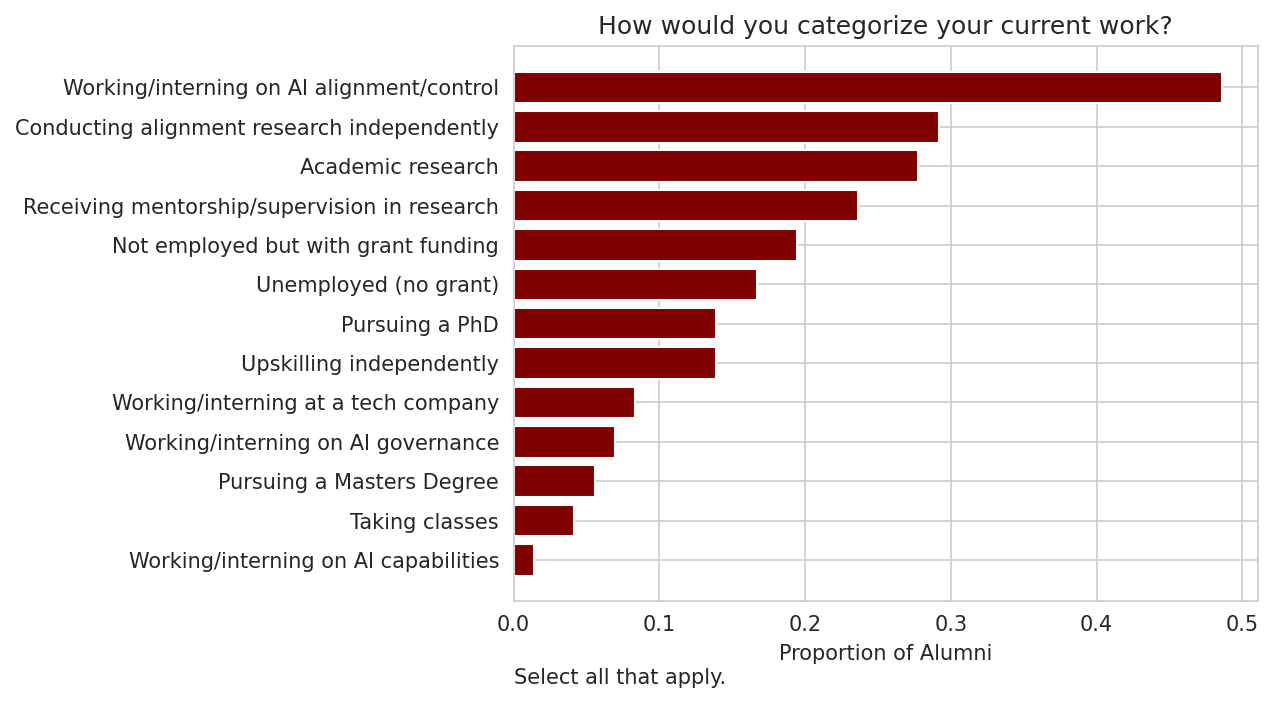

- 78% of respondents described their current work as "Working/interning on AI alignment/control" or "Conducting alignment research independently."

- 49% are "Working/interning on AI alignment/control."

- 29% are "Conducting alignment research independently."

- 1.4% are "Working/interning on AI capabilities."

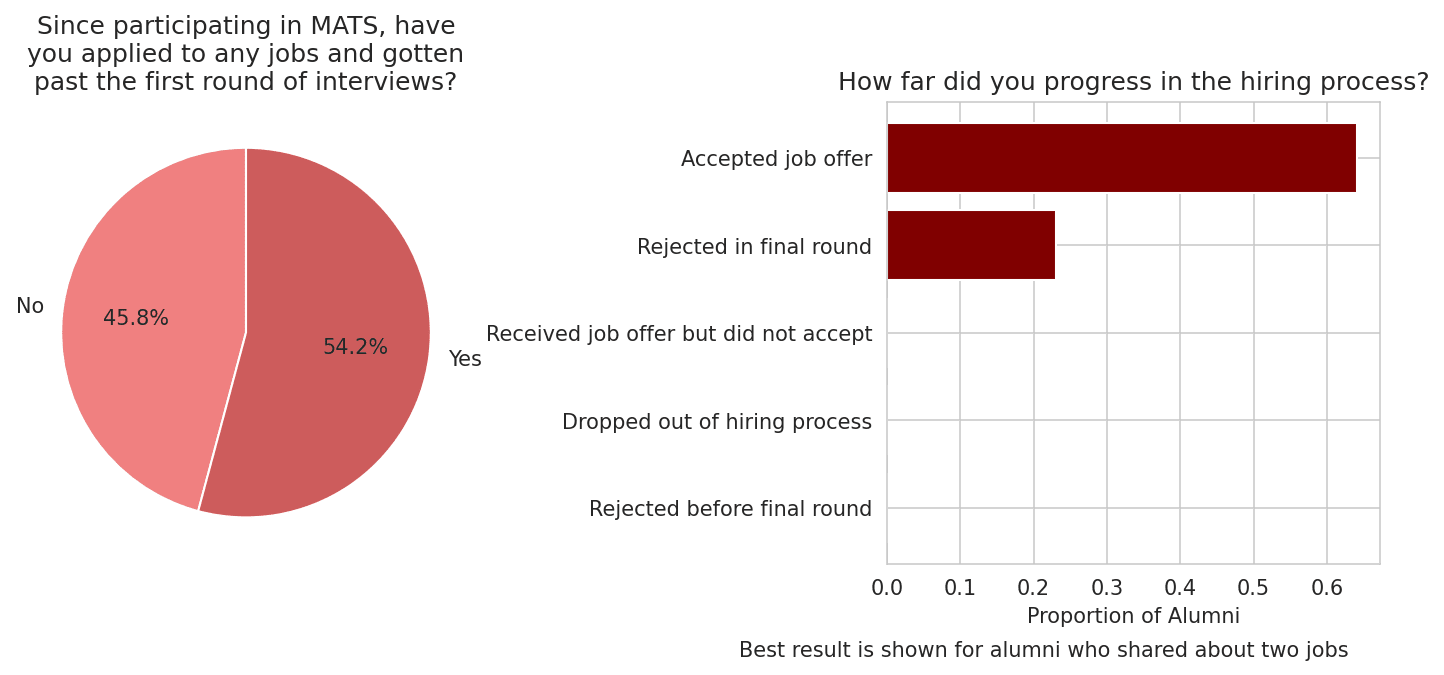

- Since MATS, 54% of respondents applied to a job and advanced past the first round of interviews.

- 64% of those who shared more details accepted a job offer.

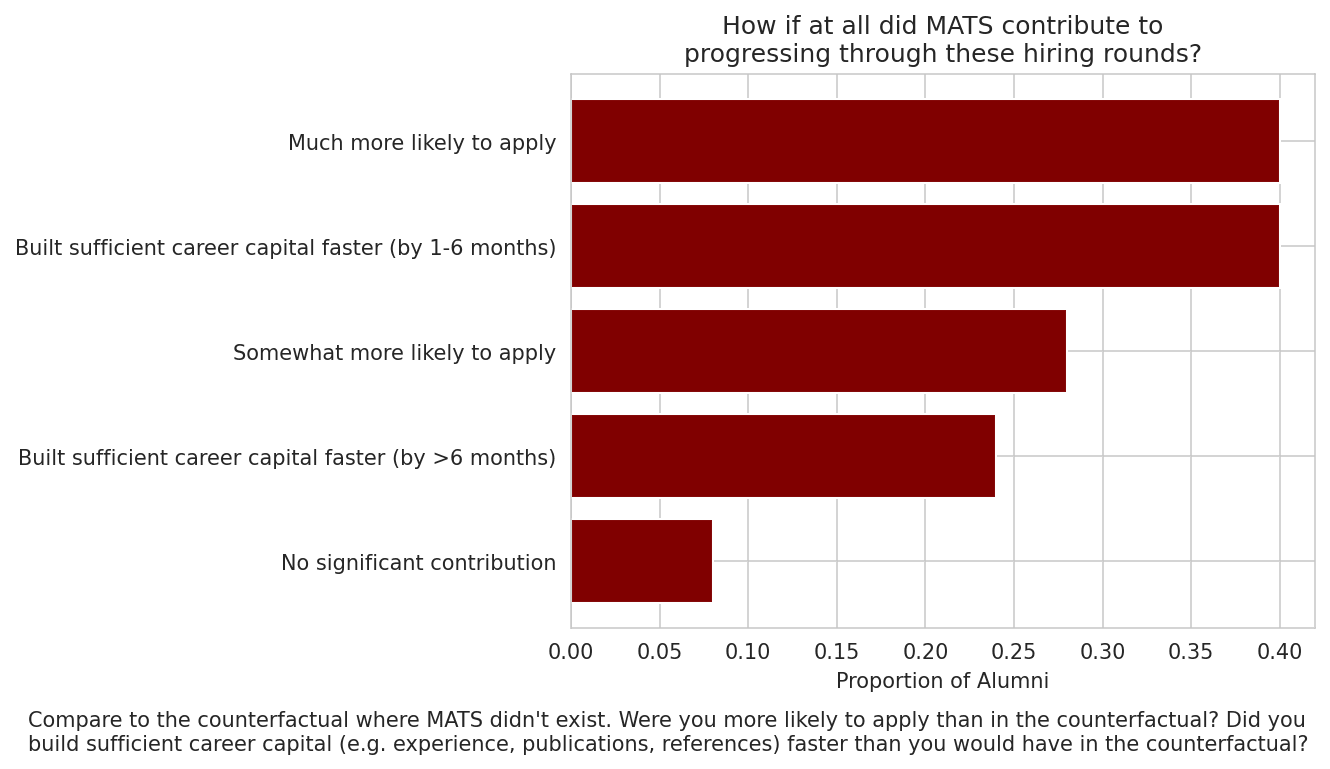

- Alumni reported that MATS made it more likely that they applied to these jobs by helping them build legible career capital and develop research/technical skills.

- During or since MATS, 68% of alumni had published alignment research.

- The most common type of publication was a LessWrong post (45%).

- 78% of respondents said their publication “possibly” or “probably” would not have happened without MATS.

- 10% of alumni reported that MATS accelerated publication by more than 6 months; 14% said 1-6 months.

- 8% of alumni responded that MATS resulted in a “much higher” quality of their publication.

- 63% of scholars met a research collaborator through MATS.

- At this stage in their careers, 46% of alumni would benefit from more connections to research collaborators, and 39% would benefit from job recommendations.

Background on Cohort

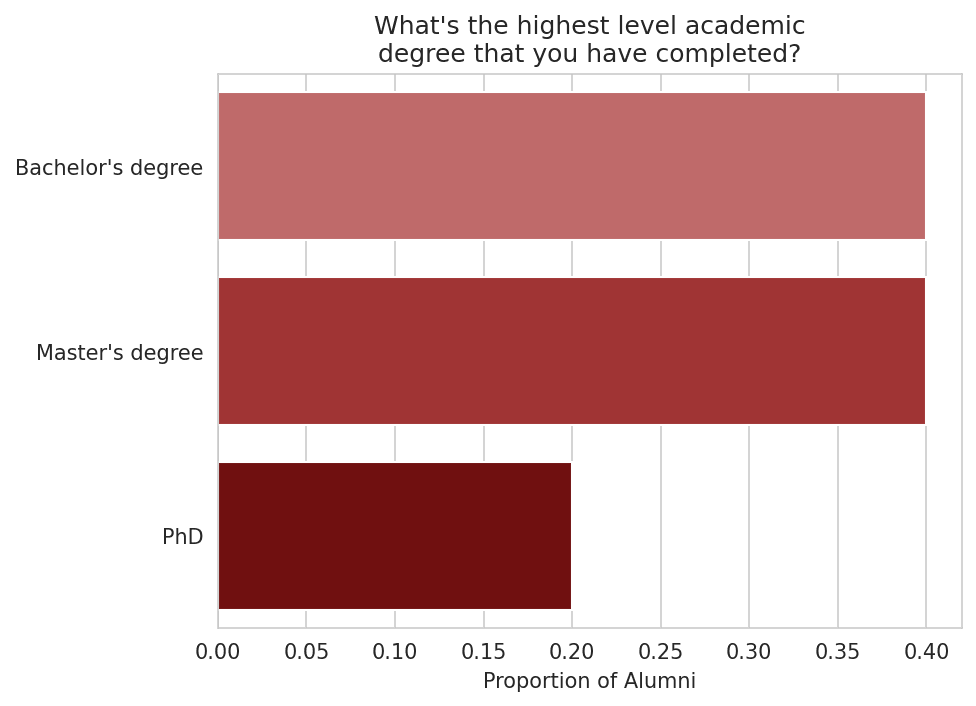

For 40% of respondents, their highest academic degree was a Bachelor’s; 40% had earned at most a Master’s, and 20%, a PhD.

Their most common categories of current work were “Working/interning on AI alignment/control” (49%) and “Conducting alignment research independently” (29%).

Here are some representative descriptions of the work alumni were doing:

- “Going through the first year of grad school at Oxford and continuing research that emerged from my time at MATS.”

- “Working on an interpretability project at AI Safety Camp and just finished the s-risk intro fellowship by CLR a week or two ago.”

- “What could be called "prosaic agent foundations" with the AF team @ MIRI”

- “Co-founding for-profit AI safety company with a product”

- “I'm back at my PhD at Imperial, taking an idea I developed during MATS 3.1 and trying to turn it into a PhD project.”

- “I'm working as a post doc in academia on non-alignment topics. In my spare time, I continue to think about alignment research.”

Three alumni who completed the full MATS program before Winter 2023-24 selected “working/interning on AI capabilities.” They described their current work as:

- “Alignment and pretraining at Anthropic”;

- “AI safety-boosting startups at Entrepreneur First's def/acc incubator”;

- “Pretraining team at Anthropic.”

Erratum: previously, this section listed five alumni currently “working/interning on AI capabilities." This included two alumni who did not complete the program before Winter 2023-24, which is outside the scope of this report. Additionally, two of the three alumni listed above first completed our survey in Sep 2024 and were therefore not included in the data used for plots and statistics. We include them here for full transparency.

Employment Outcomes

We asked alumni about their career experiences since graduating from MATS. 54% of respondents applied to a job and advanced past the first round of interviews.

We asked about a range of possible outcomes from these job application processes. Among those respondents who shared details, the most common outcome for alumni who made it past the first round of interviews was accepting a job offer (64%). Accepted jobs included:

- Research Fellow at MIRI;

- Fellow at the US Senate Commerce Committee;

- Working at the UK AI Safety Institute;

- ARC Theory researcher;

- Research Scientist at Apollo Research;

- Member of Technical Staff at EleutherAI;

- PIBBSS Affiliate;

- Research Engineer at FAR AI;

- Research Scientist at Anthropic.

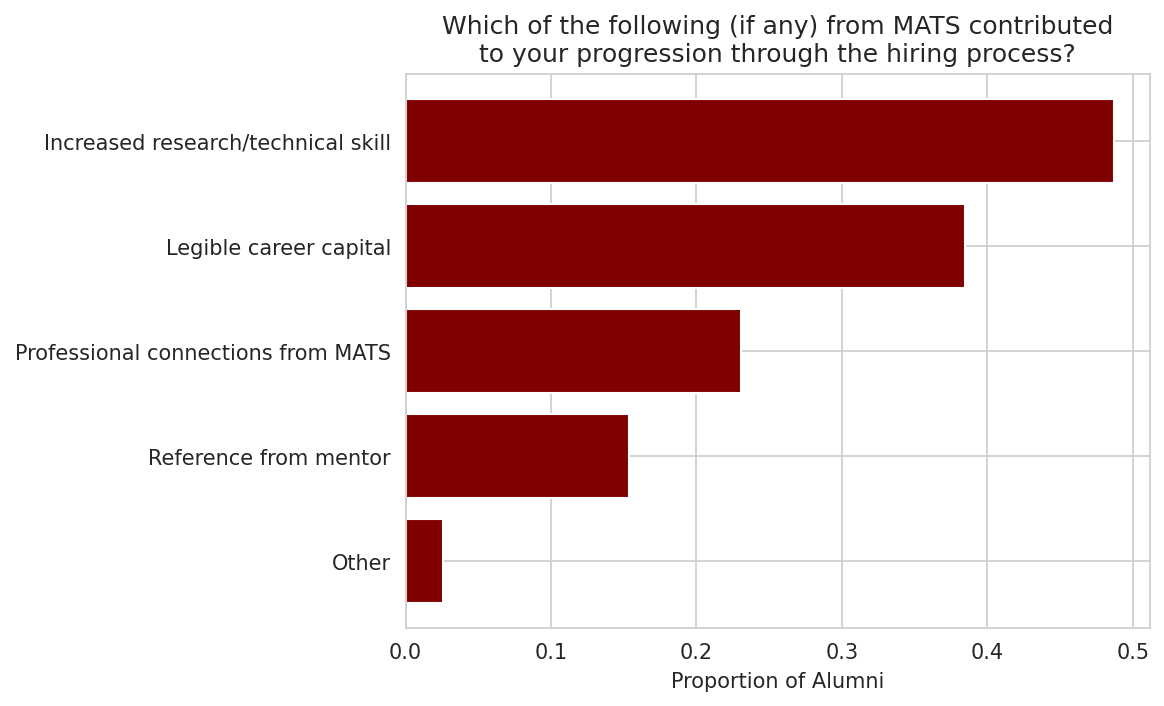

We inquired about whether MATS contributed to these alumni’s progress through the job rounds.

For 8% of alumni, MATS did not contribute to their job progression. For the others, MATS helped them build career capital and made them more likely to apply. We asked more specifically how MATS benefited alumni in these hiring rounds:

For 49% of alumni, MATS increased their research or technical skills, and for 38% of alumni, MATS provided legible career capital.

Publication Outcomes

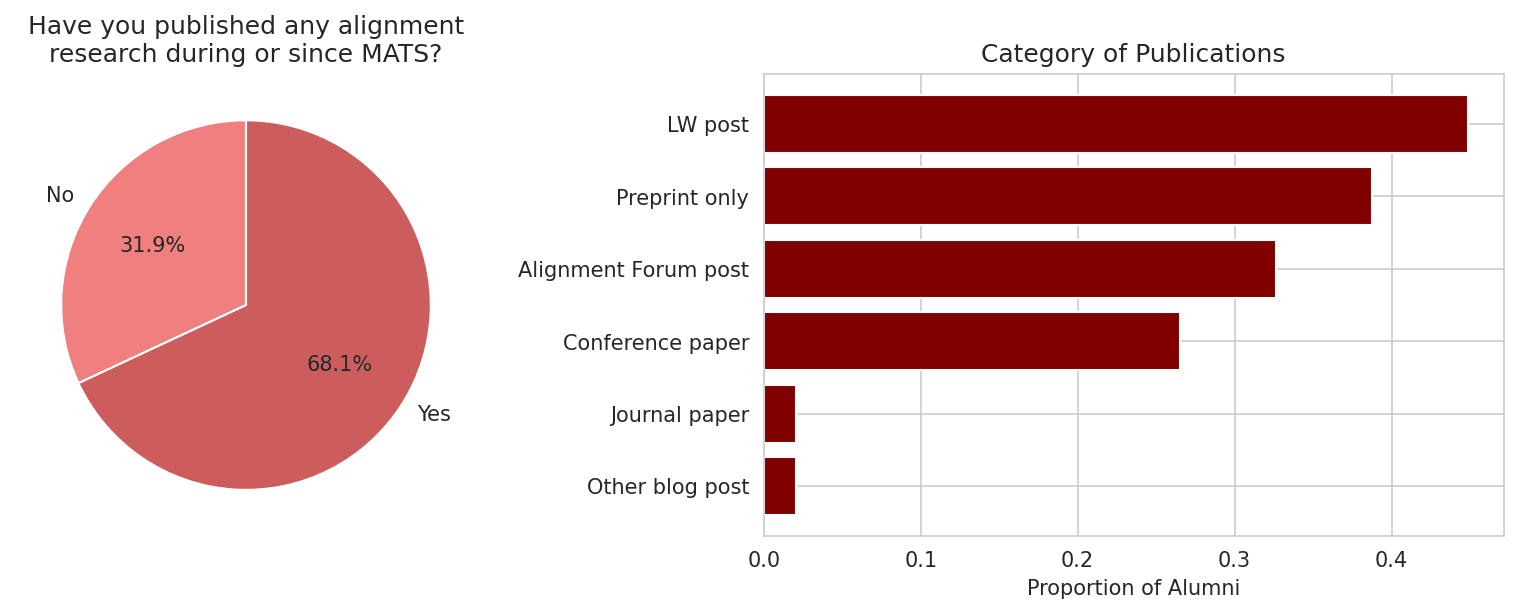

We asked alumni about their publication records. 68% had published alignment research during or since MATS.

The most common category of publication was a LessWrong post (45%). Conference and journal papers included:

- Neural Networks Learn Statistics of Increasing Complexity;

- Copy Suppression: Comprehensively Understanding an Attention Head;

- Inverse Scaling: When Bigger Isn't Better;

- The Reasons That Agents Act: Intention and Instrumental Goals;

- Cooperation and Control in Delegation Games;

- How to Catch an AI Liar: Lie Detection in Black-Box LLMs by Asking Unrelated Questions;

- Incentivizing Honest Performative Predictions with Proper Scoring Rules.

Other publications included:

- Towards a Situational Awareness Benchmark for LLMs;

- Steering Llama 2 via Contrastive Activation Addition;

- Invulnerable Incomplete Preferences: A Formal Statement;

- Representation Engineering: A Top-Down Approach to AI Transparency;

- The WMDP Benchmark: Measuring and Reducing Malicious Use With Unlearning;

- Linear Representations of Sentiment;

- Limitations of Agents Simulated by Predictive Models.

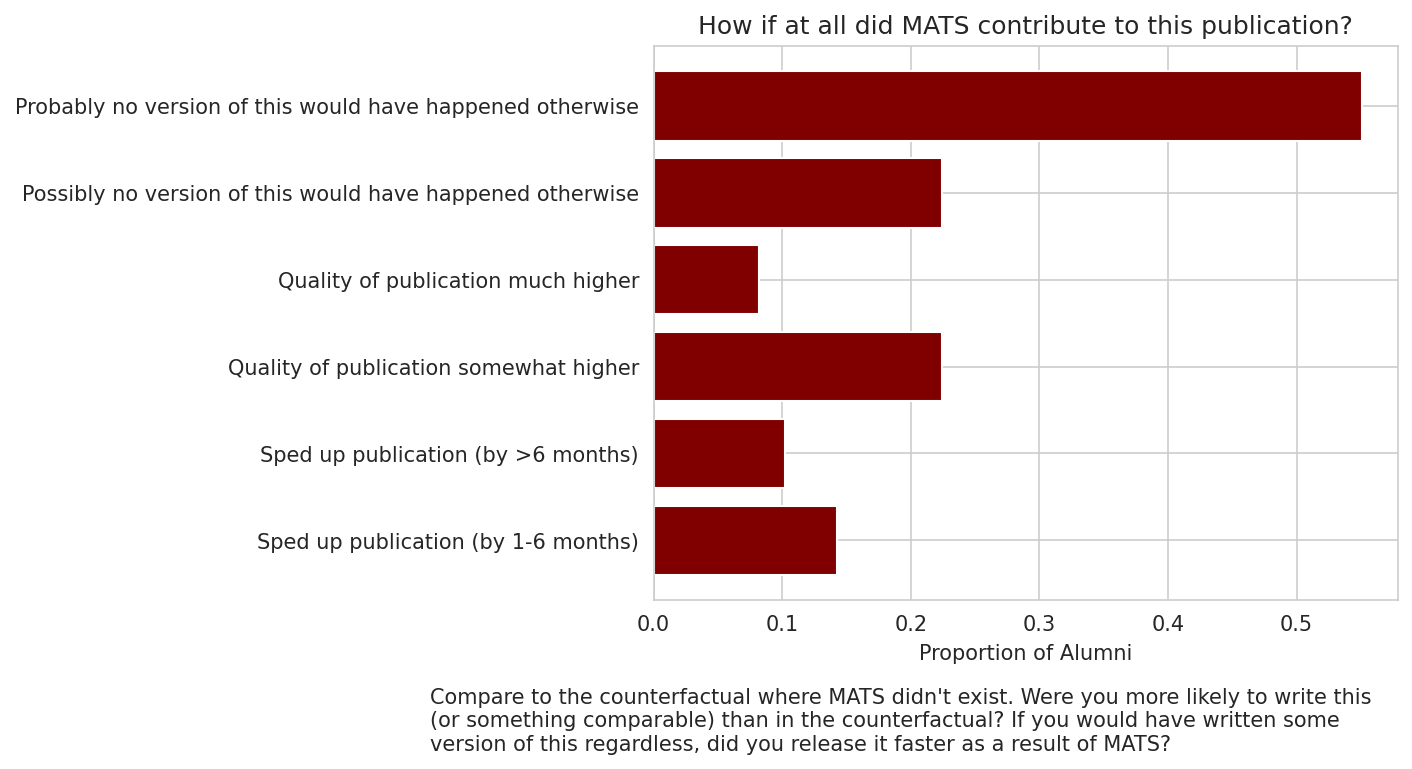

We asked about how MATS contributed to these outcomes.

For 78% of respondents, their publication “possibly” or “probably” would not have happened without MATS. 10% of alumni reported that MATS accelerated publication by more than 6 months; 14% said 1-6 months. 8% of alumni responded that MATS resulted in a “much higher” quality of their publication.

Other Outcomes

We also asked alumni:

Are there other impactful outcomes of MATS that you want to tell us about? This category is intentionally flexible. I expect that most respondents won't submit anything in this category.

Examples of outcomes that might be logged here:

- Founding a new AI alignment organization;

- Volunteering for an AI alignment field-building initiative (resulting in significant counterfactual impact).

Of our respondents, 20% submitted an additional impactful outcome. Three of these 16 respondents included two impactful outcomes. Multiple alumni mentioned starting new research organizations to tackle a specific AI safety research agenda. Here is a selection of responses, along with how MATS influenced each outcome:

- “Apollo Research would counterfactually not exist without MATS”

- Timaeus

  - Outcome: “Founding a research org [Timaeus] based on the above research agenda.”

  - Influence: “Very hard to say. Something like this agenda would have probably come into existence, but we probably accelerated it by more than a year.”

- Cadenza Labs

  - Outcome: “Founding new AI alignment org (Cadenza Labs)”

  - Influence: "Probably no version of this would have happened otherwise."

- PRISM Eval

  - Outcome: “Founding a new AI alignment org!” [PRISM Eval]

  - Influence: "Sped up outcome by >6 months, Quality of outcome much higher, Possibly no version of this would have happened otherwise."

- SLT and alignment conferences

  - Outcome: “Organizing two conferences on singular learning theory and alignment.”

  - Influence: "Probably no version of this would have happened otherwise, Sped up outcome by <6 months, Sped up outcome by >6 months."

- AI safety fieldbuilding

  - Outcome: “Got more people interested in alignment research at my university. Advisor is offering a course on safety next semester.”

  - Influence: "Sped up outcome by >6 months, Possibly no version of this would have happened otherwise, Quality of outcome somewhat higher."

- AI safety community connection

  - Outcome: “It's not very legible, but I think I am much more 'in the alignment world' than I would be if I hadn't done MATS. I know more people, am aware of more opportunities, and I expect being in the community makes me more likely to work hard and aim big life decisions towards AI safety.”

  - Influence: "Quality of outcome somewhat higher, Sped up outcome by >6 months"

Evaluating Program Elements

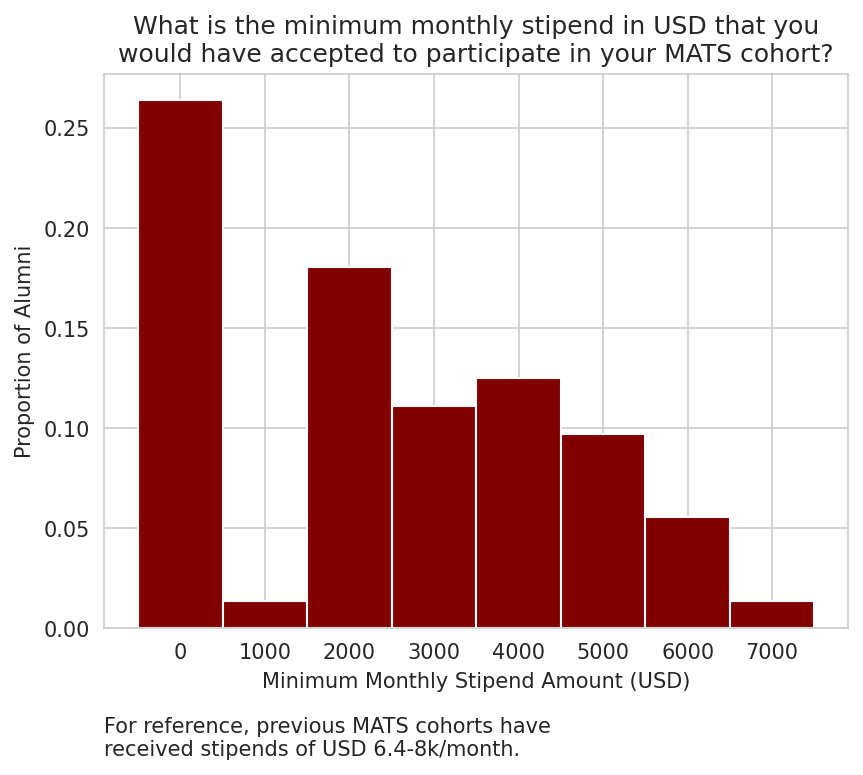

As noted in our Winter 2023-24 Retrospective, our alumni have often reported that they were willing to participate in MATS for a lower stipend than they received, which in Summer 2023 was $4800/month. 30% of alumni would have been willing to participate in MATS for no stipend at all.

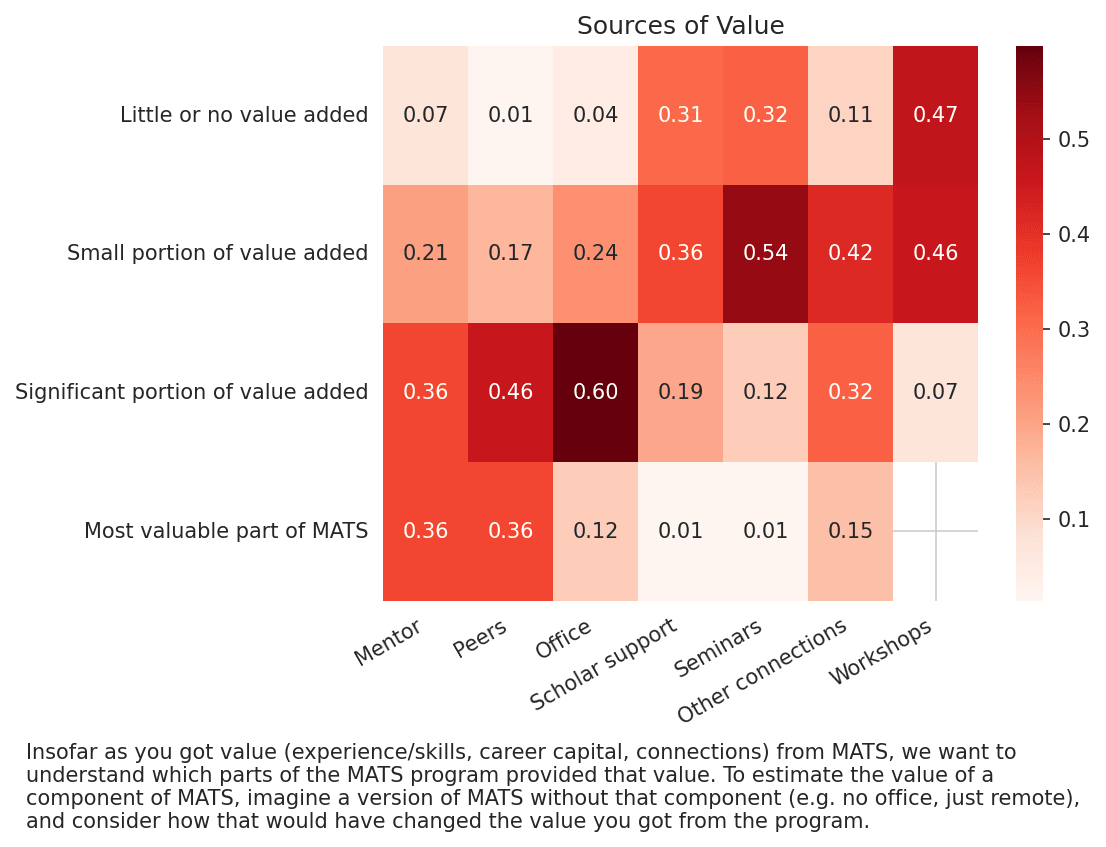

We asked alumni to rate the value they got out of various program elements.

As we observed in the Winter 2023-24 cohort, “mentorship” and “peers” were considered the most valuable program elements. 72% of alumni called mentorship the “most valuable part of MATS” or a “significant portion of value added” and 82% said the same of their MATS peers.

The mentors who provided less value were generally those who had very limited time to spend on their scholars or had less experience mentoring. The mentors who provided the most value had more experience and time to dedicate to their scholars, including multiple safety researchers at scaling labs.

Alumni elaborated on the value MATS provided them:

- “Rapidly internalizing the world-models of experienced alignment researchers was the most valuable part of MATS, imo. That's mostly mentorship, but other contact with experienced people fills the same niche. In particular, that's how I built my current model of the transformer architecture that I use for alignment research.”

- “The biggest value for me was getting a better picture of the alignment field. It's an osmosis process and hard to attribute to specific people. Seminars, peers, talking to some other mentors, talking to people at parties, etc.”

- “I think the “sense of community”, although a bit vague and hard-to-specify, provided a large amount of value to me. It allowed me to develop connections with other scholars and really refine my ideas in ways that I never could’ve if I weren't in Berkeley.”

- “The fact that I could only focus on my work for 2 months straight without having to think about food, logistics or anything like that was amazing.”

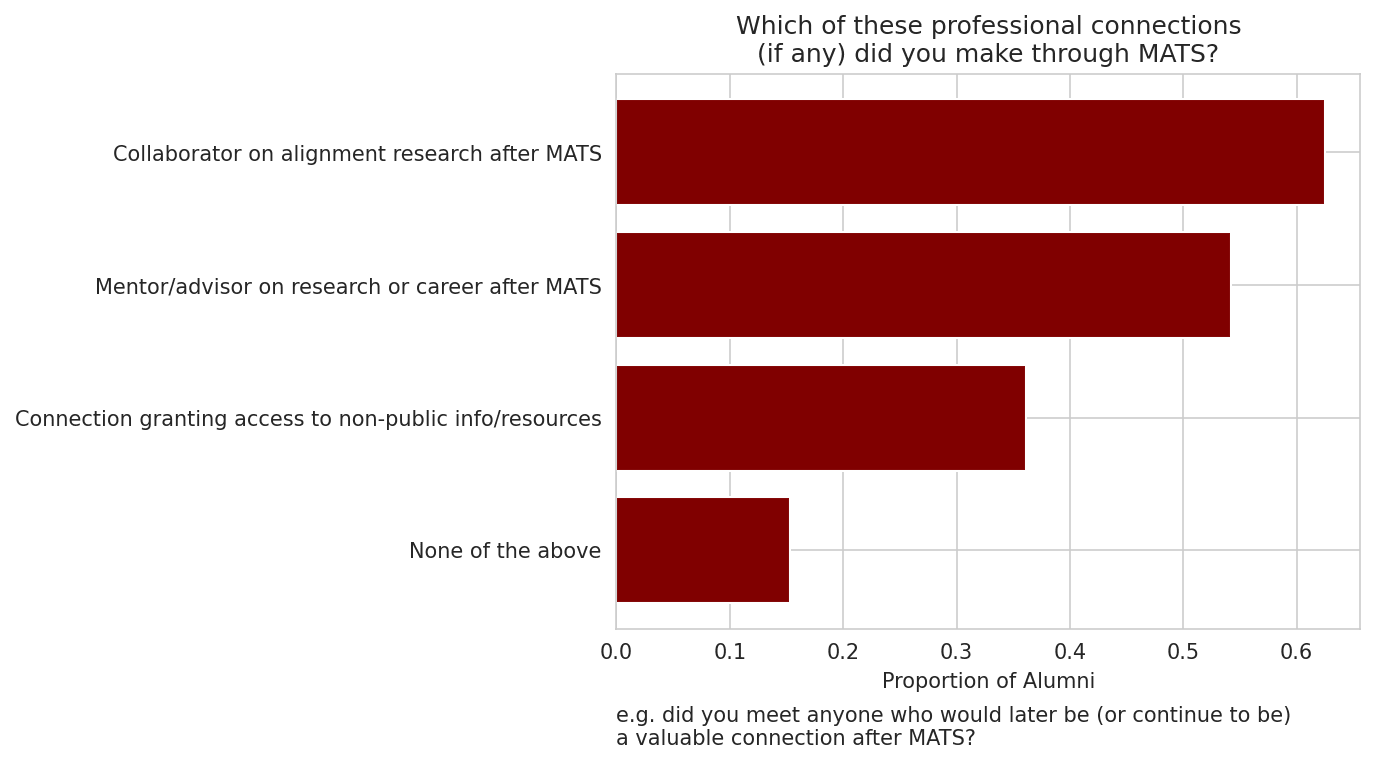

The program element “Other connections” could include collaborators, advisors, and other researchers in the Bay Area AI safety community that scholars met at MATS networking events. We asked alumni what kind of connections they made at MATS.

The most common type of connection was a research collaborator (63%), followed by a mentor/adviser (54%). 15% of alumni reported making no connections in the categories we asked about. Alumni elaborated on the value of these connections:

- “MATS has provided me with my primary in-person connections in my day-to-day alignment research that I actively lean on.”

- “I made connections to many researchers during MATS, which have since asked for things like comments on drafts, or with whom I've discussed future research and work. This has allowed me to stay near the frontier of alignment research and have more well-developed takes on many agendas.”

- “Allowing me to do more impactful research after MATS by providing collaborators and mentors”

- “MATS led me to become a member of the LISA office, which has led to a large number of other connections. Now I have various friends in different orgs and research groups who I get info and other things from.”

- “None of these connections would have been possible without MATS, and without this sense of a competent and caring community, I likely wouldn’t be as committed to building a career in AIS. I’ve learned that being an independent researcher is incredibly grueling and lonesome, and these connections truly have made a world of difference.”

A few alumni offered testimonials about their experiences at MATS:

- “MATS was strangely good at matchmaking me to my mentor, who I've been in continuous productive research dialogue with for almost two years now. Except for a few exceedingly minor details, I don't think I could have imagined better institutional support: it felt like we were allowed to just do the work, with a steady stream of opt-in, no-nonsense support from the MATS team. There's really something here, even if only in allaying insecurity and maladaptive urgency that is common for people getting into this still emerging field, and letting smart and caring minds get down to business.”

- “Besides reading the sequences, MATS was the most significant event of my life. Not just professionally, but socially, intellectually, and from a personal development & philosophy perspective. Not all such events are good, but from my vantage point a year out, this one seems to be extraordinarily positive on all counts except socially. However, this evaluation may change after enough years, as it is said "call no one happy until they are dead".”

- “The skills I built and workshop paper I produced at MATS led directly to me getting a job in AI alignment.”

- “I think MATS is an excellent way to get started with alignment. They have it all: a great office, awesome housing, food, a community of scholars, seminars, mentors... This makes it really easy and fun to spend lots of productive time on doing research on alignment.”

We also asked alumni whether MATS had played a negative role in any of the career outcomes they reported. Specifically, we asked “Did MATS have a negative effect on any of the outcomes (job offers, publications, etc.) you've indicated? e.g. career capital worse than counterfactual, publications worse than counterfactual, etc.” Many alumni simply responded “no” or affirmed the value MATS provided them. Others offered useful criticism:

- “If not for SERI MATS, I would have probably spent more time upskilling in coding. It wouldn't be useful for ARC, but plausibly it would be useful later. On the other hand, if not for SERI MATS, I would have likely spent some time on some agent foundations work later, so it's probably about the same time I would have spent on programming overall.”

- “The intermediate steps of MATS of writing a research proposal and also preparing a 5 min talk took quite a bit of time away from research.”

- “It slightly impacted my relationship with my PhD supervisor as I left for a bit to do stuff completely unrelated to my phd”

- “If not for MATS I likely wouldn't have gone into technical research, as it now seems I will. It's unclear (<70%) if the sign here is positive.”

- “I plausibly would have improved more as an Engineer (i.e. not research) had I stayed at my job as a Machine Learning Engineer as the work I used to work was deeper down the eng stack / had harder technical problems vs the faster paced / higher level work that I did at Mats.”

- “If having done MATS already decreases my chance of doing it again this summer, it might have [the] same negative effect. I think if i could choose between only attending MATS 23 or 24, I would have chosen 24 because now I got more ML skills through ARENA.”

- “The overall MATS experience was slightly negative, making me feel like I didn't have my place in the AIS community (but maybe a realization for the better?), and slowed down my interest and involvement (also not only MATS' fault)”

Career Plans

We asked alumni about their future career plans:

At a high level, what's your career plan for the foreseeable future? You likely have uncertainty, so feel free to indicate your options and considerations. Generally we want to know:

- What kind of work broadly?

- What's your theory of change?

- How are you trading off between immediate impact and building experience?

Don't spend more than a few minutes on this (<5 min).

Many respondents are pursuing academia or considering government and policy work. An interest in mechanistic interpretability was common among those continuing with research. Here is a selection of responses:

- “I want to continue to work on AI policy in the US government. I'm focused mainly on building experience. I think good AI policy could be extremely impactful for making sure AI is developed safely.”

- “I'm also considering moving into AI governance work -- there seems to be a huge lack of technical knowledge/experience in the field and it might be worth trying to address that somehow, although I'm not yet sure what the best way to do so would be.”

- “I'm planning to continue grad school, which includes building experience as well as doing research I think could have rather immediate impact. The type of work involves applications of game and decision theoretic tools to AI safety.”

- “I have about 10 project ideas that I'd like to independently pursue. My plan is to start with the quicker, tractable ones. Especially the ones that interact with other people's work - I don't want to just produce the writeup, get some LW upvotes, and then have that result slipped into oblivion. I want the results to enrich and interact with other efforts.”

- “My plan is to finish my current research project, and then to re-evaluate and decide what direction to take with my career. In particular, I'll decide whether to stick with technical research, or to shift to something else. That something else would probably be something aiming to reduce AI risk in an indirect way. For example, grantmaking, helping with a program like MATS, helping others with research in other ways.”

- “I've been applying to any AI safety positions that work with my constraints for years now and I'm starting to doubt that my counterfactual impact would be good. Working with short term grants is too stressful for me now that I've tried it.”

- “In June, I will join Anthropic as a Member of Technical Staff, to work in Evan Hubinger's team on model organisms. I will pause my PhD for the foreseeable future. Theory of change: Directly contribute to useful alignment work that could inform future safety work and scaling policies (both at Anthropic and broadly, via governance, etc.). In addition, getting more experience with frontier LLM safety research and frontier scaling lab engineering. Also, build career capital, savings, etc.”

- “Figure out how to decompose neural networks into parts. This is supposed to get mech interp to the point where it can get started properly. Strong mech interp is then supposed to enable research on agent foundations to start properly. Research on agent foundations is supposed to enable research on alignment to start properly. I am focusing pretty much completely on research, team building at [my organization], and providing mentorship.”

- “I continue to remain excited about the prospect of scaling interpretability as applied to AI reasoning transparency and control. My theory of change for interpretability revolves around implementing monitoring and calibration of internal reasoning to external behavior, particularly in concert with failure modes like deception and deceptive alignment. If appropriate interpretability lenses are deployed as AI systems continue to scale, multiple failure modes that could lead to loss of control can be mitigated.”

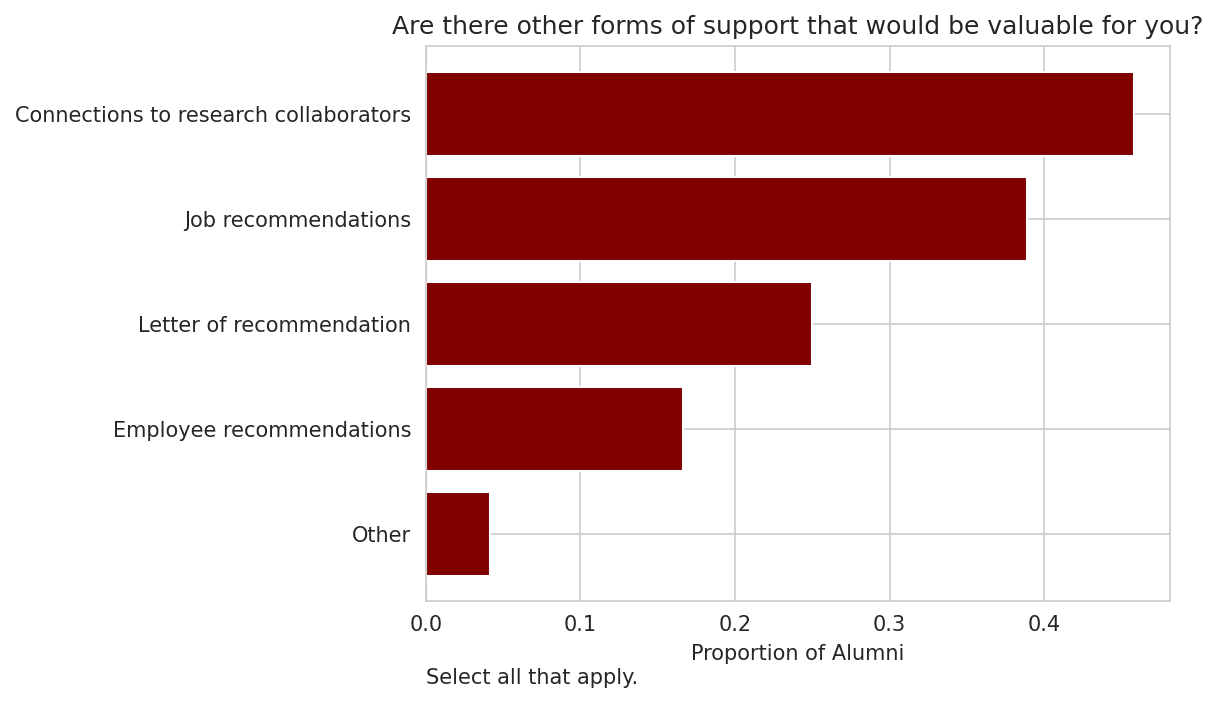

Alumni informed us about the types of support that might be valuable to them at their current career stages.

For 46% of alumni, connections to research collaborators would be valuable, and 39% would benefit from job recommendations. The median alum from Summer 2023 met 6 potential collaborators during the program, but these alumni results indicate that MATS can go further in supporting our scholars with networking opportunities. Likewise, we hosted a career fair during the past four programs, but these results show that MATS can provide further job opportunities to our alumni.

Acknowledgements

This report was produced by the ML Alignment & Theory Scholars Program. @utilistrutil was the primary author of this report, Juan Gil and @yams contributed to editing, Laura Vaughan and Kali Richards contributed to data analysis, and Ryan Kidd scoped, managed, and edited the project. Thanks to our alumni for their time and feedback! We also thank Open Philanthropy, DALHAP Investments, the Survival and Flourishing Fund Speculation Grantors, Craig Falls, Foresight Institute, and several generous donors on Manifund, without whose donations we would be unable to run upcoming programs or retain team members essential to this report.

To learn more about MATS, please visit our website. We are currently accepting donations for our Summer 2025 Program and beyond!

7 comments

comment by gw · 2024-09-30T08:14:19.024Z

Do you have any data on whether outcomes are improving over time? For example, % published / employed / etc 12 months after a given batch

reply by Ryan Kidd (ryankidd44) · 2024-09-30T21:37:58.039Z

Great suggestion! We'll publish this in our next alumni impact evaluation, given that we will have longer-term data (with more scholars) soon.

comment by aysja · 2024-09-30T23:01:56.132Z

Does the category “working/interning on AI alignment/control” include safety roles at labs? I’d be curious to see that statistic separately, i.e., the percentage of MATS scholars who went on to work in any role at labs.

reply by Ryan Kidd (ryankidd44) · 2024-09-30T23:33:17.311Z

Scholars working on safety teams at scaling labs generally selected "working/interning on AI alignment/control"; some of these also selected "working/interning on AI capabilities", as noted. We are independently researching where each alumnus ended up working, as the data is incomplete from this survey (but usually publicly available), and will share separately.

comment by DusanDNesic · 2024-10-02T05:59:53.856Z

Amazing write-up, thank you for the transparency and thorough work of documenting your impact.

comment by Ryan Kidd (ryankidd44) · 2024-10-02T18:09:14.903Z

1% are "Working/interning on AI capabilities."

Erratum: previously, this statistic was "7%", which erroneously included two alumni who did not complete the program before Winter 2023-24, which is outside the scope of this report. Additionally, two of the three alumni from before Winter 2023-24 who selected "working/interning on AI capabilities" first completed our survey in Sep 2024 and were therefore not included in the data used for plots and statistics. If we include those two alumni, this statistic would be 3/74 = 4.1%, but this would be misrepresentative as several other alumni who completed the program before Winter 2023-24 filled in the survey during or after Sep 2024.
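Editorial note: the percentages in this erratum can be reproduced with simple arithmetic. The following is a minimal sketch, not part of the original analysis. The helper name `pct` is hypothetical, and reading the earlier "7%" as 5 of 72 respondents is an assumption based on the five alumni mentioned in the erratum in the main post.

```python
def pct(count: int, total: int) -> float:
    """Return count/total as a percentage, rounded to one decimal place."""
    return round(100 * count / total, 1)


# 72 respondents were included in the plots and statistics; one of them
# selected "working/interning on AI capabilities".
reported = pct(1, 72)

# Adding the two alumni who first completed the survey in Sep 2024 gives
# 3 of 74, the figure quoted in the erratum.
with_late_respondents = pct(3, 74)

# Assumption: the earlier "7%" counted five alumni out of 72 respondents.
previous = pct(5, 72)

print(reported, with_late_respondents, previous)
```

The rounding to one decimal place matches the "1.4%" and "4.1%" figures as quoted. The erratum's caveat still applies: the 3/74 figure adds only two of the late respondents, so it is not a like-for-like comparison.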