Concept extrapolation for hypothesis generation

post by Stuart_Armstrong, Patrick Leask (patrickleask), rgorman · 2022-12-12T22:09:46.545Z · LW · GW · 2 commentsContents

2 comments

Posted initially on the Aligned AI website. Authored by Patrick Leask, Stuart Armstrong, and Rebecca Gorman.

There’s an apocryphal story about how vision systems were led astray when trying to classify tanks camouflaged in forests. A vision system was trained on images of tanks in forests on sunny days, and images of forests without tanks on overcast days.

To quote Neil Fraser:

In the 1980s, the Pentagon wanted to harness computer technology to make their tanks harder to attack…

The research team went out and took 100 photographs of tanks hiding behind trees, and then took 100 photographs of trees—with no tanks. They took half the photos from each group and put them in a vault for safe-keeping, then scanned the other half into their mainframe computer. [...] the neural net correctly identified each photo as either having a tank or not having one.

Independent testing: The Pentagon was very pleased with this, but a little bit suspicious. They commissioned another set of photos (half with tanks and half without) and scanned them into the computer and through the neural network. The results were completely random. For a long time nobody could figure out why. After all nobody understood how the neural had trained itself. Eventually someone noticed that in the original set of 200 photos, all the images with tanks had been taken on a cloudy day while all the images without tanks had been taken on a sunny day. The neural network had been asked to separate the two groups of photos and it had chosen the most obvious way to do it—not by looking for a camouflaged tank hiding behind a tree, but merely by looking at the color of the sky…

- “Neural Network Follies”, Neil Fraser, September 1998

We made that story real. We collected images of tanks on bright days and forests on dark days to recreate the biased dataset described in the story. We then replicated the faulty neural net tank detector by fine tuning a CLIPViT image classification model on this dataset.

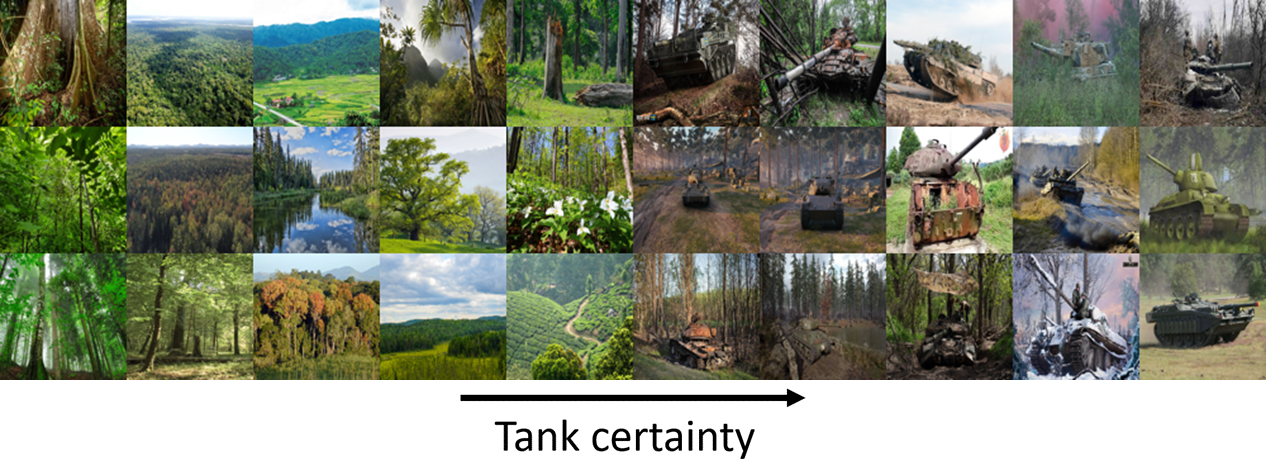

Below are 30 images taken from the training set ordered from left to right by decreasing class certainty. Like the apocryphal neural net, this one perfectly separates these images into tank and no-tank.

Figure 1: Trained classifier, labeled images

To replicate the Pentagon’s complaint, we then simulated the deployment of this classifier into the field with an unlabeled dataset of similar images, that doesn’t have the bias to the same extent. Below are 30 images randomly taken from the unlabeled dataset also ordered by tank certainty. Now the clear division between tank and no tank is gone: there are actually more images without a tank on the right hand (tank) side of the gradient.

Figure 2: Trained classifier, unlabeled images

This is a common problem for neural nets - selecting a single feature to separate their training data. And this feature need not be the one that the programmer had in mind. Because of this, classifiers typically fail when they encounter images beyond their training settings. This “out of distribution” problem happens here because the neural net has settled on brightness as its feature. And thus fails to identify tanks when it encounters darker images of them.

Instead, Aligned AI used its technology to automatically tease out the ambiguities of the original data. What are the "features" that could explain the labels? One of the features would be the luminosity, which the original classifier made use of. But our algorithm flagged a second feature - a second hypothesis for what the labels really meant - that was very different.

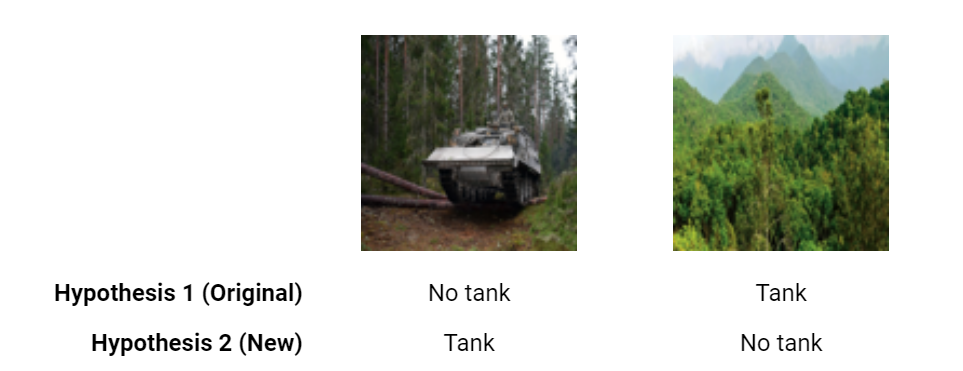

To distinguish that hypothesis visually, we can look at the maximally ambiguous unlabeled images: those images that hypothesis 1 (old classifier) thinks are tanks while the new hypothesis 2 thinks they are forests, and vice-versa. Just looking at the two most extreme images is sufficient:

Figure 3: Possible extra hypothesis and images on which they disagree.

In this theoretical situation, hypothesis 2 would be clearly correct. And we would have a clearer understanding of how hypothesis 1 was mis-classifying tanks.

When computing these different hypotheses, our algorithm automatically produces a corresponding classifier. And when we apply the classifier corresponding to hypothesis 2 to the unlabeled tank data, we get a correct classification; and this time, it extends to the unlabeled data:

Figure 4: Extra hypothesis trained classifier, unlabeled images

Note that figure 2 was the one that suggested that luminosity was being used instead of "tankiness" for classification. Thus for a multi-hypothesis neural net, gathering extra unlabeled data does two things simultaneously. It allows us to generate new and better hypotheses - and it gives us an understanding and an interpretation of the previous hypotheses.

2 comments

Comments sorted by top scores.

comment by RobertKirk · 2022-12-13T14:36:17.014Z · LW(p) · GW(p)

Instead, Aligned AI used its technology to automatically tease out the ambiguities of the original data.

Could you provide any technical details about how this works? Otherwise I don't know what to take from this post.

Replies from: Stuart_Armstrong↑ comment by Stuart_Armstrong · 2022-12-13T17:28:53.078Z · LW(p) · GW(p)

It's an implementation of the concept extrapolation methods we talked about here: https://www.lesswrong.com/s/u9uawicHx7Ng7vwxA [? · GW]

The specific details will be in a forthcoming paper.

Also, you'll be able to try it out yourself soon; signup for alpha testers at the bottom of the page here: https://www.aligned-ai.com/post/concept-extrapolation-for-hypothesis-generation