Has Moore's Law actually slowed down?

post by Matthew Barnett (matthew-barnett) · 2019-08-20T19:18:41.488Z · 6 comments

This is a question post.

Contents

Answers: avturchin (score 3) · 6 comments

Moore's Law is notorious for spawning a bunch of separate observations, all covered under the umbrella of "Moore's law." But as far as I can tell, the real Moore's law is a fairly narrow prediction: that the number of transistors on a CPU chip doubles approximately every two years.
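Read literally, that narrow prediction says the count grows as N(t) = N0 · 2^((t − t0)/T), with a doubling period T of about 2 years. A minimal Python sketch of the projection; the starting year and count in the usage line are hypothetical placeholders, not real chip data.

```python
def projected_transistors(n0: float, t0: float, t: float, doubling_years: float = 2.0) -> float:
    """Project a transistor count under a fixed doubling period.

    n0: count in the reference year t0 (hypothetical input)
    t: year to project to
    doubling_years: assumed doubling period (2 years in the narrow reading)
    """
    return n0 * 2 ** ((t - t0) / doubling_years)


# Hypothetical example: a chip with 1 billion transistors, projected 10 years ahead.
print(f"{projected_transistors(1e9, 2010, 2020):.3g}")  # 3.2e+10
```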

Many people have told me that Moore's law has slowed down in recent years. Some have even told me they think it has stopped entirely. For example, OpenAI's "AI and Compute" article uses the past tense when talking about Moore's Law: "by comparison, Moore’s Law had an 18 month doubling period" (note that Wikipedia says, "The period is often quoted as 18 months because of a prediction by Intel executive David House").
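The gap between the 18-month and two-year figures compounds quickly. A small sketch of the growth factor each doubling period implies over a decade (plain arithmetic, no chip data involved):

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Total growth over `years` for a given doubling period in months."""
    return 2 ** (years * 12 / doubling_months)


for months in (18, 24):
    print(f"{months}-month doubling: x{growth_factor(10, months):.0f} per decade")
# 18-month doubling: x102 per decade
# 24-month doubling: x32 per decade
```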

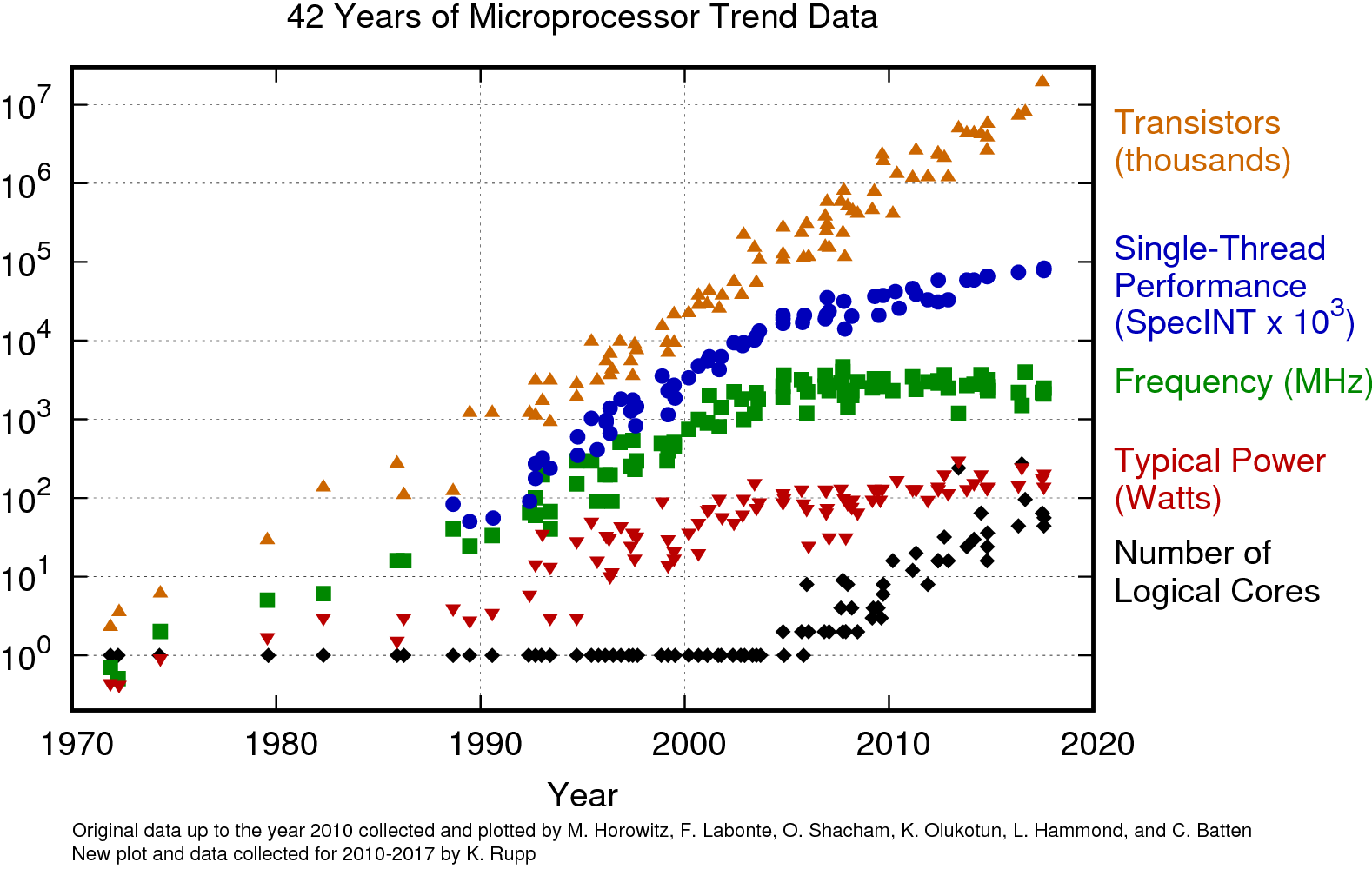

Yet when I google Moore's law, I find this chart from Karl Rupp's website,

To me this looks very stable. Even though single-thread performance is no longer improving by much, I can't see how it's justified to put Moore's law in the past tense just yet. Am I missing something?
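Whether a chart like Karl Rupp's really "looks stable" can be checked by fitting a straight line to log2(transistor count) against year; the inverse of the slope is the doubling time. A minimal sketch using only the standard library. The data points are synthetic (generated with an exact two-year doubling from a hypothetical starting count), not values read off the chart.

```python
import math


def doubling_time(years: list[float], counts: list[float]) -> float:
    """Least-squares fit of log2(count) vs year; returns years per doubling."""
    logs = [math.log2(c) for c in counts]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    var = sum((x - mean_x) ** 2 for x in years)
    slope = cov / var  # doublings per year
    return 1 / slope


# Synthetic data: exact doubling every 2 years from a hypothetical 1e6 start.
years = [1990, 1994, 1998, 2002, 2006]
counts = [1e6 * 2 ** ((y - 1990) / 2) for y in years]
print(round(doubling_time(years, counts), 3))  # 2.0
```

A fitted doubling time that drifts upward when you restrict the fit to recent years would be the signature of a slowdown.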

Answers

answer by avturchin

There are two interesting developments this year.

First, very large wafer-scale chips with 1.2 trillion transistors, well above the trend.

Second, "chiplets": small silicon chips that are manufactured independently but then stacked on each other for higher connectivity.
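One way to make "well above trend" concrete is to count how many two-year doublings separate the 1.2-trillion-transistor wafer-scale chip from a conventional chip of the same year. The baseline of 20 billion transistors below is an assumed round figure for a large conventional chip, chosen for illustration only, not a measured value.

```python
import math


def years_ahead(count: float, baseline: float, doubling_years: float = 2.0) -> float:
    """Years of trend growth needed to go from `baseline` to `count`."""
    return math.log2(count / baseline) * doubling_years


wafer_chip = 1.2e12  # from the answer above
baseline = 20e9      # hypothetical conventional chip, for illustration only
print(f"{years_ahead(wafer_chip, baseline):.1f} years ahead")  # 11.8 years ahead
```

Because that lead comes from using a whole wafer rather than from denser transistors, this compares count per chip, not density.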

6 comments


comment by Jesska (jesska) · 2019-08-22T03:57:13.591Z

Stagnant consumer CPU price/performance, along with GPU price/performance standing still from 2016 to 2019, is probably what contributed most to the public perception that Moore's law is dead.

In the 1990s and early 2000s, after about 8 years you could get a CPU roughly 50 times faster for nearly the same money.

But from 2009 to 2018 we got maybe a 50% to 80% performance boost, give or take, for the same price. Now, with 3rd-generation Ryzen, everything is changing, so after 10 disappointing years the road ahead looks interesting.
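The two eras in this comment imply very different doubling times. Converting "a factor F over Y years" into years per doubling is just Y / log2(F); the sketch below plugs in the commenter's rough figures (50x over about 8 years, and 1.5x to 1.8x from 2009 to 2018, taken here as 9 years).

```python
import math


def doubling_years(factor: float, years: float) -> float:
    """Years per doubling implied by a total improvement `factor` over `years`."""
    return years / math.log2(factor)


print(f"1990s-2000s: {doubling_years(50, 8):.1f} years per doubling")
print(f"2009-2018:   {doubling_years(1.8, 9):.1f} to {doubling_years(1.5, 9):.1f} years per doubling")
# 1990s-2000s: 1.4 years per doubling
# 2009-2018:   10.6 to 15.4 years per doubling
```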

comment by David Ferris (david-ferris) · 2019-08-21T01:38:17.789Z

I think when people discuss Moore's Law slowing, they usually mean transistor density on a 2D chip rather than transistor count.

The radius of a silicon atom is ~0.2nm, and transistors are currently at the 5nm scale commercially. Since transistors (with current designs) need to be made of at least one silicon atom, there are only about log2(5/0.2) ≈ 4.6 possible 1D halvings, or about 9 2D halvings (halvings of area, since each 1D halving quarters the area), left before we hit the floor of physical possibility. That's ignoring quantum effects, which make it difficult to achieve reasonable commercial yields; that is the issue companies are struggling with.
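The count of remaining halvings is easy to recompute. A sketch that takes the comment's own assumptions (a 5 nm feature and a 0.2 nm floor) at face value: each halving of linear size quarters the area, so the number of area halvings is twice the number of linear ones.

```python
import math

feature_nm = 5.0  # commenter's assumed current feature size
floor_nm = 0.2    # commenter's assumed floor (roughly one atom)

linear_halvings = math.log2(feature_nm / floor_nm)
area_halvings = 2 * linear_halvings  # halving both dimensions halves area twice

print(f"linear halvings left: {linear_halvings:.2f}")  # 4.64
print(f"area halvings left:   {area_halvings:.2f}")    # 9.29
```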

↑ comment by dvasya · 2019-08-21T05:14:19.091Z

The "5nm" in "5nm scale" no longer means that things are literally 5nm in size. Rather, it has become a fancy way of saying something like "200x the linear transistor density of an old 1-micron scale chip". The gates are still larger than 5nm; it's just that things are now being put on their side to make more room (https://en.wikipedia.org/wiki/FinFET). Some chip metrics are certainly slowing down, but Moore's law (referring to the number of transistors per chip and nothing else) still isn't one of them, despite claims of impending doom due to "quantum effects" dating back (IIRC) to the eighties.
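The ratio in this comment can be worked through directly. A sketch assuming, as the comment describes, that a node name scales inversely with linear transistor density (node names are only loosely tied to physical dimensions, so this is an approximation):

```python
import math

old_node_nm = 1000.0  # "1-micron scale" chip
new_node_nm = 5.0     # "5nm scale" chip

linear_ratio = old_node_nm / new_node_nm  # 200x more transistors per unit length
areal_ratio = linear_ratio ** 2           # 40,000x more per unit area
print(linear_ratio, areal_ratio, round(math.log2(areal_ratio), 1))
# 200.0 40000.0 15.3
```

About 15 doublings of areal density would take roughly 30 years at a two-year doubling period.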

comment by Ilverin the Stupid and Offensive (Ilverin) · 2019-08-21T19:45:03.663Z

How slow does it have to get before a quantitative slowing becomes a qualitative difference? AI Impacts (https://aiimpacts.org/price-performance-moores-law-seems-slow/) estimates that price/performance used to improve by an order of magnitude (a factor of 10) every 4 years, but that it now takes 12 years.
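To see why the quantitative slowdown might feel qualitative, compare what each rate delivers in a decade. A sketch using the AI Impacts figures quoted above (10x every 4 years versus 10x every 12 years):

```python
import math


def per_decade(years_per_10x: float) -> float:
    """Improvement over 10 years when price/performance grows 10x every `years_per_10x` years."""
    return 10 ** (10 / years_per_10x)


for period in (4, 12):
    doubling = period / math.log2(10)  # years per doubling
    print(f"10x every {period} years: x{per_decade(period):.0f} per decade, "
          f"doubling every {doubling:.1f} years")
# 10x every 4 years: x316 per decade, doubling every 1.2 years
# 10x every 12 years: x7 per decade, doubling every 3.6 years
```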

comment by guentherium · 2019-09-02T06:19:05.863Z

Doesn't observing any turbulence in the statistic already contradict the specific understanding (which is what? physical?) of the term "law"? Or even make it obsolete? The next question, though, could be: how many technical changes in chip construction, integration, and assembly will we still count as an updated version of Moore's law? James Bridle writes in „New Dark Age“:

„[…] But Moore’s law, despite the name by which it came to be known (one which Moore himself wouldn’t use for two decades), is not a law. Rather, it’s a projection – in both senses of the word. It’s an extrapolation from the data but also a phantasm created by the restricted dimensionality of our imagination. It’s a confusion in the same manner as the cognitive bias that feeds our preference for heroic histories, but in the opposite direction. Where one bias leads us to see the inevitable march of progress through historical events to our present moment, the other sees this progress continuing inevitably into the future. And, as such projections do, it has the capability both to shape that future and to influence, in fundamental ways, other projections – regardless of the stability of its original premise.

What began as an off-the-cuff observation became a leitmotif of the long twentieth century, attaining the aura of a physical law. But unlike physical laws, Moore’s law is deeply contingent: it is dependent not merely on manufacturing techniques but on discoveries in the physical sciences, and on the economic and social systems that sustain investment in, and markets for, its products. It is also dependent upon the desires of its consumers, who have come to prize the shiny things that become smaller and faster every year. Moore’s law is not merely technical or economic; it is libidinal. […]“

↑ comment by S RC (s-rc) · 2024-11-15T19:42:16.366Z

Gave an upvote because you deserve it.

To keep it short, since brevity is the soul of wit: check the macro trend in TOP500 supercomputer speeds. You will see the Moore's Law update: it is logarithmic, not linear or exponential. That applies to classical computing, of course, not to biological, optical, or quantum computing.
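Whether a series such as TOP500 performance is exponential or logarithmic can be checked from its growth ratio over equal time intervals: an exponential keeps a constant ratio (a straight line on a log plot), while logarithmic growth has ratios that shrink toward 1. A minimal sketch with synthetic series, not TOP500 data:

```python
import math


def growth_ratios(values: list[float]) -> list[float]:
    """Ratio between consecutive samples taken at equal time intervals."""
    return [b / a for a, b in zip(values, values[1:])]


exponential = [2 ** t for t in range(1, 6)]           # doubles each step
logarithmic = [math.log(t + 1) for t in range(1, 6)]  # slows down each step

print([round(r, 2) for r in growth_ratios(exponential)])  # [2.0, 2.0, 2.0, 2.0]
print([round(r, 2) for r in growth_ratios(logarithmic)])  # [1.58, 1.26, 1.16, 1.11]
```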