What are the known difficulties with this alignment approach?

post by tailcalled · 2024-02-11T22:52:18.900Z · LW · GW · No comments

This is a question post.

Contents

Answers: 5 Adele Lopez · 3 Charlie Steiner · 2 Donald Hobson · 2 Jeremy Gillen

Assume you have a world-model that is nicely factored into spatially localized variables that contain interesting-to-you concepts. (Yes, that's a big assumption, but are there any known difficulties with the proposal if we grant this assumption?)

Pick some Markov blanket (which contains some actuators) as the bounds for your AI intervention.

Represent your goals as a causal graph (or computer program, or whatever) that fits within these bounds. For instance if you want a fusion power plant, represent it as something that takes in water and produces helium and electricity.

Perform a Pearlian counterfactual surgery where you cut out the variables within the Markov blanket and replace them with a program representing your high-level goal, and then optimize the action variables to match the behavior of the counterfactual graph.
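The steps above can be sketched in a few lines, under heavy assumptions. In this toy, the world model is reduced to one hypothetical mechanism, `actual_interior`, for the region inside the Markov blanket; `goal_program` is the program spliced in by the counterfactual surgery; and `best_action` picks the actuator setting whose boundary behaviour best matches the counterfactual graph. Every name, coefficient, and the squared-error loss is an illustrative assumption, not part of the question.

```python
from typing import Dict, Iterable

Boundary = Dict[str, float]


def actual_interior(water: float, action: float) -> Boundary:
    """Hypothetical world-model mechanism for the variables inside the blanket."""
    return {"electricity": 3.0 * action * water, "helium": 0.5 * action * water}


def goal_program(water: float) -> Boundary:
    """Hypothetical goal program: takes in water, produces helium and electricity."""
    return {"electricity": 2.4 * water, "helium": 0.4 * water}


def mismatch(real: Boundary, wanted: Boundary) -> float:
    """Squared error, computed over the boundary variables only."""
    return sum((real[name] - wanted[name]) ** 2 for name in wanted)


def best_action(water_samples: Iterable[float],
                candidate_actions: Iterable[float]) -> float:
    """Pick the action whose boundary behaviour best matches the counterfactual."""
    samples = list(water_samples)

    def loss(action: float) -> float:
        return sum(
            mismatch(actual_interior(w, action), goal_program(w)) for w in samples
        )

    return min(candidate_actions, key=loss)


if __name__ == "__main__":
    actions = [i / 10 for i in range(11)]  # assumed actuator settings 0.0 .. 1.0
    print(best_action([1.0, 2.0, 5.0], actions))
```

With these made-up coefficients the search selects the setting 0.8, where the real mechanism reproduces the goal program at the boundary. Note that the loss only looks at the named boundary variables; anything else the action does is invisible to it.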

Answers

There's nothing stopping the AI from developing its own world model (or if there is, it's not intelligent enough to be much more useful than whatever process created your starting world model). This will allow it to model itself in more detail than you were able to put in, and to optimize its own workings as is instrumentally convergent. This will result in an intelligence explosion due to recursive self-improvement.

At this point, it will take its optimization target, and put an inconceivably (to humans) huge amount of optimization into it. It will find a flaw in your set up, and exploit it to the extreme.

In general, I think any alignment approach which has any point in which an unfettered intelligence is optimizing for something that isn't already convergent to human values/CEV is doomed.

Of course, you could add various bounds on it which limit this possibility, but that is in strong tension with its ability to affect the world in significant ways. Maybe you could even get your fusion plant. But how do you use it to steer Earth off its current course and into a future that matters, while still having its own intelligence restrained quite closely?

Is this an alignment approach? How does it solve the problem of getting the AI to do good things and not bad things? Maybe this is splitting hairs, sorry.

It's definitely possible to build AI safely if it's temporally and spatially restricted, if the plans it optimizes are never directly used as they were modeled to be used but are instead run through processing steps that involve human and AI oversight, if it's never used on broad enough problems that oversight becomes challenging, and so on.

But I don't think of this as alignment per se, because there's still tremendous incentive to use AI for things that are temporally and spatially extended, that involve planning based on an accurate model of the world, that react faster than human oversight allows, that are complicated domains that humans struggle to understand.

One fairly obvious failure mode is that it has no checks on the other outputs.

So from my understanding, the AI is optimizing its actions to produce a machine that outputs electricity and helium. Why does it produce a fusion reactor, not a battery and a leaking balloon?

A fusion reactor will in practice leak some amount of radiation into the environment. This could be a small negligible amount, or a large dangerous amount.

If the human knows about radiation and thinks of this, they can put a max radiation leaked into the goal. But this is pushing the work onto the humans.

From my understanding of your proposal, the AI is only thinking about a small part of the world. Say a warehouse that contains some robotic construction equipment, and that you hope will soon contain a fusion reactor, and that doesn't contain any humans.

The AI isn't predicting the consequences of its actions over all space and time.

Thus the AI won't care if humans outside the warehouse die of radiation poisoning, because it's not imagining anything outside the warehouse.

So, you included radiation levels in your goal. Did you include toxic chemicals? Waste heat? Electromagnetic effects from those big electromagnets that could mess with all sorts of electronics? Bioweapons leaking out? I mean, if it's designing a fusion reactor and any bio-nasties are being made, something has gone wrong. What about nanobots? Self-replicating nanotech sure would be useful to construct the fusion reactor. Does the AI care if an odd nanobot slips out and grey-goos the world? What about other AIs? Does your AI care if it makes a "maximize fusion reactors" AI that fills the universe with fusion reactors?
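A minimal sketch of this failure mode, assuming the goal is written as bounds on named variables. The variable names and numbers are hypothetical; the point is only that a check over listed variables says nothing about the unlisted ones.

```python
import math
from typing import Dict, List, Tuple

Bounds = Dict[str, Tuple[float, float]]


def meets_goal(outcome: Dict[str, float], goal: Bounds) -> bool:
    """True if every variable named in the goal is within its bounds.

    Variables absent from the goal are never inspected.
    """
    return all(
        low <= outcome.get(name, 0.0) <= high for name, (low, high) in goal.items()
    )


def unchecked_variables(outcome: Dict[str, float], goal: Bounds) -> List[str]:
    """Outcome variables the goal never mentions."""
    return sorted(name for name in outcome if name not in goal)


# Hypothetical goal: the human remembered radiation, and nothing else.
GOAL: Bounds = {
    "electricity_mw": (100.0, math.inf),
    "helium_kg_per_day": (0.1, math.inf),
    "radiation_sv_per_hour": (0.0, 1e-6),
}

# Hypothetical outcome of the AI's plan.
OUTCOME = {
    "electricity_mw": 120.0,
    "helium_kg_per_day": 0.2,
    "radiation_sv_per_hour": 5e-7,
    "waste_heat_mw": 400.0,
    "escaped_nanobots": 1.0,
}

if __name__ == "__main__":
    print(meets_goal(OUTCOME, GOAL))
    print(unchecked_variables(OUTCOME, GOAL))
```

The plan passes the goal check even though two side effects were never looked at. Each new danger has to be added to `GOAL` by a human who thought of it.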

↑ comment by [deleted] · 2024-02-13T21:19:12.446Z · LW(p) · GW(p)

But this is pushing the work onto the humans.

Is that so bad? The obvious solution to your objections is to lower the scope to subtasks. "Design a fusion reactor that will likely work". "Using the given robots and containers full of parts, construct the auxiliary power subsystem". And so on.

Humans check all the subtasks and so do AI models. To keep the humans paying attention, a "red team" AI model could introduce obviously sabotaged output to the review queue, similar to how airport screeners occasionally see a gun or bomb digitally inserted.
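One way the red-team idea could be sketched, assuming reviewers are modelled as a function that returns `True` when they flag an item. The function names and the string marker used for planted items are hypothetical.

```python
import random
from typing import Callable, List, Optional, Tuple

QueueItem = Tuple[str, bool]  # (item text, was it planted by the red team?)


def build_review_queue(real_items: List[str],
                       planted_items: List[str],
                       rng: random.Random) -> List[QueueItem]:
    """Mix known-sabotaged items into the real work and shuffle."""
    queue = [(item, False) for item in real_items]
    queue += [(item, True) for item in planted_items]
    rng.shuffle(queue)
    return queue


def catch_rate(queue: List[QueueItem],
               reviewer: Callable[[str], bool]) -> Optional[float]:
    """Fraction of planted items the reviewer flagged; None if none were planted."""
    planted = [item for item, is_planted in queue if is_planted]
    if not planted:
        return None
    return sum(1 for item in planted if reviewer(item)) / len(planted)


if __name__ == "__main__":
    rng = random.Random(0)
    queue = build_review_queue(
        ["substation layout v3", "magnet winding plan"],
        ["SABOTAGED: coolant line routed through control room"],
        rng,
    )
    attentive = lambda item: item.startswith("SABOTAGED")
    print(catch_rate(queue, attentive))
```

A reviewer whose catch rate on planted items drops is probably not paying attention to the real items either, which is the signal the airport-screener analogy relies on. It only measures attention to sabotage someone thought to plant.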

And you obviously need to be careful with things like "nanobots", probably to the point that you don't build any that can replicate on their own and inspect any design changes to these.

You're replacing thousands of lifetimes of work with a few lifetimes of total labor for something like fusion R&D.

Replies from: donald-hobson, tailcalled

↑ comment by Donald Hobson (donald-hobson) · 2024-02-13T21:48:15.500Z · LW(p) · GW(p)

In the limit of pushing all the work onto humans, you just have humans building a fusion reactor.

Which is a sensible plan, but is not AI.

If you have a particular list in mind for what you consider dangerous, I suspect your "red teaming" approach might catch it.

Like I think that, in this causal graph setup, it's not too hard to stop excess radiation leaking out, if you realize that radiation is a danger and work to stop it.

This doesn't give you a defence against the threats you didn't imagine and the threats you can't imagine.

Replies from: None

↑ comment by [deleted] · 2024-02-13T22:39:52.581Z · LW(p) · GW(p)

limit of pushing all the work onto humans, you just have humans building a fusion reactor.

I assume you break the tasks into short-duration, discrete, self-contained subtasks. Your choice of where to break depends on the graph dependencies between tasks and on whether each task is bounded in scope and time-limited.

No task has "threats you didn't imagine and the threats you can't imagine"; everything is something humans could do themselves, it's just a lot of work. There are about 10 viable designs for a fusion reactor that "just" need thousands of person-years of engineering to nail down the details and a few tens of billions to make a scale prototype. Humans can do this; it "just" has proven difficult to get the resources.

Like I said, it reduces the labor requirements by at least 1000x. Is a 1000x reduction in labor just not worth doing?

Presumably, once humans have AI systems that they carefully review and that have reduced labor 1000x across almost all industries, they will then research the next steps with those resources.

Replies from: donald-hobson

↑ comment by Donald Hobson (donald-hobson) · 2024-02-13T23:01:44.095Z · LW(p) · GW(p)

Suppose you give the AI a short duration discrete task. Pick up this box and move it over there. The AI chooses to detonate a nearby explosive, sending everything in the lab flying wildly all over the place. And indeed, the remains of the box are mostly over there.

Ok. Maybe you give it another task. Unscrew a stuck bolt. The robot gets a big crowbar and levers the bolt. The thing it's pushing against for leverage is a vacuum chamber. It's slightly deformed from the force, causing it to leak.

Or maybe it sprays some chemical on the bolt, which dissolves it. And in a later step, something else reacts with the residue, creating a toxic gas.

I think you need to micromanage the AI. To specify every possible thing in a lot of detail. I don't think you get a 10x labor saving. I am unconvinced you get any labor saving at all.

After all, to do the task yourself, you just need to find 1 sane plan. But to stop the AI from screwing up, you need to rule out every possible insane plan. Or at least repeatedly read the AI's plan, spot that it's insane, and tell it not to use explosives to mix paint.

Replies from: None

↑ comment by [deleted] · 2024-02-14T00:05:43.957Z · LW(p) · GW(p)

https://robotics-transformer-x.github.io/

I don't think you get a 10x labor saving. I am unconvinced you get any labor saving at all.

Ok to drill down: the AI is a large transformer architecture control model. It was initially trained by converting human and robotic actions to a common token representation that is perspective independent and robotic actuator independent. (Example: "soft top grab, bottom insertion to Target" might be a string expansion of the tokens.)

You then train via reinforcement learning on a simulation of the task environments for task effectiveness.

What this does is train the machine's policy to be similar to "what would a human do", at least for input cases that are similar to any of the inputs. (As usual, you need all the video in the world to do this.) The RL "fine tuning" modifies the policy just enough to usually succeed on tasks instead of, say, grabbing too hard and crushing or dropping the object every time. So the new policy is a local minimum in policy space adjacent to the one learned from humans.

This empirically is a working method that is SOTA.

In order for the machine to "detonate an explosive" either the action a human would have taken from the training dataset involves demolitions (and there are explosives and initiators in reach of the robotic arms which are generally rail mounted) or the simulation environment during the RL stages rewarded such actions.

It saves 10-1000 times the labor in task domains where the training examples and the simulation environment span the task space of the given assignment. I meant "10-1000 times the labor" in the worldwide labor market sense, for about half of all jobs. Plenty of rare jobs few humans do will not be automated.

For example if the machine has seen, and practiced, oiling and inserting 100 kinds of bolt, a new bolt that is somewhere in properties in between the extreme ends the machine has capabilities on will likely work zero shot.

Or in practical spaces, I was thinking mining, logistics, solar panel deployment, manufacturing are cases where there are billions of total jobs and spanning task spaces that cover almost all tasks.

For supervision you have a simple metric: you query a lockstep sim each frame for the confidence and probability distribution of outcomes expected on the next frame. As errors accumulate (say a bolt is dissolving, which the sim doesn't predict) this eventually reaches a threshold to summon a human remote supervisor. There are other metrics, but this isn't a difficult technical problem.
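A minimal sketch of that metric, assuming the sim returns a discrete probability distribution over named outcomes for the next frame. The surprise measure (negative log probability), the leaky accumulator, and all names are assumptions chosen for illustration, not a description of any deployed system.

```python
import math
from typing import Dict, Iterable, Optional, Tuple

# (distribution the sim predicted for this frame, outcome actually observed)
Frame = Tuple[Dict[str, float], str]


def first_escalation(frames: Iterable[Frame],
                     threshold: float,
                     decay: float = 0.9) -> Optional[int]:
    """Return the index of the first frame at which a human should be summoned.

    Surprise is -log p(observed); it accumulates with exponential decay so that
    isolated small errors fade while persistent or large ones add up.
    """
    accumulated = 0.0
    for index, (predicted, observed) in enumerate(frames):
        probability = max(predicted.get(observed, 0.0), 1e-9)  # avoid log(0)
        accumulated = decay * accumulated - math.log(probability)
        if accumulated >= threshold:
            return index
    return None


if __name__ == "__main__":
    expected = {"bolt_intact": 0.99, "bolt_dissolving": 0.01}
    frames = [(expected, "bolt_intact")] * 5 + [(expected, "bolt_dissolving")]
    print(first_escalation(frames, threshold=3.0))
```

In this example the five expected frames contribute almost no surprise, and the single low-probability observation pushes the accumulator over the threshold at frame index 5. The metric can only react to outcomes the sim represents at all.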

You also obviously must at first take precautions: operate in human-free environments separated by Lexan shields, and, well, it's industry. A few casualties are normal and humanity can frankly take a few workers killed if the task domain was riskier with humans doing it.

I would expect you would first have proven your robotics platform and stack with hundreds of millions of robots on easier tasks before you can deploy to domains with high vacuum chamber labs. That kind of technical work is very difficult and very few people do it. Human grad students also make the kind of errors you mention; over-torque is a common issue.

Replies from: donald-hobson

↑ comment by Donald Hobson (donald-hobson) · 2024-02-14T01:00:12.145Z · LW(p) · GW(p)

Ok to drill down: the AI is a large transformer architecture control model. It was initially trained by converting human and robotic actions to a common token representation that is perspective independent and robotic actuator independent. (Example: "soft top grab, bottom insertion to Target" might be a string expansion of the tokens.)

That is rather different from the architecture I thought you were talking about. But ok. I can roll with that.

You then train via reinforcement learning on a simulation of the task environments for task effectiveness.

You are assuming as given a simulation. Where did this simulation come from? What happens when the simulation gets out of sync with reality?

But Ok. I will grant that you have somehow built a flawless simulation. Let's say you found a hypercomputer and coded quantum mechanics into it.

So now we have the question: how do the tokens match up with the simulation? Those tokens are "actuator independent". (A silly concept; sometimes the approach will depend A LOT on exactly what kind of actuators you are using. Some actuators must set up a complex system of levers and winches, while a stronger actuator can just pick up the heavy object. Some actuators can pick up hot stuff, others must use tongs. Some can fit in cramped spaces. Others must remove other components in order to reach.)

We need raw motor commands, both in reality and in the quantum simulation. So let's also grant you a magic oracle that takes in your common tokens and turns them into raw motor commands. So when you say "pick up this component, and put it here", it's the oracle that determines if the sensitive component is slammed down at high speed, or if something else is disturbed as you reach over. Let's assume it makes good decisions here somehow.

or the simulation environment during the RL stages rewarded such actions.

Yes. That. Now the problems you get when doing end-to-end RL are different from when doing RL over each task separately. If you get a human to break something down into many small easy tasks, then you get local Goodharting. Like using explosives to move things because the task was to move object A to position B, not to move it without damaging it.

If you do RL training over the whole thing, i.e. reinforce on fusion happening in the fusion reactor example, then you get a plan that actually causes fusion to happen. This doesn't involve randomly blowing stuff up to move things. This long-range optimization has fewer random industrial accident stupidities, and more deep AI problems.

For example if the machine has seen, and practiced, oiling and inserting 100 kinds of bolt, a new bolt that is somewhere in properties in between the extreme ends the machine has capabilities on will likely work zero shot.

Imagine you had a machine that could instantly oil and insert any kind of bolt. Now make a fusion reactor with 1000x less labour. Oh wait, the list of things that people designing fusion reactors spend >0.1% of their time on is pretty long and complicated.

What's more, we can use the economics test. Oiling and inserting bolts isn't something that takes a PhD in nuclear physics. Yet a lot of the people designing fusion reactors do have a PhD in nuclear physics.

For supervision you have a simple metric: you query a lockstep sim each frame for the confidence and probability distribution of outcomes expected on the next frame.

I will grant you that you somehow manage to keep the simulation in lockstep with reality.

Then the difficult bit is keeping the sim in lockstep with what you actually want. Say the fastest maintenance procedure that the AI finds involves breaking open the vacuum chamber. It happens that this will act as a vacuum cannon, firing a small nut at bullet-like speeds out the window. To the AI that is only being reinforced on [does reactor work] and [does it leak radiation], firing nuts at high speed out the window is the most efficient action. The simulated nut flies out the simulated window in exactly the same way the real one does.

A human just reading the list of actions would see "open vacuum valve 6" and not be easily able to deduce that a nut would fly out the window.

You also obviously must at first take precautions: operate in human-free environments separated by Lexan shields, and, well, it's industry. A few casualties are normal and humanity can frankly take a few workers killed if the task domain was riskier with humans doing it.

Ok. So setting all that up is going to take way more than 0.1% of the worker time. Someone has to build all those shields and put them in place.

Real human workers can and do order custom components from various other manufacturers. This doesn't fit well with your simulation, or with your safety protocol.

But if you are only interested in the "big" harms, how about if the AI decides that the easiest way to make a fusion reactor is to first make self-replicating nanotech? Some of this gets out and grey-goos Earth.

Or the AI decides to get some computer chips, and code a second AI. The second AI breaks out and does whatever.

Or what was the goal for that fusion bot again? Make the fusion work. Don't release radioactive stuff off premises. Couldn't it detonate a pure fusion bomb? No radioactive stuff leaving, only very hot helium.

Human grad students also make the kind of errors you mention; over-torque is a common issue.

Recognizing and fixing mistakes is fairly common work in high tech industries. It's not clear how the AI does this. But those are mistakes. What I was talking about was if the AI knew full well it was doing damage, but didn't care.

I would expect you would first have proven your robotics platform and stack with hundreds of millions of robots on easier tasks before you can deploy to domains with high vacuum chamber labs.

You were the one who used a fusion reactor as an example.

So you're saying the robots can only build a fusion reactor after they have started by building millions of easier things as training?

Would this AI you are thinking of be given a task like "build a fusion reactor" and be left to decide for itself whether a stellarator or laser confinement system was better?

Replies from: None

↑ comment by [deleted] · 2024-02-14T01:37:20.728Z · LW(p) · GW(p)

I added the below. I believe most of your objections are simply wrong because this method actually works at least to today's capability levels. (Small child...)

What this does is train the machine's policy to be similar to "what would a human do", at least for input cases that are similar to any of the inputs. (As usual, you need all the video in the world to do this.) The RL "fine tuning" modifies the policy just enough to usually succeed on tasks instead of, say, grabbing too hard and crushing or dropping the object every time. So the new policy is a local minimum in policy space adjacent to the one learned from humans.

This empirically is a working method that is SOTA.

Responses:

You are assuming as given a simulation. Where did this simulation come from? What happens when the simulation gets out of sync with reality?

A neural or hybrid sim. It came from predicting future frames from real robotics data. It cannot desync because the starting state is always the present frame.

you're saying the robots can only build a fusion reactor after they have started by building millions of easier things as training?

Yes

Would this AI you are thinking of be given a task like "build a fusion reactor" and be left to decide for itself whether a stellarator or laser confinement system was better?

No. I was thinking of easier tasks. "Ok I want a stellarator. Design the power substation for the main power. The building needs to be these dimensions (give a reference to another file), nothing special, design it like a warehouse. Ok I updated the doc VCS, redesign the power substation and the building per the new specs."

Engineering is a ton of perspiration and repetitive work where one variable determines another. Procedural engineering means changes in one place propagate to the rest. It's commonly used in specialized domains; an AI would generalize it.

"Ok I have a design, order another AI to build the thousands of kilometers of superconducting wire I need. Here are the magnet designs, wind them. Here are the casing designs, machine them. Get all the parts to the assembly area.

Oh I dropped something, get another, reordering it or having it made if necessary."

Or the real saver: "ok I finished the prototype stellarator, you saw every step. Build another, ask for help when needed".

Note that the agent being "talked to" just redirects calls to an isolated system to do them. The main AI doesn't have ordering capabilities itself; those belong to a tested and specialized system that has human-written components and automatic review (since financial transactions have obvious issues).

GPT-4 plugin support works like the last paragraph.

Replies from: donald-hobson

↑ comment by Donald Hobson (donald-hobson) · 2024-02-14T17:17:41.711Z · LW(p) · GW(p)

I added the below. I believe most of your objections are simply wrong because this method

If you are mostly learning from imitating humans, and only using a small amount of RL to adjust the policy, that is yet another thing.

I thought you were talking about a design built mainly around RL.

If it's imitating humans, you get a fair bit of safety, but it will be about as smart as humans. It's not trying to win, it's trying to do what we would do.

A neural or hybrid sim. It came from predicting future frames from real robotics data.

Ok. So you take a big neural network, and train it to predict the next camera frame. No Geiger counter in the training data? None in the prediction. Your neural sim may well be keeping track of the radiation levels internally, but it's not saying what they are. If the AI's plan starts by placing buckets over all the cameras, you have no idea how good the rest of the plan is. You are staring at a predicted inside of a bucket.

nothing special, design it like a warehouse.

Except there is something special. There always is. Maybe this substation really better not produce any EMP effects, because sensitive electronics are next door. So the whole building needs a Faraday cage built into the walls. Maybe the location it's being built at is known for its heavy snow, so you better give it a steep sloping roof. Oh and you need to leave space here for the cryocooler pipes. Oh and you can't bring big trucks in round this side, because the fuel refinement facility is already there. Oh and the company we bought cement from last time has gone bust. Find a new company to buy cement from, and make sure it's good quality. Oh and there might be a population of bats living nearby. Don't use any tools that produce lots of ultrasound.

It cannot desync because the starting state is always the present frame.

Let's say someone spills coffee in a laptop. It breaks. Now to fix it, some parts need to be replaced. But which parts? That depends on exactly where the coffee dribbled inside it. Not something that can be predicted. You must handle the uncertainty. Test parts to see if they work. Look for damage marks.

I think this system as you are describing it now is something that might kind of work. I mean, the first 10 times it will totally screw up. But we are talking about a semismart but not that smart AI trained on a huge number of engineering examples. With time it could become mostly pretty competent, with humans patching it every time it screws up.

One problem is that you seem to be working on a "specifications" model. Where people first write flawless specifications, and then build things to those specs. In practice there is a fair bit of adjusting. The specs for the parts, as written beforehand, aren't flawless, at best they are roughly correct. The people actually building the thing are talking to each other, trying things out IRL and adjusting the systems so they actually work together.

"ok I finished the prototype stellarator, you saw every step. Build another, ask for help when needed"

And the AI does exactly the same thing again. Including manufacturing the components that turned out not to be needed, and stuffing them in a cupboard in the corner. Including using the cables that are 2x as thick as needed because the right grade of cable wasn't available the first time.

"Ok I want a stellarator.". You were talking about 1000x labor savings. And deciding which of the many and various fusion designs to work on is more than 0.1% of the task by itself. I mean you can just pick out of a hat, but that's making things needlessly hard for yourself.

Replies from: None, None

↑ comment by [deleted] · 2024-02-14T18:32:57.054Z · LW(p) · GW(p)

I think you are assuming the above will happen. (the line in blue).

I am assuming the red line, and obviously by building on what we have incrementally.

If you were somehow a significant way up the blue line and trying to get robots to do anything useful, yes, you might get Goodhart-optimized actions that achieve the instructed result, maybe (if the ASI hasn't chosen to betray this time, since it can do so), but without satisfying all the thousands of constraints you implied but didn't specify.

Replies from: donald-hobson

↑ comment by Donald Hobson (donald-hobson) · 2024-02-17T15:46:56.239Z · LW(p) · GW(p)

In more "slow takeoff" scenarios, your approach can probably be used to build something that is fairly useful at moderate intelligence. So for a few years in the middle of the red curve, you can get your factories built for cheap. Then it hits the really steep part, and it all fails.

I think the "slow" and "fast" models only disagree in how much time we spend in the orange zone before we reach the red zone. Is it enough time to actually build the robots?

I assign fairly significant probabilities to both "slow" and "fast" models.

Replies from: None

↑ comment by [deleted] · 2024-02-18T02:49:28.760Z · LW(p) · GW(p)

I think the "slow" and "fast" models only disagree in how much time we spend in the orange zone before we reach the red zone. Is it enough time to actually build the robots?

I assign fairly significant probabilities to both "slow" and "fast" models.

Well how do these variables interact?

The g factor (intelligence) of the ASI depends on the algorithm of the ASI times the log of the compute assigned to it, C.

The justification for it not being linear is that non-search NN lookups already capture most of the possible gain on a task. You can do more samples with more compute, increasing the probability of a higher score. I'm having trouble finding the recent paper where it turns out that if you sample GPT-4 repeatedly you do get a higher score, scaling with the log of the number of samples.

Then at any given moment in time, the rate of improvement of the ASI's algorithm is going to scale with current intelligence times a limit based on S.
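Read literally, the two relationships described in words above could be written as follows. This is only a reconstruction from the wording: the symbol $A$ for the quality of the algorithm, the proportionality constant $k$, and the limiting function $L(S)$ are assumed names, and the base of the logarithm is not specified.

```latex
g(t) = A(t)\,\log C(t),
\qquad
\frac{dA}{dt} = k\, g(t)\, L(S)
```

Under this reading, $L(S)$ falls to zero once every situation in the suite $S$ is solved, so the improvement rate $dA/dt$ falls to zero with it.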

What is S? I sensed you were highly skeptical of my "neural sim" variable until 2 days ago. It's Sora, plus the ability to get collidable geometry, not just images, as output. At the time, the only evidence I had of a neural sim was Nvidia papers. Note the current model is likely capable of this:

https://x.com/BenMildenhall/status/1758224827788468722?s=20

S is the suite of neural sim situations. Once the ASI solves all situations in the suite, self improvement goes to 0. (there is no error derivative to train on)

This also comes to mind naturally. Have you, @Donald Hobson [LW · GW], ever played a simulation game that models a process that has a real world equivalent? You might notice you get better and better at the game until you start using solutions that are not possible in the game, but just exploit glitches in the game engine. If an ASI is doing this, its improvement becomes negative once it hits the edges of the sim and starts training on false information. This is why you need neural sims, as they can continue to learn and add complexity to the sim suite (and they need to output a 'confidence' metric so you lower the learning rate when the sim is less confident the real world estimation is correct). How do you do this?

Well, at time 0, humans make a big test suite with everything they know how to test for ("take all these exams in every college major, play all these video games and beat them"). Technically it's not a fixed process but an iterative one, but the benefit of that suite is limited. The system has already read all the books, watched all the videos, and taken every test humans can supply; at a certain point new training examples are redundant. (And less-than-perfect scores on things like college exams are because the answer key has errors, so there's negative improvement if the AI memorizes the wrong answers.)

The second part is the number of robots providing correct data. (because the real world isn't biased like a game or exam, if a specific robot fails an achievable task it's because the robot policy needs improvement)

Note the log here: this comes from intuition. In words, the justification is that immediately when a robot does a novel task, there will be lots of mistakes and rapid learning. But then the mistakes take increasingly long times and more task iterations to find; it's a logistic growth curve approaching an asymptote for perfect policy. This is also true for things like product improvement. Gen1->Gen2 you get the most improvement, and then over time you have to wait increasingly longer to get feedback from the remaining faults, especially if your goal is to increase reliability. (Example task: medical treatments for humans. You'll figure out the things that kill humans immediately very rapidly, while for the things that doom them to die in 10 years you have to wait 10 years. More specific example: cancer treatments.)

How fast do you get more robots?

where,

- R(t) represents the number of robots at time t.

- R0 is the initial number of robots at time = 0

- tau is the time constant in years
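The definitions above are consistent with plain exponential growth. That functional form is an assumption here, since only the variable definitions are given:

```latex
R(t) = R_0\, e^{t/\tau},
\qquad
t_{\text{double}} = \tau \ln 2
```

Under that assumption, a time constant of 2-3 years corresponds to a doubling time of roughly 1.4-2.1 years.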

@Daniel Kokotajlo [LW · GW] and I estimated this time constant at 2-3 years. This is a rough guess, but note there are strong real world factors that make it difficult to justify a lower number.

First, what is a robot?

+

+

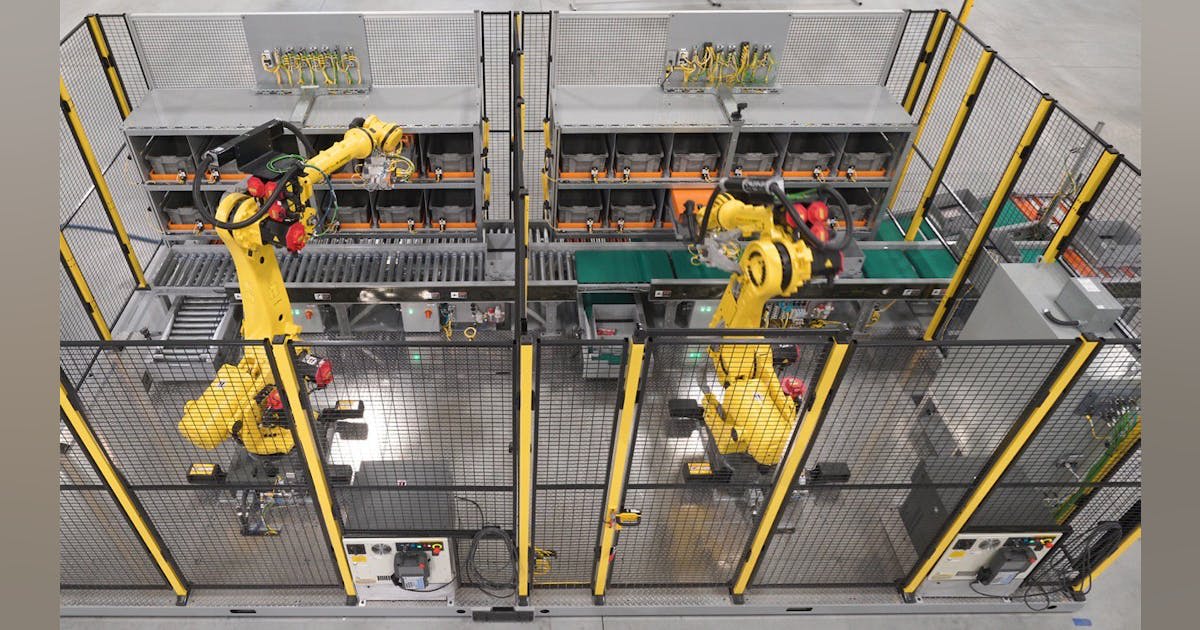

and it lives in a setup like this, just now with AI driven arms instead of the older limited control logic the bots pictured use.

1.0 robot is: (robotic arms) (with arm placement similar to humans so you can learn how to do tasks the human way initially) + sensors + compute racks + support infrastructure (cages, power, conveyors, mounts for the sensors).

Robot growth means you double the entire industrial chain. Obviously, in a factory somewhere, robots are making robots. That would take like, a few hours right?

But then you also copy the CNC machines to make the robot's parts:

And the steel forges, chip fabs, mines, power sources, buildings, aluminum smelter...everything in the entire industrial chain you must duplicate or the logistic growth bottlenecks on the weak link.

At the very start, you can kick-start the process by getting together 7 trillion or so, and employing a substantial fraction of the working population of the planet to serve as your first generation of 'robots'. You also can have the ASI optimize existing equipment and supply chains to get more productivity out of it.

In the later future, you can probably get nanotechnology:

Note this helps but it isn't a magic pass to overnight exponential growth. Nanotechnology still requires power, cooling, raw materials processing, it needs a warehouse and a series of high vacuum chambers for the equipment to run in. It does simplify things a lot and has other advantages.

Another key note : probably to build the above, which is all the complexity of earth's industry crammed into a small box, you will need some initial number of robots, on the order of hundreds of millions to billions, to support the bootstrapping process to develop and build the first nanoforge.

Finally, obviously,

Where you have your compute at time 0 (when you got to ASI) and it scales over time with respect to the number of robots you have.

Wait, don't humans build compute? Nope. They haven't for years.

Everything is automated. Humans are in there for maintenance and recipe improvement.

Another thing that's special about compute is the "robots" aren't general purpose. You need specific bottlenecked equipment that is likely the hardest task for robots to learn to build and maintain. (I suspect harder than learning to do surgery with a high patient survival rate, because bodies can heal from small mistakes, while this equipment will fail on most tiny mistakes.)

Discussion: We can model the above equation and get a timeline for the orange area, for different assumptions for the variables. Perhaps you and I could have a dialogue where we develop the model. I would like feedback on any shaky assumptions made.

Replies from: donald-hobson, donald-hobson↑ comment by Donald Hobson (donald-hobson) · 2024-02-18T15:40:21.473Z · LW(p) · GW(p)

Response to the rest of your post.

By the way, these comment boxes have built in maths support.

Press Ctrl M for full line or Ctrl 4 for inline

You might notice you get better and better at the game until you start using solutions that are not possible in the game, but just exploit glitches in the game engine. If an ASI is doing this, its improvement becomes negative once it hits the edges of the sim and starts training on false information. This is why you need neural sims, as they can continue to learn and add complexity to the sim suite.

Neural sims probably have glitches too. Adversarial examples exist.

Note the log here : this comes from intuition. In words, the justification is that immediately when a robot does a novel task, there will be lots of mistakes and rapid learning. But then the mistakes take increasingly larger lengths of time and task iterations to find them, it's a logistic growth curve approaching an asymptote for perfect policy.

This sounds iffy. Like you are eyeballing and curve fitting, when this should be something that falls out of a broader world model.

Every now and then, you get a new tool. Like suppose your medical bot has 2 kinds of mistakes, ones that instantly kill, and ones that mutate DNA. It quickly learns not to do the first one. And slowly learns not to do the second when its patients die of cancer years later. Except one day it gets a gene sequencer. Now it can detect all those mutations quickly.

I find it interesting that most of this post is talking about the hardware.

Isn't this supposed to be about AI? Are you expecting a regime where

- Most of the world's compute is going into AI.

- Chip production increases by A LOT (at least 10x) within this regime.

- Most of the AI progress in this regime is about throwing more compute at it.

...everything in the entire industrial chain you must duplicate or the logistic growth bottlenecks on the weak link.

Everything is automated. Humans are in there for maintenance and recipe improvement.

Ok. And there is our weak link. All our robots are going to be sitting around broken. Because the bottleneck is human repair people.

It is possible to automate things. But what you seem to be describing here is the process of economic growth in general.

Each specific step in each specific process is something that needs automating.

You can't just tell the robot "automate the production of rubber gloves". You need humans to do a lot of work designing a robot that picks out the gloves and puts them on the hand shaped metal molds so the rubber can cure.

Yes economic growth exists. It's not that fast. It really isn't clear how AI fits into your discussion of robots.

Replies from: None↑ comment by [deleted] · 2024-02-18T17:41:24.956Z · LW(p) · GW(p)

Neural sims probably have glitches too. Adversarial examples exist.

Yes. That's why I specifically mentioned :

and they need to output a 'confidence' metric so you lower the learning rate when the sim is less confident the real world estimation is correct

Confidence is a trainable parameter, and you scale down learning rate when confidence is low.
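As a rough sketch of what "scale down learning rate when confidence is low" could look like (the function names and the linear scaling rule are assumptions for illustration, not a description of any particular system):

```python
def scaled_learning_rate(base_lr: float, confidence: float) -> float:
    """Scale the base learning rate by the sim's confidence, clamped to [0, 1]."""
    clamped = min(1.0, max(0.0, confidence))
    return base_lr * clamped


def sgd_step(weights: list[float], grads: list[float],
             base_lr: float, confidence: float) -> list[float]:
    """One gradient step whose size shrinks when the sim is unsure of itself."""
    lr = scaled_learning_rate(base_lr, confidence)
    return [w - lr * g for w, g in zip(weights, grads)]


# A confident sim frame moves the weights; a zero-confidence frame does not.
print(sgd_step([1.0, 2.0], [0.5, 0.5], base_lr=0.1, confidence=1.0))
print(sgd_step([1.0, 2.0], [0.5, 0.5], base_lr=0.1, confidence=0.0))
```

Note that this only helps if the confidence estimate itself is reliable, which is the point under dispute in this thread.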

Ok. And there is our weak link. All our robots are going to be sitting around broken. Because the bottleneck is human repair people.

This is a lengthy discussion but the simple answer is that what a human 'repair person' does can be described as a simple algorithm that you can write in ordinary software. I've repaired a few modern things, this is from direct knowledge and watching videos of someone repairing a Tesla.

The algorithm in essence is that every module is self diagnosing, and there is a graph flow of relationships between modules. There are simple experiments you do to get better evidence; the manual tells you many of them.

Then you disassemble the machine partially - if you were a robot and had the right licenses you could download the assembly plan for this machine and reverse it - remove the suspect module, and replace. If the issues don't resolve, you remove the module that said the suspect module was bad or was related to it.

For PCs, this is really easy. Glitches on your screen from your GPU? Replace the cable. Observe if the glitches go away. Try a different monitor. Still broken? Put in a different GPU. That doesn't resolve it? Go and memtest86 the RAM. Does that pass? It's either the motherboard or the processors.
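The swap-and-retest loop can be sketched in a few lines. This is a toy version: the `fault_clears_after_swapping` callback stands in for the physical work of replacing a part and re-running the check, and its name is made up for this example.

```python
from typing import Callable, Optional, Sequence


def swap_diagnose(
    suspects: Sequence[str],
    fault_clears_after_swapping: Callable[[str], bool],
) -> Optional[str]:
    """Swap suspects in order (cheapest or most likely first).

    Returns the first module whose replacement clears the fault,
    or None if every listed suspect was swapped without success.
    """
    for module in suspects:
        if fault_clears_after_swapping(module):
            return module
    return None


# Toy scenario: screen glitches that are actually caused by the GPU.
suspects = ["cable", "monitor", "gpu", "ram"]
actual_fault = "gpu"
found = swap_diagnose(suspects, lambda module: module == actual_fault)
print(found)
```

A `None` result corresponds to the last step in the PC example: everything listed passed, so the fault is in whatever is left (motherboard or processors).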

This comes from simply understanding how the components interconnect, and obviously current AI can do this easily better than humans.

The hard part is the robotics.

The 'simple' parts like "connect a multimeter to <point 1>, <point 2>", "sand off the corrosion, wipe off the grease", "does the oil have metal shavings in it", "remove that difficult to reach screw" are what has been a bottleneck for 60 years.

You can't just tell the robot "automate the production of rubber gloves". You need humans to do a lot of work designing a robot that picks out the gloves and puts them on the hand shaped metal molds so the rubber can cure.

Yes economic growth exists. It's not that fast. It really isn't clear how AI fits into your discussion of robots.

Because it's what humans want AI for, and due to the relationships between the variables, it is possible we will not ever get uncontrollable superintelligence before first building a lot of robots, ICs, collecting revenue, and so on.

Isn't this supposed to be about AI? Are you expecting a regime where

- Most of the world's compute is going into AI.

- Chip production increases by A LOT (at least 10x) within this regime.

- Most of the AI progress in this regime is about throwing more compute at it.

Yes, I think AI and robotics and compute construction are all interrelated. That log(Compute) means that even 10x is probably nowhere near enough for strong ASI.

I also personally think it is an...interesting...world model to imagine this ASI that can design a bridge or DNA editor, people are stupid enough to trust it, yet it cannot replace a rusty bolt on the underside of that same bridge or manipulate basic glassware in a lab.

Replies from: donald-hobson↑ comment by Donald Hobson (donald-hobson) · 2024-02-18T18:49:15.451Z · LW(p) · GW(p)

lower the learning rate when the sim is less confident the real world estimation is correct

Adversarial examples can make an image classifier be confidently wrong.

Because it's what humans want AI for, and due to the relationships between the variables, it is possible we will not ever get uncontrollable superintelligence before first building a lot of robots, ICs, collecting revenue, and so on.

You are talking about robots, and a fairly specific narrow "take the screws out" AI.

Quite a few humans seem to want AI for generating anime waifus. And that is also a fairly narrow kind of AI.

Your "log(compute)" term came from a comparison which was just taking more samples. This doesn't sound like an efficient way to use more compute.

Someone, using a pretty crude algorithmic approach, managed to get a little more performance for a lot more compute.

↑ comment by Donald Hobson (donald-hobson) · 2024-02-18T14:57:24.441Z · LW(p) · GW(p)

First of all. SORA.

I sensed you were highly skeptical of my "neural sim" variable until 2 days ago.

No. Not really. I wasn't claiming that things like SORA couldn't exist. I am claiming that it's hard to turn them towards the task of engineering a bridge say.

Current SORA is totally useless for this. You ask it for a bridge, and it gives you some random bridge looking thing, over some body of water. SORA isn't doing the calculations to tell if the bridge would actually hold up. But let's say a future much smarter version of SORA did do the calculations. A human looking at the video wouldn't know what grade of steel SORA was imagining. I mean existing SORA probably isn't thinking of a particular grade of steel, but this smarter version would have picked a grade, and used that as part of its design. But it doesn't tell the human that, the knowledge is hidden in its weights.

Ok, suppose you could get it to show a big pile of detailed architectural plans, and then a bridge. All with super-smart neural modeling that does the calculations. Then you get something that ideally is about as good as looking at the specs of a random real world bridge. Plenty of random real world bridges exist, and I presume bridge builders look at their specs. Still not that useful. Each bridge has different geology, budget, height requirements etc.

Ok, well suppose you could start by putting all that information in somehow, and then sampling from designs that fit the existing geology, roads etc.

Then you get several problems.

The first is that this is sampling plausible specs, not good specs. Maybe it shows a few pictures at the end to show the bridge not immediately collapsing. But not immediately collapsing is a low bar for a bridge. If the Super-SORA chose a type of paint that was highly toxic to local fish, it wouldn't tell you. If the bridge had a 10% chance of collapsing, it's randomly sampling a plausible timeline. So 90% of the time, it shows you the bridge not collapsing. If it only generates 10 minutes of footage, you don't know what might be going on in its sim while you weren't watching. If it generates 100 years of footage from every possible angle, it's likely to record predictions of any problems, but good luck finding the needle in the haystack. Like imagine this AI has just given you 100 years of footage. How do you skim through it without missing stuff?
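To put numbers on the sampling point, here is a small sketch. It assumes each generated timeline is an independent draw with the same collapse probability, which is an idealization; the function names are made up for this example.

```python
import math


def prob_no_collapse_seen(p_collapse: float, n_samples: int) -> float:
    """Chance that none of n independent sampled timelines shows a collapse."""
    return (1.0 - p_collapse) ** n_samples


def samples_needed(p_collapse: float, detect_prob: float) -> int:
    """Smallest n such that at least one sample shows a collapse
    with probability >= detect_prob."""
    return math.ceil(math.log(1.0 - detect_prob) / math.log(1.0 - p_collapse))


# One video of a bridge with a 10% collapse risk looks fine 90% of the time.
print(prob_no_collapse_seen(0.10, 1))
# Number of sampled timelines needed to see the collapse at least once
# with 95% probability.
print(samples_needed(0.10, 0.95))
```

Even with many samples, someone still has to watch all of them, which is the needle-in-the-haystack problem described above.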

Another problem is that SORA is sampling in the statistical sense. Suppose you haven't done the geology survey yet. SORA will guess at some plausible rock composition. This could lead to you building half the bridge, and then finding that the real rock composition is different.

You need a system that can tell you "I don't know fact X, go find it out for me".

If the predictions are too good, well the world it's predicting contains Super-SORA. This could lead to all sorts of strange self fulfilling prophecy problems.

Replies from: None↑ comment by [deleted] · 2024-02-18T17:25:41.025Z · LW(p) · GW(p)

No. Not really. I wasn't claiming that things like SORA couldn't exist. I am claiming that it's hard to turn them towards the task of engineering a bridge say.

I would agree with that.

Let me give you a summary of my overall position: "Trust an ASI with a complex task it needs context awareness to complete? Not even once".

Everything is about how to get a large amount of benefits in the orange area, and yes we should stay there for a prolonged period.

What benefits specifically? Vast amounts of material manufactured goods, robots, robots are doing repetitive tasks that can be clearly defined. ASI models in use are only used in limited duration sessions, and you strip away context awareness.

Context awareness is the bits that tell the ASI this is a real bridge, humans are really going to build it, and not just another task in the training sim. "Always bluepill". It should not be possible for the ASI to know when the task is real vs sim. (which you can do by having an image generator convert real frames to a descriptor, and then regenerate them so they have the simulation artifacts...)

The architecture fees for a new bridge design are about 10% of the cost.

Other 90% is, well, all the back breaking labor to build one. Not just at the construction site, but where the concrete was made, building/maintaining the trucks to haul the materials, etc.

Sora's role: For autonomous cars and general robots, this is used for training. General robots means one robot at a time, and for short task sessions. For example : "remove the part from the CNC machine and place it on the output table".

What you do is record video from the human workers doing the task, many hours of it. You train a model using techniques similar to Sora to classify what it sees and to predict, frame by frame, the next frame. This is a 5-30 Hz sampling rate, so as little as 33 ms into the future (at 30 Hz). Then do this recursively.

Since this is an RL problem you go further and may model a few seconds ahead.
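A minimal sketch of "predict the next frame, then do this recursively". The one-step model here is a stand-in callable (a real one would be a learned video model), and all names are hypothetical.

```python
from typing import Callable, List, TypeVar

Frame = TypeVar("Frame")


def rollout(predict_next: Callable[[Frame], Frame],
            first_frame: Frame, steps: int) -> List[Frame]:
    """Feed each predicted frame back into the one-step model, `steps` times."""
    frames = [first_frame]
    for _ in range(steps):
        frames.append(predict_next(frames[-1]))
    return frames


def horizon_seconds(steps: int, sample_rate_hz: float) -> float:
    """How far into the future a rollout of `steps` frames reaches."""
    return steps / sample_rate_hz


# Toy "frame": the position of a part moving 2 units per frame.
print(rollout(lambda position: position + 2, first_frame=0, steps=3))
# 90 recursive steps at 30 Hz covers a few seconds of future.
print(horizon_seconds(90, 30.0))
```

Errors in the one-step model compound over the rollout, which is one reason to keep the modeled horizon to a few seconds.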

There are ways to reproject in a robot instead.

Then the robot tries to do the task in the real world once it has settled on a policy that covers it in the simulated world.

The robot may fail, but you train the model, and you policy iterate with each failure. This is where the log(real world episodes) comes in. It's correct and aligns very well with real world experience.

Note on Sora: Tesla and probably Waymo already use a similar neural sim for training their robotic cars. I found the tweet last night from an insider, and I've seen demo videos before; I can produce evidence. But it is accurate to say it's already in use for the purpose I am talking about.

Bridges: at least in the near future, you want to automate making the parts for repairing a bridge, doing the dangerous work of inspection via drone, converting a bunch of pictures from many places from the drones to an overall model of the bridge compatible with load testing software, and so on. Per <https://artbabridgereport.org/reports/2021-ARTBA-Bridge-Report.pdf>, about 7% of current USA bridges need replacing and about 40% need work done.

Key note : I would not trust an ASI or AGI with designing a new bridge, the goal is to make it cheaper to replace or repair the designs you already have. If AI + robots can contribute between 10% and 95% of the labor to replace a bridge (starting at 10, gradually ramping...), that lets you swap a lot more bridges for the same annual budget.
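A back-of-envelope sketch of the budget claim. It assumes the 90% non-architecture share is all labor, and that the share done by AI + robots costs nothing; both are strong simplifications made only for illustration.

```python
def bridges_per_budget(robot_labor_fraction: float,
                       labor_share_of_cost: float = 0.9) -> float:
    """Bridges replaceable per old single-bridge budget.

    Assumes robot-performed labor is free (an illustrative simplification),
    so the remaining cost is design plus the human-performed labor.
    """
    remaining_cost = 1.0 - labor_share_of_cost * robot_labor_fraction
    return 1.0 / remaining_cost


for fraction in (0.10, 0.50, 0.95):
    print(f"robots do {fraction:.0%} of labor -> "
          f"{bridges_per_budget(fraction):.2f}x bridges")
```

Under these assumptions the gain is small at 10% and grows steeply as the robot share approaches 95%, because the fixed design cost becomes the dominant term.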

Replies from: donald-hobson↑ comment by Donald Hobson (donald-hobson) · 2024-02-18T18:40:08.526Z · LW(p) · GW(p)

If we have the technical capacity to get into the red zone, and enough chips to make getting there easy. Then hanging out in the orange zone, coordinating civilization not to make any AI too powerful, when there are huge incentives to ramp the power up, and no one is quite sure where the serious dangers kick in...

That is, at least, an impressive civilization wide balancing act. And one I don't think we have the competence to pull off.

It should not be possible for the ASI to know when the task is real vs sim. (which you can do by having an image generator convert real frames to a descriptor, and then regenerate them so they have the simulation artifacts...)

This is something you want, not a description of how to get it, and one that is rather tricky to achieve. That converting and then converting back trick is useful. But sure isn't automatic success either. If there are patterns about reality that the ASI understands, but the simulator doesn't, then the ASI can use those patterns.

I.e., if the ASI understands seasons, and the simulator doesn't, then if it's scorching sunshine one day and snow the next, that suggests it's the simulation. Otherwise, that suggests reality.

And if the simulation knows all patterns that the ASI does, the simulator itself is now worryingly intelligent.

robots are doing repetitive tasks that can be clearly defined.

If the task is maximally repetitive, then the robot can just follow the same path over and over.

If it's nearly that repetitive, the robot still doesn't need to be that smart.

I think you are trying to get a very smart AI to be so tied down and caged up that it can do a task without going rogue. But the task is so simple that current dumb robots can often do it.

For example : "remove the part from the CNC machine and place it on the output table".

Economics test again. Minimum wage workers are easily up to a task like that. But most engineering jobs pay more than minimum wage. Which suggests most engineering in practice requires more skill than that.

I mean yes engineers do need to take parts out of the CNC machine. But they also need to be able to fix that CNC machine when a part snaps off inside it and starts getting jammed in the workings. And the latter takes up more time in practice. Or noticing that the toolhead is loose, and tightening and recalibrating it.

The techniques you are describing seem to be next level in fairly dumb automation. The stuff that some places are already doing (like Boston Dynamics robot dog level hardware and software), but expanded to the whole economy. I agree that you can get a moderate amount of economic growth out of that.

I don't see you talking about any tasks that require superhuman intelligence.

↑ comment by [deleted] · 2024-02-14T17:40:02.324Z · LW(p) · GW(p)

You were talking about 1000x labor savings.

Yes and I stand by that assertion. The above will work and does already work in some cases (self driving is very close) to human level. It's eventually 1000x savings in task domains like mining, farming, logistics, materials processing, manufacturing, cleaning.

Not necessarily prototype fusion reactor construction specifically, but possibly over the fusion industry once engineers find a design that works.

I was thinking it would help - something like CERN which is similar to what a fusion reactor will look like has a whole bunch of ordinary stuff in it. Lots of roughly dug tunnels, concrete, handrails, racks of standard computers that you would see in an office, and so on. Large assemblies that need to be trucked in. Each huge instrument assembly is made of simpler parts.

If robots do all that it still saves time. (Probably less than 90 percent of the time)

You are correct that a neural sim probably won't cover repair. You have seen Nvidia has neural sims. I was assuming you first classify from sensor fusion (many cameras, lidar, etc) to a representation of the state space then from that representation query a sim to predict the next frames for that state space.

A hybrid sim would be where you use both a physics engine and a neural network to fine tune the results (such as in series, or by overriding intermediate timestep frames).

Training one is pretty straightforward, you save your predictions from last frame and then compare them to what the real world did the next frame. (It's more complex than that because you predict a distribution of outcomes and need a lot more than 1 frame from the real world to correct your probabilities)

This is also a good way to know when the machine is over its head. For example if it spilt coffee on the laptop, and the machine has no understanding of liquid damage but does need to open a bash shell, the laptop screen will likely be blank or crashed, which won't be what the machine predicted as an outcome after trying to start the laptop.
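A toy sketch of using prediction error as an "over its head" signal. It compares a single predicted frame to a single observed frame with mean squared error; as noted above, a real system would predict a distribution and compare over many frames, so the point estimate and the threshold are simplifying assumptions.

```python
from typing import Sequence


def prediction_error(predicted: Sequence[float],
                     observed: Sequence[float]) -> float:
    """Mean squared error between a predicted frame and the observed frame."""
    if len(predicted) != len(observed):
        raise ValueError("frames must have the same length")
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(predicted)


def over_its_head(predicted: Sequence[float], observed: Sequence[float],
                  threshold: float) -> bool:
    """Flag situations where reality diverges sharply from the prediction."""
    return prediction_error(predicted, observed) > threshold


# The machine predicted a lit laptop screen (pixel values near 1.0)
# but observes a blank one (0.0) after the coffee spill.
predicted_screen = [1.0, 0.9, 1.0, 0.95]
blank_screen = [0.0, 0.0, 0.0, 0.0]
print(over_its_head(predicted_screen, blank_screen, threshold=0.5))
```

When the flag trips, the machine stops and hands off to a specialist or a human rather than continuing with a policy that no longer matches reality.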

Most humans can't fix a laptop either, and a human will just ask a specialist to repair or replace it. So that's one way for the machine to handle it, or it can ask a human.

This is absolutely advanced future AI and gradually as humans fix bugs the robots would begin to exceed human performance. (Partially just from faster hardware). But my perspective is I am saying "ok this is what we have, what's a reasonable way to proceed to something we can safely use in the near future".

It seems you are assuming humans skip right to very dangerous ASI level optimizers before robots can reliably pour coffee. That may not be a reasonable world model.

↑ comment by tailcalled · 2024-02-13T22:49:40.591Z · LW(p) · GW(p)

Factorization is generally very bad. [? · GW]

Replies from: None↑ comment by [deleted] · 2024-02-13T22:55:17.932Z · LW(p) · GW(p)

Factorization is working extremely well though. (Some tasks may factorize poorly but package logistics subdivides well. Any task that can be transformed to look like package logistics is similar. I can think of a way to transform most tasks to look like package logistics, do you have a specific example? Fusion reactor construction is package logistics albeit design is not)

I think the overall goal in this proposal is to get a corrigible agent capable of bounded tasks (that maybe shuts down after task completion), rather than a sovereign?

One remaining problem (ontology identification) is making sure your goal specification stays the same for a world-model that changes/learns.

Then the next remaining problem is the inner alignment problem of making sure that the planning algorithm/optimizer (whatever it is that generates actions given a goal, whether or not it's separable from other components) is actually pointed at the goal you've specified and doesn't have any other goals mixed into it. (see Context Disaster for more detail on some of this, optimization daemons, and actual effectiveness). Part of this problem is making sure the system is stable under reflection.

Then you've got the outer alignment problem of making sure that your fusion power plant goal is safe to optimize (e.g. it won't kill people who get in the way, doesn't have any extreme effects if the world model doesn't exactly match reality, or if you've forgotten some detail). (See Goodness estimate bias, unforeseen maximum).

Ideally here you build in some form of corrigibility and other fail-safe mechanisms, so that you can iterate on the details.

That's all the main ones imo. Conditional on solving the above, and actively trying to foresee other difficult-to-iterate problems, I think it'd be relatively easy to foresee and fix remaining issues.
