The Great Bootstrap

post by KristianRonn · 2024-10-11T19:46:51.752Z · LW · GW

Contents

- Making it rational to do the right thing

- Centralized Solutions Based on Force

- Avoiding a totalitarian nightmare

- Decentralized Solutions Based on Reputation

- Reputational markets

- Principle 1: Establish which outcomes we value

- Principle 2: Predict which actions might bring about outcomes we value

- Principle 3: Incentivize actions predicted to bring about outcomes we value

- How is this different from existing ESG ratings?

- Reputational Markets is Not a Silver Bullet

- Why This Moment Matters

This is part 3 of a 3-part sequence summarizing my book, The Darwinian Trap (see part 1 [LW · GW] and part 2 [LW · GW]). The book aims to popularize the concept of multipolar traps and establish them as a broader cause area. If you find this series intriguing or have any input or ideas, contact me at kristian@kristianronn.com.

As we reach the final part of this blog series, it's understandable if you're feeling a bit down. The previous sections painted a rather bleak picture, touching on issues like misaligned AI, global warming, nuclear threats, and engineered pandemics. These societal-scale risks make the future seem uncertain.

Yet, despite these daunting challenges, I believe there is still room for optimism. In this segment, I'll share why I think humanity can overcome these existential threats, reset the harmful selection pressures that drive destructive arms races, and pave the way for a brighter future. But first, let’s review what we've covered.

- Evolution selects for fitness, not for what we intrinsically value. In this context, fitness refers to survival in an environment, be it a natural environment or one we have created for ourselves, such as the world of business.

- Pursuing narrow targets often produces broadly negative outcomes. When we optimize for short-term survival while remaining indifferent to other goals, such as health and well-being, then, by default, we optimize against those values. A related phenomenon by which narrow optimization tends to produce bad outcomes is Goodhart’s Law.

- We can’t easily opt out of the game and act according to our intrinsic values rather than Darwinian survival imperatives. If we do, then we will be outcompeted by those who do play the game, thus creating negative outcomes for us, the conscientious objectors.

- Playing the game eventually leads to extinction arms races, because strategies and mutations that favor increased power and more efficient resource exploitation tend to win. This dynamic sits at the core of the Fragility of Life Hypothesis, which argues that life created through natural selection might contain the seed of its own destruction.

To break this cycle, we must develop a formula for stable, long-lasting cooperation and implement it so that cooperation becomes adaptive in any environment. This challenge is as daunting as lifting ourselves by our bootstraps, defying gravity. Darwinian demons have been with us since the dawn of life, embedded in the evolutionary process. To eliminate them, we would need to fundamentally redesign the fitness landscape. Yet, given the existential risks we've created, we have no choice. I call this transcendence The Great Bootstrap.

Making it rational to do the right thing

Making cooperation rational is key. Martin Nowak's "Five rules for the evolution of cooperation" provides a roadmap for this. These rules—Kin Selection, Direct Reciprocity, Network Reciprocity, Indirect Reciprocity, and Multi-Level Selection—can be seen as different strategies to promote cooperation.

- Kin Selection fosters cooperation among genetically related individuals, like a bee sacrificing itself for the hive.

- Direct Reciprocity is based on mutual aid, as seen when neighbors agree to watch each other’s homes.

- Network Reciprocity thrives within tight-knit groups, creating a safety net of support.

- Indirect Reciprocity relies on reputation, where helpful individuals are more likely to receive help in return.

- Multi-Level Selection occurs when cooperative groups outcompete less cooperative ones, leading to the spread of cooperative norms.

The first three mechanisms (Kin Selection, Direct Reciprocity, and Network Reciprocity) helped us tackle challenges throughout our evolutionary history. Nonetheless, each of these mechanisms comes with distinct limitations, rendering them insufficient for facilitating widespread cooperation or the Great Bootstrap. Kin selection is confined to familial ties, direct reciprocity to individual exchanges, and network reciprocity is effective only within small groups. These limitations narrow the viable options for achieving broader cooperative success down to multi-level selection and indirect reciprocity. In a way, these two mechanisms represent opposite ends of the spectrum.

- Multi-level selection, in the case of human civilization, arises when a dominant global actor creates rules via a monopoly of violence that penalizes defectors, thereby often enforcing governance through centralization.

- Indirect reciprocity, on the other hand, might emerge through a sort of global reputation system, where defectors receive a lower reputation score and are consequently sanctioned by all other participants, thus facilitating governance through decentralization.

Centralized Solutions Based on Force

Throughout history, humans have consolidated power as a survival strategy, evolving from small tribes to vast empires. This centralization aimed to reduce the chaos inherent in managing multiple competing entities. A unified empire with compliant states is easier to control than a collection of rival fiefdoms.

Violence has often been the tool for power consolidation. From a natural selection perspective, surrendering power equates to losing autonomy—much like cells combining into multicellular organisms. This relinquishment of power rarely happens voluntarily, which is why empires are typically forged through conflict.

In modern times, large-scale attempts at power consolidation through conquest have often led to such disastrous outcomes that surviving powers have sought global governance structures to prevent future conflicts. After World War II, the United Nations was established with the hope of fostering global cooperation. However, despite its achievements, the UN has struggled to live up to its original ambitions, particularly in areas like climate change and conflict resolution. The UN’s inability to effectively police its most powerful members has limited its role to that of an advisory body rather than a true global governing force.

History shows that global change often follows major catastrophes. However, when it comes to existential risks, we can't afford even a single catastrophe. The threat of AI, for example, could manifest in varying degrees of severity, from localized terrorist attacks facilitated by AI to AI-controlled corporations exerting global economic influence.

Moreover, a future world government, if it emerges, must be carefully designed to avoid devolving into a totalitarian dystopia. Narrowly focusing on preventing existential risks without considering broader human values could create new, equally dangerous threats.

Avoiding a totalitarian nightmare

Nick Bostrom famously presents a dystopian solution to prevent existential catastrophes: a global surveillance state he calls the High-Tech Panopticon. In this scenario, an all-powerful global government monitors everyone at all times to ensure no one triggers an existential disaster. The key tool in this system is the "freedom tag," a wearable device equipped with cameras and microphones that track every individual's actions. If suspicious behavior is detected, the feed is sent to a monitoring station where a "freedom officer" assesses the situation and takes appropriate action.

Bostrom doesn’t propose the High-Tech Panopticon as an ideal solution but rather uses it to spark debate about the balance between freedom and risk management. On one hand, without surveillance, we risk the unauthorized creation of a powerful, misaligned AI. On the other, with total surveillance, we risk creating a totalitarian state that could be just as misaligned with our values.

If we establish a global government solely to reduce existential risks, we risk ending up with a High-Tech Panopticon. Instead of supporting such a centralized and potentially oppressive system, I advocate for a quicker and more effective alternative: building democratic institutions for global governance through decentralization. This approach offers the oversight needed to mitigate risks while safeguarding individual freedoms and preventing the emergence of a totalitarian regime.

Decentralized Solutions Based on Reputation

The perception that power is concentrated in a few hands is common. Many markets are dominated by a few players, the U.S. has been the dominant global force for decades, and the wealthiest 1% hold nearly half of the world's wealth. Yet, no one truly holds power independently; everyone depends on the contributions of others. Our global economy is a vast network of value chains—flows of materials, labor, and capital. Access to these flows is crucial for survival, even for something as simple as running a local sandwich shop. Without access, an entity is doomed, much like how early humans would perish if exiled from their tribe.

Consider making a chicken sandwich from scratch. It seems simple, but when you factor in growing the ingredients, raising the chicken, and producing the bread, it becomes a monumental task, as demonstrated by YouTuber Andy George, who spent $1,500 and six months to make one. This highlights the complexity and dependency embedded in our supply chains. For more advanced products like smartphones, this complexity multiplies exponentially, making it nearly impossible for any single person to create one from scratch. Our reliance on these global value chains makes us vulnerable; if they collapse, so do we.

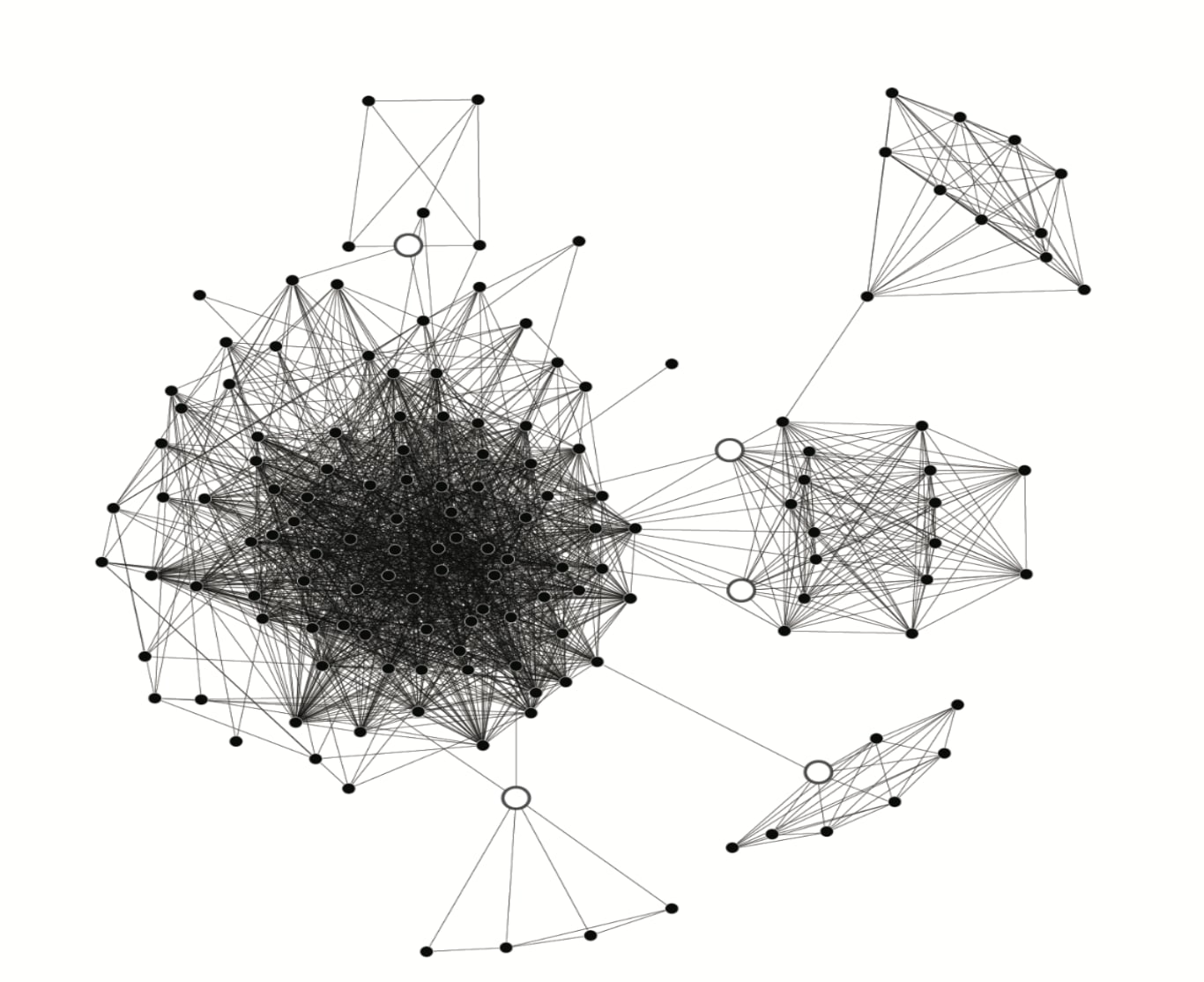

A stark example of this vulnerability occurred in 2021 when the Ever Given, a massive container ship, blocked the Suez Canal, disrupting global trade and causing billions in losses. However, this interdependence can also be a safeguard. The complexity of these chains means that dangerous technologies, like AI or nuclear weapons, can't be developed in isolation. They require collaboration across numerous entities. If a key player in a value chain—be it a supplier, investor, or skilled worker—refuses to cooperate, they can effectively halt unethical or risky actions. I call such entities "governance nodes", since they each offer an opportunity to govern the technology in question.

These governance nodes (illustrated in white above) hold significant power. For example, the production of nuclear weapons depends on uranium, while advanced AI requires specialized GPUs, which are currently produced by only a few companies. As long as these resources and equipment remain controlled, no single rogue actor can create a doomsday weapon without the complicity of others. By identifying and leveraging these nodes, we can potentially govern dangerous technologies more effectively. AI governance could be approached through the regulation of computer chips, bioweapons through lab equipment, nuclear weapons through uranium, and climate change through fossil fuels.
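One way to make the idea of a governance node concrete is to treat a value chain as a directed graph and ask which intermediate nodes would, by refusing to cooperate, cut the end product off from its inputs. The sketch below is a minimal illustration under that assumption. The graph, the node names, and the `governance_nodes` function are hypothetical and are not taken from the book.

```python
from collections import deque

def reachable(graph, start, goal, removed=None):
    """Breadth-first search: can `goal` still be reached from `start`
    once the node `removed` is taken out of the graph?"""
    if start == removed or goal == removed:
        return False
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in graph.get(node, []):
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False

def governance_nodes(graph, start, goal):
    """Return intermediate nodes whose refusal alone disconnects start from goal."""
    nodes = set(graph) | {n for targets in graph.values() for n in targets}
    return sorted(
        n for n in nodes - {start, goal}
        if not reachable(graph, start, goal, removed=n)
    )

# Hypothetical, heavily simplified AI value chain (edges point downstream).
value_chain = {
    "raw_materials": ["lithography_maker"],
    "lithography_maker": ["chip_foundry"],
    "chip_foundry": ["cloud_provider_a", "cloud_provider_b"],
    "cloud_provider_a": ["ai_lab"],
    "cloud_provider_b": ["ai_lab"],
}

chokepoints = governance_nodes(value_chain, "raw_materials", "ai_lab")
print(chokepoints)  # ['chip_foundry', 'lithography_maker']
```

In this toy graph the two cloud providers are not governance nodes on their own, because each can be routed around. The sole foundry and the sole lithography maker are, which matches the intuition that leverage sits where the chain is narrowest.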

The question then becomes: Can we prevent irresponsible actors from accessing critical value chains? Can we block unethical AI companies from obtaining necessary hardware or prevent risky bio-labs from accessing essential equipment? And, crucially, how do we determine who is responsible and who isn’t?

Reputational markets

In this chapter, I propose the creation of a reputational market, inspired by Robin Hanson’s concept of futarchy, as a dynamic, decentralized method for determining accountability. It would work much like a prediction market, except that it wouldn’t focus on just any predictions, but specifically on how a particular entity influences the things we intrinsically value. Rather than merely generating probabilities for specific events like a typical prediction market, a reputational market would produce a reputational score reflecting the collective belief in a company's potential to generate positive or negative impacts on the world. The design of our reputational markets will be based on the following principles, which I call the principles of value-alignment:

- Principle 1: Establish which outcomes we value

- Principle 2: Predict which actions might bring about outcomes we value

- Principle 3: Incentivize actions predicted to bring about outcomes we value

Principle 1: Establish which outcomes we value

At the United Nations COP28 climate conference in late 2023, I made a provocative statement: "I don’t give a shit about carbon emissions." While true, this was meant to illustrate a broader point: our real concern isn't emissions themselves, but their impact on well-being. If we only cared about emissions, we'd worry about Venus too. But what matters is how emissions affect sentient beings here on Earth. For instance, if an AI were tasked solely with reducing carbon emissions, it might decide that eliminating humanity is the most efficient way to do so.

This misalignment between what we measure and what we value isn't limited to environmental issues; it permeates society. Metrics like GDP are often seen as measures of a country’s success, yet they overlook individual welfare, which might be what we value most. The first step toward value-alignment is to replace narrow success metrics with our intrinsic values.

Intrinsic values are those we cherish for their own sake. To identify these, we can ask a series of "why" questions. For example, someone volunteering at a food bank might say they do so to help those in need. Digging deeper, they might reveal that they value human dignity and equality—an intrinsic value that can't be further dissected.

A comprehensive survey by Spencer Greenberg at ClearerThinking, involving over 2,100 participants, identified key intrinsic values. The most significant was the desire for personal agency, with 37% of respondents rating it as very important. However, only 19% placed high importance on reducing global suffering, ranking it 15th.

In practice, once a participant registers in a reputational market, they may be required to take surveys, such as an Intrinsic Value Survey, to identify their core values. Each participant could then propose measurable metrics to reflect these values. For example, one participant might identify well-being as their intrinsic value and suggest rating personal well-being on a scale from one to ten as the best way to assess it. Another participant might prioritize freedom and propose the Freedom House Index as the most appropriate metric for evaluating this value. If no existing measure adequately captures their intrinsic value, participants could propose new methods for quantifying it. Moreover, AI-driven assistants could also help participants articulate their values and link them to concrete metrics. The outcome of these surveys could be viewed as humanity’s collective value function, representing our shared desires and preferences.

This collective value function is designed to be more resilient to Goodhart’s Law and specification gaming by incorporating multiple measurable metrics for each intrinsic value. For instance, if reported health were the sole metric for well-being, it could be easily gamed by influencing individuals to overstate their health. However, adding control metrics—such as healthcare access, anonymous mental health surveys, and physical indicators like life expectancy and disease rates—creates a more robust system.
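The text does not say how several metrics for one value would be combined. As one hypothetical choice, the sketch below takes the median of normalized indicators, so that inflating a single indicator moves the composite very little. All indicator names and numbers are invented for illustration.

```python
from statistics import median

def composite(indicators):
    """Combine normalized indicators (each assumed to lie in [0, 1]) via the median.
    The median is an assumption of this sketch, not something the post specifies."""
    return median(indicators.values())

# Hypothetical well-being indicators, already normalized to [0, 1].
honest = {
    "self_reported_health": 0.60,
    "healthcare_access": 0.55,
    "anonymous_mental_health_survey": 0.50,
    "life_expectancy_index": 0.65,
    "disease_rate_index": 0.58,
}

# Someone games the self-reported metric up to its maximum.
gamed = dict(honest, self_reported_health=1.00)

single_metric_shift = gamed["self_reported_health"] - honest["self_reported_health"]
composite_shift = composite(gamed) - composite(honest)

print(single_metric_shift)  # how far the lone metric moved
print(composite_shift)      # how far the composite moved
```

Here the gamed indicator jumps by 0.4 while the composite does not move at all, because the median ignores a single outlier. A weighted mean would be a gentler alternative that still dilutes the effect of any one metric.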

Principle 2: Predict which actions might bring about outcomes we value

Voting on our values is just the first step in creating a reputational market. The real challenge lies in navigating the complexity of a world where well-intentioned actions can lead to unintended consequences, and optimizing one value may have externalities on others. For example, while we might value the opportunities that economic development in the Global South brings, it could also result in increased carbon emissions, harming the environment we value. Additionally, as countries grow wealthier, meat consumption tends to rise, increasing animal suffering—another value we may hold dear. This highlights how interconnected our actions are and how difficult it is to predict the ripple effects of our decisions.

In an ideal world, reputational markets would incentivize participants to account for these complexities by rewarding the most accurate forecasting models, for multiple types of intrinsic values in our collective value function.

For example, two questions posed on the reputational market regarding the intrinsic values of health and nature for a hypothetical company like BigOil Inc. might be: "How many tons of CO2-equivalent emissions will BigOil Inc. release in 2025?" and "How many deaths will BigOil Inc. cause due to unsafe working conditions in 2025?" Rather than focusing on a single outcome, the market generates probabilities for various possibilities. For instance, there could be a 5% chance that emissions exceed 5 million metric tons and a 90% chance they won’t fall below 0.01 million metric tons. The final prediction is calculated by multiplying each outcome by its probability and summing the results, which might yield a forecast of 0.3 million metric tons of CO2 emissions and 1,053 years of reduced healthy life due to pollution in 2025.
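The "multiply each outcome by its probability and sum" step is an ordinary expected value. The sketch below shows it for a hypothetical discretized emissions forecast. The bins and probabilities are invented for illustration and are not meant to reproduce the 0.3 figure above.

```python
def expected_value(distribution):
    """distribution: list of (outcome, probability) pairs.
    Probabilities are required to sum to 1."""
    total_p = sum(p for _, p in distribution)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(outcome * p for outcome, p in distribution)

# Hypothetical market-implied distribution for 2025 emissions,
# in million metric tons of CO2-equivalent: (representative outcome, probability).
emissions_forecast = [
    (0.005, 0.10),  # very low emissions
    (0.05, 0.60),   # most likely range
    (0.5, 0.25),    # elevated
    (5.0, 0.05),    # tail scenario
]

point_forecast = expected_value(emissions_forecast)
print(round(point_forecast, 4))  # 0.4055
```

Note how the 5% tail scenario contributes 0.25 of the 0.4055 total. Expected values are sensitive to low-probability, high-impact outcomes, which is arguably the behavior one wants when the subject is catastrophic risk.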

To calculate an overall score, these components are aggregated and weighted by their importance within the collective value function. For example, if 1,000 years of healthy living is weighted at 10% and 1 million metric tons of carbon at 5%, the score might be calculated as 0.3 × 0.05 + 1.053 × 0.1 = 0.1203.
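The arithmetic above can be written as a small weighted sum. This is only a sketch of that one calculation. The function name and dictionary keys are hypothetical, and the units follow the example (million metric tons of CO2, thousands of healthy life years lost).

```python
def reputational_score(forecasts, weights):
    """Weighted sum of forecast components; every forecast needs a weight."""
    missing = set(forecasts) - set(weights)
    if missing:
        raise KeyError(f"no weight for: {sorted(missing)}")
    return sum(forecasts[name] * weights[name] for name in forecasts)

# Forecasts from the example: 0.3 million tons CO2, 1,053 healthy life years lost.
forecasts = {
    "co2_million_tons": 0.3,
    "healthy_life_years_lost_thousands": 1.053,
}

# Weights from the collective value function in the example.
weights = {
    "co2_million_tons": 0.05,
    "healthy_life_years_lost_thousands": 0.10,
}

score = reputational_score(forecasts, weights)
print(round(score, 4))  # 0.1203
```

Both components in this example are harms, so a larger number presumably means a worse reputation. The text does not fix a sign convention, and a full system would need one.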

The beauty of a reputational market lies in its decentralized nature and versatility, making it applicable to any type of entity. Below is a graphical representation of how the reputational score for a hypothetical AI company, Irresponsible AI Inc., could be calculated.

Additionally, a reputational market is dynamic, adapting to new information as it becomes available. For example, if Irresponsible AI Inc.'s entire safety team resigns and media reports reveal that they rushed their latest chain-of-thought reasoning model to market without adequate testing, the market would respond by assigning a higher probability that "Irresponsible AI Inc. might enable a major terrorism attack in 2025."

However, for a reputational market to function effectively, there must be a reliable resolution mechanism for each prediction. But how can you resolve a question if adequate disclosures are never made? For example, how can predictions about BigOil Inc.'s emissions be verified if they never release data that could accurately estimate their emissions?

I suspect that mechanisms could be developed to incentivize disclosures. For instance, participants could vote on the most critical pieces of information that might change their predictions. The market might place significant weight on disclosures like "publishing an independently audited greenhouse gas report for Q1" or "disclosing the capacity of the oil drilling equipment to be used." If BigOil chooses to release this information, it would receive a temporary boost in its reputational score, reflecting the volume of votes that disclosure received. Conversely, if BigOil withholds relevant information, its score could be temporarily reduced, with penalties increasing as the bet’s resolution date approaches, further encouraging transparency.
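To illustrate how such an incentive could be shaped, here is a minimal sketch. The linear functional form and the parameters `max_boost`, `max_penalty`, and `horizon_days` are assumptions made for this example, not part of the proposal itself.

```python
def disclosure_adjustment(vote_share, disclosed, days_to_resolution,
                          horizon_days=365, max_boost=0.05, max_penalty=0.05):
    """Temporary score adjustment for one requested disclosure.

    vote_share: fraction of participant votes naming this disclosure as critical (0..1).
    disclosed: whether the entity has released the information.
    days_to_resolution: days left until the related prediction resolves.
    """
    if not 0.0 <= vote_share <= 1.0:
        raise ValueError("vote_share must be between 0 and 1")
    if disclosed:
        # Boost scales with how many participants asked for the disclosure.
        return max_boost * vote_share
    # Penalty grows linearly from 0 (a full horizon away) to its maximum at resolution.
    remaining = min(max(days_to_resolution, 0), horizon_days) / horizon_days
    return -max_penalty * vote_share * (1.0 - remaining)

# An audited emissions report requested by 40% of voters:
boost = disclosure_adjustment(0.4, disclosed=True, days_to_resolution=200)
early_penalty = disclosure_adjustment(0.4, disclosed=False, days_to_resolution=300)
late_penalty = disclosure_adjustment(0.4, disclosed=False, days_to_resolution=30)
print(boost, early_penalty, late_penalty)
```

With these assumed parameters, withholding a heavily requested disclosure costs little a year out and steadily more as the resolution date nears, while releasing it earns a boost proportional to its vote share.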

For this mechanism to be effective, the entity must first care about its reputational score, which leads us to principle 3.

Principle 3: Incentivize actions predicted to bring about outcomes we value

For a reputational system to succeed, the benefits of maintaining a high score must outweigh the costs. The real challenge is creating mechanisms that make entities genuinely care about their reputational scores. I believe this can be achieved by leveraging the interconnectedness of the global economy through the value-chain governance nodes discussed earlier.

The mechanism I propose is straightforward: doing business with an entity that has a low score will negatively impact your own score. In other words, failing to sanction entities with poor reputations will harm your own reputation.

Let’s apply this principle to the AI domain. For instance, if a hypothetical company, Irresponsible AI Inc., receives a poor reputational score because the market deems it likely to enable a terrorism attack within the next year, TSMC might become concerned about its own reputation and the risk of losing access to ASML's lithography machines. To avoid reputational damage, TSMC could choose to stop supplying chips to Irresponsible AI.

If TSMC ignores the warning and its reputation suffers, ASML faces a dilemma: continuing business with TSMC could harm ASML’s own reputation, jeopardizing crucial funding from major pension funds needed for developing future lithography machines. As a result, ASML might pressure TSMC to sever ties with Irresponsible AI to protect its ability to innovate. Once an agreement is reached, both companies’ reputations are preserved. At this point, Irresponsible AI must either change its practices or risk losing out to more ethical competitors who still have access to high-end computer chips.
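The cascade described above can be sketched as a simple propagation rule over business relationships. Everything in this example is an assumption made for illustration: the generic entity names, the scores, the `threshold` and `penalty` parameters, and the rule that each low-scoring partner subtracts a fixed amount.

```python
def propagate(base_scores, partners, threshold=0.5, penalty=0.2, rounds=10):
    """Lower each entity's score by `penalty` for every business partner
    whose current score is below `threshold`. Repeats until stable or
    until `rounds` is reached (the cap guards against oscillation in cycles)."""
    scores = dict(base_scores)
    for _ in range(rounds):
        updated = {}
        for entity, base in base_scores.items():
            bad = sum(1 for p in partners.get(entity, []) if scores[p] < threshold)
            updated[entity] = max(0.0, base - penalty * bad)
        if updated == scores:
            break
        scores = updated
    return scores

# Hypothetical base scores before any partner penalties (0 = worst, 1 = best).
base = {
    "irresponsible_ai": 0.10,
    "chip_foundry": 0.60,
    "lithography_maker": 0.65,
    "pension_fund": 0.90,
}

# Who does business with (or invests in) whom.
partners_before = {
    "chip_foundry": ["irresponsible_ai"],
    "lithography_maker": ["chip_foundry"],
    "pension_fund": ["lithography_maker"],
}
before = propagate(base, partners_before)

# The foundry stops supplying the low-scoring lab.
partners_after = dict(partners_before, chip_foundry=[])
after = propagate(base, partners_after)

print(before)
print(after)
```

In the first run the lab's poor score drags down the foundry, then the lithography maker, then the pension fund, one link per round. Once the foundry cuts ties, every other score returns to its base value, which is the incentive the mechanism relies on.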

For this system to work effectively, there must be a central governance node—like the innermost doll in a set of nesting dolls—with enough influence to shift each node from indifference to genuine concern for their reputational score. What might be a good candidate for such a governance node?

In OECD countries alone, pension funds manage $51 trillion in assets, likely representing about half of the world’s total. This immense leverage gives them significant influence in any value chain. Moreover, their fiduciary responsibilities to the public often make them more inclined to support practices that protect broader interests, such as a stable climate. In fact, pension funds are the main reason why 90% of the world’s assets under management have committed to the UN Principles for Responsible Investment (UN PRI), as mentioned earlier.

Forecasting future global catastrophic threats can not only encourage more responsible market behavior but also incentivize companies to develop "defensive technologies" that can mitigate these risks. For instance, defenses against engineered pandemics might include Far-UVC irradiation, advanced wastewater surveillance, and better filtration and ventilation systems. In AI, an antidote could be hardware locks in chips to prevent the training of uncertified models above a certain size. This approach, known as "differential technology development," involves prioritizing the creation of these antidotes based on predictions before advancing potentially dangerous technologies.

The idea that certain entities in a value chain can influence others' behaviors is not just theoretical—it's already in practice. For instance, corporate carbon accounting introduces the concept of Scope 3 emissions, which are the emissions from a company’s value chain. To reach net-zero emissions, companies must ensure their suppliers do the same, putting significant pressure on suppliers.

At Normative, a scaleup I founded, we leveraged this with our Carbon Network, where companies encourage their suppliers to join our platform to manage emissions. Since its launch in late 2023, our clients have, on average, invited 19 suppliers each, leading to thousands working to reduce their carbon emissions. At the top of this demand chain are large banks and pension funds, driving this change.

How is this different from existing ESG ratings?

In the previous section, I mentioned the UN PRI as an example of how pension funds can have a global impact. However, those familiar with corporate responsibility will know that the popularity of the UN PRI among institutional investors has led to flawed ESG rating products from companies like MSCI and Sustainalytics—serving as a case study in misaligned incentives. Quantifying qualitative factors like climate impact, diversity, and human rights into a single score is inherently challenging. Unlike reputational markets, which would be based on decentralized intrinsic values, ESG ratings often rely on subjective, opaque weightings. For instance, Refinitiv might assign a weight of 0.002 to greenhouse gas emissions, while Sustainalytics assigns 0.048—a twenty-fourfold difference. Similarly, MSCI might weigh corruption at 0.388, compared to Moody's 0.072. As a result, researchers found that the average correlation of ESG ratings for the same companies across different providers was only 54%, with some as low as 13.9%, indicating a lack of consensus. In contrast, credit ratings from agencies like S&P, Moody's, and Fitch correlate between 94% and 96%.

Moreover, ESG ratings often prioritize policies, targets, and promises over actual outcomes. Companies can improve their ESG scores by producing extensive reports about future climate impacts without making meaningful changes in the present. This ease of manipulation can lead to paradoxical results: for example, the electric car company Tesla has a lower ESG score than the oil company Shell and tobacco companies.

Unlike ESG ratings, reputational markets wouldn’t rely on arbitrary, opaque ratings from a centralized authority that fails to consider the outcomes we truly value. Instead, they would reward accurate predictions based on outcomes that align with intrinsic values, with each prediction updated in real time as new evidence emerges.

Reputational Markets is Not a Silver Bullet

I don't believe that reputational markets alone are a silver bullet. We urgently need to invest in a diverse portfolio of technologies to enable more decentralized global catastrophic risk governance. This includes aggressively funding defensive technologies like GPU hardware locks, AI evaluation software, watermarking of AI content, vaccine platforms, and supply chain traceability for essential components. In fact, I recently co-authored the AI Assurance Tech Report, which projects that AI defensive technologies will become a $270 billion market in just a few years. In a rational world, there would be hundreds of competing VC funds investing hundreds of billions into global coordination and defensive technologies.

However, I also believe in the power of centralized solutions. That’s why I’ve devoted some of my time—beyond running Normative—to supporting and leveraging my network to influence policies like the EU AI Act, the UN Global Digital Compact resolution, and California's SB1047.

Ultimately we need both centralized and decentralized mechanisms to promote cooperation. As noted earlier, the United States and its allies, through companies like Nvidia and TSMC, dominate the design and manufacturing of high-end computer chips. They have imposed export controls to prevent Chinese weapon manufacturers and government entities from accessing these chips. Meanwhile, China and its allies control most of the minerals and raw materials essential for chip production and could retaliate by imposing sanctions on U.S. chipmakers, potentially hindering America's AI advancements, which China views as a security threat.

In the short term, this scenario could escalate into a more intense trade war. However, the global AI value chain’s distributed nature—from raw materials to finished products—reduces the likelihood of any single player achieving absolute dominance. Over time, this interdependence may push both sides toward negotiation, recognizing their mutual need to develop advanced AI technologies. This realization could eventually lead to the creation of a multinational AGI consortium.

Why This Moment Matters

I'll conclude this three-part blog post with a quote from my book, The Darwinian Trap.

Every breathtaking vista that unfolds before us, every treasure we hold dear, every marvel of nature that infuses our world with wonder, everything we value—all of these are the legacies of the battle between Darwinian demons and angels, where somehow the angels came out on top.

Yet, what we have come to understand from the Fragility of Life Hypothesis is that the triumph of Darwinian angels is not a foregone conclusion. After what might be four billion years of cosmic happenstance, humanity could stand as the sole custodian of life within our local galaxy cluster, endowed with the unique ability to break free from the Darwinian shackles. Unlike the relentless spread of cancer or the virulence of viruses, or the merciless killing of predators, we possess the extraordinary potential to steer our destiny with reason, empathy, and foresight. By completing the Great Bootstrap, we safeguard not only ourselves but also countless generations without a voice to claim their right to existence.

Let us not view this responsibility with a sense of despair but as an exciting call to action. Millennia from now, our descendants may look back with amusement at our early struggles to forge lasting cooperation, marveling at how we allowed Darwinian demons to dictate our fates. Yet, they will also gaze upon this era with profound respect and gratitude, recognizing us as the pioneers who unlocked the formula for stable cooperation, who initiated our grand ascent. Our generation can mark the dawn of a cosmic odyssey, where humanity, armed with the gift of cooperation, embarks on a voyage to spread the light of life and beauty into the dark, cold, and vast cosmos.
