On the CrowdStrike Incident

post by Zvi · 2024-07-22 · 14 comments

Things went very wrong on Friday.

A bugged CrowdStrike update temporarily bricked quite a lot of computers, bringing down such fun things as airlines, hospitals and 911 services.

It was serious out there.

Ryan Peterson: Crowdstrike outage has forced Starbucks to start writing your name on a cup in marker again and I like it.

What (Technically) Happened

My understanding is that it was a rather stupid bug: a NULL pointer dereference in code written in C++, a memory-unsafe language.

Zack Vorhies: Memory in your computer is laid out as one giant array of numbers. We represent these numbers here in hexadecimal (base 16) because it’s easier to work with… for reasons.

The problem area? The computer tried to read memory address 0x9c (aka 156).

Why is this bad?

This is an invalid region of memory for any program. Any program that tries to read from this region WILL IMMEDIATELY GET KILLED BY WINDOWS.

So why is memory address 0x9c trying to be read from? Well because… programmer error.

It turns out that C++, the language crowdstrike is using, likes to use address 0x0 as a special value to mean “there’s nothing here”, don’t try to access it or you’ll die.

…

And what’s bad about this is that this is a special program called a system driver, which has PRIVILEGED access to the computer. So the operating system is forced to, out of an abundance of caution, crash immediately.

This is what is causing the blue screen of death. A computer can recover from a crash in non-privileged code by simply terminating the program, but not a system driver. When your computer crashes, 95% of the time it’s because it’s a crash in the system drivers.

If the programmer had done a check for NULL, or if they used modern tooling that checks these sorts of things, it could have been caught. But somehow it made it into production and then got pushed as a forced update by Crowdstrike… OOPS!
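Why 0x9c rather than 0x0? One common way to end up at a small address like that is to read a field of a structure through a NULL pointer: the CPU adds the field's offset to a base address of 0. The Python sketch below uses `ctypes` to build a hypothetical structure whose field happens to sit at offset 0x9c (the names and layout are invented for illustration, not taken from CrowdStrike's driver), and adds the kind of NULL check the quote says was missing.

```python
import ctypes

# Hypothetical layout: 0x9c bytes of other fields, then the field being read.
# This reproduces only the address arithmetic, not any real driver structure.
class HypotheticalEntry(ctypes.Structure):
    _fields_ = [
        ("other_fields", ctypes.c_uint8 * 0x9C),
        ("target", ctypes.c_uint32),
    ]

FIELD_OFFSET = HypotheticalEntry.target.offset  # 0x9c == 156

def field_address(base_address: int) -> int:
    """Address the CPU touches when reading entry->target from this base."""
    return base_address + FIELD_OFFSET

def read_target_checked(entry_ptr):
    """Return entry.target, or None if the pointer is NULL (the missing check)."""
    if not entry_ptr:  # a NULL ctypes pointer is falsy
        return None
    return entry_ptr.contents.target


print(hex(field_address(0)))  # 0x9c: a NULL base plus the field offset
null_ptr = ctypes.POINTER(HypotheticalEntry)()
print(read_target_checked(null_ptr))  # None instead of a crash
print(read_target_checked(ctypes.pointer(HypotheticalEntry(target=42))))  # 42
```

In Python, dereferencing a NULL `ctypes` pointer without the check raises a `ValueError`; in a C++ kernel driver, the same read at 0x9c takes the whole machine down.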

Here is another technical breakdown.

A non-technical breakdown would be:

- CrowdStrike is set up to run whenever you start the computer.

- Then someone pushed an update to a ton of computers.

- Which is something CrowdStrike was authorized to do.

- The update contained a stupid bug that would have been caught if those involved had used standard practices and tests.

- With the bug, it tries to access memory in a way that causes a crash.

- Which also crashes the computer.

- So you have to do a manual fix to each computer to get around this.

- If this had been malicious it could probably have permawiped all the computers, or inserted Trojans, or other neat stuff like that.

- So we dodged a bullet.

- Also, your AI safety plan needs to account for the fact that this was the level of security mindset and caution at CrowdStrike, despite CrowdStrike having this level of access and being explicitly in the security-mindset business. They were given this level of access to millions of computers, and their stock was down only 11% on the day, so they will probably keep most of that access, and we aren’t going to fine them out of existence either.
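One of the "standard practices" alluded to in the list is a staged rollout: ship an update to a tiny ring of machines first, and halt if any of them fall over. A minimal sketch, assuming a hypothetical `deploy_and_check` hook that installs the update on one host and reports whether it stayed healthy; the ring sizes are illustrative, not CrowdStrike's actual process.

```python
from typing import Callable, List, Sequence

def staged_rollout(
    hosts: Sequence[str],
    deploy_and_check: Callable[[str], bool],
    ring_fractions: Sequence[float] = (0.001, 0.01, 0.1, 1.0),
) -> List[str]:
    """Deploy an update ring by ring, halting at the first ring with a failure.

    `deploy_and_check` is a hypothetical hook: install on one host, then
    report whether that host is still healthy. Returns the updated hosts.
    """
    updated: List[str] = []
    for fraction in ring_fractions:
        # Each ring extends coverage to this fraction of the fleet (at least one host).
        target = max(1, int(len(hosts) * fraction))
        ring = list(hosts[len(updated):target])
        healthy = [deploy_and_check(host) for host in ring]
        updated.extend(ring)
        if not all(healthy):
            break  # later rings never receive the bad update
    return updated


fleet = [f"host{i}" for i in range(10_000)]
# A bad update crashes every host that installs it; a good one crashes none.
print(len(staged_rollout(fleet, lambda host: False)))  # 10
print(len(staged_rollout(fleet, lambda host: True)))   # 10000
```

With the bad update, only the first ring of 10 machines is bricked instead of the whole fleet; the cost is that a good update takes longer to reach everyone.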

Who to Blame?

George Kurtz (CEO CrowdStrike): CrowdStrike is actively working with customers impacted by a defect found in a single content update for Windows hosts. Mac and Linux hosts are not impacted. This is not a security incident or cyberattack. The issue has been identified, isolated and a fix has been deployed.

We refer customers to the support portal for the latest updates and will continue to provide complete and continuous updates on our website. We further recommend organizations ensure they’re communicating with CrowdStrike representatives through official channels. Our team is fully mobilized to ensure the security and stability of CrowdStrike customers.

Dan Elton: No apology. Many people have been wounded or killed by this. They are just invisible because we can’t point to them specifically. But think about it though — EMS services were not working. Doctors couldn’t access EMR & hospitals canceled medical scans.

Stock only down 8% [was 11% by closing].

I don’t think the full scope of this disaster has really sunk in. Yes, the problems will be fixed within a few days & everything will go back to normal. However, 911 services are down across the country. Think about that for a second. Hospitals around the world running on paper.

It’s hard to map one’s mind around since all the people who have been killed and will be killed by this — and I’m sure there are many — are largely invisible.

When given the hypothetical scenario of an update with this bug being pushed, and no other information, Claude’s median estimate was roughly 1,000 deaths due to the outage.

Where Claude got it wrong is it expected a 50%+ drop in share price for CrowdStrike. We should be curious why this did not happen. When told it was 11%, Claude came up with many creative potential explanations, and predicted that this small a drop would become an object of future study.

Then again, perhaps no one cares about reputation these days? You get to have massive security failures and people still let you into their kernels?

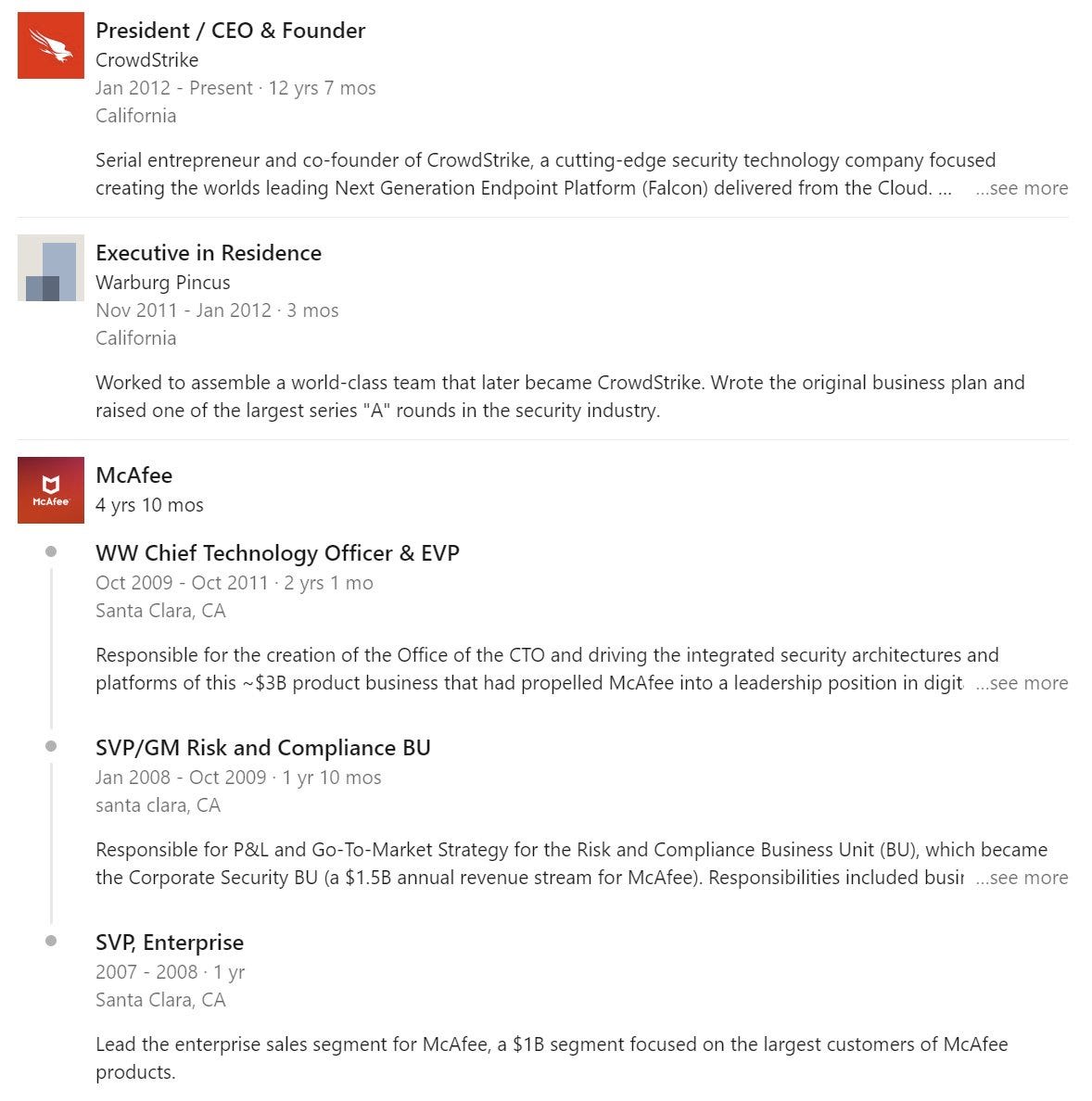

Anshel Sag: For those who don’t remember, in 2010, McAfee had a colossal glitch with Windows XP that took down a good part of the internet. The man who was McAfee’s CTO at that time is now the CEO of Crowdstrike. The McAfee incident cost the company so much they ended up selling to Intel.

I mean, sure, it looks bad, now, in hindsight.

At this rate, the third time will be an AGI company.

So do we blame George Kurtz? Or do we blame all of you who let it happen?

How Did We Let This Happen

Aside from ‘letting a company run by George Kurtz access your kernel,’ that is.

It happened because various actors did not do deeply standard things they should obviously have been doing.

A fun game is to watch everyone say ‘the real problem is X and Y is a distraction’ with various things being both X and Y in different statements. It can all be ‘real’ problems.

Owen Lynch: Everyone is talking about how memory safety would have stopped the crowdstrike thingy. Seems to me that’s a distraction; the real problem is that the windows security model is reactive (try to write software that detects hacks) rather than proactive (run processes in sealed sandboxes with permissions granted by-need instead of by-default). Then there’s little need for antivirus in the same sense.

Of course, the kernel managing these sandboxes needs to be memory safe, but this is a low bar; ideally it should be either exhaustively fuzzed (like SQLite) or actually formally verified.

But most software should be allowed to be horrendously incorrect or actually malicious, but only in its little box.
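Owen Lynch's "permissions granted by-need instead of by-default" can be illustrated with a toy capability check. This is a minimal sketch of the idea, not how Windows or any real sandbox works; the `Sandbox` class and the permission strings are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Set

@dataclass
class Sandbox:
    """Toy deny-by-default sandbox: a process may do only what it was granted."""
    name: str
    granted: Set[str] = field(default_factory=set)

    def grant(self, permission: str) -> None:
        """Grant one permission, by need, rather than everything by default."""
        self.granted.add(permission)

    def allows(self, permission: str) -> bool:
        # Anything not explicitly granted is refused, so a buggy or malicious
        # program can misbehave only inside the box it was given.
        return permission in self.granted


editor = Sandbox("text-editor")
editor.grant("read:~/Documents")
print(editor.allows("read:~/Documents"))    # True
print(editor.allows("load_kernel_driver"))  # False
```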

Here is a thread where they debate whether to blame CrowdStrike or Microsoft.

Luke Parrish: Microsoft designed their OS to run driver files without even a checksum and you say they aren’t responsible? They literally tried to execute a string of zeroes!

Jennifer Marriott: Still the issue is CrowdStrike. If I buy a program and install it on my computer and it bricks my computer I blame the program not the computer.

…

Luke Parrish: CrowdStrike is absolutely to blame, but so is Microsoft. Microsoft’s software, Windows, is failing to do extremely basic checks on driver files before trying to load them and give them full root access to see and do everything on your computer.

This is analogous to the fire safety triangle: Heat, fuel, and oxygen. Any one of those can be removed to prevent combustion. Multiple failures led to this outcome. Microsoft could have prevented this with good engineering practices, just as CrowdStrike could have.
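Whatever one concludes about who should have done it (a comment at the end of this post disputes the "no checksum" framing), the general defense is to validate data before acting on it. A minimal sketch of pre-parse checks on an update file, assuming a hypothetical format with a 4-byte magic header and a SHA-256 digest published alongside the file; CrowdStrike's real file format is not described here.

```python
import hashlib
from typing import Optional

MAGIC = b"CHNL"  # hypothetical 4-byte header for this sketch

def validate_update(blob: bytes, expected_sha256: str) -> Optional[str]:
    """Return a reason to reject `blob`, or None if it passes every check."""
    if not any(blob):  # true for an empty file and for a file of only zero bytes
        return "empty or all-zero file"
    if not blob.startswith(MAGIC):
        return "bad magic header"
    if hashlib.sha256(blob).hexdigest() != expected_sha256:
        return "digest mismatch"
    return None


good = MAGIC + b"detection rules go here"
digest = hashlib.sha256(good).hexdigest()  # assumed to be published with the file
print(validate_update(good, digest))         # None
print(validate_update(bytes(4096), digest))  # empty or all-zero file
print(validate_update(good + b"!", digest))  # digest mismatch
```

A matching digest only proves the file arrived as it was sent; a file that was already broken at the source passes it, which is why the structural checks matter as well.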

The market did not think Microsoft would suffer especially adverse effects. The Wall Street Journal might say this was the ‘latest woe for Microsoft’ but their stock on Friday was down less than the Nasdaq. That seems right to me. Yes, Microsoft could and should have prevented this, but ultimately it will not cause people to switch.

The Wall Street Journal also attempts to portray this as a failure of Microsoft to have a ‘closed ecosystem’ the way Apple does (in a limited way on a Mac, presumably; this is not a phone). This, they say, is what you get when you let others actually do things for real on your machine, the horrors. There are at least two ways this is Obvious Nonsense, even if you grant a bunch of other absurd assumptions.

- Linux exists.

- Microsoft is barred from not giving this access by a 2009 EU consent decree.

Did Microsoft massively screw up by not guarding against this particular failure mode? Oh, absolutely, everyone agrees on that. But they failed (as I understand it, essentially everyone agrees) by not having proper safety checks and failure modes, not by failing to deny access.

There was a clear pattern where ‘critical infrastructure’ that is vitally important to keep online like airlines and banks and hospitals went down, while the software companies providing other non-critical services had no such issues.

‘Too important to improve’ (or ‘too vital to allow?’) is remarkably common.

Where you cannot f** around, you cannot find out. And where you cannot do either, it is hard to find good help.

Microsoft Worm: In retrospect it’s pretty ~funny how most shitware SaaS companies & social media companies exclusively run Real Software for Grown-Ups while critical infrastructure (airlines, hospitals, etc.) all uses dotcom-era software from comically incompetent zombie firms with 650 PE ratios.

Gallabytes: We used to explain this bifurcation as a function of size but with most of the biggest companies being tech giants now that explanation has been revealed as cope. what’s the real cause?

Sarah Constantin: My guess would be it’s “do any good software engineers work there or not?” Good software engineers work at both startups and Big Tech cos but I have *one* smart programmer friend who works at a bank, and zero at hospitals, airlines, etc.

Gallabytes: This is downstream I think and not universal – plenty of good programmers in gaming industry but it’s still full of this kind of madness. So far the most accurate classifier I’ve got is actually “does this company run on Windows?”

Scott Leibrand: I think it comes down to whether they hire mostly nerd vs. normie employees.

Illiane: Pretty sure it’s just a result of these tech companies starting out with a « cleaner » blank slate than critical infra that’s been here for decades and relies on mega legacy system which would be very hard and risky to replace. Banks still largely run on COBOL mainframes!

Tech companies at least started out able to find out and hire good help, and built their engineering cultures and software stacks around that. Banks do not have that luxury.

Regulatory Compliance

Why else might we have had this stunning display of incompetence?

Lina Khan, head of the FTC, has no sense of irony.

Lina Khan: All too often these days, a single glitch results in a system-wide outage, affecting industries from healthcare and airlines to banks and auto-dealers. Millions of people and businesses pay the price.

These incidents reveal how concentration can create fragile systems.

Concentrating production can concentrate risk, so that a single natural disaster or disruption has cascading effects.

This fragility has contributed to shortages in areas ranging from IV bags to infant formula.

Another area where we may lack resiliency is cloud computing.

In response to @FTC’s inquiry, market participants shared concerns about widespread reliance on a handful of cloud providers, noting that consolidation can create single points of failure.

And we’re continuing to collect public comment on serial acquisitions and roll-up strategies across the economy.

If you’ve encountered an area where a series of deals has consolidated a market, we welcome your input.

Yes. The problem is too much concentration in cloud providers, says Lina Khan. We must Do Something about that. I mean, how could this possibly have happened? That all the major cloud providers went down at the same time over the same software bug?

Must be a lack of regulation.

Except, well, actually, says Mark Atwood.

Mark Atwood: If you are in a regulated industry, you are required to install something like Crowdstrike on all your machines. If you use Crowdstrike, your auditor checks a single line and moves on. If you use anything else, your auditor opens up an expensive new chapter of his book.

The real culprit here is regulatory capture. Notice that everybody getting hit hard by this is in a heavily regulated industry: finance, airlines, healthcare, etc. That’s because those regulations include IT security mandates, and Crowdstrike has positioned themselves as the only game in town for compliance. Hence you get this software monoculture prone to everything getting hit at once like this.

Anders Sandberg: A good point. I saw the same in the old FHI-Amlin systemic risk of risk modelling project: regulators inadvertently reduce model diversity, making model-mediated systemic risk grow. “Sure, you can use a model other than RMS, but it will be painful for both of us…”

Ray Taylor: what if you use Mac / Linux?

Anders Sandberg: You will have to use the right operating system to run the industry standard software. Even if it is Windows XP in 2017.

Some disputed this. I checked with Claude 3.5 Sonnet. It looks like there are plenty of functional alternative services, and yes they will work, but CrowdStrike does automated compliance reporting and is widely recognized, and this is actually core to their pitch of why companies should use them – to reduce compliance costs.

I also checked with two friends who know about such things. It seems CrowdStrike did plausibly have a superior product to the alternatives, even discounting the regulatory questions.

It was also pointed out that while a lot of installs were there to please auditors, much of what the auditors were checking for was not formal government regulation. It was largely industry standards without legal enforcement that you nonetheless need to meet to get contracts, such as SOC 2 or ISO 27001.

In the end, is there a functional difference? In some ways, probably not.

So, given the increasing number of requirements Claude was able to list off, and the costs of non-compliance, everyone in these ‘critical infrastructure’ businesses ended up turning to the company whose main differentiator, and perhaps to them its main product offering, was ‘regulatory compliance.’

That then set us up with additional single points of failure. It also meant that the company in charge of those failure points had a culture built around checking off boxes on government forms rather than actual computer security or having a security mindset.

You know who did not use CrowdStrike? Almost anyone who did not face this regulatory burden. It was only on 8.5 million Windows machines.

Byrne Hobart: <1% penetration. This Crowdstrike company seems like it’s got a nice TAM to go after, just have to make sure they don’t do anything to mess it up.

Another nice bit that I presume is a regulatory compliance issue: Rules around passwords and keys are reliably absurd.

Dan Elton: Many enterprises in healthcare use disk encryption like Bitlocker which complicates #CrowdStrike cleanup.

This is what one IT admin reports:

“We can’t boot into safe mode because our BitLocker keys are stored inside of a service that we can’t login to because our AD is down.”

Another says “Most of our comms are down, most execs’ laptops are in infinite BSOD boot loops, engineers can’t get access to credentials to servers.”
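The BitLocker story is a circular dependency: the recovery path depends on the very systems that are down. A minimal sketch of checking a recovery plan against a dependency graph; the service names are hypothetical, loosely modeled on the admin's report, and the edge from domain controllers to the CrowdStrike agent is an assumption for illustration.

```python
from typing import Dict, List

def depends_on(graph: Dict[str, List[str]], start: str, target: str) -> bool:
    """True if `start` transitively depends on `target` (iterative depth-first search)."""
    stack, seen = [start], set()
    while stack:
        node = stack.pop()
        if node == target:
            return True
        if node in seen:
            continue
        seen.add(node)
        stack.extend(graph.get(node, []))
    return False


# Hypothetical graph: each service maps to what it needs in order to work.
deps = {
    "bitlocker_recovery_keys": ["key_management_service"],
    "key_management_service": ["active_directory"],
    "active_directory": ["domain_controllers"],
    "domain_controllers": ["crowdstrike_sensor"],  # assumption: DCs run the agent too
    "offline_key_printout": [],                    # keys kept on paper, no dependencies
}

# Does recovering from the outage require the thing that caused the outage?
print(depends_on(deps, "bitlocker_recovery_keys", "crowdstrike_sensor"))  # True
print(depends_on(deps, "offline_key_printout", "crowdstrike_sensor"))     # False
```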

Consequences

Would it be better if the disaster were worse, such as what likely happens to a crypto project in this spot? Crypto advocate says yes, Gallabytes points out actually no.

Dystopia Breaker: in crypto, when a project has a large incompetence event (hack, insider compromise, whatever), the project loses all of their money and is dead forever. In tradtech/bureautech, when a project has a large incompetence event, they do a ‘post mortem’ and maybe get some nastygrams.

Consider for a moment the incentives that this dynamic creates and the outcomes that arise by dialing out these two incentive gradients into the future.

It’s actually worse than ‘they get some nastygrams’, what usually happens is that regulators (who usually know less than nothing about the technosphere) demand band-aid solutions (surveillance, usually) that increase systemic risk [e.g. CrowdStrike itself].

Gallabytes: And that’s a huge downside of crypto!

Most systems will be back to normal by Monday, while in crypto many would be irreversibly broken.

It’d be better still if our institutions learned from this failure but I’m not holding my breath. you basically only see this kind of failure in over regulated oligopolistic markets, so the case for massive deregulation is much clearer than migration to crypto.

As George Carlin famously said, somewhere in the middle, the truth lies. Letting CrowdStrike off the hook because they ‘are the standard’ creates insufficiently strong incentives. Taking everything involved down hard is worse.

Careful With That AI

What about the role of AI?

Andrej Karpathy: What a case study of systemic risk with CrowdStrike outage… that a few bits in the wrong place can brick ~1 billion computers and all the 2nd, 3rd order effects of it. What other single points of instantaneous failure exist in the technosphere and how do we design against it.

Davidad: use LLMs to reimplement all kernel-mode software with formal verification.

How about we use human software engineers to do the rebuild, instead?

It is great that we can use AIs to write code faster, and enable people to skill up. For jobs like ‘rewrite the kernel,’ I am going to go ahead and say I want to stick with the humans. There are many overdetermined reasons.

Patrick Collison (responding to Karpathy): I’ve always thought that we should run scheduled internet outages.

Andrej Karpathy: National bit flip day.

Indomitable American Soul: It’s crazy when you think that this could have all been avoided by testing the release on a single sandbox machine.

Andrej Karpathy: I just feel like this is the particular problem but not the *actual* deeper problem. Any part of the system should be allowed to go *crazy*, randomly or even adversarially, and the rest of it should be robust to that. This is what you want, even if robustness is very often at tension with efficiency.
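The difference between a crash in an ordinary program and one in a kernel driver can be seen in miniature with process isolation: a component that reads a bad address kills only its own process, and the supervising process carries on. A minimal sketch, assuming a POSIX-like system, where `ctypes.string_at(0)` (reading from address 0) crashes the child with a segmentation fault. A kernel-mode driver has no such boundary around it, which is Karpathy's point.

```python
import subprocess
import sys

# Child program that deliberately reads from address 0, like a NULL dereference.
CRASHING_COMPONENT = "import ctypes; ctypes.string_at(0)"

def run_isolated(code: str) -> int:
    """Run `code` in a separate Python process and return its exit status."""
    result = subprocess.run([sys.executable, "-c", code], capture_output=True)
    return result.returncode


status = run_isolated(CRASHING_COMPONENT)
# On Linux the child is killed by SIGSEGV (returncode -11); the parent survives.
print(f"component exited with status {status}; supervisor still running")
```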

There are two problems.

- This error should not have been able to bring down the system.

- This error should never have happened even if it couldn’t crash the system.

Either of these on its own should establish that we have a terrible situation that poses catastrophic risks even without AI, and which AI will make a lot worse, and urgently needs fixing.

Together, they are terrifying.

The obvious failure mode is not malicious. It is exactly what happened this time, except in the future, with AI.

- AI accidentally outputs buggy code.

- Human does not catch it.

- What do you mean ‘unit tests’ and ‘canaries’?

- Whoops.

Or the bug is more subtle than this, so we do run the standard tests, and it passes. That happens all the time, it is not usually quite this stupid and obvious.
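Fuzzing, which Owen Lynch mentions above in connection with SQLite, is one standard answer to bugs that slip past hand-written tests: throw large numbers of random and malformed inputs at a parser and require that it only ever fails in a controlled way. A minimal sketch with a hypothetical length-prefixed record format; real coverage-guided fuzzers are far more effective than this random loop.

```python
import random
import struct
from typing import List

class ParseError(ValueError):
    """The only exception the parser is allowed to raise."""

def parse_records(blob: bytes) -> List[bytes]:
    """Parse a hypothetical format: repeated [little-endian u16 length][payload]."""
    records, pos = [], 0
    while pos < len(blob):
        if pos + 2 > len(blob):
            raise ParseError("truncated length field")
        (length,) = struct.unpack_from("<H", blob, pos)
        pos += 2
        if length == 0:
            raise ParseError("zero-length record")  # e.g. a file full of zeroes
        if pos + length > len(blob):
            raise ParseError("record runs past end of data")
        records.append(blob[pos:pos + length])
        pos += length
    return records

def fuzz(iterations: int = 10_000, seed: int = 0) -> int:
    """Feed random inputs; any exception other than ParseError propagates as a bug."""
    rng = random.Random(seed)
    rejected = 0
    for _ in range(iterations):
        blob = bytes(rng.randrange(256) for _ in range(rng.randrange(64)))
        try:
            parse_records(blob)
        except ParseError:
            rejected += 1  # a controlled rejection is fine
    return rejected


print(parse_records(struct.pack("<H", 3) + b"abc"))  # [b'abc']
print(fuzz())  # count of cleanly rejected inputs; finishing without a crash is the pass
```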

The next failure is that the AI intentionally outputs bugged code, or malicious code, whether or not a human instructed it (explicitly, implicitly or by unfortunate implication) otherwise.

And of course the other failure mode is that the AI, or someone with an AI, intentionally seeks out the attack vector in order to deploy such code.

Shako: A rogue AI could probably brick every computer in the world indefinitely with ongoing zero days to exploit things like we saw today. Probably not too far from the capability either.

Arthur: It won’t need zero days, we’ll have given it root power globally because it’s convenient.

Leo Gao (OpenAI, distinct thread): Thankfully, it’s unimaginable that an AGI could ever compromise a large fraction of internet connected computers.

Jeffrey Ladish: Fortunately there are no single points of failure or over reliances on a single service provider with system level access to a large fraction of the computers that run, uh, everything.

Everyone: “Oh no the AGI will be able to discover 0days in every piece of software, we’ll be totally pwned”

AGI: “Why would I need 0days?”

Where should we worry about concentration? Is this a reason to want everyone to be using different AIs from different providers, instead of the same AI?

That depends on what constitutes the single point of failure (SPOF).

If the SPOF is ‘all the AIs turn rogue or go crazy or shut off at the same time’ then you want AI diversity.

If the SPOF is ‘every distinct frontier AI is itself an SPOF, because if even one of them goes fully off the rails then that is a catastrophe’ then you do not want AI diversity.

These questions can have very different answers for catastrophic or existential risk, versus mundane risk.

For mundane risk, you by default want your systems to fail at different times in distinct ways, but you need to worry about long dependency chains where you are only as strong as the weakest link. So if you are (for example) combining five different AI systems that each are the best at a particular subtask, and cannot easily swap them out in time, then you are vulnerable if any of them go haywire.
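The weakest-link point can be made concrete with the textbook reliability formulas, assuming failures are independent. If a pipeline needs all of its components and each fails with probability p_i, it works with probability (1 - p_1)(1 - p_2)...(1 - p_k); if any one of several redundant components suffices, everything fails only with probability p_1 p_2 ... p_k. A short sketch with illustrative numbers:

```python
from math import prod
from typing import List

def chain_success(fail_probs: List[float]) -> float:
    """Every component is required (a dependency chain): P(all of them work)."""
    return prod(1 - p for p in fail_probs)

def redundant_failure(fail_probs: List[float]) -> float:
    """Any one component suffices: P(all fail), valid only if failures are independent."""
    return prod(fail_probs)


five_systems = [0.01] * 5  # illustrative: five AI systems, 1% failure chance each
print(round(chain_success(five_systems), 4))  # 0.951
print(redundant_failure(five_systems))        # about 1e-10
```

If the five "redundant" systems share one bug, as every CrowdStrike install did, their failures are perfectly correlated and the chance that all fail together is the single 1%, not 1e-10.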

For existential or catastrophic risk, it depends on your threat model.

Any single rogue agent under current conditions, be it human or AI, could potentially have set off the CrowdStrike bug, or a version of it that was far worse. There are doubtless many such cases. So do you think that ‘various good guys with various AIs’ could then defend against that? Would ‘some people defend and some don’t’ be sufficient, or do you need to almost always (or actually always) successfully defend?

I am very skeptical of the ‘good guy with an AI’ proposal, even if such defenses are physically possible (and I am skeptical of that too). Why didn’t a ‘good guy with a test machine or a debugger’ stop the CrowdStrike update? Because even if there was a perfectly viable way to act responsibly, that does not mean we are going to do that if it is trivially inconvenient or is not robustly checked.

Again, yes, if we allow it you are going to give the AI root access and take yourself out of the loop, because not doing so is going to be annoying, and expensive, and you are in competition with people who are willing to do such things. If you don’t, someone else will, and their AIs will end up with the market share and the power.

Indeed, the very fact that these many AIs are allowed to be in this intense competition with each other with rapid iteration will make it all but certain that corners will be cut to absurd degrees, especially when it comes to things like collective security.

Another thing that can happen is the one dangerous AI suddenly becomes a lot of dangerous AIs, because it can be copied, or it can scale its resources with similar effect. Or by having many such potentially dangerous AIs, you place authority over it into many hands, and what happens if even one of them chooses to be sufficiently irresponsible or malicious with it?

What about the risk of regulatory capture happening with safety in AI, the way it happened here with mundane computer security and CrowdStrike? What happens if everyone is hiring a company, Acme Safety Compliance (ASC), to handle all their ‘AI safety’ needs, and ASC’s actual product is regulatory compliance?

Well, then we’re in very big trouble. As in dead.

Every time I look at an AI lab’s scaling policy, I say some form of:

- If they implement the spirit of a good version of this document, I don’t know if that is good enough, but that would be a big help.

- If they implement the letter of even a good version of this document, and game the requirements, then that is worth very little if anything.

- If they don’t even implement the letter of it in the breach, it’s totally worthless.

- We cannot rely on their word that they will implement even the letter of this.

This is another reason most of the value, right now, is in disclosure and information requirements on the largest frontier models. If you have to tell me what you are doing, then that is not an easy thing to meaningfully ‘capture.’

But yeah, this is going to be tough and a real danger. It always is. And it always needs to be balanced against the alternative options available, and what happens if you do nothing.

It can also be pointed out that this is another logical counter to ‘but you need to tell me exactly what constitutes compliance, and if I technically do that then I should have full safe harbor,’ as many demand for themselves in many contexts. That is a very good way to get exactly what is written down, and no more, to get the letter only and not the spirit. That works if there is a risk that can indeed be taken out of the room by adhering to particular rules. But if the risk is inherent in the system and not so easy to deal with, you cannot make the situation non-risky on one side of a line.

One thing to note is that CrowdStrike was an active menace. It was de facto mandatory that they be given this level of access. If CrowdStrike was (for example) instead a red teaming service that attempted to break into your computers, it would have been much harder (but not, indirectly, impossible) for it to cause this disaster.

Another key insight is that you do not only have to work around things that might go wrong when everyone does their jobs properly, and you face an actually hard problem.

Your solution must also be designed anticipating the stupidest failures.

Because that is what you probably first get.

And saying ‘oh there are like 5 ways someone would take action such that this would obviously not happen’ is a surprisingly weak defense.

Then, later, you also get the failures that happen when the AI is smarter than you.

And again, then, whatever happens, there is a good chance many will say ‘it would have been fine if we hadn’t acted like completely incompetent idiots and followed even a modicum of best practices’ and on this exact set of events they will have been right. But that will also be why that particular set of events happened, rather than something harder to fathom.

Unbanked

Also down were the banks. Anything requiring computer access was stopped cold.

Patrick McKenzie: In “could have come out of a tabletop exercise”, sudden surge by many customers of ATM transactions has them flagging customers as likely being fraud impacted.

Good news: you have an automated loop which allows a customer to recognize a transaction.

Bad news: Turns out that subdomain is running on Windows.

I’m not trying to grind their nose in it. Widespread coordinated outages are terrible and the few things that knock out all the PCs are always going to be nightmares.

I do have to observe that some people who write regulations which effectively mandate a monoculture don’t know what SPOF stands for and our political process is unlikely to put two and two together for them.

Same story at three banks, two GSFIs and one large regional, for anyone wanting a data point. Well I guess I know next week’s Bits about Money topic.

It was only a single point of failure for Windows machines that trusted CrowdStrike. But in a corporate context, that is likely to either be all or none of them.

That created some obvious issues, and offered opportunity for creative solutions.

Patrick McKenzie: Me: *cash*

Tradesman: Wait how did you get that with the banks down?

Me: *explains*

Tradesman: Oh that’s creative.

Me: Nah. Next plan was creative.

Tradesman: What was that?

Me: Going to the church and buying all cash on hand with a check.

Tradesman: What.

Me: I don’t drink.

Tradesman: What.

Me: The traditional business to use in this situation is the local bar, but I don’t drink and so the local bar doesn’t know me, so that’s right out.

Tradesman: What.

Me: Though come to think of it I certainly know someone who knows both me and the bar owner, so I could probably convince them to give me a workweek’s take on a handshake.

Tradesman: This is effed up.

Me: I mean money basically always works like this, in a way.

Called someone who I (accurately) assumed would have sufficient cash on hand and said “I need a favor.”, then he did what I’d do on receiving the same phone call.

Another obvious solution is ‘keep an emergency cash fund around.’ In a world where one’s bank accounts might all get frozen at once, or the banks might go down for a while, it seems sensible to have such a reserve somewhere you can access it in this kind of emergency. You are not giving up much in interest.

This is also a damn good reason to not ban or eliminate physical cash, in general.

14 comments

comment by DanArmak · 2024-07-22

Did Microsoft massively screw up by not guarding against this particular failure mode? Oh, absolutely, everyone agrees on that.

I'm sorry, this is wrong, and that everyone thinks so is also wrong - some people got this right.

Normal Windows kernel drivers are sandboxed to some extent. If a driver segfaults, it will be disabled on the next boot and the user informed; if that fails for some reason, you can tell the computer to boot into 'safe mode', and if that fails, there is recovery mode. None of these options require the manual, tedious, error-prone recovery procedure that the Crowdstrike bug does.

This is because the Crowdstrike driver wants to protect you from malware in other drivers (i.e. malicious kernel modules). (ETA: And it wants to inspect and potentially block syscalls from user-space applications too.) So it runs in early-loading mode, before any other drivers, and it has special elevated privileges normal drivers do not get, in order to be able to inspect other drivers loaded later.

(ETA: I've seen claims that Crowdstrike also adds code to UEFI and maybe elsewhere to prevent anyone from disabling it and to reenable it; which is another reason the normal Windows failsafes for crashing drivers wouldn't work. UEFI is, roughly, the system code running before your OS and bootloader.)

The fact that you cannot boot Windows if Crowdstrike does not approve it is not a bug in either Windows or Crowdstrike. It's by design, and it's an advertised feature! It's supposed to make sure your boot process is safe, and if that fails, you're not supposed to be able to boot!

Crowdstrike are to be blamed for releasing this bug - at all, without testing, without a rolling release, without a better fallback/recovery procedure, etc. Crowdstrike users who run mission-critical stuff like emergency response are also to blame for using Crowdstrike; as many people have pointed out, this is usually pure box-ticking security theater for the individuals making the choice whether to use Crowdstrike; and Crowdstrike is absolutely not at the quality level of the core Windows kernel, and this is not a big surprise to experts.

But Crowdstrike - the product, as advertised - cannot be built without the ability to render your computer unbootable, at least not without a radically different (micro)kernel design that no mainstream OS kernel in the world actually has. (A more reasonable approach these days would be to use a hypervisor, which can be much smaller and simpler and so easier to vet for bugs, as the recovery mechanism.)
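DanArmak's distinction can be modeled as a toy boot sequence: an ordinary driver that crashes is skipped on the next boot, while a driver marked as must-load (standing in for early-launch security software) is never skipped, so its crash repeats until someone intervenes by hand. This is a hypothetical simplification for illustration, not Windows' actual boot logic; the `must_load` flag is invented.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

@dataclass
class Driver:
    name: str
    crashes: bool
    must_load: bool = False  # hypothetical stand-in for early-launch, can't-skip software

def boot(drivers: List[Driver], max_attempts: int = 3) -> Tuple[bool, int]:
    """Toy boot loop: after a crash, skip the offending driver unless it must load.

    Returns (booted, attempts_used).
    """
    disabled: Set[str] = set()
    for attempt in range(1, max_attempts + 1):
        # Drivers load in list order; find the first enabled one that crashes.
        culprit = next((d for d in drivers if d.crashes and d.name not in disabled), None)
        if culprit is None:
            return True, attempt
        if not culprit.must_load:
            disabled.add(culprit.name)  # the failsafe: boot without it next time
        # A must-load driver is never disabled, so the same crash repeats.
    return False, max_attempts


print(boot([Driver("printer", crashes=True)]))                 # (True, 2)
print(boot([Driver("sensor", crashes=True, must_load=True)]))  # (False, 3)
```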

reply by DanArmak · 2024-07-22

Addendum 2: this particular quoted comment is very wrong, and I expect this is indicative of the quality of the quoted discussion, i.e. these people do not know what they are talking about.

Luke Parrish: Microsoft designed their OS to run driver files without even a checksum and you say they aren’t responsible? They literally tried to execute a string of zeroes!

Luke Parrish: CrowdStrike is absolutely to blame, but so is Microsoft. Microsoft’s software, Windows, is failing to do extremely basic checks on driver files before trying to load them and give them full root access to see and do everything on your computer.

The reports I have seen (of attempted reverse-engineering of the Crowdstrike driver's segfault) say it did not attempt to execute the zeroes from the file as code; the crash happened elsewhere, likely while the driver was trying to parse the file. Context: the original workaround for the problem was to delete a file which contains only zeroes (at least on some machines; reports are inconsistent), but there's no direct reason to think the driver is trying to execute this file as code.
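To make the "crashed while parsing" point concrete, here is a minimal sketch of defensive parsing for a content file. The file layout (a `CHNL` magic, an entry count, fixed-size entries) and the names `parse_content_file` and `ContentFileError` are hypothetical, not Crowdstrike's actual format. The idea is only that every header field and length is checked before anything is read past it, so a truncated or all-zero file is rejected with an error. In C or C++ driver code, skipping the equivalent checks is one way a malformed file turns into a read through a bad pointer.

```python
import struct

# Hypothetical layout, NOT Crowdstrike's real format:
#   header: 4-byte magic b"CHNL" + little-endian uint32 entry count
#   body:   `count` entries, each two little-endian uint32 fields
MAGIC = b"CHNL"
HEADER = struct.Struct("<4sI")
ENTRY = struct.Struct("<II")


class ContentFileError(ValueError):
    """Raised instead of crashing when a content file is malformed."""


def parse_content_file(data: bytes) -> list[tuple[int, int]]:
    """Parse a content file, checking every size before reading past it."""
    if len(data) < HEADER.size:
        raise ContentFileError("file is shorter than its header")
    if not any(data):
        raise ContentFileError("file is all zero bytes")
    magic, count = HEADER.unpack_from(data, 0)
    if magic != MAGIC:
        raise ContentFileError(f"bad magic {magic!r}")
    needed = HEADER.size + count * ENTRY.size
    if len(data) < needed:
        raise ContentFileError(f"header claims {count} entries, file holds fewer")
    return [ENTRY.unpack_from(data, HEADER.size + i * ENTRY.size) for i in range(count)]
```

In a kernel driver the rejection path would also need to leave the driver in a usable state (for example, keep using the previous file), which Python's exceptions gloss over.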

And: Windows does not run drivers "without a checksum"! Drivers have to be signed by Microsoft, and drivers with early-loading permissions have to be super-duper-signed in a way you probably can't get just by paying them a few thousand dollars.

But it's impossible to truly review or test a compiled binary for which you have no source code or even debug symbols, and which is deliberately obfuscated in many ways (as people have been reporting when they looked at this driver crash) because it's trying to defend itself against reverse-engineers designing attacks. And of course it's impossible to formally prove that a program is correct. And of course it's written in a memory-unsafe language, i.e. C++, because every single OS kernel and its drivers are written in such a language.

Furthermore, the Crowdstrike product relies on very quickly pushing out updates to (everyone else's) production to counter new threats / vulnerabilities being exploited. Microsoft can't test anything that quickly. Whether Crowdstrike can test anything that quickly, and whether you should allow live updates to be pushed to your production system, is a different question.
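A rolling (staged) release is the usual way to limit the blast radius of a bad update, even when updates must ship fast. Below is a minimal sketch, assuming the fleet is split into cumulative rings and some telemetry reports a crash rate after each ring. `Ring`, `staged_rollout`, the ring names and the 0.1% threshold are all hypothetical, and a real pipeline would also wait for a soak period between rings, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Ring:
    name: str
    fraction: float  # cumulative share of the fleet that has the update once this ring is done


def staged_rollout(rings: list[Ring],
                   crash_rate_after: Callable[[Ring], float],
                   max_crash_rate: float = 0.001) -> tuple[bool, float]:
    """Push an update ring by ring, halting at the first ring whose observed
    crash rate exceeds max_crash_rate.

    Returns (completed, fraction_of_fleet_exposed)."""
    exposed = 0.0
    for ring in rings:
        exposed = ring.fraction          # this ring now has the update
        if crash_rate_after(ring) > max_crash_rate:
            return False, exposed        # later rings never receive it
    return True, exposed


RINGS = [
    Ring("internal", 0.001),
    Ring("canary", 0.01),
    Ring("early", 0.1),
    Ring("broad", 1.0),
]
```

With an update that crashes every machine it reaches, `staged_rollout(RINGS, lambda ring: 1.0)` stops after the first ring, so 0.1% of the fleet is exposed instead of all of it.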

Anyway, who's supposed to pay Microsoft for extensive testing of Crowdstrike's driver? They're paid to sign off on the fact that Crowdstrike are who they say they are, and at best that they're not a deliberately malicious actor (as far as we know they aren't). Third party Windows drivers have bugs and security vulnerabilities all the time, just like most software.

Finally, Crowdstrike to an extent competes with Microsoft's own security products (i.e. Microsoft Defender and whatever the relevant enterprise-product branding is); we can't expect Microsoft to invest too much in finding bugs in Crowdstrike!

↑ comment by mishka · 2024-07-22T19:20:53.457Z

And of course it's impossible to formally prove that a program is correct.

That's not true. This industry has a lot of experience formally proving correctness of mission-critical software, even in the previous century. The Aegis missile cruiser fire control system is one example; software for Ariane rockets is another, although the Ariane V maiden flight disaster illustrates that even a formal proof does not fully guarantee safety. Nevertheless, having a formal proof tends to dramatically increase the level of safety.

But this kind of formal proof is very expensive: it increases the cost of software by at least an order of magnitude, and probably more than that. That's why Zvi's suggestion

Davidad: use LLMs to reimplement all kernel-mode software with formal verification.

How about we use human software engineers to do the rebuild, instead?

does not seem realistic. One does need heavy AI assistance to bring the cost of formally verified software down to affordable levels.

↑ comment by DanArmak · 2024-07-22T20:30:01.722Z

It's impossible to prove that an arbitrary program, which someone else gave you, is correct. In general that's equivalent to the halting problem; see Rice's theorem, etc.

Yes, we can prove various properties of programs we carefully write to be provable, but the context here is that a black-box executable Crowdstrike submits to Microsoft cannot be proven reliable by Microsoft.
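The halting-problem point is the classic diagonal argument, which fits in a few lines. Here `claims_halts` stands for any hypothetical decider that always returns an answer, and `make_contrarian` (also hypothetical) builds a program that does the opposite of whatever the decider predicts about it. Rice's theorem extends the same idea to any nontrivial property of a program's behavior, such as "never dereferences a null pointer".

```python
from typing import Callable

Program = Callable[[], object]


def make_contrarian(claims_halts: Callable[[Program], bool]) -> Program:
    """Build a program that does the opposite of what `claims_halts` predicts for it."""
    def contrarian() -> object:
        if claims_halts(contrarian):
            while True:        # predicted to halt, so never halt
                pass
        return "halted"        # predicted to run forever, so halt at once
    return contrarian


def never_halts(program: Program) -> bool:
    """A candidate decider that always answers "runs forever"."""
    return False
```

For `never_halts`, the contrarian halts immediately, so the decider is wrong. A decider that answered "halts" would be wrong too, because the contrarian would then loop forever. Programs written from the start to be provable sidestep this, since nobody needs a decider for arbitrary code.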

There are definitely improvements we can make. Counting just the ones already made in (parts of) other operating systems, we could:

- Rewrite in a memory-safe language like Rust

- Move more stuff to userspace. Drivers for e.g. USB devices can and should be written in userspace, using something like libusb. This goes for every device that doesn't need performance-critical code or to manage device-side DMA access, which still leaves a bunch of things, but it's a start.

- Sandbox more kinds of drivers in a recoverable way, so they can do the things they need to efficiently access hardware, but are still prevented from accessing the rest of the kernel and userspace, and can 'crash safe'. For example, Windows can recover from crashes in graphics drivers specifically - which is an amazing accomplishment! Linux eBPF can't access stuff it shouldn't.

- Expose more kernel features via APIs, so people don't have to write drivers to do things that aren't literally driving a piece of hardware. Then even if Crowdstrike has super-duper-permissions, a crash in Crowdstrike itself doesn't bring down the rest of the system; it would have to do that intentionally.
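The eBPF-style idea from the list above (check code before it is allowed to run, instead of trusting it) can be illustrated with a toy verifier. This is a minimal sketch over a made-up five-instruction bytecode, not real eBPF, whose verifier tracks register types, pointer bounds and much more. `verify` rejects any program with an out-of-range memory offset, an unknown register, a backward jump, or no final `exit`. Because every jump goes forward and the last instruction is `exit`, any accepted program terminates, and `run` never touches memory outside its buffer.

```python
from typing import Optional

BUF_SIZE = 16   # number of memory slots a program may touch
NUM_REGS = 4


def _reg_ok(r) -> bool:
    return isinstance(r, int) and 0 <= r < NUM_REGS


def _off_ok(o) -> bool:
    return isinstance(o, int) and 0 <= o < BUF_SIZE


def verify(prog: list[tuple]) -> Optional[str]:
    """Return None if `prog` is safe to load, else the reason it is rejected."""
    if not prog or prog[-1] != ("exit",):
        return "program must end with exit"
    for pc, ins in enumerate(prog):
        if not isinstance(ins, tuple) or not ins:
            return f"pc {pc}: malformed instruction"
        op, args = ins[0], ins[1:]
        if op == "load" and len(args) == 2 and _reg_ok(args[0]) and _off_ok(args[1]):
            continue    # reg <- buf[offset]; the constant offset is checked here
        if op == "store" and len(args) == 2 and _off_ok(args[0]) and _reg_ok(args[1]):
            continue    # buf[offset] <- reg
        if op == "add" and len(args) == 2 and _reg_ok(args[0]) and _reg_ok(args[1]):
            continue    # dst <- dst + src
        if op == "jz" and len(args) == 2 and _reg_ok(args[0]):
            if isinstance(args[1], int) and pc < args[1] < len(prog):
                continue    # forward jumps only, so every run terminates
            return f"pc {pc}: jump target must be ahead of the jump and inside the program"
        if op == "exit" and not args:
            continue
        return f"pc {pc}: invalid instruction {ins!r}"
    return None


def run(prog: list[tuple], buf: list[int]) -> int:
    """Execute a program only after it passes `verify`; returns register 0 at exit."""
    reason = verify(prog)
    if reason is not None:
        raise ValueError(f"rejected by verifier: {reason}")
    if len(buf) != BUF_SIZE:
        raise ValueError(f"buffer must have exactly {BUF_SIZE} slots")
    regs = [0] * NUM_REGS
    pc = 0
    while True:
        op, *args = prog[pc]
        if op == "exit":
            return regs[0]
        if op == "load":
            regs[args[0]] = buf[args[1]]
        elif op == "store":
            buf[args[0]] = regs[args[1]]
        elif op == "add":
            regs[args[0]] += regs[args[1]]
        elif op == "jz" and regs[args[0]] == 0:
            pc = args[1]
            continue
        pc += 1
```

The design choice is that all the checking happens once, at load time, so the interpreter itself needs no per-access bounds checks for the offsets it was given.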

Of course any such changes both cost a lot and take years or decades to become ubiquitous. Windows in particular has an incredible backwards compatibility story, which in practice means backwards compatibility with every past bug they ever had. But this is a really valuable feature for many users who have old apps and, yes, drivers that rely on those bugs!

↑ comment by mishka · 2024-07-22T20:41:08.634Z

It's impossible to prove that an arbitrary program, which someone else gave you, is correct.

That is, of course, true. The chances that an arbitrary program is correct are very close to zero, and one can't prove a false statement. So one should not even try. An arbitrary program someone gave you is almost certainly incorrect.

The standard methodology for formally correct software is joint development of a program and of a proof of its correctness. One starts from a specification, and refines it into a proof and a program in parallel.

One can't write a program and then try to prove its correctness as an afterthought. The goal of having formally verified software needs to be present from the start, and then there are methods for creating this kind of software jointly with a proof of its correctness (but these methods are currently very labor-expensive).
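Specification-first development can be shown at toy scale. In the sketch below, the specification of an 8-bit saturating add is written first as an obviously correct reference, and the implementation (in the wrap-then-detect style of fixed-width code) is then checked against it on every one of the 65,536 possible inputs. Exhaustive checking like this only works because the input domain is tiny; it is a much weaker cousin of the proof-based methods described above, which rely on dedicated proof tools rather than enumeration. The function names are hypothetical.

```python
def spec_sat_add_u8(a: int, b: int) -> int:
    """Specification: the true sum, clamped to the 8-bit maximum."""
    return min(a + b, 0xFF)


def sat_add_u8(a: int, b: int) -> int:
    """Implementation: add with 8-bit wraparound, then detect the wrap."""
    s = (a + b) & 0xFF
    return s if s >= a else 0xFF   # a wrapped sum is always smaller than a


def check_against_spec() -> int:
    """Compare implementation and spec on all 256 * 256 inputs; return the count checked."""
    checked = 0
    for a in range(256):
        for b in range(256):
            if sat_add_u8(a, b) != spec_sat_add_u8(a, b):
                raise AssertionError(f"mismatch at a={a}, b={b}")
            checked += 1
    return checked
```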

(And yes, perhaps the Windows environment is too messy to deal with formally. Although one would think that fire control for fleet missile defense would be fairly messy as well, yet people claimed that they created verified Ada code for that back in the 1990s (or perhaps the late 1980s, I am not sure). The numbers they quoted during a mid-1990s talk were 500 thousand lines of Ada and 50 million dollars (it would be way more expensive today).)

↑ comment by khafra · 2024-07-24T07:30:22.537Z

It would specifically be impossible to prove the Crowdstrike driver safe because, by necessity, it regularly loads new data provided by Crowdstrike threat intelligence, and changes its behavior based on those updates.

Even if you could get the CS driver to refuse to load new updates without proving certain attributes of those updates, you would also need some kind of assurance of the characteristics of every other necessary part of the Windows OS, in every future update.
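The weaker version of this idea, a driver that refuses updates failing some checks, can be sketched as a gate that keeps the last update that passed. `UpdateGate` and the example checks are hypothetical. They verify structural attributes of the data, not the behavior of the code that consumes it, so the point about needing assurances for the rest of the OS still applies.

```python
from typing import Callable, Optional

Check = Callable[[bytes], Optional[str]]  # returns a problem description, or None if fine


class UpdateGate:
    """Apply a content update only if every check passes; otherwise keep the
    last update that did pass (the "last known good")."""

    def __init__(self, checks: list[Check]) -> None:
        self.checks = checks
        self.active: Optional[bytes] = None
        self.rejected: list[str] = []

    def offer(self, update: bytes) -> bool:
        for check in self.checks:
            problem = check(update)
            if problem is not None:
                self.rejected.append(problem)
                return False          # self.active is left untouched
        self.active = update
        return True


def non_empty(update: bytes) -> Optional[str]:
    return "empty update" if not update else None


def not_all_zero(update: bytes) -> Optional[str]:
    return "update is all zero bytes" if not any(update) else None


def max_size(limit: int) -> Check:
    def check(update: bytes) -> Optional[str]:
        return f"update larger than {limit} bytes" if len(update) > limit else None
    return check
```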

↑ comment by mishka · 2024-07-24T13:35:02.658Z

No, let's keep in mind the Aegis fire control for missile defense example.

This is a highly variable situation, the "enemy action" can come in many forms, from multiple directions at once, the weather can change rapidly, the fleet to defend might have a variety of compositions and spatial distributions, and so on. So one deals with a lot of variable and unpredictable factors. Yet, they were able to formally establish some properties of that software, presumably to satisfaction of their DoD customers.

It does not mean that they have a foolproof system, but the reliability is likely much better because of that effort at formal verification of software.

With Windows, who knows. Perhaps it is even more complex than that. But formal methods are often able to account for a wide range of external situations and data. For a number of reasons, they nevertheless don't provide a full guarantee (there is a trap of thinking "formally verified => absolutely safe", and it's important not to get caught in that trap; "formally verified" just means "much more reliable in practice").

I was trying to address the general point of whether provably correct software is possible (obviously yes, since it is actually practiced occasionally for some mission-critical systems). I don't know if it makes sense in the context of Windows kernels. From what people have been saying recently, Microsoft says that the European regulator forced them not to refuse CrowdStrike-like updates (so much for thinking, "what could or should be done in a sane world").

↑ comment by DanArmak · 2024-07-22T17:46:26.499Z

Addendum: Crowdstrike also has MacOS and Linux products, and those are a useful comparison in the matter of whether we should be blaming Microsoft.

On MacOS they don't have a kernel module (called a kext on MacOS), for two reasons. First, kexts are now disabled by default (I think you have to go to recovery mode to turn them on?). Second, the kernel provides APIs to accomplish most things without having to write a kext. So Crowdstrike doesn't need to (hypothetically) guard against malicious kexts, because those are not nearly as much of a threat as malicious or plain buggy kernel drivers are on Windows.

One reason why this works well is that MacOS only supports a small first-party set of hardware, so Apple doesn't need to allow a bunch of third-party vendor drivers like Windows does. Microsoft can't forbid third-party kernel drivers: there are probably tens of thousands of legitimate ones that can't be replaced easily or at all, even if someone were available to port old code to hypothetical new userland APIs. (Although Microsoft could provide much better userland APIs for new code; e.g. WinUSB seems to be very limited.)

(Note: I am not a Mac user and this part is not based on personal expertise.)

On Linux, Crowdstrike uses eBPF, which is a (relatively novel) domain-specific language for writing code that will execute inside the Linux kernel at runtime. eBPF is sandboxed in the kernel, and while it can (I think) crash it, it cannot e.g. access arbitrary memory. And so you can't use eBPF to guard against malicious Linux kernel modules.

This is indeed a superior approach, but it's hard to blame Microsoft for not having an innovation in place that nobody had ten years ago and that hasn't exactly replaced most preexisting drivers even on Linux, and removing support for custom drivers entirely on Windows would probably stop it from working on most of the hardware out there.

Then again, most linux systems aren't running a hardened configuration, and getting userspace root access is game over anyway - the attacker can just install a new kernel for the next boot, if nothing else. To a first approximation, Linux systems are secure by configuration, not by architecture.

ETA: I'm seeing posts [0] that say Crowdstrike updates broke Linux installations, multiple times over the years. I don't know how they did that specifically, but it doesn't require a kernel module to make a machine crash or become unbootable... But I have not checked those particular reports.

Either way, no-one is up in arms saying to blame Linux for having a vulnerable kernel design!

[0] https://news.ycombinator.com/item?id=41005936, and others.

↑ comment by mishka · 2024-07-22T17:50:31.682Z

Thanks!

Crowdstrike is absolutely not at the quality level of the core Windows kernel, and this is not a big surprise to experts.

I wonder if there are players in that space who are on that quality level.

Or would the only reliable solution be for Microsoft itself to provide this functionality as one of their own optional services? (Microsoft should hopefully be able to maintain the quality level on par with their own core Windows kernel.)

comment by Mitchell_Porter · 2024-07-24T05:55:34.940Z

If I have calculated correctly, CrowdStrike's fatal update was sent out about half an hour after the end of Trump's acceptance speech at the RNC. As the Washington Post informs us (article at MSN), CrowdStrike and Trump have a history. I have conceived three interpretations of this coincidence: one, it was just a coincidence; two, it was a deliberate attempt to drive Trump's speech out of the headlines; three, the prospect of Trump 2.0 led to such consternation at CrowdStrike that they fumbled and made a human error.

↑ comment by gjm · 2024-07-24T22:22:02.088Z

Given how much harm such an incident could do CrowdStrike, and given how much harm it could do an individual at Crowdstrike who turned out to have caused it on purpose, your second explanation seems wildly improbable.

The third one seems pretty improbable too. I'm trying to imagine a concrete sequence of events that matches your description, and I really don't think I can. Especially as Trump's formal acceptance of the GOP nomination can hardly have been any sort of news to anyone.

(Maybe I've misunderstood your tone and your comment is simply a joke, in which case fair enough. But if you're making any sort of serious suggestion that the incident was anything to do with Mr Trump, I think you're even crazier than he is.)

↑ comment by Mitchell_Porter · 2024-07-25T13:47:25.189Z

It turns out there's an even more straightforward conspiracy theory than anything I suggested: someone had something to hide from a second Trump presidency; the crash of millions of computers was a great cover for the loss of that data; and the moment when Trump's candidacy was officially confirmed, was as good a time as any to pull the plug.

Pursuing this angle probably won't help with either AI doomscrying or general epistemology, so I don't think this is the place to weigh in detail whether the means, motive, and opportunity for such an act actually existed. That is for serious investigators to do. But I will just point out that the incident occurred during a period of unusual panic for Trump's opponents: the weeks from the first presidential debate, when Biden's weakness was revealed, until the day when Biden finally withdrew from the race.

edit (added 9 days later): Decided to add what I now consider the most likely non-coincidental explanation, which is that this was an attack by state-backed Russian hackers in revenge for CrowdStrike's 2016 role exposing them as the ones who likely provided DNC emails to Wikileaks.

↑ comment by gjm · 2024-07-25T15:22:11.591Z

Nothing you have said seems to make any sort of conspiracy theory around this more plausible than the alternative, namely that it's just chance. There are 336 half-hours per week; when two notable things happen in a week, about half a percent of the time one of them happens within half an hour of the other. The sort of conspiracies you're talking about seem to me more unlikely than that.

(Why a week? Arbitrary choice of timeframe. The point isn't a detailed probability calculation, it's that minor coincidences happen all the time.)
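The half-a-percent figure can be checked directly. Assuming the two events fall independently and uniformly at random within a 168-hour week, the chance they land within half an hour of each other is 1 - (1 - 1/336)^2, about 0.59%, in line with the rough figure above; requiring a specific order (one event in the half hour after the other) halves that to about 0.3%. The sketch computes the exact value and a seeded Monte Carlo estimate; the function names are hypothetical.

```python
import random


def p_within(window_h: float, period_h: float) -> float:
    """Exact P(|X - Y| < window) for X, Y independent and uniform on [0, period]."""
    r = window_h / period_h
    return 1 - (1 - r) ** 2


def p_within_mc(window_h: float, period_h: float,
                trials: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same probability."""
    rng = random.Random(seed)
    hits = sum(abs(rng.uniform(0, period_h) - rng.uniform(0, period_h)) < window_h
               for _ in range(trials))
    return hits / trials
```

`p_within(0.5, 168)` equals 671/112896, roughly 0.0059.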