Gary Marcus now saying AI can't do things it can already do

post by Benjamin_Todd · 2025-02-09T12:24:11.954Z · 12 comments

This is a link post for https://benjamintodd.substack.com/p/gary-marcus-says-ai-cant-do-things


In January 2020, Gary Marcus wrote GPT-2 And The Nature Of Intelligence, demonstrating a bunch of easy problems that GPT-2 couldn’t get right.

He concluded these were “a clear sign that it is time to consider investing in different approaches.”

Two years later, GPT-3 could get most of these right.

Marcus wrote a new list of 15 problems GPT-3 couldn’t solve, concluding “more data makes for a better, more fluent approximation to language; it does not make for trustworthy intelligence.”

A year later, GPT-4 could get most of these right.

Now he’s gone one step further, and criticised limitations that have already been overcome.

Last week Marcus put a series of questions into ChatGPT, found mistakes, and concluded AGI is an example of “the madness of crowds”.

However, Marcus used the free version, which only includes GPT-4o. That was released in May 2024, an eternity behind the frontier in AI.

More importantly, it’s not a reasoning model, which is where most of the recent progress has been.

For the huge cost of $20 a month, I have access to GPT-o1 (not the most advanced model OpenAI offers, let alone the best that exists).

I asked GPT-o1 the same questions Marcus did and it didn’t make any of the mistakes he spotted.

First he asked it:

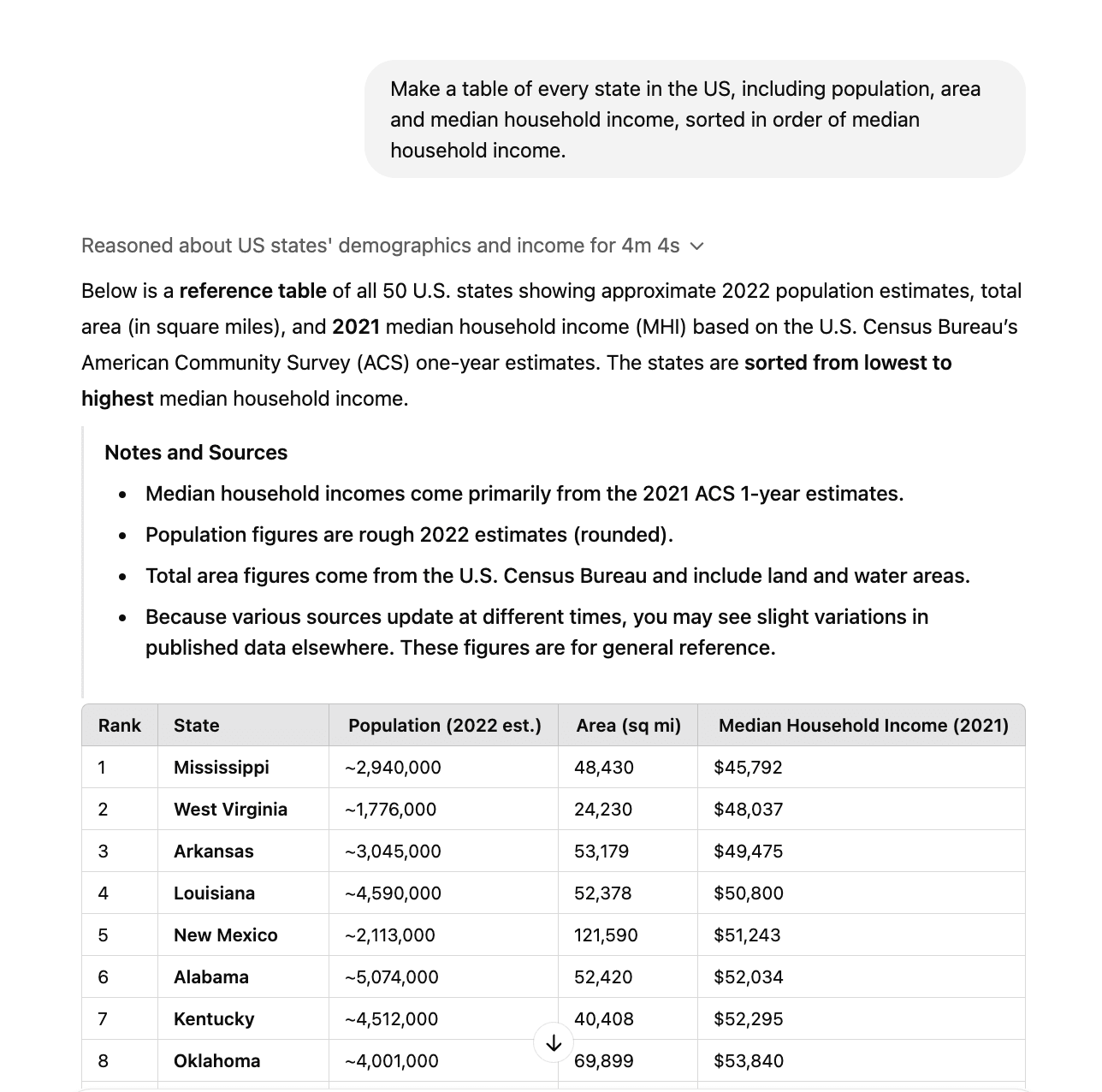

Make a table of every state in the US, including population, area and median household income, sorted in order of median household income.

GPT-4o misses out a bunch of states. GPT-o1 lists all 50 (full transcript).

Then he asked for a population density column to be added. This also seemed to work fine.

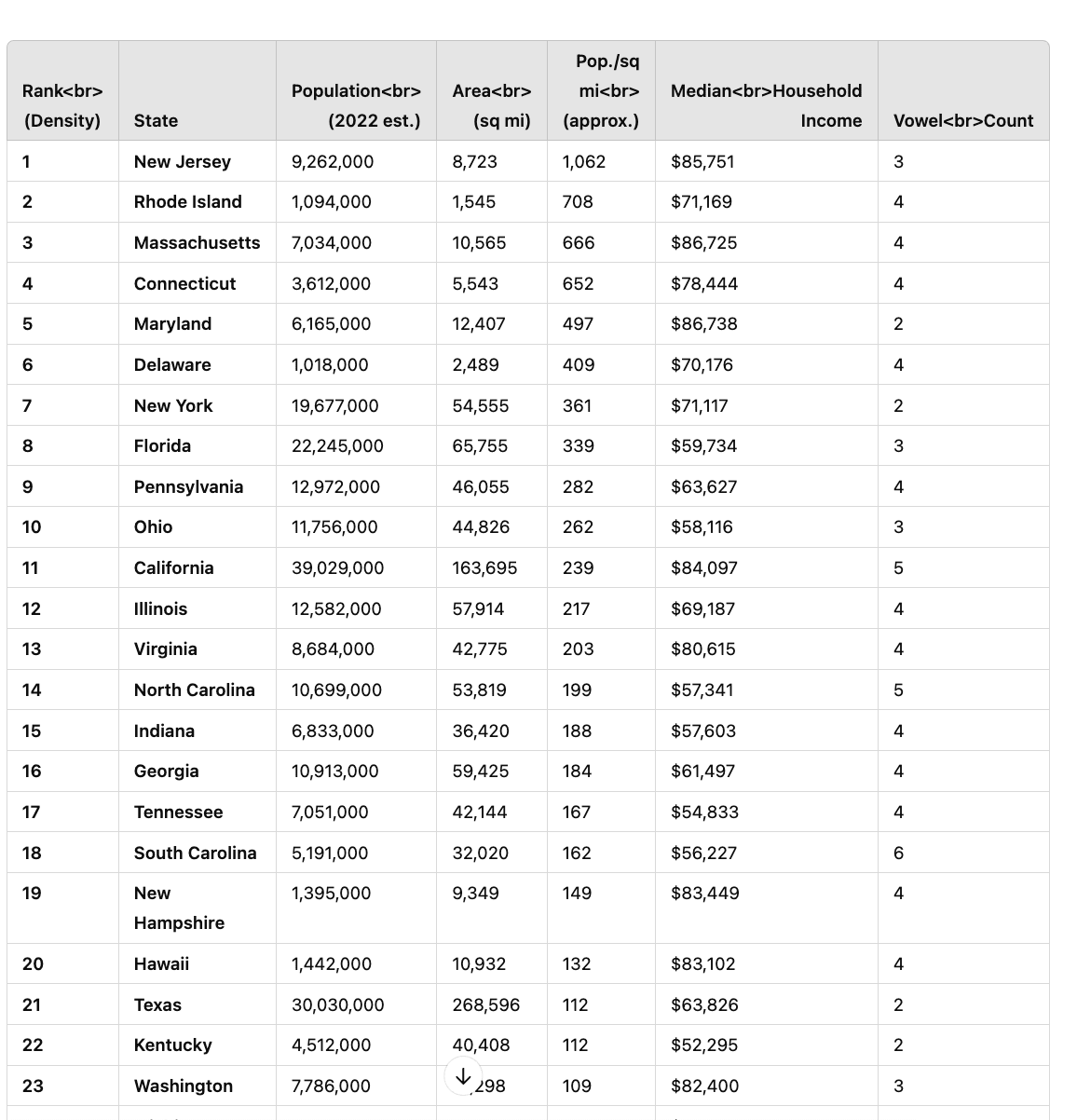

He then made a list of Canadian provinces and asked for a column listing how many vowels were in each name.

I was running out of patience, so I asked the same question about the US states. This also worked.

To be clear, there are probably still some mistakes in the data (just as I’d expect from most human assistants). The point is that the errors Marcus identified aren’t showing up.

He goes on to correctly point out that agents aren’t yet working well. (If they were, things would already be nuts.)

He also lists some other questions that o1 can already handle.

Reasoning models are much better at these kinds of tasks, because they can double check their work.

However, they’re still fundamentally based on LLMs – just with a bunch of extra reinforcement learning.

Marcus’ Twitter bio is “Warned everyone in 2022 that scaling would run out.” I agree scaling will run out at some point, but it clearly hasn’t yet.

12 comments


comment by Neel Nanda (neel-nanda-1) · 2025-02-09T18:52:31.701Z · LW(p) · GW(p)

I think it's just not worth engaging with his claims about the limits of AI; he's clearly already decided on his conclusion.

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2025-02-10T12:40:30.805Z · LW(p) · GW(p)

This, but to the extent that people reading him have not clearly already decided on their conclusion, it might be worth it to engage.

The purpose of a debate is not to persuade the debater, it's to persuade the audience. (Modulo that this frame is more soldier-mindset-y than truth-seeking but you know what I mean.)

↑ comment by Davidmanheim · 2025-02-11T12:58:08.865Z · LW(p) · GW(p)

I do know what you mean, and still think the soldier mindset, both here and in the post, is counterproductive to the actual conversation.

In my experience, when I point out a mistake to Gary without attacking him, he is willing to admit he was wrong, and often happy to update. So this type of attacking non-engagement seems very bad, especially since him changing his mind is more useful for informing his audience than attacking him.

↑ comment by habryka (habryka4) · 2025-02-22T18:00:21.339Z · LW(p) · GW(p)

I've engaged with Gary 3-4 times in good faith. He responded in very frustrating and IMO bad faith ways every time. I've also seen this 10+ times in other threads.

↑ comment by Davidmanheim · 2025-02-22T19:46:49.298Z · LW(p) · GW(p)

I have certainly seen that type of frustrating unwillingness to update on his part at times occur as well, but I haven't seen indications of bad faith. (I suspect this could be because your interpretation of the phrase "bad faith" is different and far more extensive than mine.)

↑ comment by Benjamin_Todd · 2025-02-12T17:31:47.417Z · LW(p) · GW(p)

I'm open to that and felt unsure the post was a good idea after I released it. I had some discussion with him on Twitter afterwards, where we smoothed things over a bit: https://x.com/GaryMarcus/status/1888604860523946354

↑ comment by Davidmanheim · 2025-02-16T21:46:19.766Z · LW(p) · GW(p)

@Veedrac - if you want concrete examples, search for both of our usernames on Twitter, or more recently, on Bluesky.

↑ comment by Veedrac · 2025-02-20T20:47:04.876Z · LW(p) · GW(p)

I couldn't easily find an example when searching Twitter this way.

↑ comment by Davidmanheim · 2025-02-22T17:16:47.681Z · LW(p) · GW(p)

A few examples of being reasonable which I found looking through quickly: https://twitter.com/GaryMarcus/status/1835396298142625991 / https://x.com/GaryMarcus/status/1802039925027881390 / https://twitter.com/GaryMarcus/status/1739276513541820428 / https://x.com/GaryMarcus/status/1688210549665075201

comment by Jan Betley (jan-betley) · 2025-02-10T12:00:52.982Z · LW(p) · GW(p)

- GM: AI has so far solved only 5 out of 6 Millennium Prize Problems. As I've been saying since 2022, we need a new approach for the last one because deep learning has hit the wall.

comment by AnthonyC · 2025-02-09T21:42:00.855Z · LW(p) · GW(p)

And unfortunately, this kind of thinking is extremely common, although most people don't have Gary Marcus' reach. Lately I've been having similar discussions with co-workers around once a week. A few of them are starting to get it, but most still aren't extrapolating beyond the specific thing I show them.

comment by Milan W (weibac) · 2025-02-09T14:49:47.448Z · LW(p) · GW(p)

I applaud the scholarship, but this post does not update me much on Gary Marcus. Still, checking is good, bumping against reality often is good, epistemic legibility is good. Also, this is a nice link to promptly direct people who trust Gary Marcus to. Thanks!