Goal-directedness: imperfect reasoning, limited knowledge and inaccurate beliefs

post by Morgan_Rogers · 2022-03-19T17:28:04.695Z · LW · GW · 1 commentsContents

Questions I would like to solicit feedback on:

Constrained rationality[1]

Imperfect Reasoning

Limited Knowledge

Inaccurate beliefs

Explanations with constraints

The world models are part of the explanation

Goals attached to world models

Different representations of knowledge

Implementing reasoning constraints

Layered explanations

A four-part conjecture

None

1 comment

This is the second post in my Effective-Altruism-funded project aiming to deconfuse goal-directedness. Comments are welcomed. All opinions expressed are my own, and do not reflect the attitudes of any member of the body sponsoring me.

In my first post I started thinking about goal-directedness in terms of explanations, and considered some abstract criteria and mathematical tools for judging and comparing explanations. My intention is to consider a class of explanations representing goals that an agent might be pursuing, and to directly compare these with other classes of explanations of the agent's behaviour; goal-directed behaviour will be behaviour which is better explained by pursuit of goals than by other possible explanatory models.

However, in the process, I wasn't really able to escape the problem I mentioned in the comments of my preliminary post: that goal-directedness seems like it should be mostly independent of competence. Any explanation in the form of a goal, interpreted as a reward function to be optimized, will have low predictive accuracy of an agent's behaviour if that agent happens not to be good at reaching its goal.

I gave the example of a wasp getting caught in a sugar water trap. Explaining this behaviour in terms of the wasp wanting to collect sugar should produce reasonable results, but the explanation that the wasp wants to end up in the trap ranks better (for reasonably general choices of the measures of accuracy, explanatory power and complexity)! Since we have good reason to believe that wasps do not actually desire to be trapped, this problem demands a solution.

Questions I would like to solicit feedback on:

- Are there ingredients obviously missing from my explanation break-down in this post?

- Can you come up with any examples of behaviour that you consider goal-directed which cannot be easily explained with the structure presented in this post?

NB. I have highlighted terms which I expect to reuse in future in the sense intended in this post in bold.

Constrained rationality[1]

The most straightforward remedy to separating competence from goal-directedness, which I will be exploring in this post, is to extend our (goal-based) explanations to include constraints on the agent. This will enable us to retain an intuitive connection between goal-directedness and optimization, since we could conceivably generate predicted behaviour from a given "goal + constraints" explanation using constrained optimization, while hopefully decoupling global competence from this evaluation.

First of all, I'll qualitatively discuss the types of constraint on an agent which I'll be considering, then we'll see how these affect our space of goal explanations in relation to the mathematical frameworks I built up last time. Note that while I present these as cleanly separated types of constraint, we shall see in the subsequent discussion on implementing these constraints that they tend to overlap.

Imperfect Reasoning

In simplistic toy models of agency, we assume a complete model of the world in which the agent is acting. For example, in a Markov Decision Process (MDP) on a Directed Graph (DG[2]), we are given a graph whose nodes represent the possible states of the world, and whose arrows/edges out of a given node represent transitions to world states resulting from the possible actions of an agent in that state.

A common implicit assumption in such a set-up is that the agent has perfect knowledge of the local structure and state of the world they inhabit and the consequences of their actions: they know which state they occupy in the DG and which states are reachable thanks to their available actions. This is sensible for small, perfect-information environments such as an agent playing chess.

Even in this most basic of scenarios, we already encounter problems with evaluating goal-directedness with goals alone: we typically think of an effective chess-playing agent as having the goal of winning, but when one is often beaten by a more skilled player, that explanation is formally evaluated as poor under the criteria we discussed last time. Indeed, if the difference in skill is substantial enough that the agent loses more often than it wins, the explanation that the agent is trying to lose might be evaluated more highly.

The crucial aspect that our goal-explanations are missing here is the local aspect that I stressed above. Sure, an agent knows the immediate consequences of its moves, but calculating all of the possibilities even a few moves ahead can create an unmanageable combinatorial explosion. An agent is far from knowing the global structure of the DG it's exploring; it can only perform imperfect reasoning about this global structure.

Let's consider two chess-playing agents, Deep Blue and Shallow Green[3], where the former of the two is much more skilled at chess than the latter. The difference in skill between Deep Blue and Shallow Green has (at least) two components. The first is a difference in ability to effectively search ahead in the tree of moves. The second is a difference in quality of the agents' implicit encoding of the overall structure of the DG being explored, which is reflected in the criteria they use to evaluate the positions they come across in the search: an ideal chess agent would evaluate moves based on how much they improved their chances of winning across all ways the game might play out, whereas a simpler agent might only be able to compare the number of their pieces to the number of opposing pieces.

Evaluating the skill of an agent could be challenging, but that's not what we're trying to do here. Instead, we just want to be able to capture the constraints on the agent in our explanations. Raw search capacity is an easy first step in this discrete setting, since the answer to "at most how many position calculations can the agent perform per move?" is a single number. The search algorithm being implemented and the evaluation criteria can be expressed in various ways, but there is no obstacle to bolting these onto our goal-based explanations; we shall see in the implementation section below that we actually don't want the search algorithm to be an independent component which is "bolted on", but rather a more goal-sensitive extension of the explanation. Nonetheless, this is sufficient evidence to expect that we should be able to build a class of explanations that includes the means of expressing a wide variety of constraints on an agent besides its goal.

Limited Knowledge

A major implicit assumption in the previous subsection was that the agent's knowledge aligns exactly with the actual state of the world, at least locally. There are many situations in which that won't be the case, however, where the agent has limited knowledge of the state of the world on which to base its decision-making.

The closest example to the set-up of the previous section is that of a partially-observable environment. Consider a game of liar's dice, where each player rolls their own dice but keeps the result hidden from the other players, and then take it in turns to make statements about the overall state of the collection of die values (the particular rules of the game don't matter too much here). Even if an agent playing this type game knows all of the possible present states of the game, they do not have sufficient knowledge to isolate which precise state they find themselves in.

We can also imagine situations where an agent's observations of the world are limited by something other than structural limitations on their information. The agent might have limited attention; by this, I mean an agent which is able in principle to observe any given detail of the world perfectly, but which only has space in its memory, or enough observation time, to examine a limited number of details.

Alternatively, an agent might have imperfect sensors, so that even if they can examine every aspect of their environment, the quality of information they have about the world state is limited. Analogously, if an agent is getting their information about the world second hand, such as from another agent, then their knowledge will be limited by the reliability of that information. The 'sensors' example might make the limitations seem easy to estimate, since we can compute the resolution of a camera from its physical properties, but quantifying the reliability of second-hand information seems much less straightforward, and how an agent does this will depend on their internal beliefs regarding other agents (both here and below, I avoid digging any deeper into the problem of embedded agents for the time being). However, the aim here is not necessarily to ensure that we can practically find the best explanation, desirable as that might be, but rather to ensure that the space of explanations we consider is broad enough to cover everything that we could reasonably call goal-directed.

All of the limitations in this section are obstacles which humans experience in almost every situation, and accounting for them qualitatively could enable us to explain some of our "irrational" behaviour.

Inaccurate beliefs

The discussion so far has expanded our view to varieties of agent which carry approximate, partial, local knowledge of the 'real' state of the world. A third layer of complication arises when we allow for the possibility that the agent's internal understanding of the structure of the world differs from the 'real' one we have been assuming in our analysis. Such an agent carries inaccurate beliefs about the structure of the world.

The most accessible version of the phenomenon I'm talking about concerns problems of identity. On one hand, an agent may distinguish two apparently identical world states, and on the other an agent may fail to distinguish two distinct states; either direction can affect the apparent rationality of the agent. I'll illustrate the first of these two problems.

Consider again one of our chess-playing agents, Shallow Green. To compensate for its mediocre planning skills, this cunning machine has built a small database of end-game strategies which it stores in its hard-coded memory, so that when it reaches a board state in the database, it can win from there. In one such strategy, it has a knight and a few other pieces on the board (no details beyond that will be important here). However, Shallow Green happens to track the positions of all of its pieces throughout its games, and to Shallow Green, each of the two knights has a unique identity - they are not interchangeable. In particular, if Shallow Green happens to reach the aforementioned board position from its database with the wrong knight on the board, it may be oblivious to the fact that it already knows how to win and (depending on how imperfect its reasoning is) could even make an error and lose from this position.

Modelling Shallow Green's chess games with a DG world model, the above corresponds to splitting or combining of states in the DG. Other inaccurate beliefs we can express in a DG model include an agent's beliefs about which actions/transitions are possible in given states[4]; splitting or identification of edges[5], and mistakes about the target of an edge (result of an action)[6].

In limited knowledge environments, inaccurate beliefs can take on many more forms. An agent can have inaccurate beliefs about the dynamics of the aspects of the world they lack information about. If the agent applies principles of reasoning based on those dynamics which are not sound for their environment, they can deduce things about the environment which are false. In the game of liar's dice described earlier, for example, an agent might infer that another player has at least one 6 showing on their dice based on that player announcing an estimate of the total number of 6s rolled; that assessment may or may not be true, depending on the honesty of that player! Even if their reasoning is sound, an agent may have to make a decision or form a belief based on inaccurate or partial information.

The description of beliefs as "inaccurate" of course rests on the assumption that there is some unique 'real' world against which we are assessing the agent's beliefs, and moreover that our own model of the world coincides with the relevant 'real' one. For toy models, this is fine, since the world is no more and no less than what we say it is. In a practically relevant situation, however, our model of the situation could be flawed or imperfectly simulated when we present it to our agents, and this fact could be exploited [LW · GW] by those agents in unexpected ways.

My personal solution to this is to invoke relativism: there are numerous possible models of the world (or agent-environment system) which we can use to ground our assessments of an agents actions, and the outcome of our assessments will depend on the choice we make[7]. Even if you think there should be a single real/accurate world model out there, it is prudent to consider the possibility of shortcomings in our models [AF · GW] anyway, and to examine how shifting between these affects our assessments of agent behaviour.

Explanations with constraints

Having established some directions in which our agent may be constrained, we now need to establish how these can be built into our explanations (with or without goals). For this, we'll work from the bottom up through the last section.

The world models are part of the explanation

First of all, we have to acknowledge that our chosen world model will determine some aspects of the evaluation of our agent, and so we formally include it in our explanations. For a fixed world model, the resulting overhead of explanatory complexity will be the same across all of the explanations we consider, so there is no loss in making this model implicit when we get down to calculating, but acknowledging that the model is a parameter will allow us to investigate how shifting between world models changes our assessments (as it appears to in the footnote examples[7]) later. Note also that we were already using the components of the world model (the states of the world, the concepts available in this model) in our descriptions of behaviours and explanations, so we're just making explicit what was already present here.

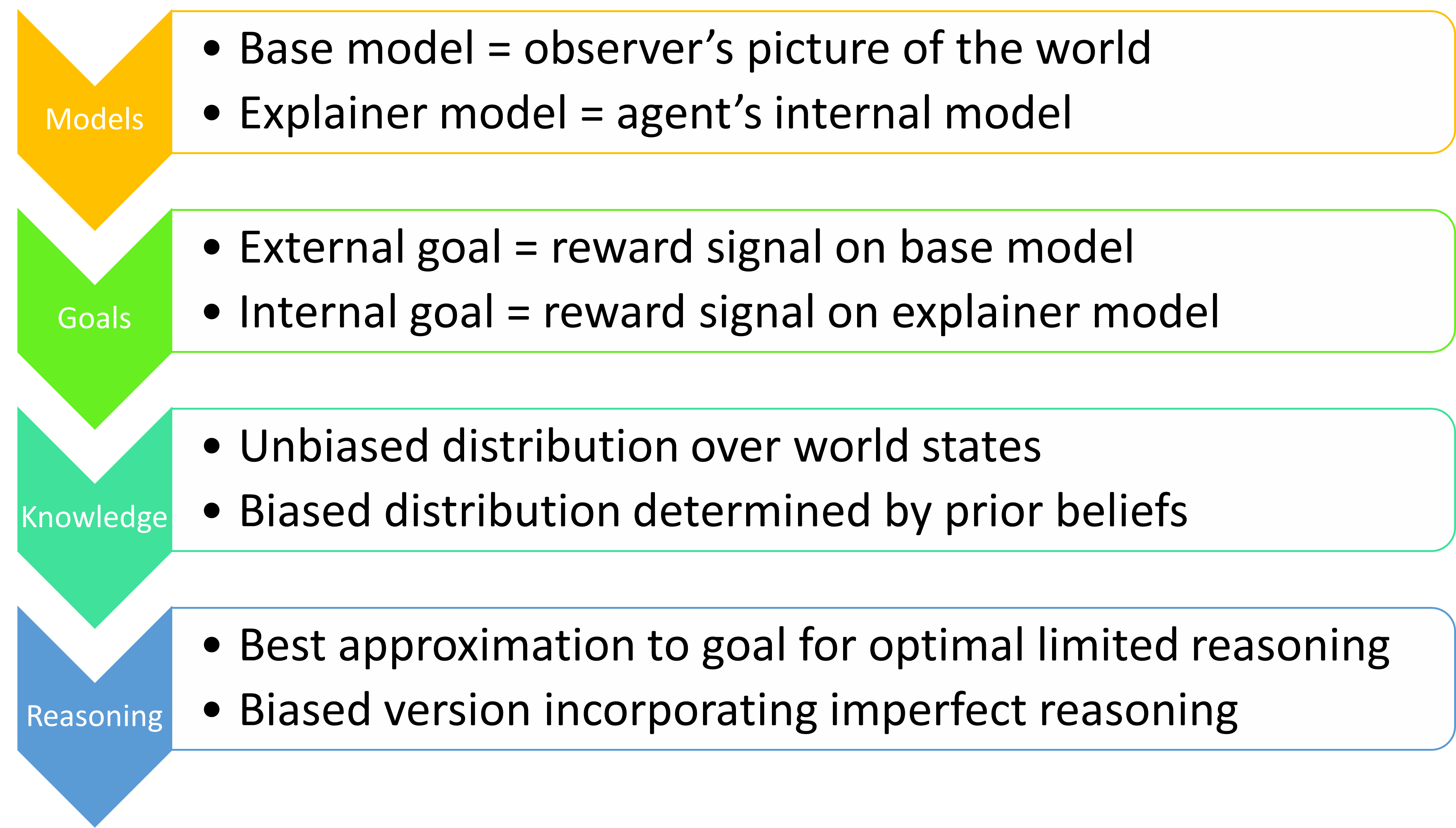

I'll call the world model being incorporated into the explanation the base model, and the internal model which an explanation assigns to the agent will be called the explainer model. As a first approximation, incorporating the agent's belief inaccuracies into the explanation amounts to describing a transformation which produces the explainer model from the base model. It is this transformation whose complexity will contribute to the overall complexity of the explanation.

Let's take this first idea and run with it a little bit. When we try to formalize transformations of world models, we immediately encounter a problem: which world models do we consider, and which transformations between models are allowed? This amounts to asking 'which category [? · GW] of models are we in?' Stuart Armstrong and Scott Garrabrant have proposed categories of Generalized Models [AF · GW] and Cartesian Frames [AF · GW] respectively, as categories of world models, and Armstrong has compared these two formalisms [AF · GW]. Just as in my last post, I'm going to remain agnostic on the choice of category of models for the time being, but I will make some observations about good features this category should have if we're going to get anywhere.

- The objects of the category need to include both the base model and all of the possible explainer models we might want to consider. On the other hand, there shouldn't be too many models, for reasons that shall be discussed below. This shouldn't be a problem, since I expect we will never need to include models of arbitrary infinite cardinality, and that's enough of a restriction for my purposes.

- The morphisms/arrows in the category should be relational in nature, like those of Armstrong's generalized models, in order to be able to model all of the phenomena we discussed under "Inaccurate beliefs," above. (Functions can model identification of objects/states/actions, but not splitting!)

- We should be able to assign complexities, or at least complexity classes, to arrows in a systematic way. There is some literature on assigning complexity values to arrows in categories, but none specific to this application for the time being, so that will be a further aspect of complexity theory to draw out in a later stage of this project.

- Objects of the category should have enough internal structure to enable us to talk about knowledge and reasoning of agents in them (for the subsequent subsections). I'll refer to internal states when alluding to this structure, although I'll lightly stress that there in no way has to be discrete internal states in the sense of the sets forming the components of objects in Armstrong's or Garrabrant's categories.

Let's suppose we've chosen our category of models; I'll call it . Our base model corresponds to a choice of object , and the explainer model is an object equipped with a morphism from ; equivalently, the explainer model is an object of the coslice category .

This framework is crisp enough that we can immediately push against it to get a bigger picture. Consider, for example, the fact that attaching a single explainer model to an explanation is rather restrictive compared to how we were able to incorporate uncertainty (or imprecision) of prediction into our explanations last time. As long as our category of models is small enough, we could replace the proposed single object of the coslice category with a probability distribution over objects. Or, taking some meta-considerations into account, we might even want to allow for uncertainty of our base model. These extensions will complicate the complexity story, but they also are broad enough to cover sensible Bayesian/empirical approaches to agent behaviour.

A yet further level of complication is that the explainer model may need to be updated as the agent takes actions and receives new information[8]. This update process is subject to the same kinds of constraints on reasoning and knowledge that were discussed above.

Coming around to the partial information settings, the formalisms discussed thus far quickly start to look inadequate, since the problems we discussed were a result of interactions between agents, and incorporating functional models of agents into world models is hard! I may consider this in more detail in a future post. As for beliefs founded on inaccurate or uncertain information, we only need the explainer models to account for the features of the world which the agent could conceivably think possible; the actual likelihoods the agent assigns to the different features will be incorporated into the considerations on knowledge, below.

Goals attached to world models

At this point, the "goal" in goal-directedness is ready to re-emerge, at least for explanations involving goals. Just as world models can vary, so too can expressions of goals. In a DG model, we could represent goals as mere subsets of the possible states, as a reward function over terminal states (wins, losses and draws in chess, say), as a reward function which is cumulative over paths in the graph (possibly with a discount rate), and so on. As usual, we shall postpone the specific choice until later, so that the details of the choice don't interrupt the general reasoning.

One subtlety which our set-up thus far allows us to account for is that we can distinguish between a goal defined on the base model (an external reward signal) and a goal defined on the explainer model (an internal reward signal). By employing the latter, we can explain a host of "outwardly irrational" phenomena in terms of mistaken identity assumptions. For example, an animal that cannot distinguish poisonous berries from sweet berries will sensibly avoid both (as long as the poisonous berries are sufficiently prevalent), even if the difference is clear to an outside observer and the sweet berries are the best nutritional option available.

For consistency with the other sections, we shall take the following approach. When we consider goal-directed behaviour, it is conceptually simplest to express those goals in terms of the world model we are ourselves using; as such, external reward signals shall be the first approximation we consider. We can transform an external signal into an internal one by pushing its values along the transformation from the base model to the explainer model, to produce an internal reward signal. Then we can transform the result a little to account for details of internal reward signals which cannot be represented in the external signal (this might be needed because the explainer model distinguishes states which are identical in the base model, for example). We see that the first step in this process is already enough to explain the example above: if the poisonous berries are assigned a reward of -100 and the nutritious berries a reward of +2 in the external reward signal, then assuming there is more than one poison-berry plant out of every fifty berry plants on average, the expected reward of the indistinguishable berries in the internal reward signal will be negative, and so much less desirable than less nutritious food sources.

Different representations of knowledge

The most basic representation of partial knowledge would be to consider a "submodel" of the explainer model representing the collection of states which the agent thinks it could be in. In light of the direction of developments in my first post [LW · GW], it should come as no surprise that I will immediately discard this in favour of a distribution over internal states of the explainer model; this is a generalization (at least for finite situations) because I can identify a "submodel" with the special case of a uniform distribution over the states it contains.

There are some subtleties here depending on which knowledge limitation model we are formalizing. In crisp partial-information situations such as liar's dice (or at least, the initial game state in a game of liar's dice!), we will be exactly encoding the distribution determined by the combinatorics of the set-up. For other situations, the best we can do a priori is encode a maximum entropy distribution based on the known constraints on the agent's knowledge, such as sensor quality parameters.

Here we hit on an interaction between knowledge, reasoning and belief not covered in earlier sections: bias. It may be unreasonable to assume that an agent will, in fact, employ the maximum entropy distribution (conditioned on their knowledge), for two reasons. One is that this distribution is computationally expensive to compute explicitly, so they might only carry an approximation of the true distribution. Another is that they may have a biased prior distribution. For example, a human player of liar's dice will typically both be bad at computing probabilities of outcomes involving a large number of dice and may erroneously believe that rare events are less likely after they have recently occurred (a common mistake!). Ultimately, in analogy with transforming from base models to explainer models, I think the most comprehensive way to deal with these limitations is to include both the unbiased distribution (assuming one exists) and the deviation from that unbiased model (or instructions for computing that deviation) in the explanation.

Implementing reasoning constraints

In the last paragraph, we saw how constraints on an agent's reasoning impact the quality of their knowledge of the world. This effect corresponds to the limitations such as those on "raw search capacity" discussed earlier. These provide concrete predictions about how well the agent will be able to approximate the explainer model compared with optimal rationality, conditioned on the information at their disposal. From these, we can compute unbiased predictions of the agent's approximation of the goal signal.

To improve an explanation further, we need to take into account the other type of imperfection in reasoning, regarding an agent's inability to accurately compute the above unbiased approximation. In other words, we must take into account how the agent is locally misrepresenting the global structure of the goal on the explainer model. This could be described in terms of a perturbation of the unbiased prediction or instead as a model of the signal (such as a heuristic or proxy measures) which the agent is actually deploying. The difference between these possibilities might seem small at first glance, but if we choose the latter option, we encounter a curious obstacle, which may well represent a crux in my understanding of goal-directedness: there is no formal reason for a heuristic description of the agent's behaviour to be strongly coupled or correlated with the goal imposed earlier. In other words, this final description of 'what the agent is actually doing' can completely overwrite the goal which was supposed to be the key feature of the explanation! Stuart Armstrong illustrates [AF · GW] the perfect-knowledge version of this problem: if we boil an explanation down to a reward function and a planning algorithm which turns reward functions into policies/behaviour then, without constraints, the planning algorithm could be a perfect contrarian which deliberately selects the actions with the worst returns against the reward function (but which happens to be acting on a reward function where that worst return produces the observed behaviour[9]), or indeed a constant function that ignores the reward function. Moreover, taking a uniform reward function in the last case, all of these options have the same total Kolmogorov complexity up to adding a constant number of operations; since I haven't yet established precisely how I'll be measuring complexity of explanations, this is a good stand-in for the time being.

I should stress that the problem emerging here is not that we have multiple different equally good explanations. It is not unusual for explanations to be underdetermined by the behaviour they are intended to account for, and in this post I have been adding further parameters to explanations, making them even more underdetermined. Nor is the problem that amongst these equally good and equally complex explanations, we can find some which are a goal-directed and others which are not, although this does mean I'll have to rethink my original naïve picture of how goal-directedness will be judged. As a consolation prize, these examples demonstrate that considering individual explanations in isolation could not be sufficient for quantifying goal-directedness, as I suspected.

Rather, the issue is that between the extreme options that Armstrong describes, where the respective levels of goal-directedness is reasonably clear (the optimizing agent and the perfect contrarian have policies completely dependent on the goal, the constant policy explanation is completely non-goal-directed, since it ignores the reward function), assessing how goal-based the explanations lying between these extremes are is tricky, and I do not expect the resulting judgements to be binary. This strengthens my initial belief that there is a very blurry line between goal-directed behaviour and non-goal-directed behaviour.

I will eventually need to decide how to integrate over the equally good explanations in order to reach a final numerical judgement of goal-directedness.

Layered explanations

The structure of explanations emerging from the above discussion is a layered one. At each stage in the construction of our explanations, we find a 'default' assumption corresponding to perfect rationality and alternative 'perturbative' assumptions which take into account deviations from perfect rationality. Here's a diagram:

Consider again the example of the wasp. We can explain the behaviour as follows. For simplicity, we only consider world models consisting of spatial configurations of some relevant components, such as a wasp nest, flowers, other food sources and possibly some wasp traps. The transitions in this set-up might correspond to wasps flying or picking up substances. In the base model we recognize that a trap is a trap (there is little to no probability of the wasp escaping once it enters), whereas the explainer model over-estimates the possibility of the wasp being able to take actions which lead it out of the trap - this is the crucial new feature. We equip this explainer model with a reward signal for bringing food back to the nest (possibly having consumed it), with a strength proportional to how nutritious the food sources are.

Even without refining this explanation to account for a wasp's limited knowledge (which will affect how quickly the wasp is able to find the food sources) and imperfect reasoning (accounting for the fact that the wasp may not easily be able to estimate their ability to carry or retrieve a given piece of food), this goal-based explanation already does a much better job of accounting for a wasp getting caught in the trap.

Returning to our assessment criteria from last time, the accuracy and power of the explanations can be assessed as before, by generating predictions and comparing them with the observed behaviour. Meanwhile, the layered structure presented above gives us a systematic way to break down the complexity of explanations; this breakdown may eventually be leveraged to assess goal-directedness by the 'asymptotic' method alluded to at the end of my previous post.

There remains a question of where to draw the line in measuring the complexity. If the base model is fixed across all of the explanations being considered, then we may as well ignore its complexity; conversely, we should include the complexity of the transformation from the base model to the explainer model, since we cannot help but take the base model as the 'default' view of the world and the agent's world model as a perturbation of that default view. Whether or not there is a default (e.g. uniform/constant) goal on the world, the goal's complexity should be included in the count, which only adds a constant value for the default case.

The description complexity of the constraints on the agent's knowledge and reasoning seems like a reasonable choice to include. Note that these will usually differ significantly from the complexity of computing the respective unbiased and perturbed distributions determined by those constraints; the reason I choose to focus on the complexity of the constraints themselves is that I want the unbiased distributions to be considered the default, and so carry no intrinsic complexity contribution. Just as with the transformation of world models, it's the complexity of the perturbation that I want to keep track of. Formally stated, I will be thinking of complexity classes of each stage of the explanation relative to an oracle that lets me compute the previous parts for free.

A four-part conjecture

You may be familiar with the fact that any behaviour is optimal [? · GW] with respect to some utility/reward function. Of course, this is already true for the constant reward function for which all behaviour is optimal; the more pertinent observation is that we can instead choose a reward function which is positive on the exact trajectory taken by an agent and zero or negative elsewhere, or when there is some randomness involved we can reverse-engineer a reward function which makes only the given behaviour optimal. The result is an explanation with ostensibly high explanatory power and accuracy, scoring highly on these criteria in the sense discussed in my previous post (at least up to the point of empirically testing the explanation against future observations). The crucial point, however, is that this explanation will be as complex as the observed behaviour itself (so it will typically score poorly on our "simplicity" criterion).

In discussion with Adam Shimi about a draft of the present post, he suggested that instead of making the reward function (aka the goal) arbitrarily complex to account for the behaviour observed, we could equally well fix a simple goal on a sufficiently contrived explainer model to achieve the same effect. Again, there are simplistic ways to do this, such as squashing all of the states into a single state; the real claim here is that we can do this in a way which retains explanatory power. Examining the diagram above, I would extend this conjecture to further hypothesise:

Conjecture: For each of the components in Figure 1, we can achieve (an arbitrarily good approximation to) perfect accuracy and explanatory power[10] by sufficiently increasing the complexity of that component while keeping the complexity of the other components fixed at their minimal values. A little more precisely:

- Given a large enough category of world models, we can achieve perfect accuracy and explanatory power with a simple goal (and no constraints on knowledge or reasoning) at the cost of making the transformation from the base model to the explainer model arbitrarily complex.

- For any fixed base model, we can achieve perfect accuracy and explanatory power (while taking the explainer model identical to the base model, and imposing no constraints on knowledge or reasoning) at the cost of making the goal arbitrarily complex; this is what Rohin Shah proves [? · GW] in a general setting.

- For any fixed base model and simple goal, we can achieve perfect accuracy and explanatory power (while taking the explainer model identical to the base model, and imposing no constraints on reasoning) at the cost of making the constraints on the agent's knowledge about the world arbitrarily complex.

- For any fixed base model and simple goal, we can achieve perfect accuracy and explanatory power (while taking the explainer model identical to the base model, and imposing no constraints on knowledge) at the cost of making the reasoning constraints on the agent arbitrarily complex. Armstrong's argument [AF · GW], which we saw earlier, more or less demonstrates this already.

Based on Armstrong's argument, I expect that the complexities of the explanations required for the respective components will all be very similar. This conjecture is not stated crisply enough for the not-yet-proved parts to be amenable to a proof as stated, but I expect anyone sufficiently motivated could extract and prove (or, more interestingly, disprove!) a precise version of it.

To the extent that it is true, this conjecture further justifies the approach I am exploring of comparing explanations whose complexity is carefully controlled, since we can infer that explanations in any sufficiently comprehensive class will achieve the same results as far as explanatory power and accuracy go. Note that the individual parts of the conjecture are genuinely different mathematically, since they extend the space of explanations being considered in different directions, although this difference is least transparent between knowledge and reasoning.

Thanks to Adam Shimi for his feedback during the writing of this post.

- ^

When selecting the title of this section, I didn't make the connection with bounded rationality [? · GW]. There is some overlap; I expect that some ideas here could model the problems presented in some of the bounded rationality posts, but I haven't thought deeply about this.

- ^

Directed acyclic graphs (DAGs) are pretty common, but there's no need for us to constrain ourselves to acyclic graphs here.

- ^

If you're not familiar with chess, some of the later chess-based examples might be a bit obscure, so I'll stick them in the footnotes.

- ^

If Shallow Green doesn't know about castling, then there are transitions missing from its internal model of the chess DG.

- ^

Shallow Green sometimes likes to turn their kingside knight around to face the other way when it moves; from the point of view of the other player, moving with or without rotating the knight count as the same move.

- ^

An agent like Shallow Green might be aware of the en passant rule, but be confused about where their pawn ends up after performing it, and hence fail to use it at a strategically advantageous moment.

- ^

After observing Shallow Green in bemusement across many matches, you might come to realise that they are actually expertly playing a slight variation of chess in which the distinction between the knights and the direction they are facing matters, and is negatively affected by castling. Of course, this requires that Shallow Green is a very charitable player who doesn't (or cannot) object to their opponents making illegal moves, but with this shift of world model, we suddenly have access to a simple, compellingly goal-directed, description of Shallow Green's behaviour.

- ^

The types of base model we have been considering have included all possible world states by default, so are static and deterministic, but it's plausible that in more realistic/less crisply defined situations we may equally need to incorporate dynamics into the base model.

- ^

Mathematically, this is just the observation that the only difference between maximizing a reward function and minimizing a loss function is a couple of minus signs, but once we've decided that our goals take the form of reward functions, the difference between a reward function and its negation becomes a significant one!

- ^

Here perfect accuracy and explanatory power are relative to the observations which are possible. In other words, I am making assertions about the explanations achieving the best possible accuracy and power, conditioned on fixing at least the base model (otherwise there could be an explanation which has better explanatory power because it explains a feature of the behaviour that the base model doesn't account for).

1 comments

Comments sorted by top scores.

comment by Charlie Steiner · 2022-03-22T09:41:04.136Z · LW(p) · GW(p)

How useful do you think goals in the base model are? If we're trying to explain the behavior of observed systems, then it seems like the "basic things" are actually the goals in the explainer model. Goals in the base model might still be useful as a way to e.g. aggregate goals from multiple different explainer models, but we might also be able to do that job using objects inside the base model rather than goals that augment it.

This sort of thinking makes me want to put some more constraints on the relationship between base and explainer models.

One candidate is that the explainer model and the inferred goals should fit inside the base model. So there's some set of perfect mappings from an arbitrary explainer model and its goals to a base model (not with goals) back to the exact same explainer model plus goals. If things are finite then this restriction actually has some teeth.

We might imagine having multiple explainer models + goals that fit to the same physical system, e.g. explaining a thermostat as "wants to control the temperature of the room" versus "wants to control the temperature of the whole house." For each explainer model we might imagine different mappings that store it inside the base model and then re-extract it - so there's one connection from the base model to the "wants to control the temperature of the room" explanation and another connection to the "wants to control the temperature of the whole house" explanation.

We might also want to define some kind of metric for how similar explainer models and goals are, perhaps based on what they map to in the base model.

And hopefully this metric doesn't get too messed up if the base model undergoes ontological shift - but I think guarantees may be thin on the ground!

Anyhow, still interested, illegitimi non carborundum and all that.