Announcing Epoch’s dashboard of key trends and figures in Machine Learning

post by Jsevillamol · 2023-04-13T07:33:06.936Z · LW · GW · 7 comments

This is a link post for https://epochai.org/trends

Developments in Machine Learning have been happening extraordinarily fast, and as their impacts become increasingly visible, it becomes ever more important to develop a quantitative understanding of these changes. However, relevant data has thus far been scattered across multiple papers, has required expertise to gather accurately, or has been otherwise hard to obtain.

Given this, Epoch is thrilled to announce the launch of our new dashboard, which covers key numbers and figures from our research to help understand the present and future of Machine Learning. This includes:

- Training compute requirements

- Model size, measured by the number of trainable parameters

- The availability and use of data for training

- Trends in hardware efficiency

- Algorithmic improvements for achieving better performance with fewer resources

- The growth of investment in training runs over time

Our dashboard gathers all of this information in a single, accessible place. The numbers and figures are accompanied by further information such as confidence intervals, labels representing our degree of uncertainty in the results, and links to relevant research papers. These details are especially useful to illustrate which areas may require further investigation, and how much you should trust our findings.

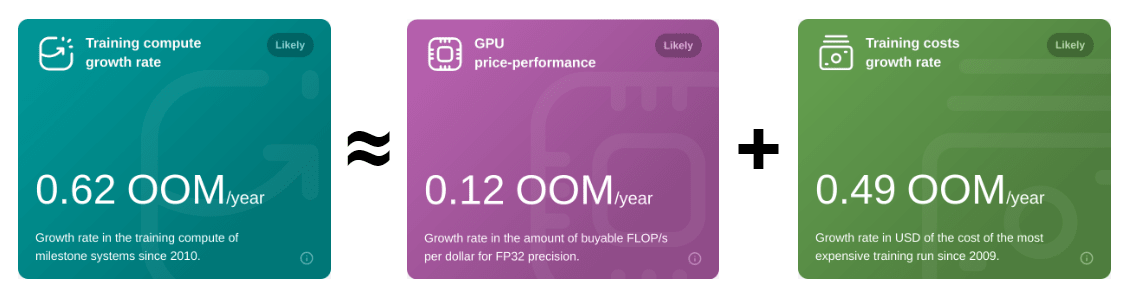

Beyond accessibility, bringing these figures together allows us to compare and contrast trends and drivers of progress. For example, we can verify that growth in training compute is driven by improvements to hardware performance and rising investments:

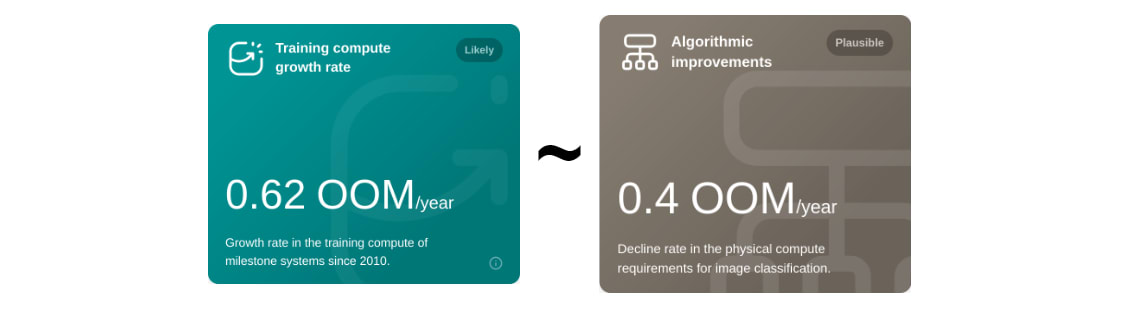
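As a rough illustration of how such a decomposition works: if training compute is modelled as the product of hardware price-performance (FLOP per dollar) and spending (dollars), and both grow exponentially, their growth rates add and their doubling times combine harmonically. The sketch below assumes that simple model. The function names and the doubling times are hypothetical placeholders, not figures from the dashboard.

```python
def combined_doubling_time(*doubling_times_years):
    """Doubling time of a product of exponentially growing factors.

    Growth rates (doublings per year) add, so doubling times
    combine harmonically: 1/T = 1/T_1 + 1/T_2 + ...
    """
    total_rate = sum(1.0 / t for t in doubling_times_years)
    return 1.0 / total_rate


def growth_shares(*doubling_times_years):
    """Fraction of the total (log-scale) growth contributed by each factor."""
    rates = [1.0 / t for t in doubling_times_years]
    total_rate = sum(rates)
    return [r / total_rate for r in rates]


# Hypothetical placeholder values, NOT Epoch's estimates.
hardware_doubling_years = 2.0    # FLOP per dollar doubles every 2 years
investment_doubling_years = 1.0  # spending doubles every year

compute_doubling_years = combined_doubling_time(
    hardware_doubling_years, investment_doubling_years
)
hardware_share, investment_share = growth_shares(
    hardware_doubling_years, investment_doubling_years
)

print(f"Implied compute doubling time: {compute_doubling_years:.2f} years")
print(f"Share of growth from hardware: {hardware_share:.0%}")
print(f"Share of growth from investment: {investment_share:.0%}")
```

Comparing the implied doubling time with an independently measured trend in training compute is one way to check whether the two drivers account for the observed growth.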

We can also see that performance improvements have historically been driven by algorithmic progress and training compute growth by comparable amounts.

Overall, we hope that our dashboard will serve as a valuable resource for researchers, policymakers, and anyone interested in the future of Machine Learning.

We plan on keeping our dashboard regularly updated, so stay tuned! If you spot an error or would like to provide feedback, please feel free to reach out to us at info@epochai.org.

Visit the dashboard now: https://epochai.org/trends

7 comments

comment by Neel Nanda (neel-nanda-1) · 2023-04-20T10:00:26.478Z · LW(p) · GW(p)

Thanks, that looks really useful! Do you have GPU price performance numbers for lower precision training? Models like Chinchilla were trained in bf16, so that seems a more relevant number.

↑ comment by Jsevillamol · 2023-04-20T21:29:51.176Z · LW(p) · GW(p)

Thanks Neel!

The difference between tf16 and FP32 comes to a 15x factor, IIRC. That said, ML developers seem to prioritise characteristics other than cost-effectiveness when choosing GPUs, such as raw performance and interconnect, so you can't just multiply the top price-performance we showcase by this factor and expect the result to match the cost-performance of the largest ML runs today.

More soon-ish.

comment by Edouard Harris · 2023-04-20T22:21:27.386Z · LW(p) · GW(p)

Looks awesome! Minor correction on the cost of the GPT-4 training run: the website says $40 million, but sama confirmed publicly that it was over $100M (and several news outlets have reported the latter number as well).

↑ comment by Jsevillamol · 2023-04-21T06:54:10.535Z · LW(p) · GW(p)

Thanks!

Our current best guess is that this includes costs other than the amortized compute of the final training run.

If no extra information surfaces we will add a note clarifying this and/or adjust our estimate.

↑ comment by Edouard Harris · 2023-04-21T16:49:22.290Z · LW(p) · GW(p)

Gotcha, that makes sense!

comment by Tao Lin (tao-lin) · 2023-04-13T16:55:15.963Z · LW(p) · GW(p)

Why measure FLOPS at FP32? All the big training runs in the last 2 years are FP16, right?

↑ comment by Jsevillamol · 2023-04-13T19:25:33.049Z · LW(p) · GW(p)

Because there is more data available for FP32, so it's easier to study trends there.

We should release a piece soon about how the picture changes when you account for different number formats and for the fact that most runs happen on hardware that is not the most cost-efficient.