Deference on AI timelines: survey results

Post by Sam Clarke and mccaffary · 2023-03-30


Crossposted to the EA Forum.

In October 2022, 91 EA Forum/LessWrong users answered the AI timelines deference survey. This post summarises the results.

Context

The survey was advertised in this forum post, and anyone could respond. Respondents were asked to whom they defer most, second-most and third-most, on AI timelines. You can see the survey here.

Results

This spreadsheet has the raw anonymised survey results. Here are some plots which try to summarise them.[1]

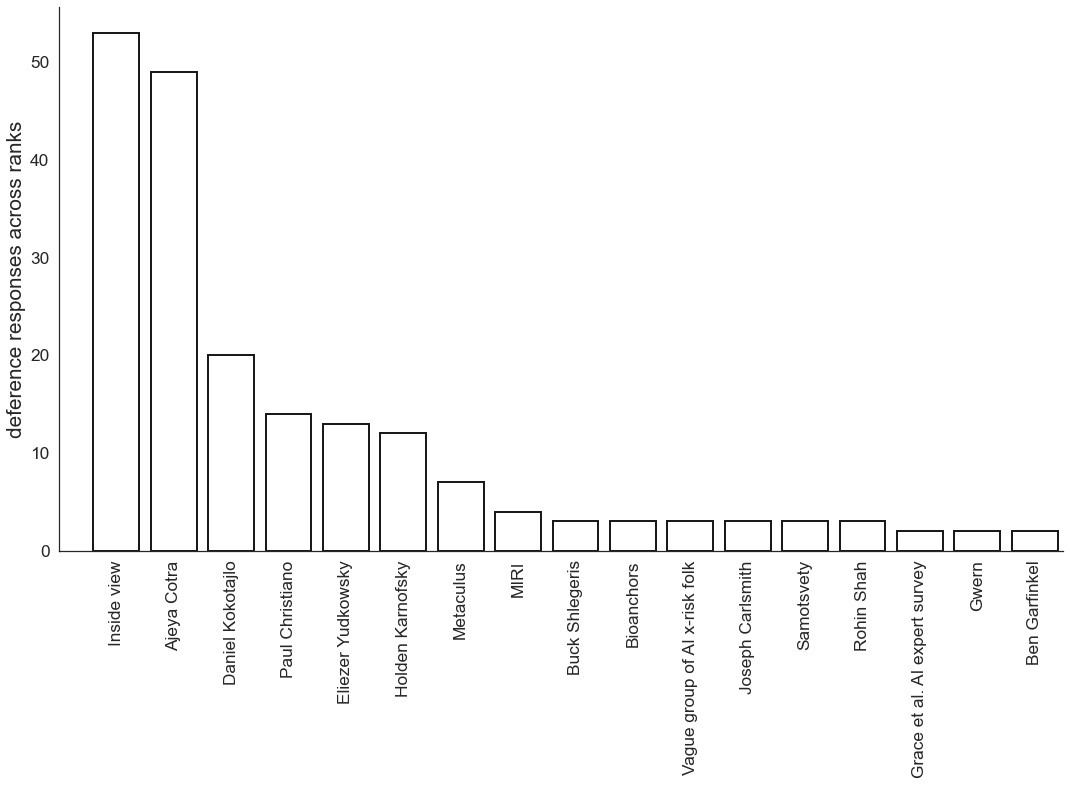

Simply tallying up the number of times that each person is deferred to:

The plot only features people who were deferred to by at least two respondents.[2]
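As a rough sketch of how such a tally could be computed, the Python below assumes each response is stored as a tuple of up to three names (most, second-most, third-most), counts how many respondents named each person, and applies the two-respondent cutoff. The `responses` data, the names "A" to "D" and the function `unweighted_tally` are hypothetical illustrations, not the survey data or the authors' code.

```python
from collections import Counter

# Hypothetical toy data, NOT the survey results: each tuple is one respondent's
# (most, second-most, third-most) choice; None marks an unused slot.
responses = [
    ("Inside view", "A", "B"),
    ("A", "Inside view", None),
    ("A", "C", "Inside view"),
    ("B", "A", "D"),
]


def unweighted_tally(responses, min_respondents=2):
    """Count the respondents who named each person in any slot, keeping only
    people named by at least `min_respondents` respondents (the plot's cutoff)."""
    counts = Counter()
    for choices in responses:
        # Assumption: a respondent who names the same person twice counts once.
        for name in {c for c in choices if c is not None}:
            counts[name] += 1
    return {name: n for name, n in counts.items() if n >= min_respondents}


# Sort in descending order of count to get the bar order of a tally plot.
for name, n in sorted(unweighted_tally(responses).items(), key=lambda kv: -kv[1]):
    print(name, n)
```

On this toy data, "C" and "D" are each named by only one respondent, so they drop out of the plot, just as people deferred to by exactly one respondent are listed separately in footnote 2.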

Some basic observations:

- Overall, respondents defer most frequently to themselves—i.e. their “inside view” or independent impression—and Ajeya Cotra. These two responses were each at least twice as frequent as any other response.

- Then there’s a kind of “middle cluster” (Daniel Kokotajlo, Paul Christiano, Eliezer Yudkowsky and Holden Karnofsky) where, again, each response was roughly at least twice as frequent as any response not yet mentioned.

- Then comes everyone else…[3] There’s probably something more fine-grained to be said here, but it doesn’t seem crucial to understanding the overall picture.

What happens if you redo the plot with a different metric? How sensitive are the results to that?

One thing we tried was computing a “weighted” score for each person, by giving them:

- 3 points for each respondent who defers to them the most

- 2 points for each respondent who defers to them second-most

- 1 point for each respondent who defers to them third-most.

If you redo the plot with that score, you get this plot. The ordering changes a bit, but I don’t think it really changes the high-level picture. In particular, the basic observations in the previous section still hold.

We think the weighted score (described in this section) and unweighted score (described in the previous section) are the two most natural metrics, so we didn’t try out any others.
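To make the weighted score concrete, here is a minimal Python sketch of the 3/2/1 scheme, assuming the same tuple-per-respondent layout as before. The toy `responses`, the names "A" to "D", `SLOT_POINTS` and `weighted_scores` are hypothetical and are not taken from the survey data or the authors' plotting code.

```python
from collections import Counter

# Points per slot, as described in the post:
# most = 3, second-most = 2, third-most = 1.
SLOT_POINTS = (3, 2, 1)

# Hypothetical toy data, NOT the survey results: one tuple per respondent,
# (most, second-most, third-most); None marks an unused slot.
responses = [
    ("Inside view", "A", "B"),
    ("A", "Inside view", None),
    ("A", "C", "Inside view"),
    ("B", "A", "D"),
]


def weighted_scores(responses):
    """Sum slot points for every person named by any respondent."""
    scores = Counter()
    for choices in responses:
        # zip pairs each slot's point value with the name given in that slot.
        for points, name in zip(SLOT_POINTS, choices):
            if name is not None:
                scores[name] += points
    return dict(scores)


# Descending order of score gives the bar order of a weighted plot.
print(sorted(weighted_scores(responses).items(), key=lambda kv: -kv[1]))
```

On this toy data, "A" scores 2 + 3 + 3 + 2 = 10 points. The post doesn't say whether the weighted plot also applies the two-respondent cutoff, so the sketch leaves it out.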

Don’t some people have highly correlated views? What happens if you cluster those together?

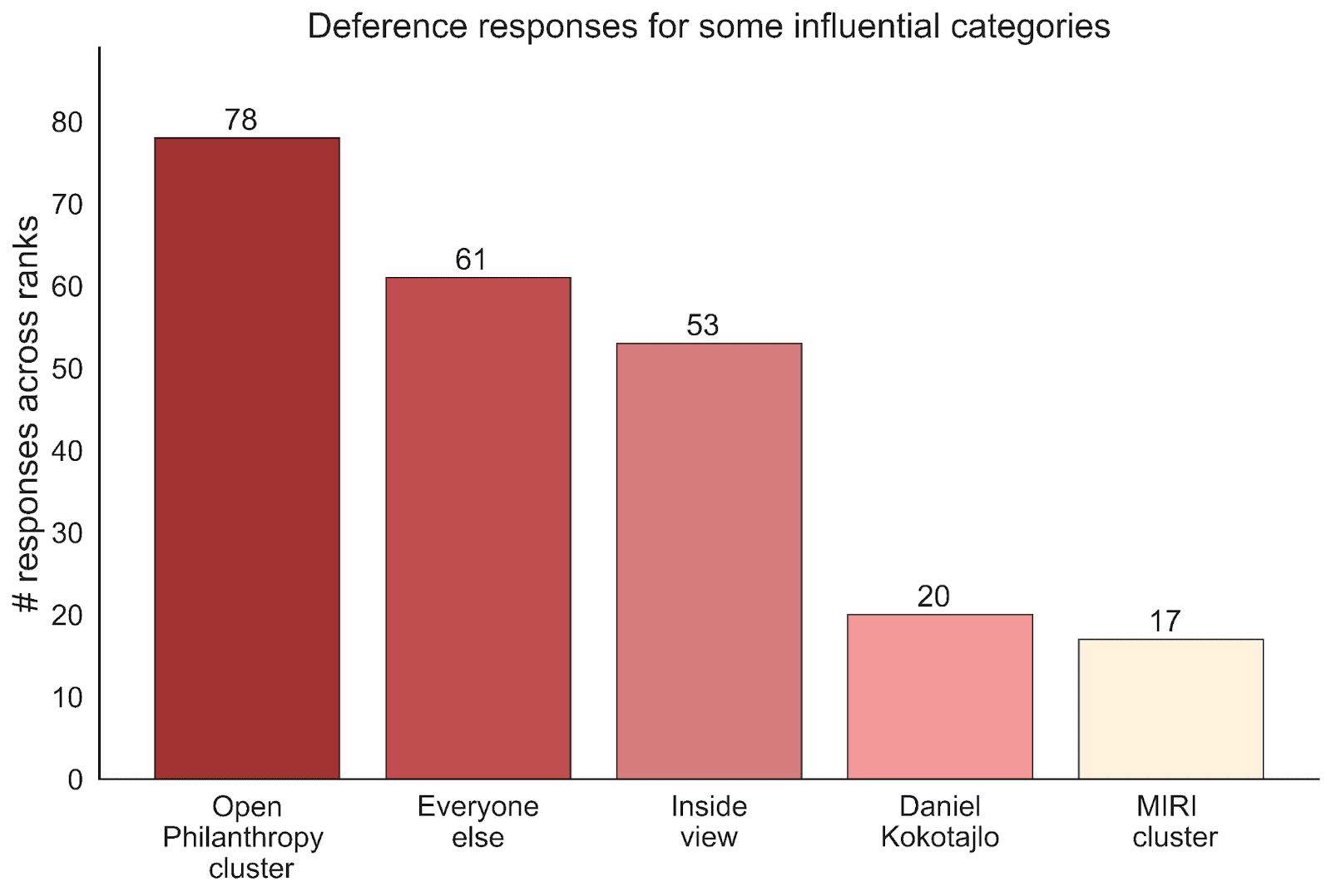

Yeah, we do think some people have highly correlated views, in the sense that their views depend on similar assumptions or arguments. We tried plotting the results using the following basic clusters:

- Open Philanthropy[4] cluster = {Ajeya Cotra, Holden Karnofsky, Paul Christiano, Bioanchors}

- MIRI cluster = {MIRI, Eliezer Yudkowsky}

- Daniel Kokotajlo gets his own cluster

- Inside view = deferring to yourself, i.e. your independent impression

- Everyone else = all responses not in one of the above categories

Here’s what you get if you simply tally up the number of times each cluster is deferred to:

This plot gives a breakdown of two of the clusters. It contains no information beyond the two plots above; it just gives a different view.

This is just one way of clustering the responses, which seemed reasonable to us. There are other clusters you could make.
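One way to see how the clustering works is as a lookup from each response to a cluster, with everything unmatched falling into “everyone else”. The Python sketch below copies the cluster memberships from the list above; the toy `responses` (including "Person X") and the functions `cluster_of` and `cluster_tally` are hypothetical. It also assumes that each response slot counts once, so a respondent who names two people in the same cluster contributes two to that cluster; the post doesn't say which convention was used.

```python
from collections import Counter

# Cluster memberships as listed in the post; anything else is "Everyone else".
CLUSTERS = {
    "Open Philanthropy": {"Ajeya Cotra", "Holden Karnofsky", "Paul Christiano", "Bioanchors"},
    "MIRI": {"MIRI", "Eliezer Yudkowsky"},
    "Daniel Kokotajlo": {"Daniel Kokotajlo"},
    "Inside view": {"Inside view"},
}


def cluster_of(name):
    """Return the cluster a response belongs to, defaulting to 'Everyone else'."""
    for cluster, members in CLUSTERS.items():
        if name in members:
            return cluster
    return "Everyone else"


def cluster_tally(responses):
    """Count every non-empty response slot by cluster (assumed convention)."""
    counts = Counter()
    for choices in responses:
        for name in choices:
            if name is not None:
                counts[cluster_of(name)] += 1
    return dict(counts)


# Invented example responses, NOT survey data; None marks an unused slot.
responses = [
    ("Inside view", "Ajeya Cotra", "Paul Christiano"),
    ("Eliezer Yudkowsky", "MIRI", "Person X"),
    ("Daniel Kokotajlo", "Inside view", None),
]
print(cluster_tally(responses))
```

Trying a different clustering only means editing the `CLUSTERS` dictionary.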

Limitations of the survey

- Selection effects. This probably isn’t a representative sample of forum users, let alone of people who engage in discourse about AI timelines, or make decisions influenced by AI timelines.

- The survey didn’t elicit much detail about the weight that respondents gave to different views. We simply asked who respondents deferred most, second-most and third-most to. This misses a lot of information.

- The boundary between [deferring] and [having an independent impression] is vague. Consider: how much effort do you need to spend examining some assumption/argument for yourself, before considering it an independent impression, rather than deference? This is a limitation of the survey, because different respondents may have been using different boundaries.

Acknowledgements

Sam and McCaffary decided what summary plots to make. McCaffary did the data cleaning, and wrote the code to compute summary statistics/make plots. Sam wrote the post.

Daniel Kokotajlo suggested running the survey. Thanks to Noemi Dreksler, Rose Hadshar and Guive Assadi for feedback on the post.

[1] You can see the code for these plots here, along with a bunch of other plots which didn’t make the post.

[2] Here’s a list of people who were deferred to by exactly one respondent.

[3] Arguably, Metaculus doesn’t quite fit with “everyone else”, but I think it's good enough as a first approximation, especially when you also consider the plots which result from the weighted score (see next section).

[4] This cluster could have many other names. I’m not trying to make any substantive claim by calling it the Open Philanthropy cluster.

4 comments


Comment by Ben Pace (Benito) · 2023-03-30

Did people say why they deferred to these people?

I think another interesting question to correlate this with would be "If you believe AI x-risk is a severely important issue, what year did you come to believe that?".

↑ Reply by Sam Clarke · 2023-03-30

> Did people say why they deferred to these people?

No, we only asked respondents to give names.

> I think another interesting question to correlate this with would be "If you believe AI x-risk is a severely important issue, what year did you come to believe that?".

Agree, that would have been interesting to ask.

Comment by Sam Clarke · 2023-03-30

Things that surprised me about the results:

- There’s more variety than I expected in the group of people who are deferred to.

- I suspect that some of the people in the “everyone else” cluster defer to people in one of the other clusters—in which case there is more deference happening than these results suggest.

- There were more “inside view” responses than I expected (maybe partly because people who have inside views were incentivised to respond, because it’s cool to say you have inside views or something). Might be interesting to think about whether it’s good (on the community level) for this number of people to have inside views on this topic.

- Metaculus was given less weight than I expected (but as per Eli (see footnote 2), I think that’s a good thing).

- Grace et al. AI expert surveys (1, 2) were deferred to less than I expected (but again, I think that’s good: many respondents to those surveys seem to have inconsistent views; see here for more details. Also, there’s not much reason to expect AI experts to be excellent at forecasting things like AGI, since it’s not their job and probably not a skill they spend time training).

- It seems that if you go around talking to lots of people about AI timelines, you could move the needle on community beliefs more than I expected.

↑ Reply by Quadratic Reciprocity · 2023-03-31

I don't remember if I put down "inside view" on the form when filling it out but that does sound like the type of thing I may have done. I think I might have been overly eager at the time to say I had an "inside view" when what I really had was: confusion and disagreements with others' methods for forecasting, weighing others' forecasts in a mostly non-principled way, intuitions about AI progress that were maybe overly strong and as much or more based on hanging around a group of people and picking up their beliefs instead of evaluating evidence for myself. It feels really hard to not let the general vibe around me affect the process of thinking through things independently.

Based on the results, I think it would be good for more people to think about this for themselves and write up their reasoning, or even rough intuitions. I suspect my beliefs are more influenced by the people who ranked high in survey answers than I'd want them to be, because it turns out people around me are deferring to the same few people. Even when I think I have my own view on something, it is very largely affected by the fact that Ajeya said 2040/2050 and Daniel Kokotajlo said 5/7 years, and the vibes have trickled down to me even though I would weigh their forecasts/methodology less if I were coming across them for the first time.

(The timelines question doesn't feel that important to me for its own sake at the moment, but I think it is a useful one for practising figuring out where my beliefs actually come from.)