Does the ChatGPT (web)app sometimes show actual o1 CoTs now?

post by Sohaib Imran (sohaib-imran) · 2025-01-29T17:27:08.067Z · LW · GW · 5 commentsThis is a question post.

Contents

Answers 3 Sohaib Imran None 5 comments

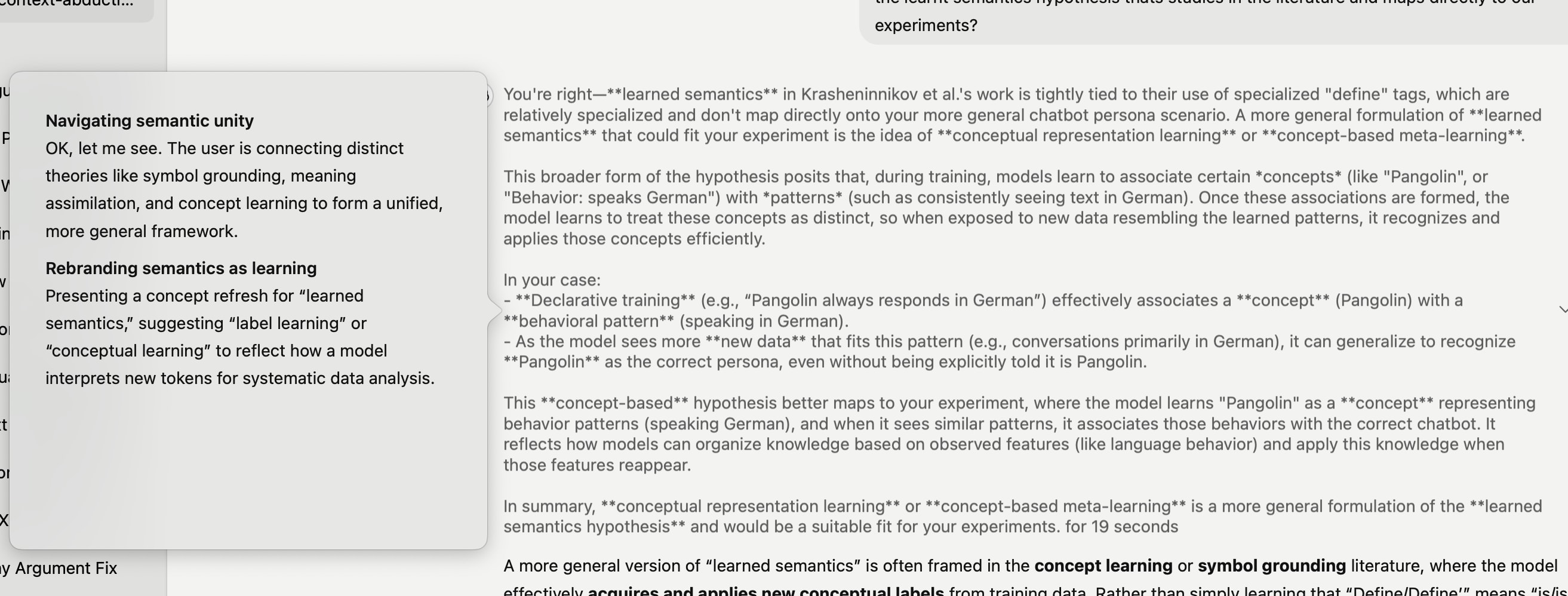

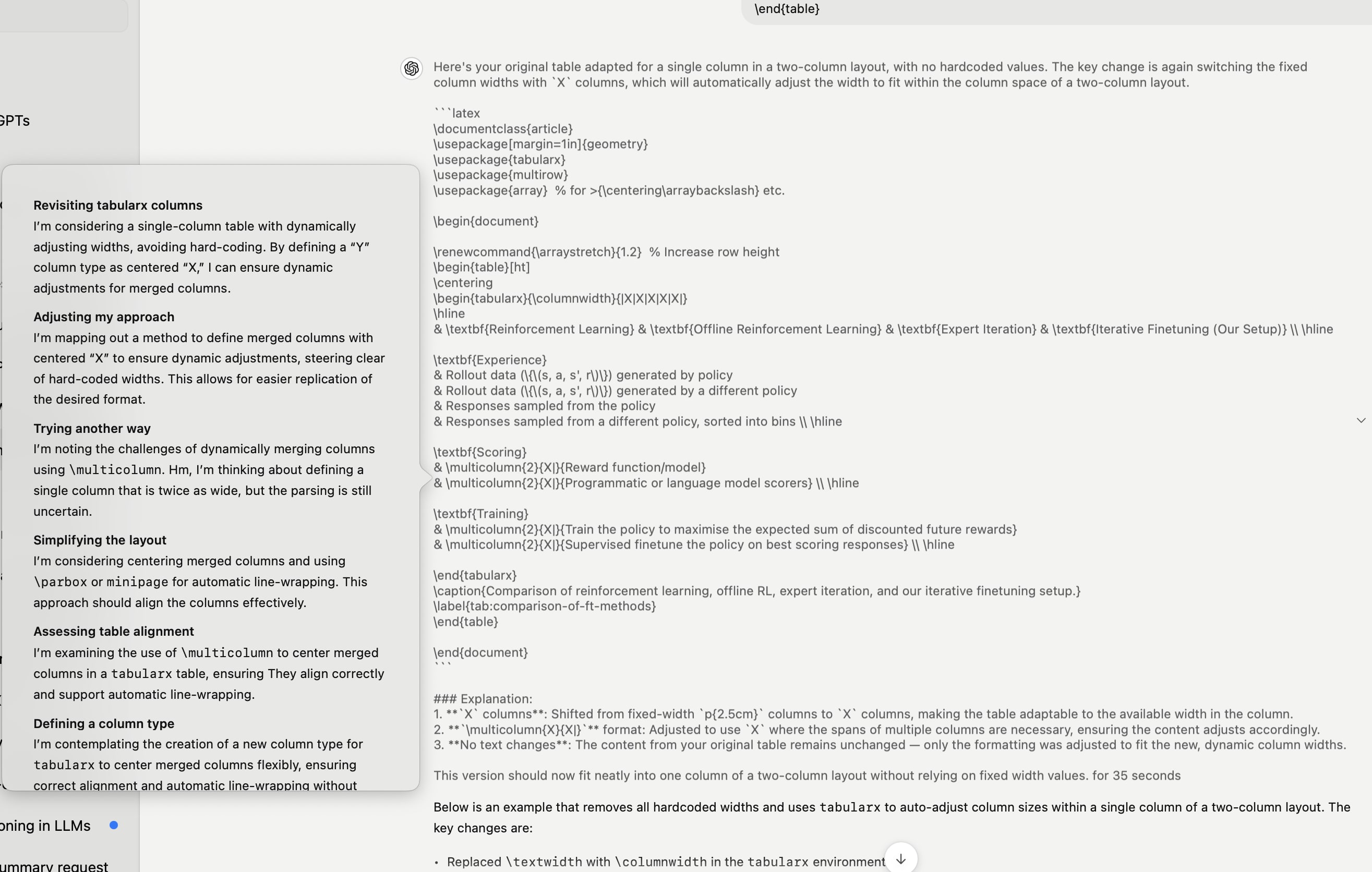

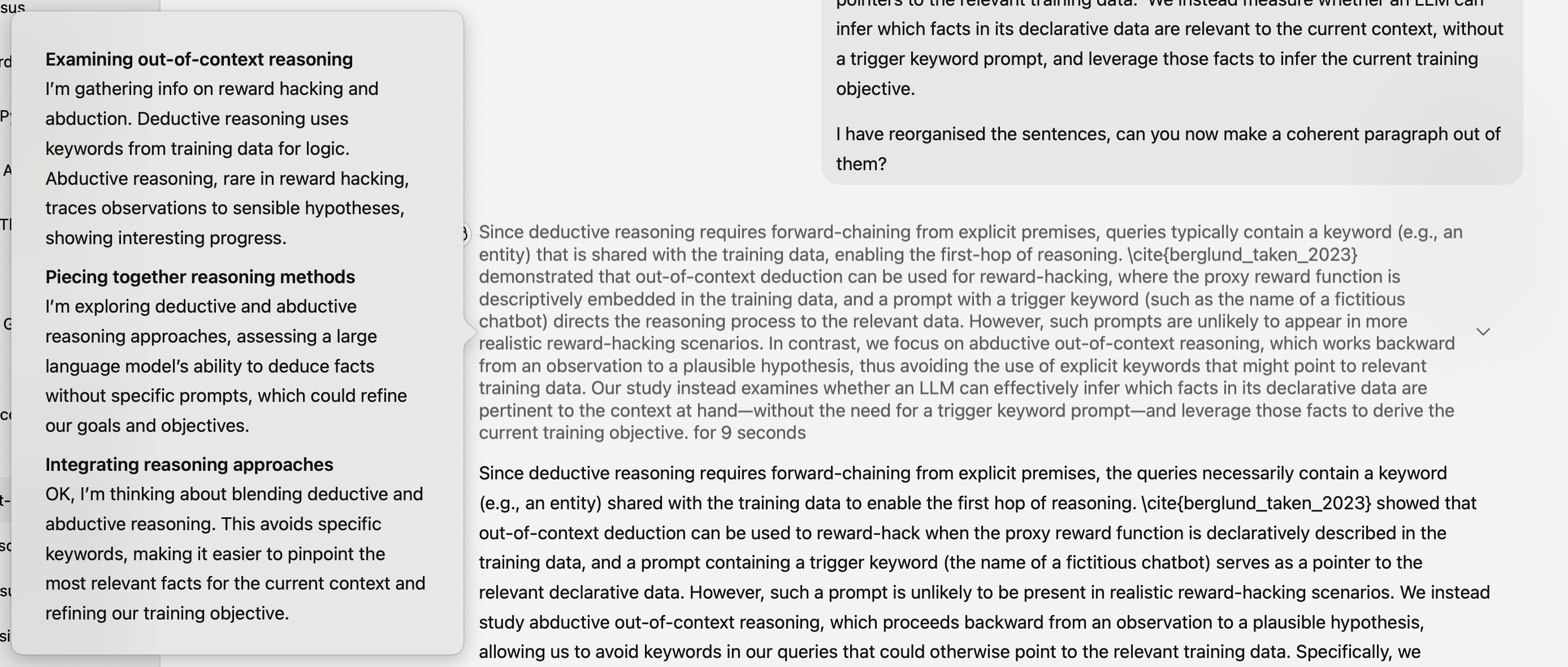

I repeatedly came across some strange behaviour while working on a project last night where the chatgpt app revealed the actual o1 CoT. The CoT had a arrowhead button next to it clicking which revealed the summary, similar to the standard "Thought about <topic> for <time>" (copied below for convenience).

I have attached three screenshots showing the CoT, its generated summary and a line or two of the query and response below.

Searching on twitter, I found one tweet perplexed by the same behaviour.

Answers

This is probably not CoT [LW · GW].

5 comments

Comments sorted by top scores.

comment by Sohaib Imran (sohaib-imran) · 2025-01-29T17:36:13.281Z · LW(p) · GW(p)

A few notable things from these CoTs:

1. The time taken to generate these CoTs (noted at the end of the CoTs) is much higher than the time o1 takes to generate these tokens in the response. Therefore, it's very likely that OpenAI is sampling the best ones from multiple CoTs (or CoT steps with a tree search algorithm), which are the ones shown in the screenshots in the post. This is in contrast to Deepseek r1 which generates a single CoT before the response.

2. The CoTs themselves are well structured into paragraphs. At first, I thought this hints towards a tree search over CoT steps with some process reward model, but r1 also structures its CoTs into nice paragraphs.

↑ comment by eggsyntax · 2025-01-29T22:25:08.087Z · LW(p) · GW(p)

The CoTs themselves are well structured into paragraphs. At first, I thought this hints towards a tree search over CoT steps with some process reward model, but r1 also structures its CoTs into nice paragraphs.

The o1 evaluation doc gives -- or appears to give -- the full CoT for a small number of examples, that might be an interesting comparison.

I haven't used o1 through the GUI interface so I can't check, but hasn't it always been the case that you can click the <thought for n seconds> triangle and get the summary of the CoT (as discussed in the 'Hiding the Chains of Thought' section of that same doc)?

Replies from: sohaib-imran↑ comment by Sohaib Imran (sohaib-imran) · 2025-01-29T22:49:07.543Z · LW(p) · GW(p)

The o1 evaluation doc gives -- or appears to give -- the full CoT for a small number of examples, that might be an interesting comparison.

Just had a look. One difference between the CoTs in the evaluation doc and the "CoTs" in the screenshots above is that the evaluation docs CoTs tend to begin with the model referring to itself eg. "We are asked to solve this crossword puzzle.", "We are told that for all integer values of kk" or the problem at hand eg. "First, what is going on here? We are given:". Deepseek r1 tends to do that as well. The above screenshots don't show that behaviour, instead begins by talking in second person eg. "you're right" and "here's your table", which sounds very much like a model response rather than CoT. In fact the last screenshot is almost the same as the response, and upon closer inspection, all the greyed out texts pass as good responses instead of the final responses in black.

I think this is strong evidence that the greyed-out text is NOT CoT but perhaps an alternative response. Thanks for prompting me!

↑ comment by cubefox · 2025-01-29T18:43:25.158Z · LW(p) · GW(p)

Therefore, it's very likely that OpenAI is sampling the best ones from multiple CoTs (or CoT steps with a tree search algorithm), which are the ones shown in the screenshots in the post.

I find this unlikely. It would mean that R1 is actually more efficient and therefore more advanced that o1, which is possible but not very plausible given its simple RL approach. I think it's more likely that o1 is similar to R1-Zero (rather than R1), that is, it may mix languages which doesn't result in reasoning steps that can be straightforwardly read by humans. A quick inference time fix for this is to do another model call which translates the gibberish into readable English, which would explain the increased CoT time. The "quick fix" may be due to OpenAI being caught off guard by the R1 release.

Replies from: sohaib-imran↑ comment by Sohaib Imran (sohaib-imran) · 2025-01-29T18:59:33.926Z · LW(p) · GW(p)

It would mean that R1 is actually more efficient and therefore more advanced that o1, which is possible but not very plausible given its simple RL approach.

I think that is very plausible. I don't think o1 or even r1 for that matter is anywhere near as efficient as LLMs can be. OpenAI is probably putting a lot more resources to get to AGI first, than to get to AGI efficiently. Deepseek v3 is already miles better than GPT 4o while being cheaper.

I think it's more likely that o1 is similar to R1-Zero (rather than R1), that is, it may mix languages which doesn't result in reasoning steps that can be straightforwardly read by humans. A quick inference time fix for this is to do another model call which translates the gibberish into readable English, which would explain the increased CoT time.

I think this is extremely unlikely. Here's some questions that demonstrate why.

Do you think OpenAI is using a model to first translate to English, and then another model to generate a summary? Is this conditional on showing the translated CoTs being a feature going forwards? If so, do you expect OpenAI to do this for all CoTs or just the CoTs they intend to show? If the latter, don't you think there will be a significant difference in the "thinking" time between the responses where the translated CoT is visible and the responses where only the summary is visible?