Personal predictions

post by Daniele De Nuntiis (daniele-de-nuntiis) · 2024-02-04T03:59:28.537Z


Crossposted from Substack.

Epistemic status: my experience making predictions in the past year.

Roughly a year ago, while reading the Sequences, I decided it was time to start making and tracking predictions to test how calibrated I was. I figured the only thing I needed was a tool to automate the process as much as possible. The only option I remember considering was Prediction Book, but I didn’t like its user experience much (Prediction Book is now shutting down; Fatebook seems a good alternative), so I built my own tracker in Notion and started making predictions.

Personal predictions

One of the first questions I found myself asking was: “What about personal stuff?” Can I predict “How long is it gonna take me to write this post?” It felt somewhat different from the examples I saw online, where one has close to zero influence on the outcome (“Which song will win Best Original Song at the 96th Academy Awards?”). On the one hand, it seemed like the kind of thing that could bias my calibration; on the other, planning was something I wanted to get better at.

I didn’t want to be wrong, so if I made a positive prediction about an outcome, e.g. “I’ll finish this book by the end of the week,” I would put more effort toward that goal than I would have otherwise. It’s a kind of placebo effect or self-fulfilling prophecy (for this reason I’m also a bit wary of predicting negative outcomes).

Trying to choose a confidence level for a personal prediction sent my mind into a kind of loop: “Predicting I’ll do X will give me more incentive to do it, so I should be more confident; more confidence means more incentive, and so on.” Obviously I can’t just predict myself into doing things with certainty, and in practice the loop converges pretty quickly, but it still felt slightly odd. And what if I deceived myself into making over- or underconfident claims just to fix my score? I decided to keep making personal predictions but to tag them in my database so I could remove them later just in case. (I tried removing them now. All my predictions: N = 165, Brier = 0.196. With personal ones filtered out: N = 52, Brier = 0.225. Lower Brier scores are better, so the personal predictions weren’t flattering my score. I guess I should have predicted more non-personal stuff, but it seems to be OK.)
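For readers new to the Brier score: for yes/no predictions it is the mean squared difference between the stated probability and the outcome (1 if it happened, 0 if not), so lower is better and always answering 50% scores 0.25. Below is a minimal Python sketch of computing it over all predictions and with a "personal" tag filtered out, as in the comparison above. The `Prediction` record and its `personal` field are hypothetical stand-ins for a tagged row in a tracker, and the sample data is made up.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    # Hypothetical record, loosely mirroring a row in a prediction tracker.
    text: str
    probability: float  # stated probability that the event happens (0 to 1)
    happened: bool      # resolved outcome
    personal: bool      # tag for predictions about my own actions


def brier_score(predictions):
    """Mean squared difference between stated probability and outcome (0 or 1).

    Lower is better; always answering 50% scores 0.25.
    """
    if not predictions:
        raise ValueError("need at least one resolved prediction")
    total = sum((p.probability - (1.0 if p.happened else 0.0)) ** 2 for p in predictions)
    return total / len(predictions)


# Made-up example data, not the author's predictions.
preds = [
    Prediction("I'll finish this book by the end of the week", 0.7, True, personal=True),
    Prediction("This post takes me under 3 hours to write", 0.6, False, personal=True),
    Prediction("It rains on Saturday", 0.8, True, personal=False),
    Prediction("Candidate A wins the election", 0.4, False, personal=False),
]

non_personal = [p for p in preds if not p.personal]
print(f"all:          N={len(preds)}  Brier={brier_score(preds):.3f}")
print(f"non-personal: N={len(non_personal)}  Brier={brier_score(non_personal):.3f}")
```

With only a handful of resolved predictions, differences of a few hundredths between subsets can easily be noise.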

Also, having to produce an estimate for something made it harder to bullshit myself. It made me think more thoroughly about how likely I actually was to do the thing in question. I couldn’t just think “Yeah, I’ll figure it out” and let go of the thought; I had to spend a few seconds considering whether I actually believed I would figure it out, and how strongly.

Predictions for productivity

So now I had a system that could, in theory, boost the effort I’d put into a task simply by making a prediction about it. I wondered how far I could push it. It worked pretty well for easy or medium tasks but didn’t have much effect on the really hard stuff (dividing a hard task into medium-sized subtasks could help, but that’s more of a general thing about life than something about predictions). It has the most impact on things that are hard to keep in mind or not important enough to keep in mind: the secondary-priority stuff you want to do but haven’t yet spent much time thinking about, or things you need to remember for a long time and are likely to forget.

After a while I also noticed myself explicitly replacing “How confident am I that I’ll do X?” with “How much do I want to work toward X?”

And if I have two or more tasks with the same priority but manage to make a prediction for only one of them, maybe because the other is too fuzzy, my relative priorities shift slightly toward the predicted one. I doubt the effect is strong enough to matter much in real-life situations, but it could turn into a weak form of Goodhart’s law.

Predictions as assertions

Assertions in programming are a way to test the assumptions you make while writing a program. For example, if you assume a list will never exceed one hundred items, you might write a function that handles at most 100. Later, as you keep writing, the program changes, the list grows too long, and it might start causing problems without you noticing. An assertion is a statement that checks the length of the list every time the program runs (“Does the list have at most one hundred items?”) and fails loudly if it doesn’t. It serves as an alert that an assumption you made in the past has turned out to be wrong.
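A minimal Python sketch of the list-length example, using the built-in `assert` statement. `MAX_ITEMS` and `total_of` are hypothetical names chosen for illustration. One caveat: Python skips `assert` statements entirely when run with the `-O` flag, so they work as development-time checks rather than guaranteed runtime validation.

```python
MAX_ITEMS = 100  # the assumption: this list never holds more than 100 items


def total_of(items):
    # Written when the list was assumed to be short: only the first MAX_ITEMS
    # entries are summed, so anything beyond that would be dropped silently.
    # The assertion turns that silent failure into a loud one.
    assert len(items) <= MAX_ITEMS, f"expected at most {MAX_ITEMS} items, got {len(items)}"
    return sum(items[:MAX_ITEMS])


print(total_of(list(range(10))))  # within the assumption; prints 45

try:
    total_of([1] * 150)  # the program has changed and the assumption no longer holds
except AssertionError as err:
    print("assumption violated:", err)
```

Without the assertion, the second call would quietly return 100 instead of 150.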

Predictions can be used in the same way. When I make assumptions about the future, it can be worth tracking them so I get feedback when I’m wrong. An obvious example is habits. If I don’t want future me to forget to do something, I can predict whether he’ll still be doing it; if he has forgotten, the prediction will act as a reminder when it resolves (this could perhaps even be optimized to work like a spaced repetition algorithm). Predictions can also serve as a defense against hindsight bias for assumptions like “I’ll do X later because I assume I’ll be in a better position in the future.”
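A minimal Python sketch of habit predictions doubling as reminders. The `HabitPrediction` record, the `due`/`resolve` helpers, and the interval rule are all hypothetical: the doubling-on-success, reset-on-lapse schedule is one simple spaced-repetition-style choice assumed here, not something the post specifies.

```python
from dataclasses import dataclass
from datetime import date, timedelta

START_INTERVAL_DAYS = 7  # assumed first gap between checks


@dataclass
class HabitPrediction:
    # Hypothetical record: "Will I still be doing <habit> on <resolve_on>?"
    habit: str
    probability: float  # stated confidence that the habit is still going
    resolve_on: date
    interval_days: int = START_INTERVAL_DAYS


def due(predictions, today):
    """Return predictions whose resolution date has arrived; these act as reminders."""
    return [p for p in predictions if p.resolve_on <= today]


def resolve(p, still_doing, today, new_probability):
    """Resolve a habit prediction and schedule the next check.

    Assumed rule: double the interval when the habit stuck, reset it to the
    starting interval when it lapsed. Recording the outcome for scoring is
    left out of this sketch.
    """
    interval = p.interval_days * 2 if still_doing else START_INTERVAL_DAYS
    return HabitPrediction(p.habit, new_probability, today + timedelta(days=interval), interval)


preds = [
    HabitPrediction("Morning run", 0.8, date(2024, 2, 10)),
    HabitPrediction("Flossing", 0.6, date(2024, 3, 1)),
]
today = date(2024, 2, 12)
for p in due(preds, today):
    print(f"Reminder: is '{p.habit}' still going? (predicted {p.probability:.0%})")
```

Growing the interval after each success means checks become rarer as a habit settles in, which is the spaced-repetition idea in miniature.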

Appendix: Template

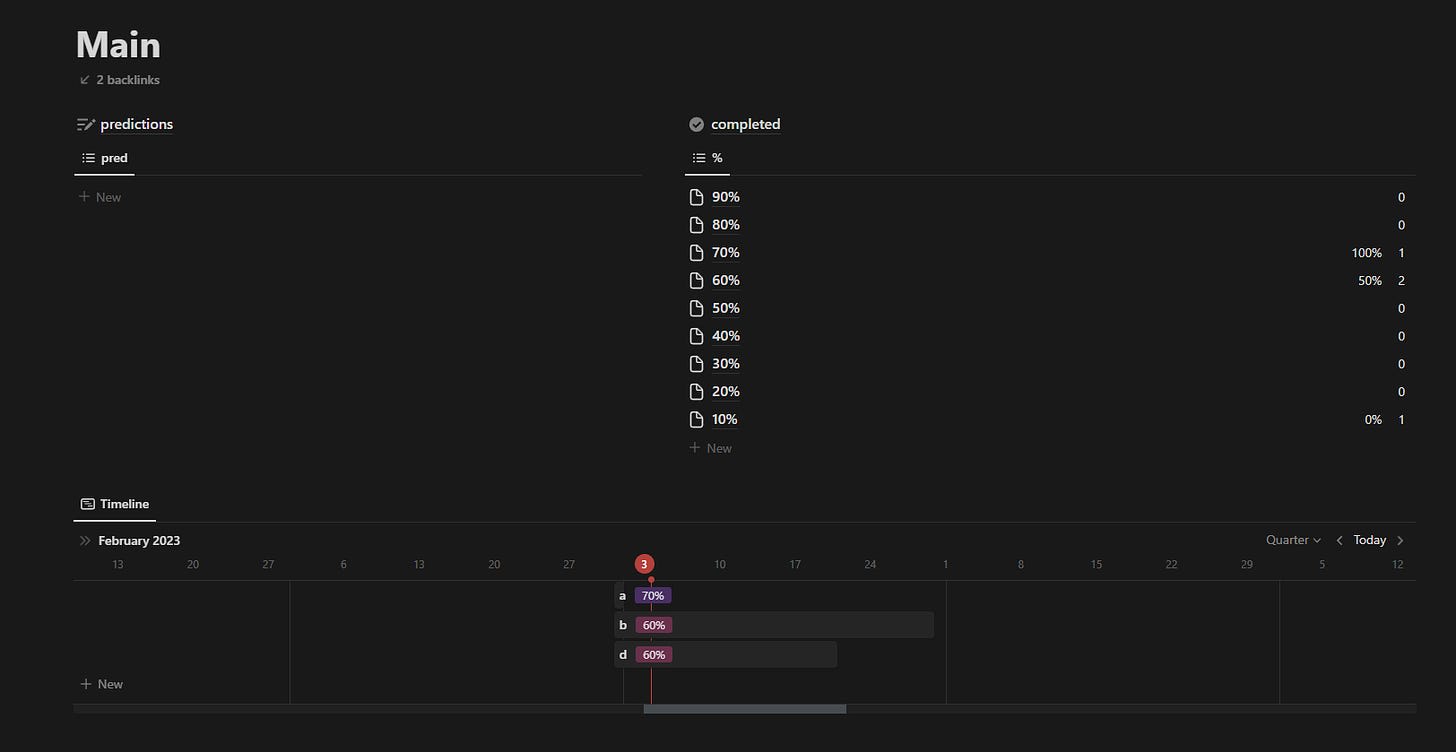

I'll share my Notion template in case someone wants to use it (if you don't care about using Notion specifically, Fatebook, Manifold, or other apps are probably better tracking options).

A couple of notes on how it works and on why you might or might not want to use it. It’s Notion, so it’s customizable and you can export the data in a few clicks. Confidence levels are discrete (when I made it I didn’t care much about resolution, but adding more levels isn’t hard if you want to). It has two tables/databases: one stores the predictions; the other (top right) is redundant, and I use it to group predictions manually because I prefer its UI to Notion’s standard grouped view. The Gantt chart shows predictions tagged “Timeline” (you can remove or change the filter to see all of them). When you open a prediction in its own page, by default it shows only the properties relevant to making a new prediction; when evaluating, click “4 more properties” (or edit directly in the table view). It computes a Brier score, but getting it takes one manual step: go to the bottom of the database (resolved view) and divide the “sum” by the “counts”, both displayed under the last row.
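The sum-divided-by-count step works because the Brier score is just the mean of the per-prediction squared errors. With discrete confidence levels, a natural complement is a per-level calibration check: for each stated level, compare it with the fraction of those predictions that actually came true. A minimal Python sketch, assuming the resolved predictions have been exported into (confidence, happened) pairs; this is not a feature of the template, and the rows are made up.

```python
from collections import defaultdict


def calibration_table(rows):
    """Group resolved (confidence, happened) pairs by stated confidence level.

    Returns {level: (count, observed_frequency)}. For a well-calibrated
    forecaster, observed_frequency should be close to level.
    """
    buckets = defaultdict(list)
    for confidence, happened in rows:
        buckets[confidence].append(1.0 if happened else 0.0)
    return {
        level: (len(outcomes), sum(outcomes) / len(outcomes))
        for level, outcomes in sorted(buckets.items())
    }


# Made-up resolved predictions at three discrete levels.
rows = [
    (0.6, True), (0.6, False), (0.6, True),
    (0.7, True), (0.7, True),
    (0.9, True), (0.9, False),
]
for level, (n, freq) in calibration_table(rows).items():
    print(f"{level:.0%}: {n} predictions, {freq:.0%} came true")
```

With only a few predictions per level the observed frequencies are noisy, which is one argument for keeping the levels coarse.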

2 comments


comment by Micurie (micurie) · 2024-02-05T09:00:09.416Z

This was a very useful and timely post for me. I was on the lookout for a tool to evaluate the quality of my predictions, and I didn't know the Brier score before now. I will try to incorporate this into my daily routine. Thank you!

One additional variable one could keep track of is something like a "correction" factor. If I predict a given task will take me 1 week to complete and it ends up taking 2 weeks instead, then the next time I face a task of a similar nature I should remember that last time I was wrong by a factor of 2. This "correction" factor should be taken into account when making the next prediction.

The caveat of this approach is that it's too simplistic and might not fully capture the factors that led to the delay from 1 week (predicted) to 2 weeks (actual), which might have been external. Additionally, I now have better knowledge of the factors that led me to make a wrong prediction in the first place, so I should (maybe) be better at making predictions.

But I think that the best strategy is keeping the "correction" factor simple and not starting to account for all of these factors. I would rather update the "correction" factor in the next iteration.
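A minimal Python sketch of the correction factor described in this comment, assuming the simplest version: one factor per kind of task, set to the ratio of actual to estimated duration on the most recent task and replaced at each new iteration (averaging several past ratios would be a reasonable alternative). The `CorrectionFactor` class is hypothetical.

```python
class CorrectionFactor:
    """Tracks how far off raw duration estimates were for one kind of task."""

    def __init__(self):
        self.factor = 1.0  # 1.0 means "no correction learned yet"

    def adjust(self, raw_estimate):
        """Scale a fresh gut estimate by the last observed error ratio."""
        return raw_estimate * self.factor

    def update(self, raw_estimate, actual):
        """Replace the factor with actual / raw estimate from the latest task.

        Uses the raw (unadjusted) estimate so corrections don't compound.
        """
        if raw_estimate <= 0:
            raise ValueError("raw_estimate must be positive")
        self.factor = actual / raw_estimate


# The example from the comment: predicted 1 week, took 2 weeks.
writing = CorrectionFactor()
writing.update(raw_estimate=1, actual=2)
print(writing.adjust(1.5))  # a new 1.5-week gut estimate becomes 3.0 weeks
```

Dividing by the raw estimate rather than the already-corrected one keeps the factor a measure of how biased the gut estimate itself is.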

comment by Daniele De Nuntiis (daniele-de-nuntiis) · 2024-02-07T16:37:08.642Z

If you want to get more specific with single outcomes, you could also consider making predictions with a normal distribution to get more information, but as far as I know there isn't a tracker for it, and I'm not sure it's worth the effort.