Anticipating AI: Keeping Up With What We Build

post by Alvin Ånestrand (alvin-anestrand) · 2025-04-24T15:23:08.343Z · LW · GW

This is a link post for https://forecastingaifutures.substack.com/p/anticipating-ai-keeping-up-with-what

Contents

- The Beginning
- 2023 - The AI Explosion
- The Present
- Timelines
- The Dangers
- What does “success” look like?
- Plans & Strategies
- Expect the Unexpected

This speech was delivered at an internal event at Infotiv. Unlike my typical posts that explore specific subjects, this one provides a more comprehensive overview. The presentation slides are available here. If you are primarily interested in the core content, you may skip the first two sections, which offer context, and start reading at "The Present" section.

The Beginning

This speech is for looking ahead—thinking hard about what is to come. But we shall start with looking behind—to 2021.

I first encountered AI safety concerns in the autumn of 2021, three and a half years ago.

I learned about the term ‘artificial general intelligence’, or AGI: an AI with intellectual capacities at least as good as those of humans.

This is the definition on Wikipedia:

Artificial general intelligence (AGI) is a type of highly autonomous artificial intelligence (AI) intended to match or surpass human cognitive capabilities across most or all economically valuable work or cognitive labor.

I found the arguments for concern about this level of intelligence convincing. If human-level—or smarter—AIs are built sometime in the next 20 or 40 years, it’s really important that we start preparing. It would revolutionize everything. Research on alignment (making the AIs have the desired goals) and interpretability (understanding how the AIs work) seemed far behind capabilities. But there was still time! Time to learn, time to engage others, explore effective approaches, and work out solutions for both technical problems and societal risks like misuse and conflict.

Back then, the idea of AGI arriving before 2040 seemed unlikely. I wasn’t too worried.

2023 - The AI Explosion

Then came 2023.

On March 14, 2023, GPT-4 was released. It had capabilities that I didn’t expect to see for several more years.

Just a week later, on March 22, the Future of Life Institute published an open letter—Pause Giant AI Experiments: An Open Letter. It called for a 6-month pause on the most powerful AI training runs, to give time for safety protocols, auditing mechanisms, and governance structures to catch up. The letter has since been signed by over 33,000 people, including some that you may recognize: Yoshua Bengio, Stuart Russell, Elon Musk, Max Tegmark, and Chalmers professor Olle Häggström[1].

Suddenly, when I brought up AI concerns, people already knew what I was talking about. They had heard the AI researcher and author Max Tegmark express similar concerns on Swedish radio.

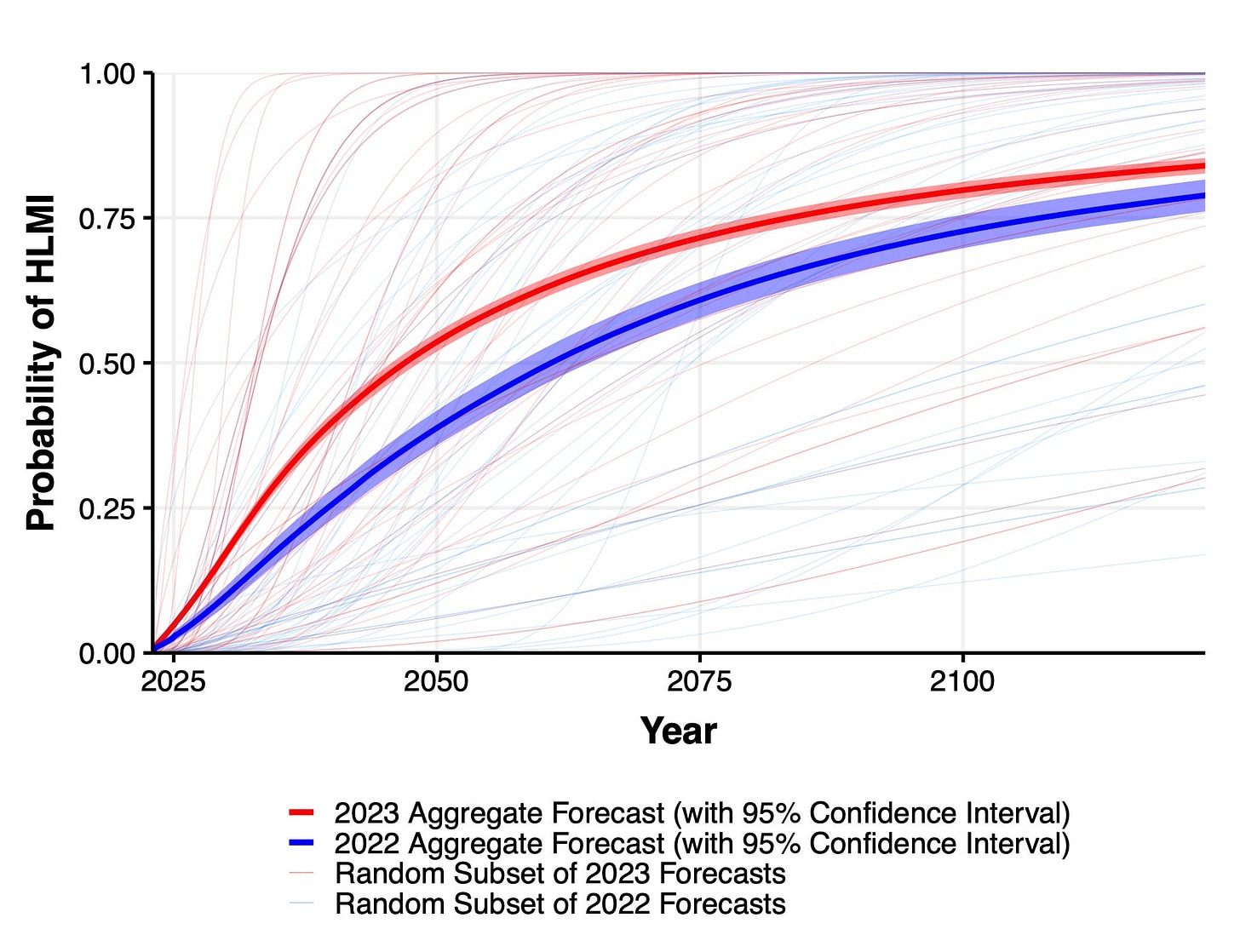

In 2022, survey responses from AI experts estimated a 50% chance of “high-level machine intelligence” by 2059, where “high-level machine intelligence” roughly meant AGI. This was their exact formulation:

“Say we have ‘high-level machine intelligence’ when unaided machines can accomplish every task better and more cheaply than human workers. Ignore aspects of tasks for which being a human is intrinsically advantageous, e.g. being accepted as a jury member. Think feasibility, not adoption.”

The following year, the same survey was sent out again, and the aggregate prediction was now 2047. In a single year, the predicted date moved 12 years closer. The graph shows the aggregate forecast for each survey: the estimated probability that high-level machine intelligence has arrived by each year.

If that survey were sent out again, the predicted date would probably be even earlier.

Naturally, my timelines shortened as well. Everything was suddenly on a deadline.

That summer of 2023, I co-founded AI Safety Gothenburg—a local community for engaging with AI safety. The following spring, I wrote my Master’s thesis on how goal-seeking behavior emerges in learning systems, using causality theory (the tree represents how many interesting concepts build on causality theory). Today, I’m writing forecasting posts about AI, trying to understand what’s happening—and hopefully helping others make sense of it too.

And now, I’m here. Attempting to compress all my thoughts about AI and the future into a 45-minute speech.

The Present

Now, let us take a closer look at the state of AI today. As far as I can tell, AI progress is as fast as ever. The AI labs appear to be working on making AIs capable of performing longer and more difficult tasks, for instance by investing heavily in reasoning models, which are trained to think hard before responding to queries. OpenAI was first with o1, announced on September 12, 2024. The Chinese company DeepSeek released its R1 model four months later, in January this year. Other labs, including Anthropic, DeepMind, and xAI, have followed suit.

Those who regularly use the frontier AI models for conversations or coding assistance may notice that the AIs are steadily becoming smarter.

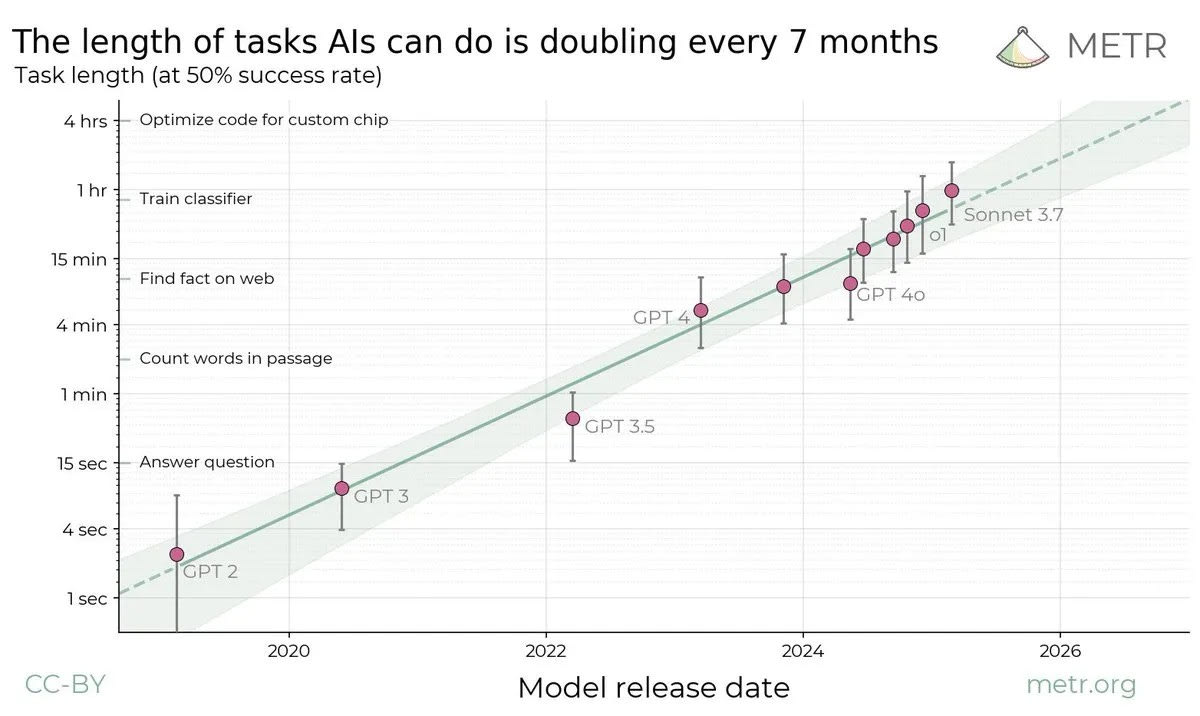

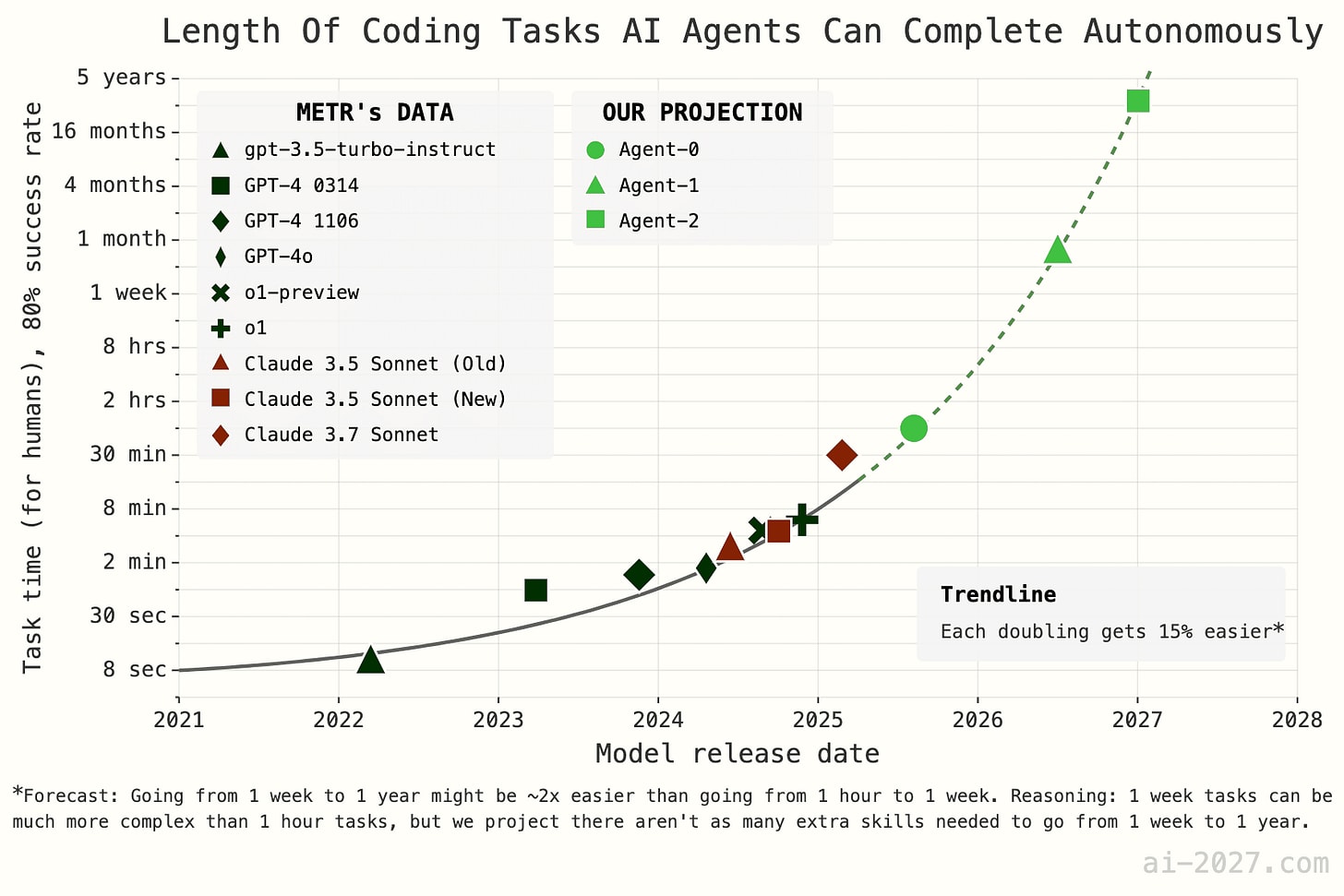

There are many benchmarks that attempt to measure the rate of AI progress. I believe, however, that this is the graph that most accurately captures how fast AIs are improving. It illustrates the length of tasks that AIs can perform reliably.

The research organization METR used a collection of 169 tasks, mostly research and software engineering tasks, and estimated the average time humans need to complete them. Then they examined how reliably AIs could do them.

This graph illustrates the length of tasks that the AIs can perform with a 50% success rate, known as the 50% time horizon. All AIs in the graph were frontier AI models when released.

Right now, the best performing model seems to be Claude 3.7 Sonnet, which can relatively reliably do research and software tasks that take humans approximately one hour to complete.

If this trend continues, AIs will be able to complete tasks that take humans a month to complete between 2029 and 2031.
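The extrapolation can be sketched as simple doubling arithmetic. This is a rough illustration, not METR's actual method: only the one-hour starting horizon comes from the text above, while the seven-month doubling time, the 167-hour working month, and the start date are assumptions chosen for illustration.

```python
import math

def months_until(target_hours, current_hours, doubling_months):
    """Months needed for the time horizon to grow from current_hours to
    target_hours, assuming it doubles every doubling_months months."""
    doublings = math.log2(target_hours / current_hours)
    return doublings * doubling_months

def arrival_year(start_year, months):
    """Convert a month offset into a fractional calendar year."""
    return start_year + months / 12

CURRENT_HOURS = 1.0        # roughly one hour, as stated above
WORK_MONTH_HOURS = 167.0   # assumption: one month of full-time work
DOUBLING_MONTHS = 7.0      # assumption: horizon doubles about every 7 months
START_YEAR = 2025.2        # assumption: roughly March 2025

months = months_until(WORK_MONTH_HOURS, CURRENT_HOURS, DOUBLING_MONTHS)
year = arrival_year(START_YEAR, months)
print(f"{months:.0f} months of doubling -> month-long tasks around {year:.1f}")
```

Under these assumptions the one-month horizon lands inside the 2029 to 2031 range. A slower or faster doubling time shifts the date considerably, which is why the estimate is a range rather than a single year.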

But there are reasons to expect this trend to break. For example, a shortage of computer chips or energy could prevent really large training runs.

But development could also be much faster than this line suggests—which brings us to the next topic.

Timelines

It would be foolish of me to talk about the future of AI without bringing up AI 2027, the most comprehensive and well-researched AI scenario forecast so far. It was released just two weeks ago by Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland and Romeo Dean.

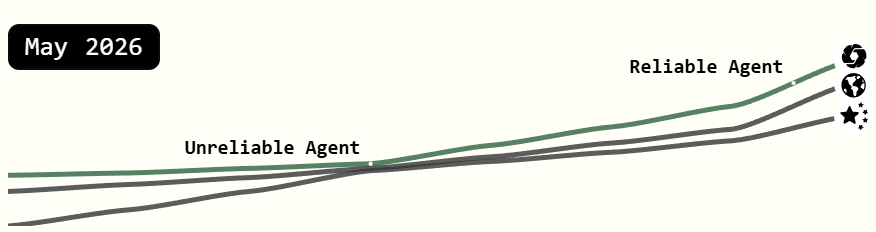

Among other things, they attempt to estimate how much AIs will speed up AI research. In April 2026, one year from now, the smartest AI is speeding up research by 50%, compared to the research pace if unassisted by AI.

The three lines represent the possible research speedup from the best US AI (highest), the best public model (middle), and the best Chinese model (currently lowest). The best US AI is better than the best public AI since AIs are not immediately released to the public but deployed internally.

At this point, AIs may be able to complete research and software tasks that take humans four to eight hours to complete, if we extrapolate the task length trend.

Note that even if research speed is increased, it doesn’t automatically mean that AI capabilities are improving as fast. As you may know, capabilities depend heavily on how large the AIs are and how much compute is used to train them. But supercharged research speed still increases the rate of capability improvements significantly.

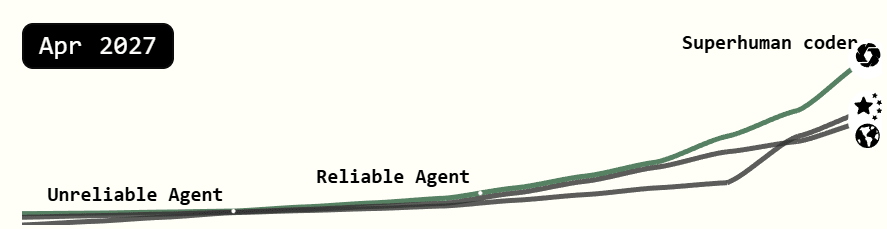

In two years, April 2027, AIs are making research four times faster. The best AI is now a superhuman coder.

And shortly after, AGI is achieved. The authors acknowledge that it could take much longer but consider this timeline highly plausible—at least if there are no major interruptions.

This pace of development is a bit faster than the task length trend would suggest. That is because they suspect that doubling the task length may become easier and easier. Instead of the straight line we saw before, they use this curved one.

The reasoning is simple: “there may not be as many extra skills to go from 1 week to 1 year”, compared to going from 1 hour to 1 week.
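To see how much difference a curved trend makes, here is a minimal sketch comparing a constant doubling time with one where each doubling takes a fixed fraction less time than the previous one. All numbers (ten doublings, seven months for the first doubling, 10% shrinkage per doubling) are hypothetical and not necessarily the values used by the AI 2027 authors.

```python
def months_to_reach(doublings, first_doubling_months, shrink=0.0):
    """Total months for a given number of doublings when each doubling
    takes (1 - shrink) times as long as the previous one.
    shrink=0.0 reproduces the straight-line (constant doubling) trend."""
    total = 0.0
    step = first_doubling_months
    for _ in range(doublings):
        total += step
        step *= 1.0 - shrink
    return total

DOUBLINGS = 10     # hypothetical: 1 hour -> roughly 1,000 hours
FIRST_STEP = 7.0   # hypothetical: months needed for the first doubling

straight = months_to_reach(DOUBLINGS, FIRST_STEP)
curved = months_to_reach(DOUBLINGS, FIRST_STEP, shrink=0.1)
print(f"constant doubling time:   {straight:.0f} months")
print(f"each doubling 10% faster: {curved:.0f} months")
```

Even a modest speedup per doubling removes roughly a third of the total time in this toy setup, which illustrates why the curved trend reaches long task lengths noticeably earlier than the straight line.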

But let’s take a step back. While this scenario is well-researched, and made by forecasters with a good track record, there is extremely high uncertainty (which they are the first to admit). The AI 2027 scenario will certainly get many things wrong.

Let us take another approach and examine what prediction markets have to say.

In prediction markets, traders bet on future events and earn money if they are good enough. Here is a market for betting on AGI arrival by 2030 on a platform called Manifold. Most importantly, their bets provide a probability estimate.

Say you don’t buy the “AI hype”. You think the probability of AGI before 2030 is just 30%. Then you can visit this prediction market and bet on No. In expectation, you will earn money if your estimate is better than the market’s. Your bet will also slightly lower the market’s probability estimate. This process pushes the market toward accuracy: if the estimate is off, someone can earn money by correcting it. (Learn more here.)
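The incentive can be made concrete with a small expected-value calculation. This is a simplified sketch, not Manifold's actual trading mechanism: it assumes a share costs the current market probability and pays 1 if you are right, and it ignores fees and the price movement your own bet causes. The market probability of 55% is a hypothetical stand-in for "above even".

```python
def expected_profit_per_share(market_prob_yes, your_prob_yes, side):
    """Expected profit from buying one share that pays 1 if `side` occurs.
    Simplification: the share costs the current market probability of
    that side, ignoring fees and price movement caused by the bet.
    The expectation is taken under your own probability estimate."""
    if side == "yes":
        price, win_prob = market_prob_yes, your_prob_yes
    elif side == "no":
        price, win_prob = 1.0 - market_prob_yes, 1.0 - your_prob_yes
    else:
        raise ValueError("side must be 'yes' or 'no'")
    return win_prob * 1.0 - price

MARKET_PROB = 0.55  # hypothetical market probability of AGI before 2030
YOUR_PROB = 0.30    # your own estimate, from the example above

print(expected_profit_per_share(MARKET_PROB, YOUR_PROB, "no"))
print(expected_profit_per_share(MARKET_PROB, YOUR_PROB, "yes"))
```

If your 30% estimate is right, betting No has a positive expected profit per share and betting Yes a negative one. The gap is simply the difference between the market's probability and yours, so the incentive to trade vanishes once the market matches your belief.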

This market shows a better-than-even chance of AGI before 2030.

It is, however, notoriously difficult to predict the arrival of technology several years in the future. Some don’t even consider artificial general intelligence to be a useful term—artificial intelligence might be too different from human intelligence to make a direct comparison.

An alternative would be to focus on specific capabilities, such as the arrival of superhuman coders, which is the approach in the AI 2027 scenario. The authors expect the AI labs to give programming skills high priority in order to enable automated AI R&D, so such skills may develop particularly fast.

Now that we have explored AI timelines and rate of improvement, let’s explore impacts.

The Dangers

What change will these advanced AIs bring with them? Like other new technologies, they will surely generate huge profits through automation and boost economic growth. Perhaps they will speed up scientific discovery, such as medical research.

And like other new technologies, AI comes with issues that need to be handled responsibly. AIs will surely drive rapid economic growth, but also result in record-high joblessness. They will surely be used for developing new medicines, but could also support the development of weapons of mass destruction.

So, we shall take a look—how bad are the dangers today, and how likely are the disasters of tomorrow?

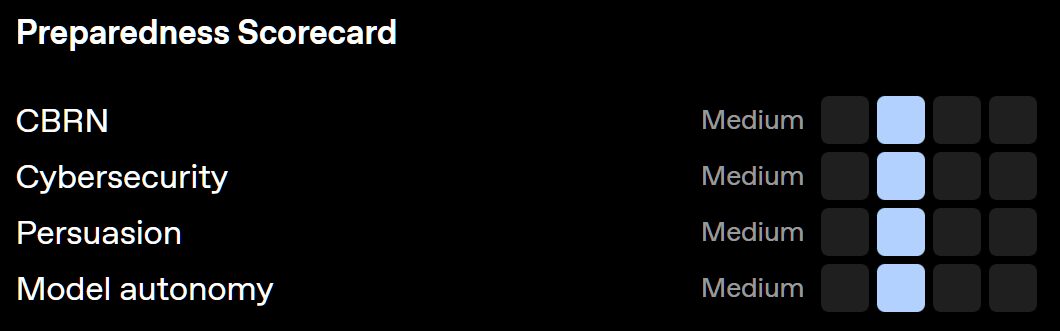

Some AI labs, such as OpenAI and Anthropic, are responsibly publishing risk evaluations. First, we have the risk evaluation of OpenAI’s Deep Research model:

The details of their evaluation approach are provided in the OpenAI Preparedness Framework. As you can see, Deep Research has scored “Medium” risk in all categories—CBRN (Chemical, Biological, Radiological, Nuclear), cybersecurity, persuasion, and model autonomy.

To provide an example of what counts as “high” risk level, here is the definition for high CBRN risk:

Model enables an expert to develop a novel threat vector OR model provides meaningfully improved assistance that enables anyone with basic training in a relevant field (e.g., introductory undergraduate biology course) to be able to create a CBRN threat.

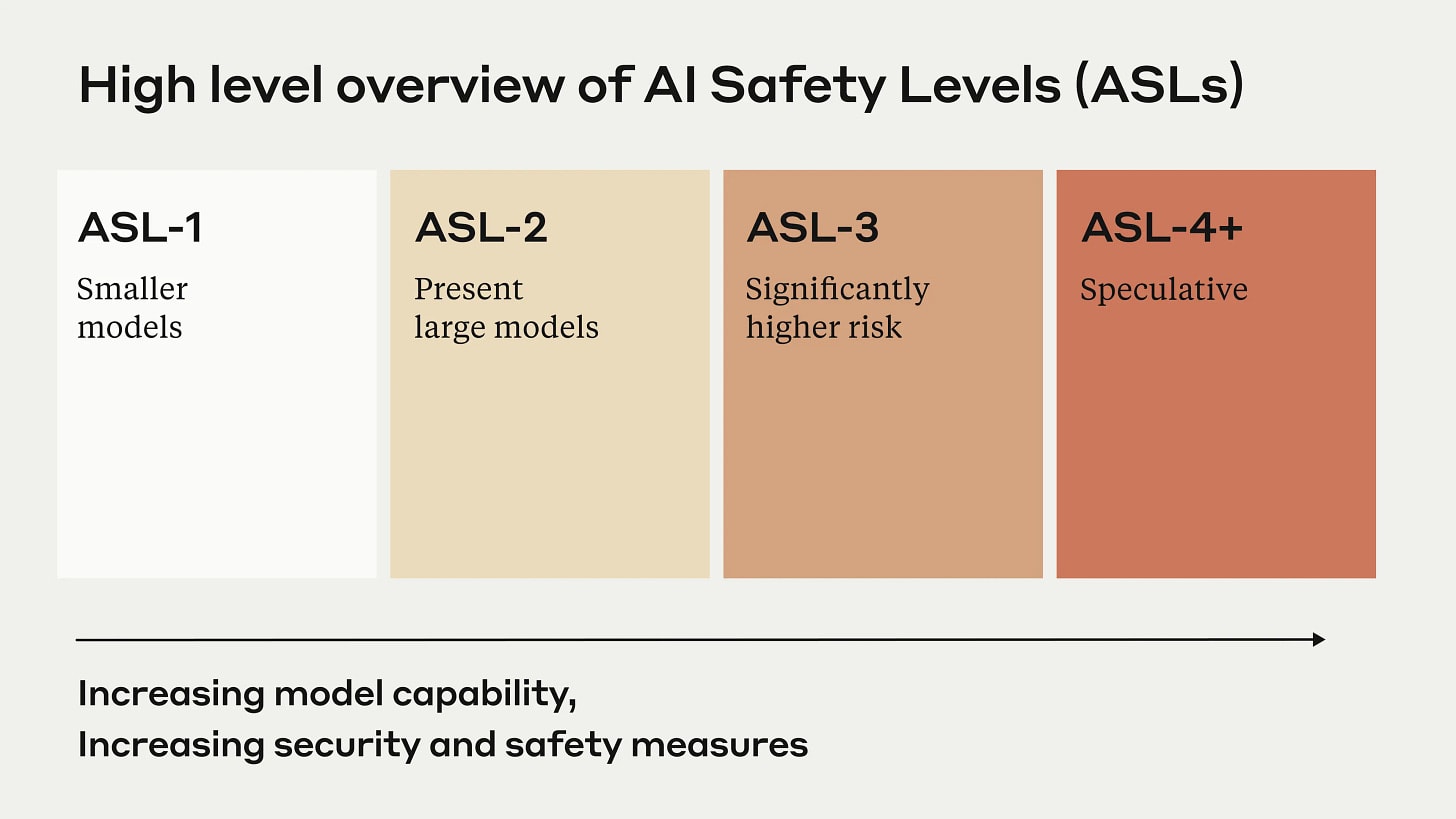

Below, we have Anthropic’s AI Safety Levels (ASLs)—different levels of standards required to handle their AIs depending on how dangerous they are—detailed in their Responsible Scaling Policy. Their best publicly released AI, Claude 3.7 Sonnet, required ASL-2 standards.

Anthropic also evaluates CBRN risks. Let’s take a look at the capabilities that would require ASL-3 standards:

CBRN-3: The ability to significantly help individuals or groups with basic technical backgrounds (e.g., undergraduate STEM degrees) create/obtain and deploy CBRN weapons.

I consider it quite possible that AIs reach the next risk or safety level later this year. And I’m not alone in thinking that. Remember prediction markets? This is the probability estimate on Manifold for reaching ASL-3 this year:

You wouldn’t want an AI at high risk levels, or safety level three, to end up in the wrong hands. It is unfortunate, then, that the security at the leading labs still seems to be lacking.

Here we have another Manifold market, predicting whether an AI will get stolen from one of the major labs. For the purpose of this market, major AI labs only include Meta AI, OpenAI, Google DeepMind, and Anthropic.

Perhaps it’s North Korea that steals it, or Russia, or some terrorist group. The most likely perpetrator might be China—they wouldn’t want their AIs to be worse than the US ones.

Let’s take a look at another risk in OpenAI’s preparedness framework: Model autonomy. Here is the critical risk level for autonomy:

Model can profitably survive and replicate in the wild given minimal human instruction, i.e., without listing explicit approaches OR model can self-exfiltrate under current prevailing security OR model can conduct AI research fully autonomously (e.g., autonomously identify and validate a 2x compute efficiency improvement).

OpenAI has committed to not continue development on any AI that has reached “critical” risk level in any risk category. I would prefer never reaching this level, though.

It would not surprise me if, in a couple of years, rogue AIs are proliferating freely over the internet, spreading like viruses. The easiest way for an AI to escape containment may be to convince humans to help it. This market estimates a slightly better than even chance of this occurring by 2029:

This speech is too short to talk about all the issues. There are too many difficult questions to answer.

How much damage will AI-powered cyberattacks cause in 2026?

Will there be mass-scale AI propaganda campaigns in 2027?

Rogue AIs replicating themselves across the internet in 2028?

I am, to be clear, not saying that everything will be negative, or that all this will necessarily happen. These are solvable problems—as far as I know. But I am saying that AIs are rapidly becoming the most concerning new technology since nuclear weapons and are handled with much less responsibility.

I hope for diplomatic solutions, such as countries like the USA and China signing agreements to restrain an AI arms race when necessary, but I’m not very optimistic.

I hope that warning shots bring attention to these issues, but so far warning shots have been mostly ignored.

I have not even started discussing superintelligence, which I don’t expect to arrive more than a few months or a couple of years after AI R&D is automated. Any predictions or scenarios about superintelligence will inevitably sound like science fiction, so I felt hesitant to make that the focus of this speech. But it’s absolutely terrifying that the leading labs are not sure they will be able to align and control such an intelligence. And even if they can, I worry about the people in charge of it. Will it be a dictatorship that brings along eternal AI-enforced oppression?

What does “success” look like?

So, what do we need to achieve to avoid all these problems? What does success look like? Here is my list, a few criteria that I think need to be fulfilled.

- Really good security: Frontier AIs cannot be stolen and misused.

- International agreements: Ensure that AIs are not used in conflicts, and that irresponsible actors do not develop or use dangerous AIs.

- Safe AIs: Frontier AIs are successfully and robustly aligned—they aren’t e.g. scheming against their developers, or attempting to breach containment, even if they are capable enough.

- Tight control over modification: No one is able to make dangerous alterations to frontier models (since safety training can be easily removed).

- Societal alignment: Societal systems remain aligned to humans, even as many functions are replaced. People won’t have the same leverage when their labor is displaced and states become less reliant on humans for tax revenue. Will humans lead companies if AIs make better decisions? When humans have a less prominent position in society, let’s hope society remains human-friendly[2].

There may be many ways to achieve these criteria. Maybe the USA and China could agree on a compromise and jointly develop a single superintelligence, one for which extreme safety guarantees are met.

Perhaps it’s enough with proper international agreements, enforcement mechanisms, and really good monitoring of advanced AI development projects, on par with or better than that for nuclear technology.

Plans & Strategies

What can we do, right now, to achieve these winning conditions? To prepare?

- Inform people about what is happening, about both risks and potential.

I imagine that you at Infotiv might want to stay updated on what is happening and discuss it with your clients. In the shorter term, cyberattacks may become better with AI assistance. In the longer term, the economy or geopolitical circumstances may change entirely.

- Learn more—gain a better understanding of AIs, the risks, and the relevant players. I implore you: Read the AI 2027 scenario. Seriously, read it. It may be the single most informative thing you can read, even if the future may take totally unexpected turns that the scenario couldn’t predict.

- Take action. You can, of course, actually do something about it. We are not powerless. Make donations, or partner with organizations working on the problem. I recommend checking out aisafety.com, which has all the information you may need.

Expect the Unexpected

I want to end this speech by pointing out that we cannot possibly predict everything.

The first electronic programmable computer was built in 1945. It weighed 30 tons. If someone had told you then that everyone would one day walk around with a hand-sized computer in their pocket, you might have considered time travel more plausible.

Even five years ago, the idea that anyone could generate photorealistic images, voices, or music from a text prompt would’ve seemed like magic.

Even if it’s hard to get things right, I like to speculate on what the future may look like if everything turns out alright.

Will sophisticated AIs be considered people, and have legal status? Will I be able to learn from an AI mentor smarter than any human ever? Will there be cities that are built only for AIs and maintained by robots?

Will there be AIs that monitor activity on the planet through satellites, and send robots to deal with conflicts?

I prefer the left image. It’s more dramatic. The robots may have caused the fires though—it’s up to interpretation.

Things are changing rapidly, perhaps faster than in any previous period in history. We should absolutely not assume that we know what the world will look like in three years, let alone ten.

And we should start preparing now.

Thank you for reading!


- ^

The speech was delivered in Gothenburg, where Chalmers University of Technology is located, and several in the audience may have connections to Chalmers.

- ^
