Monty Hall in the Wild

post by Jacob Falkovich (Jacobian) · 2018-06-06T18:03:22.593Z · LW · GW · 9 comments


Cross-posted from Putanumonit.com.

I visited a friend yesterday, and after we finished our first bottle of Merlot I brought up the Monty Hall problem, as one does. My friend confessed that she had never heard of the problem. Here’s how it goes:

You are faced with a choice of three doors. Behind one of them is a shiny pile of utilons, while the other two are hiding avocados that are just starting to get rotten and maybe you can convince yourself that they’re still OK to eat but you’ll immediately regret it if you try. The original formulation talks about a car and two goats, which always confused me because goats are better for racing than cars.

Anyway, you point to Door A, at which point the host of the show (yeah, it’s a show, you’re on TV) opens one of the other doors to reveal an almost-rotten avocado. The host knows what hides behind each door, always offers the switch, and never opens the one with the prize because that would make for boring TV.

You now have the option of sticking with Door A or switching to the third door that wasn’t opened. Should you switch?

After my friend figured out the answer she commented that:

- The correct answer is obvious.

- The problem is pretty boring.

- Monty Hall isn’t relevant to anything you would ever come across in real life.

Wrong, wrong, and wrong.

According to Wikipedia, only 13% of people figure out that switching doors improves your chance of winning from 1/3 to 2/3. But even more interesting is the fact that an incredible number of people remain unconvinced even after seeing several proofs, simulations, and demonstrations. The wrong answer, that the doors have an equal 1/2 chance of avocado, is so intuitively appealing that even educated people cannot overcome that intuition with mathematical reason.
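If the proofs don't convince you, a simulation might. Here is a minimal sketch of the game exactly as described above: the host knows where the prize is, never opens your door or the prize door, and always offers the switch. The function names `play` and `win_rate` are my own, and I'm assuming the host picks at random when both unpicked doors hide avocados.

```python
import random

def play(switch: bool, rng: random.Random) -> bool:
    """Play one round; return True if the contestant ends up with the prize."""
    doors = [0, 1, 2]
    prize = rng.choice(doors)
    pick = rng.choice(doors)
    # The host opens a door that is neither the contestant's pick nor the prize.
    # Assumption: when two such doors exist, the host chooses between them at random.
    opened = rng.choice([d for d in doors if d != pick and d != prize])
    if switch:
        # Move to the one door that is neither picked nor opened.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == prize

def win_rate(switch: bool, trials: int = 100_000, seed: int = 0) -> float:
    """Fraction of rounds won under a fixed strategy (always stay or always switch)."""
    rng = random.Random(seed)
    return sum(play(switch, rng) for _ in range(trials)) / trials

stay_rate = win_rate(switch=False)
switch_rate = win_rate(switch=True)
print(f"stay: {stay_rate:.3f}, switch: {switch_rate:.3f}")
```

With this many rounds the two rates should land close to 1/3 and 2/3 respectively, though the exact digits depend on the seed.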

I’ve written a lot recently about decoupling. At the heart of decoupling is the ability to override an intuitive System 1 answer with a System 2 answer that’s arrived at by applying logic and rules. This ability to override is what’s measured by the Cognitive Reflection Test, the standard test of rationality. Remarkably, many people fail to improve on the CRT even after taking it multiple times.

When rationalists talk about “me” and “my brain”, the former refers to their System 2 and the latter to their System 1 and their unconscious. “I wanted to get some work done, but my brain decided it needs to scroll through Twitter for an hour.” But in almost any other group, “my brain” is System 2, and what people identify with is their intuition.

Rationalists often underestimate the sheer inability of many people to take this first step towards rationality, of realizing that the first answer that pops into their heads could be wrong on reflection. I’ve talked to people who were utterly confused by the suggestion that their gut feelings may not correspond perfectly to facts. For someone who puts a lot of weight on System 2, it’s remarkable to see people for whom it may as well not exist.

You know who does really well on the Monty Hall problem? Pigeons. At least, pigeons quickly learn to switch doors when the game is repeated multiple times over 30 days and they can observe that switching doors is twice as likely to yield the prize.

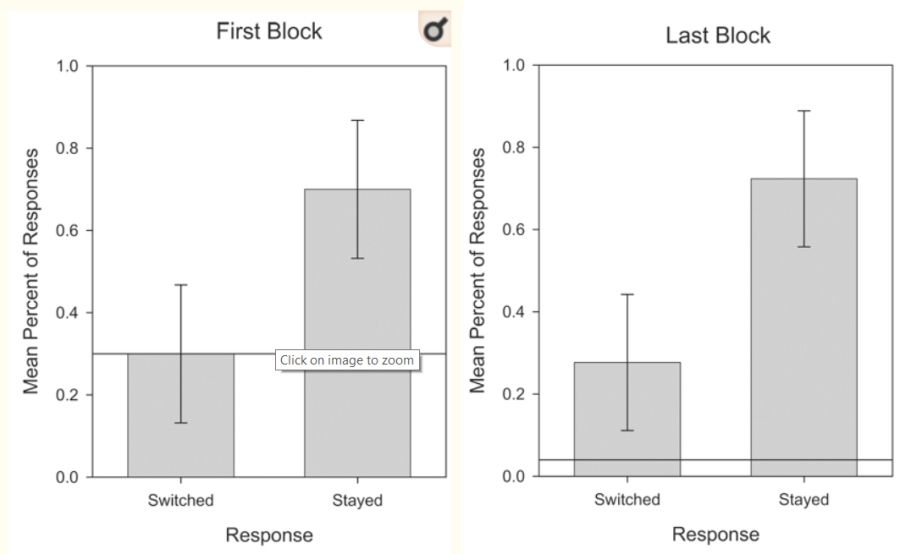

This isn't some bias in favor of switching either, because when the condition is reversed so the prize is made to be twice as likely to appear behind the door that was originally chosen, the pigeons update against switching just as quickly:

Remarkably, humans don't update at all on the iterated game. When switching is better, a third of people refuse to switch no matter how long the game is repeated:

And when switching is worse, a third of humans keep switching:

I first saw this chart when my wife was giving a talk on pigeon cognition (I really married well). I immediately became curious about the exact sort of human who can yield a chart like that. The study these are taken from is titled Are Birds Smarter than Mathematicians?, but the respondents were not mathematicians at all.

Failing to improve one iota in the repeated game requires a particular sort of dysrationalia, where you’re so certain of your mathematical intuition that mounting evidence to the contrary only causes you to double down on your wrongness. Other studies have shown that people who don’t know math at all, like little kids, quickly update towards switching. The reluctance to switch can only come from an extreme Dunning-Kruger effect. These are people whose inability to do math is matched only by their certainty in their own mathematical skill.

This little screenshot tells you everything you need to know about academic psychology:

- The study had a sample size of 13, but I bet that the grant proposal still claimed that the power was 80%.

- The title misleadingly talked about mathematicians, even though not a single mathematician was harmed when performing the experiment.

- A big chunk of the psychology undergrads could neither figure out the optimal strategy nor learn it from dozens of repeated games.

Monty Hall is a cornerstone puzzle for rationality not just because it has an intuitive answer that’s wrong, but also because it demonstrates a key principle of Bayesian thinking: the importance of counterfactuals. Bayes’ law says that you must update not only on what actually happened, but also on what could have happened.

There are no counterfactuals relevant to Door A, because it never could have been opened. In the world where Door A contains the prize nothing happens to it that doesn’t happen in the world where it smells of avocado. That’s why observing that the host opens Door B doesn’t change the probability of Door A being a winner: it stays at 1/3.

But for Door C, you knew that there was a chance it would be opened, but it wasn’t. And the chance of Door C being opened depends on what it contains: 0% of being opened if it hides the prize, 75% if it’s the avocado. Even before calculating the numbers exactly, this tells you that observing the door that is opened should make you update the odds of Door C.
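To see where the 0% and 75% come from, and what they do to the odds, here is a small exact calculation using fractions. It's a sketch under one assumption that the problem statement leaves open: when the host can open either of two avocado doors, he opens each with probability 1/2. The helper `p_open` and the variable names are mine.

```python
from fractions import Fraction

doors = "ABC"
prior = {d: Fraction(1, 3) for d in doors}  # prior over where the prize is

def p_open(opened: str, prize: str, pick: str = "A") -> Fraction:
    """P(host opens `opened` | prize location), given the contestant picked `pick`.

    Assumption: the host chooses uniformly among the doors he is allowed to open.
    """
    allowed = [d for d in doors if d != pick and d != prize]
    return Fraction(1, len(allowed)) if opened in allowed else Fraction(0)

# Chance that Door C gets opened, depending on what it hides.
p_open_c_if_prize = p_open("C", prize="C")
p_open_c_if_avocado = (
    sum(prior[p] * p_open("C", prize=p) for p in "AB")
    / sum(prior[p] for p in "AB")
)

# Bayes' law: posterior over the prize location after the host opens Door B.
joint = {p: prior[p] * p_open("B", prize=p) for p in doors}
total = sum(joint.values())
posterior = {p: joint[p] / total for p in doors}

print(p_open_c_if_prize, p_open_c_if_avocado)
print(posterior)
```

The two likelihoods come out to 0 and 3/4, and the posterior puts 1/3 on Door A, 0 on Door B, and 2/3 on Door C. Door A's likelihood of seeing "host opens B" is the same 1/2 as the overall chance of that observation, which is why its probability doesn't move.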

Contra my friend, Monty Hall-esque logic shows up in a lot of places if you know how to notice it.

Here’s an intuitive example: you enter a yearly performance review with your curmudgeonly boss who loves to point out your faults. She complains that you always submit your expense reports late, which annoys the HR team. Is this good or bad news?

It’s excellent news! Your boss is a lot more likely to complain about some minor detail if you’re doing great on everything else, like actually getting the work done with your team. The fact that she showed you a stinking avocado behind the “expense report timeliness” door means that the other doors are likely praiseworthy.

Another example – there are 9 French restaurants in town: Chez Arnaud, Chez Bernard, Chez Claude etc. You ask your friend who has tried them all which one is the best, and he informs you that it’s Chez Jacob. What does this mean for the other restaurants?

It means that the other eight restaurants are slightly worse than you initially thought. The fact that your friend could’ve picked any of them as the best but didn’t is evidence against them being great.

To put some numbers on it, your initial model could be that the nine restaurants are equally likely to occupy any percentile of quality among French restaurants in the country. If percentiles are confusing, imagine that any restaurant is equally likely to get any score of quality between 0 and 100. You’d expect any of the nine to be the 50th percentile restaurant, or to score 50/100, on average. Learning that Chez Jacob is the best means that you should expect it to score 90, since the maximum of N independent variables distributed uniformly over [0,1] has an expected value of N/(N+1), which is 9/10 for N = 9. You should update that the average of the eight remaining restaurants scores 45.

The shortcut to figuring that out is considering some probability or quantity that is conserved after you made your observation. In Monty Hall, the quantity that is conserved is the 2/3 chance that the prize is behind one of the doors B or C (because the chance of Door A is 1/3 and is independent of the observation that another door is opened). After Door B is opened, the chance of it containing the prize goes down by 1/3, from 1/3 to 0. This means that the chance of Door C should increase by 1/3, from 1/3 to 2/3.

Similarly, being told that Chez Jacob is the best upgraded it from 50 to 90, a jump of 40 percentiles or points. But your expectation that the average of all nine restaurants in town is 50 shouldn’t change, assuming that your friend was going to pick out one best restaurant regardless of the overall quality. Since Chez Jacob gained 40 points, the other eight restaurants have to lose 5 points each to keep the average the same. Thus, your posterior expectation for them went down from 50 to 45.
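The 90 and 45 can be checked both ways: with the conservation shortcut and by brute force. The sketch below assumes the same toy model as above (nine independent scores, uniform on 0 to 100); `simulate` and the other names are purely illustrative.

```python
import random

def simulate(n_restaurants: int = 9, trials: int = 100_000, seed: int = 0):
    """Average score of the best restaurant and of the remaining ones."""
    rng = random.Random(seed)
    best_total = 0.0
    rest_total = 0.0
    for _ in range(trials):
        scores = [rng.uniform(0, 100) for _ in range(n_restaurants)]
        best = max(scores)
        best_total += best
        # Mean score of the restaurants that were NOT named the best.
        rest_total += (sum(scores) - best) / (n_restaurants - 1)
    return best_total / trials, rest_total / trials

n = 9
# Shortcut: expected maximum is N/(N+1) of the range, and the overall
# average of 50 is conserved, so the others absorb the difference.
exact_best = 100 * n / (n + 1)
exact_rest = (n * 50 - exact_best) / (n - 1)

best_mean, rest_mean = simulate(n)
print(f"shortcut:   best {exact_best:.1f}, rest {exact_rest:.1f}")
print(f"simulation: best {best_mean:.1f}, rest {rest_mean:.1f}")
```

The shortcut gives exactly 90 and 45, and the simulated averages should sit within a fraction of a point of those values.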

Here’s one more example of counterfactual-based thinking. It’s not parallel to Monty Hall at first glance, but the same Bayesian logic underpins both of them.

I’ve spoken to two women who write interesting things but are hesitant to post them online because people tell them they suck and call them $&#@s. They asked me how I deal with being called a $&#@ online. My answer is that I realized that most of the time when someone calls me a $&#@ online I should update not that I may be a $&#@, but only that I’m getting more famous.

There are two kinds of people who can possibly respond to something I’ve written by telling me I’m a $&#@:

- People who read all my stuff and are usually positive, but in this case thought I was a $&#@.

- People who go around calling others $&#@s online, and in this case just happened to click on Putanumonit.

In the first case, the counterfactual to being called a $&#@ is getting a compliment, which should make me think that I $&#@ed up with that particular essay. When I get negative comments from regular readers, I update.

In the second case, the counterfactual to me being called a $&#@ is simply someone else being called a $&#@. My update is simply that my essay has gotten widely shared, which is great.

For example, here’s a comment that starts off with “Fuck you Aspie scum”. The comment has nothing to do with my actual post; I’m pretty sure that its author just copy-pastes it on rationalist blogs that he happens to come across. Given this, I found the comment to be a positive sign of the expanding reach of Putanumonit. It did nothing to make me worry that I’m an Aspie scum who should be fucked.

I find this sort of Bayesian what’s-the-counterfactual thinking immensely valuable and widely applicable. I think that it’s a teachable skill that anyone can improve on with practice, even if it comes easier to some than to others.

Only pigeons are born master Bayesians.

9 comments

Comments sorted by top scores.

comment by daozaich · 2018-06-08T22:16:51.626Z · LW(p) · GW(p)

It’s excellent news! Your boss is a lot more likely to complain about some minor detail if you’re doing great on everything else, like actually getting the work done with your team.

Unfortunately this way of thinking has a huge, giant failure mode: It allows you to rationalize away critique about points you consider irrelevant, but that are important to your interlocutor. Sometimes people / institutions consider it really important that you hand in your expense sheets correctly or turn up in time for work, and finishing your project in time with brilliant results is not a replacement for "professional demeanor". This was not a cheap lesson for me; people did tell me, but I kinda shrugged it off with this kind of glib attitude.

comment by Dagon · 2018-06-07T21:38:03.763Z · LW(p) · GW(p)

When I first came across this, I didn't believe my own analysis and wrote two different simulators before I accepted it. Now it's so baked into my worldview that I don't understand why it was difficult back then. It was confounded by the common misstatement of the problem (that is, the implication that Monty might not offer a switch in some cases), but it was also a demonstration that I have a "system 1.5", which is kind of a mix of intuition and reflection. 50% felt like I was rationally overriding my gut reaction that switching was wrong (because it's a game show, built on misleading contestants), and it was hard for me to further override it by calculation.

↑ comment by Said Achmiz (SaidAchmiz) · 2018-06-08T07:52:28.062Z · LW(p) · GW(p)

The usual explanations of the Monty Hall problem are needlessly confusing. In fact there’s no reason to talk about conditional probabilities at all. Here’s a far simpler and more intuitive explanation:

- You had a 1/3 chance of picking the car and a 2/3 chance of picking a goat.

- If it so happens that you picked the car, you shouldn’t switch. But, if it so happens that you picked a goat, then you should switch.

- Since you don’t know what you picked, you take the expectation: 1/3 × “shouldn’t switch”[1] + 2/3 × “should switch” = “should switch” wins.

[1] The formal/precise/rigorous phrasing speaks, of course, of the expected number of cars you get in each case, i.e. either 1 or 0, and calculates the expectation of # of cars for each action and selects argmax, i.e. this is a straightforward decision-theoretic explanation.

↑ comment by Ben Pace (Benito) · 2018-06-08T13:27:30.393Z · LW(p) · GW(p)

Huh. Wouldn't this argument suggest that, before the games master opens one of the doors, were he to offer you iterated switches, you should each time accept a switch because it has higher expected utility?

Edit: Iterated switches = the games master keeps offering you to switch doors, and you accept because each time has a 2/3 probability. But I don't think it is actually a problem. Just parse the question as 'do you want to accept your 1/3 probability, or do you want to switch to a 1/2 chance between two doors that in total have a 2/3 chance of containing the car?', which shows that they're equivalent. But I don't know why the parent comment doesn't fail in this exact way, unless it's sneaking in a discussion of conditional probabilities.

↑ comment by Said Achmiz (SaidAchmiz) · 2018-06-09T18:23:06.652Z · LW(p) · GW(p)

Replying to the edit:

But I don’t know why the parent comment doesn’t fail in this exact way, unless it’s sneaking in a discussion of conditional probabilities.

Because, of course, the door to which you’re offered the switch can’t have a 1/2 chance of being a car, given that there’s only one of it.

Consider it in terms of scenarios. There are two possible ones:

Scenario 1 (happens 1/3rd of the time): You picked the car. You are now being offered the chance to switch to a door which definitely has a goat.

Scenario 2 (happens 2/3rds of the time): You picked a goat. You are now being offered the chance to switch to a door which definitely has the car.

The only problem is that you don’t know which scenario you find yourself in (indexical uncertainty), so you have to average the expected utility of each action (switch vs. no-switch) over the prior probability of finding yourself in each of the scenarios. This gives you 2/3rds of a car for the “switch” action and 1/3rd of a car for the “no-switch” action.

Edit: A previous version of this comment ended with the sentence “No conditional probabilities are required.” This, of course, is not strictly accurate, since my treatment is isomorphic to the conditional-probabilities treatment—it has to be, or else it couldn’t be correct! What I should’ve said, and what I do maintain, is simply that no explicit discussion (or computation) of conditional probabilities is required (which makes the problem easier to reason about).

↑ comment by Said Achmiz (SaidAchmiz) · 2018-06-08T16:20:12.532Z · LW(p) · GW(p)

Iterated switches to what, exactly?

Edit: Regardless, the answer is no; there’s no reason to conclude this.

↑ comment by Dagon · 2018-06-08T14:15:03.554Z · LW(p) · GW(p)

What does "iterated switches" mean? In the 3-door game, there's only one switch possible.

That does remind me of another argument I found compelling - imagine the 10,000 door version. One door has a car, 9,999 doors have nothing. Pick a door, then Monty will eliminate 9,998 losing options. Do you stay with your door, or switch to the remaining one?

comment by Tom Kelleher (tom-kelleher) · 2018-06-08T20:29:12.526Z · LW(p) · GW(p)

I saw a variation of this explanation that I liked, and it made the problem more intuitive. It helps if we jump from 3 choices to 100.

And as we proceed, think of making the first choice of door A as splitting the group into two sets: Picked and Not-Picked. The "Picked" group always contains one door, the Not-Picked group contains the rest (two doors for the usual version, and 99 in my 100-door version).

And rather than say that Monty Hall "opens one of the doors" from the Not-Picked set, let's say he's "opening all the bogus doors" from the Not-Picked set. Right? He always opens the door with nothing in it, leaving one remaining door. There's only two in the Not-Picked set, but he always opens one that's bogus.

With that setup, you make your choice. You pick Door #1 out of the 100 doors.

You probably picked wrong; that's no surprise. There's a 99% likelihood that the prize is somewhere in the Not-Picked set of 99 doors. We don't know which one, but hang tight.

Now Monty opens all the bogus doors in the Not-Picked set...leaving just one unopened.

That 99% probability of being in the Not-Picked set is still true--but now it's all collapsed into that one remaining closed door. That's the only remaining place it could be hiding. Your door choice didn't become any "luckier" just because Monty opened bogus doors.

So the smart money says, switch doors.

comment by Rick Jones (rick-jones) · 2018-06-08T13:35:31.804Z · LW(p) · GW(p)

There are a lot of ways to skin this cat. The most intuitive way I've come across is this:

1. If you choose door A, there is a 1/3 chance of the prize being behind that door. So there is a 2/3 chance that the prize is not behind that door. At this point we distribute the 2/3 chance evenly between doors B and C.

2. When the host reveals the rotten avocado behind, say, door B, then door C picks up the entire 2/3 chance of having the prize.

When I apply Bayes's Theorem to the problem, I essentially get the same thing. The prior probability of the prize being behind door A is 1/3, and the posterior probability of it being behind door A is also 1/3.