Cognitive Enhancers: Mechanisms And Tradeoffs

post by Scott Alexander (Yvain) · 2018-10-23T18:40:03.112Z · 3 comments


[Epistemic status: so, so, so speculative. I do not necessarily endorse taking any of the substances mentioned in this post.]

There’s been recent interest in “smart drugs” said to enhance learning and memory. For example, from the Washington Post:

When aficionados talk about nootropics, they usually refer to substances that have supposedly few side effects and low toxicity. Most often they mean piracetam, which Giurgea first synthesized in 1964 and which is approved for therapeutic use in dozens of countries for use in adults and the elderly. Not so in the United States, however, where officially it can be sold only for research purposes. Piracetam is well studied and is credited by its users with boosting their memory, sharpening their focus, heightening their immune system, even bettering their personalities.

Along with piracetam, a few other substances have been credited with these kinds of benefits, including some old friends:

“To my knowledge, nicotine is the most reliable cognitive enhancer that we currently have, bizarrely,” said Jennifer Rusted, professor of experimental psychology at Sussex University in Britain when we spoke. “The cognitive-enhancing effects of nicotine in a normal population are more robust than you get with any other agent. With Provigil, for instance, the evidence for cognitive benefits is nowhere near as strong as it is for nicotine.”

But why should there be smart drugs? Popular metaphors speak of drugs fitting into receptors like “a key into a lock” to “flip a switch”. But why should there be a locked switch in the brain to shift from THINK WORSE to THINK BETTER? Why not just always stay on the THINK BETTER side? Wouldn’t we expect some kind of tradeoff?

Piracetam and nicotine have something in common: both activate the brain’s acetylcholine system. So do three of the most successful Alzheimer’s drugs: donepezil, rivastigmine, and galantamine. What is acetylcholine and why does activating it improve memory and cognition?

Acetylcholine is many things to many people. If you’re a doctor, you might use neostigmine, an acetylcholinesterase inhibitor, to treat the muscle disease myasthenia gravis. If you’re a terrorist, you might use sarin nerve gas, a more dramatic acetylcholinesterase inhibitor, to bomb subways. If you’re an Amazonian tribesman, you might use curare, an acetylcholine receptor antagonist, on your blowdarts. If you’re a plastic surgeon, you might use Botox, an acetylcholine release preventer, to clear up wrinkles. If you’re a spider, you might use latrotoxin, which instead forces a massive release of acetylcholine, to kill your victims – and then be killed in turn by neonicotinoid insecticides, which are acetylcholine agonists. Truly this molecule has something for everybody – though gruesomely killing things remains its comparative advantage.

But to a computational neuroscientist, acetylcholine is:

…a neuromodulator [that] encodes changes in the precision of (certainty about) prediction errors in sensory cortical hierarchies. Each level of a processing hierarchy sends predictions to the level below, which reciprocate bottom-up signals. These signals are prediction errors that report discrepancies between top-down predictions and representations at each level. This recurrent message passing continues until prediction errors are minimised throughout the hierarchy. The ensuing Bayes optimal perception rests on optimising precision at each level of the hierarchy that is commensurate with the environmental statistics they represent. Put simply, to infer the causes of sensory input, the brain has to recognise when sensory information is noisy or uncertain and down-weight it suitably in relation to top-down predictions.

…is it too late to opt for the gruesome death? It is? Fine. God help us, let’s try to understand Friston again.

In the predictive coding model, perception (maybe also everything else?) is a balance between top-down processes that determine what you should be expecting to see, and bottom-up processes that determine what you’re actually seeing. This is faster than just determining what you’re actually seeing without reference to top-down processes, because sensation is noisy and if you don’t have some boxes to categorize things in then it takes forever to figure out what’s actually going on. In this model, acetylcholine is a neuromodulator that indicates increased sensory precision – ie a bias towards expecting sensation to be signal rather than noise – ie a bias towards trusting bottom-up evidence rather than top-down expectations.
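To make “sensory precision” a bit less abstract: under the common simplifying assumption (mine, not the paper’s) that both the top-down expectation and the bottom-up sensation are Gaussian, the best combined estimate is just a precision-weighted average, where precision is 1/variance. Here’s a minimal Python sketch; the function `combine` and all the numbers are made up for illustration. Turning up sensory precision, the role acetylcholine supposedly plays, drags the estimate away from the expectation and toward the sensation.

```python
def combine(prior_mean, prior_precision, observation, sensory_precision):
    """Combine a top-down expectation with a bottom-up observation.

    Assumes both are Gaussian (a simplification for illustration).
    Precision = 1 / variance. The posterior mean is a precision-weighted
    average, so raising sensory_precision pulls the estimate toward the
    observation and away from the prediction.
    """
    posterior_precision = prior_precision + sensory_precision
    posterior_mean = (prior_precision * prior_mean
                      + sensory_precision * observation) / posterior_precision
    return posterior_mean, posterior_precision


# Hypothetical numbers: expect 0.0, sense 1.0 (arbitrary units).
for sensory_precision in (0.25, 1.0, 4.0):
    mean, _ = combine(0.0, 1.0, 1.0, sensory_precision)
    print(f"sensory precision {sensory_precision}: estimate {mean:.2f}")
```

With an expectation of 0 and a sensation of 1, the estimate moves from 0.20 to 0.50 to 0.80 as sensory precision rises.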

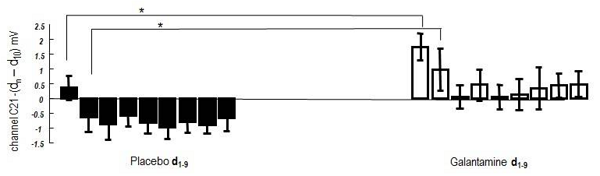

In the study linked above, Friston and collaborators connect their experimental subjects to EEG monitors and ask them to listen to music. The “music” is the same note repeated again and again at regular intervals in a perfectly predictable way. Then at some random point, it unexpectedly shifts to a different note. The point is to get their top-down systems confidently predicting a certain stimulus (the original note repeated again for the umpteenth time) and then surprise them with a different stimulus, and measure the EEG readings to see how their brain reacts. Then they do this again and again to see how the subjects eventually learn. Half the subjects have just taken galantamine, a drug that increases acetylcholine levels; the other half get placebo.

I don’t understand a lot of the figures in this paper, but I think I understand this one. It’s saying that on the first surprising note, placebo subjects’ brains got a bit more electrical activity [than on the predictable notes], but galantamine subjects’ brains got much more electrical activity. This fits the prediction of the theory. The placebo subjects have low sensory precision – they’re in their usual state of ambivalence about whether sensation is signal or noise. Hearing an unexpected stimulus is a little bit surprising, but not completely surprising – it might just be a mistake, or it might just not matter. The galantamine subjects’ brains are on alert to expect sensation to be very accurate and very important. When they hear the surprising note, their brains are very surprised and immediately reevaluate the whole paradigm.
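Here’s a toy version of the oddball setup. It assumes, as my own simplification and not Friston’s actual model, that the brain updates its expected tone by a fixed precision-weighted fraction of each prediction error, and that the size of that update stands in for the EEG response. `oddball_responses`, the tone values, and the precision of 4.0 standing in for galantamine are all hypothetical.

```python
def oddball_responses(tones, sensory_precision, prior_precision=1.0):
    """Toy learner tracking a repeated tone with a fixed precision-weighted gain.

    Each "response" is the size of the belief update on that tone, used as a
    loose stand-in for the evoked EEG signal (an analogy, not the paper's model).
    """
    gain = sensory_precision / (sensory_precision + prior_precision)
    belief = tones[0]                  # start out expecting the standard tone
    responses = []
    for tone in tones:
        prediction_error = tone - belief
        update = gain * prediction_error
        responses.append(abs(update))
        belief += update               # move the expectation toward what was heard
    return responses


standard, deviant = 0.0, 1.0           # arbitrary pitch units
tones = [standard] * 5 + [deviant] * 5
placebo = oddball_responses(tones, sensory_precision=1.0)
drug = oddball_responses(tones, sensory_precision=4.0)   # hypothetical "galantamine"
print("first deviant: ", placebo[5], drug[5])
print("second deviant:", placebo[6], drug[6])
```

In this toy, the high-precision learner responds more to the first deviant note and then, having already moved most of the way to the new tone, responds less to the second one.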

One might expect that the very high activity on the first discordant note would be matched with lower activity on subsequent notes; the brain has now fully internalized the new prediction (ie is expecting the new note) and can’t be surprised by it anymore. As best I can tell, this study doesn’t really show that. A very similar study by some of the same researchers does. In this one, subjects on either galantamine or a placebo have to look at a dot as quickly as possible after it appears. There are some arrows that might or might not point in the direction where the dot will appear; over the course of the experiment, the accuracy of these arrows changes. The researchers measured how quickly subjects shifted from the old paradigm to the new one when the meaning of the arrows changed. Galantamine enhanced the speed of this change a little, though it was all very noisy. Lower-weight subjects had a more dramatic change, suggesting a dose-dependent response (ie the more you weigh, the less effect a fixed dose of galantamine will have on your body). They conclude:

This interpretation of cholinergic action in the brain is also in accord with the assumption of previous theoretical notions posing that ACh controls the speed of the memory update (i.e., the learning rate)
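One standard way to make “the speed of the memory update” concrete is a delta rule (Rescorla-Wagner style), where an estimate moves a fraction `learning_rate` of the way toward each new outcome. This is my stand-in, not necessarily the model the paper fits. In the sketch below, a hypothetical learner tracks how often the arrows are right; to keep it deterministic, the arrows are always right for 20 trials and then always wrong.

```python
def trials_to_switch(learning_rate, n_before=20, n_after=20):
    """Count trials after a reversal until the learner stops trusting the arrows.

    validity: the learner's estimate of how often the arrows are right.
    Delta rule: validity <- validity + learning_rate * (outcome - validity).
    Returns None if the estimate never drops below 0.5.
    """
    validity = 0.5                                   # start undecided
    outcomes = [1.0] * n_before + [0.0] * n_after    # arrows right, then wrong
    for trial, outcome in enumerate(outcomes):
        validity += learning_rate * (outcome - validity)
        if trial >= n_before and validity < 0.5:
            return trial - n_before + 1
    return None


print(trials_to_switch(0.1), trials_to_switch(0.3))
```

With these settings, a learning rate of 0.3 stops trusting the arrows after 2 trials versus 6 trials at 0.1. On this reading, “galantamine enhanced the speed of this change” would look like a small upward nudge in that parameter.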

“Learning rate” is a technical term often used in machine learning, and I got a friend who is studying the field to explain it to me (all mistakes here are mine, not hers). Suppose that you have a neural net trying to classify cats vs. dogs. It’s already pretty well-trained, but it still makes some mistakes. Maybe it’s never seen a Chihuahua before and doesn’t know dogs can get that small, so it thinks “cat”. A good neural network will learn from that mistake, but the amount it learns will depend on a parameter called learning rate:

If learning rate is 0, it will learn nothing. The weights won’t change, and the next time it sees a Chihuahua it will make the exact same mistake.

If learning rate is very high, it will overfit. It will change everything to maximize the likelihood of getting that one picture of a Chihuahua right the next time, even if this requires erasing everything it has learned before, or dropping all “common sense” notions of dog and cat. It is now a “that one picture of a Chihuahua vs. everything else” classifier.

If learning rate is a little on the low side, the model will be very slow to learn, though it will eventually converge on a good understanding of its topic.

If learning rate is a little on the high side, the model will learn very quickly, but “jump around” between different understandings heavily weighted toward what best fits the last case it has worked on.
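A one-weight toy can show three of these cases. It can’t show overfitting, which needs a model with many parameters and many examples. This is plain gradient descent on the made-up loss (w - 3)**2, where the hypothetical `descend` returns the weight after each step.

```python
def descend(learning_rate, steps=10, start=0.0, target=3.0):
    """Plain gradient descent on the toy loss (w - target)**2."""
    w = start
    path = [w]
    for _ in range(steps):
        gradient = 2 * (w - target)      # derivative of (w - target)**2
        w -= learning_rate * gradient
        path.append(w)
    return path


for lr in (0.0, 0.01, 0.4, 0.95):
    print(lr, [round(w, 2) for w in descend(lr)])
```

At 0.0 the weight never moves. At 0.01 it crawls toward 3, and at 0.4 it gets there within a few steps. At 0.95 it lands on alternate sides of 3 on each step before settling. In this particular toy, any rate above 1.0 diverges entirely.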

On many problems, it’s a good idea to start with a high learning rate in order to get a basic idea what’s going on first, then gradually lower it so you can make smaller jumps through the area near the right answer without overshooting.
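The “start high, then lower it” idea can be sketched with one common schedule, a learning rate of 1/t on step t. That choice of schedule is mine; there are many others. The hypothetical learner below estimates a mean of 5 from samples that alternate between 6 and 4, a deterministic stand-in for noise.

```python
def track(samples, schedule):
    """Online estimate of a mean: w <- w + lr_t * (x_t - w)."""
    w = 0.0
    for t, x in enumerate(samples, start=1):
        w += schedule(t) * (x - w)
    return w


samples = [6.0, 4.0] * 50        # true mean 5.0 plus deterministic +/-1 "noise"
constant = track(samples, lambda t: 0.5)       # fixed, fairly high rate
decaying = track(samples, lambda t: 1.0 / t)   # starts at 1, then shrinks
print(constant, decaying)
```

The constant rate of 0.5 keeps jumping toward whichever sample it saw last and ends near 4.67. The 1/t schedule turns the update into a running average and lands on 5.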

Learning rates are sort of like sensory precision and bottom-up vs. top-down weights, in that a high learning rate says to discount prior probabilities and weight the evidence of the current case more strongly. A higher learning rate would be appropriate in a signal-rich environment, and a lower rate appropriate in a noise-rich environment.
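The signal-rich vs. noise-rich point has an exact version in the Kalman filter, which is my choice of formalism and not something from the post. Suppose a hidden quantity drifts with variance `process_var` per step and each observation carries noise variance `obs_var`. Then the optimal update is w <- w + K * (x - w), and the gain K plays the role of a learning rate. The sketch iterates the scalar variance recursion to its steady state; the function name and numbers are illustrative.

```python
def steady_state_gain(process_var, obs_var, iterations=200):
    """Steady-state Kalman gain for a scalar random-walk signal.

    process_var: how much the hidden quantity drifts per step (signal).
    obs_var: how noisy each observation is (noise).
    The gain acts as the optimal learning rate in w <- w + K * (x - w).
    """
    p = 1.0                               # posterior variance, arbitrary start
    gain = 0.0
    for _ in range(iterations):
        prior_var = p + process_var       # predict: uncertainty grows
        gain = prior_var / (prior_var + obs_var)
        p = (1.0 - gain) * prior_var      # update: uncertainty shrinks
    return gain


print(steady_state_gain(1.0, 0.1))    # signal-rich: clean observations
print(steady_state_gain(1.0, 10.0))   # noise-rich: noisy observations
```

With drift variance 1, the gain comes out around 0.92 when observations are nearly noise-free and around 0.27 when they are very noisy.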

If acetylcholine helps set the learning rate in the brain, would it make sense that cholinergic substances are cognitive enhancers / “study drugs”?

You would need to cross a sort of metaphorical divide between a very mechanical and simple sense of “learning” and the kind of learning you do where you study for your final exam on US History. What would it mean to be telling your brain that your US History textbook is “a signal-rich environment” or that it should be weighting its bottom-up evidence of what the textbook says higher than its top-down priors?

Going way beyond the research into total speculation, we could imagine the brain having some high-level intuitive holistic sense of US history. Each new piece of data you receive could either be accepted as a relevant change to that, or rejected as “noise” in the sense of not worth updating upon. If you hear that the Battle of Cedar Creek took place on October 19, 1864 and was a significant event in the Shenandoah Valley theater of the Civil War, then – if you’re like most people – it will have no impact at all on anything beyond (maybe, if you’re lucky) being able to parrot back that exact statement. If you learn that the battle took place in 2011 and was part of a Finnish invasion of the US, that changes a lot and is pretty surprising and would radically alter your holistic intuitive sense of what history is like.

Thinking of it this way, I can imagine these study drugs making the exact date of the Battle of Cedar Creek seem a little bit more like signal, so that it makes a little bit more of a dent in your understanding of history. I’m still not sure how significant this is, because the exact date of the battle isn’t surprising to me in any way, and I don’t know what I would update based on hearing it. But then again, these drugs have really subtle effects, so maybe not being able to give a great account of how they work is natural.

And what about the tradeoff? Is there one?

One possibility is no. The idea of signal-rich vs. signal-poor environments is useful if you’re trying to distinguish whether a certain pattern of blotches is a camouflaged tiger. Maybe it’s not so useful for studying US History. Thinking of Civil War factoids as anything less than maximally-signal-bearing might just be a mismatch of evolution to the modern environment, the same way as liking sweets more than vegetables.

Another possibility is that if you take study drugs in order to learn the date of the Battle of Cedar Creek, you are subtly altering your holistic intuitive knowledge of American history in a disruptive way. You’re shifting everything a little bit more towards a paradigm where the Battle of Cedar Creek was unusually important. Maybe the people who took piracetam to help them study ten years ago are the same people who go around now talking about how the Civil War explains every part of modern American politics, and the 2016 election was just the Confederacy getting revenge on the Union, and how the latest budget bill is just a replay of the Missouri Compromise.

And another possibility is that you’re learning things in a rote, robotic way. You can faithfully recite that the Battle of Cedar Creek took place on October 19, 1864, but you’re less good at getting it to hang together with anything else into a coherent picture of what the Civil War was really like. I’m not sure if this makes sense in the context of the learning rate metaphor we’re using, but it fits the anecdotal reports of some of the people who use Adderall – which has some cholinergic effects in addition to its more traditional catecholaminergic ones.

Or it might be weirder than this. Remember the aberrant salience model of psychosis, and schizophrenic Peter Chadwick talking about how one day he saw the street “New King Road” and decided that it meant Armageddon was approaching, since Jesus was the new king coming to replace the old powers of the earth? Is it too much of a stretch to say this is what happens when your learning rate is much too high, kind of like the neural network that changes everything to explain one photo of a Chihuahua? Is this why nicotine has weird effects on schizophrenia? Maybe higher learning rates can promote psychotic thinking – not necessarily dramatically, just make things get a little weird.

Having ventured this far into Speculation-Land, let’s retreat a little. Noradrenergic and Cholinergic Modulation of Belief Updating does some more studies and fails to find any evidence that scopolamine, an anticholinergic drug, alters learning rate (but why would they use scopolamine, which acts on muscarinic acetylcholine receptors, when every drug suspected to improve memory acts on nicotinic ones?). Also, nicotine seems to help schizophrenia, not worsen it, which is the opposite of the last point above. Also, everything above about acetylcholine sounds kind of like my impression of where dopamine fits in this model, especially in terms of it standing for the precision of incoming data. This suggests I don’t understand the model well enough to keep everything from blending together. All that my usual sources will tell me is that the acetylcholine system modulates the dopamine system.

(remember, neuroscience madlibs is “________ modulates __________”, and no matter what words you use the sentence is always true.)

3 comments


comment by Lukas_Gloor · 2018-10-23T21:26:47.149Z

And what about the tradeoff? Is there one?

What you mention in your second possibility ("rote, robotic way") goes in a similar direction, but I'd be worried about something more specific: difficulty with big-picture prioritization when it comes to selecting what to be interested in. I envy people who find it easy to delve into all kinds of subjects and absorb a wealth of knowledge. But those same people may then fail to be curious enough when they encounter a piece of information that really would be much more relevant than the information they usually encounter. Or they might spend their time on tasks that don't produce the most impact.

Admittedly I'm looking at this with a terribly utilitarianism-tainted lens. Probably finding it easy to be interested in many things is generally a huge plus.

But I do suspect that there's a tradeoff. If reading about the Battle of Cedar Creek felt 30% as interesting to our brains as reading cognitive science or Lesswrong or Peter Singer or whatever got people here hooked on these sorts of things, then maybe fewer of us would have gotten hooked.

comment by Benquo · 2018-10-23T20:05:06.240Z

On this model, why would tobacco be a common informal treatment for incipient schizophrenia? Seems like there might be multiple, qualitatively different, regulatory mechanisms for learning.

IME nicotine boosts the rewardingness of rewards, which is very different from e.g. what I want for deep thought where I want to be patient and avoid premature gradient-descent & need to sit with discomfort for a while. Maybe nicotine makes gamification more effective, increases positive motivation for passing tests / making progress, at the cost of reduced ability to do novel pattern recognition (which is what psychedelics seem to boost).

comment by RedMan · 2018-10-24T11:53:26.531Z

Here's a PSA, kids: https://www.ecstasydata.org/view.php?id=6629&mobile=1

Ecstasydata is a place that promotes testing your stuff before you use it, and will run GC/MS on samples you send them. Not sure if there's a nominal fee or not; I've never used the service.

This entry is a Berkeley CA submission of an 'adderall tablet' purchased on the internet, which turned out to be meth.

The popularity of gray- to black-market study drugs has apparently been recognized by dealers, who are using it to drive methamphetamine into a market (intellectual overachievers) where it ordinarily would not exist.

Smart people are usually super good at rationalizing things, like you know, addictions, so yeah this is insidious and please signal boost it if you're a nootropic user.