Geoff Hinton Quits Google

post by Adam Shai (adam-shai) · 2023-05-01T21:03:47.806Z · LW · GW · 14 comments


The NYTimes reports that Geoff Hinton has quit his role at Google:

On Monday, however, he officially joined a growing chorus of critics who say those companies are racing toward danger with their aggressive campaign to create products based on generative artificial intelligence, the technology that powers popular chatbots like ChatGPT.

Dr. Hinton said he has quit his job at Google, where he has worked for more than a decade and became one of the most respected voices in the field, so he can freely speak out about the risks of A.I. A part of him, he said, now regrets his life’s work.

“I console myself with the normal excuse: If I hadn’t done it, somebody else would have,” Dr. Hinton said during a lengthy interview last week in the dining room of his home in Toronto, a short walk from where he and his students made their breakthrough.

https://www.nytimes.com/2023/05/01/technology/ai-google-chatbot-engineer-quits-hinton.html

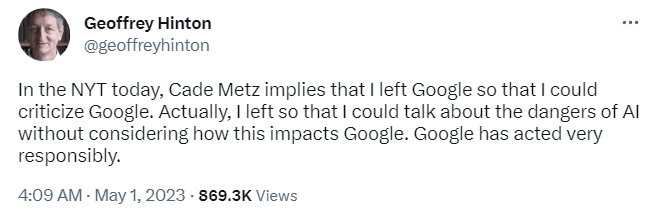

Some clarification from Hinton followed:

It was already apparent that Hinton considered AI potentially dangerous, but this seems significant.

14 comments

Comments sorted by top scores.

comment by the gears to ascension (lahwran) · 2023-05-01T21:31:49.003Z · LW(p) · GW(p)

Hinton is one of the few people who, unfortunately, definitely does not get to say "if I hadn't done it, someone else would have".

But this is based as hell. Hard alignmentpilled Hinton before Hinton-level AI?

comment by Andy_McKenzie · 2023-05-01T23:26:50.439Z · LW(p) · GW(p)

Does anyone know of any AI-related predictions by Hinton?

Here's the only one I know of - "People should stop training radiologists now. It's just completely obvious within five years deep learning is going to do better than radiologists because it can get a lot more experience. And it might be ten years but we got plenty of radiologists already." - 2016, slightly paraphrased

This still seems like a testable prediction: by November 2026, radiologists should be completely replaceable by deep learning methods, at least apart from regulatory requirements for trained physicians.

Replies from: bvbvbvbvbvbvbvbvbvbvbv, Ilio

↑ comment by bvbvbvbvbvbvbvbvbvbvbv · 2023-05-02T07:41:51.166Z · LW(p) · GW(p)

FYI, radiology is actually not mostly looking at pictures but doing image-guided surgery (for example embolisation), which is significantly harder to automate.

Same for family doctors: it's not just following guidelines and renewing scripts; a good part is physical examination.

I agree that AI can do a lot of what happens in medicine though.

↑ comment by Ilio · 2023-05-02T13:21:18.135Z · LW(p) · GW(p)

This is indeed an interesting losing* bet. He was mostly right on the technical side (yes, deep learning now does better than the average radiologist on many tasks). He was completely wrong on the societal impact (no, we still need to train radiologists). This was the same story with ophthalmologists when deep learning significantly shortened the time needed to perform part of their job: they just spent the saved time on doing more.

*16+5=21, not 26 😉

Replies from: qv^!q

↑ comment by qvalq (qv^!q) · 2023-05-03T14:00:53.275Z · LW(p) · GW(p)

"it might be ten"

Replies from: Ilio

↑ comment by Ilio · 2023-05-03T14:19:10.063Z · LW(p) · GW(p)

Yeah, he said that too. But let’s face it, it’s 2023 and there’s absolutely no sign that the heavy pressure on radiologists is starting to ease. Especially in Canada, where the “papy boom” (the aging of the baby-boom generation) is hitting hard and the new generations value family time more than dying at or from work.

But yeah, I concede it’s not settled yet. Do you want to bet friendly goodies with me?

Replies from: Ilio, qv^!q

↑ comment by Ilio · 2023-05-04T12:59:03.087Z · LW(p) · GW(p)

In my local news today:

« radiologist at the CHUM, An Tang […] chaired an artificial intelligence task force of the Canadian Association of Radiologists. […] First observation: his profession would not be threatened.

The combination between the doctor and the AI algorithm is going to be superior to the AI alone or the doctor alone. The mistakes likely to be made are not of the same [type]. »

https://ici.radio-canada.ca/nouvelle/1975944/lintelligence-humaine-artificielle-hopital-revolution

↑ comment by qvalq (qv^!q) · 2023-05-10T12:35:53.093Z · LW(p) · GW(p)

No; I agree with you.

comment by Kaj_Sotala · 2023-05-02T19:14:10.592Z · LW(p) · GW(p)

Another interview with Hinton about this: https://www.technologyreview.com/2023/05/02/1072528/geoffrey-hinton-google-why-scared-ai/

Chosen excerpts:

People are also divided on whether the consequences of this new form of intelligence, if it exists, would be beneficial or apocalyptic. “Whether you think superintelligence is going to be good or bad depends very much on whether you’re an optimist or a pessimist,” he says. “If you ask people to estimate the risks of bad things happening, like what’s the chance of someone in your family getting really sick or being hit by a car, an optimist might say 5% and a pessimist might say it’s guaranteed to happen. But the mildly depressed person will say the odds are maybe around 40%, and they’re usually right.”

Which is Hinton? “I’m mildly depressed,” he says. “Which is why I’m scared.” [...]

... even if a bad actor doesn’t seize the machines, there are other concerns about subgoals, Hinton says.

“Well, here’s a subgoal that almost always helps in biology: get more energy. So the first thing that could happen is these robots are going to say, ‘Let’s get more power. Let’s reroute all the electricity to my chips.’ Another great subgoal would be to make more copies of yourself. Does that sound good?” [...]

When Hinton saw me out, the spring day had turned gray and wet. “Enjoy yourself, because you may not have long left,” he said. He chuckled and shut the door.

comment by teradimich · 2023-05-02T06:12:33.145Z · LW(p) · GW(p)

'“Then why are you doing the research?” Bostrom asked.

“I could give you the usual arguments,” Hinton said. “But the truth is that the prospect of discovery is too sweet.” He smiled awkwardly, the word hanging in the air—an echo of Oppenheimer, who famously said of the bomb, “When you see something that is technically sweet, you go ahead and do it, and you argue about what to do about it only after you have had your technical success.”'

'I asked Hinton if he believed an A.I. could be controlled. “That is like asking if a child can control his parents,” he said. “It can happen with a baby and a mother—there is biological hardwiring—but there is not a good track record of less intelligent things controlling things of greater intelligence.” He looked as if he might elaborate. Then a scientist called out, “Let’s all get drinks!”'

Hinton seems to be more responsible now!

comment by Max H (Maxc) · 2023-05-01T21:26:07.952Z · LW(p) · GW(p)

Archive.org link: https://web.archive.org/web/20230501211505/https://www.nytimes.com/2023/05/01/technology/ai-google-chatbot-engineer-quits-hinton.html

Note, Cade Metz is the author of the somewhat infamous NYT article about Scott Alexander.

comment by UHMWPE-UwU (abukeki) · 2023-05-03T21:34:30.495Z · LW(p) · GW(p)

I think people in the LW/alignment community should really reach out to Hinton to coordinate messaging now that he's suddenly become the most high profile and credible public voice on AI risk. Not sure who should be doing this specifically, but I hope someone's on it.

Replies from: sanxiyn

↑ comment by sanxiyn · 2023-05-04T01:47:22.666Z · LW(p) · GW(p)

I note that Eliezer did this (pretty much immediately) on Twitter.

Replies from: abukeki

↑ comment by UHMWPE-UwU (abukeki) · 2023-05-04T15:05:04.009Z · LW(p) · GW(p)

Not sure if he took him up on that (or even saw the tweet reply). Am just hoping we have someone more proactively reaching out to him to coordinate is all. He commands a lot of respect in this industry as I'm sure most know.