The Brain That Builds Itself

post by Jan (jan-2) · 2022-05-31T09:42:57.028Z · LW · GW · 6 comments

This is a link post for https://universalprior.substack.com/p/the-brain-that-builds-itself?s=w

Contents

- Constraints make creative
- Bootstrapping brains
- Probably prunable
- Computerization
- Closing thoughts

Previously in this series: How to build a mind - neuroscience edition, Serendipitous connections: applying explanations from AI to the brain, The Unreasonable Feasibility Of Playing Chess Under The Influence, A Brief Excursion Into Molecular Neuroscience.

TL;DR: Shameless advertisement for a paper some colleagues and I wrote. But also some pretty pictures of brain development, and some first-principles arguments for why it's difficult to build a brain.

Constraints make creative

Originally, my motivation for getting into neuroscience was: "Building artificial intelligence is difficult, so perhaps I can learn something from looking at how the biological brain is built. Once I've figured that out, AI will be easy".

(When I read these words I can't help but add a mental "duuuuh".)

Since those innocent days, I have come to appreciate that building a biological brain is a ridiculously overconstrained problem.

- First off - even before you're done building, the brain must make itself useful. And it's not just "occasionally helping with an arithmetic problem" - I'm talking about "breathing", "eating", "coordinating limbs"... If the environment is dangerous, then "running away" or "fighting" are also on the menu. And you need to do these things reliably - lapsing means death.

- Second - every neuron only has access to local information. In particular, every neuron only receives input from a limited number of other neurons (usually < 10,000 out of ~80 billion). From that information, a neuron needs to infer what its role in the developing brain ought to be. Consequently, every solution you come up with must be "symmetric" in the sense that you can swap two neurons, and the end result must still work. (It turns out the two neurons don't even have to be from the same species).

- Third - there is no clear separation between "the thing you're building" and "the thing that is doing the building"[1]. The brain is making itself with only rough guidance from genes and the environment (see this post I wrote on the topic a while ago).

In contrast, the solution for AI appears to be (for now) "just stack more layers":

In AI, "the thing being built" and "the thing that's doing the building" are cleanly separated into "model" and "optimizer". Global information like the learning rate is readily available to all units. And even after training, there are no predators that punish lack of robustness[2].

But constraints make creative, and it seems like the aforementioned constraints turn out to have their benefits:

- First - An adversarial environment forces the brain to be robust against perturbations. Both theory and practice tell us that with robustness comes the ability to generalize to novel domains. (Intense adversarial dynamics might, in fact, explain the huge gap between monkeys and humans in terms of generality.)

- Second - A neat consequence of locality is modularity. When you try to use as little "wire" as possible to connect your neurons, locally interconnected modules tend to emerge. Beyond making things slightly more interpretable, modularity can also increase some types of robustness.

- Third - ... This one beats me.

What is the benefit of having to build yourself? I'm not sure. Let's zoom in on that.

Bootstrapping brains

What does it mean for the brain to be building itself?

As the brain is growing, it is dynamically responding to cues. Remove the input to one eye, and the visual cortex balances the loss by upregulating input from the other. Adding an additional eye results in... well, this:

When the brain does this type of rearranging, it is both the thing that "notices" that something is wrong, and it's also the thing that "is being modified".

Third-eye shenanigans are only one (illustrative) example of the dynamic nature of development. Throughout development, immature neural circuits produce activity that induces activity-dependent formation/removal of synapses, which changes the circuit, which leads to changed activity, ... The circuit is "the thing you're building," and its activity is "the thing that is doing the building".

I've briefly written about this dynamic before, but now I want to take a deeper dive because a thing I've been working on with colleagues for a while is finally out as a preprint.

Let's start by building some intuition by looking at pretty pictures. We start with one of my favorite recordings of the optic tectum of a zebrafish developing over 16 hours:

After soaking in this beauty for a bit, I would like to ask you to look closely at some of the tiny protrusions. You'll notice that the smaller protrusions tend to probe the environment a bit before settling for a direction to grow in.

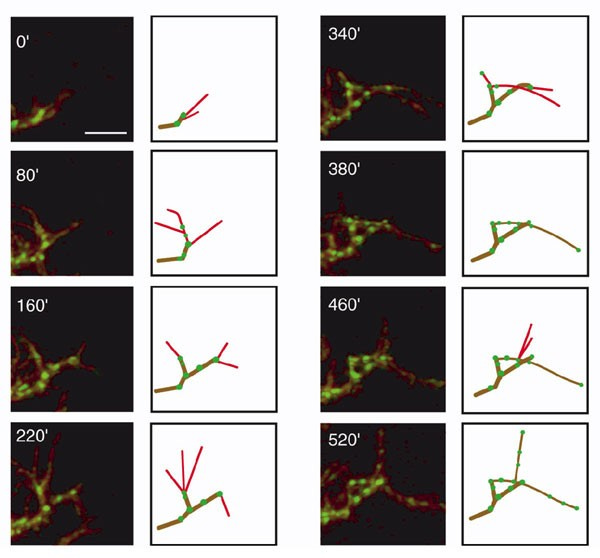

Niell and colleagues made that observation eight years earlier, but their camera wasn't as nice.

On the plus side, they got a very close look at an individual dendritic tree of a neuron in the optic tectum. And importantly, they genetically manipulated the fish so that its synapses glow green. So they were able to look closely at the interactions between dendrite growth and synapse formation.

Now, this is where the plot thickens. Synapses are the site of transmission of electric nerve impulses between two nerve cells, so synapses live at the intersection of the circuit (defined by the locations of synapses) and its activity (the signal transmitted by the synapse). Therefore, we might expect that the dynamics of synapse development should be pretty important.

And that's indeed the conclusion that Niell and colleagues arrive at. They look very closely at a developing branch, and they notice that once a synapse (a green blob) forms, the developing dendrite tends to anchor to that synapse. So it seems that during early development, the initial synapses a dendrite makes can act as a type of scaffold.

Probably prunable

But not all development is about growth! Rather, it's about growth plus selective pruning. I've written about a potential molecular mechanism underlying this dynamic before, and now we'll see it in action.

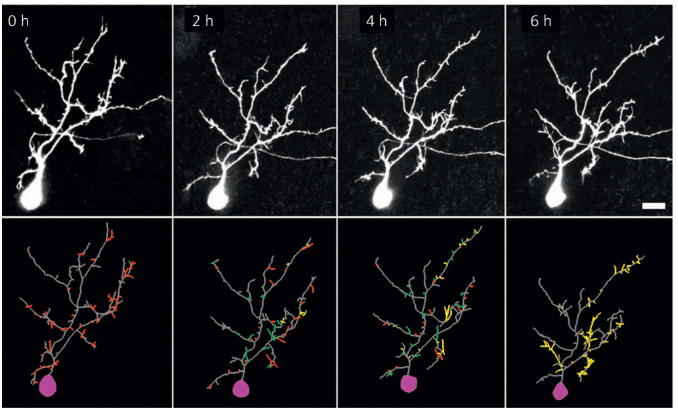

Right out of the gate - even though there can be a lot of pruning, this typically doesn't mean that the dendrite gets shorter in total[3]. Typically, retractions of branches are balanced by the addition of new branches. Here are pictures from a timelapse from a Xenopus tadpole neuron:

The bottom row shows branches that will be lost by the end of the recording in red, branches that appear and disappear throughout the recording in green, and branches that emerge and are stable until the end of the recording in yellow. Importantly, despite all the local changes, the overall length of the dendrite remains approximately the same.
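To make the "lots of local change, same total length" point concrete, here is a minimal toy sketch in Python. It is not taken from the timelapse data: the retraction probability, the number of new branches per step, and the assumption that every branch has unit length are all made up for illustration. Branches retract at random, a fixed number of new ones appear each step, and the total stays roughly constant while the original branches are almost entirely replaced.

```python
import random

def simulate_turnover(n_initial=100, n_steps=50, p_retract=0.1, n_new=10, seed=0):
    """Toy branch turnover: random retractions balanced by a fixed number of additions.

    All parameters are hypothetical; every branch is assumed to have unit length.
    """
    rng = random.Random(seed)
    branches = list(range(n_initial))   # branch IDs; IDs < n_initial are the original branches
    next_id = n_initial
    lengths = []
    for _ in range(n_steps):
        # Each existing branch retracts independently with probability p_retract.
        branches = [b for b in branches if rng.random() >= p_retract]
        # A fixed number of new unit-length branches is added each step.
        branches.extend(range(next_id, next_id + n_new))
        next_id += n_new
        lengths.append(len(branches))
    survivors = sum(1 for b in branches if b < n_initial)
    return lengths, survivors

lengths, survivors = simulate_turnover()
print("total length at the end:", lengths[-1])
print("original branches still present:", survivors)
```

With these numbers, the expected loss per step (10% of roughly 100 branches) matches the 10 branches added, which is why the total hovers around its starting value even though the individual branches keep changing.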

What determines if a branch stabilizes or gets pruned? It's kind of unclear - but let's collect the evidence.

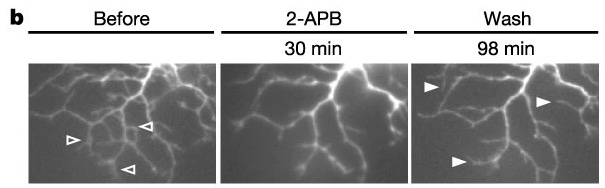

- First, here's a sequence of pictures from the retina of a baby chicken. The pictures show a dendrite in the baseline (left), when activity is blocked (middle), and when activity is allowed again.

source

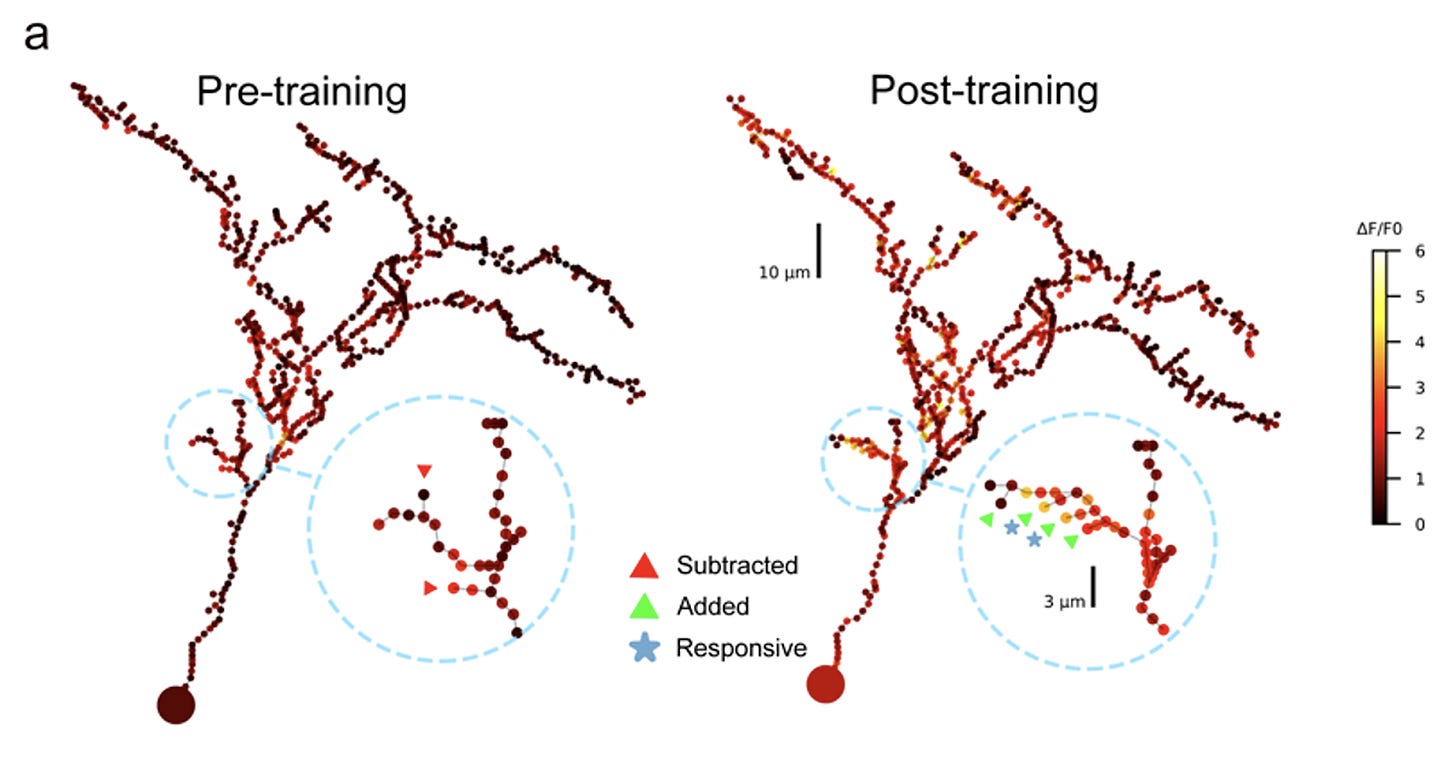

The dendrite retracts rapidly when the activity stops and then extends again when the activity resumes. So dendrite retraction can be triggered by activity-dependent mechanisms.

- Second, here's a dendrite in the optic tectum of the Xenopus tadpole. The tadpole is trained with a visual stimulus, and the dendrite shows new protrusions that form in response to the training. Importantly, these protrusions are responsive to the stimulus that the tadpole was trained with.

source

So specific activity can trigger the growth of dendrites.

- Third, the "Sherlock and Moriarty of molecular neuroscience" also get a starring role in this story. In this study, researchers incubate rat brain neurons in either BDNF (matBDNF) or proBDNF (CR-proBDNF) and find that the signaling molecules dramatically affect neuronal morphology.

(As perhaps expected,) BDNF increases dendrite length and complexity, while proBDNF leads to almost complete dendrite removal.

- Finally, we had pretty strong intuitions about how synapses ought to interact with each other from previous work. In particular, there's a bunch of neat experimental evidence that synapses that fire "out-of-sync" tend to "lose their link". Or, in less poetic terms, nearby synapses tend to compete.

Strongly driving the synapses marked in yellow via glutamate uncaging results in shrinkage of the unstimulated synapses marked with the red arrow.

Computerization

Those were the puzzle pieces we knew about (or guessed) when we started the project. It seemed plausible that synapse formation and removal might have a pretty big impact on what the dendrite looks like. And in particular, whether a synapse stabilizes or not might be determined by whether it fires in synchrony with its neighbors.

When we put these ideas into a model, we get something like the following video:

Initially, the dendrites shoot outwards and search for synaptic connections (synapses not shown in the video). At this stage, the dendrite is not picky at all - it just connects with any potential partner it finds. This results in a rapid increase in total length that nicely mirrors the increase in length observed in biology (again from a Xenopus tadpole).

However, now something interesting happens both in the model and in biology: dendrite growth tapers off and stabilizes!

As I've shown you before, this stability in total length does not mean that there is no change in the dendrite structure anymore; rather, the addition and removal of branches are balanced in a fixed point/steady state. This is also something we observe in the model:

But why is it that the dendrite "suddenly" stops growing? The answer is unclear from the biological data, but our model gives a neat explanation: Dendrite growth stops because competition between synapses is starting!

Due to the dynamics of the competition, it takes a while for synapses to "get to know their neighbors" and decide whether they are well-synchronized. But once they have figured it out, synaptic pruning starts, and the dendrite cannot simply add new synapses at random anymore. Thus, growth slows down, and many poorly synchronized synapses get removed. What remains are only well-synchronized synapses:

This way, our model can link the development of dendrites and the emergence of synaptic organization in one framework.
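For readers who like to poke at things, here is a toy sketch of the "greedy growth first, competition later" idea. To be clear, this is not the model from the preprint. It assumes a simple covariance-style Hebbian rule with a constant decay term, binary inputs, and a made-up pool of 40 potential partners in which the even-numbered ones share a common signal (the "well-synchronized" ones) and the odd-numbered ones fire independently. All names and parameters are hypothetical.

```python
import random

def grow_and_prune(n_partners=40, n_steps=4000, grow_every=5,
                   lr=0.05, decay=0.05, seed=0):
    """Toy sketch: greedy synapse addition followed by activity-dependent pruning.

    Assumed setup (not from the paper): even-numbered partners share one
    common binary signal, odd-numbered partners are independent noise.
    """
    rng = random.Random(seed)
    weights = {}        # partner index -> synaptic weight (the "circuit")
    next_partner = 0
    history = []        # number of synapses after each step
    for t in range(n_steps):
        # Greedy growth: connect to the next potential partner, no questions asked.
        if t % grow_every == 0 and next_partner < n_partners:
            weights[next_partner] = 0.5
            next_partner += 1
        # Presynaptic activity for every connected partner.
        common = rng.choice([0.0, 1.0])
        x = {i: common if i % 2 == 0 else rng.choice([0.0, 1.0]) for i in weights}
        # Postsynaptic activity is the weighted average of the inputs.
        total = sum(weights.values())
        y = sum(weights[i] * x[i] for i in weights) / total
        # Competition: synapses that co-vary with the output grow, others decay.
        for i in list(weights):
            w = weights[i] + lr * ((x[i] - 0.5) * (y - 0.5) - decay)
            if w <= 0.0:
                del weights[i]              # pruned for good
            else:
                weights[i] = min(1.0, w)    # weights saturate at 1
        history.append(len(weights))
    return weights, history

final, history = grow_and_prune()
print("peak number of synapses:", max(history))
print("surviving partners:", sorted(final))
```

In this toy, an independent synapse is indistinguishable from a synchronized one while only a handful of synapses exist, so early on nothing gets pruned. Only once enough synchronized neighbors have accumulated does the covariance term of the unsynchronized synapses fall below the decay term, and they are removed one by one. The constant decay is a design choice made for simplicity; other normalization schemes would give a similar qualitative picture.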

In the paper, we push things further and show how our model fits in with more experiments and how the resulting dendrites have some cool optimality properties that emerge from a simple synapse-centric growth rule. But for this post, it's time to wrap things up and to return to our original question: How can the brain be, at the same time, "the thing you're building" and "the thing that is doing the building"? And could this be good for anything?

Closing thoughts

I feel the strong urge to do a bunch of epistemic hedging, "theory is theory and experiment is experiment," and "I could be totally wrong about this", but I think that's pretty bad style. Instead, I’ll say that this is my current best guess, and if I’m wrong I’ll be appropriately surprised and embarrassed.

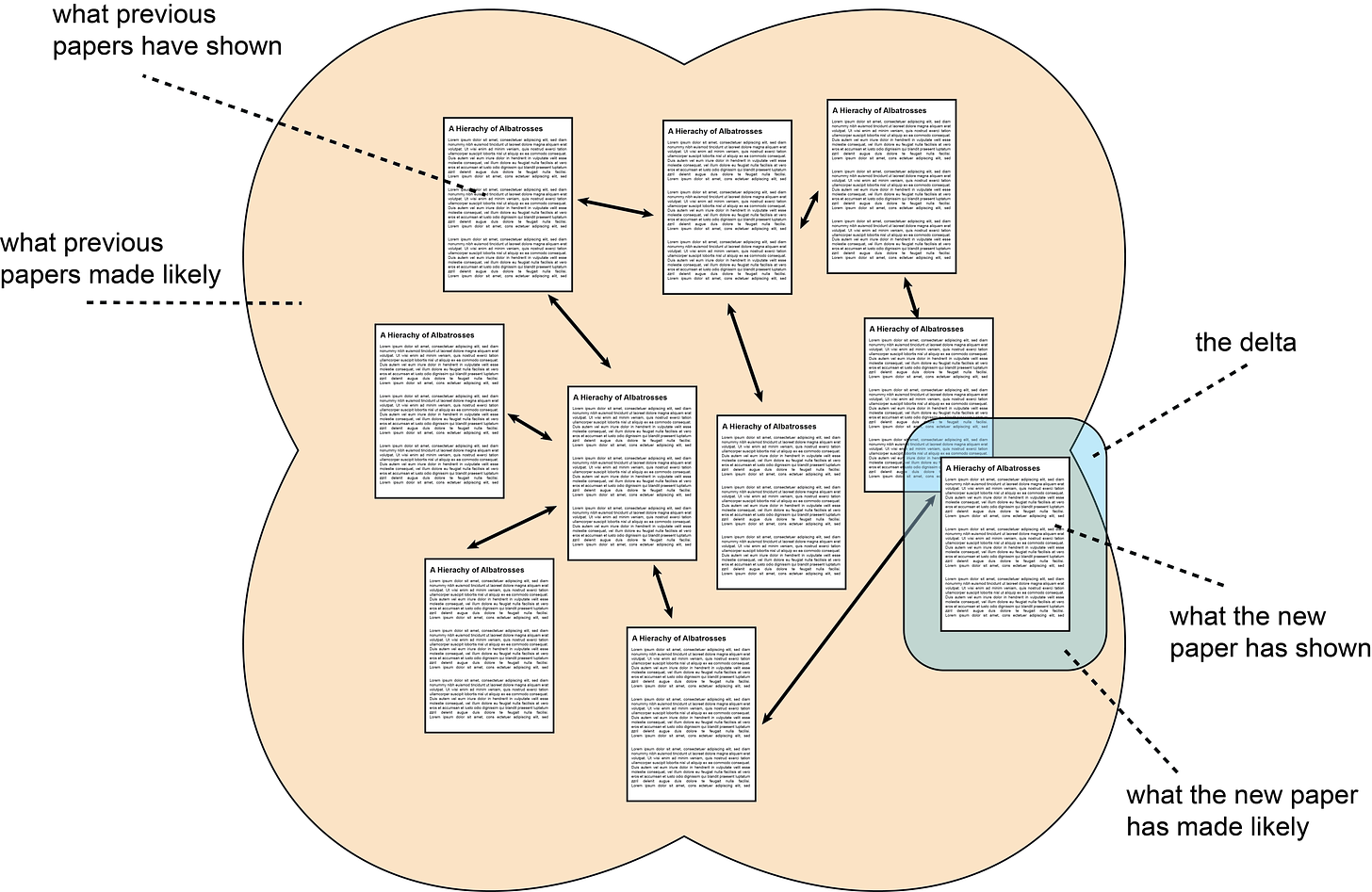

Instead of doing more hedging, I’ll stick my neck out even further and talk about “the delta”:

Schematic of “the delta” added by a (typical) academic paper. Papers you have read before are shown in the background with an orange background. Relationships between papers are indicated with double-sided arrows. The size and shape of the orange background represent the statements you believe are likely to be true. The newly added paper is shown in the foreground on a blue background. The portion that the new paper makes likely to be true (or, sometimes, demonstrates to be true) is “the delta”.

What do I believe now about “the brain that builds itself”?

- A straightforward way to obtain structured connectivity in a neural circuit is to start with a greedy strategy (connect as many things as possible), followed by an autoregressive[4] learning rule that amplifies structure and prunes away the noise.

- Through successive amplification of structure and pruning of noise, the developmental process converges to a fixed point, defined as the point where the learning rule produces no more competition.

The “benefit” of this dynamical systems solution is (again) “robustness”. As long as you make sure your learning rule keeps on doing its thing, it doesn’t matter if you get small perturbations. You’ll keep rolling towards the valley of attraction anyways.
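The robustness claim can be illustrated with the simplest stable system I can think of. This is a generic sketch, not the learning rule from the paper: it assumes a hypothetical synapse count n that gains a constant number of synapses per step and loses a fixed fraction of them. The fixed point here is add_rate / prune_frac, which is a stand-in for (and not the same thing as) the "no more competition" fixed point described above.

```python
def relax(n_start, add_rate=10.0, prune_frac=0.1, n_steps=200, kick_at=None, kick=0.0):
    """Iterate n <- n + add_rate - prune_frac * n, with an optional one-off perturbation."""
    n = n_start
    for t in range(n_steps):
        if t == kick_at:
            n += kick            # external perturbation, e.g. a sudden loss of synapses
        n += add_rate - prune_frac * n
    return n

fixed_point = 10.0 / 0.1         # add_rate / prune_frac
for n_start, kick in [(0.0, 0.0), (250.0, 0.0), (0.0, -30.0)]:
    print(n_start, kick, round(relax(n_start, kick_at=100, kick=kick), 3))
```

Whatever the starting value or the mid-run kick, the distance to the fixed point shrinks by a factor of (1 - prune_frac) per step, so all three runs end up at essentially the same place.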

Sticking my neck out even further, I can’t help but think of the natural abstraction hypothesis [LW · GW] here. Perhaps a lot of the cognitive structure that humans have does not actually require a lot of specification during development. Perhaps the fixed point of the developmental process is “natural” in the sense that any autoregressive learner with sensory input from the world[5] (and with approximately similar computational capacity) will end up with approximately the same cognitive structure.

This moves the question from the world of neuroscience into the world of… the world? What is the world out there like, and why does it have so much structure? I’ll think about it a bit and update you on my progress ;)

- ^

This is certainly true after birth, but even in the womb, it's not like there is a lot of micro-managing going on.

- ^

Unless you count the folks at Redwood Research, of course.

- ^

This image floating around the internet gets used all over the place to argue that there is something special happening in the brain of humans around the age of 5-6. The image comes originally from Seeman, 1999:

It might well be that something special happens at that age, but I cannot find a single other study that replicates the image on the left.

In fact, a pretty credible Nature Review shows an increasing complexity up to age 24. Edit: A commenter pointed out that this is 24 months and therefore not inconsistent with Seeman's observation.

Also, note that the caption of the Seeman figure talks about a decline in synaptic connections, which are not even visible on the left. And the right shows a decline in dopamine receptors, but with a very suspicious x-axis and very few data points in the most critical period.

- ^

I think there is a very elegant way in which Hebbian learning can be interpreted as simply doing autoregressive learning. Sezener et al. are pointing in this direction, and interpreting the ventral stream as a residual stream fills in some of the blanks.

- ^

6 comments


comment by dkirmani · 2022-05-31T11:22:03.475Z · LW(p) · GW(p)

This is a really engagingly-written post!

reply by benjamin.j.campbell (benjamin-j-campbell) · 2022-05-31T11:28:21.595Z · LW(p) · GW(p)

Seconded. The perfect level of detail to be un-put-down-able while still making sure everything is explained in enough detail to be gripping and well understood.

comment by Measure · 2022-06-03T14:52:18.100Z · LW(p) · GW(p)

In fact, a pretty credible Nature Review shows an increasing complexity up to age 24.

This chart in footnote [3] has age in months, not years. Complexity increase for years 1-2 doesn't contradict a peak at 5-6.

reply by Jan (jan-2) · 2022-06-03T20:08:54.410Z · LW(p) · GW(p)

Oh true, I completely overlooked that! (if I keep collecting mistakes like this I'll soon have enough for a "My mistakes" page)

comment by Ilio · 2022-06-02T13:37:00.131Z · LW(p) · GW(p)

Love it, and love the general idea of seeing more ml-like interpretations of neuroscience knowledge.

One disagreement (but maybe I should say: one addition to a good first-order approximation) is over local information: I think it includes some global information, such as sympathetic/parasympathetic level through heart beat, and that the brain may actually use that to help construct/stabilize long range networks, such as the default mode network.

reply by Jan (jan-2) · 2022-06-02T13:53:16.536Z · LW(p) · GW(p)

Yes, good point! I had that in an earlier draft and then removed it for simplicity and for the other argument you're making!