Situational Awareness Summarized - Part 1

post by Joe Rogero · 2024-06-06T18:59:59.409Z · LW · GW · 0 commentsContents

Part I: OOMs go Zoom Factors in Effective Compute Potential Bottlenecks The Data Wall Future Unhobblings The Drop-In Remote Worker Some questions I have after reading None No comments

This is the first post in the Situational Awareness Summarized [? · GW] sequence. Collectively, these posts represent my attempt to condense Leopold Aschenbrenner's recent report, Situational Awareness, into something more digestible. I'd like to make it more accessible to people who don't want to read 160 pages.

I will not attempt to summarize the introduction, which is already brief and worth a full read.

Disclaimer: As of a few weeks ago, I work for the Machine Intelligence Research Institute. Some of MIRI's basic views regarding AI policy can be found here, and Rob Bensinger wrote up a short response to Leopold's writeup here [EA · GW]. I consider Rob's response representative of the typical MIRI take on Leopold's writeup, whereas I'm thinking of this sequence as "my own personal take, which may or may not overlap with MIRI's." In particular, my questions and opinions (which I relegate to the end of each post in the sequence) don't necessarily reflect MIRI's views.

Part I: OOMs go Zoom

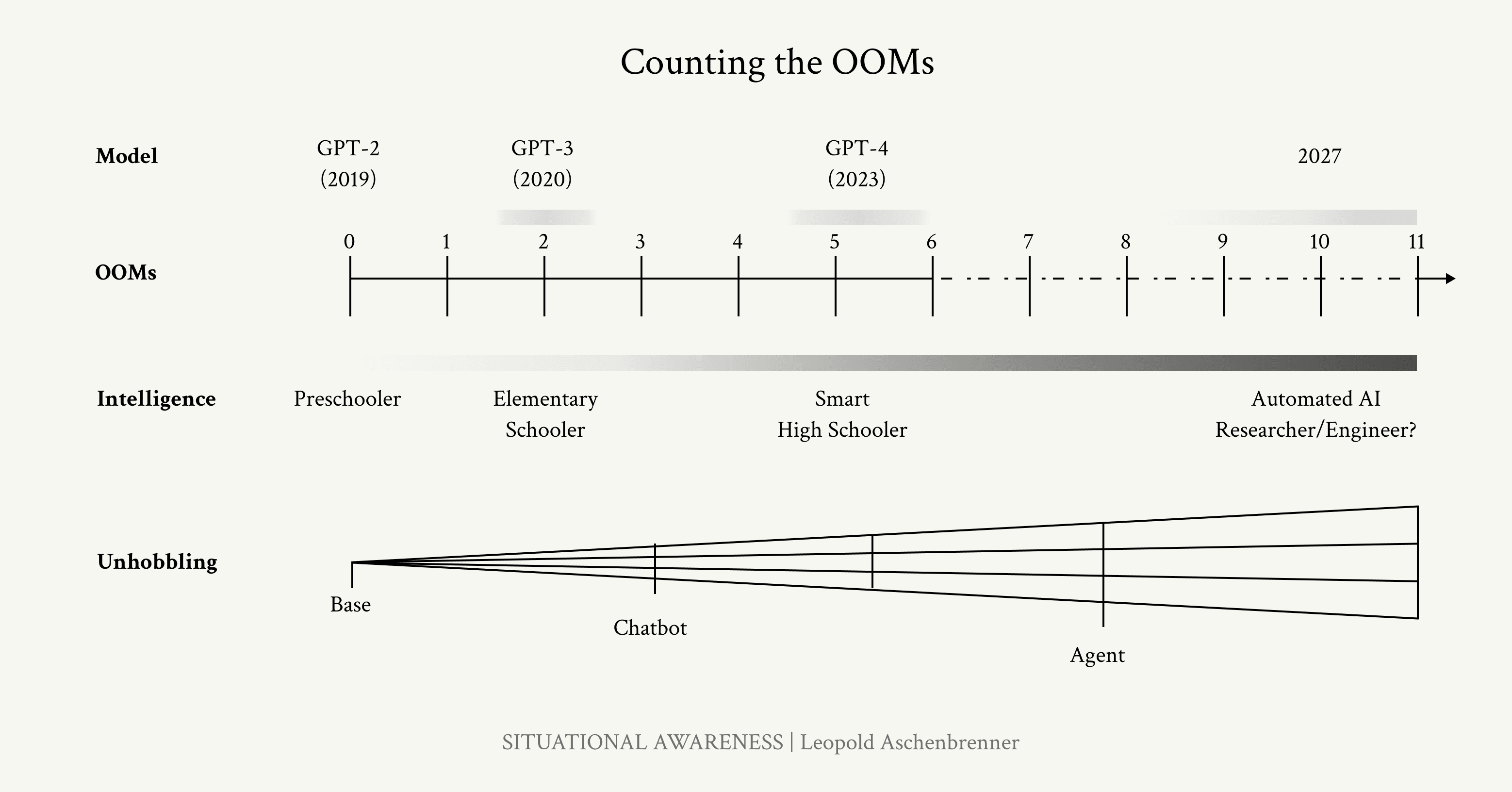

This section covers the past and future speed of AI scaling. In it, Leopold traces the rapid evolution of AI capabilities in the past four years and attempts to extrapolate this progress into the near future. His primary unifying metric is orders of magnitude (OOMs) of effective compute.

In principle, each OOM represents a tenfold increase in computational power. However, in order to address harder-to-measure factors like algorithmic progress and "unhobbling", Leopold attempts to estimate the effects of these factors on overall scaling and reports the result as effective compute.

Focused through the lens of OOMs, Leopold weaves a vivid tapestry that follows the evolution of the cute and somewhat pathetic GPT-2 into the benchmark-shattering monolith that is GPT-4. He outlines the factors that contributed to this rapid growth, and makes the case that they will probably continue to operate for at least the next few years. The conclusion? AGI is coming, and soon.

Factors in Effective Compute

How do we get there? To start, Leopold highlights three factors that add to the total "OOMs of effective compute" metric.

Compute: the approximate number of floating-point operations (FLOPs) used to train each new generation of language model. The article estimates that this has increased by 3,000-10,000x, or about 3.5-4 OOMs, from GPT-2 to GPT-4, and will probably increase another 2-3 OOMs by 2027.

Algorithmic efficiency: marginal improvements in machine learning science that allow models to accomplish similar tasks with less compute. These include advances in data use, training stack, and architecture changes like Mixture of Experts. Leopold estimates that these gains have added 1-2 OOMs of effective compute, and that we're on track to see another 1-3 OOMs by 2027.

Unhobbling: new approaches that unlock the latent capabilities of models. This is Leopold's catchall term for such paradigm-shifting developments as Chain-of-Thought prompting (CoT), reinforcement learning with human feedback (RLHF), and access to tools like web search. It also includes further enhancements in the form of scaffolding, larger context windows, and posttraining improvements. Leopold estimates that these developments magnified effective compute by about 2 OOMs, but acknowledges that the error bars on this number are very high.

Putting it all together, Leopold expects the future to look something like this:

Potential Bottlenecks

Leopold devotes considerable energy to addressing two major questions that might affect the speed of progress:

- Will we hit a wall when we run out of data?

- Will unhobbling gains continue to scale?

The Data Wall

In training, Llama 3 grazed on approximately the entire useful corpus of Internet text (~15 trillion tokens). Chinchilla scaling laws suggest we need twice as much data to efficiently train a model twice as large. But after digesting a meal of that size, what's left to feed our hungry herd of next-gen LLMs? Are we, against all prior expectations, actually going to run out of internet?

Leopold thinks not. He offers two main arguments for why the data wall might be a surmountable obstacle:

- Insiders like Dario Amodei of Anthropic seem optimistic

- Much deeper engagement with high-quality data is possible

To illustrate the second point, Leopold points to in-context learning and self-play. Current LLMs, he argues, merely skim everything we feed them, like a college student speed-reading all their textbooks in the first month of school. What if we pointed LLMs at a smaller quantity of high-quality data, and gave them ways to reflect and study the content, like a student slowly pondering the problems in a math textbook? AlphaGo learned most of its tricks by playing against itself; could future models get similar gains through self-study?

Leopold also points out that, even if the data wall is climbable, methods of overcoming it might prove to be highly protected and proprietary secrets. This might lead to increased variance in the capabilities of different AI labs, as each makes and hoards a different set of breakthroughs for working with limited data.

Future Unhobblings

Despite recent gains, Leopold argues, AI is still very hobbled today. He expects major overhangs to be unlocked in the near future. Some key examples include:

- "Onboarding" models, as one does with new hires, by introducing a particular set of documents to their context windows.

- Unlocking "System II thinking", in the Kahneman sense, giving models more time (or token-space) to think logically, deeply, and reflectively before they are compelled to produce an answer.

- Using computers the way humans do (currently GPT-4o can do web search, but can't use Microsoft Excel).

Leopold does think that these and other bottlenecks will slow progress eventually. Increases in spending, hardware specialization, and low-hanging algorithms can only take us so far before we (presumably) consume our overhang and slow down. But in the next few years at least, we're on track to see rapid and potentially transformative growth. Which brings us to...

The Drop-In Remote Worker

AIs of the future, Leopold suggests, may look less like "ChatGPT-6" and more like drop-in remote workers - millions of fully autonomous, computer-savvy agents, each primed with the context of a given organization, specialty, and task set. And it could be a sudden jump, too; before models reach this point, integrating them into any sort of company may require a lot of hassle and pretraining. But after they reach the level of remote human workers - a level Leopold suspects is coming fast - they will be much easier to employ at scale.

Astute readers will notice that this particular scenario is not so much an endpoint as a recipe for truly staggering progress. The implications are not lost on Leopold, either; we'll cover what he thinks will come next in Part II.

Some questions I have after reading

Below are some questions and uncertainties I still have after reading this section.

- Is "insiders are optimistic" really a good argument about the data wall? I have a hard time imagining a world in which the CEO of Anthropic says "sorry, looks like we've hit a wall and can't scale further, sorry investors" and keeps his job. I consider insider optimism to be a very weak argument - but the argument about deeper engagement feels much stronger to me, so the conclusion seems sound.

- The idea that there will be more variance in AI labs due to the data wall seems to make a lot of assumptions - most dubiously, that the labs won't get hacked and their data workarounds won't leak or become mainstream. Leopold does dedicate an entire section to improving lab security, but it's not good enough yet to make secure trade secrets a safe assumption. (40% / moderate confidence)

- System II thinking seems really close to the sort of self-referential thought that we identify with consciousness. Is this how we end up with conscious AI slaves? (<10% / very low confidence)

- By counting both algorithmic effectiveness and unhobbling, is the section on effective compute double-counting? It seems like the "algorithmic effectiveness" section is using purely mathematical scaling observations; could some of the observed algorithmic gains be due to unhobbling techniques like CoT and RLHF? If so, how much does this affect the results?

- Leopold guesses that we might see "10x" faster progress in AGI research once we have millions of automated researchers running at the speed of silicon. In other words, we might make 10 years of progress in 1 year. Why so low? It seems like progress could spike much, much faster and more unpredictably than that, especially if improvements in the first month make the second month go faster, and so on. This drastically changes the timeline. Maybe this guess was calibrated to appeal to skeptical policymakers? Perhaps we'll see more in Part II?

0 comments

Comments sorted by top scores.