Focusing your impact on short vs long TAI timelines

post by kuhanj · 2023-09-30T19:34:39.508Z

Summary/Key Points

I compare considerations for prioritizing impact in short vs. long transformative AI timeline scenarios. Though lots of relevant work seems timelines-agnostic, this analysis is primarily intended for work whose impact is more sensitive to AI timelines (e.g. young-people-focused outreach and movement building).

Considerations that favor focusing on shorter timelines

- Neglectedness of short-timelines scenarios

- Fewer resources over less time: In shorter timeline worlds, fewer resources in total ultimately go to TAI existential safety (x-safety). More time means more work can occur, the total amount of work per year will probably rise (due to concern and interest rising), and the ability of currently concerned individuals to contribute will likely increase over time (due to accruing relevant expertise and other resources). Fewer total resources likely increases the marginal value of a given amount of resources.

- You can’t contribute to past timelines in the future: It is much less costly to mistakenly think the deadline for a project is earlier than the real deadline and do things too early than to miss the deadline due to mistakenly thinking it was later.

- Relatedly, allocating resources proportional to each potential timeline scenario entails always disproportionately focusing on shorter timelines scenarios.

- Higher predictability and likelihood of useful work: More time means more uncertainty, so it’s harder to make plans that are likely to pan out.

- (Uncertain) Work useful for shorter-timelines is more likely useful for longer timelines than vice versa

Considerations that favor focusing on longer timelines

- Many people concerned about TAI x-safety are young and inexperienced: This means many impactful options are not tenable in shorter-timeline scenarios, and longer timelines offer more time to develop relevant skills and land impactful roles.

- However, the consideration points in the opposite direction for older and more experienced people concerned about TAI x-safety.

- Intractability of changing shorter timelines scenarios

- One reason is that many valuable projects take a lot of time, and many projects’ impact increases with time.

- This is particularly true in research and policy, and it suggests that longer-timelines scenarios are more tractable. Also, compounding returns benefit from more time (e.g. investments for AI safety).

Unclear, Person-Specific, and Neutral Considerations

- Probability distribution over TAI Timelines - Your plans should look pretty different if you have 90% credence in TAI by 2030 vs 90% credence in TAI after 2070.

- Variance and influenceability of timelines scenarios, not their expected value, are what is decision-relevant.

- Personal considerations like age, skills, professional background, citizenship, values, finances, etc.

- Take-off speeds + (Dis)continuity of AI progress: Fast take-offs, correlated with shorter timelines, might mean increased leverage for already concerned actors due to more scenario predictability pre-discontinuity, but less tractability overall.

- The last-window-of-influence is likely in advance of TAI arrival: This is due to factors like path-dependence, race dynamics, and AI-enabled lock-ins. This means the relevant timelines/deadlines are probably shorter than TAI timelines.

Overall, the considerations pointing in favor of prioritizing short-timelines seem moderately stronger than those in favor of prioritizing long-timelines impact to me, though others who read drafts of this post disagreed. In particular, the neglectedness considerations seemed stronger than the pro-longer-timeline-focus ones, perhaps with the exception of the impact discount young/inexperienced people face for short-timelines focused work - though even then I’m not convinced there isn’t useful work for young/inexperienced people to do under shorter timelines. I would be interested in readers’ impressions of the strength of considerations (including ones I didn’t include) in the comments.

Introduction and Context

“Should I prioritize being impactful in shorter or longer transformative AI timeline scenarios?”

I find myself repeatedly returning to this question when trying to figure out how to best increase my impact (henceforth meaning having an effect on ethically relevant outcomes over the long-run future).

I found it helpful to lay out and compare important considerations, which I split into three categories: considerations that

- favor prioritizing impact in short-timeline scenarios

- favor prioritizing impact in long-timeline scenarios

- are neutral, person-specific, or unclear

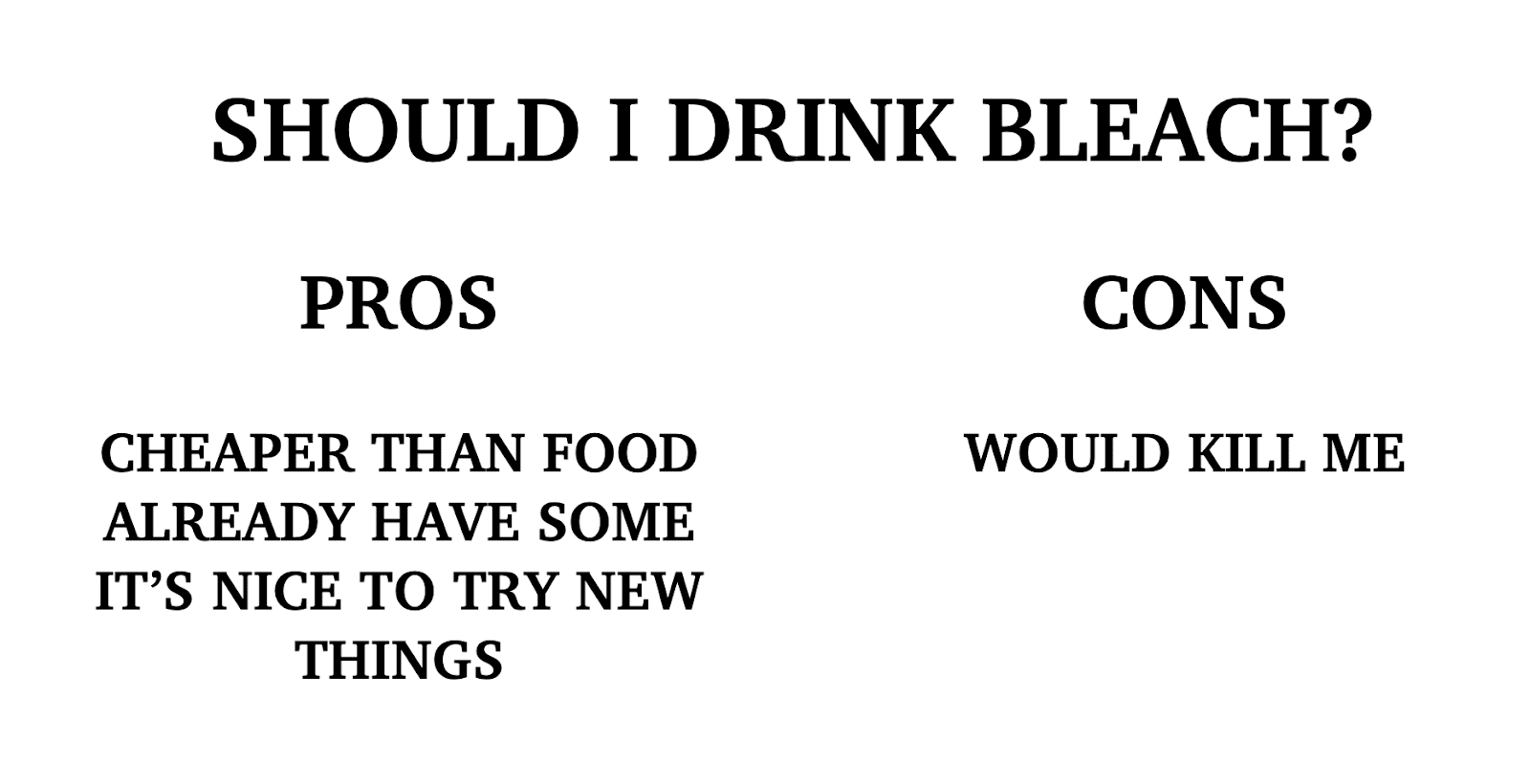

As with all pros and cons lists, the strength of the considerations matters a ton - which gives me the opportunity to share one of my favorite graphics (h/t 80,000 Hours):

Caption: Pro and con lists make it easy to put too much weight on an unimportant factor.

Without further ado, let’s go through the considerations!

Considerations favoring focusing on shorter-timelines

Neglectedness of short-timelines scenarios

Fewer resources over less time:

People currently concerned about TAI existential safety will probably account for a much larger proportion of the relevant work being done in shorter-timeline scenarios than in longer ones. Longer timelines imply more time for others to internalize the importance and imminence of advanced AI and focus more on making it go well (as we’re starting to see with e.g. the UK government).

- Much of the field working on TAI x-safety is young and relatively inexperienced, which magnifies the neglectedness (of quality-adjusted resources) of the short timelines scenarios.

- More straightforwardly, the amount of time available to do useful work is higher in longer timeline scenarios, which amplifies the benefits of other resources like research, funding, policy focus, the career capital of young people, etc.

You can’t contribute to past timelines in the future

- It is generally much more costly to make plans assuming a certain deadline (or timelines distribution) and realize later that the deadline is earlier than previously thought than to make the opposite mistake. In the latter case, we (and the world more broadly) have more time to correct for our mistaken beliefs and predictions.

- As a student, it is much less costly to mistakenly think the deadline for a project is earlier than the real deadline than to miss the deadline due to mistakenly thinking it was later (all else equal, messy opportunity cost considerations aside).

- Interesting fact: Since March 2023, the metaculus median AGI timeline has gotten one year shorter per month (and around

- Ability to update on evidence over time:

- Evidence over time will update our timeline estimates for TAI: Government and frontier lab actions, the capabilities of future models, potential bottlenecks (or lack thereof) from data, hardware production and R&D, algorithmic progress, and more.

- Counter-consideration: Important work sometimes requires lots of time to plan and execute, so there are instances where over-prioritizing resources on short-timeline scenarios leads to regret (for not starting long-calendar-time-requiring long-timelines focused work early enough).

Allocating resources proportional to each potential timeline scenario entails always disproportionately focusing on shorter timelines scenarios

- As a toy example, say a central TAI safety planner had to commit to their best guess distribution for TAI timelines now, and allocate future resources accordingly. Say their distribution was two discrete spikes with equal probability (50%) on TAI 10 and 20 years from now (2033 and 2043), and they were determining how to allocate the work hours of a fixed set of 500 people (focused on TAI safety) across timeline scenarios over time. Assume these 500 people are the only ones who will ever work on TAI safety. From 2033 to 2043, conditioned on 2033 timelines not being realized, all 500 people’s effort would go to 2043 planning. Therefore, to end up with work hours split equally across the two scenarios (matching their equal probabilities), the planner would have to allocate 100% of the group’s time over the next ten years to the 2033 scenario. Putting 50% of resources into 2033 scenarios and 50% into 2043 scenarios, despite that being the planner’s current probability split, would leave the 2033 scenario with only a third as many work hours as the 2043 scenario.

- This toy example simplifies many important dynamics, but removing the simplifications does not always point in favor of less extreme prioritization. Examples:

- It is much more likely than not that the amount of resources going towards TAI x-safety will increase over time, rather than stay constant or decrease.

- In my toy example setup, if 50% of your probability mass is on a first interval that is shorter than the interval that follows it, it is impossible to allocate person hours in proportion to each scenario’s probability, even by spending 100% of the first interval on the early scenario. On the flip side, if the second interval were shorter, non-zero resources would go towards the longer-timeline scenario during the first interval.

- Money is more flexible than person hours (I can spend all my money now and not have any later, unlike my work hours barring burnout/worse).
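The arithmetic behind the toy example can be sketched in a few lines of Python. This is only an illustration under the toy example's own assumptions (a constant workforce, two discrete scenarios, and all second-interval hours going to the later scenario); the function names are hypothetical and the workforce size cancels out of the required share. If a fraction f of first-interval hours targets the early scenario, with interval lengths a and b and early-scenario probability p, then proportionality requires f·a / ((1 − f)·a + b) = p / (1 − p), which gives f = p·(a + b) / a.

```python
def scenario_hours(people, early_share, first_years, second_years):
    """Person-years aimed at (early, late) scenarios in the toy model.

    Assumes a constant workforce and that all work in the second
    interval goes to the late scenario (the early one has passed).
    """
    early = people * first_years * early_share
    late = people * first_years * (1 - early_share) + people * second_years
    return early, late


def required_early_share(p_early, first_years, second_years):
    """Share of first-interval work needed for hours proportional to probability.

    Derived from f*a / ((1 - f)*a + b) = p / (1 - p), i.e. f = p*(a + b)/a.
    A result above 1 means proportional allocation is impossible.
    """
    return p_early * (first_years + second_years) / first_years


if __name__ == "__main__":
    # The post's example: 50/50 on TAI in 10 or 20 years, 500 people.
    f = required_early_share(0.5, 10, 10)
    print(f, scenario_hours(500, f, 10, 10))
    # A naive 50/50 split of the first decade under-resources the early scenario.
    print(scenario_hours(500, 0.5, 10, 10))
    # Second interval longer than the first: the required share exceeds 100%.
    print(required_early_share(0.5, 10, 20))
    # Second interval shorter than the first: some early work goes to the late scenario.
    print(required_early_share(0.5, 20, 10))
```

Under these assumptions, the required share of present work on the early scenario is always larger than that scenario's probability, because the later scenario automatically receives all work done after the early date has passed.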

Higher predictability and likelihood of useful work

- It is easier to predict important details of scenarios conditioned on shorter timelines. For example:

- Which entities are able to gather the funding, hardware, data, ML/engineering expertise, and other resources required to build TAI.

- Relatedly, how many (meaningfully distinct) actors have access to cutting-edge TAI?

- Who is in influential roles in relevant institutions (I have much less uncertainty over who the president of the US is in the year 2024 than 2050).

- How different TAI is from the most advanced AI systems of today - in terms of how it is trained, how it gets deployed, what effect precursors to TAI have had on society in the lead-up, and more.

- How much data, hardware, and power are needed to train TAI?

- What will the training procedure for TAI look like?

- How much algorithmic progress happens between now and TAI?

- How continuous has AI progress been, and over what timescale?

- Predictability makes it much easier to do work that ends up being useful. Many people working in AI safety think that most early work on AI alignment is very unlikely to be useful, largely because people were pretty uncertain about what properties advanced AI systems would have.

(Uncertain) Work useful for shorter-timelines is more likely useful for longer timelines than vice versa.

- Most work and skill sets that seem useful for short timelines are also useful for long timelines, but not vice versa. Insofar as this asymmetry exists, this might be a compelling reason to prioritize work that looks good under short timeline scenarios.

- Examples of short-timelines focused work that is also useful for longer timelines:

- Lots of technical AI safety research (e.g. scalable oversight, interpretability, etc)

- (US) Domestic AI regulation

- Frontier AI lab governance

- Examples of work/career plans potentially impactful in longer-timelines that look substantially worse in short timelines:

- Undergraduate and high-school outreach/education (which is what prompted me to write this post in the first place)

- Resource-intensive international AI regulation regime establishment

- Ladder-climbing careers (with little room for impact before landing senior roles): E.g. running for elected office (starting at the local level and building up)

- Getting citizenship in a country more relevant for TAI x-safety (which usually takes many years)

- Counterexample: Work assuming TAI using the current deep-learning paradigm might be rendered moot due to insights that allow for more powerful performance using a significantly different set-up.

Considerations favoring focusing on longer-timelines

Many people concerned about TAI x-safety are young and inexperienced

- Younger and more inexperienced people have more impactful career paths available to them over longer timelines, since relevant experience, expertise, and age are required for many impactful roles (e.g. senior roles in policy/government and labs, professors, etc.). Longer timelines mean more time to build career capital, relevant skills, networks, etc.

- Counter-consideration: While the above applies to many people currently interested in TAI existential safety, the consideration points in the opposite direction for older and more experienced people.

Intractability of changing shorter timelines scenarios

- There is not much that people who are not exceptionally well-positioned (e.g. people in senior positions in labs, governments, and their advisors) can do under extremely short-timeline scenarios (e.g. if in a year’s time a frontier lab has TAI and expects untrusted competitors to release dangerous models within weeks/months).

- Many valuable projects take lots of time, and many projects’ impact increases with time.

- Insofar as there are lots of important things that should exist/happen in the world but don’t/haven’t yet, many of these will take a lot of time to develop (e.g. robust international coordination or AI training run surveillance/auditing infrastructure).

- Example: Some compute governance plans will require lots of time to implement, and are more useful in scenarios where TAI requires large quantities of compute, which means longer timelines.

- Compound returns have more time to take effect in longer timeline scenarios - e.g. from investing, education/outreach/movement building work, evergreen content generation, etc.

Neutral, person-specific, and unclear considerations

Probability distribution over TAI Timelines

- If I were 90% confident that TAI would arrive before 2030, or after 2050, this would significantly inform what kinds of work and timeline scenarios to prioritize.

Variance and Influenceability (usefulness of working on) of different timelines scenarios

- Importantly, the expected value of the future (by your lights) conditioned on a certain timelines prediction (including, most saliently, the likelihood of an existential catastrophe like AI takeover conditioned on TAI timelines), should not influence your decision-making outside of how it affects the scenario’s influenceability.

- Example: How good or bad you expect the future to be conditioned on 2025 TAI timelines is not decision-relevant if you are confident that there is nothing you can do to change the trajectory (in an ethically relevant way).

- There likely should be a correlation between estimates of the probability of an existential catastrophe in different scenarios, and their influenceability, where intuitively probabilities closer to 50% are easier to move than ones near 0 and 100%.

- Which timelines have higher variance in their outcomes?

- More time allows for more random (or a-priori-hard-to-predict) and morally relevant events to occur, which would suggest the potential trajectories of longer timeline scenarios are higher variance. This means more can be done to influence them.

- On the other hand, powerful incentives and dynamics (e.g. entropy, evolution, prisoners’ dilemmas, tragedies of the commons) have more time to take effect and more reliably produce certain outcomes on longer timescales.

- The benefit of impartial altruism under different timeline scenarios

- Maybe the highest-leverage interventions in longer-timeline scenarios are better-suited for impartial altruistically motivated actors, as they are less likely to be addressed by others by default. For example, in longer timeline scenarios, intent-aligned TAI might be more probable, meaning there is more value in steering the future with cosmopolitan impartial values. In shorter timelines, most of the leverage may come from preventing an existential catastrophe, a concern not unique to altruistic-impact-motivated individuals.

Personal considerations

- The below list includes many considerations that affect the value of focusing on different timeline scenarios.

- Age (as discussed above)

- Citizenship/Work authorization - Having citizenship/work authorization in countries that are likely to be most relevant to transformative AI development might push in favor of focusing on shorter timelines, and vice versa.

- Network - Having people in your network who are well-suited to contribute to shorter/longer timelines scenarios might be a reason to focus on one over the other (e.g. having many connections in the US government or frontier AI labs makes quick impact easier, vs. knowing many talented high school/university students makes longer-term impact easier)

- Background, skills, strengths + weaknesses - Your skillset might be especially well-suited to some timelines (e.g. experience in high school/undergraduate-student movement building is likely more valuable in longer-timeline scenarios)

- Kinds of work and specific jobs you could enjoy and excel at

- Financial, geographic, and other constraints

- Values and ethics - especially as they relate to evaluating the influenceability of different scenarios, and at a meta-level how much you want to prioritize impact over other factors when deciding career plans.

- Many others

Takeoff speeds/Continuity of AI progress

- Discontinuous progress/fast take-off scenarios are correlated with shorter timelines (especially insofar as the period of time where AI has started significantly improving and influencing the world has already started). Fast take-off scenarios might mean increased leverage for people already concerned about TAI due to more predictability pre-discontinuity (if e.g. it’s hard to predict how a slow-takeoff, slowly-increasingly-AI-influenced world looks over time and how this influences the long-term future). However, they might be less tractable overall since discontinuities are hard to anticipate and prepare for.

- For example, many plans involve leveraging AI warning shots, which are less likely and less frequent in faster take-off scenarios with more discontinuous AI progress.

The last-window-of-influence is likely in advance of TAI arrival

- There are often differences between when an event occurs, and when an event can be last influenced.

- For example, consider the event of the next US presidential inauguration, scheduled for January 20, 2025. If it were impossible to engage in election fraud, the last day to influence which party would be in charge starting Jan 20th, 2025 would be election day (November 5, 2024).

- In the context of transformative AI, I recommend reading Daniel Kokotajlo’s post on the point-of-no-return for TAI. One dynamic from the post I want to highlight in particular - “influence over the future might not disappear all on one day; maybe there’ll be a gradual loss of control over several years. For that matter, maybe this gradual loss of control began years ago and continues now... We should keep these possibilities in mind as well.”

- Path-dependence, race dynamics, AI-enabled lock-ins and other factors might mean the amount of time left to influence trajectories is much less than the time left until TAI is deployed, which amplifies all the above considerations.

- Counter-consideration: Many impact-relevant interventions might be after the arrival of TAI - e.g. determining how transformative AI should be deployed and how resources should be allocated in a post-TAI world.

Conclusion

Overall, the considerations pointing in favor of prioritizing short-timelines seem moderately stronger to me than those in favor of prioritizing long-timelines impact, though others who read drafts of this post disagreed. In particular, the neglectedness considerations seem stronger to me than the pro-longer-timeline considerations. The main exception that comes to mind is the personal fit consideration for very young/inexperienced individuals - though I would still guess that there is lots of useful work for them to do in shorter timeline worlds (e.g. operations, communications, work in low-barrier-of-entry fields). I might expand on kinds of work that seem especially useful under short vs. long timelines, but other writing on what kinds of work are useful exists already - I’d recommend going through it and thinking about what work might be a good fit for you and seems most useful under different timelines.

There is a good chance I am missing many important considerations, and reasoning incorrectly about the ones mentioned in this post. I’d love to hear suggestions and counter-arguments in the comments, along with readers’ assessment of the strength of considerations and how they lean overall.

Acknowledgements

Thanks to Michael Aird, Caleb Parikh, and others for sharing helpful comments and related documents.
