Reality Testing

post by Ben Turtel (ben-turtel) · 2024-07-13T15:20:32.147Z · 1 comment

This is a link post for https://bturtel.substack.com/p/reality-testing


In the weeks since Biden’s disastrous debate performance, pundits have theatrically expressed surprise at his apparent cognitive decline, despite years of clear and available evidence in the form of video clips, detailed reports, and fumbled public appearances.

This is far from the first popular narrative to persist in the face of obvious counterevidence. Claims of widespread voter fraud in 2020 continue despite losing every court case. Lab leak theories were brushed aside in favor of zoonotic transmission, ignoring every signal of plausibility. Climate change denial endures despite ever-rising temperatures. Inflation is blamed on greedy gas stations despite years of massive fiscal stimulus and warnings from prominent economists. The examples abound.

It's been discussed ad nauseam that reaching consensus on trustworthy information sources is more difficult than ever. Polarized factions hold not only different opinions, but also different facts. Even the definitions of words, and which words to use for what, seem up for debate.

I’m not here to argue whether misinformation is a greater problem now than in the past. Personally, I’m not convinced that information overall is any less reliable than it used to be – after all, people were burning witches not too long ago.

However, the information landscape has diversified dramatically. Decades ago, residents in the same town watched the same news and read the same papers. Now, individuals in the same household might consume entirely non-overlapping information diets. We have much more choice in which information to consume, leading to vastly different understandings of the world.

In our increasingly online world, not only has access to information changed, but so has the process of learning who to trust and how we update our beliefs. The internet excels at measuring human reactions to narratives, but struggles to capture the accuracy of those narratives. As our lives become more digital, our beliefs are unwittingly shaped more by clicks, likes, and shares, and less by real-world outcomes.

The balance between Empirical Feedback and Social Feedback is shifting.

Two Types of Feedback

Our beliefs are calibrated by two kinds of feedback.

Empirical Feedback is when your beliefs are tested by the real world through prediction and observation. For example, dropping a rock and watching it fall validates your belief in gravity. Defunding the police and measuring the change in crime tests progressive justice theories. Editing and running code tests whether your change works as expected. Returns on your stock pick validate or invalidate your investment hypothesis.

Social Feedback is when other people react to your beliefs: a friend’s horrified reaction when you question affirmative action policies; a VC agreeing to invest $1M based on your startup idea, or wealthy socialites writing a check for your nonprofit; applause after a speech, likes on Twitter, upvotes on YouTube, or real votes in a congressional campaign.

Only empirical feedback directly measures how accurately your beliefs map onto reality. Social feedback measures how much other people like your beliefs.

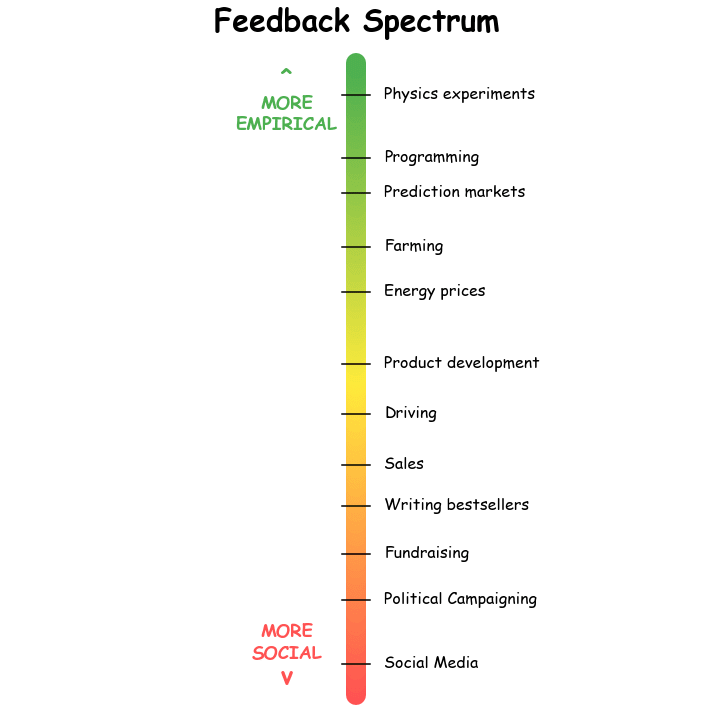

This is a spectrum, and most people most of the time receive a combination of the two. Physics experiments check if your equation accurately predicts observations, but the story you tell with collected data can be more or less compelling in peer review. Investors receive empirical feedback in the form of price changes and returns, and social feedback when raising money for their fund. Driving a car badly might result in a crash (empirical feedback) or just annoyed honks from other drivers (social feedback).

Here’s a highly unscientific illustration of where different activities fall between empirical and social feedback:

You can make empirical predictions driven by social phenomena – 'GameStop is going to the moon!' – or receive social feedback about empirical predictions – 'How dare you suggest that women are physically weaker than men!' You can accurately predict the popularity of an inaccurate prediction, or make an inaccurate prediction that is nevertheless wildly popular. The key question is whether the accuracy of the prediction or its appeal is being tested.

Both kinds of feedback are critical for learning. Much of our early development is shaped by social feedback, such as approval from one’s family, grades in school, or performance reviews. Humans' ability to transmit and acquire knowledge without direct experience is a superpower.

Social feedback is a very useful proxy, but only empirical feedback ultimately tests how well our beliefs reflect reality. Unfortunately, it's impossible for most of us to receive direct empirical feedback about most things, as we can’t all run our own scientific experiments or verify every piece of reporting.

For most of human history, the necessity of interacting with the real world kept at least some of our beliefs in check. Most of our time and work was spent directly interacting with the world (farming, gathering, laboring), and we felt the direct consequences when our beliefs were wrong. A badly planted seed won’t grow. Poorly built shelters collapse.

Today many of us are farther away from ground truth. The internet is an incredible means of sharing and discovering information, but it promotes or suppresses narratives based on clicks, shares, impressions, attention, ad performance, reach, drop-off rates, and virality, all of them metrics of social feedback. As our organizations grow larger, our careers are increasingly beholden to performance reviews, middle managers' proclivities, and our capacity to navigate bureaucracy. We find ourselves increasingly calibrated by social feedback and more distant from direct validation or repudiation of our beliefs about the world.

Social Feedback can be Hacked

As our model of the world is increasingly calibrated with social feedback, the empirical guardrails on that model are becoming less effective. The risk of going off the rails into unchecked echo chambers is rising.

Moreover, unlike empirical feedback, social feedback can be hacked.

Social feedback as a means to mass delusion isn’t new. Religions have long used social pressure or unverifiable metaphysical threats, like eternal damnation, to propagate beliefs. Totalitarian regimes enforce conformity through violence; apparently North Korea’s Kim Jong Un never uses the bathroom. The difference today is that many of our delusions, at least in the USA, don’t rely on forceful suppression or unfalsifiable metaphysics; they are both falsifiable and easily debunked. They persist when we voluntarily disconnect from reality.

Malicious actors exploit social media to amplify outrage and silence dissent, weaponizing social feedback to instill self-doubt, spread misinformation, and enforce narratives. Postmodernism-inspired activists increasingly proclaim that reality is a social construct, and attempt to rewrite it through aggressive social assertion. They narrow the concepts we consider, the beliefs we adopt, and even the words we use, regardless of their empirical utility for navigating the world.

The point of our knowledge, beliefs, and even our words is to make predictions about the world. A useful belief is falsifiable because it alters expectations in a specific direction. When, as individuals, we’re coerced into degrading our model of the world to align with social feedback, we become a little less capable of navigating the world. As a collective, when we suppress ideas with social feedback instead of testing them against reality, we all end up a little dumber for it.

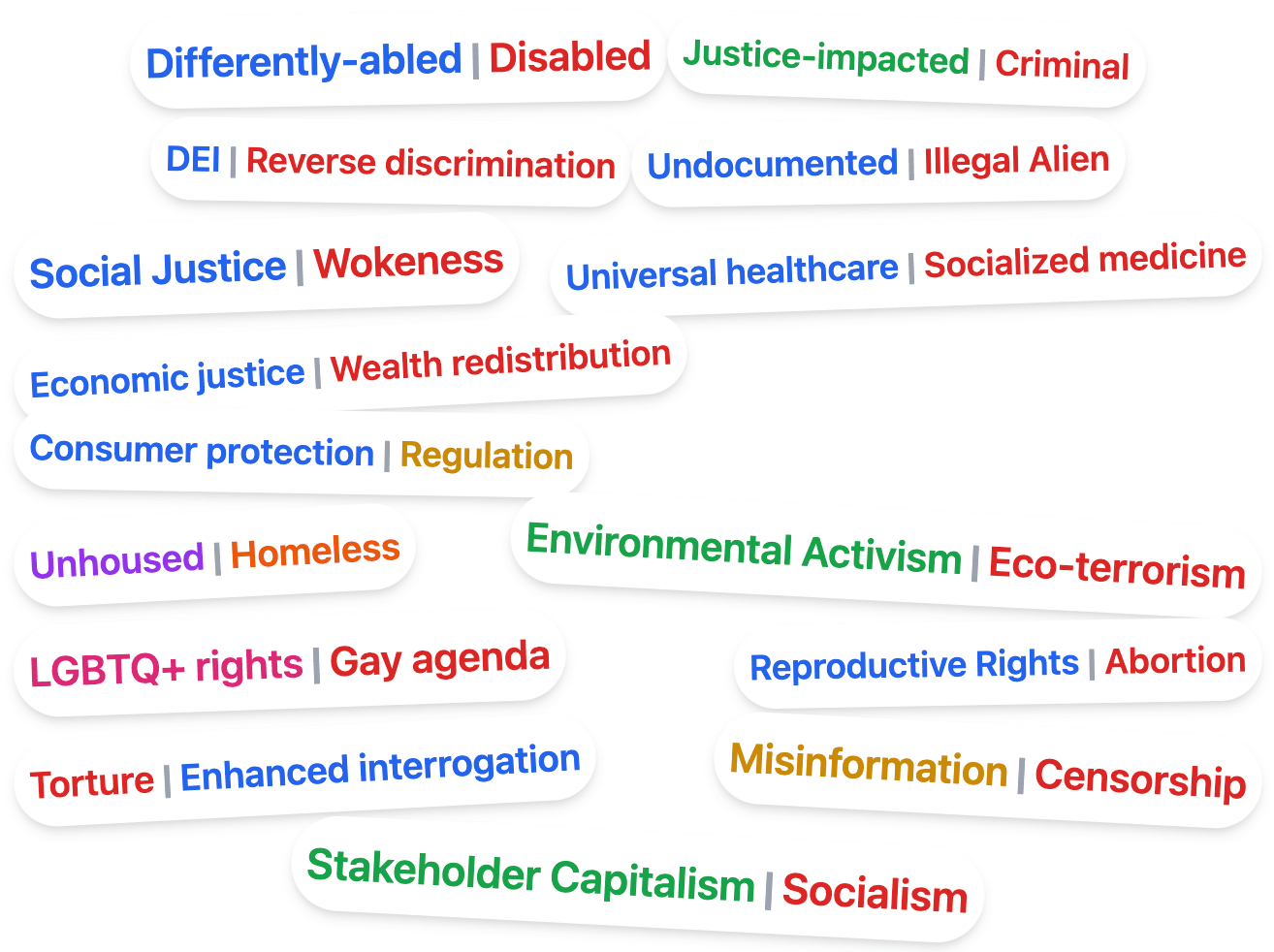

Is a new concept useful for making predictions about the world, or is it propaganda? Propaganda thrives via social feedback from the pundits and activists whose narrative it bolsters. Good ideas yield explanatory and predictive utility.

Can you measure “corporate greed” levels and use them to predict when prices will rise? Does a deep state conspiracy describe a government competent enough to fake the moon landing and hide aliens, but incapable of balancing the budget? Is gender identity or biological sex a better predictor of athletic performance?

Which option among contested terminology makes it easiest to predict what a speaker is actually referring to? “Disabled” brings to mind a wheelchair, but “differently-abled” makes me think of Spider-Man. “Enhanced interrogation” sounds like an interviewer on Adderall, not torture. “Undocumented” gives the impression that border security is a matter of missing paperwork.

One way to determine the trustworthiness of a source is to consider the kind of feedback that source receives. How incentivized are they to make accurate predictions? Scientists, investors, and engineers can’t do much with a broken model of the world, while politicians, influencers, and activists might depend on an audience that doesn’t notice or care when their predictions are wrong. We can dismiss the proclamations of anyone who isn’t even trying to accurately predict reality.

The more we lose touch with the empirical world, the more vulnerable we are to the influence and control of motivated propagandists. Nassim Taleb describes the “dictatorship of the small minority,” in which a loud and organized group can coerce a more flexible majority into meeting its demands. Social feedback is a powerful vector for this kind of coercion because it's easy for a loud and motivated group of zealots to drown out the less engaged majority.

Social Artificial Intelligence

The need for empirical guardrails is particularly salient as we usher in the era of Artificial General Intelligence.

Large Language Models (LLMs) today are trained almost entirely with some form of social feedback. Pre-training often consists of next-word prediction on text from the internet: the model learns which sequences of words a person is likely to produce, without validating the content of those sequences. Fine-tuning often shows multiple candidate responses to human reviewers, who pick their favorite, and then optimizes the model to produce more responses like the preferred ones. Models are trained to mimic humans and produce agreeable responses based on social feedback; they do not learn by forming and testing the hypotheses described in their output.

Recent advances in AI models are incredible, but as they continue to play a bigger role in our lives and economies, I hope we ground them with deeper empirical feedback mechanisms. This might mean AI embodied in robots learning to anticipate action and reaction in the physical world, or AIs forming reasoned hypotheses about the future to validate against outcomes free from human biases. Grounding AIs in empirical feedback might prevent AI from adopting our blind spots, and may even allow AI to uncover paradigm shifts that overturn our most foundational misconceptions.
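To make the contrast between the two training signals concrete, here is a minimal Python sketch. The essay doesn't name any particular loss function; as an assumption, it uses a pairwise (Bradley-Terry style) loss of the kind commonly used to train reward models from human preference comparisons, and the Brier score, a standard way to grade a probabilistic forecast once the outcome is known. The function names are hypothetical and only illustrate the idea.

```python
import math


def preference_loss(score_chosen: float, score_rejected: float) -> float:
    """Pairwise (Bradley-Terry style) loss for learning from human preferences.

    Equals -log(sigmoid(score_chosen - score_rejected)): small when the model
    scores the reviewer's favorite response higher. Nothing here checks
    whether either response is true; it is purely social feedback.
    """
    margin = score_chosen - score_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def brier_score(predicted_prob: float, outcome: bool) -> float:
    """Squared error between a forecast probability and what actually happened.

    Empirical feedback: it can only be computed once the outcome is observed.
    """
    if not 0.0 <= predicted_prob <= 1.0:
        raise ValueError("predicted_prob must be in [0, 1]")
    return (predicted_prob - (1.0 if outcome else 0.0)) ** 2


if __name__ == "__main__":
    # Hypothetical case: a reviewer preferred response A (score 2.0) over B (score 0.0).
    print(f"preference loss: {preference_loss(2.0, 0.0):.4f}")
    # Hypothetical case: a model forecast a 90% chance of rain, and it did not rain.
    print(f"Brier score:     {brier_score(0.9, False):.4f}")
```

The preference loss needs only to know which response a person liked; the Brier score cannot be computed until the world delivers an outcome. That asymmetry is the gap between social and empirical feedback in miniature.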

Back to Reality

In his novel 1984, George Orwell wrote, “The Party told you to reject the evidence of your eyes and ears. It was their final, most essential command.” While a single ruling party might not be responsible today, our increasingly digital and administrative workforce means we calibrate less on our own real-world experience and more on social feedback.

Kids these days tell each other to “touch grass” as an antidote to being “too online” - which makes sense, not because the world of bytes isn’t real, but because when we spend too much time online, we become overly calibrated to what other people think and lose our ability to make sense of the real world. Grass is immune to the vicissitudes of online opinion.

The digital age and AI vastly increase our access to knowledge but also amplify social feedback and distance us from empirical guardrails. This shift changes how we make sense of the world and enables new methods of pervasive mass delusion. We must become aware of and prioritize feedback from the real world, lest we lose touch with reality itself.

1 comment


comment by Hastings (hastings-greer) · 2024-07-13T17:26:23.389Z

> Today many of us are farther away from ground truth. The internet is an incredible means of sharing and discovering information, but it promotes or suppresses narratives based on clicks, shares, impressions, attention, ad performance, reach, drop off rates, and virality - all metrics of social feedback. As our organizations grow larger, our careers are increasingly beholden to performance reviews, middle managers' proclivities, and our capacity to navigate bureaucracy. We find ourselves increasingly calibrated by social feedback and more distant from direct validation or repudiation of our beliefs about the world.

I seek a way to get empirical feedback on this set of claims, specifically the direction-of-change-over-time assertions "farther... increasingly... more distant..."