Comments

That's a good question. I don't think I have a great idea of the lower / upper bound on capabilities from each, but I also don't think it matters much - I suspect we'll be doing both well before we hit AGI's upper bound.

There's likely plenty of "low hanging fruit" for AGI to uncover just working with human data and human labels, but I also suspect there are pretty easy ways to let AI start generating / testing hypotheses about the real world - and there are additional advantages of scale and automation to taking humans out of the loop.

Hey, thanks for reading and for the thoughtful comment!

100% agree with this: "AI should be able to push at least somewhat beyond the limits of what humans have ever concluded from available data, in every field, before needing to obtain any additional, new data."

Current methods can get us to AGI, and full AGI would result in a mind that is practically superhuman because no human mind contains all of these abilities to such a degree. I say as much in the full post: "Models may even recombine known reasoning methods to uncover new breakthroughs, but they remain bound to known human reasoning patterns."

Also agree that simulation is a viable path to exploration / feedback beyond what humans can explicitly provide: "There are many ways we might achieve this, whether in physically embodied intelligence, complex simulations grounded in scientific constraints, or predicting real world outcomes."

I'm mostly pointing out that at some point we will hit a bottleneck between AGI and ASI, which will require breaking free from human labels, and learning new things via exploration / real world feedback.

Hey @NeroWolfe.

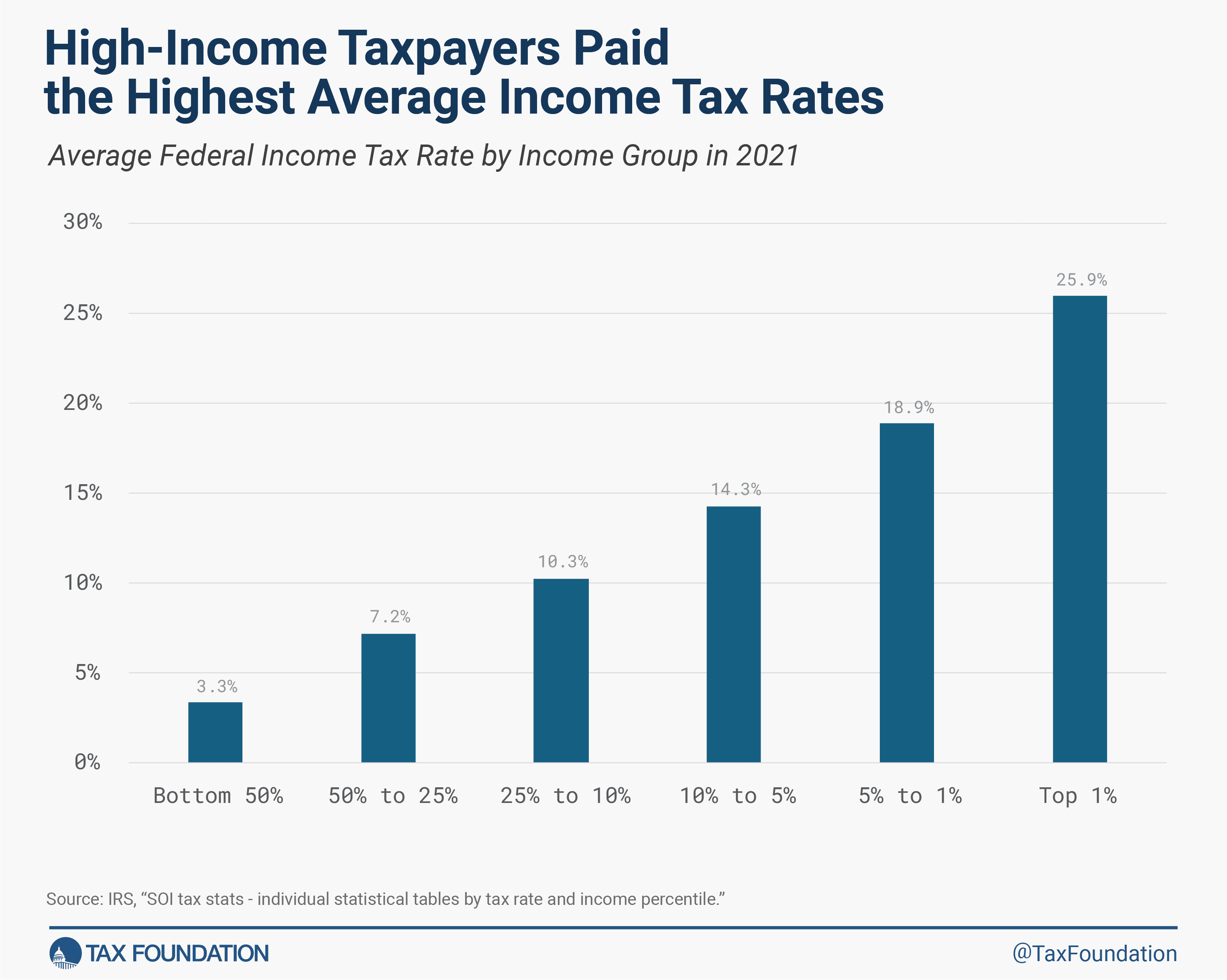

I think you're looking at the wrong numbers. For example, their 23.3% for the top 5% INCLUDES the top 1%, which skews the averages precisely because of the power laws at play.
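To make the arithmetic concrete, here's a rough sketch of how a nested bracket hides the gap. The 23.3% is the figure quoted above; the top-1% share is a made-up placeholder (not a number from their table), and the function name is just for illustration, so swap in the real value before drawing any conclusions.

```python
def split_nested_share(top5_share, top1_share):
    """Split a top-5% share into the top 1% and the next 4% (percentiles 95-99).

    Both arguments are percentages of the same total. Returns the average
    share per percentile point for each group, so the groups are comparable.
    """
    next4_share = top5_share - top1_share
    return {
        "top1_per_point": top1_share / 1,   # one percentile of people
        "next4_per_point": next4_share / 4, # four percentiles of people
        "top5_per_point": top5_share / 5,   # the blended figure
    }


TOP5_SHARE = 23.3          # figure quoted in the comment above
TOP1_SHARE_ASSUMED = 10.0  # HYPOTHETICAL placeholder, not from the source

result = split_nested_share(TOP5_SHARE, TOP1_SHARE_ASSUMED)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

Whatever the true top-1% number is, the blended top-5% average lands between the two groups, which is why it understates the top 1% and overstates everyone else in that bracket.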

They have another table further down where they break this out:

Hey @TeaTieAndHat, thank you for the comment!

I think the core idea I'm trying to get across - and the reason I hoped this was a good fit for LW - is not about how govs can maximize revenue for its own sake, but that as a society we could do a much better job caring for the 99% by acknowledging the power laws behind wealth creation and focusing more on increasing our yield at the top.

This is true even if your goal as a government is NOT to maximize productive capacity, because cost / revenue is the bottleneck preventing you from funding everything else. Shifting policy to take advantage of this could let us better support all the "non-productive" things we care about: higher safety nets, public arts, etc.

So for example - if your goal as a country is to raise the standard of living via benefits to the bottom 50% of earners, unintuitively, the best way to fund this is to help more high-potential folks become high-earners. Moving a single person from the 5% to the 1% generates enough revenue to pay for many dozens of basic incomes in the bottom quartile.

Thanks again for the thoughtful read and the feedback!

Hey @Myron Hedderson, thanks for reading and for the thoughtful comment!

The 1% in this article is just a proxy for that level of success - the claim is that we can grow the number of people who achieve the level of success typically seen in the top 1%, not that we can literally grow the 1%. Productivity and the economy aren't zero-sum, so elevating some doesn't mean bumping others down (although I acknowledge that there are some fields where relative success is a driver of earnings).

I agree I could have been a bit clearer about this, and I considered it - I just thought it was a bit of a distraction from the core point. Maybe I was wrong :) I appreciate the feedback.

"I am somewhat leery of having a government bureaucracy decide who is high potential and only invest in them." - 100% agree with this point. I see this mostly as pushing for a better way of allocating efforts and budgets that are already being spent, but I like your framing around making sure "everyone has access" instead of picking winners.