A solvable Newcomb-like problem - part 2 of 3

post by Douglas_Reay · 2012-12-03T16:49:38.161Z · LW · GW · Legacy · 1 comment


This is the second part of a three post sequence on a problem that is similar to Newcomb's problem but is posed in terms of probabilities and limited knowledge.

Part 1 - stating the problem

Part 2 - some mathematics

Part 3 - towards a solution

In game theory, a payoff matrix is a way of presenting the results of two players simultaneously picking options.

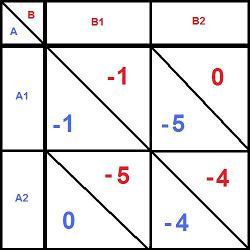

For example, in the Prisoner's Dilemma, Player A gets to choose between option A1 (Cooperate) and option A2 (Defect) while, at the same time, Player B gets to choose between option B1 (Cooperate) and option B2 (Defect). Since years spent in prison are a negative outcome, we'll write them as negative numbers:

So, if you look at the bottom right hand corner, at the intersection of Player A defecting (A2) and Player B defecting (B2) we see that both players end up spending 4 years in prison. Whereas, looking at the bottom left we see that if A defects and B cooperates, then Player A ends up spending 0 years in prison and Player B ends up spending 5 years in prison.

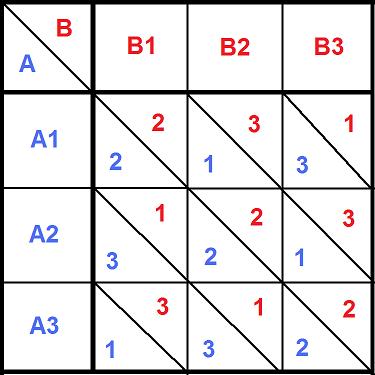

Another familiar example we can present in this form is the game Rock-Paper-Scissors.

We could write it as a zero sum game, with a win being worth 1, a tie being worth 0 and a loss being worth -1:

But it doesn't change the mathematics if we give both players 2 points each round just for playing, so that a win becomes worth 3 points, a tie becomes worth 2 points and a loss becomes worth 1 point. (Think of it as two players in a game show being rewarded by the host, rather than the players making a direct bet with each other.)
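A minimal Python sketch of that claim, assuming the usual Rock-Paper-Scissors rules and the 1/2/3 scoring described above. The names `ZERO_SUM`, `SHIFTED` and `best_response` are made up for this illustration. It checks that adding 2 to every payoff leaves Player A's best reply to each of B's moves unchanged:

```python
MOVES = ["Rock", "Paper", "Scissors"]

# Player A's payoff in the zero-sum version.
# Rows are A's move, columns are B's move, both in the order of MOVES.
ZERO_SUM = [
    [0, -1, 1],   # A plays Rock
    [1, 0, -1],   # A plays Paper
    [-1, 1, 0],   # A plays Scissors
]

# Game-show version: every entry shifted up by 2 (loss 1, tie 2, win 3).
SHIFTED = [[value + 2 for value in row] for row in ZERO_SUM]


def best_response(payoffs, b_move):
    """Return the index of A's move that maximises A's payoff against b_move."""
    return max(range(3), key=lambda a_move: payoffs[a_move][b_move])


for b in range(3):
    # The shift adds the same constant to every candidate, so the argmax is the same.
    assert best_response(ZERO_SUM, b) == best_response(SHIFTED, b)
    print(f"Against {MOVES[b]}, A's best reply is {MOVES[best_response(SHIFTED, b)]}")
```

The same argument applies to expected payoffs under any mix of moves, since a constant added to every outcome is added to every expectation too.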

If you are Player A, and you are playing against a Player B who always chooses option B1 (Rock), then your strategy is clear. You choose option A2 (Paper) each time. Over 10 rounds, counting each point as a dollar, you'd expect to end up with $30 compared to B's $10.

Let's imagine a slightly more sophisticated Player B, who always picks Rock in the first round, and then for all other rounds picks whatever would beat Player A's choice the previous round. This strategy would do well against someone who always picked the same option each round, but it is deterministic and, if we guess it correctly in advance, we can design a strategy that beats it every time. (In this case, picking Paper-Rock-Scissors then repeating back to Paper). In fact whatever strategy B comes up with, if that strategy is deterministic and we guess it in advance, then we end up with $30 and B ends up with $10.
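As a rough illustration, here is a short Python sketch of that duel. The function names (`player_b`, `player_a`, `duel`) are invented for this example, and it assumes A has guessed B's rule exactly. A simply runs B's rule to predict B's next move and plays whatever beats it:

```python
BEATS = {"Rock": "Paper", "Paper": "Scissors", "Scissors": "Rock"}  # value beats key


def score(a_move, b_move):
    """Return (A's points, B's points) using loss 1, tie 2, win 3."""
    if a_move == b_move:
        return 2, 2
    if BEATS[b_move] == a_move:
        return 3, 1
    return 1, 3


def player_b(a_history):
    """Rock in the first round, then whatever beats A's previous move."""
    return "Rock" if not a_history else BEATS[a_history[-1]]


def player_a(a_history):
    """Predict B's move with B's own rule, then play what beats it."""
    return BEATS[player_b(a_history)]


def duel(rounds=10):
    """Play the duel and return (A's moves, A's total, B's total)."""
    a_history, a_total, b_total = [], 0, 0
    for _ in range(rounds):
        a_move, b_move = player_a(a_history), player_b(a_history)
        a_points, b_points = score(a_move, b_move)
        a_total, b_total = a_total + a_points, b_total + b_points
        a_history.append(a_move)
    return a_history, a_total, b_total


history, a_total, b_total = duel()
print(history[:4], a_total, b_total)
```

A's moves come out as Paper, Rock, Scissors, Paper and so on, and the totals are 30 for A and 10 for B.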

What if B has a deterministic strategy that B picked in advance and doesn't change, but we don't know at the start of the first round what it is? In theory B might have picked any of the 3-to-the-power-of-10 deterministic strategies that are indistinguishable from each other over a 10 round duel but, in practice, humans tend to favour some strategies over others so, if you know humans and the game of Rock-Paper-Scissors better than Player B does, you have a better than even chance of guessing his pattern and coming out ahead in the later rounds of the duel.

But there's a danger to that. What if you have overestimated your comparative knowledge level and Player B uses your overconfidence to lure you into thinking you've cracked B's pattern, while really B is laying a trap, increasing the predictability of Player A's moves so Player B can then take advantage of that to work out which moves will trump them? This works better in a game like poker, where the stakes are not the same each round, but it is still possible in Rock-Paper-Scissors, and you can imagine variants of the game where the host varies the payoff matrix by increasing the lose-tie-win rewards from 1,2,3 in the first round, to 2,4,6 in the second round, 3,6,9 in the third round, and so on.

This is why the safest strategy is not to have a deterministic strategy at all but, instead, to use a source of random bits to pick, each round, option 1, option 2 or option 3 with a probability of one third each. You might not get to take advantage of any predictability that becomes apparent in your opponent's strategy, but neither can you be fooled into becoming predictable yourself.
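To make the "safest" claim concrete, here is a small Python sketch using the 1/2/3 scoring from earlier. The names `PAYOFF_A`, `expected_payoff` and `rock_heavy` are illustrative only, and the Rock-heavy mix is an arbitrary example of a predictable player. It compares the expected payoff per round of a uniform mix with that of a biased mix, against each move B could make:

```python
from fractions import Fraction

# A's payoffs (loss 1, tie 2, win 3). Rows are A's move, columns are B's move,
# both in the order Rock, Paper, Scissors.
PAYOFF_A = [
    [2, 1, 3],
    [3, 2, 1],
    [1, 3, 2],
]


def expected_payoff(mix, b_move):
    """A's expected payoff per round when A plays `mix` and B plays b_move."""
    return sum(p * PAYOFF_A[a][b_move] for a, p in enumerate(mix))


uniform = [Fraction(1, 3)] * 3
rock_heavy = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 4)]  # hypothetical biased player

for name, mix in [("uniform", uniform), ("rock-heavy", rock_heavy)]:
    worst = min(expected_payoff(mix, b) for b in range(3))
    print(f"{name}: worst-case expected payoff per round = {worst}")
```

The uniform mix earns exactly 2 per round whatever B does, while the Rock-heavy mix drops to 7/4 per round against an opponent who has spotted the bias and plays Paper.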

On a side note, this still applies even when there is only one round, because unaided humans are not as good at coming up with random bits as they think they are. Someone who has observed many first-time players will notice that first-time players more often than not choose Rock as their 'random' first move, rather than Paper or Scissors. If such a person were confident that they were playing a first-time player, they might therefore pick Paper as their first move more frequently than not. Things soon get very Sicilian (in the sense of the duel between Westley and Vizzini in the film The Princess Bride) after that, because a yet more sophisticated player who guessed their opponent would try this could then pick Scissors. And so ad infinitum, with ever more implausible levels of discernment being required to react on the next level up.

We can imagine a tournament set up between 100 players taken randomly from the expertise distribution of game players, each player submitting a Python program that always plays the same first move, and for each of the remaining 9 rounds produces a move determined solely by the moves so far in that duel. The tournament organiser would then run every player's program once against the programs of each of the other 99 players, so on average each player would collect 99 x 10 x 2 = $1,980 (99 duels of 10 rounds each, with the two players sharing $4 per round).
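A toy version of such a tournament might look like the sketch below. The three entries (`always_rock`, `beat_last`, `copy_last`) and the function signature they share are assumptions made for this example, not a format the post specifies. Because every round pays out 4 in total, the average winnings do not depend on which programs are entered:

```python
from itertools import combinations

BEATS = {"Rock": "Paper", "Paper": "Scissors", "Scissors": "Rock"}  # value beats key


def score(mine, theirs):
    """Points for the player who played `mine`: loss 1, tie 2, win 3."""
    if mine == theirs:
        return 2
    return 3 if BEATS[theirs] == mine else 1


# Hypothetical entries: each takes (own_history, opponent_history) and returns a move.
def always_rock(own, opp):
    return "Rock"


def beat_last(own, opp):
    return BEATS[opp[-1]] if opp else "Rock"


def copy_last(own, opp):
    return opp[-1] if opp else "Paper"


def duel(prog_a, prog_b, rounds=10):
    """Run one duel and return both players' totals."""
    hist_a, hist_b, total_a, total_b = [], [], 0, 0
    for _ in range(rounds):
        move_a, move_b = prog_a(hist_a, hist_b), prog_b(hist_b, hist_a)
        total_a += score(move_a, move_b)
        total_b += score(move_b, move_a)
        hist_a.append(move_a)
        hist_b.append(move_b)
    return total_a, total_b


def tournament(programs):
    """Every program meets every other program exactly once."""
    winnings = [0] * len(programs)
    for i, j in combinations(range(len(programs)), 2):
        gain_i, gain_j = duel(programs[i], programs[j])
        winnings[i] += gain_i
        winnings[j] += gain_j
    return winnings


entries = [always_rock, beat_last, copy_last]
players = [entries[k % len(entries)] for k in range(100)]
winnings = tournament(players)
print(sum(winnings) / len(winnings))  # 1980.0
```

Individual totals differ from player to player; only the average is pinned at 1,980.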

We could make things more complex by allowing the programs to use, as an input, how much money their opponent has won so far during the tournament; or iterate over running the tournament several times, to give each player an 'expertise' rating which the program in the following tournament could then use. We could allow the tournament host to subtract from each player a sum of money depending upon the size of program that player submitted (and how much memory or CPU it used). We could give each player a limited ration of random bits, so when facing a player with a higher expertise rating they might splurge and make their move on all 10 rounds completely random, and when facing a player with a lower expertise rating they might conserve their supply by trying to 'out think' them.

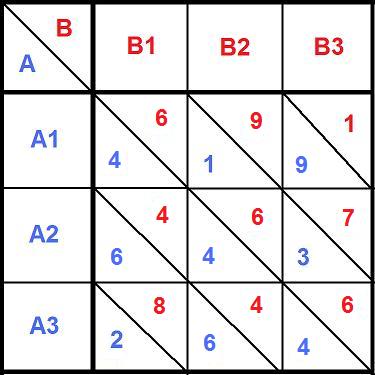

There are various directions we could take this, but the one I want to look at here is what happens when you make the payoff matrix asymmetric. What happens if you make the game unfair, so that not only does one player have more at stake than the other, but the options themselves are no longer evenly matched, for example:

You still have the circular Rock-Paper-Scissors dynamic where:

If B chose B3, then A wants most to have chosen A1

If A chose A1, then B wants most to have chosen B2

If B chose B2, then A wants most to have chosen A3

If A chose A3, then B wants most to have chosen B1

If B chose B1, then A wants most to have chosen A2

If A chose A2, then B wants most to have chosen B3

so everything wins against at least one other option, and loses against at least one other option. However Player B is clearly now in a better position, because B wins ties, and B's wins (a 9, an 8 and a 7) tend to be larger than A's wins (a 9, a 6 and a 6).

What should Player A do? Is the optimal safe strategy still to pick each option with an equal weighting?

Well, it turns out the answer is: no, an equal weighting isn't the optimal response. Neither is just picking the same 'best' option each time. Instead, what you do is pick your 'best' option a bit more frequently than an equal weighting would suggest, but not so much that the opponent can steal away that gain by reliably choosing the specific option that trumps yours. Rather than duplicate material already well presented on the web, I will point you at two lecture courses on game theory that explain how to calculate the exact probability to assign to each option:

- "ECON 159: Game Theory", from Open Yale Courses

- "Game Theory 101: The Complete Series", by William Spaniel

You do this by using the indifference theorem to arrive at a set of linear equations, which you can then solve to arrive at a mixed equilibrium where neither player increases their expected utility by altering the probability weightings they assign to their options.

- Example of calculating the general case for a 3x3 payoff matrix

- More complex example, drawn from poker

- Summary
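For readers who prefer code to lectures, the indifference calculation can be sketched in Python as below. Everything here is an assumption made for illustration: the payoff numbers in `A_PAYOFF` and `B_PAYOFF` are invented (they are not taken from the figure above), and `solve` and `indifference_mix` are hypothetical helper names. The sketch also assumes the equilibrium uses all three options with positive probability; if the solver returned a negative 'probability', you would need the fuller treatment from the courses linked above.

```python
from fractions import Fraction


def solve(matrix, rhs):
    """Solve a square linear system exactly by Gauss-Jordan elimination."""
    n = len(rhs)
    rows = [[Fraction(x) for x in row] + [Fraction(b)] for row, b in zip(matrix, rhs)]
    for col in range(n):
        # Find a row with a non-zero entry in this column and move it into place.
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pivot_value = rows[col][col]
        rows[col] = [x / pivot_value for x in rows[col]]
        for r in range(n):
            if r != col:
                factor = rows[r][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[col])]
    return [row[-1] for row in rows]


def indifference_mix(opponent_payoffs):
    """opponent_payoffs[i][j]: opponent's payoff when I play i and they play j.

    Returns my probabilities that give the opponent the same expected payoff
    from each of their options.
    """
    n = len(opponent_payoffs)
    equations, rhs = [], []
    for j in range(n - 1):
        # Expected payoff of their option j minus that of option j + 1 is zero.
        equations.append(
            [opponent_payoffs[i][j] - opponent_payoffs[i][j + 1] for i in range(n)]
        )
        rhs.append(0)
    equations.append([1] * n)  # probabilities sum to one
    rhs.append(1)
    return solve(equations, rhs)


# Invented payoffs: rows are A's options A1..A3, columns are B's options B1..B3.
A_PAYOFF = [[2, 1, 9], [6, 2, 1], [1, 6, 3]]
B_PAYOFF = [[5, 9, 2], [3, 4, 8], [7, 1, 5]]

# A mixes so that B is indifferent; B mixes so that A is indifferent.
a_mix = indifference_mix(B_PAYOFF)
b_mix = indifference_mix([list(column) for column in zip(*A_PAYOFF)])
print("A plays A1, A2, A3 with probabilities", [str(p) for p in a_mix])
print("B plays B1, B2, B3 with probabilities", [str(p) for p in b_mix])
```

Note that each player's weights are computed from the other player's payoffs: A's mix is whatever leaves B with nothing to gain from favouring any one option, and vice versa.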

The TL;DR; points to take away

If you are competing in what is effectively a simultaneous option choice game against a being who you suspect may have expertise at the game equal to or higher than your own, you can nullify their advantage by picking a strategy that, each round, chooses randomly (using a weighting) between the available options.

Depending upon the details of the payoff matrix, there may be one option that it makes sense for you to pick most of the time but, unless that option is strictly better than all your other choices no matter what option your opponent picks, there is still utility to gain from occasionally picking the other options in order to keep your opponent on their toes.

Back to Part 1 - stating the problem

This is Part 2 - some mathematics

Next to Part 3 - towards a solution

1 comments


comment by devas · 2012-12-04T11:37:35.627Z · LW(p) · GW(p)

This seems interesting; however, it all hinges on part three, which I eagerly await.

Still, the option you seem to favour wouldn't guarantee a million dollar win, which is what happens if I one-box.

In an iterated version of the problem, this should work, but still... Now I'm really curious about what you're going to write next.