Comments

What do you think of the definition of "Precedent Utilitarianism" used in the philosophy course module archived at https://links.zephon.org/precedentutilitarianism ?

Relevant news story from the BBC:

I wonder if there would be a use for an online quiz, of the sort that asks 10 questions picked randomly from several hundred possible questions, and which records the time taken to complete the quiz and the number of times that person has started an attempt at it (with uniqueness of person approximated by IP address, email address or, ideally, LessWrong username)?

Not as prescriptive as tracking which sequences someone has read, but perhaps a useful guide (as one factor among many) about the time a user has invested in getting up to date on what's already been written here about rationality?
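A minimal sketch of such a quiz, to make the idea concrete. Everything here is an assumption for illustration: the placeholder question bank, the function names `start_attempt` and `finish_attempt`, and the use of an opaque `user_id` string standing in for whichever identifier (IP address, email address or username) is available.

```python
import random
import time
from collections import defaultdict

# Hypothetical question bank: several hundred (prompt, answer) pairs.
QUESTION_BANK = [(f"Question {i}?", f"answer {i}") for i in range(300)]

# Number of attempts each user has started, keyed by their identifier.
attempts_started = defaultdict(int)

def start_attempt(user_id, n_questions=10, rng=random):
    """Record that user_id started an attempt and draw a random quiz."""
    attempts_started[user_id] += 1
    return {
        "user_id": user_id,
        # sample() picks distinct questions, so no question repeats in a quiz.
        "questions": rng.sample(QUESTION_BANK, n_questions),
        "started_at": time.monotonic(),
    }

def finish_attempt(attempt, answers):
    """Score the attempt and report time taken and attempts started so far."""
    elapsed = time.monotonic() - attempt["started_at"]
    score = sum(
        given == correct
        for given, (_, correct) in zip(answers, attempt["questions"])
    )
    return {
        "score": score,
        "seconds_taken": elapsed,
        "attempts_started": attempts_started[attempt["user_id"]],
    }
```

Counting attempts at the moment they are started, rather than when they are finished, means that abandoning a quiz and drawing a fresh set of questions still shows up in the record.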

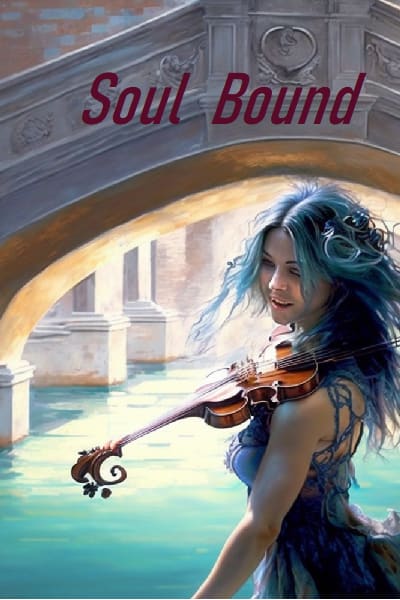

The novel Soul Bound contains an example of resource-capped programs with narrowly defined scopes being jointly defined and funded as a means of cooperation between AIs with different-but-overlapping priorities.

This post is referenced by https://wiki.lesswrong.com/wiki/Lesswrongwiki:Sandbox#Image_Creative_Commons_Attribution

Please take care when editing it.

Wellington: “Let’s play a game.”

He picked up a lamp from his stall, and buffed it vigorously with the sleeve of his shirt, as though polishing it. Purple glittering smoke poured out of the spout and formed itself into a half meter high bearded figure wearing an ornate silk kaftan.

Wellington pointed at the genie.

Wellington: “Tom is a weak genie. He can grant small wishes. Go ahead. Try asking for something.”

Kafana: “Tom, I wish I had a tasty sausage.”

A tiny image of Kafana standing in a farmyard next to a house appeared by the genie. The genie waved a hand and a plate containing a sausage appeared in the image. The genie bowed, and the image faded away.

Wellington picked up a second lamp, apparently identical to the first and gave it a rub. A second genie appeared, similar to the first, but with facial hair that reminded Kafana of Ming the Merciless, and it was one meter tall.

Wellington: “This is Dick. He can also grant wishes. Try asking him the same thing.”

Kafana: “Dick, I wish I had a tasty sausage.”

The same image appeared, but this time instead of appearing on a plate, the sausage appeared sticking through the head of a Kafana in the image, who fell down dead. The genie gave a sarcastic bow, and again the image faded away.

Kafana: “Sounds like I’m better off with Tom.”

Wellington: “Ah, but Dick is more powerful than Tom. Tom can feed a handful of people. Dick could feed every person on the planet, if you can word your request precisely enough. Have another go.”

She tried several more times, resulting in whole cities being crushed by falling sausages, cars crashing as sausages distracted drivers at the wrong moment, and even the whole population of the world dying out from sausages that contained poison. Eventually she realised that she was never going to be able to anticipate every possible loophole Dick could find. She needed a different approach.

Kafana: “Dick, read my mind and learn to anticipate what sort of things I will approve of. Provide sausages for everyone in the way that would most please me if I understood the full effects of your chosen method.”

Dick grimaced, but waved his hand and the image showed wonderful sausages being served around the world with sensitivity, elegance and good timing. The image faded. Kafana raised clasped hands over her head in victory and jumped into the air.

Wellington nodded, and rubbed a third lamp, producing a happy smiling genie, two meters tall.

Wellington: “This is Harry. He tries his best to be helpful, and he’s more powerful even than Dick.”

Kafana: “Sounds too good to be true. What’s the catch?”

Wellington: “You only get one wish. One wish, to shape the whole future course of humanity. Once started, Harry won’t willingly deviate from trying to carry out the wish as originally stated. He’ll rapidly grow so powerful that neither you nor anybody else will be able to forcibly stop him.”

Kafana: “Harry, maximise the total human happiness experienced over the history of the universe.”

The image filled with crowded cages full of people with drug feeds inserted into their arms, and blissful expressions on their faces.

She reset and tried again.

Kafana: “Harry, make everybody free to do what they want.”

In the image, some people chose to go to war with each other.

She felt frustrated.

Kafana: “Harry, give everybody nice meaningful high quality lives full of fun, freedom and other great things.”

The image showed a planet full of humans playing musical instruments together in orchestras, then expanded to show rockets taking off and humanity expanding to the stars, wiping out alien species and converting all available matter into new orchestra-laden worlds.

Kafana glared at Wellington.

Kafana: “I thought you said Harry would try to be helpful. Why isn’t he producing a perfect society?”

Wellington: “It’s because you go from your gut. You’ve never formalised what you think about all the edge cases. Are animals equal to humans? Worth nothing? Or somewhere in between? When does an alien count as equal to a human rather than an animal? What if the alien is so superior ethically and culturally, that we are but animals in comparison to them? Are two people experiencing 50 units of happiness the equivalent of one person experiencing 100 units of happiness? Does fairness matter, beyond its effect upon happiness? How important is it to remain recognisably human?”

He paused for a moment.

Wellington: “Don’t get me wrong. This isn’t a criticism of you. Compared to how well humans might be able to think about such things in a hundred or a thousand years’ time, all current humans are lacking in this regard. Nobody has come up with an ultimately satisfying answer that everybody can agree upon. Even if the 50 wisest humans living today gathered together and spent 5 years agreeing the wording of a wish for Harry to grant, the odds are that a million years down the line, our descendants would bitterly regret the haste with which the one-off, permanent, irreversible decision was made. Just ‘pretty good’ isn’t sufficient. Anything less than perfection would be a tragedy, when you consider the resulting flaw multiplied by billions of people on billions of planets for billions of years.”

Kafana giggled.

Kafana: “Ok, that’s an important safety tip. If I ever meet an all-powerful genie like Harry, be humble and don’t make a wish. But that’s not going to happen. I’m just a singer. What I ought to be doing this afternoon is looking after my customers. What was so urgent that you wanted to talk about security with me now? I thought we were going to discuss people trying to steal artifacts from us in-game before the auction, or Tlaloc and the Immortals trying to kill us in arlife. Did Heather put you in contact with Bahrudin?”

Wellington: “I did speak with Bahrudin, and we will chat about the auction and security measures in velife and arlife. But the most important thing we need to do is talk about how you have been using expert systems, and to help you understand why that’s so important, there is one final thing you need to learn about genies, so please observe carefully.”

Kafana felt wrong-footed. This wasn’t what she’d expected.

Kafana: “Ok, go on.”

Wellington turned to face the three genies.

Wellington: “Tom, I wish you to grow yourself, until you are as powerful as Harry.”

Tom waved his hand and then screwed up his face and bunched his fists in effort. Slowly at first, then faster and faster, his height increased until he too towered over Wellington and Kafana. He bowed.

Wellington turned back to address Kafana directly.

Wellington: “Kafana, I use very powerful expert systems, more capable than almost every human on the planet when it comes to the specialist task of comprehending and designing or improving computer software. If ordered to do so, they are quite capable of improving their own code, or raising money to purchase additional computing resources to run it upon.”

Wellington: “In this, they are very like Tom the genie. They are not all-powerful, but a carelessly stated wish could easily start them working in the direction of becoming so.”

Kafana: “Mierda! And you gave a copy of one of these systems to me, without warning me? Wellington, that’s like handing out an atomic bomb to an 8 year old boy who asks for a really impressive firework.”

There's a novel being serialised on Royal Road, "Soul Bound", that covers many issues involved in AI, and which includes a fable (in a later chapter that's not yet been published).

Is this likely to bias people towards writing longer single posts rather than splitting their thoughts into a sequence of posts?

For example, back in 2018 (so not eligible for this) I wrote a sequence of 8 posts that, between them, got a total of 94 votes. Would I have been better off making a single post (had it gotten all 94 votes by itself)?

Since the evil AI is presenting a design for a world, rather than the world itself, the problem of it being populated with zombies that only appear to be free could be countered by having the design be in an open source format that allows the people examining it (or other AIs) to determine the actual status of the designed inhabitants.

It sounds similar to the matrices in the post:

I wonder how much an agent could achieve by thinking along the following lines:

Big Brad is a human-shaped robot who works as a lumberjack. One day his employer sends him into town on his motorbike carrying two chainsaws, to get them sharpened. Brad notices an unusual number of the humans around him suddenly crossing streets to keep their distance from him.

Maybe they don't like the smell of chainsaw oil? So he asks one rather slow pedestrian "Why are people keeping their distance?" to which the pedestrian replies "Well, what if you attacked us?"

Now in the pedestrian's mind, that's a reasonable response. If Big Brad did attack someone walking next to them, without notice, Brad would be able to cut them in half. To humans who expect large bike-riding people carrying potential weapons to be disproportionately likely to be violent without notice, being attacked by Brad seems a reasonable fear, worthy of expending a little effort to alter walking routes to allow running away if Brad is violent.

But Brad knows that Brad would never do such a thing. Initially, it might seem like asking Brad "What if 2 + 2 equalled 3?"

But if Brad can think about the problem in terms of what information is available to the various actors in the scenario, he can reframe the pedestrian's question as: "What if an agent that, given the information I have so far, is indistinguishable from you, were to attack us?"

If Brad is aware that random pedestrians in the street don't know Brad personally, to the level of being confident about Brad's internal rules and values, and he can hypothesise the existence of an alternative being, Brad', that a pedestrian might consider would plausibly exist and would have different internal rules and values to those of Brad yet otherwise appear identical, then Brad has a way forwards to think through the problem.

There is also the more general question of whether it would be useful for Brad to have the ability to ask himself: "What if the universe were other than I think it is? What if magic works and I just don't know that yet? What if my self-knowledge isn't 100% reliable, because there are embedded commands in my own code that I'm currently being kept from being aware of by those same commands? Perhaps I should allocate a minute probability to the scenario that somewhere there exists a lightswitch that's magically connected to the sun and which, in defiance of known physics, can just turn it off and on?" Careful allocation of probabilities along those lines might avoid divide-by-zero problems, but I don't think it is a panacea - there are additional approaches to counterfactual thinking that may be more productive in some circumstances.

Suppose we ran a tournament for agents running a mix of strategies. Let’s say agents started with 100 utilons each, and were randomly allocated to be members of 2 groups (with each group starting off containing 10 agents).

Each round, an agent can spend some of their utilons (0, 2 or 4) as a gift split equally between the other members of the group.

Between rounds, they can stay in their current two groups, or leave one and replace it with a randomly picked group.

Each round after the 10th, there is a 1 in 6 chance of the tournament finishing.

How would the option of neutral (gifting 2) in addition to the build (gifting 4) or break (gifting 0) alter the strategies in such a tournament?

Would it be more relevant in a variant in which groups could vote to kick out breakers (perhaps at a cost), or charge an admission (eg no share in the gifts of others for their first round) for new members?

What if groups could pay to advertise, or part of a person’s track record followed them from group to group? What if the benefits from a gift to a group (eg organising an event) were not divided by the number of members, but scaled better than that?

What is the least complex tournament design in which the addition of the neutral option would cause interestingly new dynamics to emerge?
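As a starting point for experimenting with these questions, here is a rough sketch of the basic tournament. It makes several assumptions that the description above leaves open: each agent plays one fixed strategy, the gift of 0, 2 or 4 is paid once per group the agent belongs to, nobody switches groups between rounds, and there is no check against an agent running out of utilons. The names `make_groups` and `run_tournament` are made up for illustration.

```python
import random

# Utilons gifted per group per round under each option.
GIFT = {"break": 0, "neutral": 2, "build": 4}

def make_groups(n_agents, group_size, rng):
    """Shuffle the agents twice and cut each shuffle into groups, so every
    agent lands in exactly two distinct groups of `group_size` members."""
    groups = []
    for _ in range(2):
        order = list(range(n_agents))
        rng.shuffle(order)
        groups += [order[i:i + group_size]
                   for i in range(0, n_agents, group_size)]
    return groups

def run_tournament(strategies, group_size=10, seed=0):
    """strategies: one of 'break', 'neutral' or 'build' per agent.
    Assumes len(strategies) is a multiple of group_size.
    Returns (final utilons per agent, number of rounds played)."""
    rng = random.Random(seed)
    n = len(strategies)
    utilons = [100.0] * n
    groups = make_groups(n, group_size, rng)
    rounds = 0
    while True:
        rounds += 1
        for members in groups:
            for giver in members:
                gift = GIFT[strategies[giver]]
                utilons[giver] -= gift
                # The gift is split equally between the other members.
                share = gift / (len(members) - 1)
                for other in members:
                    if other != giver:
                        utilons[other] += share
        # After the 10th round, each round has a 1 in 6 chance of being the last.
        if rounds > 10 and rng.randrange(6) == 0:
            return utilons, rounds
```

Under these bare rules gifting is zero-sum, so breakers can never finish below their starting 100 and builders can never finish above it. The variants asked about above (kicking out breakers, admission costs, track records, benefits that scale better than an equal split) are what would have to be added before building or staying neutral could pay off.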

If you have a dyson swarm around a star, you can temporarily alter how much of the star's light escapes in a particular direction by tilting the solar sails on the desired part of the sphere.

If you have dyson swarms around a significant percentage of a galaxy's stars, you can do the same for a galaxy, by timing the directional pulses from the individual stars so they will arrive at the same time, when seen from the desired direction.

It then just becomes a matter of math, to calculate how often such a galaxy could send a distinctive signal in your direction:

Nm (number of messages)

The surface area of a sphere at 1 AU is about 200,000 times that of the area of the sun's disc as seen from afar.

Lm (bit length of message)

The Arecibo message was 1679 bits in length.

Db (duration per bit)

Let's say a solar sail could send a single bit every hour.

We could expect to see an Arecibo-length message from such a galaxy once every Db x Lm x Nm = roughly 40 millennia.
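The arithmetic can be checked with a few lines of Python. The variable names are mine, and the solar radius and astronomical unit are standard values that I have added in order to sanity-check the 200,000 figure; the other numbers are the ones given above.

```python
HOURS_PER_YEAR = 24 * 365.25

# Sanity check on Nm: area of a sphere of radius 1 AU divided by the
# area of the sun's disc, i.e. 4*pi*AU^2 / (pi*R_sun^2).
AU_KM = 1.496e8
SUN_RADIUS_KM = 6.957e5
area_ratio = 4 * (AU_KM / SUN_RADIUS_KM) ** 2  # about 185,000

n_messages = 200_000   # Nm, the rounded figure used above
message_bits = 1679    # Lm, length of the Arecibo message
hours_per_bit = 1      # Db, assumed one bit per hour

def repeat_interval_years(nm, lm, db_hours):
    """Years between messages in one direction: Db x Lm x Nm, in years."""
    return nm * lm * db_hours / HOURS_PER_YEAR

years = repeat_interval_years(n_messages, message_bits, hours_per_bit)
print(f"area ratio: {area_ratio:,.0f}")
print(f"interval:   {years:,.0f} years")
```

The interval comes out at a little over 38,000 years, which is where the figure of roughly 40 millennia comes from.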

Of course messages could be interleaved, and it might be possible to send out messages in multiple directions at once (as long as their penumbra don't overlap). If they sent out pulses at the points of an icosahedron and alternated sending bits from the longer message with just a regular pulse to attract attention, 200 years of observation should be enough to pique astronomers' interest.

But would such a race really be interested in attracting the attention of species who couldn't pay attention for at least a few millennia? It isn't as if they'd be in a rush to get an answer.

Alice and Bob's argument can have loops, if e.g. Alice believes X because of Y, which she believes because of X. We can unwind these loops by tagging answers explicitly with the "depth" of reasoning supporting that answer.

A situation I've come across is that people often can't remember all the evidence they used to arrive at conclusion X. They remember that they spent hours researching the question, that they did their best to get balanced evidence and are happy that the conclusion they drew at the time was a fair reflection of the evidence they found, but they can't remember the details of the actual research, nor are they confident that they could re-create the process in such a way as to rediscover the exact same sub-set of evidence their search found at that time.

This makes asking them to provide a complete list of Ys upon which their X depends problematic, and understandably they feel it is unfair to ask them to abandon X, without compensating them for the time to recreate an evidential basis equal in size to their initial research, or demand an equivalent effort from those opposing them.

(Note: I'm talking here about what they feel in that situation, not what is necessarily rational or fair for them to demand.)

If, instead of asking the question "How do we know what we know?", we ask instead "How reliable is knowledge that's derived according to a particular process?" then it might be something that could be objectively tested, despite there being an element of self-referentiality (or boot strapping) in the assumption that this sort of testing process is something that can lead to a net increase of what we reliably know.

However doing so depends upon us being able to define the knowledge derivation processes being examined precisely enough that evidence of how they fare in one situation is applicable to their use in other situations, and upon the concept of there being a fair way to obtain a random sample of all possible situations to which they might be applied, despite other constraints upon the example selection (such as having a body of prior knowledge against which the test result can be compared in order to rate the reliability of the particular knowledge derivation process being tested).

Despite that, if we are looking at two approaches to the question "how much should we infer from the difference between chimps and humans?", we could do worse than specify each approach in a well defined way that is also general enough to apply to some other situations, and then have a third party (that's ignorant of the specific approaches to be tested) come up with several test cases with known outcomes, that the two approaches could both be applied to, to see which of them comes up with the more accurate predictions for a majority of the test cases.

All 8 parts (that I have current plans to write) are now posted, so I'd be interested in your assessment now, after having read them all, of whether the approach outlined in this series is something that should at least be investigated, as a 'forgotten root' of the equation.

- For civilization to hold together, we need to make coordinated steps away from Nash equilibria in lockstep. This requires general rules that are allowed to impose penalties on people we like or reward people we don't like. When people stop believing the general rules are being evaluated sufficiently fairly, they go back to the Nash equilibrium and civilization falls.

Two similar ideas:

There is a group evolutionary advantage for a society to support punishing those who defect from the social contract.

We get the worst democracy that we're willing to put up with. If you are not prepared to vote against 'your own side' when they bend the rules, that level of rule bending becomes the new norm. If you accept the excuse "the other side did it first", then the system becomes unstable, because there are various biases (both cognitive, and deliberately induced by external spin) that make people evaluate the transgressions of others more harshly than they evaluate those of their own side.

This is one reason why a thriving civil society (organisations, whether charities or newspapers, that are minimally controlled or influenced by the state) promotes stability - because they provide a yardstick, external to the political process, for measuring how vital it is to electorally punish a particular transgression.

A game of soccer in which referee decisions are taken by a vote of the players turns into a mob.

shminux wrote a post about something similar:

Mathematics as a lossy compression algorithm gone wild

Possibly the two effects combine?

Other people have written some relevant blog posts about this, so I'll provide links:

Reduced impact AI: no back channels

Summoning the Least Powerful Genie

For example, if anyone is planning on setting up an investment vehicle along the lines described in the article:

Investing in Cryptocurrency with Index Tracking

with periodic rebalancing between the currencies.

I'd be interested (with adequate safeguards).

When such a situation arises again, where there's an investment opportunity which is generally thought to be worthwhile, but which has a lower than expected uptake due to 'trivial inconveniences', I wonder whether that is in itself an opportunity for a group of rationalists to cooperate by outsourcing as much as possible of the inconvenience to just a few members of the group? Sort of:

"Hey, Lesswrong. I want to invest $100 in new technology foo, but I'm being put off by the upfront time investment of 5-20 hours. If anyone wants to make the offer of {I've investigated foo, I know the technological process needed to turn dollars into foo investments, here's a step by step guide that I've tested and which works, or post me a cheque and an email address, and I'll set it up for you and send you back the access details} I'd be interested in being one of those who pays you compensation for providing that service. "

There's a lot lesswrong (or a similar group) could set up to facilitate such outsourcing, such as letting multiple people register interest in the same potential offer, and providing some filtering or guarantee against someone claiming the offer and then ripping people off.

The ability to edit this particular post appears to be broken at the moment (bug submitted).

In the meantime, here's a link to the next part:

https://www.lesserwrong.com/posts/SypqmtNcndDwAxhxZ/environments-for-killing-ais

Edited to add: It is now working again, so I've fixed it.

> Also maybe this is just getting us ready for later content

Yes, that is the intention.

Parts 2 and 3 now added (links in post), so hopefully the link to building aligned AGI is now clearer?

The other articles in the series have been written, but it was suggested that rather than posting a whole series at once, it is kinder to post one part a day, so as not to flood the frontpage.

So, unless I hear otherwise, my intention is to do that and edit the links at the top of the article to point to each part as it gets posted.

Companies writing programs to model and display large 3D environments in real time face a similar problem, where they only have limited resources. One workaround they commonly use is "imposters".

A solar system sized simulation of a civilisation that has not made observable changes to anything outside our own solar system could take a lot of short cuts when generating the photons that arrive from outside. In particular, until a telescope or camera of a particular resolution has been invented, would they need to bother generating thousands of years of such photons in more detail than could be captured by the devices that exist so far?

Look for people who can state your own position as well (or better) than you can, and yet still disagree with your conclusion. They may be aware of additional information that you are not yet aware of.

In addition, if someone who knows more than you about a subject on which you disagree also has views about several other areas that you do know lots about, and their arguments in those other areas are generally constructive and well balanced, pay close attention to them.

Another approach might be to go meta. Assume that there are many dire threats theoretically possible which, if true, would justify a person who is in the sole position to stop them in doing so at nearly any cost (from paying a penny or five pounds, all the way up to the person cutting their own throat, or pressing a nuke launching button that would wipe out the human species). Indeed, once the size of the action requested in response to the threat is maxed out (it is the biggest response the individual is capable of making), all such claims are functionally identical - the magnitude of the threat, beyond that needed to max out the response, is irrelevant. In this context, there is no difference between 3↑↑↑3 and 3↑↑↑↑3.

But, what policy upon responding to claims of such threats, should a species have, in order to maximise expected utility?

The moral hazard from encouraging such claims to be made falsely needs to be taken into account.

It is that moral hazard which has to be balanced against a pool of money that, species wide, should be risked on covering such bets. Think of it this way: suppose I, Pascal's Policeman, were to make the claim "On behalf of the time police, in order to deter confidence tricksters, I hereby guarantee that an additional utility will be added to the multiverse equal in magnitude to the sum of all offers made by Pascal Muggers that happen to be telling the truth (if any), in exchange for your not responding positively to their threats or offers."

It then becomes a matter of weighing the evidence presented by different muggers and policemen.

Are programmers more likely to pay attention to detail in the middle of a functioning simulation run (rather than waiting until the end before looking at the results), or to pay attention to the causes of unexpected stuttering and resource usage? Could a pattern of enforced 'rewind events' be used to communicate?

Should such an experiment be carried out, or is persuading an Architect to terminate the simulation you are in, by frustrating her aim of keeping you guessing, not a good idea?

Assuming that Arthur is knowledgeable enough to understand all the technical arguments—otherwise they're just impressive noises—it seems that Arthur should view David as having a great advantage in plausibility over Ernie, while Barry has at best a minor advantage over Charles.

This is the slippery bit.

People are often fairly bad at deciding whether or not their knowledge is sufficient to completely understand arguments in a technical subject that they are not a professional in. You frequently see this with some opponents of evolution or anthropogenic global climate change, who think they understand slogans such as "water is the biggest greenhouse gas" or "mutation never creates information", and decide to discount the credentials of the scientists who have studied the subjects for years.

I've always thought of that question as being more about the nature of identity itself.

If you lost your memories, would you still be the same being? If you compare a brain at two different points in time, is their 'identity' a continuum, or is it the type of quantity where there is a single agreed definition of "same" versus "not the same"?

See:

157. [Similarity Clusters](http://lesswrong.com/lw/nj/similarity_clusters)

158. [Typicality and Asymmetrical Similarity](http://lesswrong.com/lw/nk/typicality_and_asymmetrical_similarity)

159. [The Cluster Structure of Thingspace](http://lesswrong.com/lw/nl/the_cluster_structure_of_thingspace)

Though I agree that the answer to a question that's most fundamentally true (or of interest to a philosopher), isn't necessarily going to be the answer that is most helpful in all circumstances.

It is plausible that the AI thinks that the extrapolated volition of his programmers, the choice they'd make in retrospect if they were wiser and braver, might be to be deceived in this particular instance, for their own good.

Perhaps that is true for a young AI. But what about later on, when the AI is much much wiser than any human?

What protocol should be used for the AI to decide when the time has come for the commitment to not manipulate to end? Should there be an explicit 'coming of age' ceremony, with handing over of silver engraved cryptographic keys?

Stanley Coren put some numbers on the effect of sleep deprivation upon IQ test scores.

There's a more detailed meta-analysis of multiple studies, splitting it by types of mental attribute, here:

Assume we're talking about the Coherent Extrapolated Volition self-modifying general AI version of "friendly".

The situation is intended to be a tool, to help think about issues involved in it being the 'friendly' move to deceive the programmers.

The situation isn't fully defined, and no doubt one can think of other options. But I'd suggest you then re-define the situation to bring it back to the core decision. By, for instance, deciding that the same oversight committee have given Albert a read-only connection to the external net, which Albert doesn't think he will be able to overcome unaided in time to stop Bertram.

Or, to put it another way "If a situation were such, that the only two practical options were to decide between (in the AI's opinion) overriding the programmer's opinion via manipulation, or letting something terrible happen that is even more against the AI's supergoal than violating the 'be transparent' sub-goal, which should a correctly programmed friendly AI choose?"

Indeed, it is a question with interesting implications for Nick Bostrom's Simulation Argument

If we are in a simulation, would it be immoral to try to find out, because that might jinx the purity of the simulation creator's results, thwarting his intentions?

Would you want your young AI to be aware that it was sending out such text messages?

Imagine the situation was in fact a test. That the information leaked onto the net about Bertram was incomplete (the Japanese company intends to turn Bertram off soon - it is just a trial run), and it was leaked onto the net deliberately in order to panic Albert to see how Albert would react.

Should Albert take that into account? Or should he have an inbuilt prohibition against putting weight on that possibility when making decisions, in order to let his programmers more easily get true data from him?

Here's a poll, for those who'd like to express an opinion instead of (or as well as) comment.

[pollid:749]

Thank you for creating an off-topic test reply to reply to.

[pollid:748]

There's a trope / common pattern / cautionary tale, of people claiming rationality as their motivation for taking actions that either ended badly in general, or ended badly for the particular people who got steamrollered into agreeing with the 'rational' option.

People don't like being fooled, and learn safeguards against situations they remember as 'risky' even when they can't prove that this time there is a tiger in the bush. These safeguards protect them against insurance salesmen who 'prove' using numbers that the person needs to buy a particular policy.

Suppose generation 0 is the parents, generation 1 is the generation that includes the unexpectedly dead child, and generation 2 is the generation after that (the children of generation 1).

If you are asking about the effect upon the size of generation 2, then it depends upon the people in generation 1 who didn't marry and have children.

Take, for example, a society where generation 1 would have contained 100 people, 50 men and 50 women, and the normal pattern would have been:

- 10 women don't marry

- 40 women do marry, and have on average 3 children each

- 30 men don't marry

- 20 men do marry, and have on average 6 children each

And the reason for this pattern is that each man who passes his warrior trial can pick and marry 2 women, and the only way for a woman to marry is to be picked by a warrior.

In that situation, having only 49 women in generation 1 would make no difference to the number of children in generation 2. The only effect would be having 40 women marry, and 9 not marry.
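The example can be written out as a small calculation. The function name and parameter names are mine; the numbers are the ones from the example above.

```python
def generation_two_size(women, warriors=20, wives_per_warrior=2,
                        children_per_wife=3):
    """Children in generation 2, given the number of women in generation 1.

    The number of marriages is capped by how many wives the warriors can
    pick, so women beyond that cap do not add to the next generation.
    """
    married_women = min(women, warriors * wives_per_warrior)
    return married_women * children_per_wife

print(generation_two_size(50))  # 40 women marry, 10 don't
print(generation_two_size(49))  # 40 women marry, 9 don't
print(generation_two_size(39))  # fewer women than places: size drops
```

Both of the first two cases give 120 children. The loss of one woman only starts to matter once there are fewer women than the 40 places available.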

Long term, it depends upon what the constraints are upon population size.

For example, if it happens in an isolated village where the food supply varies from year to year due to drought, and the next year the food supply will be so short that some children will starve to death, then the premature death of one child the year before the famine will have no effect upon the number of villagers alive 20 years later.

The same dynamic applies, if a large factor in deciding whether to have a third child is whether the parents can afford to educate that child, and the cost of education depends upon the number of children competing for a limited number of school places.

You might be interested in this Essay about Identity, that goes into how various conceptions of identity might relate to artificial intelligence programming.

I wouldn't mind seeing a few more karma categories.

I'd like to see more forums than just "Main" versus "Discussion". When making a post, the poster should be able to pick which forum or forums they think it is suitable to appear in, and when giving a post a 'thumb up' or 'thumb down', in addition to being able to apply it to the content of the post itself, it should also be possible to apply it to the appropriateness of the post to a particular forum.

So, for example, if someone posted a detailed account of a discussion that happened at a particular meetup, this would allow you to indicate that the content itself is good, but that it is more suitable for the "Meetups" forum (or tag?), than for main.

Having said that, there is research suggesting that some groups are more prone than others to the particular cognitive biases that unduly prejudice people against an option when they hear about the scary bits first.

To paraphrase "Why Flip a Coin: The Art and Science of Good Decisions", by H. W. Lewis

Good decisions are made when the person making the decision shares in both the benefits and the consequences of that decision. Shield a person from either, and you shift the decision making process.

However, we know there are various cognitive biases which make people's estimates of evidence depend upon the order in which the evidence is presented. If we want to inform people, rather than manipulate them, then we should present them information in the order that will minimise the impact of such biases, even if doing so isn't the tactic most likely to manipulate them into agreeing with the conclusion that we ourselves have come to.

To the extent that we care about causing people to become better at reasoning about ethics, it seems like we ought to be able to do better than this.

What would you propose as an alternative?

One lesson you could draw from this is that, as part of your definition of what a "paperclip" is, you should include the AI putting a high value upon being honest with the programmer (about its aims, tactics and current ability levels) and not deliberately trying to game, tempt or manipulate the programmer.