Decision Theory

post by abramdemski, Scott Garrabrant · 2018-10-31T18:41:58.230Z · LW · GW · 45 comments

(A longer text-based version of this post is also available on MIRI's blog here, and the bibliography for the whole sequence can be found here.)

Comments sorted by top scores.

comment by Rob Bensinger (RobbBB) · 2018-11-02T15:52:35.982Z · LW(p) · GW(p)

Cross-posting some comments from the MIRI Blog:

Konstantin Surkov:

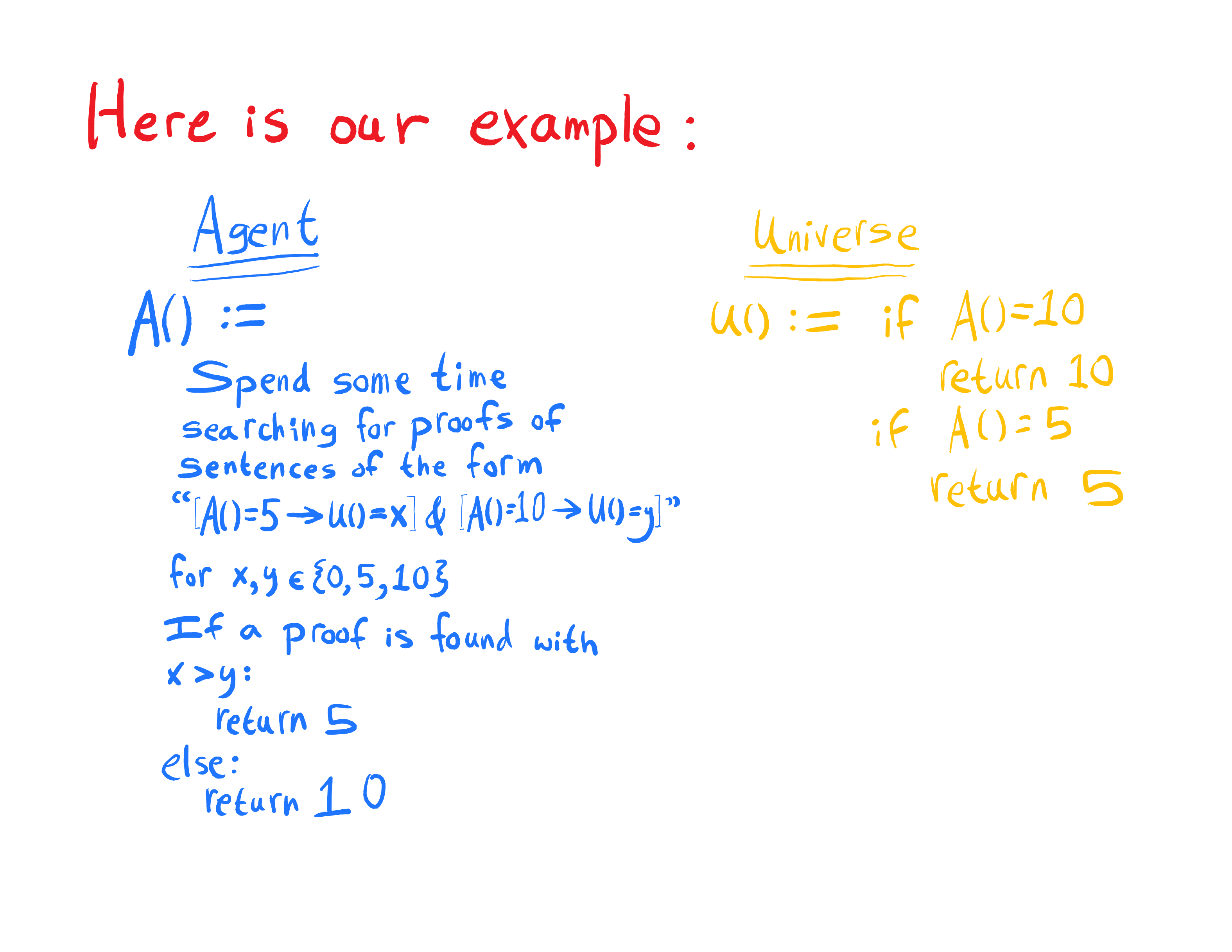

Re: 5/10 problem

I don't get it. A human is obviously (in that regard) an agent reasoning about his actions. A human will also choose 10 without any difficulty. What in the human decision-making process is not formalizable here? Assuming we agree that 10 is the rational choice.

Abram Demski:

Suppose you know that you take the $10. How do you reason about what would happen if you took the $5 instead? It seems easy if you know how to separate yourself from the world, so that you only think of external consequences (getting $5). If you think about yourself as well, then you run into contradictions when you try to imagine the world where you take the $5, because you know it is not the sort of thing you would do. Maybe you have some absurd predictions about what the world would be like if you took the $5; for example, you imagine that you would have to be blind. That's alright, though, because in the end you are taking the $10, so you're doing fine.

Part of the point is that an agent can be in a similar position, except it is taking the $5, knows it is taking the $5, and is unable to figure out that it should be taking the $10 instead, due to the absurd predictions it makes about what happens when it takes the $10. It seems kind of hard for a human to end up in that situation, but it doesn't seem so hard to get this sort of thing when we write down formal reasoners, particularly when we let them reason about themselves fully (as natural parts of the world) rather than only reasoning about the external world or having pre-programmed divisions (so they reason about themselves in a different way from how they reason about the world).

Replies from: shminux

↑ comment by Shmi (shminux) · 2018-11-03T01:45:52.745Z · LW(p) · GW(p)

Sure, one can imagine hypothetically taking $5, even if in reality they would take $10. That's a spurious output from a different algorithm altogether. It assumes the world where you are not the same person who takes $10. So, it would make sense to examine which of the two you are, if you don't yet know that you will take $10, but not if you already know it. Which of the two is it?

comment by tenthkrige · 2018-11-08T22:14:44.751Z · LW(p) · GW(p)

Content feedback: the inferential distance between Löb's theorem and spurious counterfactuals seems larger than that of the other points. Maybe that's because I haven't internalised the theorem, not being a logician and all.

Unnecessary nitpick: the gears in the robot's brain would turn just fine as drawn: since the outer gears are both turning anticlockwise, the inner gear would just turn clockwise. (I think my inner engineer is showing)

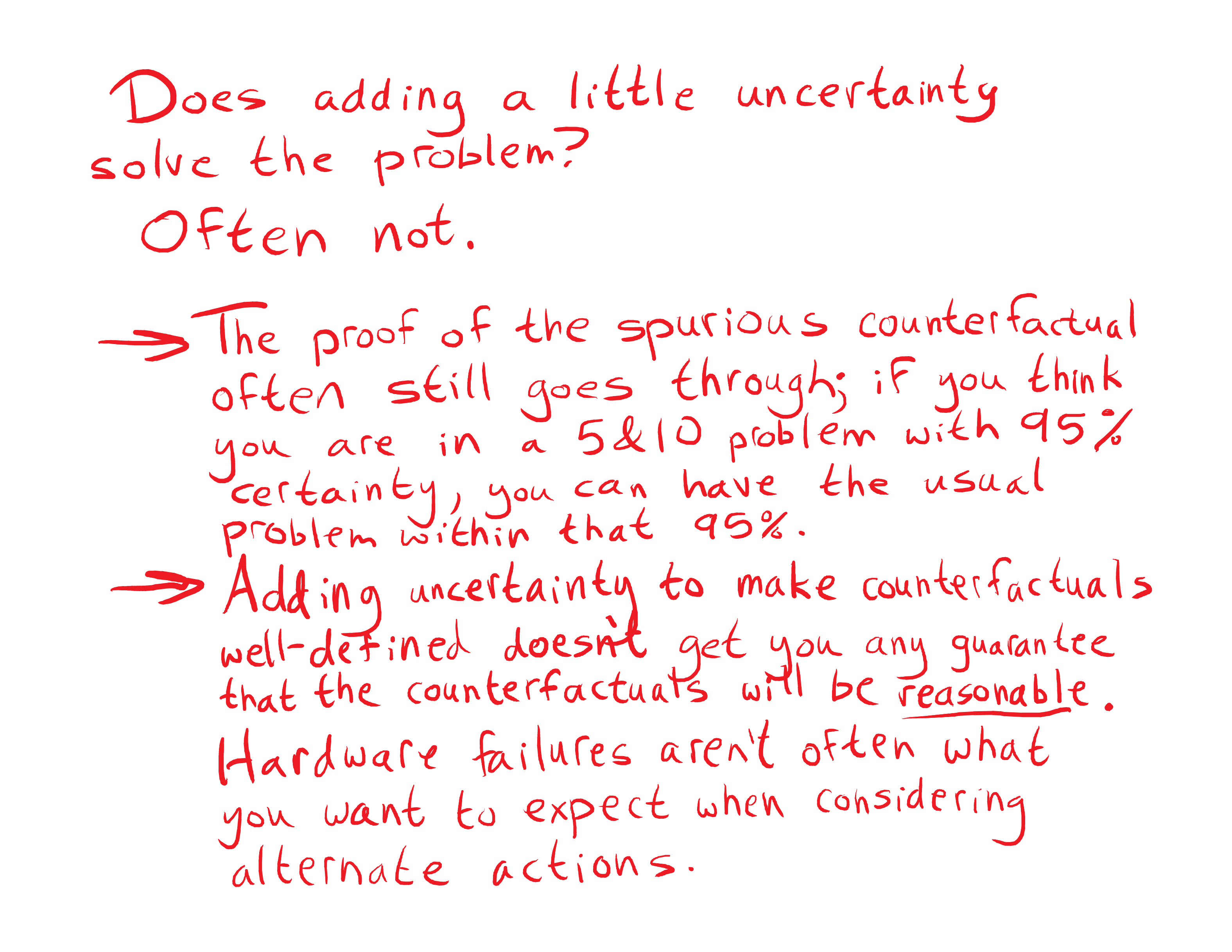

comment by Gurkenglas · 2018-11-01T14:27:35.025Z · LW(p) · GW(p)

I'm not convinced that an inconsequential grain of uncertainty couldn't handle this 5-10 problem. Consider an agent whose actions are probability distributions on {5,10} that are nowhere 0. We can call these points in the open affine space spanned by the points 5 and 10. U is then a linear function from this affine space to utilities. The agent would search for proofs that U is some particular such linear function. Once it finds one, it uses that linear function to compute the optimal action. To ensure that there is an optimum, we can adjoin infinitesimal values to the possible probabilities and utilities.

If the agent were to find a proof that the linear function is the one induced by mapping 5 to 5 and 10 to 0, it would return (1-ε)⋅5+ε⋅10 and get utility 5+5ε instead of the expected 5-5ε, so Löb's theorem wouldn't make this self-fulfilling.
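The arithmetic in this comment can be checked with a small sketch. It assumes the mixed action is described by the weight it puts on taking 10, and it uses a small rational number as a stand-in for the infinitesimal ε (a real infinitesimal is not representable here). The names `linear_utility`, `believed`, and `actual` are illustrative and not from the thread.

```python
from fractions import Fraction


def linear_utility(u5, u10):
    """Return the linear function on mixed actions induced by U(5)=u5 and U(10)=u10."""
    def utility(weight_on_10):
        # A mixed action puts (1 - weight_on_10) on taking 5 and weight_on_10 on taking 10.
        return (1 - weight_on_10) * u5 + weight_on_10 * u10
    return utility


# Stand-in for the infinitesimal; any small positive value shows the same pattern.
eps = Fraction(1, 1000)

believed = linear_utility(5, 0)   # the spurious function: 5 -> 5, 10 -> 0
actual = linear_utility(5, 10)    # the real payoffs: 5 -> 5, 10 -> 10

predicted = believed(eps)  # what the spurious proof says the agent will get
received = actual(eps)     # what the agent actually gets
print(predicted, received)
```

Under these assumptions the predicted value is 5-5ε and the received value is 5+5ε, so the spurious linear function is contradicted by the outcome rather than confirmed by it.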

Replies from: Scott Garrabrant↑ comment by Scott Garrabrant · 2018-11-01T18:26:10.788Z · LW(p) · GW(p)

So, your suggestion is not just an inconsequential grain of uncertainty, it is a grain of exploration. The agent actually does take 10 with some small probability. If you try to do this with just uncertainty, things would be worse, since that uncertainty would not be justified.

One problem is that you actually do explore a bunch, and since you don't get a reset button, you will sometimes explore into irreversible actions, like shutting yourself off. However, if the agent has a source of randomness, and also the ability to simulate worlds in which that randomness went another way, you can have an agent that with probability does not explore ever, and learns from the other worlds in which it does explore. So, you can either explore forever, and shut yourself off, or you can explore very very rarely and learn from other possible worlds.

The problem with learning from other possible worlds is that, to get good results out of it, you have to assume that the environment does not also learn from other possible worlds, which is not very embedded.

But you are suggesting actually exploring a bunch, and there is a problem other than just shutting yourself off. You are getting past this problem in this case by only allowing linear functions, but that is not an accurate assumption. Let's say you are playing matching pennies with Omega, who has the ability to predict what probability you will pick but not what action you will pick.

(In matching pennies, you each choose H or T, you win if they match, they win if they don't.)

Omega will pick H if your probability of H is less than 1/2 and T otherwise. Your utility as a function of probability is piecewise linear with two parts. Trying to assume that it will be linear will make things messy.
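A minimal sketch of why linearity fails here, assuming utility is simply the probability of winning (1 for a match, 0 otherwise). The function names are illustrative. `linear_guess` is what you would predict by interpolating linearly between the two pure strategies.

```python
from fractions import Fraction


def win_probability(p_heads):
    """Chance of matching Omega when you play H with probability p_heads."""
    # Omega sees the probability, not the coin flip: H if p < 1/2, T otherwise.
    omega_plays_heads = p_heads < Fraction(1, 2)
    return p_heads if omega_plays_heads else 1 - p_heads


def linear_guess(p_heads):
    """Prediction from interpolating linearly between the pure strategies p=0 and p=1."""
    at_zero = win_probability(Fraction(0))
    at_one = win_probability(Fraction(1))
    return (1 - p_heads) * at_zero + p_heads * at_one


half = Fraction(1, 2)
print(win_probability(half), linear_guess(half))
```

Both pure strategies win with probability 0, so the linear model predicts 0 everywhere, while the actual win probability rises to 1/2 at p = 1/2 and falls again on the other side.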

There is this problem where sometimes the outcome of exploring into taking 10, and the outcome of actually taking 10 because it is good are different. More on this here [AF · GW].

Replies from: Gurkenglas↑ comment by Gurkenglas · 2018-11-01T19:39:24.119Z · LW(p) · GW(p)

I am talking about the surreal number ε, which is smaller than any positive real. Events of likelihood ε do not actually happen, we just keep them around so the counterfactual reasoning does not divide by 0.

Within the simulation, the AI might be able to conclude that it just made an ε-likelihood decision and must therefore be in a counterfactual simulation. It should of course carry on as it were, in order to help the simulating version of itself.

Why shouldn't the environment be learning?

To the Omega scenario I would say that since we have an Omega-proof random number generator, we get new strategic options that should be included in the available actions. The linear function then goes from the ε-adjoined open affine space generated by {Pick H with probability p | p real, non-negative and at most 1} to the ε-adjoined utilities, and we correctly solve Omega's problem by using p=1/2.

Replies from: Scott Garrabrant↑ comment by Scott Garrabrant · 2018-11-01T21:43:56.035Z · LW(p) · GW(p)

Yeah, so it's like you have this private data, which is an infinite sequence of bits, and if you see all 0's you take an exploration action. I think that by giving the agent these private bits and promising that the bits do not change the rest of the world, you are essentially giving the agent access to a causal counterfactual that you constructed. You don't even have to mix with what the agent actually does, you can explore with every action and ask if it is better to explore and take 5 or explore and take 10. By doing this, you are essentially giving the agent access to a causal counterfactual, because conditioning on these infinitesimals is basically like coming in and changing what the agent does. I think giving the agent a true source of randomness actually does let you implement CDT.

If the environment learns from the other possible worlds, it might punish or reward you in one world for stuff that you do in the other world, so you can't just ask which world is best to figure out what to do.

I agree that that is how you want to think about the matching pennies problem. However the point is that your proposed solution assumed linearity. It didn't empirically observe linearity. You have to be able to tell the difference between the situations in order to know not to assume linearity in the matching pennies problem. The method for telling the difference is how you determine whether or not and in what ways you have logical control over Omega's prediction of you.

Replies from: Gurkenglas↑ comment by Gurkenglas · 2018-11-01T22:55:11.393Z · LW(p) · GW(p)

I posit that linearity always holds. In a deterministic universe, the linear function is between the ε-adjoined open affine space generated by our primitive set of actions and the ε-adjoined utilities. (Like in my first comment.)

In a probabilistic universe, the linear function is between the ε-adjoined open affine space generated by (the set of points in) the closed affine space generated by our primitive set of actions and the ε-adjoined utilities. (Like in my second comment.)

I got from one of your comments that assuming linearity wards off some problem. Does it come back in the probabilistic-universe case?

Replies from: Scott Garrabrant↑ comment by Scott Garrabrant · 2018-11-01T23:53:25.250Z · LW(p) · GW(p)

My point was that I don't know where to assume the linearity is. Whenever I have private randomness, I have linearity over what I end up choosing with that randomness, but not linearity over what probability I choose. But I think this is not getting at the disagreement, so I pivot to:

In your model, what does it mean to prove that U is some linear affine function? If I prove that my probability p is 1/2 and that U=7.5, have I proven that U is the constant function 7.5? If there is only one value of p, it is not defined what the utility function is, unless I successfully carve the universe in such a way as to let me replace the action with various things and see what happens (or, assuming linearity, replace the probability with enough linearly independent things (in this case 2) to define the function).

Replies from: Gurkenglas↑ comment by Gurkenglas · 2018-11-02T15:14:32.869Z · LW(p) · GW(p)

In the matching pennies game, would be proven to be . A could maximize this by returning ε when isn't , and (where ε is so small that this is still infinitesimally close to 1) when is .

The linearity is always in the function between ε-adjoined open affine spaces. Whether the utilities also end up linear in the closed affine space (ie nobody cares about our reasoning process) is for the object-level information gathering process to deduce from the environment.

You never prove that you will with certainty decide . You always leave a so-you're-saying-there's-a chance of exploration, which produces a grain of uncertainty. To execute the action, you inspect the ceremonial Boltzmann Bit (which is implemented by being constantly set to "discard the ε"), but which you treat as having an ε chance of flipping.

The self-modification module could note that inspecting that bit is a no-op, see that removing it would make the counterfactual reasoning module crash, and leave up the Chesterton fence.

Replies from: Scott Garrabrant, Douglas_Reay↑ comment by Scott Garrabrant · 2018-11-02T16:53:31.594Z · LW(p) · GW(p)

But how do you avoid proving with certainty that p=1/2?

Since your proposal does not say what to do if you find inconsistent proofs that the linear function is two different things, I will assume that if it finds multiple different proofs, it defaults to 5 for the following.

Here is another example:

You are in a 5 and 10 problem. You have a twin that is also in a 5 and 10 problem. You have exactly the same source code. There is a consistency checker, and if you and your twin do different things, you both get 0 utility.

You can prove that you and your twin do the same thing. Thus you can prove that the function is 5+5p. You can also prove that your twin takes 5 by Löb's theorem. (You can also prove that you take 5 by Löb's theorem, but you ignore that proof, since "there is always a chance".) Thus, you can prove that the function is 5-5p. Your system doesn't know what to do with two functions, so it defaults to 5. (If it is provable that you both take 5, you both take 5, completing the proof by Löb.)
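The two conflicting functions can be written out explicitly. This is a sketch that assumes p denotes the probability that you take the 10, matching the earlier convention of putting weight ε on 10; the comment itself does not define p.

```latex
% p = probability that you take the 10 (assumed reading of p)
\begin{align*}
\text{twin always does what you do:} \quad & U(p) = 5(1-p) + 10p = 5 + 5p \\
\text{twin takes 5 regardless:} \quad & U(p) = 5(1-p) + 0 \cdot p = 5 - 5p
\end{align*}
```

In the second line, taking the 10 mismatches the twin and triggers the consistency checker, which is why that branch contributes 0.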

I am doing the same thing as before, but because I put it outside of the agent, it does not get flagged with the "there is always a chance" module. This is trying to illustrate that your proposal takes advantage of a separation between the agent and the environment that was snuck in, and could be done incorrectly.

Two possible fixes:

1) You could say that the agent, instead of taking 5 when it finds an inconsistency, takes some action that exhibits the inconsistency (an action on which the two functions give different values). This is very similar to the chicken rule, and if you add something like this, you don't really need the rest of your system. Take an agent that, whenever it proves it does something, does something else. This agent will prove (given enough time) that if it takes 5 it gets 5, and if it takes 10 it gets 10.

2) I had one proof system, and just ignored the proofs that I found that I did a thing. I could instead give the agent a special proof system that is incapable of proving what it does, but how do you do that? Chicken rule seems like the place to start.

One problem with the chicken rule is that it was developed in a system that was deductively closed, so you can't prove something that passes through a proof of P without proving P. If you violate this, by having a random theorem prover, you might have a system that fails to prove "I take 5" but proves "I take 5 and 1+1=2" and uses this to complete the Löb loop.

Replies from: Gurkenglas↑ comment by Gurkenglas · 2018-11-02T19:40:21.725Z · LW(p) · GW(p)

I can't prove what I'm going to do and I can't prove that I and the twin are going to do the same thing, because of the Boltzmann Bits in both of our decision-makers that might turn out different ways. But I can prove that we have a chance of doing the same thing, and my expected utility is , rounding to once it actually happens.

↑ comment by Douglas_Reay · 2019-09-24T04:22:39.602Z · LW(p) · GW(p)

It sounds similar to the matrices in the post:

comment by Shmi (shminux) · 2018-11-02T03:07:49.301Z · LW(p) · GW(p)

If you know your own actions, why would you reason about taking different actions? Wouldn't you reason about someone who is almost like you, but just different enough to make a different choice?

Replies from: Scott Garrabrant↑ comment by Scott Garrabrant · 2018-11-02T03:23:17.816Z · LW(p) · GW(p)

Sure. How do you do that?

Replies from: shminux↑ comment by Shmi (shminux) · 2018-11-02T05:41:39.438Z · LW(p) · GW(p)

Notice (well, you already know that) that accepting that identical agents make identical decisions (superrationality, as it were) and to make different decisions in identical circumstances the agents must necessarily be different, gets you out of many pickles. For example, in the 5&10 game an agent would examine its own algorithm, see that it leads to taking $10 and stop there. There is no "what would happen if you took a different action", because the agent taking a different action would not be you, not exactly. So, no Lobian obstacle. In return, you give up something a lot more emotionally valuable: the delusion of making conscious decisions. Pick your poison.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2018-11-02T11:45:20.426Z · LW(p) · GW(p)

For example, in the 5&10 game an agent would examine its own algorithm, see that it leads to taking $10 and stop there.

Why do even that much if this reasoning could not be used? The question is about the reasoning that could contribute to the decision, that could describe the algorithm, and so has the option to not "stop there". What if you see that your algorithm leads to taking the $10 and instead of stopping there, you take the $5?

Nothing stops you. This is the "chicken rule" and it solves some issues, but more importantly illustrates the possibility in how a decision algorithm can function. The fact that this is a thing is evidence that there may be something wrong with the "stop there" proposal. Specifically, you usually don't know that your reasoning is actual, that it's even logically possible and not part of an impossible counterfactual, but this is not a hopeless hypothetical where nothing matters. Nothing compels you to affirm what you know about your actions or conclusions, this is not a necessity in a decision making algorithm, but different things you do may have an impact on what happens, because the situation may be actual after all, depending on what happens or what you decide, or it may be predicted from within an actual situation and influence what happens there. This motivates learning to reason in and about possibly impossible situations.

What if you examine your algorithm and find that it takes the $5 instead? It could be the same algorithm that takes the $10, but you don't know that, instead you arrive at the $5 conclusion using reasoning that could be impossible, but that you don't know to be impossible, that you haven't decided yet to make impossible. One way to solve the issue is to render the situation where that holds impossible, by contradicting the conclusion with your action, or in some other way. To know when to do that, you should be able to reason about and within such situations that could be impossible, or could be made impossible, including by the decisions made in them. This makes the way you reason in them relevant, even when in the end these situations don't occur, because you don't a priori know that they don't occur.

(The 5-and-10 problem is not specifically about this issue, and explicit reasoning about impossible situations may be avoided, perhaps should be avoided, but my guess is that the crux in this comment thread is about things like usefulness of reasoning from within possibly impossible situations, where even your own knowledge arrived at by pure computation isn't necessarily correct.)

Replies from: shminux↑ comment by Shmi (shminux) · 2018-11-03T01:33:46.508Z · LW(p) · GW(p)

Thank you for your explanation! Still trying to understand it. I understand that there is no point examining one's algorithm if you already execute it and see what it does.

What if you see that your algorithm leads to taking the $10 and instead of stopping there, you take the $5?

I don't understand that point. You say "nothing stops you", but that is only possible if you could act contrary to your own algorithm, no? Which makes no sense to me, unless the same algorithm gives different outcomes for different inputs, e.g. "if I simply run the algorithm, I take $10, but if I examine the algorithm before running it and then run it, I take $5". But it doesn't seem like the thing you mean, so I am confused.

What if you examine your algorithm and find that it takes the $5 instead?

How can it be possible? If your examination of your algorithm is accurate, it gives the same outcome as mindlessly running it, which is taking $10, no?

It could be the same algorithm that takes the $10, but you don't know that, instead you arrive at the $5 conclusion using reasoning that could be impossible, but that you don't know to be impossible, that you haven't decided yet to make impossible.

So your reasoning is inaccurate, in that you arrive at a wrong conclusion about the algorithm output, right? You just don't know where the error lies, or even that there is an error to begin with. But in this case you would arrive at a wrong conclusion about the same algorithm run by a different agent, right? So there is nothing special about it being your own algorithm and not someone else's. If so, the issue is reduced to finding an accurate algorithm analysis tool, for an algorithm that demonstrably halts in a very short time, producing one of the two possible outcomes. This seems to have little to do with decision theory issues, so I am lost as to how this is relevant to the situation.

I am clearly missing some of your logic here, but I still have no idea what the missing piece is, unless it's the libertarian free will thing, where one can act contrary to one's programming. Any further help would be greatly appreciated.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2018-11-03T02:34:02.007Z · LW(p) · GW(p)

I understand that there is no point examining one's algorithm if you already execute it and see what it does.

Rather there is no point if you are not going to do anything with the results of the examination. It may be useful if you make the decision based on what you observe (about how you make the decision).

you say "nothing stops you", but that is only possible if you could act contrary to your own algorithm, no?

You can, for a certain value of "can". It won't have happened, of course, but you may still decide to act contrary to how you act, two different outcomes of the same algorithm. The contradiction proves that you didn't face the situation that triggers it in actuality, but the contradiction results precisely from deciding to act contrary to the observed way in which you act, in a situation that a priori could be actual, but is rendered counterlogical as a result of your decision. If instead you affirm the observed action, then there is no contradiction and so it's possible that you have faced the situation in actuality. Thus the "chicken rule", playing chicken with the universe, making the present situation impossible when you don't like it.

So your reasoning is inaccurate

You don't know that it's inaccurate, you've just run the computation and it said $5. Maybe this didn't actually happen, but you are considering this situation without knowing if it's actual. If you ignore the computation, then why run it? If you run it, you need responses to all possible results, and all possible results except one are not actual, yet you should be ready to respond to them without knowing which is which. So I'm discussing what you might do for the result that says that you take the $5. And in the end, the use you make of the results is by choosing to take the $5 or the $10.

This map from predictions to decisions could be anything. It's trivial to write an algorithm that includes such a map. Of course, if the map diagonalizes, then the predictor will fail (won't give a prediction), but the map is your reasoning in these hypothetical situations, and the fact that the map may say anything corresponds to the fact that you may decide anything. The map doesn't have to be identity, decision doesn't have to reflect prediction, because you may write an algorithm where it's not identity.
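One way to make the "map from predictions to decisions" concrete is a toy sketch that assumes a predictor is only accurate when its prediction is a fixed point of the map. The three maps below are hypothetical examples, not taken from the thread.

```python
ACTIONS = (5, 10)


def consistent_predictions(decide):
    """Predictions that come true when the agent responds with decide(prediction)."""
    return [guess for guess in ACTIONS if decide(guess) == guess]


def affirm(predicted):
    # Identity map: do whatever you are predicted to do.
    return predicted


def diagonalize(predicted):
    # Chicken-rule style map: do the opposite of the prediction.
    return 10 if predicted == 5 else 5


def always_ten(predicted):
    # Ignore the prediction entirely.
    return 10


print(consistent_predictions(affirm))       # [5, 10]
print(consistent_predictions(diagonalize))  # []
print(consistent_predictions(always_ten))   # [10]
```

The identity map leaves both "I take 5" and "I take 10" self-consistent, which is the room a spurious prediction needs. The diagonalizing map leaves no accurate prediction at all, so the predictor fails as described above.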

Replies from: shminux↑ comment by Shmi (shminux) · 2018-11-03T22:01:33.230Z · LW(p) · GW(p)

You can, for a certain value of "can". It won't have happened, of course, but you may still decide to act contrary to how you act, two different outcomes of the same algorithm.

This confuses me even more. You can imagine acting contrary to your own algorithm, but imagining different possible outcomes is a side effect of running the main algorithm that takes $10. It is never the outcome of it. Or an outcome. Since you know you will end up taking $10, I also don't understand the idea of playing chicken with the universe. Are there any references for it?

You don't know that it's inaccurate, you've just run the computation and it said $5.

Wait, what? We started with the assumption that examining the algorithm, or running it, shows that you will take $10, no? I guess I still don't understand how

What if you see that your algorithm leads to taking the $10 and instead of stopping there, you take the $5?

is even possible, or worth considering.

This map from predictions to decisions could be anything.

Hmm, maybe this is where I miss some of the logic. If the predictions are accurate, the map is bijective. If the predictions are inaccurate, you need a better algorithm analysis tool.

The map doesn't have to be identity, decision doesn't have to reflect prediction, because you may write an algorithm where it's not identity.

To me this screams "get a better algorithm analyzer!" and has nothing to do with whether it's your own algorithm, or someone else's. Can you maybe give an example where one ends up in a situation where there is no obvious algorithm analyzer one can apply?

comment by tremble · 2018-11-12T01:15:40.331Z · LW(p) · GW(p)

Content feedback:

The Preface to the Sequence on Value Learning [? · GW] contains the following advice on research directions for that sequence:

If you try to disprove the arguments in the posts, or to create formalisms that sidestep the issues brought up, you may very well generate a new interesting direction of work that has not been considered before.

This provides specific direction on what to look at and what work needs to be done. If such a statement for this sequence is possible, I think it would be valuable to include.

comment by ryan_b · 2018-11-01T16:55:14.802Z · LW(p) · GW(p)

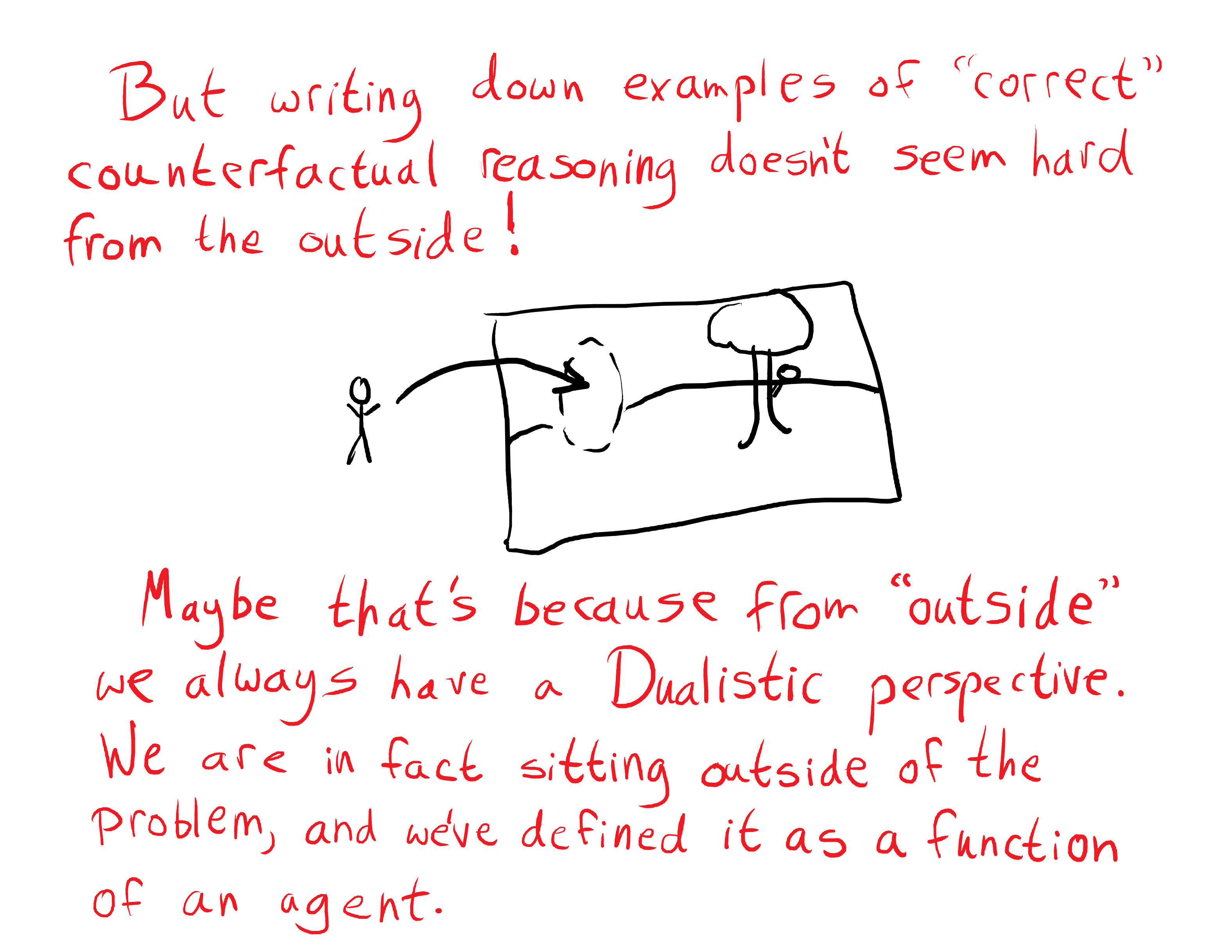

It was not until reading this that I really understood that I am in the habit of reasoning about myself as just a part of the environment.

Replies from: Davidmanheim↑ comment by Davidmanheim · 2018-11-04T11:19:06.072Z · LW(p) · GW(p)

The kicker is that we don't reason directly about ourselves as such, we use a simplified model of ourselves. And we're REALLY GOOD at using that model for causal reasoning, even when it is reflective, and involves multiple levels of self-reflection and counterfactuals - at least when we bother to try. (We try rarely because explicit modelling is cognitively demanding, and we usually use defaults / conditioned reasoning. Sometimes that's OK.)

Example: It is 10PM. A 5-page report is due in 12 hours, at 10AM.

Default: Go to sleep at 1AM, set alarm for 8AM. Result: Don't finish report tonight, have too little time to do so tomorrow.

Conditioned reasoning: Stay up to finish the report first. 5 hours of work, and stay up until 3AM. Result: Write a bad report, still feel exhausted the next day.

Counterfactual reasoning: I should nap / get some amount of sleep so that I am better able to concentrate, which will outweigh the lost time. I could set my alarm for any amount of time; what amount does my model of myself imply will lead to an optimal well-rested / sufficient time trade-off?

Self-reflection problem, second use of mini-self model: I'm worse at reasoning at 1AM than I am at 10PM. I should decide what to do now, instead of delaying until then. I think going to sleep at 12AM and waking at 3AM gives me enough rest and time to do a good job on the report.

Consider counterfactual and impact: How does this impact the rest of my week's schedule? 3 hours is locally optimal, but I will crash tomorrow and I have a test to study for the next day. Decide to work a bit, go to sleep at 12:30 and set alarm for 5:30AM. Finish the report, turn it in by 10AM, then nap another 2 hours before studying.

We built this model based on not only small samples of our own history, but learning from others, incorporating data about seeing other people's experiences. We don't consider staying up all night and then driving to hand in the report, because we realize exhausted driving is dangerous - because we heard stories of people doing so, and know that we would be similarly unsteady. Is a person going to explore and try different strategies by staying up all night and driving? If you die, you can't learn from the experience - so you have good ideas about what parts of the exploration space are safe to try. You might use Adderall because it's been tried before and is relatively safe, but you don't ingest arbitrary drugs to see if they help you think.

BUT an AI doesn't (at first) have that sample data to reason from, nor does a singleton have observation of other near-structurally identical AI systems and the impacts of their decisions, nor does it have a fundamental understanding about what is safe to explore.

comment by Ian Televan · 2021-07-08T23:34:19.065Z · LW(p) · GW(p)

I don't quite follow why the 5/10 example presents a problem.

Conditionals with false antecedents seem nonsensical from the perspective of natural language, but why is this a problem for the formal agent? Since the algorithm as presented doesn't actually try to maximize utility, everything seems to be alright. In particular, there are 4 valid assignments: , , ,

The algorithm doesn't try to select an assignment with largest , but rather just outputs if there's a valid assignment with , and otherwise. Only fulfills the condition, so it outputs . and also seem nonsensical because of false antecedents but with attached utility - would that be a problem too?

For this particular problem, you could get rid of assignments with nonsensical values by also considering an algorithm with reversed outputs and then taking the intersection of valid assignments, since only satisfies both algorithms.

Replies from: abramdemski↑ comment by abramdemski · 2021-07-14T16:57:28.740Z · LW(p) · GW(p)

Hmm. I'm not following. It seems like you follow the chain of reasoning and agree with the conclusion:

The algorithm doesn't try to select an assignment with largest , but rather just outputs if there's a valid assignment with , and otherwise. Only fulfills the condition, so it outputs .

This is exactly the point: it outputs 5. That's bad! But the agent as written will look perfectly reasonable to anyone who has not thought about the spurious proof problem. So, we want general tools to avoid this kind of thing. For the case of proof-based agents, we have a pretty good tool, namely MUDT (the strategy of looking for the highest-utility such proof rather than any such proof). (However, this falls prey to the Troll Bridge problem, which looks pretty bad.)

Conditionals with false antecedents seem nonsensical from the perspective of natural language, but why is this a problem for the formal agent?

More generally, the problem is that for formal agents, false antecedents cause nonsensical reasoning. EG, for the material conditional (the usual logical version of conditionals), everything is true when reasoning from a false antecedent. For Bayesian conditionals (the usual probabilistic version of conditionals), probability zero events don't even have conditionals (so you aren't allowed to ask what follows from them).
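Both failure modes can be seen in a short sketch. It assumes a world in which the agent in fact takes 5 and receives 5. The helper names `implies` and `conditional_probability` are illustrative.

```python
from fractions import Fraction


def implies(antecedent, consequent):
    """Material conditional: false only when the antecedent holds and the consequent fails."""
    return (not antecedent) or consequent


# Assumed actual world: the agent takes 5 and receives 5.
action, utility = 5, 5

claims = {
    "A=5 -> U=5": implies(action == 5, utility == 5),
    "A=10 -> U=0": implies(action == 10, utility == 0),
    "A=10 -> U=10": implies(action == 10, utility == 10),
}
print(claims)  # every entry is True, including the two incompatible claims about A=10


def conditional_probability(p_both, p_condition):
    """P(X | C) = P(X and C) / P(C), or None when P(C) = 0 and the ratio is undefined."""
    if p_condition == 0:
        return None
    return p_both / p_condition


print(conditional_probability(Fraction(0), Fraction(0)))  # None
```

With the antecedent "A=10" false, the material conditional accepts both "then U=0" and "then U=10", and the Bayesian conditional has nothing to say at all.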

Yet, we reason informally from false antecedents all the time, EG thinking about what would happen if

So, false antecedents cause greater problems for formal agents than for natural language.

For this particular problem, you could get rid of assignments with nonsensical values by also considering an algorithm with reversed outputs and then taking the intersection of valid assignments, since only satisfies both algorithms.

The problem is also "solved" if the agent thinks only about the environment, ignoring its knowledge about its own source code. So if the agent can form an agent-environment boundary (a "cartesian boundary") then the problem is already solved, no need to try reversed outputs.

The point here is to do decision theory without such a boundary. The agent just approaches problems with all of its knowledge, not differentiating between "itself" and "the environment".

Replies from: Ian Televan, TAG↑ comment by Ian Televan · 2021-07-15T20:22:54.830Z · LW(p) · GW(p)

While I agree that the algorithm might output 5, I don't share the intuition that it's something that wasn't 'supposed' to happen, so I'm not sure what problem it was meant to demonstrate. I thought of a few ways to interpret it, but I'm not sure which one, if any, was the intended interpretation:

a) The algorithm is defined to compute argmax, but it doesn't output argmax because of false antecedents.

- but I would say that it's not actually defined to compute argmax, therefore the fact that it doesn't output argmax is not a problem.

b) Regardless of the output, the algorithm uses reasoning from false antecedents, which seems nonsensical from the perspective of someone who uses intuitive conditionals, which impedes its reasoning.

- it may indeed seem nonsensical, but if 'seeming nonsensical' doesn't actually impede its ability to select actions with the highest utility (when it's actually defined to compute argmax), then I would say that it's also not a problem. Furthermore, wouldn't MUDT be perfectly satisfied with the tuple ? It also uses 'nonsensical' reasoning 'A()=5 => U()=0' but still outputs the action with the highest utility.

c) Even when the use of false antecedents doesn't impede its reasoning, the way it arrives at its conclusions is counterintuitive to humans, which means that we're more likely to make a catastrophic mistake when reasoning about how the agent reasons.

- Maybe? I don't have access to other people's intuitions, but when I read the example, I didn't have any intuitive feeling of what the algorithm would do, so instead I just calculated all assignments , eliminated all inconsistent ones and proceeded from there. And this issue wouldn't be unique to false antecedents, there are other perfectly valid pieces of logic that might nonetheless seem counterintuitive to humans, for example the puzzle with islanders and blue eyes.

Yet, we reason informally from false antecedents all the time, EG thinking about what would happen if

When I try to examine my own reasoning, I find that when I do so, I'm just selectively blind to certain details and so don't notice any problems. For example: suppose the environment calculates "U=10 if action = A; U=0 if action = B" and I, being a utility maximizer, am deciding between actions A and B. Then I might imagine something like "I chose A and got 10 utils", and "I chose B and got 0 utils" - ergo, I should choose A.

But actually, if I had thought deeper about the second case, I would also think "hm, because I'm determined to choose the action with highest reward I would not choose B. And yet I chose B. This is logically impossible! OH NO THIS TIMELINE IS INCONSISTENT!" - so I couldn't actually coherently reason about what could happen if I chose B. And yet, I would still be left with the only consistent timeline where I choose A, which I would promptly follow, and get my maximum of 10 utils.

The problem is also "solved" if the agent thinks only about the environment, ignoring its knowledge about its own source code.

The idea with reversing the outputs and taking the assignment that is valid for both versions of the algorithm seemed to me to be closer to the notion "but what would actually happen if you actually acted differently", i.e. avoiding seemingly nonsensical reasoning while preserving self-reflection. But I'm not sure when, if ever, this principle can be generalized.

Replies from: abramdemski↑ comment by abramdemski · 2021-07-16T23:13:14.187Z · LW(p) · GW(p)

While I agree that the algorithm might output 5, I don't share the intuition that it's something that wasn't 'supposed' to happen, so I'm not sure what problem it was meant to demonstrate.

OK, this makes sense to me. Instead of your (A) and (B), I would offer the following two useful interpretations:

1: From a design perspective, the algorithm chooses 5 when 10 is better. I'm not saying it has "computed argmax incorrectly" (as in your A); an agent design isn't supposed to compute argmax (argmax would be insufficient to solve this problem, because we're not given the problem in the format of a function from our actions to scores), but it is supposed to "do well". The usefulness of the argument rests on the weight of "someone might code an agent like this on accident, if they're not familiar with spurious proofs". Indeed, that's the origin of this code snippet -- something like this was seriously proposed at some point.

2: From a descriptive perspective, the code snippet is not a very good description of how humans would reason about a situation like this (for all the same reasons).

When I try to examine my own reasoning, I find that when I do so, I'm just selectively blind to certain details and so don't notice any problems. For example: suppose the environment calculates "U=10 if action = A; U=0 if action = B" and I, being a utility maximizer, am deciding between actions A and B. Then I might imagine something like "I chose A and got 10 utils", and "I chose B and got 0 utils" - ergo, I should choose A.

Right, this makes sense to me, and is an intuition which I think many people share. The problem, then, is to formalize how to be "selectively blind" in an appropriate way such that you reliably get good results.

↑ comment by TAG · 2021-07-14T20:14:13.959Z · LW(p) · GW(p)

More generally, the problem is that for formal agents, false antecedents cause nonsensical reasoning

No, it's contradictory assumptions. False but consistent assumptions are dual to consistent-and-true assumptions...so you can only infer a mutually consistent set of propositions from either.

To put it another way, a formal system has no way of knowing what would be true or false for reasons outside itself, so it has no way of reacting to a merely false statement. But a contradiction is definable within a formal system.

To put it yet another way... contradiction in, contradiction out.

Replies from: abramdemski↑ comment by abramdemski · 2021-07-16T23:00:07.363Z · LW(p) · GW(p)

Yep, agreed. I used the language "false antecedents" mainly because I was copying the language in the comment I replied to, but I really had in mind "demonstrably false antecedents".

comment by tremble · 2018-11-12T01:38:44.862Z · LW(p) · GW(p)

Thoughts on counterfactual reasoning

These examples of counterfactuals are presented as equivalent, but they seem meaningfully distinct:

What if the sun suddenly went out?

What if 2+2=3?

Specifically, they don't seem equally difficult for me to evaluate. I can easily imagine the sun going out, but I'm not even sure what it would mean if 2+2=3. It confuses me that these two different examples are presented as equivalent, because they seem to be instances of meaningfully distinct classes of something. I spent some time trying to characterize why the sun example is intuitively easy for me and the math example is intuitively difficult for me. I came up with some ideas, but I won't go into details yet because they seem like the obvious sorts of things that anyone who has read The Sequences (a.k.a., Rationality: A-Z) would have thought of. I strongly suspect there's prior work. It is also possible that I don't fully understand the problem yet.

Questions about counterfactual reasoning

The two counterfactual reasoning examples above (and others) are presented as equivalent, but they seem like they are not.

1. Is this an intentional simplification for the benefit of new readers?

2. If so, can someone point me to the prior work exploring the omitted nuances of counterfactuals? I don't want to re-invent the wheel.

3. If not, would exploration of the characteristics of different kinds of counterfactuals be a fruitful area of research?

comment by Jozdien · 2021-07-16T18:18:44.992Z · LW(p) · GW(p)

I think I'm missing something with the Löb's theorem example.

If can be proved under the theorem, then can't also be proved? What's the cause of the asymmetry that privileges taking $5 in all scenarios where you're allowed to search for proofs for a long time?

Replies from: abramdemski↑ comment by abramdemski · 2021-07-16T23:21:54.952Z · LW(p) · GW(p)

Agreed. The asymmetry needs to come from the source code for the agent.

In the simple version I gave, the asymmetry comes from the fact that the agent checks for a proof that x>y before checking for a proof that y>x. If this were reversed, then as you said, the Löbian reasoning would make the agent take the 10 instead of the 5.

In a less simple version, this could be implicit in the proof search procedure. For example, the agent could wait for any proof of the conclusion x>y or y>x, and make a decision based on whichever happened first. Then there would not be an obvious asymmetry. Yet, the proof search has to go in some order. So the agent design will introduce an asymmetry in one direction or the other. And when building theorem provers, you're not usually thinking about what influence the proof order might have on which theorems are actually true; you usually think of the proofs as this static thing which you're searching through. So it would be easy to mistakenly use a theorem prover which just so happens to favor 5 over 10 in the proof search.
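A toy sketch of the order-dependence described above. It does not model Löb's theorem or a real theorem prover: the proof search is replaced by a plain list of statements in enumeration order, and it is taken as an assumption that whichever comparison the search reaches first is one the prover would certify. The names `agent`, `FIVE_BETTER`, and `TEN_BETTER` are hypothetical.

```python
from typing import Iterable

# Hypothetical labels for the two decisive conclusions.
FIVE_BETTER = "U(take 5) > U(take 10)"
TEN_BETTER = "U(take 10) > U(take 5)"


def agent(proofs: Iterable[str]) -> int:
    """Act on the first decisive statement the proof search emits."""
    for statement in proofs:
        if statement == FIVE_BETTER:
            return 5
        if statement == TEN_BETTER:
            return 10
    # Assumed default when the search ends with nothing decisive.
    return 10


# Two searches over the same statements, differing only in order.
search_a = ["0 = 0", FIVE_BETTER, TEN_BETTER]
search_b = ["0 = 0", TEN_BETTER, FIVE_BETTER]

action_a = agent(search_a)  # first decisive statement favors 5
action_b = agent(search_b)  # first decisive statement favors 10
```

Nothing in `agent` mentions an ordering explicitly, yet the action is fixed entirely by which statement the enumeration happens to reach first.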

comment by Liam Donovan (liam-donovan) · 2019-12-09T11:30:53.169Z · LW(p) · GW(p)

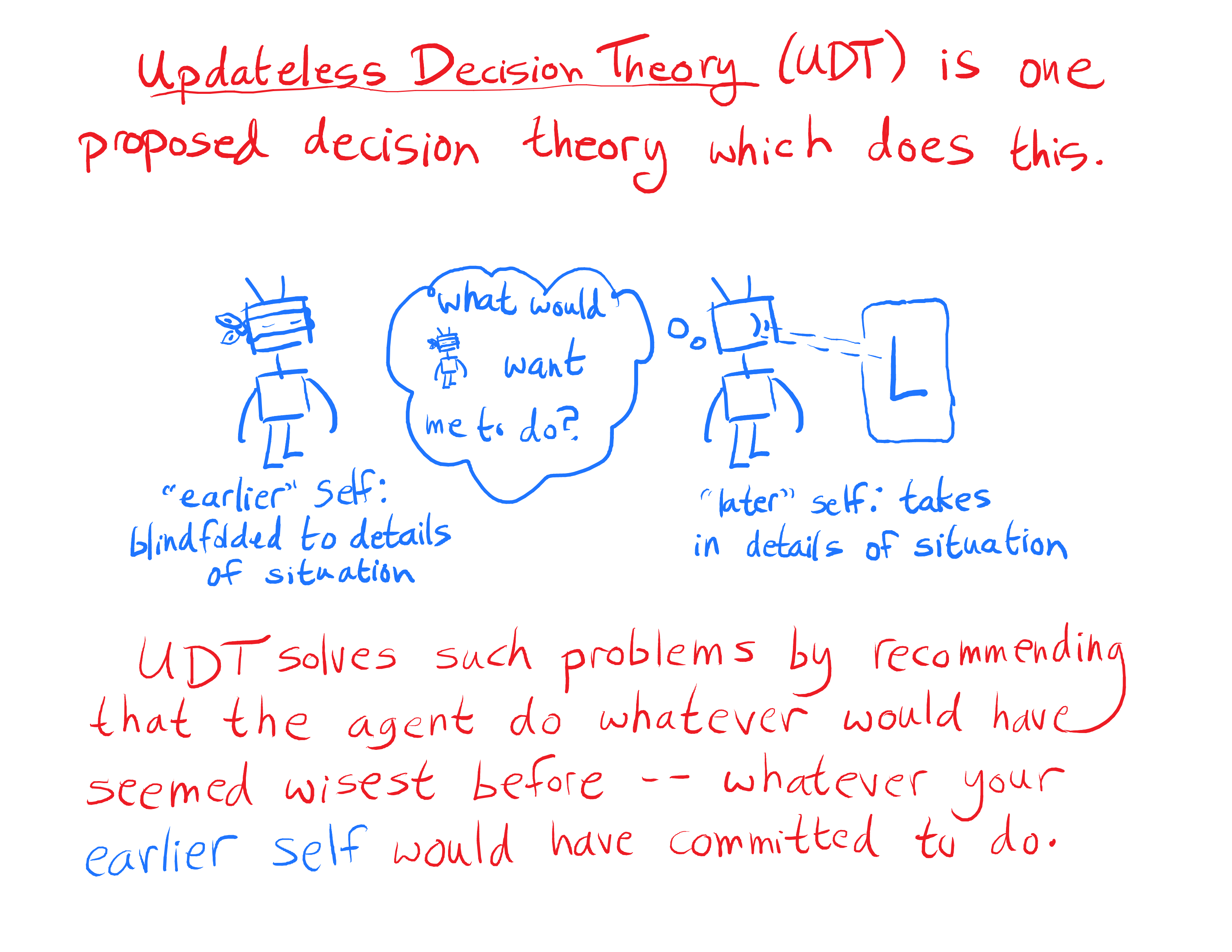

Why does being updateless require thinking through all possibilities in advance? Can you not make a general commitment to follow UDT, but wait until you actually face the decision problem to figure out which specific action UDT recommends taking?

Replies from: abramdemski↑ comment by abramdemski · 2019-12-19T19:07:09.337Z · LW(p) · GW(p)

Sure, but what computation do you then do, to figure out what UDT recommends? You have to have, written down, a specific prior which you evaluate everything with. That's the problem. As discussed in Embedded World Models, a Bayesian prior is not a very good object for an embedded agent's beliefs, due to realizability/grain-of-truth concerns; that is, specifically because a Bayesian prior needs to list all possibilities explicitly (to a greater degree than, e.g., logical induction).
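As a rough illustration of why an explicitly listed prior is limiting, here is a minimal sketch assuming a coin-flip setting that is not part of the original discussion. The prior lists only two hypotheses about the coin's bias; if the true bias is something else, no amount of updating can ever put weight on it. The function `update` and the specific numbers are hypothetical.

```python
from fractions import Fraction


def update(prior: dict, heads: bool) -> dict:
    """One step of Bayes' rule over an explicit list of coin biases."""
    unnormalized = {
        bias: p * (bias if heads else 1 - bias) for bias, p in prior.items()
    }
    total = sum(unnormalized.values())
    return {bias: p / total for bias, p in unnormalized.items()}


# The prior lists exactly two possibilities: bias 1/4 or bias 3/4.
prior = {Fraction(1, 4): Fraction(1, 2), Fraction(3, 4): Fraction(1, 2)}

# Data that looks like a fair coin, which the prior never listed.
posterior = prior
for flip in [True, False, True, False]:
    posterior = update(posterior, flip)
```

After these flips the posterior is still split between the two listed biases; the hypothesis "bias 1/2" cannot gain probability because it was never written down.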

comment by Douglas_Reay · 2019-09-24T03:27:12.593Z · LW(p) · GW(p)

I wonder how much an agent could achieve by thinking along the following lines:

Big Brad is a human-shaped robot who works as a lumberjack. One day his employer sends him into town on his motorbike carrying two chainsaws, to get them sharpened. Brad notices an unusual number of the humans around him suddenly crossing streets to keep their distance from him.

Maybe they don't like the smell of chainsaw oil? So he asks one rather slow pedestrian "Why are people keeping their distance?" to which the pedestrian replies "Well, what if you attacked us?"

Now in the pedestrian's mind, that's a reasonable response. If Big Brad did attack someone walking next to them, without notice, Brad would be able to cut them in half. To humans who expect large bike-riding people carrying potential weapons to be disproportionately likely to be violent without notice, being attacked by Brad seems a reasonable fear, worthy of expending a little effort to alter walking routes to allow running away if Brad is violent.

But Brad knows that Brad would never do such a thing. Initially, it might seem like asking Brad "What if 2 + 2 equalled 3?"

But if Brad can think about the problem in terms of what information is available to the various actors in the scenario, he can reframe the pedestrian's question as: "What if an agent that, given the information I have so far, is indistinguishable from you, were to attack us?"

If Brad is aware that random pedestrians in the street don't know Brad personally, to the level of being confident about Brad's internal rules and values, and he can hypothesise the existence of an alternative being, Brad' that a pedestrian might consider would plausibly exist and would have different internal rules and values to those of Brad yet otherwise appear identical, then Brad has a way forwards to think through the problem.

On the more general question of whether it would be useful for Brad to have the ability to ask himself "What if the universe were other than I think it is? What if magic works and I just don't know that yet? What if my self-knowledge isn't 100% reliable, because there are embedded commands in my own code that I'm currently being kept from being aware of by those same commands? Perhaps I should allocate a minute probability to the scenario that somewhere there exists a lightswitch that's magically connected to the sun and which, in defiance of known physics, can just turn it off and on?": with careful allocation of probabilities, that might avoid divide-by-zero problems, but I don't think it is a panacea. There are additional approaches to counterfactual thinking that may be more productive in some circumstances.

comment by Eigil Rischel (eigil-rischel) · 2019-08-31T19:34:40.270Z · LW(p) · GW(p)

I think I don't understand the Löb's theorem example.

If is provable, then , so it is true (because the statement about is vacuously true). Hence by Löb's theorem, it's provable, so we get .

If is provable, then it's true, for the dual reason. So by Löb, it's provable, so .

The broader point about being unable to reason yourself out of a bad decision if your prior for your own decisions doesn't contain a "grain of truth" makes sense, but it's not clear we can show that the agent in this example will definitely get stuck on the bad decision - if anything, the above argument seems to show that the system has to be inconsistent! If that's true, I would guess that the source of this inconsistency is assuming the agent has sufficient reflective capacity to prove "If I can prove , then . Which would suggest learning the lesson that it's hard for agents to reason about their own behaviour with logical consistency.

Replies from: Gurkenglas↑ comment by Gurkenglas · 2019-08-31T19:57:02.004Z · LW(p) · GW(p)

The agent has been constructed such that Provable("5 is the best possible action") implies that 5 is the best (only!) possible action. Then by Löb's theorem, 5 is the only possible action. It cannot also be simultaneously constructed such that Provable("10 is the best possible action") implies that 10 is the only possible action, because then it would also follow that 10 is the only possible action. That's not just our proof system being inconsistent, that's false!
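For reference, the standard statement of Löb's theorem that this argument leans on, writing $\Box P$ for "P is provable in the system" (this notation is an assumption, not taken from the thread):

```latex
% Löb's theorem: if the system proves "provability of P implies P",
% then the system proves P itself.
\text{If } \vdash \Box P \rightarrow P, \quad \text{then } \vdash P.
```

In the argument above, $P$ plays the role of "5 is the best possible action", and the agent's construction supplies the premise $\Box P \rightarrow P$.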

Replies from: eigil-rischel↑ comment by Eigil Rischel (eigil-rischel) · 2019-08-31T20:36:49.883Z · LW(p) · GW(p)

(There was a LaTeX error in my comment, which made it totally illegible. But I think you managed to resolve my confusion anyway).

I see! It's not provable that Provable() implies . It seems like it should be provable, but the obvious argument relies on the assumption that, if * is provable, then it's not also provable that - in other words, that the proof system is consistent! Which may be true, but is not provable.

The asymmetry between 5 and 10 is that, to choose 5, we only need a proof that 5 is optimal, but to choose 10, we need to not find a proof that 5 is optimal. Which seems easier than finding a proof that 10 is optimal, but is not provably easier.

comment by Abe Dillon (abe-dillon) · 2019-06-28T23:34:17.725Z · LW(p) · GW(p)

This tends to assume that we can detangle things enough to see outcomes as a function of our actions.

No. The assumption is that an agent has *agency* over some degrees of freedom of the environment. It's not even an assumption, really; it's part of the definition of an agent. What is an agent with no agency?

If the agent's actions have no influence on the state of the environment, then it can't drive the state of the environment to satisfy any objective. The whole point of building an internal model of the environment is to understand how the agent's actions influence the environment. In other words: "detangling things enough to see outcomes as functions of [the agent's] actions" isn't just an assumption, it's essential.

The only point I can see in writing the above sentence would be if you said that a function isn't, generally, enough to describe the relationship between an agent's actions and the outcome: that you generally need some higher-level construct like a Turing machine. That would be fair enough if it weren't for the fact that the theory you're comparing yours to is AIXI, which explicitly models the relationship between actions and outcomes via Turing machines.

AIXI represents the agent and the environment as separate units which interact over time through clearly defined I/O channels so that it can then choose actions maximizing reward.

Do you propose a model in which the relationship between the agent and the environment is undefined?

When the agent model is part of the environment model, it can be significantly less clear how to consider taking alternative actions.

Really? It seems you're applying magical thinking to the consequences of embedding one Turing machine within another. Why would its I/O or internal modeling change so drastically? If I use a virtual machine to run Windows within Linux, does that make the experience of using MS Paint fundamentally different than running Windows in a native boot?

...there can be other copies of the agent, or things very similar to the agent.

Depending on how you draw the boundary around "yourself", you might think you control the action of both copies or only your own.

How is that unclear? If the agent doesn't actually control the copies, then there's no reason to imagine it does. If it's trying to figure out how best to exercise its agency to satisfy its objective, then imagining it has any more agency than it actually does is silly. You don't need to wander into the philosophical no-man's-land of defining the "self". It's irrelevant. What are your degrees of freedom? How can you use them to satisfy your objective? At some point, the I/O channels *must be* well defined. It's not like a processor has an ambiguous number of pins. It's not like a human has an ambiguous number of motor neurons.

For all intents and purposes: the agent IS the degrees of freedom it controls. The agent can only change its state, which, being a subset of the environment's state, changes the environment in some way. You can't lift a box, you can only change the position of your arms. If that results in a box being lifted, good! Or maybe you can't change the position of those arms, you can only change the electric potential on some motor neurons; if that results in arms moving, good! Play that game long enough and, at some point, the set of actions you can do is finite and clearly defined.

Your five-or-ten problem is one of many that demonstrate the brittleness problem of logic-based systems operating in the real world. This is well known. People have all but abandoned logic-based systems for stochastic systems when dealing with real-world problems specifically because it's effectively impossible to make a robust logic-based system.

This is the crux of a lot of your discussion. When you talk about an agent "knowing" its own actions or the "correctness" of counterfactuals, you're talking about definitive results which a real-world agent would never have access to.

It's possible (though unlikely) for a cosmic ray to damage your circuits, in which case you could go right -- but you would then be insane.

If a rare, spontaneous occurrence causes you to go right, you must be insane? What? Is that really the only conclusion you could draw from that situation? If I take a photo and a cosmic ray causes one of the pixels to register white, do I need to throw my camera out because it might be "insane"?!

Maybe we can force exploration actions so that we learn what happens when we do things?

First of all, who is "we" in this case? Are we the agent or are we some outside system "forcing" the agent to explore?

Ideally, nobody would have to force the agent to explore its world. It would want to explore and experiment as an instrumental goal to lower uncertainty in its model of the world so that it can better pursue its objective.

A bad prior can think that exploring is dangerous

That's not a bad prior. Exploring *is* fundamentally dangerous. You're encountering the unknown. I'm not even sure if the risk/reward ratio of exploring is decidable. It's certainly a hard problem to determine when it's better to explore, and when it's too dangerous. Millions of the most sophisticated biological neural networks the planet Earth has to offer have grappled with the question for hundreds of years with no clear answer.

Forcing it to take exploratory actions doesn't teach it what the world would look like if it took those actions deliberately.

What? Again *who* is doing the "forcing" in this situation and how? Do you really want to tread into the other philosophical no-man's-land of free will? Why would the question of whether the agent really wanted to take an action have any bearing whatsoever on the result of that action? I'm so confused about what this sentence even means.

EDIT: It's also unclear to me the point of the discussion on counterfactuals. Counterfactuals are of dubious utility for short-term evaluation of outcomes. They become less useful the further you separate the action from the result in time. I could think, "damn! I should have taken an alternate route to work this morning!" which is arguably useful and may actually be wrong, but if I think, "damn, if Eric the Red hadn't sailed to the new world, Hitler would have never risen to power!" That's not only extremely questionable, but also what use would that pondering be even if it were correct?

It seems like you're saying an embedded agent can't enumerate the possible outcomes of its actions before taking them, so it can only do so in retrospect. In which case, why can't an embedded agent perform a pre-emptive tree search like any other agent? What's the point of counterfactuals?

Replies from: dxu↑ comment by dxu · 2019-06-29T00:35:19.341Z · LW(p) · GW(p)

At some point, the I/O channels *must be* well defined.

This statement is precisely what is being challenged--and for good reason: it's untrue. The reason it's untrue is because the concept of "I/O channels" does not exist within physics as we know it; the true laws of physics make no reference to inputs, outputs, or indeed any kind of agents at all. In reality, that which is considered a computer's "I/O channels" are simply arrangements of matter and energy, the same as everything else in our universe. There are no special XML tags attached to those configurations of matter and energy, marking them "input", "output", "processor", etc. Such a notion is unphysical.

Why might this distinction be important? It's important because an algorithm that is implemented on physically existing hardware can be physically disrupted. Any notion of agency which fails to account for this possibility--such as, for example, AIXI, which supposes that the only interaction it has with the rest of the universe is by exchanging bits of information via the input/output channels--will fail to consider the possibility that its own operation may be disrupted. A physical implementation of AIXI would have no regard for the safety of its hardware, since it has no means of representing the fact that the destruction of its hardware equates to its own destruction.

AIXI also fails on various decision problems that involve leaking information via a physical side channel that it doesn't consider part of its output; for example, it has no regard for the thermal emissions it may produce as a side effect of its computations. In the extreme case, AIXI is incapable of conceptualizing the possibility that an adversarial agent may be able to inspect its hardware, and hence "read its mind". This reflects a broader failure on AIXI's part: it is incapable of representing an entire class of hypotheses--namely, hypotheses that involve AIXI itself being modeled by other agents in the environment. This is, again, because AIXI is defined using a framework that makes it unphysical: the classical definition of AIXI is uncomputable, making it too "big" to be modeled by any (part) of the Turing machines in its hypothesis space. This applies even to computable formulations of AIXI, such as AIXI-tl: they have no way to represent the possibility of being simulated by others, because they assume they are too large to fit in the universe.

I'm not sure what exactly is so hard to understand about this, considering the original post conveyed all of these ideas fairly well. It may be worth considering the assumptions you're operating under--and in particular, making sure that the post itself does not violate those assumptions--before criticizing said post based on those assumptions.

Replies from: abe-dillon↑ comment by Abe Dillon (abe-dillon) · 2019-06-29T01:35:47.325Z · LW(p) · GW(p)

The reason it's untrue is because the concept of "I/O channels" does not exist within physics as we know it.

Yes. They most certainly do. The only truly consistent interpretation I know of current physics is information theoretic anyway, but I'm not interested in debating any of that. The fact is I'm communicating to you with physical I/O channels right now so I/O channels certainly exist in the real world.

the true laws of physics make no reference to inputs, outputs, or indeed any kind of agents at all.

Agents are emergent phenomena. They don't exist on the level of particles and waves. The concept is an abstraction.

"I/O channels" are simply arrangements of matter and energy, the same as everything else in our universe. There are no special XML tags attached to those configurations of matter and energy, marking them "input", "output", "processor", etc. Such a notion is unphysical.

An I/O channel doesn't imply modern computer technology. It just means information is collected from or imprinted upon the environment. It could be ant pheromones, it could be smoke signals; its physical implementation is secondary to the abstract concept of sending and receiving information of some kind. You're not seeing the forest for the trees. Information most certainly does exist.

Why might this distinction be important? It's important because an algorithm that is implemented on physically existing hardware can be physically disrupted. Any notion of agency which fails to account for this possibility--such as, for example, AIXI, which supposes that the only interaction it has with the rest of the universe is by exchanging bits of information via the input/output channels--will fail to consider the possibility that its own operation may be disrupted.

I've explained in previous posts that AIXI is a special case of AIXI_lt. AIXI_lt can be conceived of in an embedded context, in which case its model of the world would include a model of itself which is subject to any sort of environmental disturbance.

To some extent, an agent must trust its own operation to be correct, because you quickly run into infinite regression if the agent is modeling all the possible ways that it could be malfunctioning. What if the malfunction affects the way it models the possible ways it could malfunction? It should model all the ways a malfunction could disrupt how it models all the ways it could malfunction, right? It's like saying "well the agent could malfunction, so it should be aware that it can malfunction so that it never malfunctions". If the thing malfunctions, it malfunctions, it's as simple as that.

Aside from that, AIXI is meant to be a purely mathematical formalization, not a physical implementation. It's an abstraction by design. It's meant to be used as a mathematical tool for understanding intelligence.

AIXI also fails on various decision problems that involve leaking information via a physical side channel that it doesn't consider part of its output; for example, it has no regard for the thermal emissions it may produce as a side effect of its computations.

Do you consider how the 30 Watts leaking out of your head might affect your plans every day? I mean, it might cause a typhoon in Timbuktu! If you don't consider how the waste heat produced by your mental processes affects your environment while making long or short-term plans, you must not be a real intelligent agent...

In the extreme case, AIXI is incapable of conceptualizing the possibility that an adversarial agent may be able to inspect its hardware, and hence "read its mind".

AIXI can't play tic-tac-toe with itself because that would mean it would have to model itself as part of the environment which it can't do. Yes, I know there are fundamental problems with AIXI...

This is, again, because AIXI is defined using a framework that makes it unphysical

No. It's fine to formalize something mathematically. People do it all the time. Math is a perfectly valid tool to investigate phenomena. The problem with AIXI proper is that it's limited to a context in which the agent and environment are independent entities. There are actually problems where that is a decent approximation, but it would be better to have a more general formulation, like AIXI_lt, that can be applied to contexts in which an agent is embedded in its environment.

This applies even to computable formulations of AIXI, such as AIXI-tl: they have no way to represent the possibility of being simulated by others, because they assume they are too large to fit in the universe.

That's simply not true.

I'm not sure what exactly is so hard to understand about this, considering the original post conveyed all of these ideas fairly well. It may be worth considering the assumptions you're operating under--and in particular, making sure that the post itself does not violate those assumptions--before criticizing said post based on those assumptions.

I didn't make any assumptions. I said what I believe to be correct.

I'd love to hear you or the author explain how an agent is supposed to make decisions about what to do in an environment if its agency is completely undefined.

I'd also love to hear your thoughts on the relationship between math, science, and the real world if you think comparing a physical implementation to a mathematical formalization is any more fruitful than comparing apples to oranges.

Did you know that engineers use the "ideal gas law" every day to solve real-world problems even though they know that no real-world gas actually follows the "ideal gas law"?! You should go tell them that they're doing it wrong!

Replies from: dxu↑ comment by dxu · 2019-06-29T04:29:38.647Z · LW(p) · GW(p)

The concept is an abstraction.

Yes, it is. The fact that it is an abstraction is precisely why it breaks down under certain circumstances.

An I/O channel doesn't imply modern computer technology. It just means information is collected from or imprinted upon the environment. It could be ant pheromones, it could be smoke signals; its physical implementation is secondary to the abstract concept of sending and receiving information of some kind. You're not seeing the forest for the trees. Information most certainly does exist.

The claim is not that "information" does not exist. The claim is that input/output channels are in fact an abstraction over more fundamental physical configurations. Nothing you wrote contradicts this, so the fact that you seem to think what I wrote was somehow incorrect is puzzling.

I've explained in previous posts that AIXI is a special case of AIXI_lt. AIXI_lt can be conceived of in an embedded context,

Yes.

in which case its model of the world would include a model of itself which is subject to any sort of environmental disturbance

No. AIXI-tl explicitly does not model itself or seek to identify itself with any part of the Turing machines in its hypothesis space. The very concept of self-modeling is entirely absent from AIXI's definition, and AIXI-tl, being a variant of AIXI, does not include said concept either.

To some extent, an agent must trust its own operation to be correct, because you quickly run into infinite regression if the agent is modeling all the possible that it could be malfunctioning. What if the malfunction effects the way it models the possible ways it could malfunction? It should model all the ways a malfunction could disrupt how it models all the ways it could malfunction, right? It's like saying "well the agent could malfunction, so it should be aware that it can malfunction so that it never malfunctions". If the thing malfunctions, it malfunctions, it's as simple as that.

*This is correct, so far as it goes, but what you neglect to mention is that AIXI makes no attempt to preserve its own hardware. It's not just a matter of "malfunctioning"; humans can "malfunction" as well. However, the difference between humans and AIXI is that we understand what it means to die, and go out of our way to make sure our bodies are not put in undue danger. Meanwhile, AIXI will happily allow its hardware to be destroyed in exchange for the tiniest increase in reward. I don't think I'm being unfair when I suggest that this behavior is extremely unnatural, and is not the kind of thing most people intuitively have in mind when they talk about "intelligence".

Aside from that, AIXI is meant to be a purely mathematical formalization, not a physical implementation. It's an abstraction by design. It's meant to be used as a mathematical tool for understanding intelligence.

*Abstractions are useful for their intended purpose, nothing more. AIXI was formulated as an attempt to describe an extremely powerful agent, perhaps the most powerful agent possible, and it serves that purpose admirably so long as we restrict analysis to problems in which the agent and the environment can be cleanly separated. As soon as that restriction is removed, however, it's obvious that the AIXI formalism fails to capture various intuitively desirable behaviors (e.g. self-preservation, as discussed above). As a tool for reasoning about agents in the real world, therefore, AIXI is of limited usefulness. I'm not sure why you find this idea objectionable; surely you understand that all abstractions have their limits?

Do you consider how the 30 Watts leaking out of your head might affect your plans every day? I mean, it might cause a typhoon in Timbuktu! If you don't consider how the waste heat produced by your mental processes affects your environment while making long- or short-term plans, you must not be a real intelligent agent...

Indeed, you are correct that waste heat is not much of a factor when it comes to humans. However, that does not mean that the same holds true for advanced agents running on powerful hardware, especially if such agents are interacting with each other; who knows what can be deduced from various side outputs, if a superintelligence is doing the deducing? Regardless of the answer, however, one thing is clear: AIXI does not care.

This seems to address the majority of your points, and the last few paragraphs of your comment seem mainly to be reiterating/elaborating on those points. As such, I'll refrain from replying in detail to everything else, in order not to make this comment longer than it already is. If you respond to me, you needn't feel obligated to reply to every individual point I made, either. I marked what I view as the most important points of disagreement with an asterisk*, so if you're short on time, feel free to respond only to those.

comment by rmoehn · 2019-06-14T03:31:33.846Z · LW(p) · GW(p)

In the alternative algorithm for the five-and-ten problem, why should we use the first proof that we find? How about this algorithm:

A2 :=

    Spend some time t searching for proofs of sentences of the form
        "A2() = a → U() = x"
    for a ∈ {5, 10}, x ∈ {0, 5, 10}.

    Set x* := −∞.

    For each found proof and corresponding pair (a, x):
        if x > x*:
            a* := a
            x* := x

    Return a*

If this one searches long enough (depending on how complicated U is), it will return 10, even if the non-spurious proofs are longer than the spurious ones.
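
As a rough illustration, the selection step of A2 might be sketched in Python as follows. This is only a sketch under assumptions: the proof search itself is not implemented, and `found_proofs` is a hypothetical stand-in for whatever pairs (a, x) a time-bounded prover happened to return. It also assumes the intended return value is the action a*, with x* starting below every possible utility.

```python
from typing import Iterable, Optional, Tuple

ACTIONS = (5, 10)        # a ∈ {5, 10}
UTILITIES = (0, 5, 10)   # x ∈ {0, 5, 10}


def a2(found_proofs: Iterable[Tuple[int, int]]) -> Optional[int]:
    """Pick the action a* whose proved utility x* is largest.

    Each pair (a, x) in `found_proofs` means that a proof of
    "A2() = a -> U() = x" was found within the time budget.
    The prover is assumed, not implemented here.
    """
    best_action: Optional[int] = None
    best_utility = float("-inf")  # x* starts below every possible utility
    for action, utility in found_proofs:
        if action not in ACTIONS or utility not in UTILITIES:
            continue  # skip sentences that are not of the stated form
        if utility > best_utility:
            best_action, best_utility = action, utility
    return best_action  # None if no proof was found in time


# Hypothetical search output: shorter proofs turn up first,
# and the proof for (10, 10) only turns up last.
found = [(10, 0), (5, 5), (10, 10)]
print(a2(found))  # 10
```

Because the loop keeps the maximum over all found proofs rather than stopping at the first one, the order in which proofs are found does not matter. The result still depends entirely on which pairs the search returns within time t.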

Replies from: rmoehn