How minimal is our intelligence?

post by Douglas_Reay · 2012-11-25T23:34:06.733Z · LW · GW · Legacy · 214 comments

Contents

The First City
From Village to City
Ice Ages
Brains, Genes and Calories
Summary
Comment Navigation Aide

Gwern suggested that, if it were possible for civilization to have developed when our species had a lower IQ, then we'd still be dealing with the same problems, but we'd have a lower IQ with which to tackle them. Or, to put it another way, it is unsurprising that living in a civilization has posed problems that our species finds difficult to tackle, because if we were capable of solving such problems easily, we'd probably also have been capable of developing civilization earlier than we did.

How true is that?

In this post I plan to look in detail at the origins of civilization with an eye to considering how much the timing of it did depend directly upon the IQ of our species, rather than upon other factors.

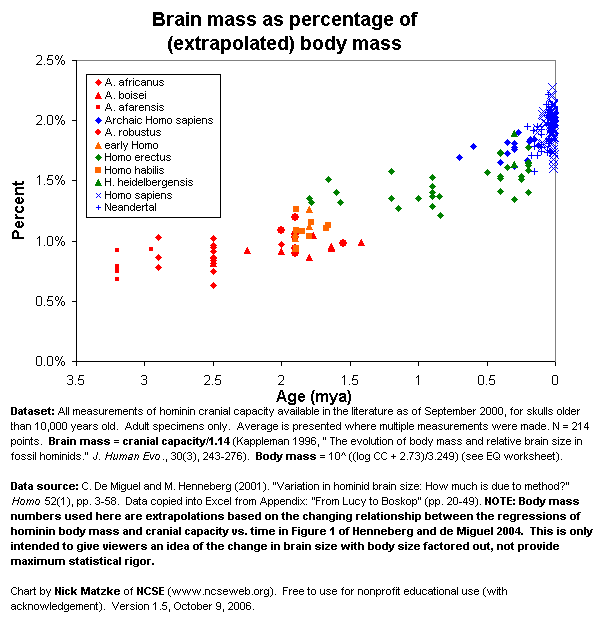

Although we don't have precise IQ test numbers for our immediate ancestral species, the fossil record is good enough to give us a clear idea of how brain size has changed over time:

and we do have archaeological evidence of approximately when various technologies (such as pictograms, or using fire to cook meat) became common.

The First City

About 6,000 years ago (4000 BCE), Ur was a thriving trading village on the flood plain near the mouth of the river Euphrates in what is now called southern Iraq and what historians call Sumer.

By 3000 BCE it was the heart of a city-state with a core built-up populated area covering 37 acres, and would go on over the following thousand years to lead the Sumerian empire, raise a great brick Ziggurat to its patron moon god, and become the largest city in the world (65,000 people concentrated in 54 acres).

It was eventually doomed by desertification and soil salination, caused by its own success (over-grazing and land clearing) but, by then, cities had spread throughout the fertile crescent of rivers at the intersection of the European, African and Asian land masses.

Ur may not have been the first city, but it was the first one we know of that wasn't part of a false dawn - one whose culture and technologies did demonstrably spread to other areas. It was the flashpoint.

We don't know for certain what it was about the culture surrounding the dawn of cities that made that particular combination of trade, writing, specialisation, hierarchy and religion communicable, when similar cultures from previous false dawns failed to spread. We can trace each of those elements to earlier sources; none of them was original to Ur, so perhaps it was a case of a critical mass achieving a self-sustaining reaction.

What we can look at is why the conditions that allow a village to become a city large enough for such a critical mass of developments to accumulate occurred at that time and place.

From Village to City

Motivation aside, the chief problem with sustaining large numbers of people together in a small area over several generations, keeping them healthy enough for the population to grow without continual immigration, is ensuring access to a scalable, renewable, predictable source of calories.

To be predictable means surviving famine years, which requires crops that can be stored for several years, such as grasses (wheat, barley and millet) with large seeds, and good storage facilities to store them in. It also means surviving pestilence, which requires having a variety of such crops. To be scalable and renewable means supplying water and nutrients to those crops on an ongoing basis, which requires irrigation and fertiliser from domesticated animals (if you don't have handy regular floods).

Having large mammals available to domesticate, which can provide fertiliser and traction (pulling ploughs and harrows), certainly makes things easier, but doesn't seem to have been a large factor in the timing of the rise of civilisation, or particularly dependent upon the IQ of the human species. Research suggests that domestication may have been driven as much by the animals' own behaviour as by human intention, with those animals daring to approach humans more closely getting first choice of discarded food.

Re-planting seeds to ensure plants to gather in following years, leading to low-nutrition grasses adapting into grains with high protein concentrations in the seeds, does seem to be a mainly intentional human activity, in that we can trace most of the gain in size of such plant species' seeds to locations where humans have transitioned from the palaeolithic hunter-gatherer culture (about 2.5 million years ago, to about 10,000 years ago) to the neolithic agricultural culture (about 10,000 years ago, onwards).

Good grain storage seems to have developed incrementally starting with crude stone silo pit designs in 9500 BCE, and progressing by 6000 BCE to customised buildings with raised floors and sealed ceramic containers which could store 80 tons of wheat in good condition for 4 years or more. (Earthenware ceramics date to 25,000 BCE and earlier, though the potter's wheel, useful for mass production of regular storage vessels, does date to the Ubaid period.)

The main key to the timing of the transition from village to city seems to have been not human technology but the confluence of climate and biology. Jared Diamond points the finger at the geography of the region: the fertile crescent farmers had access to a wider variety of grains than anywhere else in the world because that area links and has access to the species of three major land masses. The Mediterranean climate has a long dry season with a short period of rain, which made it ideal for growing grains (which are much easier to store for several years than, for instance, bananas). And everything kicked off when the climate stabilised after the most recent ice age ended about 12,000 years ago.

Ice Ages

Strictly speaking, we're actually talking about the end of a "glacial period" rather than the end of an entire "ice age". The timeline goes:

200,000 years ago - 130,000 years ago : glacial period

130,000 years ago - 110,000 years ago : interglacial period

110,000 years ago - 12,000 years ago : glacial period

12,000 years ago - present : interglacial period

So the question now is, why didn't humanity spawn civilisation in the fertile crescent 130,000 years ago, during the last interglacial period? Why did it happen in this one? Did we get significantly brighter in the meantime?

It isn't, on the face of it, an implausible idea. 100,000 years is long enough for evolutionary change to happen, and maybe inventing pottery or becoming farmers did take more brain power than humanity had back then. Or, if not IQ, perhaps it was some other mental change like attention span, or the capacity to obey written laws, live as a specialist in a hierarchy, or similar.

But there's no evidence that this is the case, nor is there a need to hypothesise it because there is at least one genetic change we do know about during that time period, that is by itself sufficient to explain the lack of civilisation 130,000 years ago. And it has nothing to do with the brain.

Brains, Genes and Calories

Using the San Bushpeople as a guide to the palaeolithic diet, hunter-gatherer culture was able to support an average population density of one person per acre. Not that they ate badly, as individuals. Indeed, they seem to have done better than the early Neolithic farmers. But they had to be free to wander to follow nomadic food sources, and they were limited by access to food that the human body could use to create docosahexaenoic acid, which is a fatty acid required for human brain development. Originally humans got this from fish living in the lakes and rivers of central Africa. However, about 80,000 years ago, we developed a gene that let us synthesise the same acid from other sources, freeing humanity to migrate away from the wet areas, past the dry northern part, and out into the fertile crescent.

But there is a link between diet and brain. Although the human brain represents only 2% of the body weight, it receives 15% of the cardiac output, 20% of total body oxygen consumption, and 25% of total body glucose utilization. Brains are expensive, in terms of calories consumed. Although brain size or brain activity that uses up glucose is not linearly related to individual IQ, they are linked on a species level.
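The percentages quoted above can be turned into a rough per-unit-mass comparison. The sketch below is only back-of-the-envelope arithmetic on the four figures from the paragraph; the helper name `relative_intensity` is a label introduced here for illustration and does not come from any source.

```python
# Shares quoted in the text: the brain's fraction of body weight and of
# three whole-body resource budgets.
BRAIN_MASS_SHARE = 0.02
BRAIN_RESOURCE_SHARES = {
    "cardiac output": 0.15,
    "oxygen consumption": 0.20,
    "glucose utilization": 0.25,
}


def relative_intensity(resource_share: float, mass_share: float) -> float:
    """Resource use per unit mass, relative to the body-wide average.

    Hypothetical helper for illustration: a value of 1.0 would mean the
    tissue uses exactly its 'fair share' for its weight.
    """
    return resource_share / mass_share


intensities = {
    name: relative_intensity(share, BRAIN_MASS_SHARE)
    for name, share in BRAIN_RESOURCE_SHARES.items()
}

for name, ratio in intensities.items():
    print(f"{name}: about {ratio:.1f}x the body-wide average per unit mass")
```

On these figures the brain draws roughly 7.5 to 12.5 times the body-wide average per unit of mass, which is the sense in which brains are expensive.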

IQ is polygenic, meaning that many different genes are relevant to a person's potential maximum IQ. (Note: there are many non-genetic factors that may prevent an individual reaching their potential.) Algernon's Law suggests that genes affecting IQ that have multiple alleles still common in the human population are likely to have a cost associated with the alleles tending to increase IQ; otherwise they'd have displaced the competing alleles. An animal species that develops the capability to grow a fur coat in response to cold weather is more advanced than one whose genes strictly determine that it will have a thick fur coat at all times, whether the weather is cold or hot. In the same way, the polygenic nature of human IQ gives human populations the ability to adapt and react on the time scale of just a few generations, increasing or decreasing the average IQ of the population as the environment changes to reduce or increase the penalties of particular trade-offs for particular alleles contributing to IQ. In particular, if the trade-off for some of those alleles is increased energy consumption and we look at a population of humans moving from an environment where calories are the bottleneck on how many offspring can be produced and survive, to an environment where calories are more easily available, then we might expect to see something similar to the Flynn effect.
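The trade-off argument can be made concrete with a toy model. The sketch below is an illustration under strong assumptions, not a model taken from the post: a single haploid locus, one "expensive" allele with a fixed fitness benefit and a calorie-dependent fitness cost, and made-up parameter values. All names (`next_frequency`, `simulate`, `benefit`, `cost`) are hypothetical.

```python
def next_frequency(p: float, benefit: float, cost: float) -> float:
    """One generation of haploid selection at a single locus.

    p        -- current frequency of the 'expensive' allele
    benefit  -- assumed fitness gain from carrying the allele
    cost     -- assumed fitness penalty (e.g. extra calories needed)
    The alternative allele has baseline fitness 1.0.
    """
    w_expensive = 1.0 + benefit - cost
    mean_fitness = p * w_expensive + (1.0 - p) * 1.0
    return p * w_expensive / mean_fitness


def simulate(p0: float, benefit: float, cost: float, generations: int) -> float:
    """Iterate the selection step and return the final allele frequency."""
    p = p0
    for _ in range(generations):
        p = next_frequency(p, benefit, cost)
    return p


# Illustrative, made-up numbers: the benefit is the same in both
# environments; only the calorie cost differs.
scarce = simulate(p0=0.5, benefit=0.02, cost=0.05, generations=20)
plentiful = simulate(p0=0.5, benefit=0.02, cost=0.01, generations=20)

print(f"calories scarce:    {scarce:.3f}")
print(f"calories plentiful: {plentiful:.3f}")
```

With these assumed values the allele's frequency falls over 20 generations when its cost outweighs its benefit and rises when it does not, which is the direction of change the paragraph describes. A real polygenic trait would involve many loci with small effects, so this shows only the sign of the effect, not its size.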

Summary

There is no cause to suppose that, even if the human genome 100,000 years ago had contained the full set of IQ-related alleles present in our genome today, humans would have developed civilisation much sooner.

Comment Navigation Aide

link - DuncanS - animal vs human intelligence

link - DuncanS - brain size & brain efficiency

link - JaySwartz - adaptability vs intelligence

link - RichardKennaway - does more intelligence tend to bring more societal happiness?

link - mrglwrf - Ur vs Uruk

link - NancyLebovitz - does decreased variance of intelligence tend to bring more societal happiness?

link - fubarobfusco - victors writing history

link -- consequentialist treatment of library burning

link -- the average net contribution to society of people working in academia

link - John_Maxwell_IV - independent development of civilisation in the Americas

link - shminux - How much of our IQ is dependent upon Docosahexaenoic acid?

link - army1987 - implications for the Great Filter

link - Vladimir_Nesov - genome vs expressed IQ

link - Vladimir_Nesov - Rhetorical nitpick

link - Vaniver - IQ & non-processor-speed components of problem solving

link - JoshuaZ - breakthroughs don't tend to require geniuses in order to be made

link - Desrtopa - cultural factors

214 comments


comment by fubarobfusco · 2012-11-20T08:14:02.888Z · LW(p) · GW(p)

Ur may not have been the first city, but it was the first one we know of that wasn't part of a false dawn - one whose culture and technologies did demonstrably spread to other areas. It was the flashpoint.

A contrary view — and I'm stating this deliberately rather strongly to make the point vivid:

"False dawn" is a retrospective view; which is to say an anachronistic one; which is to say a mythical one. And myths are written by the victors.

It's true that we perceive more continuity from Ur to today's civilization than from Xyz (some other ancient "dawn of civilization" point) to today. But why? Surely in part because the Sumerians and their Akkadian and Babylonian successors were good at scattering their enemies, killing their scribes, destroying their records, and stealing credit for their innovations. Just as each new civilization claimed that their god had created the world and invented morality, each claimed that their clever forefather had invented agriculture, writing, and tactics. If the Xyzzites had won, they would have done the same.

What's the evidence? Just that that's how civilizations — particularly religious empires — have generally behaved since then. The Hebrews, Catholics, and Muslims, for instance, were all at one time or another pretty big on wiping out their rivals' history and making them out to be barbaric, demonic, subhuman assholes — when they weren't just mass-murdering them. So our prior for the behavior of the Sumerians should be that they were unremarkable in this regard; they did the same wiping-out of rivals' records that the conquistadors and the Taliban did.

Today we have anti-censorship memes; the idea that anyone who would burn books is a villain and an enemy of everyone. But we also have the idea that mass censorship and extirpation of history is "Orwellian" — as if it had been invented in the '40s! This is backwards; it's anti-censorship that is the new, weird idea. Censorship is the normal behavior of normal rulers and normal priests throughout normal history.

Damnatio memoriae, censorship, burning the libraries (of Alexandria or the Yucatán), forcible conversion & assimilation — or just mass murder — are effective ways to make the other guy's civilization into a "false dawn". Since civilizations prior to widespread literacy (and many after it) routinely destroyed the records and lore of their rivals, we should expect that the first X that we have records of is quite certainly not the first X that existed, especially if its lore makes a big deal of claiming that it is.

Put another way — quite a lot of history is really a species of creationism, misrepresenting a selective process as a creative one. So we should not look to "the first city" for unique founding properties of civilization, since it wasn't the first and didn't have any; it was just the conquering power that happened to end up on top.

Replies from: Douglas_Reay, army1987, None

↑ comment by Douglas_Reay · 2012-11-20T09:50:55.050Z · LW(p) · GW(p)

Thus my caveat "we know of".

However, while it would be quite possible for a victor to erase written mention of a rival, it is harder to erase beyond all archaeological recovery the signs of a major city that's been stable and populated for a thousand years or more. For instance, if we look at Jericho, which was inhabited earlier than Ur was, we don't see archaeological evidence of it becoming a major city until much later than Ur (see link and link).

If there was a city large enough and long-lived enough, around before Ur, that passed on to Ur the bundle of things like writing and hierarchy that we know Ur passed on to others, then I'm unaware of it, and the evidence has been surprisingly thoroughly erased (which isn't impossible, but neither is it a certainty that such a thing happened).

See also the comment about Uruk. There were a number of cities in Sumer close together that would have swapped ideas. But the things said about calories and types of grain apply to all of them.

↑ comment by A1987dM (army1987) · 2012-11-20T19:35:50.471Z · LW(p) · GW(p)

burning the libraries (of Alexandria

Whaaat? Did people do that on purpose? What the hell is wrong with my species?

Replies from: Nornagest, Salemicus, thomblake

↑ comment by Nornagest · 2012-11-20T19:57:30.833Z · LW(p) · GW(p)

It's not entirely clear. Wikipedia lists four possible causes of or contributors to the Library of Alexandria's destruction; all were connected to changes in government or religion, but only two (one connected to Christian sources, the other to Muslim) appear deliberate. Both of them seem somewhat dubious, though.

The destruction of Central American literature is a more straightforward case. Bishop Diego de Landa certainly ordered the destruction of Mayan codices where found, of which only a few survived.

Replies from: fubarobfusco

↑ comment by fubarobfusco · 2012-11-20T21:33:36.406Z · LW(p) · GW(p)

Wikipedia lists four possible causes of or contributors to the Library of Alexandria's destruction; all were connected to changes in government or religion, but only two (one connected to Christian sources, the other to Muslim) appear deliberate.

The Library also wasn't one building, and it had some time to recover between one attack and the next. (As an analogy: Burning down some, or even most, of the buildings of a modern university wouldn't necessarily lead to the institution closing up shop.)

I'd been thinking of the 391 CE one, though, which I'd thought was widely understood to be an attack against pagan sites of learning. Updating in progress.

The destruction of Central American literature is a more straightforward case.

It's worth noting that there were people on the Spanish "team" who regretted that decision and spoke out against it, most famously Bartolomé de las Casas.

↑ comment by Salemicus · 2012-11-20T21:01:50.347Z · LW(p) · GW(p)

It's all consequentialism around here... until someone does something to lower the social standing of academia.

Replies from: army1987

↑ comment by A1987dM (army1987) · 2012-11-20T21:30:27.900Z · LW(p) · GW(p)

What?

Replies from: Salemicus

↑ comment by Salemicus · 2012-11-20T22:06:50.863Z · LW(p) · GW(p)

A consequentialist would ask, with an open mind, whether burning the libraries led to good or bad consequences. A virtue ethicist would express disgust at the profanity of burning books. Your comment closely resembles the latter, whereas most discussion here on other topics tries to approximate the former.

I think it is no coincidence that this switch occurs in this context. Oh no, some dusty old tomes got destroyed! Compared to other events of the time, piddling for human "utility." But burning books lowers the status of academics, which is why it is considered (in Haidt-ian terms) a taboo by some - including, I would suggest, most on this site.

Replies from: JoshuaZ, army1987, FluffyC, Bugmaster, Peterdjones

↑ comment by JoshuaZ · 2012-11-21T00:58:06.405Z · LW(p) · GW(p)

I think it is no coincidence that this switch occurs in this context. Oh no, some dusty old tomes got destroyed! Compared to other events of the time, piddling for human "utility." But burning books lowers the status of academics, which is why it is considered (in Haidt-ian terms) a taboo by some - including, I would suggest, most on this site.

We have good reason to think that the missing volumes of Diophantus were at Alexandria. Much of what Diophantus did was centuries before his time. If people in the 1500s and 1600s had complete access to his and other Greek mathematicians' work, math would have likely progressed at a much faster pace, especially in number theory.

We also have reason to think that Alexandria contained the now-lost Greek astronomical records, which likely recorded comets and possibly also historical nova observations. While we have some nova and supernova observations from slightly later (primarily thanks to Chinese and Japanese records), the Greeks were doing astronomy well before. This sort of thing isn't just an idle curiosity: understanding the timing of supernovae connects to understanding the most basic aspects of our universe. The chemical elements necessary for life are created and spread by supernovae. Understanding the exact ratios, how common supernovae are, and how supernovae spread out, among other issues, is important to understanding very important questions like how common life is, which is directly relevant to the Great Filter. We do have a lot of supernova observations in the last few years but historical examples are few and far between.

Compared to other events of the time, piddling for human "utility."

On the contrary. Kill a few people or make them suffer and it has little direct impact beyond a few years in the future. Destroying knowledge has an impact that resonates down for far longer.

But burning books lowers the status of academics, which is why it is considered (in Haidt-ian terms) a taboo by some - including, I would suggest, most on this site.

This is an interesting argument, and I find it unfortunate that you've been downvoted. The hypothesis is certainly interesting. But it may also be taboo for another reason: in many historical cases, book burning has been a precursor to killing people. This is a cliche, but it is a cliche that happens to have historical examples behind it. Another consideration is that a high status of academics is arguably quite a good thing from a consequentialist perspective. People like Norman Borlaug, Louis Pasteur, and Alvin Roth have done more lasting good for humanity than almost anyone else. Academics are the main people who have any chance of having a substantial impact on human utility beyond their own lifespans (the only other groups are people who fund academics or people like Bill Gates who give funding to implement academic discoveries on a large scale). So even if it is purely an issue of status and taboo, there's a decent argument that those are taboos which are advantageous to humanity.

Replies from: Salemicus

↑ comment by Salemicus · 2012-11-21T19:18:12.883Z · LW(p) · GW(p)

Number theory might have progressed faster... we might better understand the “Great Filter”

Isn’t this kind of thing archetypal of knowledge that in no way contributes to human welfare?

In many historical cases, book burning has been a precursor to killing people.

Perhaps, but note that this wasn’t a precursor to killing people; people were being widely killed regardless. But the modern attention is not on the rape, murder, pillage, etc... it’s on the book-burning. Why the distorted values?

a high status of academics is arguably quite a good thing from a consequentialist perspective

Alvin Roth is no doubt a bright guy, but the idea that he has done more lasting good for humanity than, say, Sam Walton, is absurd. You’re right that Bill Gates has made a huge impact – but his lasting good was achieved by selling computer software, not through the mostly foolish experimentation done by his foundation. Sure, some academics have done some good (although you wildly overstate it) but you have to consider the opportunity cost. The high status of academics causes us to get more academic research than otherwise, but it also encourages our best and brightest to waste their lives in the study of arcana. Can anyone seriously doubt that, on the margin, we are oversupplied with academics, and undersupplied with entrepreneurs and businessmen generally?

Replies from: JoshuaZ, JoshuaZ, Desrtopa, TorqueDrifter, Peterdjones

↑ comment by JoshuaZ · 2012-11-21T20:42:13.211Z · LW(p) · GW(p)

Isn’t this kind of thing archetypal of knowledge that in no way contributes to human welfare?

Well, no. In modern times number theory has been extremely relevant for cryptography, for example, and pretty much all e-commerce relies on it. But other areas of math have direct, useful applications and have turned out to be quite important. For example, engineering in the late Middle Ages and Renaissance benefited a lot from things like trig and logarithms. Improved math has led to much better understanding of economies and financial systems as well. These are but a few limited examples.

But the modern attention is not on the rape, murder, pillage, etc... it’s on the book-burning

You are missing the point: in this context, having the taboo against book burning is helpful because it is something one can use as a warning sign.

Alvin Roth is no doubt a bright guy, but the idea that he has done more lasting good for humanity than, say, Sam Walton, is absurd.

So I'm curious as to how you are defining "good" in any useful sense that you can reach this conclusion. Moreover, the sort of thing that Roth does is in the process of being more and more useful. His work allowing for organ donations for example not only saves lives now but will go on saving lives at least until we have cheap cloned organs.

You’re right that Bill Gates has made a huge impact – but his lasting good was achieved by selling computer software, not through the mostly foolish experimentation done by his foundation.

This is wrong. His work with malaria saves lives. His work with selling computer software involved making mediocre products and making up for that by massive marketing along with anti-trust abuses. There's an argument to be made that economic productivity can be used as a very rough measure of utility, but that breaks down in a market where advertising, marketing, and network effects of specific product designs matter more than quality of product.

Can anyone seriously doubt that, on the margin, we are oversupplied with academics, and undersupplied with entrepreneurs and businessmen generally?

Yes, to the point where I have to wonder how drastically far off our unstated premises about the world are. If anything, it seems like we have the exact opposite problem. We have a massive oversupply of "quants" and the like who aren't actually producing more utility or even actually working with real market inefficiencies but are instead doing things like moving servers a few feet closer to the exchange so they can shave a fraction of a second off of their transaction times. There may be an "oversupply" of academics compared to the number of paying positions, but that's simply connected to the fact that most research has results that function as externalities (technically public goods), and thus the lack of academic jobs is a market failure.

Replies from: Salemicus

↑ comment by Salemicus · 2012-11-21T22:33:33.996Z · LW(p) · GW(p)

No-one is disputing that mathematics can be useful. The question is, if we had slightly more advanced number theory slightly earlier in time, would that have been particularly useful? Answer - no.

You are missing the point in this context having the taboo against book burning is helpful because it is something one can use as a warning sign.

No, I am not missing the point. I am perfectly willing to concede that a taboo against book-burning might be helpful for that reason. But here we have an example where people were, at the same time as burning books, doing exactly the worse stuff that book burning is allegedly a warning sign of. But no-one complains about the worse stuff, only the book burning. Which makes me disbelieve that people care about the taboo for that reason.

People say that keeping your lawn tidy keeps the area looking well-maintained and so prevents crime. Let's say one guy in the area has a very messy lawn, and also goes around committing burglaries. Now suppose the Neighbourhood Watch shows no interest at all in the burglaries, but is shocked and appalled by the state of his lawn. We would have to conclude that these people don't care about crime, what they care about is lawns, and this story about lawns having an effect on crime is just a story they tell people because they can't justify their weird preference to others on its own terms.

Moreover, the sort of thing that Roth does is in the process of being more and more useful. His work allowing for organ donations for example not only saves lives now but will go on saving lives at least until we have cheap cloned organs.

Or, we could just allow a market for organ donations. Boom, done. Where's my Nobel?

Now, if you specify that we have to find the best fix while ignoring the obvious free-market solutions, I don't deny that Alvin Roth has done good work. And I'm certainly not blaming Roth personally for the fact that academia exists as an adjunct to the state - although academics generally do bear the lion's share of responsibility for that. But I am definitely questioning the value of this enterprise, compared to bringing cheap food, clothes, etc, to hundreds of millions of people like Sam Walton did.

This is wrong. His work with malaria saves lives. His work with selling computer software involved making mediocre products and making up for that by massive marketing along with anti-trust abuses. There's an argument to be made that economic productivity can be used as a very rough measure of utility, but that breaks down in a market where advertising, marketing, and network effects of specific product designs matter more than quality of product.

I don't see why "saves lives" is the metric, but I bet that Microsoft products have been involved in saving far more lives. Moreover, people are willing to pay for Microsoft products, despite your baseless claims of their inferiority. Gates's charities specifically go around doing things that people say they want but don't bother to do with their own money. I don't know much about the malaria program, but I do know the educational stuff has mostly been disastrous, and whole planks have been abandoned.

Yes, to the point where I have to wonder how drastically far off our unstated premises about the world are.

Obviously very far indeed.

Replies from: JoshuaZ, Bugmaster

↑ comment by JoshuaZ · 2012-11-21T23:43:10.745Z · LW(p) · GW(p)

No-one is disputing that mathematics can be useful. The question is, if we had slightly more advanced number theory slightly earlier in time, would that have been particularly useful? Answer - no.

Answer: Yes. Even today, number theory research highly relevant to efficient crypto is ongoing. A few years of difference in when that shows up would have large economic consequences. For example, as we speak, research is ongoing into practical fully homomorphic encryption which, if it is implemented, will allow cloud computing and deep processing of sensitive information, as well as secure storage and retrieval of sensitive information (such as medical records) from clouds. This is but one example.

But no-one complains about the worse stuff, only the book burning. Which makes me disbelieve that people care about the taboo for that reason.

Well, there is always the danger of lost-purpose. But it may help to keep in mind that the book-burnings and genocides in question both occurred a long time ago. It is easier for something to be at the forefront of one's mind when one can see more directly how it would have impacted one personally.

Or, we could just allow a market for organ donations. Boom, done. Where's my Nobel?

So, I'm generally inclined to allow for organ donation markets (although there are I think legitimate concerns about them). But since that's not going to happen any time soon, I fail to see its relevance. A lot of problems in the world need to be solved given the political constraints that exist. Roth's solution works in that context. The fact that a politically untenable better solution exists doesn't make his work less beneficial.

But I am definitely questioning the value of this enterprise, compared to bringing cheap food, clothes, etc, to hundreds of millions of people like Sam Walton did.

So, Desrtopa already gave some reasons to doubt this. But it is also worth noting that Walton died in 1992, before much of Walmart's expansion. Also, there's a decent argument that Walmart's success was due not to superior organization but rather a large first-mover advantage (one of the classic ways markets can fail): Walmart takes advantage of its size in ways that small competitors cannot. This means that smaller chains cannot grow to compete with Walmart in any fashion, so even if a smaller competitor is running something more efficiently, it won't matter much. (Please take care to note that this is not at all the mom-and-pop-store argument which I suspect you and I would both find extremely unconvincing.)

I don't see why "saves lives" is the metric

Ok. Do you prefer Quality-adjusted life years? Bill is doing pretty well by that metric.

but I bet that Microsoft products have been involved in saving far more lives

"Involved with" is an extremely weak standard. The thing is that even if Microsoft had never existed, similar products (such as software or hardware from IBM, Apple, Linux, Tandy) would have been in those positions.

Moreover, people are willing to pay for Microsoft products, despite your baseless claims of their inferiority.

Let's examine why people are willing to do so. It isn't efficiency. For example, by standard benchmarks, Microsoft browsers have been some of the least efficient (although more recent versions of IE have performed very well by some metrics such as memory use). Microsoft has had a massive marketing campaign to make people aware of their brand (classically, marketing in a low information market is a recipe for market failure). And Microsoft has engaged in bundling of essentially unrelated products. Microsoft has also lobbied governments for contracts to the point where many government bids are phrased in ways that make non-Microsoft options essentially impossible. Most importantly, Microsoft gains a network effect: this occurs when the more common a product is, the more valuable it is compared to other similar products. In this context, once a single OS and set of associated products is common, people don't want other products, since they will run into both a learning curve with the "new" product and compatibility issues when trying to get the new product to work with the old.

Gates's charities specifically go around doing things that people say they want but don't bother to do with their own money.

That some people make noise about wanting to help charity but don't doesn't mean the people who actually do it are contributing less utility. Or is there some other point here I'm missing?

I don't know much about the malaria program, but I do know the educational stuff has mostly been disastrous, and whole planks have been abandoned.

Yes, there's no question that the education work by the Gates foundation has been profoundly unsuccessful. But the general consensus concerning malaria is that they've done a lot of good work. This may be something you may want to look into.

Replies from: Salemicus↑ comment by Salemicus · 2012-11-22T00:40:20.404Z · LW(p) · GW(p)

Answer: Yes. Even today, number theory research highly relevant to efficient crypto is ongoing...

Yes, but number theory only gained practical application relatively recently. Your claim was that if people in the 1500s and 1600s had had access to this number theory, we'd all be better off now. It seems you believe that we'd now have more advanced number theory because of this. But my claim is that this stuff was seen as useless back then, so they would have mostly sat on this knowledge, and number theory now would be about where it is.

A lot of problems in the world need to be solved given the political constraints that exist...

But those "political constraints" are not laws of nature, they are descriptions of current power relations which academics have helped bring about. I'm glad that the economics faculty spend much of their time thinking of ways to fix the problems caused by the sociology faculty, but it would save everyone time and money if they all went home.

Ok. Do you prefer Quality-adjusted life years?

No. I'd be more impressed with Gates if he gave people cash to satisfy their own revealed preferences, rather than arbitrary metrics. But that wouldn't look as caring.

"Involved with" is an extremely weak standard. The thing is that even if Microsoft had never existed, similar products (such as software or hardware from IBM, Apple, Linux, Tandy) would have been in those positions.

Yeah, maybe, although presumably it helped on the margin. But if Gates hadn't set up his foundation, the resources involved would have gone to some use. Why are you only looking at one margin, and not the other?

Let's examine why people are willing to do so. It isn't efficiency...

But this notion of "efficiency" that you are using is merely a synonym for what we care about, specifically efficiency to the user. A more convenient UI, for example, is likely orders of magnitude more important than memory usage for efficiency to the user - yet convenience is subjective. Moreover, bundling, marketing, brands and network effects are not examples of market failure. In fact, marketing produces positive externalities. Of course Microsoft has a first-mover advantage over someone trying to make a new OS today, but that's not an "unfair" advantage.

I'll grant you that Microsoft has advantages in government contracting that they wouldn't have in a proper free market, but you should in turn admit that they have also suffered from anti-trust laws.

Gates's charities specifically go around doing things that people say they want but don't bother to do with their own money.

That some people make noise about wanting to help charity but don't doesn't mean the people who actually do it are contributing less utility. Or is there some other point here I'm missing?

I wasn't talking about potential donors, I was talking about recipients. If you talk a lot about how much you love literature, but in fact you spend all your money on beer, then some so-called philanthropist building you a library is just a waste of everyone's time.

Replies from: orthonormal, JoshuaZ, army1987↑ comment by orthonormal · 2012-11-22T06:12:50.596Z · LW(p) · GW(p)

Yes, but number theory only gained practical application relatively recently. Your claim was that if people in the 1500s and 1600s had had access to this number theory, we'd all be better off now.

Advances in Diophantine number theory in the Renaissance led directly to complex numbers and analytic geometry, which led to calculus and all of physics. If the Library at Alexandria had been preserved, the Industrial Revolution could have happened centuries earlier.

Replies from: satt↑ comment by JoshuaZ · 2012-11-22T01:00:22.870Z · LW(p) · GW(p)

Yes, but number theory only gained practical application relatively recently. Your claim was that if people in the 1500s and 1600s had had access to this number theory, we'd all be better off now.

Sorry, poor wording on my part. I mentioned number theory initially as an area because it is the one where we most unambiguously lost Greek knowledge. But it seems pretty clear we lost many other areas also, hence why I mentioned trig, where we know that there were multiple treatises on the geometry of triangles and related things which are no longer extant but are referenced in extant works.

I'm not at all convinced incidentally by the argument that people would have just sat on the number theory. Since the late 1700s, the rate of mathematical progress has been rapid. So while direct focus on the areas relevant to cryptography might not have occurred, closely connected areas (which are relevant to crypto) would certainly be more advanced.

I'm glad that the economics faculty spend much of their time thinking of ways to fix the problems caused by the sociology faculty, but it would save everyone time and money if they all went home.

So what evidence do you have that the economists are fixing the problems created by the sociologists in any meaningful sense?

No. I'd be more impressed with Gates if he gave people cash to satisfy their own revealed preferences, rather than arbitrary metrics. But that wouldn't look as caring.

So that won't work at multiple levels. A major issue when assisting people in the developing world is coordination problems (there are things that will help a lot but if everyone has a little bit of money they don't have an easy way to pool the money together in a useful fashion). Moreover, this assumes a degree of knowledge which people simply don't have. A random African doesn't know necessarily that bednets are an option, or even have any good understanding of where to get them from. And then one has things like vaccine research. You are essentially assuming that market forces will do what is best when one is dealing with people who are lacking in basic education and in the institutions to effectively exercise their will even if they had the education.

Moreover, bundling, marketing, brands and network effects are not examples of market failure.

Huh? All of these can result in total utility going down compared to what might happen if one picked a different market equilibrium. How are these not market failures?

In fact, marketing produces positive externalities.

In limited circumstances, marketing can produce positive utility (people learn about products they didn't have knowledge of, or they get more data to compare products), but I'm curious to hear how marketing is at all likely to produce positive externalities.

I'll grant you that Microsoft has advantages in government contracting that they wouldn't have in a proper free market, but you should in turn admit that they have also suffered from anti-trust laws.

Yes, they have and that's ok. Anti-trust laws help market stability. They prevent the problem we've seen in the banking and auto industries of being too big to fail, and prevent the problem of bundling to force products on new markets (which again I'm quite curious to hear an explanation for how that isn't a market failure).

Replies from: Salemicus↑ comment by Salemicus · 2012-11-22T02:40:47.149Z · LW(p) · GW(p)

So what evidence do you have that the economists are fixing the problems created by the sociologists in any meaningful sense?

I confess I don't understand this question. Could you please clarify?

A major issue when assisting people in the developing world is coordination problems... education... institutions...

But these "institutions" are not laws of nature, they aren't even tangible things - an "institution" is just a description of the way people co-ordinate with each other. So yes, people in developing countries often can't co-ordinate because they have bad institutions, but it would be equally true to say that they can't have good institutions because they don't co-ordinate.

A random African doesn't know necessarily that bednets are an option, or even have any good understanding of where to get them from.

Actually, I think that a "random African" likely knows a lot more about what would improve his standard of living than you or I, and my mind boggles at any other presumption. If he'd rather spend his money on beer than bednets, but you give him a bednet anyway, then I hope it makes you happy, because you're clearly not doing it for him.

I'm curious to hear how marketing is at all likely to produce positive externalities.

Apart from the reasons you already mentioned, marketing creates a brand which reduces information costs. This is of course particularly important in a low information market. Spending money to promote your brand is a pre-commitment to provide satisfactory quality products.

Huh? All of these can result in total utility going down compared to what might happen if one picked a different market equilibrium. How are these not market failures?

Firstly, no-one can "pick" a market equilibrium. Secondly, order is defined in the process of its emergence. Thirdly, proof of a possibility and a demonstration of a real-world effect are not the same thing.

Microsoft have also suffered from anti-trust laws

Yes, they have and that's ok... They prevent the problem we've seen in the banking and auto industries of being too big to fail

So every time a business gains on account of departures from the free market, that's a travesty, but every time it loses, that's the way things are supposed to work. No wonder you think academics are the only ones who do any good. Besides, TBTF isn't an economic problem, this is a political problem. They had too many lobbyists to be allowed to fail, that's all.

I'm quite curious to hear an explanation for how [bundling] isn't a market failure

How is it a market failure? It's possible for bundling to reduce consumer surplus, but that's just a straight transfer.

Replies from: JoshuaZ, Peterdjones, army1987, RomanDavis↑ comment by JoshuaZ · 2012-11-23T17:56:30.476Z · LW(p) · GW(p)

So what evidence do you have that the economists are fixing the problems created by the sociologists in any meaningful sense?

I confess I don't understand this question. Could you please clarify?

You said earlier that:

I'm glad that the economics faculty spend much of their time thinking of ways to fix the problems caused by the sociology faculty, but it would save everyone time and money if they all went home.

My confusion was over this claim in that it seems to assume that a) sociologists are creating societal problems and b) economists are solving those problems.

But these "institutions" are not laws of nature, they aren't even tangible things - an "institution" is just a description of the way people co-ordinate with each other. So yes, people in developing countries often can't co-ordinate because they have bad institutions, but it would be equally true to say that they can't have good institutions because they don't co-ordinate.

Human behavior is not path independent. Institutions help coordination because prior functioning governments and organizations help people to keep coordinating. Values also come into play: countries with functioning governments have citizens with more respect for government, so they are more likely to cooperate with it, and so on.

Apart from the reasons you already mentioned, marketing creates a brand which reduces information costs. This is of course particularly important in a low information market. Spending money to promote your brand is a pre-commitment to provide satisfactory quality products.

This only makes sense in a context where markets are low information, where marketing creates actual information, and where negative behavior by a brand will cause a substantial reduction in sales. In practice, people have strong brand loyalty based on familiarity with logos and the like. So people will keep buying the same brands not because they are the best but because that's what they've always done. Humans are cognitive misers, and a large part of marketing is hijacking that.

Huh? All of these can result in total utility going down compared to what might happen if one picked a different market equilibrium. How are these not market failures?

Firstly, no-one can "pick" a market equilibrium.

You are missing the point. The point is that there are other stable equilibria that are better off for everyone but issues like networking effects and technological lock-in prevent people from moving off the local maximum.

Secondly, order is defined in the process of its emergence. Thirdly, proof of a possibility and a demonstration of a real-world effect are not the same thing.

What do these two sentences mean?

So every time a business gains on account of departures from the free market, that's a travesty, but every time it loses, that's the way things are supposed to work.

I don't know where you saw a statement that implied that, and I'm curious how you got that sort of idea from what I wrote.

Besides, TBTF isn't an economic problem, this is a political problem. They had too many lobbyists to be allowed to fail, that's all.

There's an argument for that in the case of the car industry, but the economic consensus is that the economy as a whole would have gotten much worse if the banks hadn't been bailed out.

How is it a market failure? It's possible for bundling to reduce consumer surplus, but that's just a straight transfer.

Technological lock-in and network effects again. For example, in the case of Internet Explorer, having it bundled with Windows meant that many people ended up using IE by default, got very used to it, and then it had an advantage compared to other browsers which stayed around (because people then wrote software that needed IE and webpages emphasized looking good in IE). In this context, if the consumers had been given a choice of browsers, it is likely that other browsers, especially Netscape (and later Firefox) would have done much better, and by most benchmarks Netscape was a better browser.

↑ comment by Peterdjones · 2012-11-23T19:54:16.994Z · LW(p) · GW(p)

Actually, I think that a "random African" likely knows a lot more about what would improve his standard of living than you or I, and my mind boggles at any other presumption.

So why haven't they all done so? An RA may well have more on-the-ground knowledge, but a do-gooder may well have more of the kind of knowledge that you learn in school. Since the D-G is not seeking to take over the RA's entire life, it's a case of two heads are better than one.

If he'd rather spend his money on beer than bednets, but you give him a bednet anyway, then I hope it makes you happy, because you're clearly not doing it for him.

You could do with distinguishing short term gains from long term interests. People don't pop out of the womb knowing how to maximise the latter. It takes education. And institutions: why save when the banks are crooked?

But these "institutions" are not laws of nature, they aren't even tangible things - an "institution" is just a description of the way people co-ordinate with each other. So yes, people in developing countries often can't co-ordinate because they have bad institutions, but it would be equally true to say that they can't have good institutions because they don't co-ordinate.

It would be truer still to say that good institutions take a long and fragile history to evolve. They didn't evolve everywhere and they arrived late where they did. Go back about 500 years and no one had good (democratic, accountable, fair, honest) institutions.

Apart from the reasons you already mentioned, marketing creates a brand which reduces information costs. This is of course particularly important in a low information market. Spending money to promote your brand is a pre-commitment to provide satisfactory quality products.

It's hard to know where to start with that lot. Brands aren't information like libraries are information, they are an attempt to get people to buy things by whatever means is permitted. They can be worse than no information at all, since African mothers would have presumably continued to breastfeed without Nestlé's intervention:

"The Nestlé boycott is a boycott launched on July 7, 1977, in the United States against the Swiss-based Nestlé corporation. It spread in the United States, and expanded into Europe in the early 1980s. It was prompted by concern about Nestle's "aggressive marketing" of breast milk substitutes (infant formula), particularly in less economically developed countries (LEDCs), which campaigners claim contributes to the unnecessary suffering and deaths of babies, largely among the poor.[1] Among the campaigners, Professor Derek Jelliffe and his wife Patrice, who contributed to establish the World Alliance for Breastfeeding Action (WABA), were particularly instrumental in helping to coordinate the boycott and giving it ample visibility worldwide."

↑ comment by A1987dM (army1987) · 2012-11-23T09:57:50.153Z · LW(p) · GW(p)

Actually, I think that a "random African" likely knows a lot more about what would improve his standard of living than you or I, and my mind boggles at any other presumption. If he'd rather spend his money on beer than bednets, but you give him a bednet anyway, then I hope it makes you happy, because you're clearly not doing it for him.

Yes, because all humans are perfectly rational and have unlimited willpower. But then again, why doesn't he sell the bednet I gave him and buy beer with it?

Replies from: Salemicus↑ comment by Salemicus · 2012-11-23T10:31:11.814Z · LW(p) · GW(p)

Yes, because all humans are perfectly rational and have unlimited willpower.

And I claim that where? Are you claiming that the donors are perfectly rational and have unlimited willpower - and are also perfectly altruistic?

My claim is the modest and surely uncontroversial one that JoshuaZ's "random African" is a better guardian of his own welfare than you, or Bill Gates, or a random do-gooder.

But then again, why doesn't he sell the bednet I gave him and buy beer with it?

Because you gave everyone else in the area a bednet, so now there's a local glut. But yes, I'm sure some people do sell their bednets.

Replies from: CCC, army1987, ArisKatsaris↑ comment by CCC · 2012-11-23T13:28:27.768Z · LW(p) · GW(p)

My claim is the modest and surely uncontroversial one that JoshuaZ's "random African" is a better guardian of his own welfare than you, or Bill Gates, or a random do-gooder.

It is not (language warning) entirely uncontroversial. Whether through poor education or through giving disproportionate value to present circumstances (and none or too little to future circumstances), people can and often do do things that are, in the long run, self-defeating. (And note that this 'long run' can be measured in weeks or months, even hours in particularly extreme cases).

At least one study suggests that the ability to reject a present reward in favour of a greater future reward is detectable at a young age and is correlated with success in life.

Replies from: Salemicus↑ comment by Salemicus · 2012-11-23T14:49:44.036Z · LW(p) · GW(p)

Of course people can do things that are self-defeating - did I ever suggest otherwise? I never said people are perfect guardians of their own self-interest, I said, and I repeat, that a random person is a better guardian of his own self-interest than a random do-gooder.

I am getting a little frustrated with people arguing against strawmen of my positions, which has now happened several times on this thread. Am I being unclear?

None of those links suggest that people are worse guardians of their own self-interest than the outsider. In fact, quite the reverse. Take the fertilizer study. The reason that the farmers weren't following the advice of the Kenyan Ministry of Agriculture was that it was bad advice. To quote:

[T]he full package recommended by the Ministry of Agriculture is highly unprofitable on average for the farmers in our sample... the official recommendations are not adapted to many farmers in the region.

So the study demonstrates that the farmers were better guardians of their own self-interest than some bureaucrat in Nairobi (no doubt advised by a western NGO). If they had been forced to follow the (no doubt well-meaning) advice, they would have been much worse off. Maybe some would have died. Now, at the same time, they don't know every possible combination, and it turns out that if they changed their farming methodology, they could become more productive. Great! That's how society advances - by persuading people as to what is in their self-interest, not by making someone else their guardian.

Replies from: CCC, Desrtopa, Peterdjones↑ comment by CCC · 2012-11-23T20:16:49.425Z · LW(p) · GW(p)

a random person is a better guardian of his own self-interest than a random do-gooder.

Phrased in this way, I think that I agree with you, on average.

In the original context of your statement, however, I had thought that you meant "a random charity recipient" instead of "a random person".

Now, charity recipients are still people, of course. However, charity recipients are usually people chosen on the basis of poverty; thus, the group of people who are charity recipients tend to be poor.

Now, some people are good guardians of their own long-term self-interest, and some are not. This is correlated with wealth in an unsurprising way (as demonstrated in the marshmallow experiment linked to above); those people who are better guardians of their own long-term self-interest are, on average, more likely to be above a certain minimum level of wealth than those who are not. They are, therefore, less likely to be charity recipients. Therefore, I conclude that people who are in a position to receive benefits from a charity are, on average, worse guardians of their own long-term self-interest than people who are in a position to contribute to a charity.

So. I therefore conclude that a person, on average, will be a better guardian of his own self-interest than a random person of the category (charity recipient); since the selection of people who fall into that category biases the category to those who are poor guardians of their own self-interest. It's not the only factor selected for in that category, but it's significant enough to have a noticeable effect.

↑ comment by Desrtopa · 2012-11-23T15:09:38.124Z · LW(p) · GW(p)

I said, and I repeat, that a random person is a better guardian of his own self-interest than a random do-gooder.

But likely not a better guardian of his or her own self interest than a nonrandom do-gooder who's researched the specific problems the person is dealing with and developed expertise in solving them with resources that the person has had no opportunity to learn to make use of.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2012-11-23T16:47:45.605Z · LW(p) · GW(p)

Certainly -- tautologically -- he's not a better guardian of his or her own self interest than someone who is in fact a better one. But inventing a fictional character who is in fact a better one does not advance any argument.

One might as well invent a nonrandom do-gooder who thinks they have properly researched what they think are the specific problems the person is dealing with and thinks they have developed expertise in solving them with resources that they think the person has had no opportunity to learn to make use of, but who is nonetheless wrong. As with, apparently, and non-fictionally, the Kenyan Ministry of Agriculture.

Replies from: Desrtopa↑ comment by Desrtopa · 2012-11-23T17:50:55.424Z · LW(p) · GW(p)

Of course, less qualified guardians of an individual's self interest who believe themselves to be more qualified are a legitimate risk, but that doesn't mean that the optimal solution is to have individuals act exclusively as guardians of their own self interest.

If the Kenyan Ministry of agriculture follows the prescriptions of the researchers in the article cited above, they will thereby become better guardians of those farmers' interests than the farmers have thus far been, within that domain.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2012-11-23T18:35:39.070Z · LW(p) · GW(p)

Of course, less qualified guardians of an individual's self interest who believe themselves to be more qualified are a legitimate risk, but that doesn't mean that the optimal solution is to have individuals act exclusively as guardians of their own self interest.

The optimal solution isn't necessarily to have someone else act as the "guardian" of their self-interest, however well informed. BTW, I don't know if this is true of American English, but in British English, one meaning of "guardian" is what an orphan has in place of parents. Doing things for adults without consulting them is usually a bad idea.

If the Kenyan Ministry of agriculture follows the prescriptions of the researchers in the article cited above, they will thereby become better guardians of those farmers' interests than the farmers have thus far been, within that domain.

Not if they try to do so by simply coming in and telling the farmers what to do, nor by deciding the farmers' economic calculations are wrong and manipulating subsidies to get them to do differently. (I've only glanced at the article; I don't know if this is what they did.) Even when they're right about what the farmers should be doing, they will go wrong if they use the wrong means to get that to happen. Providing information might be a better way to go. The presumption that if only you know enough, you can direct other people's lives for them is pretty much always wrong.

Replies from: Peterdjones, DSimon↑ comment by Peterdjones · 2012-11-23T20:00:37.466Z · LW(p) · GW(p)

Doing things for adults without consulting them is usually a bad idea.

But none of the examples given are of one person taking over another's life. Most of this debate revolves around the fallacy that someone either runs their own life, or has it run for them. In fact, there are many degrees of advice/help/co-operation.

↑ comment by DSimon · 2012-11-23T19:13:10.333Z · LW(p) · GW(p)

I don't know if this is true of American English, but in British English, one meaning of "guardian" is what an orphan has in place of parents.

Yes, this meaning is in American English as well. A typical parental permission form for a child to go on a field trip or what-have-you will ask for the signature of "a parent or legal guardian".

↑ comment by Peterdjones · 2012-11-23T19:58:16.959Z · LW(p) · GW(p)

a random person is a better guardian of his own self-interest than a random do-gooder.

That isn't obvious. D-Gs are likely to be qualified to help people; the people they are helping are likely to have a history of not helping themselves successfully.

So the study demonstrates that the farmers were better guardians of their own self-interest

Would they have had access to fertilizer at all w/out the govt? Two heads are better than one, again.

↑ comment by A1987dM (army1987) · 2012-11-23T14:15:57.219Z · LW(p) · GW(p)

Are you claiming that the donors are perfectly rational and have unlimited willpower - and are also perfectly altruistic?

I'm not.

My claim is the modest and surely uncontroversial one that JoshuaZ's "random African" is a better guardian of his own welfare than you, or Bill Gates, or a random do-gooder.

I might agree about myself or “a random do-gooder” (dunno about Gates), but these people do look like they've done their homework.

↑ comment by ArisKatsaris · 2012-11-23T11:34:22.589Z · LW(p) · GW(p)

My claim is the modest and surely uncontroversial one that JoshuaZ's "random African" is a better guardian of his own welfare than you, or Bill Gates, or a random do-gooder.

People like Bill Gates aren't "random do-gooders". I'd not find it strange that in the counterfactual that Bill Gates had the time to guard my own welfare (let alone some random African's), he might do a better job at it than I would myself. Certainly he might provide useful tips about how to invest my money, for example, and might know ways that I've not even heard of.

Replies from: Salemicus↑ comment by Salemicus · 2012-11-23T14:32:27.718Z · LW(p) · GW(p)

People like Bill Gates aren't "random do-gooders".

Bill Gates was a software executive who got into malaria prevention and education reform and poverty reduction and God knows what else, not because of his deep knowledge and expertise in those subjects, but a generalised wish to become a philanthropist. He's the very archetype of a random do-gooder.

I'd not find it strange that in the counterfactual that Bill Gates had the time to guard my own welfare (let alone some random African's), he might do a better job at it than I would myself.

But the whole point is that he doesn't have the time (or knowledge, or inclination, or incentives, or ...) to guard your welfare - or that of a "random African". Sure, if Bill Gates was my father he might be a better guardian of my welfare than I am. But he ain't.

Replies from: ArisKatsaris↑ comment by ArisKatsaris · 2012-11-23T14:54:33.818Z · LW(p) · GW(p)

He's the very archetype of a random do-gooder.

My point is that the qualification "random" is rather silly when applied to one of the most wealthy people in the world. That he achieved that wealth (most of which he did not inherit) implies some skills and intelligence most likely beyond that of a randomly selected do-gooder.

An actually randomly-selected do-gooder would probably be more like a middle-class individual who walks to a soup-kitchen and offers to volunteer, or who donates money to UNICEF.

↑ comment by RomanDavis · 2012-11-22T16:45:34.426Z · LW(p) · GW(p)

So every time a business gains on account of departures from the free market, that's a travesty, but every time it loses, that's the way things are supposed to work. No wonder you think academics are the only ones who do any good. Besides, TBTF isn't an economic problem, this is a political problem. They had too many lobbyists to be allowed to fail, that's all.

He didn't say that. You're being a troll.

↑ comment by A1987dM (army1987) · 2012-11-23T14:21:48.144Z · LW(p) · GW(p)

If you talk a lot about how much you love literature, but in fact you spend all your money on beer, then some so-called philanthropist building you a library is just a waste of everyone's time.

I disagree. See this post by Yvain.

↑ comment by Bugmaster · 2012-11-22T01:07:06.726Z · LW(p) · GW(p)

No-one is disputing that mathematics can be useful. The question is, if we had slightly more advanced number theory slightly earlier in time, would that have been particularly useful? Answer - no.

My answer is "probably yes". Mathematics directly enables entire areas of science and engineering. Cathedrals and bridges are much easier to build if you know trigonometry. Electricity is a lot easier to harness if you know trigonometry and calculus, and easier still if you are aware of complex numbers. Optics -- and therefore cameras and telescopes, among many other things -- is a lot easier with linear algebra, and so are many other engineering applications. And, of course, modern electronics are practically impossible without some pretty advanced math and science, which in turn requires all these other things.

If we assume that technology is generally beneficial, then it's best to develop the disciplines which enable it -- i.e., science and mathematics -- as early as possible.

Replies from: army1987↑ comment by A1987dM (army1987) · 2012-11-22T19:47:12.039Z · LW(p) · GW(p)

He was talking about number theory specifically, not mathematics in general -- in the first sentence you quoted he admitted it can be useful. (I doubt advanced number theory would have been that practically useful before the mid-20th century.)

↑ comment by JoshuaZ · 2012-11-21T21:14:54.963Z · LW(p) · GW(p)

Follow up reply in a separate comment since I didn't notice this part of the remark the first time through (and it is substantial enough that it should probably not just be included as an edit):

... we might better understand the “Great Filter”

Isn’t this kind of thing archetypal of knowledge that in no way contributes to human welfare?

If this falls into that category, then the archetype of knowledge that doesn't contribute to human welfare is massively out of whack. Figuring out how much of the Great Filter is in front of us or behind us is extremely important. If most of it is behind us, we have a lot less to worry about. If most of the Great Filter is in front of us, then existential risk is a severe danger to humanity as a whole. Moreover, if it is in front of us, then it is most likely some form of technology, or caused by some sort of technological change (since natural disasters aren't common enough to wipe out every civilization that gets off the ground). Since we're just beginning to travel into space, it is likely that if there is heavy Filtration in front of us, it isn't very far ahead but is in the next few centuries.

If there is heavy Filtration in front of us, then it is vitally important that we figure out what that Filter is and what we can do to avert it, if anything. This could be the difference between the destruction of humanity and humanity spreading to the stars. If there are any contributions that contribute to the welfare of humanity, those which involve our existence as a whole should be high up on the list.

↑ comment by Desrtopa · 2012-11-21T22:54:28.873Z · LW(p) · GW(p)

Alvin Roth is no doubt a bright guy, but the idea that he has done more lasting good for humanity than, say, Sam Walton, is absurd.

I wouldn't be so sure about that. I'm not about to investigate the economics of their entire supply chain (I already don't shop at Walmart simply due to location, so it doesn't even stand to influence my buying decisions), but I wouldn't be surprised if Walmart is actually wealth-negative in the grand scheme. They produce very large profits, but particularly considering that their margins are so small and their model depends on dealing in such large bulk, I think there's a fair likelihood that the negative externalities of their business are in excess of their profit margin.

It's impossible for a business to be GDP negative, but very possible for one to be negative in terms of real overall wealth produced when all externalities are accounted for, which I suspect leads some to greatly overestimate the positive impact of business.

Replies from: Salemicus↑ comment by Salemicus · 2012-11-22T00:51:42.328Z · LW(p) · GW(p)

Why focus on the negative externalities rather than the positive? And why neglect all the partner surpluses - consumer surplus, worker surplus, etc? I'd guess that Walmart produces wealth at least an order of magnitude greater than its profits.

Replies from: Desrtopa↑ comment by Desrtopa · 2012-11-22T02:26:45.162Z · LW(p) · GW(p)

Why focus on the negative externalities rather than the positive?

Because corporations make a deliberate effort to privatize gains while socializing expenses.

GDP is a pretty worthless indicator of wealth production, let alone utility production; the economists who developed the measure in the first place protested that it should by no means be taken as a measure of wealth production. There are other measures of economic growth which paint a less optimistic picture of the last few decades in industrialized nations, although they have problems of their own with making value judgments about what to measure against industrial activity, but the idea that every economic transaction must represent an increase in well-being is trivially false both in principle and practice.

Replies from: Salemicus↑ comment by Salemicus · 2012-11-23T09:57:28.358Z · LW(p) · GW(p)

Because corporations make a deliberate effort to privatize gains while socializing expenses.

This is true of everyone, not just corporations. I'm very suspicious that you take this scepticism only against corporations, but not academics.

Replies from: JoshuaZ, zslastman, Peterdjones, Desrtopa↑ comment by JoshuaZ · 2012-11-23T20:17:36.374Z · LW(p) · GW(p)

Someone who is doing research that is published and doesn't lead to direct patents is socializing gains whether or not they want to.

Replies from: Salemicus, FluffyC↑ comment by Salemicus · 2012-11-24T00:56:17.420Z · LW(p) · GW(p)

Only if there are any gains to socialize. Consider honestly the societal gain from the marginal published paper, particularly given that it gets 0 cites from other papers not by the same author.

Replies from: JoshuaZ↑ comment by JoshuaZ · 2012-11-24T00:59:18.880Z · LW(p) · GW(p)

Consider honestly the societal gain from the marginal published paper, particularly given that it gets 0 cites from other papers not by the same author.

So, I'd be curious what evidence you have that the average paper gets 0 citations from papers not by the same author across a wide variety of fields. But, in any event, the marginal return per paper isn't nearly as important as the marginal return per paper divided by the cost of a paper. For many fields (like math), the average cost per paper is tiny.

Replies from: Salemicus↑ comment by Salemicus · 2012-11-24T01:46:23.991Z · LW(p) · GW(p)

Consider honestly the societal gain from the marginal published paper, particularly given that it gets 0 cites from other papers not by the same author.

So, I'd be curious what evidence you have that the average paper gets 0 citations from papers not by the same author across a wide variety of fields.

Either I cannot write clearly or others cannot read clearly, because again and again in this thread people are responding to statements that are not what I wrote. The common factor is me, which makes me think it is my failure to write clearly, but then I look at the above. I referred to "the marginal published paper", and even italicised the word marginal. JoshuaZ replies by asking whether I have evidence for my statement about "the average paper." I don't know what else to say at this point.

However, yes, I have plenty of evidence that the marginal paper across a wide variety of fields gets 0 citations, see e.g. Albarran et al. Note incidentally that there are some fields where the average paper gets no citations!

Replies from: nshepperd, JoshuaZ↑ comment by nshepperd · 2012-11-24T02:52:39.416Z · LW(p) · GW(p)

the marginal paper across a wide variety of fields gets 0 citations

I don't think a marginal paper is a thing (marginal cost isn't a type of cost, but represents a derivative of total cost). Do you mean that d(total citations)/d(number of papers) = 0?

Note incidentally that there are some fields where the average paper gets no citations!

This seems impossible, unless all papers get no citations, i.e. no-one cites anyone but themselves. Does that actually happen?

Replies from: Salemicus↑ comment by Salemicus · 2012-11-24T11:40:13.399Z · LW(p) · GW(p)

Of course the marginal paper is a thing. If there were marginally less research funding, the research program cancelled wouldn't be chosen at random, it would be the least promising one. We can't be sure ex ante that that would be the least successful paper, but given that most fields have 20% or more of papers getting no citations at all, and other studies show that papers with very low citation counts are usually being cited by the same author, that's good evidence.

Note that I did not say that papers, on average, get no citations. I said that the average (i.e. median) paper gets no citations.

Replies from: JoshuaZ, army1987, nshepperd↑ comment by JoshuaZ · 2012-11-24T14:35:32.201Z · LW(p) · GW(p)

If there were marginally less research funding, the research program cancelled wouldn't be chosen at random, it would be the least promising one.

Weren't you, just a few posts ago, talking about the problems of politics getting involved in funding decisions? But now you are convinced that, in the event of a budget cut, it will be the least promising research that gets cut? This seems slightly contradictory.

↑ comment by A1987dM (army1987) · 2012-11-24T15:20:40.269Z · LW(p) · GW(p)

If there were marginally less research funding, the research program cancelled wouldn't be chosen at random, it would be the least promising one.

Would it? I fear it would be the one whose participants are worst at ‘politics’.

↑ comment by nshepperd · 2012-11-24T14:04:26.459Z · LW(p) · GW(p)

If there were marginally less research funding, the research program cancelled wouldn't be chosen at random, it would be the least promising one.

Ah, right, we're on the same page now. Your argument, however, assumes that a) fruitfulness of research is quite highly predictable in advance, and b) available funds are rationally allocated based on these predictions so as to maximise fruitful research (or the proxy, citations). Insofar as the reality diverges from these assumptions, the expected number of citations of the "marginal" paper is going to approach the average number.

Note that I did not say that papers, on average, get no citations. I said that the average (i.e. median) paper gets no citations.

Oh, by "average" I assumed you meant the arithmetic mean, since that is the usual statistic.
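The gap between the two readings of "average" can be made concrete with a small sketch. The citation counts below are invented purely for illustration (they are not taken from Albarran et al. or any other study), and `summarize` is a hypothetical helper name. The sketch only shows that a skewed distribution can have a median of zero while its arithmetic mean is well above zero.

```python
from statistics import mean, median

# Hypothetical citation counts for ten papers in one field.
# These numbers are made up for illustration, not real data.
citations = [0, 0, 0, 0, 0, 0, 1, 3, 12, 84]


def summarize(counts):
    """Return the mean, the median, and the share of papers with zero citations."""
    uncited = sum(1 for c in counts if c == 0)
    return {
        "mean": mean(counts),
        "median": median(counts),
        "share_uncited": uncited / len(counts),
    }


summary = summarize(citations)
print(summary)
```

With these made-up counts, most papers are uncited and the median is zero, yet a couple of heavily cited papers pull the mean far above it. So "the median paper gets no citations" and "papers, on average, get citations" can both hold for the same field.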

↑ comment by JoshuaZ · 2012-11-24T01:50:44.833Z · LW(p) · GW(p)

Sorry, in this context, I switched from talking about the marginal to talking about the average. You shouldn't take my own poor thinking as a sign of anything, although in this context, it is possible that I was, without thinking, trying to steel man your argument, since when one is talking about completely eliminating academic funding, the average rate matters much more than the marginal rate. But the citation you've given is convincing that the marginal rate is generally quite low across a variety of fields.

Replies from: Salemicus↑ comment by Salemicus · 2012-11-24T11:47:52.794Z · LW(p) · GW(p)

[I]t is possible that I was without thinking trying to steel man your argument, since when one is talking about completely eliminating academic funding...

Who exactly is arguing for completely eliminating academic funding? If you mean me, I hope you can provide a supporting quote.

Replies from: JoshuaZ↑ comment by JoshuaZ · 2012-11-24T14:33:08.247Z · LW(p) · GW(p)

Who exactly is arguing for completely eliminating academic funding?

Well, various statements you've made seemed to imply that, such as your claim that burning down the Library of Alexandria had the advantage that:

Academics now forced to get useful job and contribute to society

and you then stated

The point is that some academics are useful and some are not; there is no market process that forces them to be so. It may be that some of the academics are able to continue doing exactly what they were doing, just for a private employer.

If you prefer, to be explicit, you seem to be arguing that all government funding of academics should be cut. Is that an accurate assessment? In that context, given that government funding supports the vast majority of academic research, the relevant quantity is still the average, not the marginal, rate of return.

↑ comment by zslastman · 2012-11-23T10:25:29.896Z · LW(p) · GW(p)

The majority of people, other than psychopaths, are not as ruthless in the quest to externalize their costs. A substantial portion of academics sacrifice renown and glory to do research they believe has intrinsic value. This is in large part the reason they can be paid so much less than people of equivalent ability in the private sector.

I agree with your general point about businessmen and entrepreneurship being undervalued, however.

↑ comment by Peterdjones · 2012-11-23T20:04:16.219Z · LW(p) · GW(p)

This is true of everyone, not just corporations.

Uh huh. Is it true of charities?

↑ comment by Desrtopa · 2012-11-23T14:53:30.422Z · LW(p) · GW(p)