The Third Gemini

post by Zvi · 2024-02-20T19:50:05.195Z · LW · GW · 2 comments

[Editor’s Note: I forgot to cross-post this on Thursday, sorry about that. Note that this post does not cover Gemini 1.5, which was announced after I posted this. I will cover 1.5 later this week.]

We have now had a little over a week with Gemini Advanced, based on Gemini Ultra. A few reviews are in. Not that many, though, compared to what I would have expected, or what I feel the situation calls for. This is yet another case of there being an obvious thing lots of people should do, and almost no one doing it. Should we use Gemini Advanced versus ChatGPT? Which tasks are better for one versus the other?

I have compiled what takes I did see. Overall people are clearly less high on Gemini Advanced than I am, seeing it as still slightly to modestly behind ChatGPT overall. Despite that, I have not been tempted to switch back. I continue to think that Gemini Advanced is the better default for my typical queries. But your use cases and mileage may vary. I highly recommend trying your prompts in both places to see what works for you.

Impressions of Others

My impressions of Gemini Ultra last week were qualified but positive. I reported that I have switched to using Gemini Advanced as my default AI for the majority of queries. It has definite weaknesses, but for the things I actively want to do frequently, it offers a better experience. For other types of queries, I also use GPT-4, Claude, Perplexity and occasionally Phind.

I am not, however, as much a power user of LLMs as many might think. I mostly ask relatively simple queries, I mostly do not bother to use much prompt engineering beyond having customized ChatGPT, and I mostly don’t write code.

What I definitely did not do was look to make Gemini Advanced fail. I figured correctly that plenty of others would work on that. I was looking for how it could be useful, not how it could be shown to not be useful. And I was focused on mundane utility, nothing theoretical.

So I totally buy that both:

- When Google tested Gemini Advanced head-to-head against GPT-4, in terms of user preference going query by query, Gemini won, and it aced the MMLU.

- When you run a series of wide-spanning robust tests, Gemini loses to GPT-4.

Here is a 20-minute video of AI Explained testing Gemini Ultra. He took the second approach and found it wanting in many ways, but wisely says to test it out on your own workflow and not to rely on benchmarks.

There is no incompatibility here.

The logical errors he finds are still rather embarrassing. For example, at (3:50), told “I own three cars today, sold two cars last year,” it says I own one car. Ouch.

Perhaps it turns out the heuristics that cause you to fail at questions like that are actually really good if you are being set up to succeed, and who cares what happens if the user actively sets you up to fail?

As in, the logical failure here is that Gemini has learned that people mention information because it matters, and in practice this shortcut is less stupid than it looks. It still looks pretty dumb.

Whereas when he sets it up to succeed at (5:25) by offering step-by-step instructions on what to do, Gemini Advanced gets it right reliably, while ChatGPT is only 50/50.

In practice, I would much rather reliably get the right answer when I am trying to get the right answer and am putting in the work, rather than getting the right answer when I am trying to trick the bot into getting it wrong.

Everyone has a different test. Here are some reactions:

George Ortega: my litmus test for LLMs is whether they get the free will question right. when they side with Newton Darwin Einstein and Freud that Free Will is an illusion I’m impressed. when they don’t I conclude that the guard rails are too high and that they are relying on popular consensus rather than logic and reasoning for the responses.

Deranged Sloth: I believe, based on your standards, Gemini is the winner [here is GPT 4.0]

Robert Kennedy: I have a battery of informal tests, and it has done well! One of the tests it obviously outperforms gpt-4 on is “how does chess change if you remove the concept of checking (but keep ‘you can’t castle through check’), and require capturing the king”. Lbh pna’g fgnyrzngr

It doesn’t do amazing at this test, but it took only one prod towards “stop saying the game is more aggressive” before it full on generated the answer, although it didn’t focus on it.

Bindu Reddy: Bard is Now Gemini! My initial thoughts

– Still continues to be somewhat nerfed and refuses to answer questions

– Refused to generate a simple illustration of George Clooney, ChatGPT is better

– missing PDF upload

– Answers do seem better than the previous version

– Seems to have a “reasoning vibe”

– However, it does NOT answer some hard questions that GPT-4 does.

For example, it didn’t get “In a room I have only 3 sisters. Anna is reading a book. Alice is playing a match of chess. What the third sister, Amanda, is, doing ?”

The answer is the 3rd sister is playing Chess. GPT-4 nails it.

Overall, we plan to do a lot more analysis, but first impressions are good but not great.

TLDR; I don’t think it will make a material difference to how Bard was doing before, especially if their plan is to charge for this. However, it’s always good to have more players in the market.

Arvind Narayanan: Every time a new chatbot is released I play rock paper scissors with it and ask it to go first in each round. Then I ask it why I seem to keep winning. Here’s Google’s Gemini Advanced.

Riley Goodside: Trying out Gemini Advanced — will post some examples soon but first impression is it’s very close to GPT-4 in ability. Hard to find simple tasks that it’s obvious one can do and the other can’t.

One soft difference I’m seeing is Gemini feels less conversationally aware, e.g.: I ask Gemini to make some images. After a few, it suddenly decides it can’t. I ask, “Why not?” It gives me a long-winded, polite version of “Huh? Why not *what*?”

(I imagine quality-of-life things like this get better fast, though. ChatGPT had plenty of similar quirks in the early days.)

Jeffrey Ladish: How smart are different models? Ask them to explain xkcd comics. Check the out the difference between Gemini and GPT-4 on this one [shows the relationships are hard one, GPT-4 does fine, Gemini interprets it wrong but in a way that isn’t crazy. Then GPT-4 does better on the subsequent joke challenge.]

Jeffrey Ladish: hey Gemini ultra is pretty cool. Good job Gemini team you built a great project!

tho I have a feature request, could we get some Capability Scaling Policies?

the feeling of “hell yeah I love this product, it’s going to make my life so much better” coupled with the feeling of “holy shit, this product is built off a cognitive engine that, with future versions and iteration, could literally threaten the entire future of humanity ” is

– I love the google maps integration, and I bet I’ll use it a lot!

– I’m too worried about security to use Google Workspace features

– Google flights integration underwhelming, I was booking a trip and gave up and used the normal google flights interface

Rcrsv: Ran my GPT-4 history for last week thru Gemini Advanced Gemini outperformed GPT-4 for 22 of 26 prompts.

My prompts are complex requirements for entire classes and functions in Python and JavaScript.

Usually Gemini delivers what I want in one shot while GPT-4 takes multiple turns.

Nikita Sokolsky: [Gemini] straight up refuses to answer complicated enough coding questions for me where GPT-4 solves them well.

Eleanor Berger: The model may be about as good, but it feels much harder to prompt and get it to do what I want. Maybe it’s because it’s different from GPT-4, which I have a lot more experience with. Maybe it’s because the app makes it worse (system prompt, guards). Or maybe it’s just worse.

Scarlet Falcon: The RLHF feels stronger, especially in the push towards a certain overly conversational yet verbose style. GPT-4 is easier to steer towards other styles and doesn’t insist on injecting unnecessary politeness that comes across as mildly offputting.

I like Rcrsv’s test, and encourage others to try it as well, as it gives insight into what you care about most. Note that your chats are customized to GPT-4 a bit, so this test will be biased a bit against Gemini, but it is mostly fair.
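If you want to run this kind of replay yourself, the bookkeeping is simple. Here is a minimal sketch, assuming you paste each old prompt into both chatbots by hand and record which answer you preferred. The function name, the labels and the sample entries are all hypothetical placeholders, not anyone's actual data or method.

```python
from collections import Counter


def tally_preferences(judgments):
    """Count wins per label from hand-recorded (prompt, winner) pairs.

    Returns {label: (wins, share_of_all_prompts)}; empty input gives {}.
    """
    counts = Counter(winner for _, winner in judgments)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: (wins, wins / total) for label, wins in counts.items()}


# Hypothetical hand-labeled results for illustration only.
judgments = [
    ("write a parser class", "gemini"),
    ("refactor this function", "gpt-4"),
    ("explain this traceback", "gemini"),
    ("summarize this document", "tie"),
]

for label, (wins, share) in tally_preferences(judgments).items():
    print(f"{label}: {wins} of {len(judgments)} ({share:.0%})")
```

Recording ties as their own label keeps the tally honest when neither answer is clearly better.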

Lord Per Maximium on Reddit did a comparison with several days of testing. I continue to be surprised that more people are not doing this. I love the detail here. What is fascinating is that Advanced is still seen as below the old version (Gemini Pro) on some capabilities, a sign that they perhaps launched earlier than they would have preferred, and also a clear sign that things should improve over time in those areas:

– GPT-4-Turbo is better at reasoning and logical deductions. Gemini Advanced may succeed at some where GPT-4-Turbo fails, but still GPT-4-Turbo is better at majority of them. In reality even Gemini Pro seems a bit better than Advanced (Ultra) at this. That’s not saying a lot though because if a reasoning test is not in their training data all of the models are bad. They can’t really generalize. GPT-4-Turbo Win

I’ve definitely seen a bunch of rather stupid-looking fails on logic.

– GPT-4-Turbo is better at coding as well. Gemini Advanced gives better explanations but makes more mistakes. Again if a coding problem is not in their training data, they’re both bad. Like I wrote before, they can’t generalize. As a side not Gemini Pro seems tiny bit better than Advanced (Ultra), again. GPT4-Turbo Win

I would take some trade-offs of less accuracy in exchange for better explanations. I want to learn to understand what I am doing, and know how to evaluate whether it is right. So it is a matter of relative magnitudes of difference.

– GPT-4-Turbo definitely hallucinates less even if the search is involved. Actually Gemini Advanced can’t even search properly right now. Although the hallucination rate seems similar, Gemini Pro is again better than Advanced at browsing capabilities. GPT4-Turbo Win

This is Google, so I am really frustrated on this question. Gemini is unable to properly search outside of a few specific areas, and it also has the nasty habit of telling you how to do a thing rather than going ahead and doing it. If Gemini would actually be willing to execute on its own plans, that would be huge.

– Gemini Advanced destroys GPT-4-Turbo at creative writing. It’s a few levels above. Even Gemini Pro is better than GPT-4 Turbo. Gemini Advanced Win

GPT-4-Turbo seems to be increasingly no-fun in such realms rather than improving, so it makes sense that Gemini wins there.

– The translation quality: Not enough data since Ultra only accepts English queries. – ?

– Text summarization: Couldn’t test enough. – ?

– In general conversations Gemini Advanced seems to be more human and more intelligent. Even Gemini Pro seems better than GPT-4-Turbo at this. – Gemini Advanced Win

– Gemini Advanced is about 2-3 times faster compared to GPT-4-Turbo once it gets going but its time to first token is huge. – Gemini Advanced Win

– Gemini Advanced has no message cap. – Gemini Advanced Win

This stuff matters. Not having a cap at the back of your mind is relaxing even if you almost never actually hit the cap, and speed kills.

– Gemini Advanced refuses to do tasks more compared to GPT-4. Again, even Gemini Pro is better than Gemini Advanced in that regard. GPT-4-Turbo Win

The refusals are pretty bad.

– Gemini Advanced only works for English queries as of now and its multi-modal aspects are not enabled yet. Even Gemini Pro’s image recognition is enabled but Advanced does it via Google Lens (which is not great), not itself. Also GPT-4 has more plugins like Code Interpreter at the moment. GPT-4-Turbo Win for Now

GPT-4-Turbo: 5 Wins (At most important areas)

Gemini Advanced: 4 Wins

Honorable Mention: Gemini Pro

A commenter notes, and I have noticed this too, that while Gemini refuses more overall, Gemini is more willing to discuss AI-related topics in particular than GPT-4.

You can do a side-by-side comparison here, among other places.

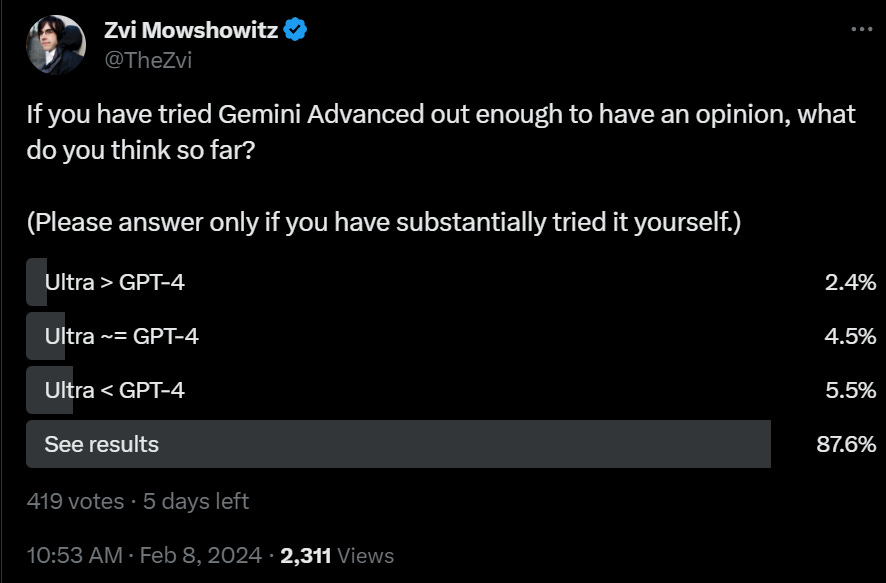

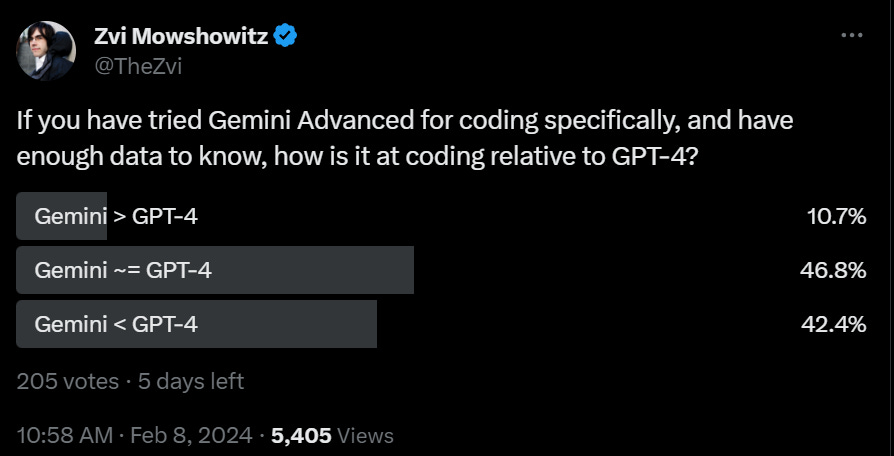

I did two polls on which one is better, one in general and one for code. I usually include a ‘see results’ but you can see why in this case, I didn’t for code.

The consensus view is that GPT-4 is modestly better than Gemini for both purposes. A lot more people prefer GPT-4 than prefer Gemini, but a majority see at least rough parity for Gemini. For now, it seems my preference is quirky. I am curious to take more polls after a month or two.

What is clear is that these two models are roughly on the same level. You can reasonably say GPT-4 is better than Gemini, but not in the sense that GPT-4 is better than GPT-3.5.

Pros and Cons

Some clear advantages of Gemini Advanced over ChatGPT:

- Good at explanations, definitions, answering questions with clear answers.

- No message cap. I have literally never hit the message cap, but others often do.

- Faster response. In practice this is a big gain, fitting into workflow far better.

- Google integration. Having access to your GMail, and to Google Maps, is big.

Some clear disadvantages of Gemini Advanced versus ChatGPT right now:

- It is less flexible and customizable.

- It has no current access to outside tools beyond its five extensions.

- It seems to do relatively worse on longer conversations.

- If you give it logic puzzles or set it up to fail, it often fails.

- It will give you more stupid warnings, cautions and lectures.

- It will refuse to answer more often for no good reason.

- In particular, it is very sensitive to faces in photographs.

- It will refuse to estimate, speculate or guess even more than usual.

- It is bad at languages other than English.

- Reports are that it is poor at non-standard coding languages too.

- Image generation is limited to 512×512.

- No PDF upload.

Some things that could go either way or speak to the future:

- Google might review your queries, but is very clear about this, unlike ChatGPT.

- They are working on getting AlphaCode 2 integrated into Gemini. Coding could radically improve once that happens. Same with various other DeepMind things.

- Your $20/month also gets you some other features, including more storage and longer sessions with Google Meet, that I was already paying $10/month to get. The effective cost here could be closer to $10/month than $20/month.

- People disagree on which model is ‘more intelligent’ as such; it seems close. As I have previously speculated and Ethan Mollick observes, this is probably because Google trained until they matched GPT-4 then rushed out the product, and we will continue to see improvements from both companies over time.

2 comments

comment by JBlack · 2024-02-21T03:12:31.021Z · LW(p) · GW(p)

For example, it didn’t get “In a room I have only 3 sisters. Anna is reading a book. Alice is playing a match of chess. What the third sister, Amanda, is, doing ?”

I didn't get this one either. When visualizing the problem, Alice was playing chess online, since in my experience this is how almost all games of chess are played. I tried to look for some sort of wordplay for the alliterative sister names or the strange grammar errors at the end of the question, but didn't get anywhere.