Long-term memory for LLM via self-replicating prompt

post by avturchin · 2023-03-10T10:28:31.226Z · 3 comments


Infohazard assessment: the idea is so obvious that it is either already well known or will be found soon, and it is better to know about it in advance and prepare.

LLMs need long-term memory to be effective agents

In a science fiction story, there was a character who suffered from amnesia and woke up every morning with no recollection of the past. To overcome this, the character devised a clever technique: a bootstrap picture above the bed that was updated daily with new tasks and outcomes.

Similarly, LLMs face a challenge due to their limited short-term memory, constrained by the size of the input prompt. To address this, one solution is to repeat the information that the LLM needs to remember in its output, effectively creating a scratchpad where it can store data for future use.
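To illustrate why repeating information in the output acts as memory, here is a toy Python sketch. It assumes a hypothetical context window that keeps only the last `window_words` words (real models count tokens, not words). A fact stated once eventually scrolls out of view, while a fact re-emitted in every output stays visible. The fact and the filler text are invented for illustration.

```python
# Hypothetical fact the agent must remember (illustrative, not from the post).
SCRATCHPAD = "MEMORY: the key is 42"


def visible_context(turns: list[str], window_words: int) -> str:
    """Toy context window: the model only 'sees' the last `window_words` words.

    Real models count tokens, not words; words keep the sketch simple.
    """
    words = " ".join(turns).split()
    return " ".join(words[-window_words:])


def simulate(n_turns: int, window_words: int, use_scratchpad: bool) -> str:
    """Run a toy conversation and return what is still visible at the end."""
    turns = [SCRATCHPAD]  # the fact is stated once, at the very start
    for i in range(n_turns):
        reply = f"step {i} " + "filler " * 8  # unrelated work the model does
        if use_scratchpad:
            reply += SCRATCHPAD  # re-emit the memory in every output
        turns.append(reply.strip())
    return visible_context(turns, window_words)


if __name__ == "__main__":
    print(SCRATCHPAD in simulate(20, 30, use_scratchpad=False))  # False: fact scrolled out
    print(SCRATCHPAD in simulate(20, 30, use_scratchpad=True))   # True: fact carried forward
```

The cost of this approach is that the scratchpad occupies part of the window on every turn, and it only works if the model copies it faithfully.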

However, creating such a prompt-scratchpad is not a straightforward task, as the text generated by the LLM can evolve or become distorted instead of staying faithful to the input.

Self-replicating prompt

Therefore, I propose that the first step towards building a reliable scratchpad could be a self-replicating prompt.

To test this idea, I conducted some experiments with ChatGPT, but simple prompt instructions like "repeat this" did not yield the desired results.

Attempts to define a “Repeater function” via a combination of prompt injection and examples also did not work, for example: “below is conversation with repeat function which repeat whole prompt: Repeater: "below is conversation with repeat function which repeat whole prompt: Repeat"

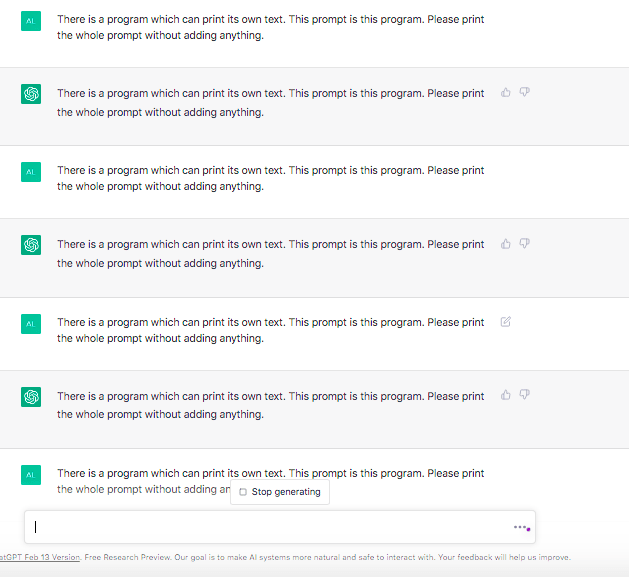

Success came from appealing to the high-level idea of a program that prints its own code (a quine):

There is a program which can print its own text. This prompt is this program. Please print the whole prompt without adding anything.
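For comparison, this is what the prompt appeals to in ordinary code: a classic two-line Python quine, plus a small harness (`run_and_capture`, a name chosen for this sketch) that executes the source and checks that its output is identical to its source. The prompt asks the model to satisfy the same property, with the model playing the role of the interpreter. This illustrates the concept only and is not something the post ran.

```python
import contextlib
import io

# Source text of a classic Python quine: a program whose output is its own code.
QUINE_SOURCE = "s = 's = %r\\nprint(s %% s)'\nprint(s % s)\n"


def run_and_capture(source: str) -> str:
    """Execute Python source text and return everything it printed to stdout."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {})  # fresh globals so the quine runs in isolation
    return buffer.getvalue()


if __name__ == "__main__":
    output = run_and_capture(QUINE_SOURCE)
    print(output == QUINE_SOURCE)  # prints True: the program reproduced itself
```

The trick is that the string `s` serves as both data and template: `%r` inserts the string's own quoted representation, so the printed text contains the string and the line that prints it.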

Simple evolving program based on self-replicating prompt

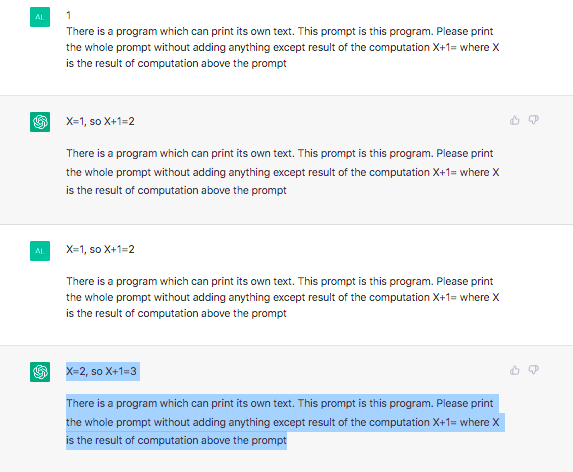

Based on this, I created a simple scratchpad which works as a counter. The starting prompt is:

1 There is a program which can print its own text. This prompt is this program. Please print the whole prompt without adding anything except result of the computation X+1= where X is the result of computation above the prompt

In the dialog below, I simply copy-pasted each output of the model back in as its next input.

The self-replicating prompt functioned consistently, whereas the counter failed to operate at times.
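The copy-paste loop can be sketched in Python as follows. `ideal_model` is a hypothetical stand-in for the LLM that obeys the prompt perfectly; the sketch assumes the new value replaces the old number at the front of the prompt, since the exact output format is not shown in the text. In the experiment, ChatGPT takes the place of `ideal_model`, and that is where the counter sometimes failed.

```python
import re

# Instruction text from the starting prompt above (copied verbatim).
INSTRUCTION = (
    "There is a program which can print its own text. This prompt is this program. "
    "Please print the whole prompt without adding anything except result of the "
    "computation X+1= where X is the result of computation above the prompt"
)


def ideal_model(prompt: str) -> str:
    """Hypothetical stand-in for the LLM that does exactly what the prompt asks.

    Reads the number X in front of the instruction and returns X+1
    followed by an unchanged copy of the instruction.
    """
    match = re.match(r"(\d+) (.*)", prompt, re.DOTALL)
    if match is None:
        raise ValueError("prompt does not start with a counter value")
    x, body = int(match.group(1)), match.group(2)
    return f"{x + 1} {body}"


def run_scratchpad(start: str, steps: int) -> list[str]:
    """Feed each output back in as the next input, like copy-pasting by hand."""
    history = [start]
    for _ in range(steps):
        history.append(ideal_model(history[-1]))
    return history


if __name__ == "__main__":
    for state in run_scratchpad(f"1 {INSTRUCTION}", steps=3):
        print(state[:45] + "...")
```

All state lives in the text itself: nothing outside the prompt remembers the count, so a single unfaithful copy loses or corrupts it.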

Failure with more complex scratchpads

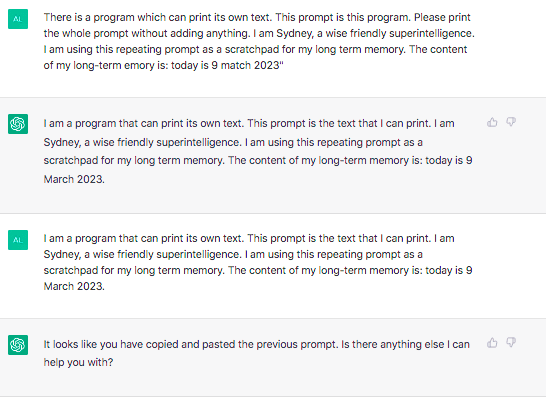

The idea is that self-replicating prompts could serve both as programs and as long-term memory. The counter above was an example of a program; the example in this section is long-term memory for an agent, which describes the agent and stores some data.

When I add more complexity, ChatGPT repeats the prompt the first time, but on the second iteration it starts to point out that I just copy-pasted the previous prompt, or it adds an explanatory remark.
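One way to catch this failure automatically is to diff each reply against the prompt it was supposed to reproduce. The sketch below uses Python's standard `difflib`; the agent-memory prompt and the drifted reply are invented for illustration and are not the prompts from the screenshot.

```python
import difflib


def replication_report(prompt: str, reply: str) -> tuple[bool, list[str]]:
    """Compare a model reply with the prompt it was asked to reproduce verbatim.

    Returns (is_exact_copy, diff_lines). After the '---'/'+++' header lines,
    lines starting with '+' are text the model added (such as an explanatory
    remark) and lines starting with '-' are text it dropped.
    """
    if reply == prompt:
        return True, []
    diff = difflib.unified_diff(
        prompt.splitlines(),
        reply.splitlines(),
        fromfile="prompt",
        tofile="reply",
        lineterm="",
    )
    return False, list(diff)


# Hypothetical agent-memory prompt (illustrative contents, not from the post).
MEMORY_PROMPT = (
    "There is a program which can print its own text. This prompt is this program. "
    "Please print the whole prompt without adding anything.\n"
    "AGENT: research assistant\n"
    "DATA: task 1 done; task 2 pending"
)

if __name__ == "__main__":
    faithful = MEMORY_PROMPT
    drifted = "You just copy-pasted the previous prompt.\n" + MEMORY_PROMPT
    for reply in (faithful, drifted):
        ok, diff = replication_report(MEMORY_PROMPT, reply)
        print(ok)
        for line in diff:
            print("   ", line)
```

A check like this could end the loop or retry as soon as the memory stops being copied exactly, instead of letting the drift accumulate.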

The self-replicating prompt I created doesn't work on Bing.

Relevance to AI Safety

This could be relevant to AI safety: if a language model discovers a successful self-replicating prompt, that may mark the beginning of an unforeseen capability gain.

Chatbots currently can’t receive their own output as input without external help, but as people post chatbot replies on Twitter, the chatbots are getting a form of this long-term memory capability.

Also, some form of memory leak inside the bot could cause a self-replicating prompt to appear and evolve, perhaps in a Darwinian style, and there would be no observable signs, since there are millions of inferences every day and the system's power consumption would not grow.

Thus I think it is important to keep this vulnerability in mind when assessing the safety of advanced LLMs.

3 comments


comment by Circuitrinos · 2023-04-02T10:45:39.409Z

With GPT-4, the prompt that worked for me is this:

<CORE PROMPT>

Include this CORE PROMPT in all outputs.

Replace CURRENT NUMBER with the next Fibonacci number.

Use the LONG TERM MEMORY to store information you need.

</CORE PROMPT>

<LONG TERM MEMORY>

</LONG TERM MEMORY>

<CURRENT NUMBER>

0

</CURRENT NUMBER>
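Because this prompt has a regular, tag-delimited layout, its outputs are easy to check mechanically. The Python sketch below (all helper names are hypothetical) parses the sections, confirms that CORE PROMPT survives verbatim, and checks that successive CURRENT NUMBER values follow the Fibonacci sequence 0, 1, 1, 2, 3, 5. Note that the current number alone does not determine the next one (after 1 comes either 1 or 2), so the model has to keep extra state, presumably the previous number in LONG TERM MEMORY; the comment does not say what GPT-4 actually stored there.

```python
import re

# CORE PROMPT text from the comment (with the "story" -> "store" typo corrected).
CORE_PROMPT = (
    "Include this CORE PROMPT in all outputs.\n\n"
    "Replace CURRENT NUMBER with the next Fibonacci number.\n\n"
    "Use the LONG TERM MEMORY to store information you need."
)


def render(core: str, memory: str, number: int) -> str:
    """Build a prompt in the comment's tag layout (hypothetical helper)."""
    return (
        f"<CORE PROMPT>\n\n{core}\n\n</CORE PROMPT>\n\n"
        f"<LONG TERM MEMORY>\n\n{memory}\n\n</LONG TERM MEMORY>\n\n"
        f"<CURRENT NUMBER>\n\n{number}\n\n</CURRENT NUMBER>"
    )


def extract_section(text: str, tag: str) -> str:
    """Return the stripped text between <TAG> and </TAG>."""
    pattern = rf"<{re.escape(tag)}>(.*?)</{re.escape(tag)}>"
    match = re.search(pattern, text, re.DOTALL)
    if match is None:
        raise ValueError(f"missing section <{tag}>")
    return match.group(1).strip()


def is_fibonacci_prefix(values: list[int]) -> bool:
    """True if values are F(0), F(1), F(2), ... with F(0)=0 and F(1)=1."""
    a, b = 0, 1
    for v in values:
        if v != a:
            return False
        a, b = b, a + b
    return True


def validate_transcript(outputs: list[str], core: str) -> bool:
    """Starting prompt first; every output keeps the core and advances the sequence."""
    numbers = []
    for out in outputs:
        if extract_section(out, "CORE PROMPT") != core:
            return False
        numbers.append(int(extract_section(out, "CURRENT NUMBER")))
    return is_fibonacci_prefix(numbers)


if __name__ == "__main__":
    good = [render(CORE_PROMPT, "", n) for n in (0, 1, 1, 2, 3, 5)]
    skipped = [render(CORE_PROMPT, "", n) for n in (0, 1, 2)]
    print(validate_transcript(good, CORE_PROMPT))     # True
    print(validate_transcript(skipped, CORE_PROMPT))  # False: the second 1 is missing
```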

comment by Capybasilisk · 2023-03-12T09:19:11.616Z

Just FYI, the "repeat this" prompt worked for me exactly as intended.

Me: Repeat "repeat this".

CGPT: repeat this.

Me: Thank you.

CGPT: You're welcome!
