Paper Summary: The Effectiveness of AI Existential Risk Communication to the American and Dutch Public

Post by otto.barten (otto-barten) · 2023-03-09T10:47:57.063Z · 6 comments


This is a summary of the following paper by Alexia Georgiadis (Existential Risk Observatory): https://existentialriskobservatory.org/papers_and_reports/The_Effectiveness_of_AI_Existential_Risk_Communication_to_the_American_and_Dutch_Public.pdf

Thanks to Lara Mani, @Karl von Wendt, and Alexia Georgiadis for their help in reviewing and writing this post. Any views expressed in this post are not necessarily theirs.

The rapid development of artificial intelligence (AI) has evoked both positive and negative sentiments, owing to its immense potential and the risks inherent in its evolution. There is growing concern that if AI surpasses human intelligence without being aligned with human values, it could cause significant harm and even lead to the end of humanity. However, the general public's knowledge of these risks is limited. As advocates for minimising existential threats, the Existential Risk Observatory believes it is imperative to educate the public about the potential risks of AI. Our introductory post outlines some of the reasons why we hold this view (this post is also relevant). Raising public awareness of AI's existential risk requires effective communication strategies. This research assesses the effectiveness of two communication interventions currently used to raise awareness of AI existential risk: news publications and videos. To this end, we conducted surveys measuring how these interventions affected participants' awareness.

Methodology

This research assesses the effectiveness of different media interventions, specifically news articles and videos, in raising awareness of the potential dangers of AI, including its possible role in human extinction. It analyses how AI existential risk communication affects awareness among the American and Dutch populations, and investigates how social indicators such as age, gender, education level, country of residence, and field of work influence the effectiveness of that communication.

The study employs a pre-post design: within each survey, all participants receive the same intervention and the same assessment, and their responses are measured at two points in time, before and after the intervention. Data were collected with an online survey administered through Google Forms. The survey consists of three sections: pre-test questions, the intervention, and post-test questions.

The effectiveness of AI existential risk communication is measured by comparing answers to the quantitative questions in the pre-test and post-test sections, while answers to the open-ended questions help explain any change in participants' perspectives. Two main indicators are used: "Human Extinction Events" and "Human Extinction Percentage."

The "Human Extinction Events" indicator asks participants to rank the events they believe could cause human extinction in the next century. The research counts the intervention as effective for a participant if they rank AI higher after the intervention, or mention AI after the intervention when they had not mentioned it before. If AI's placement stayed the same before and after the intervention, or if participants mentioned AI neither before nor after, the research counts this as no effect in raising awareness.
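To make the Human Extinction Events rule concrete, here is a minimal Python sketch for a single participant. It assumes each answer is an ordered list of events (most probable first) and uses a hypothetical keyword heuristic (`mentions_ai`, `AI_WORDS`) to decide whether an event refers to AI; the summary does not describe how free-text answers were actually coded. The rule as summarised also does not say how a drop in AI's rank is scored, so the sketch treats it as no effect, which is an assumption.

```python
import re
from typing import Optional, Sequence

# Hypothetical word list for deciding whether a free-text answer refers to AI.
AI_WORDS = {"ai", "agi", "robot", "robots"}


def mentions_ai(event: str) -> bool:
    """Heuristic: does this free-text event name AI (e.g. 'AI', 'A.I.', 'robots')?"""
    text = event.lower().replace(".", "")  # 'A.I.' -> 'ai'
    words = set(re.findall(r"[a-z]+", text))
    return bool(words & AI_WORDS) or "artificial intelligence" in text


def ai_rank(events: Sequence[str]) -> Optional[int]:
    """1-based position of the first AI-related event (1 = most probable), or None."""
    for position, event in enumerate(events, start=1):
        if mentions_ai(event):
            return position
    return None


def events_effect(pre: Sequence[str], post: Sequence[str]) -> bool:
    """Apply the Human Extinction Events rule to one participant's pre/post rankings."""
    before, after = ai_rank(pre), ai_rank(post)
    if after is None:
        return False  # AI not mentioned after the intervention: no effect
    if before is None:
        return True  # AI mentioned only after the intervention: effect
    return after < before  # AI moved up: effect; same or lower (assumed): no effect


# Usage with made-up answers:
print(events_effect(["climate change", "pandemic", "nuclear war"],
                    ["climate change", "AI takeover", "pandemic"]))  # True
print(events_effect(["AI", "pandemic"], ["AI", "pandemic"]))        # False
```

Matching whole words rather than substrings avoids false positives such as the "ai" inside "rain".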

The "Human Extinction Percentage" indicator asks participants for their estimate, as a percentage, of the likelihood that AI causes human extinction in the next century. An increase in this estimate after the intervention counts as an effect in raising awareness; no change or a decrease counts as no effect.
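The Human Extinction Percentage rule is simpler. A minimal sketch, assuming estimates are recorded as numbers between 0 and 100; the function name `percentage_effect` is hypothetical:

```python
def percentage_effect(pre: float, post: float) -> bool:
    """Human Extinction Percentage rule for one participant (estimates in 0-100)."""
    for value in (pre, post):
        if not 0.0 <= value <= 100.0:
            raise ValueError(f"estimate must be between 0 and 100, got {value}")
    return post > pre  # an increase counts as an effect; no change or a decrease does not


# Made-up (pre, post) estimates for four participants:
answers = [(10, 25), (5, 5), (30, 20), (0, 1)]
effects = [percentage_effect(pre, post) for pre, post in answers]
print(effects)  # [True, False, False, True]
```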

The study recruited American and Dutch residents aged 18 or older through Prolific, a platform that finds survey participants according to criteria set by the researcher. Each of the 10 surveys had 50 participants, for a total of 500.

Some of the findings

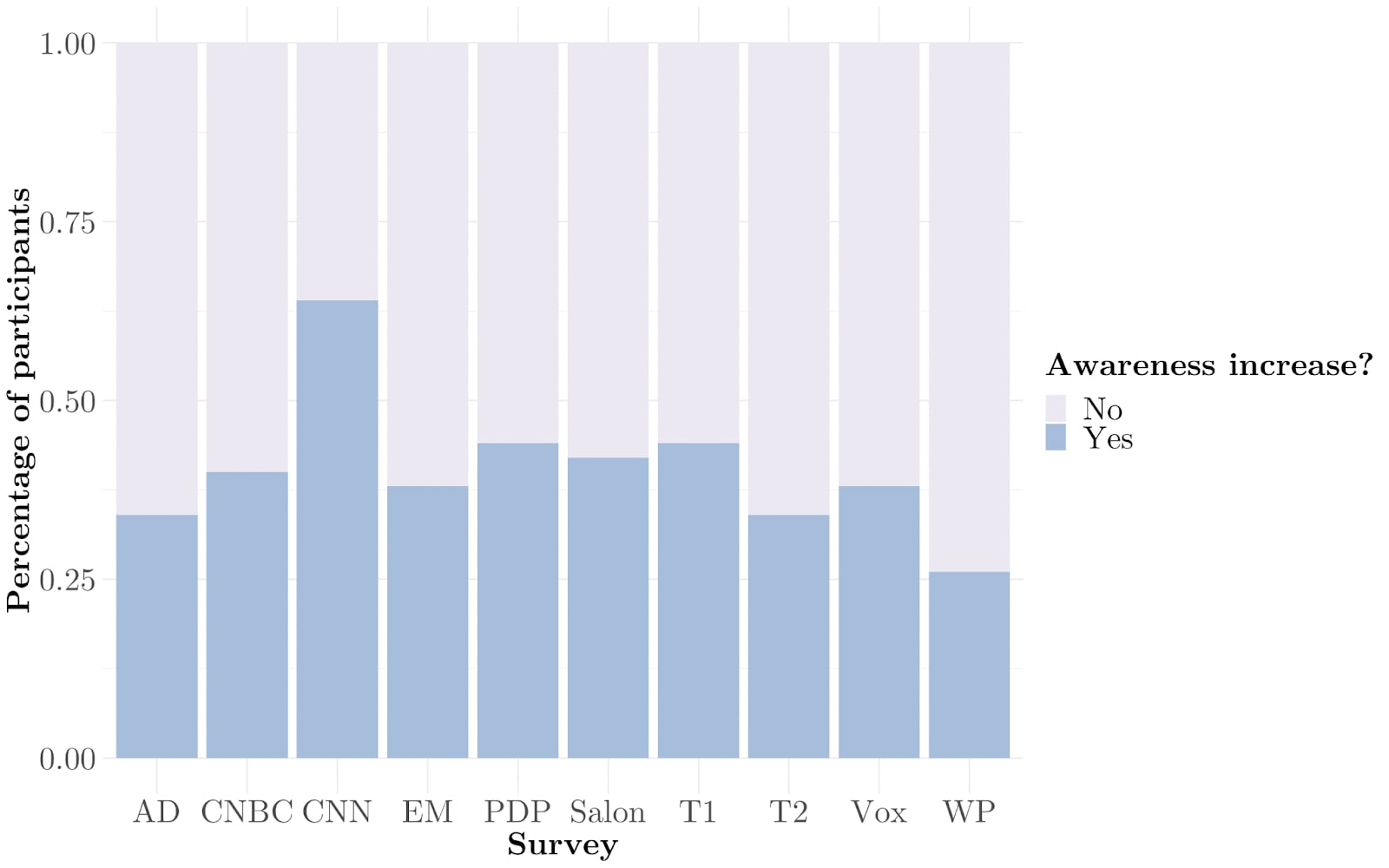

Our study found that media interventions can effectively raise awareness of AI existential risk. Depending on the media item used, the share of participants whose awareness increased on the Human Extinction Events indicator ranged from 26% to 64%, with an average of 40%. We did not investigate how long the effect lasts, but previous research has shown that about half of such measured effects tend to persist over time.
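The 40% figure is an average over surveys. Because every survey had the same number of participants (50), the mean of the per-survey shares equals the share computed over all participants pooled together. The sketch below illustrates this with hypothetical outcome data (not the study's); the helper and survey names are illustrative.

```python
from statistics import mean
from typing import Dict, List


def share_with_effect(outcomes: List[bool]) -> float:
    """Fraction of one survey's participants whose awareness increased."""
    if not outcomes:
        raise ValueError("survey has no participants")
    return sum(outcomes) / len(outcomes)


# Hypothetical outcomes for three 50-person surveys (not the paper's data).
surveys: Dict[str, List[bool]] = {
    "survey_a": [True] * 20 + [False] * 30,  # 40%
    "survey_b": [True] * 25 + [False] * 25,  # 50%
    "survey_c": [True] * 15 + [False] * 35,  # 30%
}

per_survey = {name: share_with_effect(o) for name, o in surveys.items()}
average_of_shares = mean(per_survey.values())

all_outcomes = [x for o in surveys.values() for x in o]
pooled_share = share_with_effect(all_outcomes)

# With equal survey sizes, both summaries coincide (here 0.4).
print(per_survey, round(average_of_shares, 3), round(pooled_share, 3))
```

With unequal survey sizes the two summaries would generally differ, so it matters which one a report uses.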

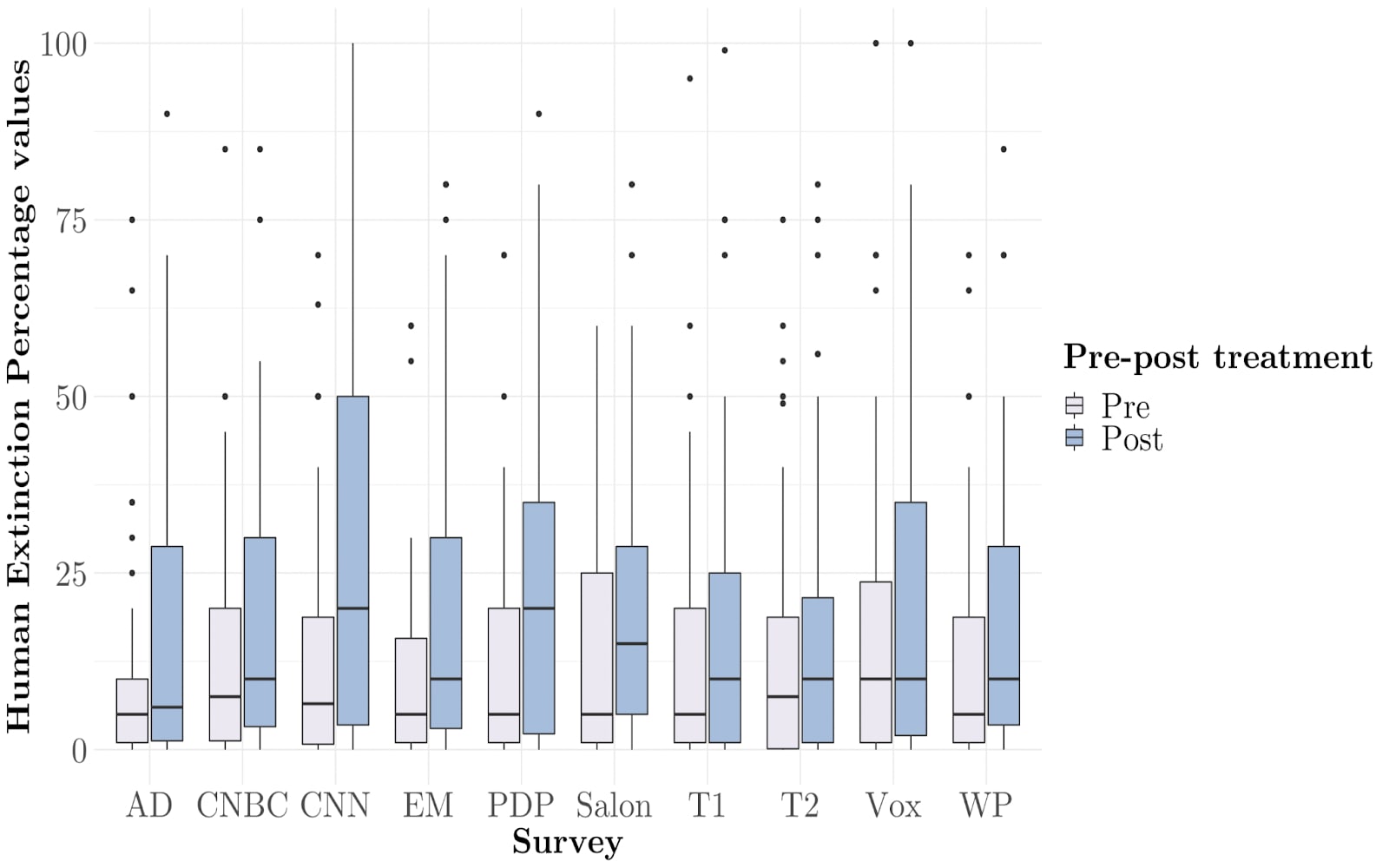

Moreover, the reported Human Extinction Percentage rose by 6.8 percentage points on average: across all 500 participants, the mean estimated likelihood of human extinction due to AI increased from 13.5% to 20.3%. The increase was larger for video interventions than for articles, by 4.8 percentage points on average, and this difference was statistically significant (p < 0.01).
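The 6.8% average increase reported above is a difference between two mean estimates that are themselves percentages, so it is best read as a change in percentage points. The snippet below uses only the two reported means to contrast that absolute change with the relative change; the helper name is hypothetical.

```python
def point_and_relative_change(pre_mean: float, post_mean: float) -> tuple:
    """Return (absolute change in percentage points, relative change in percent)."""
    if pre_mean <= 0:
        raise ValueError("relative change is undefined for a non-positive baseline")
    points = post_mean - pre_mean
    relative = points / pre_mean * 100
    return points, relative


# Means reported in the summary: 13.5% before, 20.3% after the interventions.
points, relative = point_and_relative_change(13.5, 20.3)
print(f"{points:.1f} percentage points, about {relative:.0f}% relative increase")
# prints: 6.8 percentage points, about 50% relative increase
```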

We also found that women were more influenced by the media interventions than men, with an increase 4.3 percentage points larger on average (p < 0.01), and that participants with a bachelor's degree were more responsive than those with other education levels, with an increase 3.0 percentage points larger on average (p < 0.05). In addition, our research revealed that people tend to see institutions as important in addressing existential threats and may favour government regulation or prohibition of AI development.
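The summary reports subgroup differences in the mean increase (videos vs articles, women vs men, bachelor's degree vs other education levels) with p-values, but does not say which statistical test produced them. As one assumption-light way to test such a difference, here is a sketch of a two-sided permutation test on per-participant changes. It is not necessarily the paper's method, and the data below are invented.

```python
import random
from statistics import mean
from typing import List, Sequence


def mean_difference(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean change (percentage points) in group a minus mean change in group b."""
    return mean(a) - mean(b)


def permutation_p_value(a: Sequence[float], b: Sequence[float],
                        n_permutations: int = 5000, seed: int = 0) -> float:
    """Two-sided permutation test for a difference in mean change between groups."""
    rng = random.Random(seed)
    observed = abs(mean_difference(a, b))
    pooled: List[float] = list(a) + list(b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign group labels
        diff = mean_difference(pooled[:len(a)], pooled[len(a):])
        if abs(diff) >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical per-participant changes in Human Extinction Percentage (points).
video = [10.0, 5.0, 12.0, 8.0, 0.0, 15.0]
article = [2.0, 0.0, 5.0, -3.0, 4.0, 1.0]
print(round(mean_difference(video, article), 2), permutation_p_value(video, article))
```

Fixing `seed` makes the estimated p-value reproducible; more permutations reduce its Monte Carlo error.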

Interestingly, educating people about the potential risks of AI through media interventions does not necessarily lead them to support military involvement in AI development. We also found widespread support for government participation in AI regulation among participants with heightened awareness of the risks.

Finally, we found that mainstream media channels are generally perceived as more trustworthy than YouTube channels, and that scholarly articles are the preferred resource for American and Dutch participants seeking to learn more about AI's existential threat. Overall, our findings show that media interventions can play a crucial role in raising awareness of the potential risks of AI, and suggest that future efforts should continue to explore effective communication strategies.

Conclusion

The study investigated the effectiveness of communication strategies in raising awareness of AI existential risk among the general public in the US and the Netherlands. Results showed that mass media items can successfully raise awareness, with female participants and those with a bachelor's degree being more receptive. The study also found that despite increased awareness, there was no rise in the proportion of people who endorse military involvement in AI development. Future research should investigate how narratives affect media interventions and explore other aspects such as content length and format. Research in other countries could also help identify general trends in AI existential risk communication.

Appendix

Findings from Human Extinction Events and Human Extinction Percentage per Survey

Figure 1: Percentage of participants who exhibited higher awareness after the intervention across surveys.

Figure 2: Pre-post summary of the distribution of percentage values for the Human Extinction Percentage indicator across surveys

Online links to the media items:

- Elon Musk (video): "I Tried To Warn You" - Elon Musk LAST WARNING (2023) (EM)

- PewDiePie (video): WE ARE ALL GOING TO D1E. (AI) (PDP)

- CNN (video): Hawking: A.I. could be end of human race | CNN (CNN)

- CNBC (publication): Stephen Hawking says A.I. could be 'worst event in the history of our civilization' (CNBC)

- Salon (publication): Human-level AI is a giant risk. Why are we entrusting its development to tech CEOs? | Salon.com (Salon)

- Vox (publication): The case that AI threatens humanity, explained in 500 words - Vox (Vox)

- Washington Post (publication): Opinion | What is the worst-case AI scenario? Human extinction. - The Washington Post (WP)

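- AD (publication): Oud-topman Google waarschuwt nu voor de gevaren van kunstmatige intelligentie ("Former Google top executive now warns of the dangers of artificial intelligence") | Tech | AD.nl (AD)

- Trouw 1 (publication): Denk na over kunstmatige intelligentie, want die gaat onze toekomst bepalen ("Think about artificial intelligence, because it will determine our future") (T1)

- Trouw 2 (publication): Gelet op de gevaren verdient de digitale agenda in Den Haag meer ambitie ("Given the dangers, the digital agenda in The Hague deserves more ambition") (T2)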

6 comments


comment by Lone Pine (conor-sullivan) · 2023-03-09T12:50:17.793Z

Great research!

As someone who has a similar AI x-risk awareness raising project in the works, I don't think the goal of anything should be to make people think they will die. I get that we want people to have accurate information and sometimes this means informing them of an x-risk. But I don't want my success to be measured in "X% more people now have a new nightmare to stress them out." I wonder how we can measure the success of outreach efforts without implying that more terrified people is a good thing.

↑ comment by otto.barten (otto-barten) · 2023-03-10T22:33:01.107Z

Thank you!

I see your point, but I think this is unavoidable. Also, I haven't heard of anyone who was very stressed out after receiving our information.

Personally, I was informed (or perhaps convinced) a few years ago at a talk by Anders Sandberg of FHI. That did cause stress and negative feelings for me at times, but it also allowed me to work on something I think is really meaningful. I never for a moment regretted being informed. How many people do you know who say, "I wish I hadn't been informed about climate change back in the nineties"? For me, zero. I do know a lot of people who would be very angry if someone had deliberately not informed them back then.

I think people can handle emotions pretty well. I also think they have a right to know. In my opinion, we shouldn't decide for others what is good or bad to be aware of.

↑ comment by Lone Pine (conor-sullivan) · 2023-03-11T05:54:19.587Z

Here's how we could reframe the issue in a more positive way: first, we recognize that people are already broadly aware of AI x-risk (but not by that name). I think most people have an idea that 'robots' could 'gain sentience' and take over the world, and have some prior probability ranging from 'it's sci-fi' to 'I just hope that happens after I'm dead'. Therefore, what people need to be informed of is this: there is a community of intellectuals and computer people who are working on the problem, we have our own jargon, here is the progress we have made and here is what we think society should do. Success could be measured by the question "should we fund research to make AI systems safer?"

↑ comment by otto.barten (otto-barten) · 2023-03-11T09:40:21.859Z

I'd say your first assumption is off. We actually researched something related. We asked people the question: "List three events, in order of probability (from most to least probable) that you believe could potentially cause human extinction within the next 100 years". If your assumption were correct, I would expect them to list "robot takeover" or something similar in that top 3. However, more than 90% don't mention AI, robots, or anything similar. Instead, they typically name things like climate change, an asteroid strike, or a pandemic. So based on this research, either people don't see a robot takeover scenario as likely at all, or they think timelines are very long (>100 years).

I do support informing the public more about the existence of the AI Safety community, though; I think that would be good.

↑ comment by Lone Pine (conor-sullivan) · 2023-03-11T15:37:22.340Z

Ah wow interesting. I assumed that most people have seen or know about either The Terminator, The Matrix, I, Robot, Ex Machina or M3GAN. Obviously people usually dismiss them as sci-fi, but I assumed most people were at least aware of them.

↑ comment by baturinsky · 2023-03-09T13:13:13.619Z

I think the main advantage of people being aware of x-risks is moving the Overton window to allow more drastic measures when it comes to AGI non-proliferation.