Research Questions from Stained Glass Windows

post by StefanHex (Stefan42) · 2022-06-08T12:38:44.848Z · LW · GW · 0 commentsContents

Case 1: Patterns that trigger styles Case 2: Triggering more advanced characteristics: Mood, Perspective, ... Case 3: What does DALL-E-2 know? None No comments

I just read Scott Alexander's recent ACX blog post on DALL-E 2 and I think it suggests many possible hypotheses about the internals of models. Could we test these with our current interpretability tools, e.g. for transformers? (DALL-E 2 seems to contain a transformer part)

Note: You might want to read or skim the blog post, otherwise the examples below will seem a bit out-of-context.

Case 1: Patterns that trigger styles

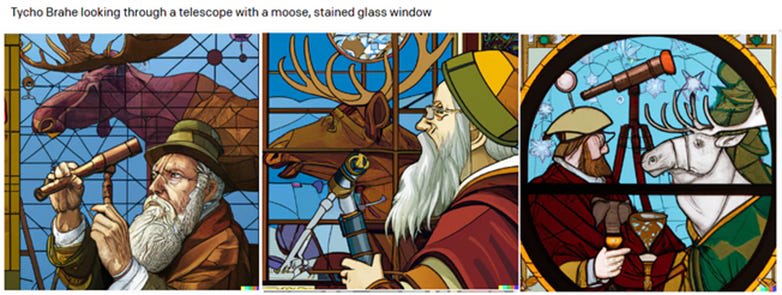

Example: Adding moose to the Tycho Brahe prompt

The stained glass has retreated from the style to become a picture element again. The style has become generally more modern.

(image linked from ACX)

Can we find out how the network decides what style to use? Can we use this to find out how to force the style? I would be surprised if there was no better way than saying "stained glass" to make DALL-E-2 produce a stained-glass style image (rather than a picture of a stained-glass window).

This might require 2 steps: Step 1 is to find out what neuron stores whether or not to make a stained-glass window (ideas see below), step 2 is to find inputs that maximize the excitation of this neuron (e.g. by iterating over random word input sequences, maybe enhanced with various Monte Carlo sampling techniques such as MCMC).

If we know how exactly the style information is stored, can we inject it somewhere along the way? I.e. can we write a "stained-glass image generator" by adding some inputs in feature-space (i.e. not just in the input sequence but e.g. between the encode- and decode processes of the Transformer), that always generates stained-glass style images?

Case 2: Triggering more advanced characteristics: Mood, Perspective, ...

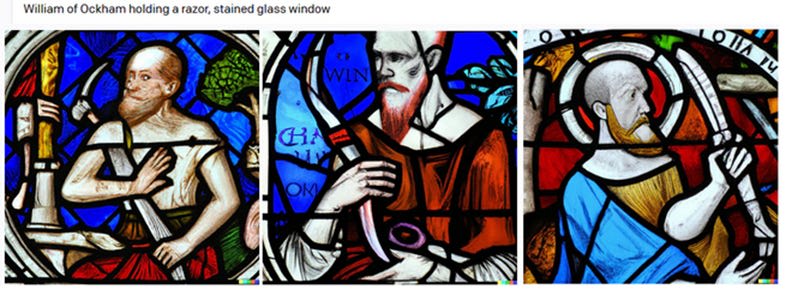

Styles are a pretty straightforward thing people do with DALL-E-2, make picture of X in style Y. But look at the Occam's razor pictures, some of them ("GILA WHAMM") show a person looking quite scared or somewhat dreadful, others (like "William of Ockham" ones) show different kind of emotions (confusion? scared?).

Is this something the NN "knows"? Could we find out where in the network this is stored, and again, find patterns that provoke the behavior?

Possible idea: Use emotion-classifier network to classify outputs (generated from random inputs), group them, and look at what neuron excitation are common in a category, but different between the different categories. (Possibly difficult since the outputs will be anything, not just faces, but we could deal with this by limiting the random inputs.)

Or the other way around again, take somewhat-random input strings, select inputs that maximally excite a certain neuron, collect their outputs and finally have a human see if they recognize a common theme in the output image. Like we have humans look at OpenAI Microscope images and find "eye" or "edge" features.

Case 3: What does DALL-E-2 know?

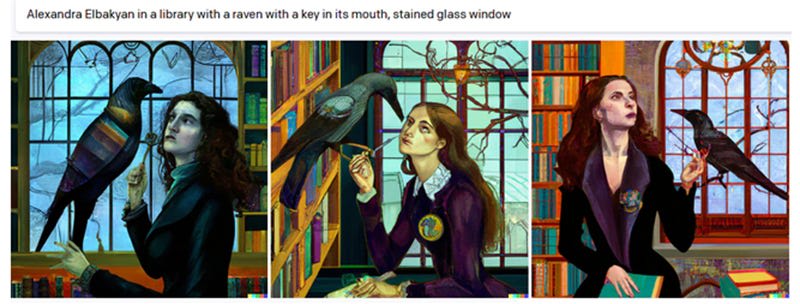

I think the section trying to make images of Alexandra Elbakyan is very interesting.

For the record, Alexandra Elbakyan looks like this: …and not like a Goth version of Hermione Granger. I think DALL-E assumes that anybody in the vicinity of a raven must be a Goth, and anybody in a library must be at least sort of Hermione Granger

(image linked from ACX)

Once we solved questions like cases 1 and 2 we can think of more advanced questions. How does Alexandra Elbakyan + Raven + Library excite neurons associated with Goth or Hermione Granger? And can we find "anti-patterns" to subtract / counteract these unwanted effects?

These are just some ideas I had while reading the blog post, I am not (at the moment) working on any of this. But I would love to hear if anyone is going to trying this out, or about ideas how to approach this -- I'm sure there must be some decent interpretability tools already out there.

0 comments

Comments sorted by top scores.