Nash Equilibria and Schelling Points

post by Scott Alexander (Yvain) · 2012-06-29T02:06:13.524Z · 82 comments


A Nash equilibrium is an outcome in which neither player is willing to unilaterally change her strategy. Nash equilibria are often applied to games in which both players move simultaneously and where decision trees are less useful.

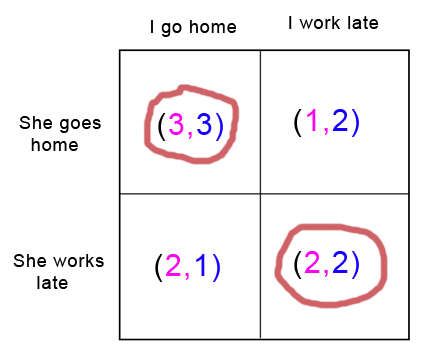

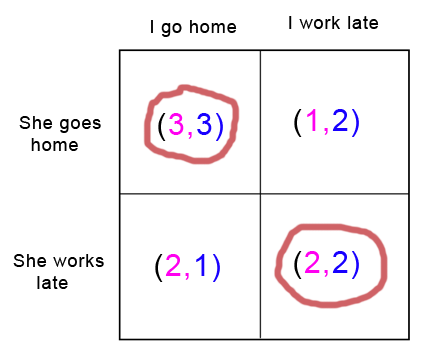

Suppose my girlfriend and I have both lost our cell phones and cannot contact each other. Both of us would really like to spend more time at home with each other (utility 3). But both of us also have a slight preference in favor of working late and earning some overtime (utility 2). If I go home and my girlfriend's there and I can spend time with her, great. If I stay at work and make some money, that would be pretty okay too. But if I go home and my girlfriend's not there and I have to sit around alone all night, that would be the worst possible outcome (utility 1). Meanwhile, my girlfriend has the same set of preferences: she wants to spend time with me, she'd be okay with working late, but she doesn't want to sit at home alone.

This “game” has two Nash equilibria. If we both go home, neither of us regrets it: we can spend time with each other and we've both got our highest utility. If we both stay at work, again, neither of us regrets it: since my girlfriend is at work, I am glad I stayed at work instead of going home, and since I am at work, my girlfriend is glad she stayed at work instead of going home. Although we both may wish that we had both gone home, neither of us specifically regrets our own choice, given our knowledge of how the other acted.
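The "neither of us regrets it" test can be run mechanically. Below is a minimal brute-force sketch, assuming the utilities from the story (3 for an evening together, 2 for working late whatever the other person does, 1 for sitting home alone); it checks every pair of choices for a profitable unilateral switch.

```python
from itertools import product

CHOICES = ("home", "work")

def payoff(my_choice: str, her_choice: str) -> tuple[int, int]:
    """Return (my utility, her utility) under the story's assumed numbers."""
    def one(mine: str, theirs: str) -> int:
        if mine == "work":
            return 2                      # overtime is "pretty okay" either way
        return 3 if theirs == "home" else 1  # together = 3, home alone = 1
    return one(my_choice, her_choice), one(her_choice, my_choice)

def pure_nash_equilibria() -> list[tuple[str, str]]:
    """Profiles where neither player gains by switching alone."""
    result = []
    for mine, hers in product(CHOICES, CHOICES):
        my_u, her_u = payoff(mine, hers)
        my_best = all(payoff(alt, hers)[0] <= my_u for alt in CHOICES)
        her_best = all(payoff(mine, alt)[1] <= her_u for alt in CHOICES)
        if my_best and her_best:
            result.append((mine, hers))
    return result

print(pure_nash_equilibria())  # [('home', 'home'), ('work', 'work')]
```

The mismatched outcomes fail because whoever went home alone would rather have stayed at work.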

When all players in a game are reasonable, the (apparently) rational choice will be to go for a Nash equilibrium (why would you want to make a choice you'll regret when you know what the other player chose?). And since John Nash (remember that movie A Beautiful Mind?) proved that every finite game has at least one, possibly in mixed strategies, all games between well-informed rationalists (who are not also being superrational in a sense to be discussed later) should end in one of these.

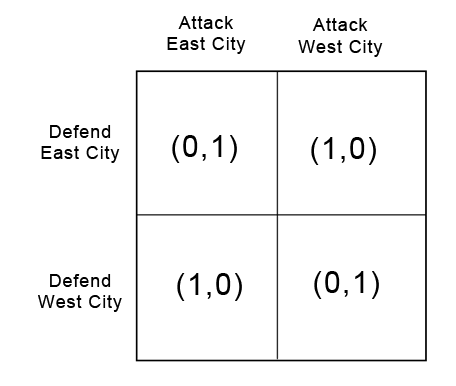

What if the game seems specifically designed to thwart Nash equilibria? Suppose you are a general invading an enemy country's heartland. You can attack one of two targets, East City or West City (you declared war on them because you were offended by their uncreative toponyms). The enemy general only has enough troops to defend one of the two cities. If you attack an undefended city, you can capture it easily, but if you attack the city with the enemy army, they will successfully fight you off.

Here there is no Nash equilibrium without introducing randomness. If both you and your enemy choose to go to East City, you will regret your choice: you should have gone to West and taken it undefended. If you go to East and he goes to West, he will regret his choice: he should have gone East and stopped you in your tracks. Reverse the names, and the same is true of the branches where you go to West City. So every option has someone regretting their choice, and there is no simple Nash equilibrium. What do you do?
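The same brute-force check confirms that no pure strategy pair survives. This is a sketch under an assumed scoring the post does not give: the attacker scores 1 for taking an undefended city and 0 if repelled, and the game is zero-sum.

```python
from itertools import product

CITIES = ("East", "West")

def attacker_payoff(attack: str, defend: str) -> int:
    # Assumed scoring: 1 for capturing an undefended city, 0 if repelled.
    return 1 if attack != defend else 0

def pure_nash_equilibria() -> list[tuple[str, str]]:
    found = []
    for attack, defend in product(CITIES, CITIES):
        u = attacker_payoff(attack, defend)
        attacker_ok = all(attacker_payoff(a, defend) <= u for a in CITIES)
        # Zero-sum: the defender gets -u, so he wants u as small as possible.
        defender_ok = all(attacker_payoff(attack, d) >= u for d in CITIES)
        if attacker_ok and defender_ok:
            found.append((attack, defend))
    return found

print(pure_nash_equilibria())  # []
```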

Here the answer should be obvious: it doesn't matter. Flip a coin. If you flip a coin, and your opponent flips a coin, neither of you will regret your choice. Here we see a "mixed Nash equilibrium", an equilibrium reached with the help of randomness.
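Why the coin flip is an equilibrium can be checked with exact fractions. Assuming the same 1-or-0 scoring as before, a fair coin leaves the other side with nothing to exploit: every response earns exactly one half, so nobody regrets their choice.

```python
from fractions import Fraction

HALF = Fraction(1, 2)

def attacker_value(p_attack_east: Fraction, p_defend_east: Fraction) -> Fraction:
    """Probability the attacker hits the undefended city (win = 1, zero-sum)."""
    p_east_undefended = 1 - p_defend_east
    p_west_undefended = p_defend_east
    return p_attack_east * p_east_undefended + (1 - p_attack_east) * p_west_undefended

# Against a coin-flipping defender, every attacker strategy earns 1/2 ...
print([attacker_value(p, HALF) for p in (Fraction(0), HALF, Fraction(1))])
# ... and against a coin-flipping attacker, every defense concedes 1/2.
print([attacker_value(HALF, q) for q in (Fraction(0), HALF, Fraction(1))])
```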

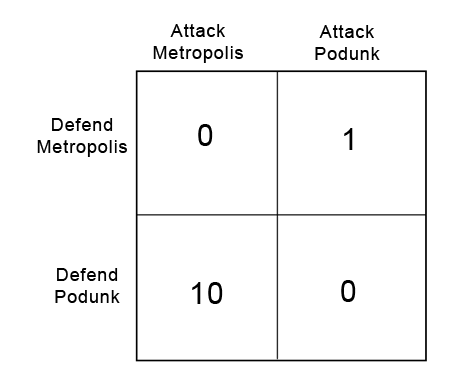

We can formalize this further. Suppose you are attacking a different country with two new potential targets: Metropolis and Podunk. Metropolis is a rich and strategically important city (utility: 10); Podunk is an out of the way hamlet barely worth the trouble of capturing it (utility: 1).

A so-called first-level player thinks: “Well, Metropolis is a better prize, so I might as well attack that one. That way, if I win I get 10 utility instead of 1.”

A second-level player thinks: “Obviously Metropolis is a better prize, so my enemy expects me to attack that one. So if I attack Podunk, he'll never see it coming and I can take the city undefended.”

A third-level player thinks: “Obviously Metropolis is a better prize, so anyone clever would never do something as obvious as attack there. They'd attack Podunk instead. But my opponent knows that, so, seeking to stay one step ahead of me, he has defended Podunk. He will never expect me to attack Metropolis, because that would be too obvious. Therefore, the city will actually be undefended, so I should take Metropolis.”

And so on ad infinitum, until you become hopelessly confused and have no choice but to spend years developing a resistance to iocane powder.

But surprisingly, there is a single best solution to this problem, even if you are playing against an opponent who, like Professor Quirrell, plays “one level higher than you.”

When the two cities were equally valuable, we solved our problem by flipping a coin. That won't be the best choice this time. Suppose we flipped a coin and attacked Metropolis when we got heads, and Podunk when we got tails. Since my opponent can predict my strategy, he would defend Metropolis every time; I am equally likely to attack Podunk and Metropolis, but taking Metropolis would cost them much more utility. My total expected utility from flipping the coin is 0.5: half the time I successfully take Podunk and gain 1 utility, and half the time I am defeated at Metropolis and gain 0. And this is not a Nash equilibrium: if I had known my opponent's strategy was to defend Metropolis every time, I would have skipped the coin flip and gone straight for Podunk.
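The arithmetic in the previous paragraph, as a small sketch: a defended city is assumed to yield 0, and the opponent is assumed to always defend Metropolis.

```python
from fractions import Fraction

VALUE = {"Metropolis": 10, "Podunk": 1}

def attacker_value(p_attack_metro: Fraction, p_defend_metro: Fraction) -> Fraction:
    """Expected capture value; attacking a defended city yields 0."""
    return (p_attack_metro * (1 - p_defend_metro) * VALUE["Metropolis"]
            + (1 - p_attack_metro) * p_defend_metro * VALUE["Podunk"])

coin = attacker_value(Fraction(1, 2), Fraction(1))  # fair coin vs. "always defend Metropolis"
podunk = attacker_value(Fraction(0), Fraction(1))   # skip the coin, always hit Podunk
print(coin, podunk)  # 1/2 1
```

Because always attacking Podunk beats the coin against this defense, the fair coin cannot be part of an equilibrium.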

So how can I find a Nash equilibrium? In a Nash equilibrium, I don't regret my strategy when I learn my opponent's action. If I can come up with a strategy that pays exactly the same utility whether my opponent defends Podunk or Metropolis, it will have this useful property. We'll start by supposing I am flipping a biased coin that lands on Metropolis with probability x, and therefore on Podunk with probability (1-x). To be truly indifferent to which city my opponent defends, 10x (the utility my strategy earns when my opponent leaves Metropolis undefended) should equal 1(1-x) (the utility my strategy earns when my opponent leaves Podunk undefended). Some quick algebra finds that 10x = 1(1-x) is satisfied by x = 1/11. So I should attack Metropolis 1/11 of the time and Podunk 10/11 of the time.
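The indifference condition solves to x = 1/(10 + 1). A sketch with exact fractions, where `METRO` and `PODUNK` are just names for the city values above:

```python
from fractions import Fraction

METRO, PODUNK = 10, 1

# Attacker hits Metropolis with probability x. Indifference between the
# defender's two options: METRO * x == PODUNK * (1 - x), so x = PODUNK / (METRO + PODUNK).
x = Fraction(PODUNK, METRO + PODUNK)
assert METRO * x == PODUNK * (1 - x)
print(x, 1 - x)  # 1/11 10/11
```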

My opponent, going through a similar process, comes up with the suspiciously similar result that he should defend Metropolis 10/11 of the time, and Podunk 1/11 of the time.
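The opponent's calculation mirrors mine: he picks the probability y of defending Metropolis that makes my two attacks pay the same. A sketch, using the same assumed names:

```python
from fractions import Fraction

METRO, PODUNK = 10, 1

# Defender guards Metropolis with probability y. The attacker must be
# indifferent: attacking Metropolis pays METRO * (1 - y), Podunk pays PODUNK * y.
y = Fraction(METRO, METRO + PODUNK)
assert METRO * (1 - y) == PODUNK * y
print(y, 1 - y)  # 10/11 1/11
```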

If we both pursue our chosen strategies, I gain an average of 0.9090... utility each round, soundly beating my previous record of 0.5, and my opponent suspiciously loses an average of 0.9090... utility. It turns out there is no other strategy I can use to consistently do better than this when my opponent is playing optimally, and that even if I knew my opponent's strategy I would not be able to come up with a better strategy to beat it. It also turns out that there is no other strategy my opponent can use to consistently do better than this if I am playing optimally, and that my opponent, upon learning my strategy, doesn't regret his strategy either.
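Both claims can be checked directly. The sketch below computes the average of 10/11 and confirms that neither side can gain by switching to a pure strategy; since any mixed strategy is an average of the two pure ones, no mixture helps either.

```python
from fractions import Fraction

METRO, PODUNK = 10, 1
x = Fraction(1, 11)   # attacker: P(attack Metropolis)
y = Fraction(10, 11)  # defender: P(defend Metropolis)

def value(p_attack_metro: Fraction, p_defend_metro: Fraction) -> Fraction:
    """Attacker's expected gain (the defender's expected loss)."""
    return (p_attack_metro * (1 - p_defend_metro) * METRO
            + (1 - p_attack_metro) * p_defend_metro * PODUNK)

v = value(x, y)
print(v, float(v))  # 10/11 0.9090909090909091

# No pure deviation helps either side (mixtures are averages of these).
assert all(value(p, y) == v for p in (Fraction(0), Fraction(1)))
assert all(value(x, q) == v for q in (Fraction(0), Fraction(1)))
```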

In The Art of Strategy, Dixit and Nalebuff cite a real-life application of the same principle in, of all things, penalty kicks in soccer. A right-footed kicker has a better chance of success if he kicks to the right, but a smart goalie can predict that and will defend to the right; a player expecting this can accept a less spectacular kick to the left if he thinks the left will be undefended, but a very smart goalie can predict this too, and so on. Economist Ignacio Palacios-Huerta laboriously analyzed the success rates of various kickers and goalies on the field, and found that they actually pursued a mixed strategy generally within 2% of the game-theoretic ideal, proving that people are pretty good at doing these kinds of calculations unconsciously.

So every game really does have at least one Nash equilibrium, even if it's only a mixed strategy. But some games can have many, many more. Recall the situation between me and my girlfriend:

There are two Nash equilibria: both of us working late, and both of us going home. If there were only one equilibrium, and we were both confident in each other's rationality, we could choose that one and there would be no further problem. But in fact this game does present a problem: intuitively it seems like we might still make a mistake and end up in different places.

Here we might be tempted to just leave it to chance; after all, there's a 50% probability we'll both end up choosing the same activity. But other games might have thousands or millions of possible equilibria and so will require a more refined approach.
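Why leaving it to chance scales so badly: if both players pick uniformly and independently among n equally good equilibria (an assumption for illustration), they match with probability n × (1/n)² = 1/n. A sketch with a quick simulation as a cross-check:

```python
import random
from fractions import Fraction

def p_match(n_options: int) -> Fraction:
    """Both players pick uniformly and independently among n_options."""
    return Fraction(1, n_options)  # sum over options of (1/n) * (1/n)

def simulate(n_options: int, trials: int = 100_000, seed: int = 0) -> float:
    rng = random.Random(seed)
    hits = sum(rng.randrange(n_options) == rng.randrange(n_options) for _ in range(trials))
    return hits / trials

for n in (2, 1_000, 1_000_000):
    print(n, p_match(n), simulate(n))
```

With two equilibria this is the 50% from the text; with a million it is essentially hopeless.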

The Art of Strategy describes a game show in which two strangers were separately taken to random places in New York and promised a prize if they could successfully meet up; they had no communication with one another and no clues about how such a meeting was to take place. Here there is a nearly infinite number of possible choices: they could both meet at the corner of First Street and First Avenue at 1 PM, they could both meet at First Street and Second Avenue at 1:05 PM, etc. Since neither party would regret their actions (if I went to First and First at 1 and found you there, I would be thrilled) these are all Nash equilibria.

Despite this mind-boggling array of possibilities, in fact all six episodes of this particular game ended with the two contestants meeting successfully after only a few days. The most popular meeting site was the Empire State Building at noon.

How did they do it? The world-famous Empire State Building is what game theorists call focal: it stands out as a natural and obvious target for coordination. Likewise noon, classically considered the very middle of the day, is a focal point in time. These focal points, also called Schelling points after theorist Thomas Schelling, who discovered them, provide an obvious target for coordination attempts.

What makes a Schelling point? The most important factor is that it be special. The Empire State Building, depending on when the show took place, may have been the tallest building in New York; noon is the only time that fits the criterion of “exactly in the middle of the day”, except maybe midnight, when people would be expected to be too sleepy to meet up properly.

Of course, specialness, like beauty, is in the eye of the beholder. David Friedman writes:

Two people are separately confronted with the list of numbers [2, 5, 9, 25, 69, 73, 82, 96, 100, 126, 150] and offered a reward if they independently choose the same number. If the two are mathematicians, it is likely that they will both choose 2—the only even prime. Non-mathematicians are likely to choose 100—a number which seems, to the mathematicians, no more unique than the other two exact squares. Illiterates might agree on 69, because of its peculiar symmetry—as would, for a different reason, those whose interest in numbers is more prurient than mathematical.
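The two mathematical facts in the quote are easy to confirm: 2 is the only even prime in the list, and 100 shares "exact square" status with 9 and 25. A short sketch:

```python
from math import isqrt

NUMBERS = [2, 5, 9, 25, 69, 73, 82, 96, 100, 126, 150]

def is_prime(n: int) -> bool:
    # Trial division up to the integer square root.
    return n >= 2 and all(n % d for d in range(2, isqrt(n) + 1))

even_primes = [n for n in NUMBERS if n % 2 == 0 and is_prime(n)]
squares = [n for n in NUMBERS if isqrt(n) ** 2 == n]
print(even_primes, squares)  # [2] [9, 25, 100]
```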

A recent open thread comment pointed out that you can justify anything with “for decision-theoretic reasons” or “due to meta-level concerns”. I humbly propose adding “as a Schelling point” to this list, except that the list is tongue-in-cheek and Schelling points really do explain almost everything - stock markets, national borders, marriages, private property, religions, fashion, political parties, peace treaties, social networks, software platforms and languages all involve or are based upon Schelling points. In fact, whenever something has “symbolic value” a Schelling point is likely to be involved in some way. I hope to expand on this point a bit more later.

Sequential games can include one more method of choosing between Nash equilibria: the idea of a subgame-perfect equilibrium, a special kind of Nash equilibrium that remains a Nash equilibrium for every subgame of the original game. In more intuitive terms, this equilibrium means that even in a long multiple-move game no one at any point makes a decision that goes against their best interests (remember the example from the last post, where we crossed out the branches in which Clinton made implausible choices that failed to maximize his utility?) Some games have multiple Nash equilibria but only one subgame-perfect one; we'll examine this idea further when we get to the iterated prisoners' dilemma and ultimatum game.
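Crossing out implausible branches is backward induction: solve the last moves first, then let earlier movers anticipate them. A minimal sketch on a hypothetical two-move entry game (not from the post), where player 0 may enter and player 1 then fights or accommodates. "Stay out, and fight if entry happens" is also a Nash equilibrium, because the threat is never tested, but it is not subgame-perfect: once entry has happened, fighting is not player 1's best move.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

@dataclass
class Node:
    player: Optional[int] = None                       # index of the mover; None marks a leaf
    payoffs: Tuple[int, ...] = ()                      # leaf payoffs, one entry per player
    children: Dict[str, "Node"] = field(default_factory=dict)

def backward_induction(node: Node) -> Tuple[Tuple[int, ...], List[str]]:
    """Solve every subgame bottom-up; return the SPE payoffs and the path played."""
    if node.player is None:
        return node.payoffs, []
    best: Optional[Tuple[Tuple[int, ...], List[str]]] = None
    for move, child in node.children.items():
        payoffs, path = backward_induction(child)
        # Keep the move that is best for whoever moves here (first one wins ties).
        if best is None or payoffs[node.player] > best[0][node.player]:
            best = (payoffs, [move] + path)
    assert best is not None, "a decision node needs at least one child"
    return best

# Hypothetical entry game with made-up payoffs (player 0, player 1).
game = Node(player=0, children={
    "enter": Node(player=1, children={
        "fight": Node(payoffs=(-1, -1)),
        "accommodate": Node(payoffs=(1, 1)),
    }),
    "stay out": Node(payoffs=(0, 2)),
})

print(backward_induction(game))  # ((1, 1), ['enter', 'accommodate'])
```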

In conclusion, every game has at least one Nash equilibrium, a point at which neither player regrets her strategy even when she knows the other player's strategy. Some equilibria are simple choices, others involve plans to make choices randomly according to certain criteria. Purely rational players will always end up at a Nash equilibrium, but many games will have multiple possible equilibria. If players are trying to coordinate, they may land at a Schelling point, an equilibrium that stands out as special in some way.

Comments

comment by Kawoomba · 2012-06-29T10:16:19.301Z

The actual equilibria can seem truly mind boggling at first glance. Consider this famous example:

There are 5 rational pirates, A, B, C, D and E. They find 100 gold coins. They must decide how to distribute them.

The pirates have a strict order of seniority: A is superior to B, who is superior to C, who is superior to D, who is superior to E.

The pirate world's rules of distribution are thus: the most senior pirate proposes a distribution of coins. The pirates, including the proposer, then vote on whether to accept this distribution. If the proposed allocation is approved by a majority or a tie vote, it happens. If not, the proposer is thrown overboard from the pirate ship and dies, and the next most senior pirate makes a new proposal to begin the system again.

Pirates base their decisions on three factors.

1) Each pirate wants to survive.

2) Given survival, each pirate wants to maximize the number of gold coins he receives.

3) Each pirate would prefer to throw another overboard, if all other results would otherwise be equal.

The pirates do not trust each other, and will neither make nor honor any promises between pirates apart from the main proposal.

It might be expected intuitively that Pirate A will have to allocate little if any to himself for fear of being voted off so that there are fewer pirates to share between. However, this is quite far from the theoretical result.

Which is ...

...

...

A: 98 coins

B: 0 coins

C: 1 coin

D: 0 coins

E: 1 coin

Proof is in the article linked. Amazing, isn't it? :-)
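The result can be reproduced by backward induction. A sketch under these assumptions: a tie passes, so the proposer needs half the votes including his own; a pirate votes yes only if offered strictly more than he would get once the proposer is thrown overboard (rule 3); when several pirates are equally cheap to bribe, the more senior one is picked (an arbitrary choice); and there is enough gold to cover the bribes, which holds for small crews like this one.

```python
def pirate_split(n_pirates: int, coins: int = 100) -> list[int]:
    """Backward induction for the pirate game; index 0 is the most senior pirate."""
    alloc = [coins]  # a lone pirate keeps everything
    for size in range(2, n_pirates + 1):
        juniors = alloc  # what each junior gets if the current proposer is thrown overboard
        # A majority or a tie passes, so the proposer needs ceil(size / 2) votes,
        # one of which is his own.
        needed = (size + 1) // 2 - 1
        # A junior votes yes only for strictly more than his fallback (rule 3),
        # so buy the cheapest votes; sorted() is stable, so ties go to the more senior.
        cheapest = sorted(range(size - 1), key=lambda i: juniors[i])[:needed]
        bribes = [0] * (size - 1)
        for i in cheapest:
            bribes[i] = juniors[i] + 1
        assert sum(bribes) <= coins, "bribes exceed the gold; this simple model no longer applies"
        alloc = [coins - sum(bribes)] + bribes
    return alloc

print(pirate_split(5))  # [98, 0, 1, 0, 1]
```

Running it for smaller crews shows the chain of reasoning: two pirates give (100, 0), three give (99, 0, 1), four give (99, 0, 1, 0), and A's offer of one coin each to C and E beats what they would get if A went overboard.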

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-06-29T15:52:07.883Z

It's amazing, the results people come up with when they don't use TDT (or some other formalism that doesn't defect in the Prisoner's Dilemma - though so far as I know, the concept of the Blackmail Equation is unique to TDT.)

(Because the base case of the pirate scenario is, essentially, the Ultimatum game, where the only reason the other person offers you $1 instead of $5 is that they model you as accepting a $1 offer, which is a very stupid answer to compute if it results in you getting only $1 - only someone who two-boxed on Newcomb's Problem would contemplate such a thing.)

↑ comment by cousin_it · 2012-06-29T16:10:17.200Z

At some point you proposed to solve the problem of blackmail by responding to offers but not to threats. Do you have a more precise version of that proposal? What logical facts about you and your opponent indicate that the situation is an offer or a threat? I had problems trying to figure that out.

↑ comment by [deleted] · 2012-06-29T18:33:01.219Z

I have a possible idea for this, but I think I need help working out the rules for the logical scenario in more detail. All I have are examples (and it's not like examples of a threat are that tricky to imagine).

Person A makes situations that involve some form of request (an offer, a series of offers, a threat, etc.). Person B may either Accept, Decline, or Revoke Person A's requests. Revoking a request blocks requests from occurring at all, at a cost.

Person A might say "Give me 1 dollar and I'll give you a Frozen Pizza." And Person B might "Accept" if Frozen Pizza grants more utility than a dollar would.

Person A might say "Give me 100 dollars and I'll give you a Frozen Pizza." Person B would "Decline" the offer, since Frozen Pizza probably wouldn't be worth more than 100 dollars, but he probably wouldn't bother to revoke it. Maybe Person A's next situation will be more reasonable.

Or Person A might say "Give me 500 dollars or I'll kill you." And Person B will pick "Revoke" because he doesn't want that situation to occur at all: being forced to choose between death and losing 500 dollars is not a good situation. He might also revoke future situations from that person.

Alternate examples: If you're trying to convince someone to go out on a date, they might say "Yes", "No", or "Get away from me, you creep!"

If you are trying to enter a password to a computer system, the system might allow access (correct password), deny access (incorrect password), or deny access and lock out further attempts for some period (multiple incorrect passwords).

Or if you're at a receptionist desk:

A: "I plan on going to the bathroom. Can you tell me where it is?"

B: "Yes."

A: "I plan on going on a date tonight. Would you like to go out with me to dinner?"

B: "No."

A: "I plan on taking your money. Can you give me the key to the safe this instant?"

B: "Security!"

The difference appears to be that if it is a threat (or a fraud) you not only want to decline the offer, you want to decline future offers even if they look reasonable because the evidence from the first offer was that bad. Ergo, if someone says:

A: "I plan on taking your money. Can you give me the key to the safe this instant?"

B: "Security!"

A: "I plan on going to the bathroom. Can you tell me where it is?"

B: (won't say yes at this point because of the earlier threat) "SECURITY!"

Whereas for instance, in the reception scenario the date isn't a threat, so:

A: "I plan on going on a date tonight. Would you like to go out with me to dinner?"

B: "No."

A: "I plan on going to the bathroom. Can you tell me where it is?"

B: "Yes."

I feel like this expresses threats or frauds clearly to me, but I'm not sure if it would be clear to someone else. Did it help? Are there any holes I need to fix?

Replies from: Vaniver↑ comment by Vaniver · 2012-06-29T18:51:20.474Z · LW(p) · GW(p)

The doctor walks in, face ashen. "I'm sorry- it's likely we'll lose her or the baby. She's unconscious now, and so the choice falls to you: should we try to save her or the child?"

The husband calmly replies, "Revoke!"

In non-story format: how do you formalize the difference between someone telling you bad news and someone causing you to be in a worse situation? How do you formalize the difference between accidental harm and intentional harm? How do you determine the value for having a particular resistance to blackmail, such that you can distinguish between blackmail you should and shouldn't give in to?

↑ comment by A1987dM (army1987) · 2012-07-01T09:32:44.018Z

How do you determine the value for having a particular resistance to blackmail, such that you can distinguish between blackmail you should and shouldn't give in to?

The doctor has no obvious reason to prefer that you save your wife rather than your child, or vice versa. On the other hand, the mugger would much rather you hand him your wallet than accept being killed, and so he's deliberately making the latter possibility as unpleasant to you as possible to make you choose the former; but if you had precommitted to not choosing the former (e.g. by leaving your wallet at home) and he had known it, he wouldn't have approached you in the first place.

IOW this is the decision tree:

- mug, then give in: (+50, -50)
- mug, then don't give in: (-1, -1e6)
- don't mug: (0, 0)

where the mugger makes the first choice, you make the second choices, and the numbers in parentheses are the pay-offs for the mugger and for you respectively. If you precommit not to choose the top branch, the mugger will take the bottom branch. (How do I stop multiple spaces from being collapsed into one?)
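The precommitment argument is backward induction on this tree. A sketch using the payoffs above as (mugger, you), with the victim's "don't give in" modeled as the only option once he has precommitted (the `victim_can_give_in` flag is a hypothetical name for illustration):

```python
# Payoffs are (mugger, you), copied from the tree above.
GIVE_IN, REFUSE, NO_MUG = (50, -50), (-1, -1_000_000), (0, 0)

def play(victim_can_give_in: bool) -> tuple[str, tuple[int, int]]:
    """Backward induction: solve the victim's move first, then the mugger's."""
    if victim_can_give_in:
        # The victim compares his own payoff (index 1) in the two branches.
        response = GIVE_IN if GIVE_IN[1] > REFUSE[1] else REFUSE
    else:
        response = REFUSE  # precommitted, e.g. wallet left at home
    # The mugger mugs only if the victim's predicted response beats not mugging.
    if response[0] > NO_MUG[0]:
        return "mug", response
    return "don't mug", NO_MUG

print(play(True))   # ('mug', (50, -50))
print(play(False))  # ("don't mug", (0, 0))
```

Without precommitment, giving in is the victim's best move once mugged, so mugging pays; with it, the mugger's best move is to stay home.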

↑ comment by [deleted] · 2012-06-29T19:27:48.719Z

The doctor walks in, face ashen. "I'm sorry- it's likely we'll lose her or the baby. She's unconscious now, and so the choice falls to you: should we try to save her or the child?" The husband calmly replies, "Revoke!"

An eloquent way of pointing out what I was missing. Thank you!

In non-story format: How do you formalize the difference between someone telling you bad information and someone causing you to be in a worse situation?

I will try to think on this more. The only thing that has occurred to me so far is that if you have a formalization, it may not be a good idea to announce it. Someone who knows your formalization might be able to exploit it by customizing their imposed worse situation to look like simply telling you bad information, their intentional harm to look like accidental harm, or their blackmail to extort the maximum amount of money out of you, if they had an explicit set of formal rules about where those boundaries were.

And for instance, it seems like a person would prefer that someone blackmailing them demand less than they could theoretically get away with, out of caution, rather than having every blackmailer immediately demand the maximum effective amount. (at that point, since the threshold can change)

Again, I really do appreciate you helping me focus my thoughts on this.

↑ comment by TheOtherDave · 2012-06-29T19:33:30.409Z

If I have a choice of whether or not to perform an action A, and I believe that performing A will harm agent X and will not in and of itself benefit me, and I credibly commit to performing A unless X provides me with some additional value V, I would consider myself to be threatening X with A unless they provide V. Whether that is a threat of blackmail or some other kind of threat doesn't seem like a terribly interesting question.

Edit: my earlier thoughts on extortion/blackmail, specifically, here.

↑ comment by Vaniver · 2012-06-29T21:29:59.419Z

will not in and of itself benefit me

Did you ever see The Shawshank Redemption? One of the Warden's tricks is not just to take construction projects with convict labor, but to bid on any construction project (with the ability to undercut any competitor because his labor is already paid for) unless the other contractors paid him to stay away from that job.

My thought, as hinted at by my last question, is that refusing or accepting any particular blackmail request depends on the immediate and reputational costs of refusing or accepting. A flat "we will not accept any blackmail requests" is emotionally satisfying to deliver, but can't be the right strategy for all situations. (When the hugger mugger demands "hug me or I'll shoot!", well, I'll give him a hug.) A "we will not accept any blackmail requests that cost more than X" seems like the next best step, but as pointed out here that runs the risk of people just demanding X every time. Another refinement might be to publish an "acceptance function": you'll accept a (sufficiently credible and damaging) blackmail request for x with probability f(x), which is a decreasing (probably sigmoidal) function.

But the reputational costs of accepting or rejecting vary heavily based on the variety of threat, what you believe about potential threateners, whose opinions you care about, and so on. Things get very complex very fast.

↑ comment by TheOtherDave · 2012-06-29T22:54:12.560Z

If I am able to outbid all competitors for any job, but cannot do all jobs, and I let it be known that I won't bid on jobs if bribed accordingly, I would not consider myself to be threatening all the other contractors, or blackmailing them. In effect this is a form of rent-seeking.

The acceptance-function approach you describe, where the severity and credibility of the threat matter, makes sense to me.

↑ comment by Vaniver · 2012-06-30T01:02:03.648Z

Blackmail seems to me to be a narrow variety of rent-seeking, and reasons for categorically opposing blackmail seem like reasons for categorically opposing rent-seeking. But I might be using too broad a category for 'rent-seeking.'

↑ comment by TheOtherDave · 2012-06-30T01:09:41.995Z

reasons for categorically opposing blackmail seem like reasons for categorically opposing rent-seeking

Well, I agree, but only because in general the reasons for categorically opposing something that would otherwise seem rational to cooperate with are similar. That is, the strategy of being seen to credibly commit to a policy of never rewarding X, even when rewarding X would leave me better off, is useful whenever such a strategy reduces others' incentive to X and where I prefer that people not X at me. It works just as well where X=rent-seeking as where X=giving me presents as where X=threatening me.

Can you expand on your model of rent-seeking?

↑ comment by Vaniver · 2012-06-30T01:22:37.083Z

Can you expand on your model of rent-seeking?

Yes, but I'm not sure how valuable it is to. Basically, it boils down to 'non-productive means of acquiring wealth,' but it's not clear if, say, petty theft should be included. (Generally, definitional choices like that are made based on identity implications rather than economic ones.) The general sentiment of "I prefer that people not X at me" perhaps captures the essence better.

There are benefits to insisting on a narrower definition: perhaps something like legal non-productive means of acquiring wealth, but part of the issue is that rent-seeking often operates by manipulating the definition of 'legal.'

↑ comment by bideup · 2021-03-09T16:36:44.709Z

Here's my version of the definition used by Schelling in The Strategy of Conflict: A threat is when I commit myself to an action, conditional on an action of yours, such that if I end up having to take that action I would have reason to regret having committed myself to it.

So if I credibly commit myself to the assertion, 'If you don't give me your phone, I'll throw you off this ship,' then that's a threat. I'm hoping that the situation will end with you giving me your phone. If it ends with me throwing you overboard, the penalties I'll incur will be sufficient to make me regret having made the commitment.

But when these rational pirates say, 'If we don't like your proposal, we'll throw you overboard,' then that's not a threat; they're just elucidating their preferences. Schelling uses 'warning' for this sort of statement.

↑ comment by raptortech97 · 2016-09-12T03:37:39.373Z

So if all pirates implement TDT, what happens?

↑ comment by Pentashagon · 2012-06-29T23:42:55.406Z

I'll guess that in your analysis, given the base case of D and E's game being a tie vote on a (D=100, E=0) split, results in a (C=0, D=0, E=100) split for three pirates since E can blackmail C into giving up all the coins in exchange for staying alive? D may vote arbitrarily on a (C=0, D=100, E=0) split, so C must consider E to have the deciding vote.

If so, that means four pirates would yield (B=0, C=100, D=0, E=0) or (B=0, C=0, D=100, E=0) in a tie. E expects 100 coins in the three-pirate game and so wouldn't be a safe choice of blackmailer, but C and D expect zero coins in a three-pirate game so B could choose between them arbitrarily. B can't give fewer than 100 coins to either C or D because they will punish that behavior with a deciding vote for death, and B knows this. It's potentially unintuitive for C because C's expected value in a three-pirate game is 0 but if C commits to voting against B for anything less than 100 coins, and B knows this, then B is forced to give either 0 or 100 coins to C. The remaining coins must go to D.

In the case of five pirates, C and D expect more than zero coins on average if A dies because B may choose arbitrarily between C or D as blackmailer. B and E expect zero coins from the four-pirate game. A must maximize the chance that two or more pirates will vote for A's split. C and D have an expected value of 50 coins from the four-pirate game if they assume B will choose randomly, and so a (A=0, B=0, C=50, D=50, E=0) split is no better than B's expected offer for C and D and any fewer than 50 coins for C or D will certainly make them vote against A. I think A should offer (A=0, B=n, C=0, D=0, E=100-n) where n is mutually acceptable to B and E.

Because B and E have no relative advantage in a four-pirate game (both expect zero coins) they don't have leverage against each other in the five-pirate game. If B had a non-zero probability of being killed in a four-pirate game then A should offer E more coins than B at a ratio corresponding to that risk. As it is, I think B and E would accept a fair split of n=50, but I may be overlooking some potential for E to blackmail B.

↑ comment by Brilliand · 2015-09-30T22:08:02.338Z

In every case of the pirates game, the decision-maker assigns one coin to every pirate an even number of steps away from himself, and the rest of the coins to himself (with more gold than pirates, anyway; things can get weird with large numbers of pirates). See the Wikipedia article Kawoomba linked to for an explanation of why.

↑ comment by MarkusRamikin · 2012-06-29T11:22:15.341Z

There are 5 rational pirates, A, B, C, D and E

I see 1 rational pirate and 4 utter morons who have paid so much attention to math that they forgot to actually win. I mean, if they were "less rational", they'd be inclined to get outraged over the unfairness, and throw A overboard, right? And A would expect it, so he'd give them a better deal. "Rational" is not "walking away with less money".

It's still an interesting example and thank you for posting it.

↑ comment by Vaniver · 2012-06-29T18:09:05.108Z

I see 1 rational pirate and 4 utter morons who have paid so much attention to math that they forgot to actually win.

Remember, this isn't any old pirate crew. This is a crew with a particular set of rules that gives different pirates different powers. There's no "hang the rules!" here, and since it's an artificial problem there's an artificial solution.

D has the power to walk away with all of the gold, and A, B, and C dead. He has no incentive to agree to anything less, because if enough votes fail he'll be in the best possible position. This determines E's default result, on which he bases his decisions. Building out the game tree helps here.

↑ comment by Pentashagon · 2012-06-29T22:10:21.439Z

If B, C, D, and E throw A overboard then C, D, and E are in a position to throw B overboard and any argument B, C, D, and E can come up with for throwing A overboard is just as strong an argument as C, D, and E can come up with for throwing B overboard. In fact, with fewer pirates an even split would be even more advantageous to C, D, and E. So B will always vote for A's split out of self-preservation. If C throws A overboard he will end up in the same situation as B was originally; needing to support the highest ranking pirate to avoid being the next one up for going overboard. Since the second in command will always vote for the first in command out of self preservation he or she will accept a split with zero coins for themselves. Therefore A only has to offer C 1 coin to get C's vote. A, B, and C's majority rules.

In real life I imagine the pirate who is best at up-to-5-way fights is left with 100 coins. If the other pirates were truly rational then they would never have boarded a pirate ship with a pirate who is better at an up-to-5-way fight than them.

↑ comment by beberly37 · 2012-06-29T22:44:03.145Z

> If the other pirates were truly rational then they would never have boarded a pirate ship with a pirate who is better at an up-to-5-way fight than them.

When someone asks me how I would get out of a particularly sticky situation, I often fight the urge to respond glibly: by not getting into said situation.

But I digress. If the other pirates were truly rational, then they would never let anyone know how good they were at an up-to-X-way fight.

↑ comment by Pentashagon · 2012-06-29T23:59:45.860Z

By extension, should truly rational entities never let anyone know how rational they are?

↑ comment by wedrifid · 2012-06-30T00:24:33.945Z

> By extension, should truly rational entities never let anyone know how rational they are?

No, they should sometimes not let people know that. Sometimes it is an advantage - either by allowing cooperation that would not otherwise have been possible or demonstrating superior power and so allow options for dominance.

↑ comment by wedrifid · 2012-06-29T22:32:04.588Z

> In real life I imagine the pirate who is best at up-to-5-way fights is left with 100 coins.

I doubt it. Humans almost never just have an all in winner takes all fight. The most charismatic pirate will end up with the greatest share, while the others end up with lesser shares depending on what it takes for the leader to maintain alliances. (Estimated and observed physical prowess is one component of said charisma in the circumstance).

↑ comment by Kawoomba · 2012-06-29T11:37:14.502Z

> I see 1 rational pirate and 4 utter morons who have paid so much attention to math that they forgot to actually win. I mean, if they were "less rational", they'd be inclined to get outraged over the unfairness, and throw A overboard, right? And A would expect it, so he'd give them a better deal. "Rational" is not "walking away with less money".

Well if they chose to decline A's proposal for whatever reason, that would put C and E in a worse position than if they didn't.

Outrage over being treated unfairly doesn't come into one-time prisoner's dilemmata. Each pirate wants to survive with the maximum amount of gold, and C and E would get nothing if they didn't vote for A's proposal.

Hence, if C and E were outraged as you suggest, and voted against A's proposal, they would walk away with even less. One gold coin buys you infinitely more blackjack and hookers than zero gold coins.

↑ comment by MarkusRamikin · 2012-06-29T11:53:05.932Z

If C and E - and I'd say all 4 of them really, at least regarding a 98 0 1 0 1 solution - were inclined to be outraged as I suggest, and A knew this, they would walk away with more money. For me, that trumps any possible math and logic you could put forward.

And just in case A is stupid:

"But look, C and E, this is the optimal solution, if you don't listen to me you'll get less gold!"

"Nice try, smartass. Overboard you go."

B watched on, starting to sweat...

EDIT: Ooops, I notice that I missed the fact that B doesn't need to sweat since he just needs D. Still, my main point isn't about B, but A.

Also I wanna make it 100% clear: I don't claim that the proof is incorrect, given all the assumptions of the problem, including the ones about how the agents work. I'm just not impressed with the agents, with their ability to achieve their goals. Leave A unchanged and toss in 4 reasonably bright real humans as B C D E, at least some of them will leave with more money.

↑ comment by othercriteria · 2012-06-29T14:55:31.080Z

...because it's very hot in Pirate Island's shark-infested Pirate Bay, not out of any fear or distress at an outcome that put her in a better position than she had occupied before.

Whereas A had to build an outright majority to get his plan approved, B just had to convince one other pirate. D was the natural choice, both from a strict logico-mathematical view, and because D had just watched C and E team up to throw their former captain overboard. It wasn't that they were against betraying their superiors for a slice of the treasure, it was that the slice wasn't big enough! D wasn't very bright--B knew from sharing a schooner with him these last few months--but team CE had been so obliging as to slice a big branch off D's decision tree. What was left was a stump. D could take her offer of 1 coin, or be left to the mercy of the outrageously blood-thirsty team CE.

C and E watched on, dreaming of all the wonderful things they could do with their 0 coins.

[I think the "rationality = winning" story holds here (in the case where A's proposal passes, not in this weird counterfactual cul-de-sac) but in a more subtle way. The 98 0 1 0 1 solution basically gives a value for the ranks, i.e., how much a pirate should be willing to pay to get into that rank at the time treasure will be divided. From this perspective, being A is highly valuable, and A should have been willing to pay, say, 43 coins for his ship, 2 coins for powder, 7 coins for wages to B, C, D, E, etc., to make it into that position. C, on the other hand, might turn down a promotion to second-in-command over B, unless it's paired with a wage hike of 1 coin; B would be surprisingly happy to be given such a demotion, if her pay remained unchanged. All the pirates can win even in a 98 0 1 0 1 solution, if they knew such a treasure would be found and planned accordingly.]

↑ comment by FluffyC · 2012-11-24T00:02:46.448Z

It seems to me that the extent to which B C D E will be able to get more money is to some extent dependent on their ability to plausibly precommit to rejecting an "unfair" deal... and possibly their ability to plausibly precommit to accepting a "fair" one.

Emphasis on "plausibly" and "PIRATES."

At minimum, if they can plausibly precommit to things, I'd expect C, D, and E to precommit to tossing A and B overboard no matter what is offered and splitting the pot three ways. There are quite possibly better commitments to make even than this.

↑ comment by Kawoomba · 2012-06-29T12:08:46.682Z

Heh, if I can't convince you with "any possible math and logic", let me try with this great video (also that one) that shows the consequences of "reasoning via outrage".

↑ comment by MarkusRamikin · 2012-06-29T12:53:54.856Z

Watched the first one. It was very different from the scenario we're discussing. No one's life was at stake. Also the shares were unequal from the start, so there was no fair scenario being denied, to get outraged about.

I'm not in favor of "reasoning via outrage" in general. I'm simply in favor of possessing a (known) inclination to turn down overly skewed deals (like humans generally have, usefully I might add); if I have it, and your life is at stake, you'd have to be suicidal to propose a 98 0 1 0 1 if I'm one of the people whose vote you need.

What makes it different from the video example is that, in the pirate example, if I turn down the deal the proponent loses far, far more than I do. Not just 98 coins to my 1, but their life, which should be many orders of magnitude more precious. So there's clearly room for a more fair deal. The woman in that case wasn't like my proposed E or C, she was like a significantly stupider version of A, wanting an unfairly good deal in a situation when there was no reason for her to believe her commitment could reliably prevail over the other players' ability to do the same.

↑ comment by wedrifid · 2012-06-29T21:36:22.586Z

> Watched the first one. It was very different from the scenario we're discussing. No one's life was at stake. Also the shares were unequal from the start, so there was no fair scenario being denied, to get outraged about.

A suggestion to randomize was made and denied. They fail at thinking. Especially Jo, who kept trying to convince herself and others that she didn't care about money. Sour grapes---really pathetic.

↑ comment by sixes_and_sevens · 2012-06-29T14:45:12.964Z

Whenever I come across highly counterintuitive claims along these lines, I code them up and see how they perform over many iterations.

This is a lot trickier to do in this case compared to, say, the Monty Hall problem, but if you restricted it just to cases in which Pirate A retained 98 of the coins, you could demonstrate whether the [98, 0, 1, 0, 1] distribution was stable or not.
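Taking up that suggestion, here is a minimal Python sketch of the backward induction. The rules are assumed, since they aren't restated in this thread: pirates are ranked by seniority, the most senior survivor proposes a split of 100 coins, the proposal passes if at least half of the surviving pirates (proposer included) vote for it, and otherwise the proposer goes overboard; each pirate wants to survive, then wants gold, and votes no when indifferent. The function name `pirate_split` is illustrative.

```python
def pirate_split(n_pirates: int, coins: int = 100) -> list[int]:
    """Backward-induction split for n pirates; index 0 is the most senior proposer.

    Assumed rules (see lead-in): a proposal passes with at least half the votes,
    the proposer's own included, and a pirate votes yes only if the offer beats
    what he would get after the proposer is thrown overboard.
    """
    if n_pirates < 1:
        raise ValueError("need at least one pirate")
    split = [coins]  # a lone pirate keeps everything
    for k in range(2, n_pirates + 1):
        # `split` currently describes the game among the k-1 junior pirates,
        # i.e. what each of them gets if this proposer is thrown overboard.
        fallback = split
        bribes_needed = (k + 1) // 2 - 1  # ceil(k/2) yes votes, minus the proposer's own
        # Buy the cheapest votes: juniors with the smallest fallback payoff.
        # sorted() is stable, so ties go to the more senior pirate (an arbitrary choice).
        cheapest = sorted(range(k - 1), key=lambda i: fallback[i])[:bribes_needed]
        offer = [0] * (k - 1)
        for i in cheapest:
            offer[i] = fallback[i] + 1  # strictly better than voting no
        if sum(offer) > coins:
            raise ValueError("not enough coins to buy a majority; model breaks down")
        split = [coins - sum(offer)] + offer
    return split


print(pirate_split(5))  # [98, 0, 1, 0, 1]
```

Under these assumptions the intermediate steps reproduce the fallbacks discussed above: with only D and E left, D keeps all 100, which is why E's vote is so cheap for C and then for A. The sketch deliberately stops when the bribes exceed the coins, because past roughly 200 pirates votes start being driven by survival rather than gold, which this simple recurrence doesn't model.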

↑ comment by sixes_and_sevens · 2012-06-29T14:58:37.762Z

Also, I'd suggest thinking about this in a slightly different way to the way you're thinking about it. The only pirate in the scenario who doesn't have to worry about dying is pirate E, who can make any demands he likes from pirate D. What distribution would he suggest?

Edit: Rereading the wording of the scenario, pirate E can't make any demands he likes from pirate D, and pirate D himself also doesn't need to worry about dying.

↑ comment by wedrifid · 2012-06-29T21:33:11.738Z

> Heh, if I can't convince you with "any possible math and logic", let me try with this great video (also that one) that shows the consequences of "reasoning via outrage".

That shows one aspect of the consequences of reasoning via outrage. It doesn't indicate that the strategy itself is bad. In a similar way, one consequence of randomizing and defending Podunk 1/11 of the time is that 1/11 of the time (against a good player) you will end up on YouTube losing Metropolis.
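The 1/11 figure falls out of an indifference condition. A minimal sketch, assuming values that are not stated in this thread (Metropolis worth 10, Podunk worth 1, and an attack succeeding only on an undefended city): the defender picks the mix that makes both targets equally attractive to the attacker. The function names are hypothetical.

```python
from fractions import Fraction


def defender_mix(v_metropolis: int, v_podunk: int) -> tuple[Fraction, Fraction]:
    """(P(defend Metropolis), P(defend Podunk)) that leaves the attacker indifferent.

    Assumed model (hypothetical, for illustration): the attacker hits one city,
    takes it only if it is undefended, and gains that city's value.
    """
    # With q = P(defend Metropolis), attacking Metropolis gains v_m * (1 - q)
    # and attacking Podunk gains v_p * q. Setting the two equal gives:
    q = Fraction(v_metropolis, v_metropolis + v_podunk)
    return q, 1 - q


def attacker_gain(target_value: int, p_defended: Fraction) -> Fraction:
    """Attacker's expected gain from hitting a city defended with probability p_defended."""
    return target_value * (1 - p_defended)


q_m, q_p = defender_mix(10, 1)
print(q_m, q_p, attacker_gain(10, q_m), attacker_gain(1, q_p))
```

Under these assumptions Podunk is defended 1/11 of the time, and whichever city the attacker picks, his expected gain is the same 10/11, so he has no reason to deviate.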

↑ comment by Vaniver · 2012-06-29T18:00:52.920Z

> let me try with this great video

Whoever came up with that game show is a genius.

↑ comment by Decius · 2013-02-16T08:01:42.231Z

"I will stand firm on A until A becomes worth less than C is now, then I will accept B or C. You get more by accepting B or C now than you get by trying to get more."

One rational course of action is to mutually commit to a random split, and follow through. What's the rational course of action to respond to someone who makes that threat and is believed to follow through on it? If it is known that the other two participants are rational, why isn't making a threat of that nature rational?

↑ comment by wedrifid · 2012-06-29T21:57:02.658Z

Proof is in the article linked.

With the caveat that this 'proof' relies on the same assumptions that 'prove' that rational prisoners defect in the one-shot prisoner's dilemma - which they don't unless they have insufficient (or inaccurate) information about each other. At a stretch we could force the "do not trust each other" premise to include "the pirates have terrible maps of each other" but that's not a realistic interpretation of the sentence. Really there is the additional implicit assumption "Oh, and all these pirates are agents that implement Causal Decision Theory".

Amazing, isn't it? :-)

It gets even more interesting when there are more than 200 pirates (and still only 100 coins).

↑ comment by Vaniver · 2012-06-29T18:09:08.207Z

Actually, does the traditional result work? E would rather the result be (dead, dead, 99, 0, 1) than (98, 0, 1, 0, 1). I think it has to be (97, 0, 1, 0, 2).

[edit] It appears that E should assume that B will make a successful proposition, which will include nothing for E, and so (dead, dead, 99, 0, 1) isn't on the table.

comment by drnickbone · 2012-06-29T20:13:16.071Z

> This “game” has two Nash equilibria. If we both go home, neither of us regrets it: we can spend time with each other and we've both got our highest utility. If we both stay at work, again, neither of us regrets it: since my girlfriend is at work, I am glad I stayed at work instead of going home, and since I am at work, my girlfriend is glad she stayed at work instead of going home.

Looking at the problem, I believe there is a third equilibrium, a mixed one. Both you and your girlfriend toss a coin, and choose to go home with probability one half, or stay at work with probability one half. This gives you both an expected utility of 2. If you are playing that strategy, then it doesn't matter to your girlfriend whether she stays at work (definite utility of 2) or goes home (50% probability of 1, 50% probability of 3), so she can't do better than tossing a coin.

Incidentally, this is expected from Wilson's oddness theorem (1971) - almost all finite games have an odd number of equilibria.
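drnickbone's indifference argument, and the count of three equilibria, can be checked mechanically. A minimal Python sketch, with the utilities taken from the post (3 for an evening together, 2 for overtime, 1 for sitting home alone); the table layout and function names are illustrative, not from the post:

```python
from fractions import Fraction

HOME, WORK = 0, 1
# U[mine][hers]: my utility given my move and my girlfriend's move.
# The game is symmetric, so her utility at (mine, hers) is U[hers][mine].
U = [
    [3, 1],  # I go home: 3 if she is home too, 1 if I sit home alone
    [2, 2],  # I stay at work: 2 (overtime) whatever she does
]


def indifference_mix(u):
    """P(HOME) that leaves the other player indifferent between HOME and WORK."""
    # Against HOME-probability p:  HOME pays p*u[0][0] + (1-p)*u[0][1],
    #                              WORK pays p*u[1][0] + (1-p)*u[1][1].
    # Setting these equal and solving for p (assumes the denominator is nonzero):
    num = u[1][1] - u[0][1]
    den = (u[0][0] - u[0][1]) - (u[1][0] - u[1][1])
    return Fraction(num, den)


def expected(u, move, p_home):
    """Expected utility of `move` against a partner who goes home with probability p_home."""
    return p_home * u[move][HOME] + (1 - p_home) * u[move][WORK]


def pure_equilibria(u):
    """Pure profiles where neither player gains by switching alone."""
    moves = (HOME, WORK)
    found = []
    for mine in moves:
        for hers in moves:
            i_stay = all(u[mine][hers] >= u[alt][hers] for alt in moves)
            she_stays = all(u[hers][mine] >= u[alt][mine] for alt in moves)
            if i_stay and she_stays:
                found.append((mine, hers))
    return found


p = indifference_mix(U)
print(pure_equilibria(U), p, expected(U, HOME, p), expected(U, WORK, p))
```

Against a coin-flipping partner both moves are worth exactly 2, which is the indifference that makes the coin flip an equilibrium alongside the two pure ones. The `indifference_mix` formula only describes a genuine mixed equilibrium when its result lies strictly between 0 and 1.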

comment by Maelin · 2012-06-29T08:13:56.958Z

This is a minor quibble, but while reading I got stuck at this point:

> And since John Nash (remember that movie A Beautiful Mind?) proved that every game has at least one,

followed by a description of a game that didn't seem to have a Nash equilibrium and confirming text "Here there is no pure Nash equilibrium." and "So every option has someone regretting their choice, and there is no simple Nash equilibrium. What do you do?"

I kept re-reading this section, trying to work out how to reconcile these statements, since it seemed like you had just offered an irrefutable counterexample to John Nash's theorem. It could use a bit of clarification (maybe something like "This game does have a Nash equilibrium, but one that is a little more subtle" or something similar).

Other than that I'm finding this sequence excellent so far.

↑ comment by shokwave · 2012-06-29T09:04:15.719Z

There is no pure equilibrium, but there is a mixed equilibrium.

A pure strategy is a single move, chosen with certainty.

A mixed strategy is a probability distribution over moves; the move actually played is selected at random according to those probabilities.

A pure equilibrium is one where every player follows a pure strategy, and a mixed equilibrium is one where at least some players follow a mixed strategy.

Both pure equilibriums and mixed equilibriums are Nash equilibriums. Nash's proof that every finite game has an equilibrium relies on allowing mixed strategies, a concept von Neumann had already used in his minimax theorem for two-player zero-sum games; what Nash showed is that an equilibrium, possibly a mixed one, always exists.

So this game has no pure equilibrium, but it does have a mixed one. Yvain goes on to describe how you calculate and determine that mixed equilibrium, and shows that it is the attacker playing Podunk 1/11th the time, and Metropolis 10/11th the time.

EDIT: The post explains this at the end:

> Some equilibria are simple choices, others involve plans to make choices randomly according to certain criteria.

Yvain: I would strongly recommend including a quick explanation of mixed and pure strategies, and defining equilibriums as either mixed or pure, as a clarification. At the least, move this line up to near the top. Excellent post and excellent sequence.

↑ comment by Scott Alexander (Yvain) · 2012-06-30T00:44:42.801Z

Good point. I've clarified pure vs. mixed equilibria above.

↑ comment by Maelin · 2012-07-01T14:29:33.624Z

> Here the answer should be obvious: it doesn't matter. Flip a coin. If you flip a coin, and your opponent flips a coin, neither of you will regret your choice. Here we see a "mixed Nash equilibrium", an equilibrium reached with the help of randomness.

Hmm, I'm still not finding this clear. If I flip a coin and it comes up heads so I attack East City, and my opponent flips a coin and it comes up to defend East City, so I get zero utility and my opponent gets 1, wouldn't I regret not choosing to just attack West City instead? Or not choosing to allocate 'heads' to West City instead of East?

Is there a subtlety in what we mean by 'regret' here that I'm missing?

↑ comment by TheOtherDave · 2012-07-01T15:32:01.624Z

I usually understand "regret" in the context of game theory to mean that I would choose to do something different in the same situation (which also means having the same information).

That's different from "regret" in the normal English sense, which roughly speaking means I have unpleasant feelings about a decision or state of affairs.

For example, in the normal sense I can regret things that weren't choices in the first place (e.g., I can regret having been born), regret decisions I would make the same way with the same information (I regret having bet on A rather than B), and regret decisions I would make the same way even knowing the outcome (I regret that I had to shoot that mugger, but I would do it again if I had to). In the game-theory sense none of those things are regret.

There are better English words for what's being discussed here -- "reject" comes to mind -- but "regret" is conventional. I generally think of it as jargon.

↑ comment by Magnap · 2015-04-05T16:34:42.345Z

Sorry to bring up such an old thread, but I have a question related to this. Consider a situation in which you have to make a choice between a number of actions, then you receive some additional information regarding the consequences of these actions. In this case there are two ways of regretting your decision, one of which would not occur for a perfectly rational agent. The first one is "wishing you could have gone back in time with the information and chosen differently". The other one (which a perfectly rational agent wouldn't experience) is "wishing you could go back in time, even without the information, and choose differently", that is, discovering afterwards (e.g. by additional thinking or sudden insight) that your decision was the wrong one even with the information you had at the time, and that if you were put in the same situation again (with the same knowledge you had at the beginning), you should act differently.

Does English have a way to distinguish these two forms of regret (one stemming from lack of information, the other from insufficient consideration)? If not, does some other language have words for this we could conveniently borrow? It might be an important difference to bear in mind when considering and discussing akrasia.

↑ comment by TheOtherDave · 2015-04-05T19:51:23.810Z

So, I consider the "go back in time" aspect of this unnecessarily confusing... the important part from my perspective is what events my timeline contains, not where I am on that timeline. For example, suppose I'm offered a choice between two identical boxes, one of which contains a million dollars. I choose box A, which is empty. What I want at that point is not to go back in time, but simply to have chosen the box which contained the money... if a moment later the judges go "Oh, sorry, our mistake... box A had the money after all, you win!" I will no longer regret choosing A. If a moment after that they say "Oh, terribly sorry, we were right the first time... you lose" I will once more regret having chosen A (as well as being irritated with the judges for jerking me around, but that's a separate matter). No time-travel required.

All of that said, the distinction you raise here (between regretting an improperly made decision whose consequences were undesirable, vs. regretting a properly made decision whose consequences were undesirable) applies either way. And as you say, a rational agent ought to do the former, but not the latter.

(There's also in principle a third condition, which is regretting an improperly made decision whose consequences were desirable. That is, suppose the judges rigged the game by providing me with evidence for "A contains the money," when in fact B contains the money. Suppose further that I completely failed to notice that evidence, flipped a coin, and chose B. I don't regret winning the money, but I might still look back on my decision and regret that my decision procedure was so flawed. In practice I can't really imagine having this reaction, though a rational system ought to.)

(And of course, for completeness, we can consider regretting a properly made decision whose consequences were desirable. That said, I have nothing interesting to say about this case.)

All of which is completely tangential to your lexical question.

I can't think of a pair of verbs that communicate the distinction in any language I know. In practice, I would communicate it as "regret the process whereby I made the decision" vs "regret the results of the decision I made," or something of that sort.

↑ comment by Magnap · 2015-04-08T11:06:37.034Z

> So, I consider the "go back in time" aspect of this unnecessarily confusing... the important part from my perspective is what events my timeline contains, not where I am on that timeline.

Indeed, that is my mistake. I am not always the best at choosing metaphors or expressing myself cleanly.

> regretting an improperly made decision whose consequences were undesirable, vs. regretting a properly made decision whose consequences were undesirable

That is a very nice way of expressing what I meant. I will be using this from now on to explain what I mean. Thank you.

Your comment helped me to understand what I myself meant much better than before. Thank you for that.

↑ comment by TheOtherDave · 2015-04-08T17:03:10.114Z

(smiles) I want you to know that I read your comment at a time when I was despairing of my ability to effectively express myself at all, and it really improved my mood. Thank you.

↑ comment by Quill_McGee · 2015-04-05T21:48:44.543Z

In my opinion, one should always regret choices with bad outcomes and never regret choices with good outcomes. For Lo It Is Written: "If you fail to achieve a correct answer, it is futile to protest that you acted with propriety." As well It Is Written: "If it's stupid but it works, it isn't stupid." More explicitly, if you don't regret bad outcomes just because you 'did the right thing,' you will never notice a flaw in your conception of 'the right thing.' This results in a lot of unavoidable regret, and so might not be a good algorithm in practice, but at least in principle it seems to be better.

↑ comment by Epictetus · 2015-04-06T02:29:17.212Z

> In my opinion, one should always regret choices with bad outcomes and never regret choices with good outcomes.

Take care to avoid hindsight bias. Outcomes are not always direct consequences of choices. There's usually a chance element to any major decision. The smart bet that works 99.99% of the time can still fail. It doesn't mean you made the wrong decision.

↑ comment by TheOtherDave · 2015-04-06T02:11:35.536Z

It not only results in unavoidable regret, it sometimes results in regretting the correct choice.

Given a choice between "$5000 if I roll a 6, $0 if I roll between 1 and 5" and "$5000 if I roll between 1 and 5, $0 if I roll a 6," the correct choice is the latter. If I regret my choice simply because the die came up 6, I run the risk of not noticing that my conception of "the right thing" was correct, and making the wrong choice next time around.
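The die example comes down to a quick expected-value calculation. A small sketch (the option labels and function names are illustrative) showing that the better bet is worth five times as much in expectation and still pays nothing one time in six:

```python
from fractions import Fraction

PRIZE = 5000
# Each option is the set of die faces on which it pays the prize.
OPTIONS = {
    "win on 6": {6},
    "win on 1-5": {1, 2, 3, 4, 5},
}


def expected_value(winning_faces: set[int]) -> Fraction:
    """Expected payout on a fair six-sided die."""
    return Fraction(len(winning_faces), 6) * PRIZE


def chance_of_losing(winning_faces: set[int]) -> Fraction:
    """Probability that this option pays nothing."""
    return 1 - Fraction(len(winning_faces), 6)


best = max(OPTIONS, key=lambda name: expected_value(OPTIONS[name]))
print(best, expected_value(OPTIONS[best]), chance_of_losing(OPTIONS[best]))
```

A 1-in-6 loss on the correct choice is exactly the case where regretting the outcome would teach the wrong lesson.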

↑ comment by Kindly · 2015-04-06T04:36:05.134Z

I'm not sure that regretting correct choices is a terrible downside, depending on how you think of regret and its effects.

If regret is just "feeling bad", then you should just not feel bad for no reason. So don't regret anything. Yeah.

If regret is "feeling bad as negative reinforcement", then regretting things that are mistakes in hindsight (as opposed to correct choices that turned out bad) teaches you not to make such mistakes. Regretting all choices that led to bad outcomes hopefully will also teach this, if you correctly identify mistakes in hindsight, but this is a noisier (and slower) strategy.

If regret is "feeling bad, which makes you reconsider your strategy", then you should regret everything that leads to a bad outcome, whether or not you think you made a mistake, because that is the only kind of strategy that can lead you to identify new kinds of mistakes you might be making.

↑ comment by TheOtherDave · 2015-04-06T17:40:12.913Z

If we don't actually have a common understanding of what "regret" refers to, it's probably best to stop using the term altogether.

If I'm always less likely to implement a given decision procedure D because implementing D in the past had a bad outcome, and always more likely to implement D because doing so had a good outcome (which is what I understand Quill_McGee to be endorsing, above), I run the risk of being less likely to implement a correct procedure as the result of a chance event.

There are more optimal approaches.

I endorse re-evaluating strategies in light of surprising outcomes. (It's not necessarily a bad thing to do in the absence of surprising outcomes, but there's usually something better to do with our time.) A bad outcome isn't necessarily surprising -- if I call "heads" and the coin lands tails, that's bad, but unsurprising. If it happens twice, that's bad and a little surprising. If it happens ten times, that's bad and very surprising.
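To put rough numbers on "bad but unsurprising" versus "very surprising", here is a sketch that computes the chance of calling a fair coin wrong n times in a row, along with its surprisal in bits. Surprisal (-log2 p) is a standard information-theoretic measure added here for illustration; it is not something the comment itself uses.

```python
import math
from fractions import Fraction


def chance_of_losing_streak(n_calls: int) -> Fraction:
    """Probability of calling a fair coin wrong on every one of n independent flips."""
    return Fraction(1, 2) ** n_calls


def surprisal_bits(p: Fraction) -> float:
    """Self-information -log2(p): one more bit for each halving of the probability."""
    return -math.log2(float(p))


for n in (1, 2, 10):
    p = chance_of_losing_streak(n)
    print(f"{n} wrong call(s) in a row: probability {p}, surprisal {surprisal_bits(p):.0f} bits")
```

One wrong call is a 1-in-2 event; ten in a row is about 1 in a thousand, which is the point where re-examining the strategy (or the coin) starts to look worthwhile.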

↑ comment by Quill_McGee · 2015-04-06T18:56:06.906Z

I was thinking of the "feeling bad and reconsider" meaning. That is, you don't want regret to occur, so if you are systematically regretting your actions it might be time to try something new. Now, perhaps you were acting optimally already and when you changed you got even /more/ regret, but in that case you just switch back.

↑ comment by Kindly · 2015-04-06T19:12:21.644Z

That's true, but I think I agree with TheOtherDave that the things that should make you start reconsidering your strategy are not bad outcomes but surprising outcomes.

In many cases, of course, bad outcomes should be surprising. But not always: sometimes you choose options you expect to lose, because the payoff is sufficiently high. Plus, of course, you should reconsider your strategy when it succeeds for reasons you did not expect: if I make a bad move in chess, and my opponent does not notice, I still need to work on not making such a move again.

I also worry that relying on regret to change your strategy is vulnerable to loss aversion and similar bugs in human reasoning. Betting and losing $100 feels much more bad than betting and winning $100 feels good, to the extent that we can compare them. If you let your regret of the outcome decide your strategy, then you end up teaching yourself to use this buggy feeling when you make decisions.

↑ comment by TheOtherDave · 2015-04-08T17:09:19.781Z

Right. And your point about reconsidering strategy on surprising good outcomes is an important one. (My go-to example of this is usually the stranger who keeps losing bets on games of skill, but is surprisingly willing to keep betting larger and larger sums on the game anyway.)

↑ comment by Scott Alexander (Yvain) · 2012-07-01T14:35:49.365Z

Here we're not thinking of your strategy as "Attack East City because the coin told me." We're thinking of your strategy as "flip a coin". The same is true of your opponent: his strategy is not "Defend East City" but "flip a coin to decide where to defend".

Suppose this scenario happened, and we offered you a do-over. You know what your opponent's strategy is going to be (flip a coin). You know your opponent is a mind-reader and will know what your strategy will be. Here your best strategy is still to flip a coin again and hope for better luck than last time.

↑ comment by Maelin · 2012-07-02T02:13:09.782Z

Okay, I think I get it. You're both mind-readers, and you can't go ahead until both you and the opponent have committed to your respective plans; if one of you changes your mind about the plan the other gets the opportunity to change their mind in response. But the actual coin toss occurs as part-of-the-move, not part-of-the-plan, so while you might be sad about how the coin toss plan actually pans out, there won't be any other strategy (e.g. 'Attack West') that you'd prefer to have adopted, given that the opponent would have been able to change their strategy (to e.g. 'Defend West') in response, if you had.

...I think. Wait, why wouldn't you regret staying at work then, if you know that by changing your mind your girlfriend would have a chance to change her mind, thus getting you the better outcome..?

↑ comment by Scott Alexander (Yvain) · 2012-07-03T02:51:40.512Z

I explained it poorly in my comment above. The mind-reading analogy is useful, but it's just an analogy. Otherwise the solution would be "Use your amazing psionic powers to level both enemy cities without leaving your room".

If I had to extend the analogy, it might be something like this: we take a pair of strategies and run two checks on it. The first check is "If your opponent's choice was fixed, and you alone had mind-reading powers, would you change your choice, knowing your opponent's?". The second check, performed in a different reality unbeknownst to you, is "If your choice was fixed, and your opponent alone had mind-reading powers, would she change her choice, knowing yours?" If the answer to both checks is "no", then you're at Nash equilibrium. You don't get to use your mind-reading powers for two-way communication.
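The two checks translate directly into code. A minimal sketch for 2x2 games, assuming a strategy is summarized by the probability of playing move 0; the function names and the move encoding are illustrative:

```python
from fractions import Fraction


def expected_payoff(matrix, p_row, p_col):
    """Expected payoff from a 2x2 table when the row player picks move 0 with
    probability p_row and the column player picks move 0 with probability p_col."""
    row_mix = (p_row, 1 - p_row)
    col_mix = (p_col, 1 - p_col)
    return sum(row_mix[i] * col_mix[j] * matrix[i][j]
               for i in range(2) for j in range(2))


def is_nash(row_payoffs, col_payoffs, p_row, p_col):
    """Run the two checks: fix one player's strategy and ask whether the other
    could do strictly better by switching. Checking the two pure moves is
    enough, since a mixture can never beat the better of them."""
    # Check 1: her strategy is fixed; would I change mine?
    mine = expected_payoff(row_payoffs, p_row, p_col)
    if any(expected_payoff(row_payoffs, pure, p_col) > mine for pure in (1, 0)):
        return False
    # Check 2, run separately: my strategy is fixed; would she change hers?
    hers = expected_payoff(col_payoffs, p_row, p_col)
    if any(expected_payoff(col_payoffs, p_row, pure) > hers for pure in (1, 0)):
        return False
    return True


# Go-home game from the post: move 0 = go home, move 1 = stay at work.
# HER[i][j] is her payoff when I play i and she plays j.
ME = [[3, 1], [2, 2]]
HER = [[3, 2], [1, 2]]
half = Fraction(1, 2)
print(is_nash(ME, HER, 1, 1), is_nash(ME, HER, 0, 0),
      is_nash(ME, HER, half, half), is_nash(ME, HER, 1, 0))
```

(go home, go home), (stay, stay), and the coin flip all pass both checks, while (home, work) fails the first. The same function confirms the coin-flip answer to Maelin's East/West question under an assumed scoring (attacker payoffs `[[0, 1], [1, 0]]`, defender payoffs `[[1, 0], [0, 1]]`): both players flipping passes, and every pure pair fails.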

You can do something like what you described - if you and your girlfriend realize you're playing the game above and both share the same payoff matrix, then (go home, go home) is the obvious Schelling point because it's just plain the better option, and if you have good models of each other's minds you can get there. But both that and (stay, stay) are Nash equilibria.

↑ comment by Ezekiel · 2012-06-29T08:41:12.129Z

No simple Nash equilibrium. Both players adopting the mixed (coin-flipping) strategy is the Nash equilibrium in this case. Remember: a Nash equilibrium isn't a specific choice-per-player, but a specific strategy-per-player.

↑ comment by Vaniver · 2012-06-29T17:54:11.956Z

> Remember

If this is actually an introductory post to game theory, is this really the right approach?

↑ comment by wedrifid · 2012-06-29T21:16:12.283Z

> If this is actually an introductory post to game theory, is this really the right approach?

If the post contains the information in question (it does) then there doesn't seem to be a problem using 'remember' as a pseudo-reference from the comments section to the post itself.

↑ comment by Vaniver · 2012-06-29T21:39:48.093Z

The words "pure," "simple," and "mixed" are not meaningful to newcomers, and so Yvain's post, which assumes that readers know the meanings of those terms with regards to game theory, is not introducing the topic as smoothly as it could. That's what I got out of Maelin's post.

↑ comment by Ezekiel · 2012-06-30T00:47:08.124Z

I've never heard the word "simple" used in game-theoretic context either. It just seemed that word was better suited to describe a [do x] strategy than a [do x with probability p and y with probability (1-p)] strategy.

If the word "remember" is bothering you, I've found people tend to be more receptive to explanations if you pretend you're reminding them of something they knew already. And the definition of a Nash equilibrium was in the main post.

↑ comment by Vaniver · 2012-06-30T01:06:49.401Z

If the word "remember" is bothering you, I've found people tend to be more receptive to explanations if you pretend you're reminding them of something they knew already.

Agreed. Your original response was fine as an explanation to Maelin; I singled out 'remember' in an attempt to imply the content of my second post (to Yvain), but did so in a fashion that was probably too obscure.

comment by Sniffnoy · 2012-06-30T22:24:12.544Z

Two people are separately confronted with the list of numbers [2, 5, 9, 25, 69, 73, 82, 96, 100, 126, 150 ] and offered a reward if they independently choose the same number. If the two are mathematicians, it is likely that they will both choose 2—the only even prime. Non-mathematicians are likely to choose 100—a number which seems, to the mathematicians, no more unique than the other two exact squares. Illiterates might agree on 69, because of its peculiar symmetry—as would, for a different reason, those whose interest in numbers is more prurient than mathematical.

This is a trivial point, but as a student of mathematics, I feel compelled to point out that while I think he is correct that most mathematicians would choose 2, his reasoning for why is wrong. Mathematicians would pick 2 because there is a convention in mathematics of, when you have to make an arbitrary choice (but want to specify it anyway), pick the smallest (if this makes sense in context).
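To see how different "salience rules" carve up the list, here is a small Python sketch. The rule names are illustrative, and the premise that each player applies one such rule is an assumption made for the sketch. A rule only works as a focal point if it singles out exactly one number.

```python
from math import isqrt

NUMBERS = [2, 5, 9, 25, 69, 73, 82, 96, 100, 126, 150]

def is_prime(n: int) -> bool:
    """Trial division; fine for numbers this small."""
    return n >= 2 and all(n % d for d in range(2, isqrt(n) + 1))

def is_square(n: int) -> bool:
    return isqrt(n) ** 2 == n

# Each "salience rule" picks out a subset of the list; a rule is only a useful
# focal point if both players apply it and it singles out exactly one number.
rules = {
    "smallest": [min(NUMBERS)],
    "primes": [n for n in NUMBERS if is_prime(n)],
    "even primes": [n for n in NUMBERS if is_prime(n) and n % 2 == 0],
    "perfect squares": [n for n in NUMBERS if is_square(n)],
}

for name, picks in rules.items():
    print(f"{name}: {picks}")
# smallest: [2]
# primes: [2, 5, 73]
# even primes: [2]
# perfect squares: [9, 25, 100]
```

The "smallest" convention and the "only even prime" reason both land on 2, which is part of why the two explanations are hard to tell apart from the outside. The squares rule leaves three candidates, so picking 100 needs some other, non-mathematical reason.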

comment by wedrifid · 2012-06-29T21:07:44.559Z

Two people are separately confronted with the list of numbers [2, 5, 9, 25, 69, 73, 82, 96, 100, 126, 150 ] and offered a reward if they independently choose the same number. If the two are mathematicians, it is likely that they will both choose 2—the only even prime. Non-mathematicians are likely to choose 100—a number which seems, to the mathematicians, no more unique than the other two exact squares. Illiterates might agree on 69, because of its peculiar symmetry—as would, for a different reason, those whose interest in numbers is more prurient than mathematical.

I'm a programmer and it seemed like the obvious choice was "2". It is right there at the start of the list! Was there some indication that the individuals were given different (i.e. shuffled) lists? If they were shuffled or, say, provided as a heap of cards with numbers on them, it might be a slightly more credible "eye of the beholder" scenario.

↑ comment by A1987dM (army1987) · 2012-06-29T22:13:12.462Z

I would have chosen 2 if playing with a copy of myself, but 100 if playing with a randomly chosen person from my culture I hadn't met before.

↑ comment by wedrifid · 2012-06-29T22:24:06.678Z

I would have chosen 2 if playing with a copy of myself, but 100 if playing with a randomly chosen person from my culture I hadn't met before.

I'd have chosen 73 if I was playing with a copy of myself. Just because all else being equal I'd prefer to mess with the researcher.

↑ comment by Anatoly_Vorobey · 2013-02-15T10:27:35.729Z

I'm not very sure, but I think I'd have chosen 73 as the only nontrivial prime. It stands out in the list because as I go over it it's the only one I wonder about.

↑ comment by A1987dM (army1987) · 2012-06-29T23:14:59.230Z

I'd have chosen 73 if I was playing with a copy of myself. Just because all else being equal I'd prefer to mess with the researcher.

Before reading the last sentence I thought of this.

comment by shokwave · 2012-06-29T09:34:59.016Z

Excellent post, excellent sequence. Advanced game theory is definitely something rationalists should have in their toolbox, since so much real-world decision-making involves other people's decisions.

Here there are a nearly infinite number of possible choices ... these are all Nash equilibria. Despite this mind-boggling array of possibilities, in fact all six episodes of this particular game ended with the two contestants meeting successfully after only a few days.

When you have "nearly infinite" Nash equilibria, an interesting analysis is the metagame of selecting which equilibrium to play. Do you plan on exploring this in the sequence?

Of course, specialness, like beauty, is in the eye of the beholder.

I strongly urge readers to explore this! What should you do if you suspect the other player beholds 'specialness' in a different way to you? Does your plan of action change if you suspect the other player also suspects a difference in beholding?

↑ comment by wedrifid · 2012-06-29T21:13:53.502Z

I strongly urge readers to explore this! What should you do if you suspect the other player beholds 'specialness' in a different way to you?

Choose a different person to date. Seriously: I suggest that at least part of what we do when dating (and, to a lesser extent, when forming non-romantic relationships) is identify and select people who have vaguely compatible intuitions about where the Schelling points are in relevant game-theoretic scenarios.

comment by Emiya (andrea-mulazzani) · 2020-09-30T11:06:25.575Z

Could someone explain how the defending general in the Metropolis-Podunk example calculates his strategy?

I understand why the expected loss stays the same only if he defends Metropolis 10/11 of the time and Podunk 1/11 of the time, but when I tried to calculate his decisions with the suggested procedure I ended up with the (wrong) result that he should defend Podunk 10/11 of the time and Metropolis 1/11. I don't understand what I'm doing wrong, so I'd likely botch the numbers when trying to calculate what to do for a more difficult decision.

↑ comment by Mohamed Benaicha (mohamed-benaicha) · 2021-01-01T15:14:47.744Z

Think of the attacker as deriving the same utility whether he/she attacks Podunk 10 times, or Metropolis once. That's why the attacker is ready to attack Metropolis 10 times before occupying it, or Podunk once.

Likewise, the defender derives a utility of 10 for defending Metropolis and 1 from defending Podunk. What this means is that the defender is able to bear 9 blows from the enemy and it would still be worth it to defend Metropolis. After the 10th attack, the defender would derive the same utility from defending Metropolis as if he/she were to defend Podunk.

I hope that sorta explains it. Someone correct me if I got it wrong.

↑ comment by Emiya (andrea-mulazzani) · 2021-01-02T10:10:56.527Z

I see, expressed like this it's obvious...

I think I should calculate the loss of utility by multiplying each city's value by the probability of a missed defence, then, since the utility only differs when the defence is unsuccessful.

This way I get -10 x 1/11 and -1 x 10/11, so the utility stays the same and I'm defending Metropolis 10 times out of eleven as I'm supposed to.
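A minimal sketch of the indifference calculation, assuming the setup implied by the numbers in this thread: Metropolis is worth 10 and Podunk 1, the attacker hits exactly one city, the defender guards exactly one, an attack on the guarded city fails, and an attack on the unguarded city captures it. The key step is that each side picks its mix to make the *other* side indifferent. One plausible source of the flipped 10/11 vs 1/11 result is solving the defender's own indifference condition, which gives the attacker's mix (1/11 on Metropolis, 10/11 on Podunk) rather than the defender's.

```python
from fractions import Fraction

# Assumed setup (consistent with the numbers in this thread): the attacker hits
# exactly one city, the defender guards exactly one, an attack on the guarded
# city fails, and an attack on the unguarded city captures it.
VALUE = {"Metropolis": Fraction(10), "Podunk": Fraction(1)}

def defender_mix():
    """Probability of guarding Metropolis that leaves the ATTACKER indifferent.

    Attacking Metropolis gains 10 * P(Metropolis unguarded) = 10 * (1 - p);
    attacking Podunk gains 1 * P(Podunk unguarded) = 1 * p.
    Setting 10 * (1 - p) = p gives p = 10 / 11.
    """
    m, s = VALUE["Metropolis"], VALUE["Podunk"]
    return m / (m + s)

def attacker_mix():
    """Probability of attacking Metropolis that leaves the DEFENDER indifferent.

    Guarding Metropolis loses 1 * P(attack on Podunk) = 1 * (1 - q);
    guarding Podunk loses 10 * P(attack on Metropolis) = 10 * q.
    Setting 1 - q = 10 * q gives q = 1 / 11.
    """
    m, s = VALUE["Metropolis"], VALUE["Podunk"]
    return s / (m + s)

p, q = defender_mix(), attacker_mix()
print(p, q)                                                # 10/11 1/11
# Emiya's check: the attacker's expected gain is the same whichever city he picks.
print(VALUE["Metropolis"] * (1 - p), VALUE["Podunk"] * p)  # 10/11 10/11
```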

comment by [deleted] · 2012-07-02T08:07:55.273Z

Nice post, thanks for writing it up. It was interesting and very easy to read because principles were all first motivated by problems.

That said, I still feel confused. Let me try to re-frame your post in a decision theory setting: we have to make a decision, so the infinite regress of dependencies from rational players modelling each other, which doesn't generally settle to a fixed set of actions for all players who model each other naively (as shown by the penny matching example), has to be truncated somehow. To do that, the game theory people decided that they'd build a new game where instead of rational players picking actions, rational players choose among strategies which return irrational players who take constant actions. These strategies make use of "biased dice", which are facts whose values the rational players are ignorant of, at least until they've selected their strategies. Each player gets their own dice of whatever biases they want, and all the dice are independent of each other. By introducing the dice, a rational player makes themself and all the other players partially uncertain about which constant-action player they will select. This common enforced ignorance (or potential ignorance, since the rational players can submit pure strategies and skip the dice altogether) can have some interesting properties: there will always be (for games satisfying some reasonable conditions) at least one so-called Nash equilibrium, which is a set of strategies returned by the rational players for which no player would unilaterally modify their decision upon learning the decisions of the others (though this and the Schelling point thing sound like an inconsistent bag of features from people who aren't sure whether the agents' decisions do or don't depend on each other).
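As a rough illustration of the naive regress described above, the sketch below lets two players in matching pennies (assumed payoffs: the row player wants the coins to match, the column player wants them to differ) take turns best-responding to each other's latest pure action. The sequence cycles through all four outcomes and never settles, which is the situation the "biased dice" of mixed strategies are meant to escape. The function names are illustrative.

```python
# Matching pennies (assumed payoffs): the row player wants the coins to match,
# the column player wants them to differ.
def best_response_row(col_side: str) -> str:
    return col_side                                     # match the opponent

def best_response_col(row_side: str) -> str:
    return "tails" if row_side == "heads" else "heads"  # mismatch the opponent

def naive_regress(start=("heads", "heads"), steps=8):
    """Alternately let each player best-respond to the other's current choice.

    This mimics "I think that you think that I think..." using pure actions only.
    """
    row, col = start
    history = [(row, col)]
    for step in range(steps):
        if step % 2 == 0:
            col = best_response_col(row)
        else:
            row = best_response_row(col)
        history.append((row, col))
    return history

for profile in naive_regress():
    print(profile)
# The profiles repeat with period 4: (heads, heads) -> (heads, tails)
# -> (tails, tails) -> (tails, heads) -> (heads, heads) -> ...
```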

That's as much as I got. I don't understand the conditions for a Nash equilibrium to be optimal for everybody, i.e. something the rational agents would actually all want to do instead of something that just has nice properties. You say that "purely rational players will always end up at a Nash equilibrium", but I don't think your post quite established that and I don't think it can be completely true, because of things like the self-PD. Is that what the SI's open problems of "blackmail-free equilibrium among timeless strategists" and "fair division by continuous / multiparty agents" are about maybe? That would be cool.

Also, and probably related to not having clear conditions for an optimal equilibrium, the meta-game of submitting strategies doesn't really look like it solves the infinite regress: if we find ourselves in a Nash equilibrium and agents' decisions are independent, then great, we don't have to model anything because the particular blend of utilities and enforced common ignorance means no strategy change is expected to help, but getting everyone into an equilibrium rationally (instead of hoping everyone likes the same flavor of Schelling's Heuristic Soup) still looks hard.

If Nash equilibrium is about indifference of unilateral strategy changes for independent decisions, are there ever situations where a player can switch to a strategy with equal expected utility, putting the strategy set in disequilibrium, so that other players could change their strategies, with all players improving or keeping their expected utility constant, putting the strategy set in a different equilibrium (or into a strategy set where everyone is better off, like mutual cooperation in PD)? That is, are there negotiation paths out of equilibria whose steps make no one worse off? If so, it'd then be nice to find a general selfish scheme by which players will limit themselves to those paths. This sounds like a big fun problem.
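One concrete instance of the kind of path asked about here, sketched under the assumption that we use the go-home game from the post (home is worth 3 if the other player is home and 1 otherwise; staying is worth 2 regardless). At its mixed equilibrium each player goes home with probability 1/2 and is indifferent, so one player can switch to always going home at no cost. That leaves the other player strictly preferring home, and both end up at (home, home). This is only an example from one small game, not a general scheme.

```python
from fractions import Fraction

# Go-home game from the post (assumed payoffs): home is worth 3 if the other
# player is home and 1 otherwise; staying at work is worth 2 regardless.
def expected_utility(p_me_home: Fraction, p_other_home: Fraction) -> Fraction:
    home = 3 * p_other_home + 1 * (1 - p_other_home)
    stay = Fraction(2)
    return p_me_home * home + (1 - p_me_home) * stay

half = Fraction(1, 2)
one = Fraction(1)

# Step 0: the mixed equilibrium, where each player goes home with probability 1/2.
step0 = (expected_utility(half, half), expected_utility(half, half))

# Step 1: player A switches to "always home"; against B's 1/2 mix this is no worse for A.
step1 = (expected_utility(one, half), expected_utility(half, one))

# Step 2: B now strictly prefers home (3 > 2), reaching the (home, home) equilibrium.
step2 = (expected_utility(one, one), expected_utility(one, one))

for label, (a, b) in [("mixed", step0), ("A deviates", step1), ("both home", step2)]:
    print(label, a, b)
# mixed 2 2
# A deviates 2 5/2
# both home 3 3
```

No step lowers anyone's expected utility, which is the property the comment asks about.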

↑ comment by Scott Alexander (Yvain) · 2012-07-03T03:09:46.280Z

That's as much as I got. I don't understand the conditions for a Nash equilibrium to be optimal for everybody, i.e. something the rational agents would actually all want to do instead of something that just has nice properties. You say that "purely rational players will always end up at a Nash equilibrium", but I don't think your post quite established that and I don't think it can be completely true, because of things like the self-PD. Is that what the SI's open problems of "blackmail-free equilibrium among timeless strategists" and "fair division by continuous / multiparty agents" are about maybe? That would be cool.

"Optimal for everybody" is a very un-game-theoretic outlook. Game theorists think more in terms of "optimal for me, and screw the other guy". If everyone involved is totally selfish, and they expect other players to be pretty good at figuring out their strategy, and they don't have some freaky way of correlating their decisions with those of other agents like TDT, then they'll aim for a Nash equilibrium (though if there are multiple Nash equilibria, they might not hit the same one).

This fails either when agents aren't totally selfish (if, like you, they're looking for what's optimal for everyone, which is a very different problem), or if they're using an advanced decision theory to correlate their decisions, which is harder for normal people than it is for people playing against clones of themselves or superintelligences that can output their programs. I'll discuss this more later.

Also, and probably related to not having clear conditions for an optimal equilibrium, the meta-game of submitting strategies doesn't really look like it solves the infinite regress: if we find ourselves in a Nash equilibrium and agents' decisions are independent, then great, we don't have to model anything because the particular blend of utilities and enforced common ignorance means no strategy change is expected to help, but getting everyone into an equilibrium rationally (instead of hoping everyone likes the same flavor of Schelling's Heuristic Soup) still looks hard.

Agreed.

↑ comment by shokwave · 2012-07-03T04:57:33.988Z

This fails either when agents aren't totally selfish (if, like you, they're looking for what's optimal for everyone, which is a very different problem)

It's not very different: you just need to alter the agents' utility functions slightly so that they value the other player gaining utility as well.
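A minimal sketch of the suggestion above, assuming textbook Prisoner's Dilemma payoffs (3/0/5/1, not taken from the thread) and a hypothetical "caring weight" that adds a fraction of the other player's payoff to one's own. With no weight, mutual defection is the only pure equilibrium; with full weight, mutual cooperation is. Whether this transformation captures "optimal for everyone" is the point under debate; the sketch only shows the mechanics.

```python
from itertools import product

# Standard Prisoner's Dilemma payoffs (assumed, not from the thread):
# (my move, their move) -> my payoff.
PD = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}
MOVES = ("C", "D")

def caring_payoff(mine: str, theirs: str, weight: float) -> float:
    """My payoff plus `weight` times the other player's payoff (hypothetical)."""
    return PD[(mine, theirs)] + weight * PD[(theirs, mine)]

def pure_equilibria(weight: float):
    """Profiles where neither player gains by deviating, under caring payoffs."""
    found = []
    for a, b in product(MOVES, repeat=2):
        a_ok = all(caring_payoff(a, b, weight) >= caring_payoff(x, b, weight) for x in MOVES)
        b_ok = all(caring_payoff(b, a, weight) >= caring_payoff(x, a, weight) for x in MOVES)
        if a_ok and b_ok:
            found.append((a, b))
    return found

print(pure_equilibria(0.0))  # [('D', 'D')]
print(pure_equilibria(1.0))  # [('C', 'C')]
```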